- COMMENT

One statistical analysis must not rule them all

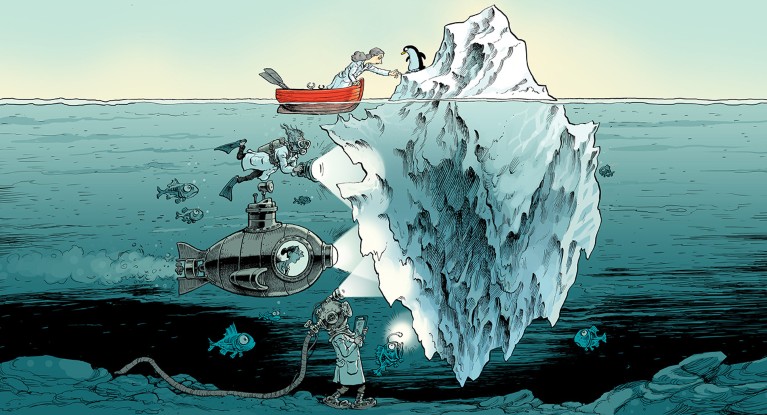

Illustration by David Parkins

Nature 605, 423–425 (2022)

doi: https://doi.org/10.1038/d41586-022-01332-8

Competing Interests

The authors declare no competing interests.

No publication without confirmation

Hide results to seek the truth

Many hands make tight work
