NEWS FEATURE

The broken promise that undermines human genome research

Nature 590, 198-201 (2021)

doi: https://doi.org/10.1038/d41586-021-00331-5

The next 20 years of human genomics must be more equitable and more open

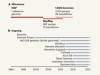

A wealth of discovery built on the Human Genome Project — by the numbers

Sequence three million genomes across Africa

Breaking through the unknowns of the human reference genome

How the human genome transformed study of rare diseases

From one human genome to a complex tapestry of ancestry

Milestones in genomic sequencing
