Illustration by The Project Twins

Access options

Access Nature and 54 other Nature Portfolio journals

Get Nature+, our best-value online-access subscription

$29.99 / 30 days

cancel any time

Subscribe to this journal

Receive 51 print issues and online access

$199.00 per year

only $3.90 per issue

Rent or buy this article

Prices vary by article type

from$1.95

to$39.95

Prices may be subject to local taxes which are calculated during checkout

Nature 584, 159-160 (2020)

doi: https://doi.org/10.1038/d41586-020-02284-7

Updates & Corrections

-

Correction 07 August 2020: An earlier version of this story erroneously affiliated Dara Norman with the National Optical Astronomy Observatory.

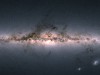

Billion-star map of Milky Way set to transform astronomy

Billion-star map of Milky Way set to transform astronomy

SKA data take centre stage in China

SKA data take centre stage in China

Why Jupyter is data scientists’ computational notebook of choice

Why Jupyter is data scientists’ computational notebook of choice

Astronomy data bounty spurs debate over access

Astronomy data bounty spurs debate over access

NatureTech hub

NatureTech hub