Nobel-prizewinning physicist Richard Feynman challenged researchers to make computing less binary. Credit: Kevin Fleming/Corbis via Getty

In 1981, physicist Richard Feynman famously talked about the problem of simulating physics with computers. This posed a challenge because machines that make calculations based on binary logic — 1s and 0s — are not very good at capturing the uncertainty inherent in quantum mechanics. One way to tackle this, Feynman suggested, is to use quantum building blocks to make a computer that mirrors quantum behaviour — in other words, a quantum computer.

But Feynman had another idea: a classical computer capable of mimicking the probabilistic behaviour of quantum mechanics. Nearly 40 years on, Shunsuke Fukami and his colleagues at Tohoku University in Japan and Purdue University in Indiana have built the hardware for such a probabilistic computer — also known as a stochastic computer — and they outline their work in this issue (W. A. Borders et al. Nature 573, 390–393; 2019). Among other things, this advance could lead to more-energy-efficient devices capable of faster and more complex calculations.

Read the paper: Integer factorization using stochastic magnetic tunnel junctions

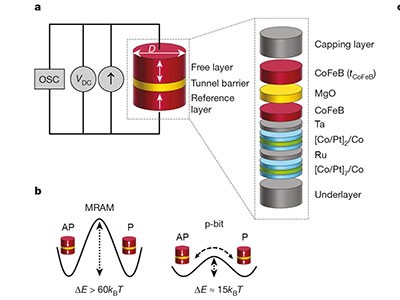

The researchers combined three conventional silicon transistors with a tiny magnet to create what are called p-bits (or probabilistic bits). These magnets are only about ten atoms thick and, at this size, they start to behave stochastically. One of the team’s key advances was to tune the thickness of the magnets to balance stability with thermal noise and introduce stochasticity in a controllable way.
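To make the idea of a controllable random bit concrete, here is a minimal software sketch of a p-bit. It is not the team's circuit: the logistic response curve and the names `p_bit` and `mean_output` are assumptions chosen for illustration. The point it shows is that the bit fluctuates between 0 and 1, while an input signal sets how often each value appears.

```python
import math
import random


def p_bit(input_signal: float, rng: random.Random) -> int:
    """Return 1 with probability sigmoid(input_signal), otherwise 0.

    Assumption: a logistic response stands in for the hardware's
    input-to-probability curve, which the text does not specify.
    """
    p_one = 1.0 / (1.0 + math.exp(-input_signal))
    return 1 if rng.random() < p_one else 0


def mean_output(input_signal: float, samples: int = 10000, seed: int = 0) -> float:
    """Average many readings of one p-bit held at a fixed input."""
    rng = random.Random(seed)
    return sum(p_bit(input_signal, rng) for _ in range(samples)) / samples
```

With zero input the sketch behaves like a fair coin. A strongly positive or negative input pins it close to 1 or 0, which is the software analogue of trading thermal noise against stability.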

What is remarkable about this stochastic computing scheme is that it can solve some types of problem that are difficult for conventional computers to address, such as machine learning, which involves the processing of ever-increasing amounts of big data. But how do we know that this stochastic computer performs better than conventional approaches?

The research team programmed the device to calculate the factors of integers up to 945. Such calculations are so difficult for standard computers to solve that they have become the basis of public encryption keys used in passwords. A conventional probabilistic computer, one that uses silicon transistors, would require more than 1,000 transistors to complete this task. But Fukami and colleagues’ machine did it using just eight p-bits. Moreover, their components needed just one three-hundredth of the surface area and used one-tenth of the energy.
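The following sketch shows, in software, how eight fluctuating bits can settle on a pair of factors. Everything in it is an assumption made for illustration rather than the published design: the split into five bits for one factor and three for the other, the fixed final 1 that makes each factor odd, the cost function (the squared, normalized difference between the product and the target), the `beta` sharpness parameter and the function names. Each bit is resampled in turn, with a bias towards the value that lowers the cost.

```python
import math
import random


def decode(bits):
    """Read bits (most significant first) as an odd integer.

    A trailing 1 is appended, so n free bits cover the odd numbers
    from 1 to 2**(n + 1) - 1.
    """
    value = 0
    for b in bits:
        value = 2 * value + b
    return 2 * value + 1


def energy(bits, n, x_bits):
    """Hypothetical cost: zero exactly when the two decoded numbers multiply to n."""
    x = decode(bits[:x_bits])
    y = decode(bits[x_bits:])
    return ((x * y - n) / n) ** 2


def factor(n, x_bits=5, y_bits=3, beta=5.0, sweeps=20000, seed=0):
    """Resample each bit in turn (Gibbs sampling) until the product equals n."""
    rng = random.Random(seed)
    bits = [rng.randint(0, 1) for _ in range(x_bits + y_bits)]
    for _ in range(sweeps):
        for i in range(len(bits)):
            # Compare the cost with this bit off and on, holding the others fixed.
            bits[i] = 0
            e0 = energy(bits, n, x_bits)
            bits[i] = 1
            e1 = energy(bits, n, x_bits)
            # Lower cost with the bit on means a higher chance of reading 1.
            p_one = 1.0 / (1.0 + math.exp(-beta * (e0 - e1)))
            bits[i] = 1 if rng.random() < p_one else 0
        x, y = decode(bits[:x_bits]), decode(bits[x_bits:])
        if x * y == n:
            return x, y
    return None  # no factor pair found within the sweep budget
```

Calling `factor(945)` searches the 256 possible bit patterns. Under this particular encoding, the only pair that multiplies to 945 is 63 × 15, so that is the only answer the search can return. A software loop like this says nothing about the area or energy savings reported for the hardware.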

For a while, advances in miniaturization technology meant that the number of operations silicon chips could complete per kilowatt hour of energy was doubling about every 1.6 years. But the trend has been slowing since around 2000, and researchers think it might be approaching a physical limit. The word ‘revolutionize’ is overused in the tech world, but Fukami and colleagues’ demonstration shows that stochastic computing has the potential to drastically improve the energy efficiency of these types of calculation.

More widespread use of stochastic computing, however, will need a bigger effort from both public funders and manufacturers of silicon chips. Public funders in the European Union, Japan and the United States do have modest stochastic-computing research programmes. Companies, too, are funding research, through consortia such as the Semiconductor Research Corporation (go.nature.com/2mlhmoo).

But when faced with technology disruptions, governments and large corporations can understandably be slow to change — partly because they have interests to protect. As the demands of big data continue to increase, energy efficiency is becoming harder to ignore, which is why industry and policymakers need to step up the pace.

Fukami’s team has come up with a potential solution, and has successfully proved a concept. Going forwards, governments and corporations will need to create funding opportunities to give this innovation — and Feynman’s quest — a chance to see the light of day.

Stochastic magnetic circuits rival quantum computing


Computing a hard limit on growth


How to stop data centres from gobbling up the world’s electricity


The chips are down for Moore’s law


Computer engineering: Feeling the heat
