Some statisticians are calling for P values to be abandoned as an arbitrary threshold of significance. Credit: Erik Dreyer/Getty

Fans of The Hitchhiker’s Guide to the Galaxy know that the answer to life, the Universe and everything is 42. The joke, of course, is that truth cannot be revealed by a single number.

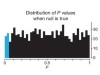

And yet this is the job often assigned to P values: a measure of how surprising a result is, given assumptions about an experiment, including that no effect exists. Whether a P value falls above or below an arbitrary threshold demarcating ‘statistical significance’ (such as 0.05) decides whether hypotheses are accepted, papers are published and products are brought to market. But using P values as the sole arbiter of what to accept as truth can also mean that some analyses are biased, some false positives are overhyped and some genuine effects are overlooked.
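To make that definition concrete, here is a minimal Python sketch of one way to compute a P value: a two-sided permutation test for a difference in group means. It assumes that the null hypothesis of interest is simply that group labels are interchangeable (no effect). The function name `permutation_p_value`, the measurements and the 0.05 cut-off in the printout are illustrative choices, not anything the editorial prescribes.

```python
import random
import statistics


def permutation_p_value(a, b, n_resamples=10_000, seed=0):
    """Two-sided permutation P value for a difference in group means.

    Assumption (the null hypothesis): group labels carry no information,
    so any relabelling of the pooled data is as likely as the observed one.
    The P value is the share of relabellings whose mean difference is at
    least as extreme as the one actually observed.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    observed = abs(statistics.fmean(a) - statistics.fmean(b))
    pooled = list(a) + list(b)
    n_a = len(a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # randomly reassign group labels
        diff = abs(statistics.fmean(pooled[:n_a]) - statistics.fmean(pooled[n_a:]))
        if diff >= observed - 1e-12:  # small tolerance for round-off
            extreme += 1
    # Count the observed labelling itself so the estimate is never exactly 0.
    return (extreme + 1) / (n_resamples + 1)


# Hypothetical measurements, for illustration only.
control = [5.1, 4.9, 5.3, 5.0, 4.8, 5.2]
treated = [5.4, 5.6, 5.1, 5.7, 5.3, 5.5]

p = permutation_p_value(control, treated)
print(f"P = {p:.4f}")
print("below 0.05" if p < 0.05 else "not below 0.05")
```

The number measures surprise only under the no-effect assumption. For a result sitting near the line, a slightly different sample or test could flip the printed verdict without the underlying evidence changing much.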

Change is in the air. In a Comment in this week’s issue, three statisticians call for scientists to abandon statistical significance. The authors do not call for P values themselves to be ditched as a statistical tool — rather, they want an end to their use as an arbitrary threshold of significance. More than 800 researchers have added their names as signatories. A series of related articles is being published by the American Statistical Association this week (R. L. Wasserstein et al. Am. Stat. https://doi.org/10.1080/00031305.2019.1583913; 2019). “The tool has become the tyrant,” laments one article.

Statistical significance is so deeply integrated into scientific practice and evaluation that extricating it would be painful. Critics will counter that arbitrary gatekeepers are better than unclear ones, and that the more useful argument is over which results should count for (or against) evidence of effect. There are reasonable viewpoints on all sides; Nature is not seeking to change how it considers statistical analysis in evaluation of papers at this time, but we encourage readers to share their views (see go.nature.com/correspondence).

If researchers do discard statistical significance, what should they do instead? They can start by educating themselves about statistical misconceptions. Most important will be the courage to consider uncertainty from multiple angles in every study. Logic, background knowledge and experimental design should be considered alongside P values and similar metrics to reach a conclusion and decide on its certainty.
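One concrete way to keep uncertainty in view, offered here as an illustration rather than as Nature's guidance, is to report the estimated size of an effect together with an interval instead of only a significant/non-significant verdict. The sketch below uses a percentile bootstrap built from the Python standard library; the function names, the 95% level and the data are hypothetical choices.

```python
import random
import statistics


def bootstrap_interval(a, b, n_resamples=5_000, level=0.95, seed=1):
    """Percentile bootstrap interval for mean(b) - mean(a).

    Each resample draws, with replacement, a new sample of the same size
    from each group and records the difference in means; the interval is
    read off the sorted differences.
    """
    rng = random.Random(seed)
    diffs = sorted(
        statistics.fmean(rng.choices(b, k=len(b)))
        - statistics.fmean(rng.choices(a, k=len(a)))
        for _ in range(n_resamples)
    )
    tail = (1 - level) / 2
    lo = diffs[round(tail * (n_resamples - 1))]
    hi = diffs[round((1 - tail) * (n_resamples - 1))]
    return lo, hi


def summarize(a, b):
    """Report an effect estimate with its interval, not a yes/no verdict."""
    estimate = statistics.fmean(b) - statistics.fmean(a)
    lo, hi = bootstrap_interval(a, b)
    return estimate, (lo, hi)


# Hypothetical measurements, for illustration only.
control = [5.1, 4.9, 5.3, 5.0, 4.8, 5.2]
treated = [5.4, 5.6, 5.1, 5.7, 5.3, 5.5]

estimate, (lo, hi) = summarize(control, treated)
print(f"difference in means = {estimate:.2f} (95% interval {lo:.2f} to {hi:.2f})")
```

An interval that spans both trivial and large effects says more about the remaining uncertainty than a P value on either side of 0.05. Logic, background knowledge and design still have to be weighed separately.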

When working out which methods to use, researchers should also focus as much as possible on actual problems. People who will duel to the death over abstract theories on the best way to use statistics often agree on results when they are presented with concrete scenarios.

Researchers should seek to analyse data in multiple ways to see whether different analyses converge on the same answer. Projects that have crowdsourced analyses of a data set to diverse teams suggest that this approach can work to validate findings and offer new insights.
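A small-scale version of this idea can be sketched in a few lines: estimate the same shift between two groups in several defensible ways and check whether the answers agree. This Python sketch is illustrative only and no substitute for handing a data set to independent teams. The three estimators and the sign-agreement test for "convergence" are assumptions chosen for simplicity.

```python
import itertools
import statistics


def analyses(a, b):
    """Estimate the shift from group a to group b in three different ways."""
    return {
        "difference in means": statistics.fmean(b) - statistics.fmean(a),
        "difference in medians": statistics.median(b) - statistics.median(a),
        # Hodges-Lehmann estimator: median of all pairwise differences b_j - a_i.
        "Hodges-Lehmann shift": statistics.median(
            y - x for x, y in itertools.product(a, b)
        ),
    }


def converge(results):
    """Crude convergence check: do all estimates share the same sign?"""
    signs = {(value > 0) - (value < 0) for value in results.values()}
    return len(signs) == 1


# Hypothetical data sets, for illustration only.
control = [5.1, 4.9, 5.3, 5.0, 4.8, 5.2]
treated = [5.4, 5.6, 5.1, 5.7, 5.3, 5.5]
outlier_driven = ([1, 2, 3], [0, 0, 30])  # one extreme value in the second group

for name, (a, b) in {"clean": (control, treated), "outlier": outlier_driven}.items():
    results = analyses(a, b)
    print(name, {k: round(v, 2) for k, v in results.items()}, "converge:", converge(results))
```

In the outlier case the mean says the second group is higher while both median-based estimates say it is lower. That disagreement is itself informative: it points to a single influential value that deserves scrutiny before any conclusion is drawn.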

In short, be sceptical, pick a good question, and try to answer it in many ways. It takes many numbers to get close to the truth.
