- NEWS AND VIEWS

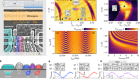

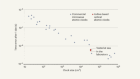

Machine learning in quantum spaces

Access options

Access Nature and 54 other Nature Portfolio journals

Get Nature+, our best-value online-access subscription

$29.99 / 30 days

cancel any time

Subscribe to this journal

Receive 51 print issues and online access

$199.00 per year

only $3.90 per issue

Rent or buy this article

Prices vary by article type

from$1.95

to$39.95

Prices may be subject to local taxes which are calculated during checkout

Nature 567, 179-181 (2019)

doi: https://doi.org/10.1038/d41586-019-00771-0

References

Havlíček, V. et al. Nature 567, 209–212 (2019).

Schölkopf, B. & Smola, A. J. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond (MIT Press, 2002).

Schuld, M. & Killoran, N. Phys. Rev. Lett. 122, 040504 (2019).

Read the paper: Supervised learning with quantum-enhanced feature spaces

Read the paper: Supervised learning with quantum-enhanced feature spaces

Two artificial synapses are better than one

Two artificial synapses are better than one