Abstract

Background:

Little is known about leukaemia risk following chronic radiation exposures at low dose rates. The Techa River Cohort of individuals residing in riverside villages between 1950 and 1961 when releases from the Mayak plutonium production complex contaminated the river allows quantification of leukaemia risks associated with chronic low-dose-rate internal and external exposures.

Methods:

Excess relative risk models described the dose–response relationship between radiation dose on the basis of updated dose estimates and the incidence of haematological malignancies ascertained between 1953 and 2007 among 28 223 cohort members, adjusted for attained age, sex, and other factors.

Results:

Almost half of the 72 leukaemia cases (excluding chronic lymphocytic leukaemia (CLL)) were estimated to be associated with radiation exposure. These data are consistent with a linear dose response with no evidence of modification. The excess relative risk estimate was 0.22 per 100 mGy. There was no evidence of significant dose effect for CLL or other haematopoietic malignancies.

Conclusion:

These analyses demonstrate that radiation exposures, similar to those received by populations exposed as a consequence of nuclear accidents, are associated with long-term dose-related increases in leukaemia risks. Using updated dose estimates, the leukaemia risk per unit dose is about half of that based on previous dosimetry.

Main

Previous studies suggest that both acute and protracted radiation exposures are associated with an increased risk of leukaemia (Curtis et al, 1994; Preston et al, 1994; Gilbert, 2009; Daniels and Schubauer-Berigan, 2011). The proportion of leukaemia cases associated with natural background exposures has been estimated using published risk models (Kendall et al, 2011), and variation in the risk of childhood leukaemia has been observed in association with variation in natural background radiation levels (Kendall et al, 2013). The challenge remains to quantify and describe the dose–response relationship for low-dose (<100 mGy) and low-dose-rate (<5 mGy h−1) exposures (Wakeford and Tawn, 2010).

The current analyses focus on characterising the radiation effects on the risk of leukaemia and other haematopoietic malignancies over more than 50 years in a population that received low-dose-rate radiation exposures as a consequence of environmental contamination arising from the production of plutonium for nuclear weapons in the Russian Southern Urals. The nature (i.e. protracted exposure to multiple radionuclides, including caesium and strontium) of the exposures is similar to those experienced as a consequence of nuclear accidents such as those in Chernobyl and Fukushima.

The Techa River Cohort (TRC), as described previously (Kossenko et al, 2005; Krestinina et al, 2005, 2007, 2010), is a unique resource for estimating cancer risks following chronic exposure to environmental radiation in a general population. It is one of the few human populations with protracted exposure to strontium, a radionuclide that concentrates in the bone and is thus of great relevance for leukaemia studies. The TRC members received external γ-radiation exposure from contaminated river sediments and flood plain soil and internal exposure from radionuclides including strontium89, strontium90, and caesium137 through the consumption of contaminated water, milk, and food products following the release of radioactive waste into the river by the Mayak Radiochemical Plant between 1949 and 1956 (Akleyev et al, 1995; Degteva et al, 2006; Tolstykh et al, 2011).

We previously reported a statistically significant dose–response relationship between the red bone marrow (RBM) dose and risk of leukaemia using an earlier dosimetry system (Techa River Dosimetry System (TRDS-2000)) (Krestinina et al, 2005; Ostroumova et al, 2006; Krestinina et al, 2010). A better understanding of the nature of the releases, improved radiation transport and biokinetic models, and efforts to further individualise dose estimates led to the development of an updated dosimetry system (TRDS-2009) (Degteva et al, 2012; Napier et al, 2013). Improvements to the strontium biokinetic model (Shagina et al, 2003) and the incorporation of previously unavailable information about the composition and timing of radionuclide releases into the river are of particular relevance to RBM dose estimates. Although the TRDS-2009 doses have been used for analyses of solid cancer mortality risks (Schonfeld et al, 2013), the work reported here is the first to make use of the improved doses in risk estimation for haematological malignancies. The primary focus of this work is estimating radiation risk for leukaemias other than chronic lymphocytic leukaemia (non-CLL); however, we also describe the results for all leukaemias as a group, CLL, and other haematological malignancies.

Materials and Methods

Previous publications provide detailed information about the compilation, design, and follow-up of the TRC (Kossenko et al, 2005; Krestinina et al, 2010), which are briefly summarised below. This study was approved by the Institutional Review Boards of the Urals Research Center for Radiation Medicine (URCRM) and the University of Illinois at Chicago.

Cohort definition

The full TRC includes 29 730 individuals who were born before 1950 and lived in one of the riverside villages between 1950 and 1960. Cohort members who died or were lost to follow-up prior to 1953 (n=1119) and those not known to have lived in the study region (Chelyabinsk and Kurgan Oblasts) between 1953 and the end of 2007 (n=388) were excluded. This report includes the remaining 28 223 cohort members known to have lived for a period of time in the Chelyabinsk or Kurgan Oblasts between 1 January 1953 and 31 December 2007.

Cohort follow-up

The URCRM staff conducted regular, systematic follow-up to ascertain vital status, cancer incidence, and cause of death for cohort members (Krestinina et al, 2010).

Follow-up for individual cohort members began at the latest of 1 January 1953 or the date they first lived in a riverside village and continues until the earliest of the date of the first cancer diagnosis (including leukaemia), death, migration from Chelyabinsk or Kurgan oblasts, date of last-known vital status, or 31 December 2007. Individuals who moved in and out of the two Oblasts contributed follow-up time only during periods of residence in the Chelyabinsk or Kurgan oblasts. Follow-up for this analysis begins in 1953 because of concern about underascertainment prior to that time (Krestinina et al, 2010).

Case definition

Haematological malignancies (ICD-9 codes 200–208), including leukaemia (ICD-9 codes 204–208), lymphosarcoma, and reticulosarcoma (ICD-9 200), Hodgkin lymphoma (HL) (201), non-Hodgkin lymphoma (NHL) (202), multiple myeloma (MM) (203), other/unknown haematopoietic malignancies (206–207–208) were ascertained from the URCRM medical records, Oblast oncology dispensaries, regional oncology clinics and health centres, and death certificates (Ostroumova et al, 2006; Krestinina et al, 2010).

Eligible cases included all first primary haematological malignancies ascertained in the TRC between 1953 and 2007. Nine cohort members who were diagnosed with another cancer before leukaemia were censored at the first cancer diagnosis. The ascertainment of haematological malignancies is largely complete for cohort members residing in Chelyabinsk and Kurgan Oblasts.

Dosimetry

Radiation exposures to the TRC members included external γ-radiation exposure from river sediments and flood plain soil and internal exposure from the consumption of water and milk contaminated primarily by strontium89,90 and caesium137.

The TRDS-2000 was developed in 2000 (Degteva et al, 2000b, 2006) and improved in 2009 (TRDS-2009). Recent improvements are described in (Shagina et al, 2003; Degteva et al, 2007, 2009; Tolstykh et al, 2011; Degteva et al, 2012; Shagina et al, 2012a, 2012b).

TRDS-2000 (Degteva et al, 2000a) applied individual information about age and residence history to estimated village-level average intake functions and external dose rates to obtain annual site-specific dose estimates, including those for RBM. TRDS-2009 dose estimates make use of improved source term parameters describing the time-dependent rates of radioactive release and radionuclide composition (Degteva et al, 2009, 2012). TRDS-2009 also provided greater individualisation of internal dose estimates for 27% of the entire cohort based on a resident’s or a co-inhabitant’s measurements of strontium90 body burden, available for 7903 cohort members.

The mean cumulative RBM dose (0.42 Gy, range 0–9 Gy) is markedly higher and the range broader with TRDS-2009 estimates than the corresponding statistics based on the TRDS-2000 dose estimates (0.29 Gy; range 0–2 Gy). The change in mean doses is primarily due to the fact that the new dosimetry includes a greater contribution of strontium89 in the period of maximal releases (1950–1951) and increases in the RBM dose from internal caesium137 exposure (Degteva et al, 2009). The increased range largely reflects the greater individualisation of the TRDS-2009 dose estimates.

Organisation of data for analysis

The data were organised as a highly stratified table of person-years and case counts. The stratification factors included time-varying 2-year-lagged cumulative RBM doses for both TRDS-2009 and TRDS-2000 doses with a zero dose category and 15 additional dose categories with lower bounds at 0, 0.005, 0.01, 0.025, 0.05, 0.075, 0.1, 0.15, 0.2, 0.25, 0.3, 0.5, 0.75, 1, and 1.5 Gy. Additional stratifying factors included sex, ethnicity, period of initial exposure (1950–1952, 1953–1960), calendar time (12 categories with cut points at 1 January of 1953, 1956, 1960, 1965, 1970, 1975, 1980, 1985, 1990, 1995, 2000, and 2005), attained age (16 categories: 5-year categories for ages 0–74 and a 75+ category), age at entry (eight categories with cut points at 10, 15, 20, 30, 40, 50, and 60), and time since first exposure (11 categories with cut points at 5, 10, 15, 20, 25, 30, 35, 40, 45, and 50 years).
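The dose stratification described above can be sketched as follows. This is a minimal illustration, not the authors' procedure: the function names, the record layout, and the convention that categories are closed on the left are assumptions made for the example.

```python
from bisect import bisect_right
from collections import defaultdict

# Lower bounds (Gy) of the 15 non-zero dose categories listed in the text.
DOSE_LOWER_BOUNDS_GY = [0, 0.005, 0.01, 0.025, 0.05, 0.075, 0.1, 0.15,
                        0.2, 0.25, 0.3, 0.5, 0.75, 1, 1.5]


def dose_category(dose_gy):
    """Return 0 for a zero dose, otherwise the category index 1..15.

    Assumption: categories are closed on the left, except that the first
    non-zero category excludes a dose of exactly zero.
    """
    if dose_gy < 0:
        raise ValueError("dose must be non-negative")
    if dose_gy == 0:
        return 0
    return bisect_right(DOSE_LOWER_BOUNDS_GY, dose_gy)


def tabulate(records):
    """Sum person-years and cases per (dose category, sex) cell.

    `records` is a hypothetical iterable of
    (lagged_dose_gy, sex, person_years, is_case) tuples.
    """
    table = defaultdict(lambda: [0.0, 0])
    for dose_gy, sex, person_years, is_case in records:
        cell = table[(dose_category(dose_gy), sex)]
        cell[0] += person_years
        cell[1] += int(is_case)
    return dict(table)
```

A full table would add the remaining stratifying factors (ethnicity, calendar time, attained age, and so on) to the cell key in the same way.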

Describing the radiation effect (excess relative risk models)

Incidence rates were modelled using excess relative risk models of the form

$$\lambda(a,s,x,d,z) = B_0(a,s,x)\,[1 + \mathrm{ERR}(d,z)]$$

in which $B_0(a,s,x)$ describes the rates in an unexposed population (baseline rates) as a function of age, sex, and other factors, whereas the excess relative risk function, $\mathrm{ERR}(d,z)$ (Preston et al, 1994), describes the magnitude of the radiation-associated excess risk as a proportion of the baseline rate. The ERR is described as $\mathrm{ERR}(d,z) = \rho(d)\,\varepsilon(z)$, where $\rho(d)$ is a dose response and $\varepsilon(z)$ describes how the response at a given dose depends on factors other than dose (effect modification).
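To make the structure of the model concrete, the sketch below evaluates a rate as the baseline rate multiplied by one plus a linear ERR, and computes the Poisson log-likelihood of a grouped person-year table. The function names, the tuple layout of `cells`, and the treatment of the baseline rate as a known number are assumptions for illustration; in the analysis itself the baseline is modelled and estimated jointly with the ERR.

```python
import math


def err_linear(dose_gy, beta_per_gy, modifier=1.0):
    """Linear dose response times an effect-modification factor."""
    return beta_per_gy * dose_gy * modifier


def incidence_rate(baseline_rate, dose_gy, beta_per_gy, modifier=1.0):
    """Rate = baseline rate * (1 + ERR)."""
    return baseline_rate * (1.0 + err_linear(dose_gy, beta_per_gy, modifier))


def poisson_loglik(cells, beta_per_gy):
    """Poisson log-likelihood for grouped data.

    `cells` is a hypothetical iterable of
    (cases, person_years, baseline_rate, mean_dose_gy) tuples.
    """
    total = 0.0
    for cases, person_years, baseline_rate, dose_gy in cells:
        mu = person_years * incidence_rate(baseline_rate, dose_gy, beta_per_gy)
        total += cases * math.log(mu) - mu - math.lgamma(cases + 1)
    return total
```

For example, with an ERR of 2.2 per Gy, `incidence_rate(1e-4, 0.1, 2.2)` gives a rate 22% above a baseline of 1 case per 10 000 person-years.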

For the analyses reported here, the logarithm of the baseline rate was assumed to be proportional to sex-specific functions of log age with, as needed for specific outcomes, allowance for ethnicity and birth cohort effects.

For the basic dose–response model, the ERR was assumed to be linear in dose but we also considered models where the dose response was taken as a linear-quadratic, a pure quadratic function of dose, or threshold models in which the ERR was assumed to be 0 up to some threshold dose and taken as linear for higher doses. We also considered a model in which the ERR was allowed to vary freely over dose categories. These category-specific estimates were then smoothed using a weighted running average with weights proportional to the product of fixed weights and one over the asymptotic variance of the category-specific ERR estimates. Effect modifiers considered included sex, ethnicity, age at diagnosis, time since exposure, and age at exposure. As we are dealing with chronic exposures, analyses of time-since-exposure effects involve looking at whether the ERR per unit dose varies across doses received in different periods prior to diagnosis as opposed to time since the first exposure. To do this, we used data sets with time-dependent stratification on the TRDS-2009 dose accumulated in periods defined by the time since the dose was received. The periods considered here were 2–4, 5–9, 10–14, 15–19, and 20 or more years prior to diagnosis. Similarly, to assess the potential effects of age at exposure, the person-years and cases were stratified using time-dependent categories of the dose received in the 0–19, 20–29, 30–39, and 40 or greater age intervals.
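The time-since-exposure stratification can be illustrated with a short sketch that splits an individual's dose history into the windows named above. The function name, the dictionary of annual doses, and the whole-year granularity are assumptions for the example and are not taken from the dosimetry system.

```python
# Time-since-exposure windows (years before the reference date) from the text;
# the open-ended last window is represented with an upper bound of None.
WINDOWS = [(2, 4), (5, 9), (10, 14), (15, 19), (20, None)]


def window_doses(annual_doses_gy, reference_year):
    """Split the dose accumulated by `reference_year` by years since receipt.

    `annual_doses_gy` maps calendar year -> dose received in that year (Gy).
    Doses received less than 2 years before the reference year fall inside
    the lag period and are not counted; doses in future years are ignored.
    """
    totals = {window: 0.0 for window in WINDOWS}
    for year, dose in annual_doses_gy.items():
        years_since = reference_year - year
        for lo, hi in WINDOWS:
            if years_since >= lo and (hi is None or years_since <= hi):
                totals[(lo, hi)] += dose
                break
    return totals
```

Because the reference year advances during follow-up, the same dose moves from one window to the next over time, which is why the stratification is time dependent. The age-at-exposure stratification follows the same pattern with age intervals in place of time windows.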

Analyses were conducted using internal comparisons based on models fit with the Epicure Poisson-regression risk-modelling software (Preston et al, 1993). Tests and confidence intervals were based on direct evaluation of the profile likelihood (Cox and Hinckley, 1974).
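As an illustration of a likelihood-based confidence interval, the sketch below finds the maximum-likelihood ERR per Gy for a one-parameter linear model and then locates the two values at which twice the drop in log-likelihood equals the 95% chi-square critical value (1 degree of freedom). It is not the Epicure implementation: the baseline rate is treated as known, so the profile likelihood reduces to the ordinary likelihood, and all names and the simple search routines are assumptions.

```python
import math

CHI2_95_1DF = 3.841458820694124  # 95th percentile of chi-square, 1 df


def loglik(beta, cells):
    """Poisson log-likelihood (up to a constant), rate = b0 * (1 + beta * dose)."""
    total = 0.0
    for cases, person_years, b0, dose_gy in cells:
        mu = person_years * b0 * (1.0 + beta * dose_gy)
        total += cases * math.log(mu) - mu
    return total


def maximise(f, lo, hi, iters=200):
    """Golden-section search for the maximum of a unimodal function."""
    g = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    for _ in range(iters):
        c = b - g * (b - a)
        d = a + g * (b - a)
        if f(c) > f(d):
            b = d
        else:
            a = c
    return (a + b) / 2


def bisect_root(f, lo, hi, iters=200):
    """Bisection; f(lo) and f(hi) must have opposite signs."""
    f_lo = f(lo)
    for _ in range(iters):
        mid = (lo + hi) / 2
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return (lo + hi) / 2


def likelihood_ci(cells, beta_min, beta_max):
    """Return (estimate, lower, upper) for the ERR per Gy."""
    ll = lambda b: loglik(b, cells)
    beta_hat = maximise(ll, beta_min, beta_max)
    ll_max = ll(beta_hat)
    drop = lambda b: 2.0 * (ll_max - ll(b)) - CHI2_95_1DF
    return beta_hat, bisect_root(drop, beta_min, beta_hat), bisect_root(drop, beta_hat, beta_max)
```

The search interval must bracket both bounds. When the likelihood does not fall far enough before the lower limit of the parameter space, no lower bound exists inside the interval, which is how bounds reported as "<0" arise.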

Results

Almost 60% of the cohort members were women, many were exposed before age 20, and most were living in Chelyabinsk Oblast at the time of exposure (Table 1). The higher proportion of women reflects deaths of adult males due to military service, accidents, or early deaths from disease. Cohort members identified with Tartar/Bashkir ethnicity make up about one-third of those exposed in Chelyabinsk Oblast while virtually all of those exposed in Kurgan Oblast were identified as Slavs. Most cohort members were initially exposed between 1950 and 1952, the period of maximal releases and exposure rates.

Individual annual dose rates declined rapidly with time since initial exposure and distance from the release point with almost no additional dose accumulation by the end of follow-up. In order to give some idea of representative dose rates during the periods of the greatest exposure, Table 1 presents average annual dose rates up to the time at which an individual received half of their total cumulative dose. The population average of these summary rates is 66 mGy per year. The variation by ethnicity reflects the fact that the Tartar/Bashkir villages were, on average, closer to the release point than the Slav villages. Over 90% of the cumulative RBM doses were attributed to the radioactive strontium exposures.

At the end of this follow-up period, 20% of the cohort were alive, 58% had died, and 22% were lost to follow-up (Table 2). There were 71 non-leukaemic haematological malignancies. The proportion of cases identified solely from death certificates has declined over time, from about 25% for the years prior to 1990 to less than 10% over the last 17 years of follow-up. Of the 99 cases of leukaemia identified (Table 3), 52 were chronic leukaemias: 27 were CLL and 25 were chronic myeloid leukaemia (CML). In total, leukaemia diagnoses were histologically confirmed for 82% of the cases.

Table 4 summarises the distribution of cases and crude incidence rates for selected haematopoietic malignancy categories by cohort characteristics and lagged cumulative dose. The dose-category-specific rates provide some suggestion of a trend with increasing dose that will be examined below.

Averaging over all ages (results not shown), modelled baseline rates were lower for women than men for all leukaemias as a group and for leukaemias other than CLL. Furthermore, the nature of the age-dependence differed by sex for both of these outcome groupings. Baseline rates did not vary significantly with birth cohort or ethnicity for either of these groupings.

Using a linear dose response for all leukaemias as a group, the ERR increased by 0.12 per 100 mGy increase in TRDS-2009 dose (95% CI 0.04–0.25; P<0.001). This means that, among people exposed to 100 mGy, rates are estimated to be 12% greater than those for an unexposed population. With this dose–response model, we estimated that 32% of the 99 cases were associated with the radiation exposure. There was no evidence of a dose response for CLL (ERR per 100 mGy=0.01, 95% CI<0–0.12; P>0.5).
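The interpretation of an ERR per 100 mGy can be made explicit with a short calculation. The sketch below converts a linear ERR coefficient into a relative risk and into the fraction of risk attributable to radiation for a person at a single given dose; the function names are illustrative. The cohort-wide attributable proportion quoted in the text comes from the fitted model applied across the whole dose distribution and is not reproduced by this single-dose formula.

```python
def relative_risk(err_per_100_mgy, dose_mgy):
    """Relative risk under a linear ERR model: 1 + ERR at the given dose."""
    return 1.0 + err_per_100_mgy * dose_mgy / 100.0


def attributable_fraction(err_per_100_mgy, dose_mgy):
    """Share of the rate at a given dose that is radiation-associated: ERR / (1 + ERR)."""
    err = err_per_100_mgy * dose_mgy / 100.0
    return err / (1.0 + err)
```

With the all-leukaemia estimate of 0.12 per 100 mGy, a dose of 100 mGy corresponds to a relative risk of 1.12, and about 11% of the rate at that dose is radiation-associated (0.12/1.12).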

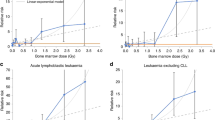

As there is no clear evidence of radiation effects on CLL rates in the literature or in these data, the remaining analyses focus on leukaemia other than CLL. For these leukaemias, the change in the ERR per 100 mGy in a linear dose–response model was 0.22 (95% CI 0.08–0.54; P<0.001). This fitted linear dose response is shown together with dose-category-specific ERR estimates in Figure 1. The addition of a quadratic term did not improve the model (P>0.5). A pure-quadratic dose–response model (ERR at 100 mGy 0.009; 95% CI 0.003–0.019; P<0.001) did not describe the data quite as well as the linear model. The right panel of Figure 1 shows a non-parametric smooth fit to the category-specific risk estimates. This smoothed curve, which does not rely on assumptions about the shape of the dose response, is similar to the linear fit over the low dose range. Although the data do not allow precise characterisation of the shape of the dose response, they are consistent with linearity over the low dose range. Under the linear dose–response model, it was estimated that almost half of the 72 non-CLL cases in the cohort were associated with the radiation exposure (Table 5). There was no indication that the effect of doses from strontium exposure differed from that of doses received from other exposures (not shown); however, as 90% of the RBM dose received by TRC members arose from strontium exposure (Table 1), there is limited power to detect differences in the magnitude or nature of the dose response between dose arising from strontium exposure and that arising from exposures to other radionuclides.

Figure 1: Dose response for leukaemias other than CLL. Both panels include non-parametric estimates of dose-category-specific excess relative risks (black points) and the fitted linear dose response (solid-grey curve). The left panel, which shows the full range of dose category means, also includes the fitted linear-quadratic (dashed grey curve) dose response. The data in the right panel are limited to the 0–1 Gy dose range. This panel includes a non-parametric fit to the category-specific ERR estimates (dark-grey dashed curve) and an indication of the uncertainty in this fit (light-grey dashed curves indicating plus and minus one standard error).

The current incidence data for leukaemia other than CLL were also analysed using a linear model based on the TRDS-2000 doses. In this analysis, the ERR per 100 mGy was estimated to be 0.51 (95% CI 0.17–1.5, P<0.001), which is more than twice the TRDS-2009-based estimate, but similar to the TRDS-2000 estimate in our earlier analysis (Krestinina et al, 2010).

There was no statistically significant modification of the radiation-associated risk of non-CLL by sex (F:M ratio 1.0, 95% CI 0.14–6.7; P>0.5), or ethnicity (Tartar/Bashkir:Slav ratio 1.4, 95% CI 0.58–4.4; P=0.4). In a model in which the ERR was allowed to vary with age at diagnosis (i.e. attained age), the ERR was estimated to increase in proportion to age to the power 0.45 (95% CI −1.1 to 3.0), but this effect was not statistically significant (P>0.5).

To test for variation in the non-CLL risk with age at exposure, we carried out analyses in which the ERR/Gy was allowed to differ for doses received in four age-at-exposure groups (0–19, 20–29, 30–39, and 40 or more). There was no evidence of heterogeneity in the risk across these categories (P=0.45).

To examine time-since-exposure effects, we considered separate ERR estimates for doses received 2–4, 5–9, 10–14, 15–19, and 20 or more years prior to diagnosis. There was no evidence of significant heterogeneity across the five intervals (P=0.45), but there was a weak suggestion (P=0.11) that doses received 2–10 years prior to diagnosis were associated with a greater risk (ERR per 100 mGy 0.50, 95% CI 0.12–1.39) than that associated with doses received 10 or more years prior to diagnosis (ERR per 100 mGy 0.17, 95% CI 0.05–0.46). This pattern, although not quite as marked, is similar to that seen for the non-CLL risks associated with external doses in the Mayak worker cohort (Shilnikova et al, 2003) in which ERR per 100 mGy associated with doses received 2–4 years prior to death was 0.7, whereas that for doses received 5 or more years prior to death was 0.045. We also carried out some analyses to examine whether there were differences in the pattern of the time-since-exposure risks as a function of age at initial exposure. We considered people who were under and over 10 years of age at entry and also people who were under or over 20 at entry. There was no evidence of statistically significant difference for either comparison with P-values of >0.5 for the under/over 10 comparison and P=0.25 for the under/over 20 comparison. Furthermore, the estimate of the dose-window-specific ERRs did not exhibit clear patterns of decrease (or increase) with time since the dose was received (details not shown). However, these comparisons should be interpreted with caution as the power of the tests is limited.

Subgroups of non-CLL

The only widely recognised leukaemia subtype other than CLL with enough cases (25) for a type-specific risk assessment is CML. There was a significant linear dose response (P=0.003) for CML with an ERR per 100 mGy estimate of 0.31 (95% CI 0.05–1.8). The risk for acute/subacute leukaemias as a group (41 cases, including 8 AML, 1 ALL, and 32 other cases, among them acute NOS and subacute leukaemias) also exhibited a significant dose dependence (P=0.002) with an estimate of the ERR at 100 mGy of 0.18 (95% CI 0.04–0.59).

Haematopoietic malignancies other than leukaemia

The baseline rates for NHL and MM increased substantially with birth year. The baseline rates for HL did not depend on either birth cohort or ethnicity.

For NHL (36 cases) and HL (18 cases), the ERR per 100 mGy estimates were <0 and not statistically significant (P>0.5) with upper 95% confidence bounds of 0.07 and 0.17, respectively. The estimated ERR per 100 mGy for MM (17 cases) was 0.01 with an upper 95% confidence bound of 0.35 (P>0.5).

Discussion

These TRC observations provide evidence of an association between environmental exposures to ionising radiation at low-to-moderate doses and low dose rates and the incidence of non-CLL leukaemia, consistent with a linear dose–response effect. Using the best available individual doses, we estimate that 46.9% of leukaemias other than CLL could be attributed to radiation. We found no evidence that CLL was associated with radiation exposure in this population.

We estimate that incidence rates for leukaemias other than CLL among those who received a dose of 100 mGy were 22% higher than those in comparable unexposed individuals. This increase is less than half of the 50% increase reported in our previous analysis of the TRC leukaemia data (Krestinina et al, 2010). This change reflects the increase in individual dose estimates for TRDS-2009 that was largely a consequence of the improved understanding of the nature and timing of the releases. The most relevant changes were an increased contribution of strontium89 during 1951, the period of maximum releases, and an increase in the RBM dose arising from caesium137. Other related factors (albeit to a lesser extent) were changes in the river transport model, revision of the strontium biokinetic models, and the increased individualisation of cohort member dose estimates.

Although the general conclusion of a statistically significant increase in the incidence of leukaemia other than CLL is consistent with the atomic bomb survivor (ABS) data, the patterns with age as well as the shape of the dose response seem to differ. In the ABS data, the ERR for leukaemias other than CLL varies significantly with both attained age and age at exposure. Specifically, the ERR tends to decrease with increasing attained age but within any attained age group, the ERR/Gy increases with age at exposure (Hsu et al, 2013). We did not find statistically significant variation in the ERR by either age at first exposure or attained age in the TRC. There was also evidence of significant curvature of the dose–response curve in the ABS data, which we do not observe in the TRC. However, this is not surprising given the relatively low doses and limited dose range in the TRC. In the ABS data (Hsu et al, 2013), for an individual at an attained age of 70 who was exposed at age 30, the linear component of the dose–response curve is 0.08 (per 100 mGy), which is somewhat lower than our overall ERR/100 mGy of 0.22. Although it may appear that the effect in the TRC is larger than that in the ABS, there are large uncertainties and the estimates are not statistically significantly different. As such, there is no indication that leukaemia risks in this low-to-moderate dose, low-dose-rate population differ from those in the acutely exposed ABS population.

Comparison with other radiation-exposed populations

Studies of leukaemia risk among other environmentally exposed populations, specifically the Chernobyl cleanup workers from Belarus, Russia and the Baltic countries (Kesminiene et al, 2008; Ivanov et al, 2012), and Ukraine (Zablotska et al, 2013), report increased risks for all leukaemias as a group. In the Chernobyl workers in Russia, an ERR of 0.50 at 100 mGy was reported for non-CLL incidence in the 10-year period following the accident (1986–1997) (Ivanov et al, 2012), and in the cleanup workers in Ukraine, an ERR of 0.22 at 100 mGy was reported for non-CLL incidence in the 20-year period following the accident (1986–2006) (Zablotska et al, 2013). These estimates lie within the confidence bounds of our estimate. One substantive difference is the reported elevated risk for CLL (ERR of 0.26 with 95% CI of 0.002–0.8 at 100 mGy) in the Chernobyl studies, which is not seen in the current cohort (Zablotska et al, 2013). Results from the Chernobyl studies reflect adults working within contaminated environments.

Studies in nuclear worker populations also provide risk information about low dose and dose rate exposures. The most recent estimates from the National Registry for Radiation Workers (Muirhead et al, 2009) report a statistically significant increase in non-CLL risk consistent with our results. A subsequent meta-analysis of 10 studies of protracted low-dose occupational and environmental exposures (including the previous estimate from our cohort) estimated a statistically significant dose–response association (ERR 0.19, CI (0.07–0.32) per 100 mGy) for leukaemia (excluding CLL) (Daniels and Schubauer-Berigan, 2011). This estimate would be lower if recalculated using the results of the analysis reported here.

Limitations and strengths of the study

There have been improvements in the quality and completeness of incidence data over time, and ongoing work to refine the dose estimates for members of the TRC. Nonetheless, limitations of the data should be acknowledged. Loss to follow-up of 22% of the TRC, due to migration from the catchment area (14.8% of the cohort) and unknown vital status at the end of follow-up (6.7% of the cohort), reduces the statistical power of the study. Cause of death is unknown for 9% of deceased cohort members. For the first decades of follow-up (1950–1970), there was also a greater possibility of case underascertainment and a lower level of diagnostic confirmation. Although these factors reduce the statistical power of the study, particularly for subtype analysis, they would not be expected to bias the dose–response relationship, as there was no clear relationship between any of these factors and dose. Although personal dose measurements are not available, the extensive individualised dose estimates do allow for modelling of the dose–response relationship.

The TRC is one of the few general population studies of protracted environmental radiation exposures. It provides information on low-to-moderate radiation exposures in males and females across a wide range of ages, which is of particular interest in an era of increasing diagnostic medical radiation exposures (Linet et al, 2012). Risk estimates from this study are also informative for purposes of occupational radiation protection (International Commission on Radiological Protection, 2003). Additional strengths include the long follow-up period (55 years), which enabled us to examine potential modification of the ERR across time-since-exposure windows, and detailed individualised residential histories, which allowed us to analyse only person-years and cases in the study area. Use of leukaemia incidence rather than mortality data increases the study power and data quality.

The present analysis utilises the best available dose estimates and shows an estimate of leukaemia risk that is approximately half that reported previously while remaining statistically significantly elevated. As such, the evidence for a chronic low-dose-rate radiation effect from this cohort for leukaemia risk remains solid.

Change history

26 November 2013

This paper was modified 12 months after initial publication to switch to Creative Commons licence terms, as noted at publication

References

Akleyev AV, Kossenko MM, Silkina LA, Degteva MO, Yachmenyov VA, Awa A, Akiyama M, Veremeyeva GA, Vozilova AV, Kyozumi S (1995) Health effects of radiation incidents in the southern Urals. Stem Cells 13 (Suppl 1): 58–68.

Cox DR, Hinckley DV (1974) Theoretical Statistics. Chapman Hall: New York.

Curtis RE, Boice JD Jr, Stovall M, Bernstein L, Holowaty E, Karjalainen S, Langmark F, Nasca PC, Schwartz AG, Schymura MJ, Storm HH, Toogood P, Moloney WC (1994) Relationship of leukemia risk to radiation dose following cancer of the uterine corpus. J Natl Cancer Inst 86: 1315–1324.

Daniels RD, Schubauer-Berigan MK (2011) A meta-analysis of leukaemia risk from protracted exposure to low-dose gamma radiation. Occup Environ Med 68: 457–464.

Degteva MO, Kozheurov VP, Tolstykh EI, Vorobiova MI, Anspaugh LR, Napier BA, Kovtun AN (2000b) The Techa River dosimetry system: methods for the reconstruction of internal dose. Health Phys 79: 24–35.

Degteva MO, Shagina NB, Tolstykh EI, Bougrov NG, Zalyapin VI, Anspaugh LR, Napier BA (2007) An approach to reduction of uncertainties in internal doses reconstructed for the Techa River population. Radiat Prot Dosimetry 127: 480–485.

Degteva MO, Shagina NB, Tolstykh EI, Vorobiova MI, Anspaugh LR, Napier BA (2009) Individual dose calculations with use of the Revised Techa River Dosimetry System TRDS-2009D. Final Report for Milestone 22. Chelyabinsk, Russia and Salt Lake City, Utah: Urals Research Center for Radiation Medicine and University of Utah.

Degteva MO, Shagina NB, Vorobiova MI, Anspaugh LR, Napier BA (2012) Reevaluation of waterborne releases of radioactive materials from the Mayak Production Association into the Techa River in 1949-1951. Health Phys 102: 25–38.

Degteva MO, Vorobiova MI, Kozheurov VP, Tolstykh EI, Vorobiova MI, Anspaugh LR, Napier B (2000a) Dose reconstruction system for the exposed population living along the Techa River. Health Phys 78: 542–554.

Degteva MO, Vorobiova MI, Tolstykh EI, Shagina NB, Shishkina EA, Anspaugh LR, Napier BA, Bougrov NG, Shved VA, Tokareva EE (2006) Development of an improved dose reconstruction system for the Techa River population affected by the operation of the Mayak Production Association. Radiat Res 166: 255–270.

Gilbert ES (2009) Ionising radiation and cancer risks: what have we learned from epidemiology? Int J Radiat Biol 85: 467–482.

Hsu WL, Preston DL, Sugiyama H, Funamoto S, Kodama K, Kimura A, Kamada N, Dohy H, Tomonaga M, Iwanaga M, Miyazaki Y, Cullings H, Suyama A, Ozasa K, Shore RE, Mabuchi K (2013) The incidence of leukemia, lymphoma, and multiple myeloma among atomic bomb survivors: 1950-2001. Radiat Res 179: 361–382.

International Commission on Radiological Protection (2003) ICRP publication 103: the 2007 recommendations of the International Commission on Radiological Protection. Ann ICRP 37: 1–332.

Ivanov VK, Tsyb AF, Khait SE, Kashcheev VV, Chekin SY, Maksioutov MA, Tumanov KA (2012) Leukemia incidence in the Russian cohort of Chernobyl emergency workers. Radiat Environ Biophys 51: 143–149.

Kendall G, Little MP, Wakeford R (2011) Numbers and proportions of leukemias in young people and adults induced by radiation of natural origin. Leuk Res 35: 1039–1043.

Kendall GM, Little MP, Wakeford R, Bunch KJ, Miles JC, Vincent TJ, Meara JR, Murphy MF (2013) A record-based case-control study of natural background radiation and the incidence of childhood leukaemia and other cancers in Great Britain during 1980-2006. Leukemia 27: 3–9.

Kesminiene A, Evrard AS, Ivanov VK, Malakhova IV, Kurtinaitis J, Stengrevics A, Tekkel M, Anspaugh LR, Bouville A, Chekin S, Chumak VV, Drozdovitch V, Gapanovich V, Golovanov I, Hubert P, Illichev SV, Khait SE, Kryuchkov VP, Maceika E, Maksyoutov M, Mirkhaidarov AK, Polyakov S, Shchukina N, Tenet V, Tserakhovich TI, Tsykalo A, Tukov AR, Cardis E (2008) Risk of hematological malignancies among Chernobyl liquidators. Radiat Res 170: 721–735.

Kossenko MM, Thomas TL, Akleyev AV, Krestinina LY, Startsev NV, Vyushkova OV, Zhidkova CM, Hoffman DA, Preston DL, Davis F, Ron E (2005) The Techa River Cohort: study design and follow-up methods. Radiat Res 164: 591–601.

Krestinina LY, Davis F, Ostroumova E, Epifanova S, Degteva M, Preston D, Akleyev A (2007) Solid cancer incidence and low-dose-rate radiation exposures in the Techa River cohort: 1956-2002. Int J Epidemiol 36: 1038–1046.

Krestinina LY, Preston DL, Ostroumova EV, Degteva MO, Ron E, Vyushkova OV, Startsev NV, Kossenko MM, Akleyev AV (2005) Protracted radiation exposure and cancer mortality in the Techa River Cohort. Radiat Res 164: 602–611.

Krestinina L, Preston DL, Davis FG, Epifanova S, Ostroumova E, Ron E, Akleyev A (2010) Leukemia incidence among people exposed to chronic radiation from the contaminated Techa River, 1953-2005. Radiat Environ Biophys 49: 195–201.

Linet MS, Slovis TL, Miller DL, Kleinerman R, Lee C, Rajaraman P, Berrington de Gonzalez A (2012) Cancer risks associated with external radiation from diagnostic imaging procedures. CA Cancer J Clin 62: 75–100.

Muirhead CR, O'Hagan JA, Haylock RG, Phillipson MA, Willcock T, Berridge GL, Zhang W (2009) Mortality and cancer incidence following occupational radiation exposure: third analysis of the National Registry for Radiation Workers. Br J Cancer 100 (1): 206–212.

Napier BA, Degteva MO, Shagina NB, Anspaugh LR (2013) Uncertainty analysis for the Techa River Dosimetry System. Med Radiol Radiat Saf 58 (1): 5–28.

Ostroumova E, Gagniere B, Laurier D, Gudkova N, Krestinina L, Verger P, Hubert P, Bard D, Akleyev A, Tirmarche M, Kossenko M (2006) Risk analysis of leukaemia incidence among people living along the Techa River: a nested case-control study. J Radiol Prot 26: 17–32.

Preston DL, Kusumi S, Tomonaga M, Izumi S, Ron E, Kuramoto A, Kamada N, Dohy H, Matsuo T, Nonaka H, Thompson DE, Soda M, Mabuchi K (1994) Cancer incidence in atomic bomb survivors. Part III. Leukemia, lymphoma and multiple myeloma, 1950-1987. Radiat Res 137: S68–S97. Erratum in: Radiat Res 139: 129.

Preston DL, Lubin JH, Pierce DA, McConney ME (1993) Epicure Users Guide. Hirosoft International Corporation: Seattle, Washington.

Schonfeld SJ, Krestinina LY, Epifanova S, Degteva MO, Akleyev AV, Preston DL (2013) Solid cancer mortality in the Techa River Cohort (1950-2007). Radiat Res 179: 183–189.

Shagina NB, Tolstykh EI, Degteva MO, Vorobiova MI, Anspaugh LR, Napier BA (2012b) Re-evaluation of radionuclide intakes for Techa River residents on the basis of revised source-term parameters. Health Phys 103: S96–S97.

Shagina NB, Tolstykh EI, Zalyapin VI, Degteva MO, Kozheurov VP, Tokareva EE, Anspaugh LR, Napier BA (2003) Evaluation of age and gender dependences of the rate of strontium elimination 25-45 years after intake: analysis of data from residents living along the Techa River. Radiat Res 159: 239–246.

Shagina NB, Vorobiova MI, Degteva MO, Peremyslova LM, Shishkina EA, Anspaugh LR, Napier BA (2012a) Reconstruction of the contamination of the Techa River in 1949-1951 as a result of releases from the "MAYAK" Production Association. Radiat Environ Biophys 51: 349–366.

Shilnikova NS, Preston DL, Ron E, Gilbert ES, Vassilenko EK, Romanov SA, Kuznetsova IS, Sokolnikov ME, Okatenko PV, Kreslov VV, Koshurnikova NA (2003) Cancer mortality risk among workers at the Mayak nuclear complex. Radiat Res 159: 787–798.

Tolstykh EI, Degteva MO, Peremyslova LM, Shagina NB, Shishkina EA, Krivoshchapov VA, Anspaugh LR, Napier BA (2011) Reconstruction of long-lived radionuclide intakes for Techa riverside residents: strontium-90. Health Phys 101: 28–47.

Wakeford R, Tawn EJ (2010) The meaning of low dose and low dose-rate. J Radiol Prot 30 (1): 1–3.

Zablotska LB, Bazyka D, Lubin JH, Gudzenko N, Little MP, Hatch M, Finch S, Dyagil I, Reiss RF, Chumak VV, Bouville A, Drozdovitch V, Kryuchkov VP, Golovanov I, Bakhanova E, Babkina N, Lubarets T, Bebeshko V, Romanenko A, Mabuchi K (2013) Radiation and the risk of chronic lymphocytic and other leukemias among Chornobyl cleanup workers. Environ Health Perspect 121: 59–65.

Acknowledgements

This study is based on work conducted by URCRM researchers over more than five decades, involving the collection, storage, and processing of information and clinical results for patients with haematological diseases. Our appreciation is extended to the following departments, directors, and their staff: the Regional Center of the National Radiation-Epidemiology Registry, directed by NV Startsev; the Epidemiology Laboratory, initially directed by MM Kossenko and currently directed by L Yu Krestinina; the Biophysics Laboratory, directed by MO Degteva; and the Clinical In-patient Department, initially managed by Dr VI Kiryushkin and later by Dr MM Kossenko. We are grateful for the close collaboration of the Oncology Dispensaries of Chelyabinsk and Kurgan Oblasts. We would like to express our gratitude to our late colleague and friend Elaine Ron (NCI) for the guidance and assistance she provided to us during the many years of our collaborative work. We are grateful to all colleagues who have contributed to the development and continuation of our international projects, including Mr Barrett Fountos (DOE) and Dr Martha Linet (NCI). We appreciate the thoughtful comments from the manuscript reviewers. This project is funded by the US Department of Energy, Office of Domestic and International Health Studies, Office of Health, Safety and Security, Russian Health Studies Program at the URCRM and UIC (DE-FC02-07HS0701) with scientific review by the Joint Coordinating Committee for Radiation Effects Research (a bilateral government committee representing agencies from the United States and the Russian Federation). This project incorporates data collected under the Intramural Research Program of the National Cancer Institute, National Institutes of Health (HHSN261200900089C). The Federal Department of the Russian Ministry of Health has provided support for all aspects of this study.

Ethics declarations

Competing interests

The authors declare no conflict of interest.

Additional information

This work is published under the standard license to publish agreement. After 12 months the work will become freely available and the license terms will switch to a Creative Commons Attribution-NonCommercial-Share Alike 3.0 Unported License.

Rights and permissions

From twelve months after its original publication, this work is licensed under the Creative Commons Attribution-NonCommercial-Share Alike 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/3.0/

About this article

Cite this article

Krestinina, L., Davis, F., Schonfeld, S. et al. Leukaemia incidence in the Techa River Cohort: 1953–2007. Br J Cancer 109, 2886–2893 (2013). https://doi.org/10.1038/bjc.2013.614

DOI: https://doi.org/10.1038/bjc.2013.614
