Abstract

Background:

Recorded incidence of childhood acute lymphoblastic leukaemia tends to be lower in poorer communities. A ‘pre-emptive infection hypothesis’ proposes that some children with leukaemia die from infection before leukaemia is diagnosed. Various blood abnormalities can occur in untreated leukaemia.

Methods:

Logistic regression was used to compare pre-treatment blood counts among children aged 1–13 years at recruitment to national clinical trials for acute lymphoblastic leukaemia during 1980–2002 (N=5601), grouped by address at diagnosis within Great Britain into quintiles of the 1991 Carstairs deprivation index. Children combining severe neutropenia (risk of serious infection) with relatively normal haemoglobin and platelet counts (lack of pallor and bleeding) were postulated to be at risk of dying from infection without leukaemia being suspected. A deficit of these children among diagnosed patients from poorer communities was predicted.

Results:

As predicted, there was a deficit of children at risk of non-diagnosis (two-sided P for trend=0.004; N=2009), and an excess of children with pallor (P for trend=0.045; N=5535) and bleeding (P for trend=0.036; N=5541), among cases from poorer communities.

Conclusion:

Under-diagnosis in poorer communities may have contributed to socioeconomic variation in recorded childhood acute lymphoblastic leukaemia incidence within Great Britain, and elsewhere. Implications for clinical practice and epidemiological studies should be considered.

Main

Record-based studies have reported higher childhood leukaemia incidence rates in relatively affluent communities within many different countries (Borugian et al, 2005; Poole et al, 2006; Adelman et al, 2007), and internationally (Feltbower et al, 2004). Within England and Wales, the leukaemia rate for the most deprived fifth of the child population was consistently about 90% of the rate for the most affluent fifth, in each of the decades centred on the census years 1981, 1991, and 2001 (Kroll et al, 2011b). The apparent socioeconomic gradient occurs both for childhood leukaemia as a whole, and for the lymphoid subtype (∼80% of childhood leukaemia in developed countries), which in children consists almost entirely of acute lymphoblastic leukaemia. The socioeconomic gradient might be related to either of the two well-known aetiological hypotheses linking risks of childhood leukaemia with unusual patterns of infection: ‘delayed infection’ (Greaves, 2006) and ‘population mixing’ (Kinlen, 2011). However, under-diagnosis of leukaemia in poorer children, as proposed by a ‘pre-emptive infection hypothesis’ (Stewart, 1961; Greaves et al, 1985; Doll, 1989), is another possible explanation.

Acute leukaemia in children is not always easy to diagnose, as the clinical signs can be ‘vague and non-specific’ (Mitchell et al, 2009a). Neutropenia frequently occurs in untreated leukaemia, and predisposes to bacterial and fungal infections, which can lead to death from pneumonia, septicaemia, or meningitis (Baehner, 1996). Bone marrow examination is necessary for definitive diagnosis of leukaemia, but is not routinely performed in cases of severe infection (Gillespie et al, 2004). As neutropenia is a well-recognised sign of severe infection, some children suffering from leukaemia might die from infection without leukaemia ever being suspected. Within Great Britain, this might have happened more frequently in poorer communities, where primary health care provision has been less generous and childhood infection mortality rates have been higher (Reading, 1997; Health Protection Agency, 2005). If so, the recorded incidence of childhood leukaemia would be lower in poorer communities. For acute lymphoblastic leukaemia, the effect might be clearer in B-precursor than T-precursor disease, because obvious enlargement of the lymph nodes is less frequent in B-precursor cases (Greaves et al, 1985).

Untreated acute lymphoblastic leukaemia can cause various blood abnormalities, in almost any combination. Unlike neutropenia, low levels of haemoglobin and platelets produce obvious clinical signs that strongly suggest leukaemia: pallor and bleeding, respectively. We postulated that children who combined severe neutropenia with relatively normal haemoglobin and platelet counts would be at risk of dying from infection without leukaemia being suspected, and predicted that these children would be under-represented among poorer patients.

Materials and methods

Using records from four consecutive clinical trials sponsored by the Medical Research Council (Eden et al, 2000; Mitchell et al, 2009b), pre-treatment haemoglobin, platelet, and (to September 1990) neutrophil counts were obtained for children who had been diagnosed with acute lymphoblastic leukaemia in the United Kingdom during September 1980 to November 2002. The study was restricted to the trial participants who were resident in Great Britain and diagnosed between the first and the fourteenth birthday (ages 1–13 years), as trial participation rates were relatively low at ages <1 and 14 years, and addresses were not available for older recruits, or those resident in Northern Ireland.

Address at diagnosis was obtained by linking the trial data to the National Registry of Childhood Tumours, which records cancer diagnosed since 1962 in residents of Great Britain under 15 years old (Kroll et al, 2011a). Cases were grouped according to the deprivation category of the 1991 census ward containing the postcode of the address at diagnosis (1=affluent, … 5=deprived; Office for National Statistics, 2010), using national child-population-weighted quintiles of the 1991 Carstairs deprivation index (Carstairs and Morris, 1989). Cases were classified by immunophenotype, and the T-precursor and unknown subgroups were combined for analysis.

Using the pre-treatment blood counts, each patient was classified as being at risk or not at risk of pallor, of bleeding, and of sepsis at diagnosis of leukaemia. Following conventional clinical criteria, risks of bleeding and sepsis were defined, respectively, as platelets <20 × 10⁹ l⁻¹ (Gaydos et al, 1962) and neutrophils <0.5 × 10⁹ l⁻¹ (Bodey et al, 1966); risk of pallor (very severe anaemia) was defined as haemoglobin <5 g dl⁻¹. Cases at risk of non-diagnosis were defined as those at risk of sepsis without pallor or bleeding (i.e., the combination of neutrophils <0.5 × 10⁹ l⁻¹, platelets ⩾20 × 10⁹ l⁻¹, and haemoglobin ⩾5 g dl⁻¹).
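The classification rules can be written as a short function. The Python sketch below is illustrative only: the function name, argument names, and the treatment of a missing neutrophil count are assumptions and are not taken from the study's analysis code. Only the three thresholds come from the text.

```python
from typing import Optional

# Thresholds as stated in the text.
# Units: haemoglobin in g/dl; platelets and neutrophils in 10^9 per litre.
HB_PALLOR = 5.0
PLATELETS_BLEEDING = 20.0
NEUTROPHILS_SEPSIS = 0.5


def classify_risks(haemoglobin: float, platelets: float,
                   neutrophils: Optional[float]) -> dict:
    """Classify one patient's pre-treatment blood counts (hypothetical helper).

    If the neutrophil count is missing (None), risk of sepsis and risk of
    non-diagnosis cannot be determined and are returned as None. This
    handling is an assumption made for the sketch.
    """
    pallor = haemoglobin < HB_PALLOR
    bleeding = platelets < PLATELETS_BLEEDING
    if neutrophils is None:
        sepsis = None
        non_diagnosis = None
    else:
        sepsis = neutrophils < NEUTROPHILS_SEPSIS
        # At risk of non-diagnosis: sepsis risk without pallor or bleeding.
        non_diagnosis = sepsis and not pallor and not bleeding
    return {
        "pallor": pallor,
        "bleeding": bleeding,
        "sepsis": sepsis,
        "non_diagnosis": non_diagnosis,
    }
```

For example, a child with haemoglobin 9 g dl⁻¹, platelets 150 × 10⁹ l⁻¹, and neutrophils 0.2 × 10⁹ l⁻¹ would be classified as at risk of non-diagnosis, whereas the same child with haemoglobin 4 g dl⁻¹ would not.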

Logistic regression was used to estimate associations between deprivation (in quintile categories) and the odds of having been at risk of pallor, bleeding, sepsis, or non-diagnosis (each treated as a dichotomous variable), in successive univariate analyses. Terms representing effects of individual trial periods, and their interactions with deprivation, were included in preliminary models, but dropped because they were not statistically significant. Goodness-of-fit was assessed by Pearson’s χ² test and found acceptable. Models were compared by the likelihood ratio test. All statistical tests were two-sided, at the 5% significance level.
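A trend analysis of this kind can be sketched as a logistic regression with the deprivation quintile entered as a linear score. The Python example below is a minimal sketch under stated assumptions: the counts are synthetic (constructed from an assumed odds ratio of 0.90 per quintile, not study data), the function name is hypothetical, and the fitting routine is a plain Newton-Raphson implementation rather than whatever statistical package was actually used.

```python
import math


def fit_logistic_trend(scores, events, totals, iterations=25):
    """Fit logit(p) = a + b * score to grouped binomial data.

    Uses Newton-Raphson on the binomial log-likelihood and returns
    (a, b, standard error of b).
    """
    a = b = 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y, n in zip(scores, events, totals):
            p = 1.0 / (1.0 + math.exp(-(a + b * x)))
            resid = y - n * p          # contribution to the score vector
            w = n * p * (1.0 - p)      # contribution to the information matrix
            g0 += resid
            g1 += resid * x
            h00 += w
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        # Newton step: solve the 2x2 system (information matrix) * delta = score.
        a += (h11 * g0 - h01 * g1) / det
        b += (h00 * g1 - h01 * g0) / det
    # Variance of b from the inverse information matrix at convergence.
    se_b = math.sqrt(h00 / det)
    return a, b, se_b


# SYNTHETIC illustration only: expected counts generated from an assumed
# intercept of -1.0 and an assumed odds ratio of 0.90 per quintile.
quintiles = [1, 2, 3, 4, 5]
totals = [400.0] * 5
true_a, true_b = -1.0, math.log(0.90)
events = [n / (1.0 + math.exp(-(true_a + true_b * q)))
          for q, n in zip(quintiles, totals)]

a_hat, b_hat, se_b = fit_logistic_trend(quintiles, events, totals)
odds_ratio_per_quintile = math.exp(b_hat)
ci = (math.exp(b_hat - 1.96 * se_b), math.exp(b_hat + 1.96 * se_b))
```

Because the synthetic counts are exact expected values under the assumed model, the fit recovers the assumed odds ratio; real data would of course include sampling variation.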

Results

After excluding 27 registered cases with incomplete address information, 5601 cases were eligible for analysis. These comprised 79% of children aged 1–13 years who were resident in Great Britain and diagnosed with acute lymphoblastic leukaemia during the study period. Pre-treatment haemoglobin and platelet counts were recorded in all four trials, whereas neutrophil counts were recorded in the two earlier trials only. Hence, classification according to risk of non-diagnosis of leukaemia was possible for 2009 cases. The distributions of cases by level of deprivation, age at diagnosis, cell type, and available blood counts were similar in all trials (Table 1).

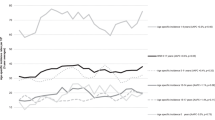

As predicted, there was a deficit of children at risk of non-diagnosis among cases from more deprived communities (Table 2). The odds ratio for risk of non-diagnosis per quintile of deprivation was 0.90 (95% confidence interval 0.84–0.97; P for trend=0.004; N=2009); comparing the most deprived fifth of the population with the most affluent fifth, the odds ratio was 0.68 (0.48–0.96). The patterns observed separately for pallor (P=0.045; N=5535), bleeding (P=0.036; N=5541), and sepsis (P=0.083; N=2027) were weaker, but each trend went in the direction expected: odds of pallor and bleeding increased with deprivation, whereas odds of sepsis decreased. Similar trends were evident for B-precursor cases separately (P for trend=0.002; N=1728) but not for other/unspecified cases (P for trend=0.894; N=281). Restricting the analyses for pallor and bleeding to the earlier trials reduced the statistical significance but did not change the directions of the associations (not shown).
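The reported figures can be checked for internal consistency with a short calculation. The Python sketch below assumes that the confidence interval is a symmetric Wald interval on the log odds scale, which the text does not state, and it works from the rounded published values, so the agreement can only be approximate. The function name is hypothetical.

```python
import math


def wald_z_and_p(odds_ratio, lower, upper, z_crit=1.959964):
    """Recover an approximate z statistic and two-sided P value from an
    odds ratio and its 95% confidence interval (Wald assumption)."""
    se = (math.log(upper) - math.log(lower)) / (2.0 * z_crit)
    z = math.log(odds_ratio) / se
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal tail area
    return z, p


# Per-quintile odds ratio and interval as reported in the text.
z, p = wald_z_and_p(0.90, 0.84, 0.97)

# Odds ratio implied by the linear trend across four quintile steps
# (most affluent fifth to most deprived fifth).
implied_extreme_or = 0.90 ** 4
```

Under these assumptions the recovered P value is about 0.004, matching the reported trend test, and the trend implies an odds ratio of about 0.66 between the extreme fifths, close to the reported 0.68.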

Discussion

For acute lymphoblastic leukaemia, these findings are consistent with the ‘pre-emptive infection hypothesis’, which proposes that some children with leukaemia die from infection without leukaemia being suspected. Among diagnosed cases from poorer communities, there was an excess of children with blood counts implying obvious clinical signs of leukaemia (pallor and bleeding) and a deficit of children with blood counts implying risk of dying from infection without diagnosis of leukaemia (sepsis without pallor or bleeding). As predicted, similar results were obtained for the B-precursor subgroup alone, but not for the other/unspecified subgroup consisting mainly of T-precursor cases, in which obvious enlargement of the lymph nodes is typically more frequent.

The results must be interpreted with caution. As the study was restricted to children with acute lymphoblastic leukaemia, the findings cannot be generalised to those with myeloid or mature lymphoid leukaemia. The main results are limited to the two clinical trials that recruited patients during 1980–1990, because neutrophil counts were not available for patients enrolled in the later trials: consistent results were, however, obtained for clinical signs in patients from all four trials up to 2002. The blood count data were derived from paper forms that may not always have been filled in correctly, and there may have been inaccuracies in the immunophenotype data, particularly for cases in the earlier two trials: nevertheless, there is no reason to suspect systematic error in the blood counts, and the associations were evident when all cases were included, regardless of immunophenotype. Finally, the diagnostic process includes safeguards, and blasts should have been recognised in the peripheral blood, or bone marrow abnormalities detected at autopsy – but, by definition, there would be no record of any cases that were missed.

Under-diagnosis may have contributed to the decreased leukaemia incidence in poorer children that has been reported within many different countries, and internationally. Other explanations might include rate calculation artefacts, registration bias, or a real increase in risk caused by some factor associated with higher socioeconomic status. For the cited comparison within England and Wales, artefact caused by numerator/denominator discrepancy seems unlikely, as rates were calculated from registry data for three separate decades, each centred on a census year, using appropriate census populations and census-specific deprivation indices (Kroll et al, 2011b); and a detailed study of cases diagnosed during 2003–2004 found very little evidence of socioeconomic variation in completeness of registration (Kroll et al, 2011a). The ‘delayed infection’ (Greaves, 2006) and ‘population mixing’ (Kinlen, 2011) hypotheses both propose that exposure to infection triggers leukaemia in susceptible children; in particular, the ‘delayed infection’ hypothesis suggests that protection from infection in infancy is a predisposing factor for common (B-precursor) acute lymphoblastic leukaemia. Either or both of these hypotheses could explain a reduction in childhood leukaemia rates in poorer communities, on the assumption of greater exposure of infants to infection and/or reduced population mobility in poorer communities (Dockerty et al, 2001; Stiller et al, 2008). Conversely, under-diagnosis caused by ‘pre-emptive infection’ in poorer communities might explain some of the existing epidemiological evidence for associations of higher childhood leukaemia risk with delayed infection, population mixing, or other factors linked to higher socioeconomic status. However, no explanation other than ‘pre-emptive infection’ seems likely to account for the specific patterns of blood abnormality observed in this study.

If confirmed with recent data, the findings would be relevant to future clinical practice. The apparent stability of the results over time is consistent with the persistence of the socioeconomic differential in England and Wales up to 1996–2005. However, the data relating to sepsis were available only for a limited time period (1980–1990), and the whole study period was relatively short (1980–2002); the analysis cannot be extended to more recent years, as the relevant national clinical trials have not collected pre-treatment blood counts in detail since 2002. Nevertheless, other approaches are possible. For example, previous studies from the United Kingdom have documented the presentation of childhood cancer in primary care by interviewing parents (Dixon-Woods et al, 2001), or examining the General Practice Research Database (Dommett et al, 2012); either of these sources, or Hospital Episode Statistics, might be used to investigate variation in response to signs of childhood leukaemia.

In conclusion, these results support the suggestion that childhood acute lymphoblastic leukaemia may have been under-diagnosed in poorer communities within Great Britain, and elsewhere. Potential implications for epidemiological studies and clinical practice should be considered.

Change history

23 January 2013

This paper was modified 12 months after initial publication to switch to Creative Commons licence terms, as noted at publication.

References

Adelman AS, Groves FD, O’Rourke K, Sinha D, Hulsey TC, Lawson AB, Wartenberg D, Hoel DG (2007) Residential mobility and risk of childhood acute lymphoblastic leukaemia: an ecological study. Br J Cancer 97: 140–144

Baehner RL (1996) Neutropenia. In Nelson Textbook of Pediatrics, WE Nelson, RE Behrman, RM Kliegman, AM Arvin (eds), 15th edn. W.B. Saunders: Philadelphia

Bodey GP, Buckley M, Sathe YS, Freireich EJ (1966) Quantitative relationships between circulating leukocytes and infection in patients with acute leukemia. Ann Intern Med 64(2): 328–340

Borugian MJ, Spinelli JJ, Mezei G, Wilkins R, Abanto Z, McBride ML (2005) Childhood leukemia and socioeconomic status in Canada. Epidemiology 16(4): 526–531

Carstairs V, Morris R (1989) Deprivation: explaining differences in mortality between Scotland and England and Wales. BMJ 299: 886–889

Dixon-Woods M, Findlay M, Young B, Cox H, Heney D (2001) Parents’ accounts of obtaining a diagnosis of childhood cancer. Lancet 357(9257): 670–674

Dockerty JD, Draper G, Vincent T, Rowan SD, Bunch KJ (2001) Case-control study of parental age, parity and socioeconomic level in relation to childhood cancers. Int J Epidemiol 30(6): 1428–1437

Doll R (1989) The epidemiology of childhood leukaemia. J R Stat Soc Series A 152(3): 341–351

Dommett RM, Redaniel MT, Stevens MCG, Hamilton W, Martin RM (2012) Features of childhood cancer in primary care: a population-based nested case–control study. Br J Cancer 106(5): 982–987

Eden OB, Harrison G, Richards S, Lilleyman JS, Bailey CC, Chessells JM, Hann IM, Hill FGH, Gibson BES (2000) Long-term follow-up of the United Kingdom Medical Research Council protocols for childhood acute lymphoblastic leukaemia, 1980–1997. Leukemia 14(12): 2307–2320

Feltbower RG, McKinney PA, Greaves MF, Parslow RC, Bodansky HJ (2004) International parallels in leukaemia and diabetes epidemiology. Arch Dis Child 89(1): 54–56

Gaydos LA, Freireich EJ, Mantel N (1962) The quantitative relation between platelet count and hemorrhage in patients with acute leukemia. N Engl J Med 266: 905–909

Gillespie SH, Sonnex C, Carne C (2004) Infectious disease. In Medicine, JS Axford, CA O’Callaghan (eds), 2nd edn. Blackwell Science: Oxford

Greaves M (2006) Infection, immune responses and the aetiology of childhood leukaemia. Nat Rev Cancer 6(3): 193–203

Greaves MF, Pegram SM, Chan LC (1985) Collaborative group study of the epidemiology of acute lymphoblastic leukaemia subtypes: background and first report. Leuk Res 9(6): 715–733

Health Protection Agency (2005) Health Protection in the 21st Century: Understanding the Burden of Disease; Preparing for the Future http://www.hpa.org.uk/publications

Kinlen L (2011) Childhood leukaemia, nuclear sites, and population mixing. Br J Cancer 104(1): 12–18

Kroll ME, Murphy MFG, Carpenter LM, Stiller CA (2011a) Childhood cancer registration in Britain: capture-recapture estimates of completeness of ascertainment. Br J Cancer 104(7): 1227–1233

Kroll ME, Stiller CA, Murphy MFG, Carpenter LM (2011b) Childhood leukaemia and socioeconomic status in England and Wales 1976–2005: evidence of higher incidence in relatively affluent communities persists over time. Br J Cancer 105(11): 1783–1787

Mitchell C, Hall G, Clarke RT (2009a) Acute leukaemia in children: diagnosis and management. BMJ 338: 1491–1495

Mitchell C, Payne J, Wade R, Vora A, Kinsey S, Richards S, Eden T (2009b) The impact of risk stratification by early bone-marrow response in childhood lymphoblastic leukaemia: results from the United Kingdom Medical Research Council trial ALL97 and ALL97/99. Br J Haematol 146(4): 424–436

Office for National Statistics (2010) Postcode Directories. ESRC Census Programme. Census Dissemination Unit, Mimas (University of Manchester)

Poole C, Greenland S, Luetters C, Kelsey JL, Mezei G (2006) Socioeconomic status and childhood leukaemia: a review. Int J Epidemiol 35(2): 370–384

Reading R (1997) Social disadvantage and infection in childhood. Sociol Health Ill 19(4): 395–414

Stewart A (1961) Aetiology of childhood malignancies: congenitally determined leukaemias. BMJ 1(5224): 452–460

Stiller CA, Kroll ME, Boyle PJ, Feng Z (2008) Population mixing, socioeconomic status and incidence of childhood acute lymphoblastic leukaemia in England and Wales: analysis by census ward. Br J Cancer 98: 1006–1011

Acknowledgements

We are grateful to colleagues at the Childhood Cancer Research Group for help with this study. We thank the Medical Research Council for the use of data from the UK childhood leukaemia trials. We thank the regional and national cancer registries of England, Wales, and Scotland, regional childhood tumour registries, the Children’s Cancer and Leukaemia Group, the Clinical Trial Services Unit, and the NHS Central Registers for providing data to the National Registry of Childhood Tumours. The Childhood Cancer Research Group receives funding from the Department of Health, the Scottish Government, and CHILDREN with CANCER UK.

Disclaimer

The views expressed here are those of the authors and not necessarily those of the Department of Health, the Scottish Government, or CHILDREN with CANCER UK.

Rights and permissions

From twelve months after its original publication, this work is licensed under the Creative Commons Attribution-NonCommercial-Share Alike 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/3.0/

About this article

Cite this article

Kroll, M., Stiller, C., Richards, S. et al. Evidence for under-diagnosis of childhood acute lymphoblastic leukaemia in poorer communities within Great Britain. Br J Cancer 106, 1556–1559 (2012). https://doi.org/10.1038/bjc.2012.102

DOI: https://doi.org/10.1038/bjc.2012.102
