Abstract

Purpose To measure the effects of cataract type, severity, and location on presenting and best-corrected visual acuity, contrast sensitivity, and glare disability.

Methods In all, 3654 (82.4% participation rate) eligible noninstitutionalised residents aged 49 years or older, living in two postcode areas of the Blue Mountains, Australia, received detailed eye examinations including visual acuity, contrast sensitivity, and glare disability testing. Data from right eyes were analysed using multiple regression modelling.

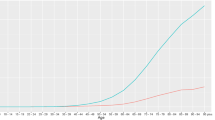

Results The effect of age on visual acuity and contrast sensitivity became evident only in persons aged 60 years or older. Cataract severity was inversely related to visual acuity and contrast sensitivity. Late posterior subcapsular cataract caused the greatest reduction in visual acuity. Early-grade cataract caused significant reductions in contrast sensitivity at intermediate and high spatial frequencies, whereas late-grade cataract reduced contrast sensitivity across all spatial frequencies. The study had insufficient power to detect consistent significant effects of cataract on glare disability or of cortical cataract location on visual function.

Conclusions Age of 60 years or older, cataract type, and cataract severity were the principal determinants of visual acuity and contrast sensitivity in this study. Axial and superotemporally located cortical cataract had the greatest effect on visual function tests.


Introduction

Cataract is the leading cause of blindness worldwide and has been identified as the main cause of mild to moderate visual impairment in Australia.1 Cataract is known to increase intraocular light scatter, thereby reducing retinal image contrast.2, 3 Cataract surgery is indicated when visual function is reduced to a level that interferes with a person's daily activities. Before recommending cataract surgery, ophthalmologists currently rely on a combination of subjective visual impairment, objective clinical measurements, and informed consent.4, 5, 6 There is considerable variation, however, in ophthalmologists' use of the available visual function tests.7

Traditional visual acuity testing uses broadband optotypes to measure spatial resolution at a single contrast level, which is inadequate for assessing the full range of visual performance. Although contrast sensitivity and glare sensitivity are both related to visual acuity, cataract may cause independent losses of contrast and glare sensitivity that are not identified by simple visual acuity testing.8, 9, 10, 11, 12, 13 This becomes clinically evident in persons with early cataract who complain mainly of reduced visual function, such as poor face recognition or night-time glare, despite well-maintained visual acuity scores.14

An inferonasal predilection for cortical opacities has been identified in several studies, including the Blue Mountains Eye Study (BMES),1, 15 the Beaver Dam Eye Study (BDES),16 and the Watermen study;17 however, there has been little research into the effect of cataract location on visual function and its tests.

Many studies have examined the use of visual acuity, contrast sensitivity, and glare disability for predicting and assessing cataract severity, the majority using case–control designs.11, 14, 18, 19, 20, 21, 22, 23, 24, 25, 26 We present a population-based analysis to clarify whether cataract type and severity, or cortical cataract location, are important determinants of visual function test results, and whether these tests are useful for quantifying the loss of visual function in early cataract when considering surgical correction.

Materials and methods

Study population

The BMES is a population-based survey of vision and common eye diseases in an urban elderly population in the Blue Mountains area, west of Sydney, Australia. Between January 1992 and January 1994, 3654 (82.4%) eligible noninstitutionalised residents aged 49 years and over living in two postcode areas in the Blue Mountains participated. Of the eligible residents, 501 (11.3%) refused, 68 (1.5%) had died, and 210 (4.8%) had moved from the area. Assessment included an interviewer-administered health and lifestyle questionnaire, followed by a detailed eye examination.

Procedures

Monocular presenting visual acuity was measured with current glasses, if worn, using an 85 cd/m² retroilluminated logMAR chart read at 2.4 m. Visual acuity was recorded as the number of letters read correctly, from 0 to 70. Best-corrected visual acuity was measured after subjective refraction, following protocols used in the BDES modification of the Early Treatment Diabetic Retinopathy Study (ETDRS).

Contrast sensitivity was measured only in participants living in the first postcode area, using the Vectorvision CSV-1000 chart (Vectorvision, Dayton, OH, USA). Participants were tested monocularly while wearing their best refractive correction. Contrast sensitivity was graded as one of 10 levels at each of the four spatial frequencies tested (3, 6, 12, and 18 cycles/degree): participants unable to see any of the test strip scored –1, those unable to see the highest contrast bar scored 0, and those able to see one of the eight bars of decreasing contrast scored between 1 and 8. These raw grades were converted into log contrast sensitivity scores using definitions provided by Vectorvision. The distribution of participants across contrast sensitivity scores and the Vectorvision conversion table are summarised in Table 1.
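The 10-level raw grading scheme can be written as a small function, which may help when checking how a response maps to a grade. This is a hypothetical sketch: the function name and the way a response is encoded (whether the test strip was seen, and the number of the faintest bar seen, with bar 1 taken to be the highest contrast bar) are our assumptions, not the study protocol. Conversion to log contrast sensitivity is deliberately left out, because it depends on the Vectorvision table in Table 1, whose values are not reproduced here.

```python
from typing import Optional


def csv1000_raw_grade(strip_seen: bool, faintest_bar_seen: Optional[int]) -> int:
    """Return the raw contrast sensitivity grade (-1 to 8) at one spatial frequency.

    Hypothetical encoding (an assumption, not from the study protocol):
      strip_seen        -- whether any part of the test strip was seen
      faintest_bar_seen -- number of the faintest bar seen (1 = highest contrast,
                           8 = lowest contrast), or None if no bar was seen
    """
    if not strip_seen:
        return -1  # unable to see any of the test strip
    if faintest_bar_seen is None:
        return 0  # strip seen, but not even the highest contrast bar
    if not 1 <= faintest_bar_seen <= 8:
        raise ValueError("faintest_bar_seen must be between 1 and 8")
    return faintest_bar_seen  # 1..8 for the eight bars of decreasing contrast


# Example: a participant who sees bars 1 to 5 at one spatial frequency.
print(csv1000_raw_grade(True, 5))
```

Together the three branches yield exactly 10 possible grades (-1, 0, and 1 to 8), matching the number of levels described above.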

Glare disability was calculated as the difference between log contrast sensitivity scores measured in the absence and in the presence of a glare source, a handheld Brightness Acuity Tester (Mentor, Norwell, MA, USA) set at 342.6 cd/m² (medium setting), while the participant read the contrast sensitivity chart as described above.
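As a minimal sketch, glare disability at each spatial frequency is the log contrast sensitivity without glare minus the log contrast sensitivity with the glare source on, so positive values indicate a loss of contrast sensitivity under glare. The function name, the dictionary layout keyed by spatial frequency, and the example values are illustrative assumptions, not study data. A frequency with either measurement missing is returned as None, which mirrors how incomplete tests shrink the sample available for analysis.

```python
from typing import Dict, Optional

SPATIAL_FREQUENCIES_CPD = (3, 6, 12, 18)


def glare_disability(
    log_cs_no_glare: Dict[int, Optional[float]],
    log_cs_with_glare: Dict[int, Optional[float]],
) -> Dict[int, Optional[float]]:
    """Glare disability = log CS without glare - log CS with glare, per frequency.

    Positive values mean contrast sensitivity fell when the glare source was on.
    A frequency with either measurement missing yields None.
    """
    result: Dict[int, Optional[float]] = {}
    for cpd in SPATIAL_FREQUENCIES_CPD:
        baseline = log_cs_no_glare.get(cpd)
        with_glare = log_cs_with_glare.get(cpd)
        if baseline is None or with_glare is None:
            result[cpd] = None
        else:
            result[cpd] = baseline - with_glare
    return result


# Hypothetical log contrast sensitivity values for one eye (not study data).
no_glare = {3: 1.63, 6: 1.84, 12: 1.40, 18: 0.96}
glare_on = {3: 1.49, 6: 1.70, 12: 1.25, 18: None}
print(glare_disability(no_glare, glare_on))
```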

Three principal cataract types were identified: nuclear, cortical, and posterior subcapsular (PSC). Two masked graders assessed these from lens photographs using the Wisconsin grading system, closely following the protocol developed for the BDES.1 Good inter- and intragrader reproducibility was achieved for grading of all three cataract types.27

Slit-lamp photographs taken with a Topcon SL-7E slit-lamp camera (Topcon Optical Co., Tokyo, Japan) were used for nuclear cataract grading. Because of an intermittent camera malfunction, only 68% of participants had reliable photographs in at least one eye for nuclear grading. Participants with missing photographs showed some minor, though statistically significant, differences from those with photographs.1 The presence and severity of nuclear cataract were graded into five levels with reference to four Wisconsin standard photographs (courtesy Dr BEK Klein). Nuclear cataract was defined using the same criteria as in the BDES: levels 1 and 2 as no nuclear cataract, level 3 as early nuclear cataract, and levels 4 and 5 as late nuclear cataract.
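The nuclear cataract definitions amount to a simple lookup from the five-level Wisconsin grade to a severity class. A minimal sketch (the function name and class labels are our own):

```python
def nuclear_cataract_class(level: int) -> str:
    """Map a Wisconsin nuclear grade (1-5) to the BDES severity class used here."""
    if level not in (1, 2, 3, 4, 5):
        raise ValueError("nuclear grade must be an integer from 1 to 5")
    if level <= 2:
        return "none"  # levels 1 and 2: no nuclear cataract
    if level == 3:
        return "early"  # level 3: early nuclear cataract
    return "late"  # levels 4 and 5: late nuclear cataract


print([nuclear_cataract_class(g) for g in range(1, 6)])
```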

Separate retroilluminated lens photographs taken with a Neitz CT-R cataract camera (Neitz Instruments Co, Tokyo, Japan) were used to grade cortical and PSC opacities. The presence and severity of cortical and PSC cataract were graded using a grid composed of a central circle surrounded by 16 wedges (Figure 1). For each type, severity was defined by summing the weighted percentage areas of these segments to give the total lens area involved. Early cortical cataract was defined as involvement of 5–24% of the cortical area and late cortical cataract as ⩾25%; early PSC was defined as <5% of the total area and late PSC as ⩾5%. The distribution of cataract by type and severity is summarised in Table 2.
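The area summation and severity cutoffs can be sketched as follows. This is an illustration under stated assumptions: the per-segment weights (each segment's share of the total lens area) are hypothetical inputs because the actual Wisconsin weights are not given here, and we assume that cortical involvement below 5% counts as no cortical cataract and that any PSC area above zero counts as PSC. Function names are our own.

```python
from typing import Sequence

N_SEGMENTS = 17  # central circle + 16 wedges


def total_lens_area_pct(segment_weights: Sequence[float],
                        segment_opacity_pct: Sequence[float]) -> float:
    """Weighted sum of per-segment opacity percentages.

    segment_weights     -- hypothetical fraction of total lens area in each segment
                           (assumed to sum to 1)
    segment_opacity_pct -- percentage (0-100) of each segment covered by opacity
    """
    if len(segment_weights) != N_SEGMENTS or len(segment_opacity_pct) != N_SEGMENTS:
        raise ValueError("expected 17 segments (central circle + 16 wedges)")
    if abs(sum(segment_weights) - 1.0) > 1e-9:
        raise ValueError("segment weights must sum to 1")
    return sum(w * p for w, p in zip(segment_weights, segment_opacity_pct))


def cortical_class(total_pct: float) -> str:
    if total_pct >= 25:
        return "late"  # >= 25% involvement
    if total_pct >= 5:
        return "early"  # 5-24% involvement
    return "none"  # assumption: below 5% is not counted as cortical cataract


def psc_class(total_pct: float) -> str:
    if total_pct >= 5:
        return "late"  # >= 5% involvement
    if total_pct > 0:
        return "early"  # any PSC below 5%
    return "none"


# Illustration: equal (hypothetical) weights and 10% opacity in every segment.
area = total_lens_area_pct([1 / 17] * 17, [10.0] * 17)
print(round(area, 1), cortical_class(area), psc_class(area))
```

The same total area can therefore fall in different severity classes depending on the cataract type, because the PSC cutoff is much lower than the cortical one.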

Cortical cataract location was defined as axial or as one of four quadrants, as shown in Figure 1. The percentage of total lens area involved was calculated for each location, and the analysis estimated the effect on each visual function test of every 1% of lens area involved by cortical cataract in that location. PSC cataract location and other cataract combinations were not analysed.

Statistical methods

Data were analysed using the Statistical Analysis System (SAS), PC version 8.2 (SAS Institute, Cary, NC, USA). Multiple regression analysis was performed with presenting and best-corrected visual acuity, contrast sensitivity, and glare disability as dependent variables, and with nuclear, PSC, and cortical cataract type or location as explanatory variables, controlling for age and sex. Dummy variables were created to classify cataract severity into early and late classes.
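Dummy coding of severity can be illustrated by building one row of a regression design matrix. In this hypothetical sketch, "none" is the reference category, so each cataract type contributes two 0/1 indicators (early, late), and the fitted coefficient on each indicator would estimate the adjusted difference from eyes without that cataract type. Entering age as a continuous covariate and sex as a single indicator are our assumptions; the exact covariate coding used in the SAS models is not stated.

```python
from typing import List

SEVERITY_LEVELS = ("none", "early", "late")


def severity_dummies(severity: str) -> List[int]:
    """Code one cataract type as two indicators [early, late]; 'none' is the reference."""
    if severity not in SEVERITY_LEVELS:
        raise ValueError(f"unknown severity: {severity!r}")
    return [int(severity == "early"), int(severity == "late")]


def design_row(age: float, male: bool, nuclear: str, cortical: str, psc: str) -> List[float]:
    """One row of a hypothetical design matrix:
    intercept, age, sex, then early/late dummies for nuclear, cortical, and PSC cataract."""
    row: List[float] = [1.0, age, float(male)]
    for severity in (nuclear, cortical, psc):
        row.extend(severity_dummies(severity))
    return row


# A 72-year-old woman with late nuclear and early PSC cataract, no cortical cataract.
print(design_row(72, False, "late", "none", "early"))
```

Using two indicators per type, rather than a single 0/1/2 score, lets early and late cataract have effects of unrelated size, which matters here because the reported late PSC effect is far larger than the early PSC effect.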

We checked the assumptions of linearity, homoskedasticity, and normality required for multiple regression analysis. Apart from minor skewing of the distributions of the dependent variables, examination of residuals reassured us that our multiple regression models were sufficiently robust to give valid results. Interactions between age, sex, and cataract types were also examined.

Results

Analyses were performed on the right eyes of the 3654 participants. A total of 271 participants were excluded because of enucleation, aphakia, pseudophakia, late age-related maculopathy (AMD), or late glaucoma. Participants unable to read any letters on the logMAR chart at 2.4 m (Snellen acuity <6/60) were also excluded, leaving presenting visual acuity recorded in 3334 participants and best-corrected visual acuity in 3336. Contrast sensitivity and glare disability at spatial frequencies of 3, 6, 12, and 18 cycles/degree (cpd) were measured only in participants living in the first postcode area.

Retroilluminated photographs for cortical and PSC cataract grading were available for 3389 participants, with 265 missing or ungradable due to poor pupillary dilatation (⩽4 mm diameter). In addition, only 2308 (63.1%) right-eye slit-lamp photographs were available for nuclear cataract grading because of intermittent malfunction of the Topcon slit-lamp camera.

In summary, this left 2181 participants with all study variables available for the presenting and best-corrected visual acuity regression analyses; 1372 for the contrast sensitivity regression analyses at 3, 6, 12, and 18 cycles/degree; and 891 for the glare disability regression analyses at the same frequencies. A comparison of participants included in and excluded from the analyses, because of either our exclusion criteria or missing information, is shown in Table 3.

Cataract type and visual acuity

A total of 2181 eyes were included in the regression analysis, which estimated mean presenting and best-corrected visual acuity scores by cataract type and severity, adjusted for age and sex. We found that presenting and best-corrected visual acuity loss were associated with age only in participants aged 60 years or older, consistent with the finding of Johnson and Choy28 that age-related vision loss does not occur until after about 55 years. We therefore stratified the analysis by age (less than 60 years, and 60 years or older). Statistically significant reductions in presenting and best-corrected visual acuity with different cataract types and severity were found only in the group aged 60 years or older. These results are shown in Table 4; the corresponding nonsignificant results for participants aged less than 60 years are not shown.

All cataract types and severity grades caused statistically significant reductions in presenting visual acuity. Compared with participants without cataract, logMAR visual acuity scores were reduced by 1.4 and 3.6 letters in persons with early and late nuclear cataract, respectively; by 2.7 and 3.6 letters in persons with early and late cortical cataract, respectively; and by 3.9 and 9.5 letters in persons with early and late PSC cataract, respectively. All cataract types and severity grades were also associated with significant reductions in best-corrected visual acuity, except early nuclear cataract (P=0.08). Compared with participants without cataract, best-corrected acuity scores were estimated to be reduced by 2.5 letters for late nuclear cataract; by 3.3 and 4.4 letters for early and late cortical cataract, respectively; and by 1.9 and 12.0 letters for early and late PSC cataract, respectively.

We found no interactions between nuclear and PSC or cortical cataract types (P>0.05), and conclude that the estimated reduction in acuity from mixed cataract is at least the sum of the reductions caused by each individual cataract type.
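Under the additive model implied by the absence of interactions, the estimated loss for mixed cataract is the sum of the type-specific estimates. The sketch below uses the adjusted presenting visual acuity reductions quoted above (participants aged 60 years or older) and reproduces the worked example of late nuclear plus early PSC cataract (3.6 + 3.9 = 7.5 letters). The dictionary layout and function name are illustrative. Note that the authors describe the sum as a lower bound ("at least"), which this point estimate does not capture.

```python
from typing import Dict

# Adjusted reductions in presenting visual acuity (letters) relative to no cataract,
# participants aged 60 years or older, as reported in the Results text (Table 4).
PRESENTING_VA_REDUCTION = {
    ("nuclear", "early"): 1.4, ("nuclear", "late"): 3.6,
    ("cortical", "early"): 2.7, ("cortical", "late"): 3.6,
    ("psc", "early"): 3.9, ("psc", "late"): 9.5,
}


def additive_reduction(cataracts: Dict[str, str]) -> float:
    """Estimated letter loss for mixed cataract under a no-interaction (additive) model."""
    total = 0.0
    for cataract_type, severity in cataracts.items():
        if severity != "none":
            total += PRESENTING_VA_REDUCTION[(cataract_type, severity)]
    return round(total, 1)


print(additive_reduction({"nuclear": "late", "psc": "early"}))  # 7.5
```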

Cataract type and contrast sensitivity

Because contrast sensitivity was tested only in the subset of the study population living in one postcode area, applying the predetermined exclusion criteria left 1372 participants with all variables available for analysis. Again, age was significantly associated with loss of contrast sensitivity only in participants aged 60 years or older, so the analysis was stratified by age (less than 60 years, and 60 years or older). Presenting visual acuity and sex were also significant confounders for contrast sensitivity at all tested spatial frequencies and were therefore included in the multiple regression model.

PSC cataract caused the greatest reduction in contrast sensitivity at all spatial frequencies. Contrast sensitivity was significantly reduced by all late-grade cataract types across the spatial frequency spectrum, except late nuclear cataract at 3 cycles/degree. Early PSC and early cortical cataract both caused significant reductions in contrast sensitivity at spatial frequencies ⩾6 cycles/degree, whereas early nuclear cataract caused a significant reduction only at 12 cycles/degree. Again, no significant interactions between cataract types were identified for contrast sensitivity. Results for participants aged 60 years or older are summarised in Table 5.

Cataract type and glare disability

Glare disability testing was performed on the same subset of participants as contrast sensitivity testing; however, additional data were missing for glare disability at spatial frequencies of 3 and 18 cycles/degree. As a consequence, only 891 participants had all study variables available for multiple regression modelling. Unlike visual acuity and contrast sensitivity, glare disability showed no cutoff age beyond which it became significantly affected by age. We found no consistent significant effect of cataract type or severity in increasing glare disability, and no interactions between cataract types for glare disability. Results are summarised in Table 6.

Cataract location

The effect of cortical cataract location on visual function tests was analysed after adjusting for age, sex, cortical cataract severity, and the presence and severity of other cataract types. Statistical significance was inconsistent; however, a trend emerged showing that, per 1% of lens area involved by cortical cataract, axial location caused the greatest impairment in visual function. In addition, superotemporally located cataract most consistently and significantly impaired performance on visual function tests, including visual acuity, contrast sensitivity, and glare disability. Results are summarised in Table 7.

Discussion

It is well established that visual acuity is inversely related to cataract severity. The results from this population-based study confirm that presenting and best-corrected acuity were most affected by late-grade cataract, and that PSC cataract has a greater visual effect than either of the other cataract types alone. The findings also suggest that visual acuity in late PSC cataract is least amenable to refractive correction. This is consistent with the clinical observation that persons with PSC cataract present earliest for surgical correction.

Our analyses did not reveal significant interactions between cataract types, concurring with other published data.8, 29 In the absence of interactions, we conclude that the estimated effect of mixed cataract on visual acuity is at least the sum of the independent effects of each cataract type. For example, the reduction in presenting visual acuity with mixed late nuclear and early PSC cataract is estimated to be 7.5 letters (3.6 and 3.9 letters, respectively) after controlling for age, sex, and cortical cataract grade. This is consistent with clinical expectation and with findings that mixed cataract causes greater visual disability than single-type cataract.30 Nevertheless, our failure to find statistically significant interactions may be a consequence of insufficient study power.

This study confirms previous findings that late-grade cataract has the greatest effect in reducing contrast sensitivity across the spatial frequency spectrum.14, 23, 24 Previous studies have not shown a clear association between early cataract type and contrast sensitivity at different spatial frequencies.23 Because contrast sensitivity correlates imperfectly with visual acuity, contrast sensitivity testing can provide information additional to traditional acuity tests; traditional visual acuity is most highly correlated with contrast sensitivity at high spatial frequencies. We found that early cortical and early PSC cataract caused significant attenuation of contrast sensitivity at 6, 12, and 18 cycles/degree, while early nuclear cataract showed significant attenuation only at 12 cycles/degree. The absence of any significant association between the three early grades of cataract and contrast sensitivity at low spatial frequency (3 cycles/degree) reduces the usefulness of contrast sensitivity testing for detecting early cataract. However, the significant reductions at intermediate spatial frequencies imply that contrast sensitivity testing may be used as an adjunct to traditional acuity testing to quantify the level of visual dysfunction in persons with early cataract, strengthening the case for surgical intervention.

The results of our analysis of cataract type on glare disability were equivocal because of insufficient statistical power, a consequence of the substantial number of participants with missing data or excluded by our criteria. In addition, glare disability scores were obtained by calculating the difference between two measurements, which inherently increases variability and reduces the power to detect effects. The only inference that can be drawn from these results is that the parameter estimates imply an increase in glare disability in the presence of cataract, a finding expected from clinical experience and previous publications.5, 8 Importantly, a previous study by Elliott et al3 found that light scatter correlated much more highly with contrast sensitivity than with either logMAR visual acuity or glare disability. Given Elliott's report, the lack of significant findings in our population-based data (albeit with limitations), and the cumbersome nature of glare disability testing, we suggest that in clinical practice glare disability testing may add little to the findings of standard visual acuity and contrast sensitivity tests.
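The variance penalty of a difference score can be made explicit under simple assumptions. Suppose, for illustration only, that the log contrast sensitivity measured without glare (X) and with glare (Y) each have measurement variance σ² and are correlated with coefficient ρ. The glare disability score D = X − Y then has variance:

$$
\operatorname{Var}(D) = \operatorname{Var}(X) + \operatorname{Var}(Y) - 2\,\operatorname{Cov}(X, Y) = 2\sigma^{2}(1 - \rho)
$$

Under these assumptions D is noisier than either single measurement whenever ρ < 0.5, and a larger sample would then be needed to detect an effect of the same size.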

Our analysis of cortical cataract location on visual function also lacked the power to provide statistically significant parameter estimates for each of the defined cortical cataract locations. Interestingly, our results suggested that, per 1% of lens area involved by cortical cataract, axially located cortical cataract had the greatest effect on visual function, reducing visual acuity and contrast sensitivity and increasing glare disability. In addition, despite the well-documented inferonasal predilection of cortical cataract, we found that superotemporally located cortical cataract most consistently caused significant reductions in visual acuity and contrast sensitivity compared with cortical cataract in other locations. Given the inadequate statistical power, it may be premature to presume that this finding is important, and a credible explanation for its effect on visual function is still lacking. We therefore suggest further study of the effect of cortical cataract location on visual function.

One important difference between this study and previously published work is its use of a population-based sample. We are aware that this sampling frame has several limitations. First, the number of cataract cases was, by definition, determined by disease prevalence, which resulted in insufficient power to detect effects because of the small numbers of late-stage, visually disabling cataract. Second, there may be additional selection bias: the population captured may have less visually disabling cataract types or severity because disabling cases have already presented for surgery, compounding the lack of power. The results of this study are further limited by the number of participants with missing photographs because of episodic camera malfunction. A previous report had shown no significant differences between the characteristics of participants with and without photographs.1 In addition, only a subset of the population had contrast sensitivity and glare disability tested.

The clinical implication of this study is that contrast sensitivity testing at intermediate spatial frequencies provides useful information beyond traditional visual acuity testing when objectively measuring the visual impairment caused by early cataract, and may aid decision making in the management of early cataract. We therefore recommend that contrast sensitivity testing at an intermediate spatial frequency be considered as part of the clinical workup, complementing standard visual acuity testing in cases of early cataract.

References

1. Mitchell P, Cumming RG, Attebo K, Panchapakesan J. Prevalence of cataract in Australia: the Blue Mountains Eye Study. Ophthalmology 1997; 104: 581–588.

2. Elliott DB, Bullimore MA, Patla AE, Whitaker D. Effect of a cataract simulation on clinical and real world vision. Br J Ophthalmol 1996; 80: 799–804.

3. Elliott DB, Hurst MA, Weatherill J. Comparing clinical tests of visual loss in cataract patients using a quantification of forward light scatter. Eye 1991; 5: 601–606.

4. White paper on cataract surgery. American Academy of Ophthalmology and American Society of Cataract and Refractive Surgery. Ophthalmology 1996; 103: 1152–1156.

5. Adamsons I, Rubin GS, Vitale S, Taylor HR, Stark WJ. The effect of early cataracts on glare and contrast sensitivity. A pilot study. Arch Ophthalmol 1992; 110: 1081–1086.

6. Obstbaum SA. Indications and timing of cataract surgery. J Cataract Refract Surg 1995; 21: 483.

7. Steinberg EP, Bass EB, Luthra R, Schein OD, Sharkey P, Javitt J et al. Variation in ophthalmic testing before cataract surgery. Results of a national survey of ophthalmologists. Arch Ophthalmol 1994; 112: 896–902.

8. Lasa MS, Podgor MJ, Datiles MB, Caruso RC, Magno BV. Glare sensitivity in early cataracts. Br J Ophthalmol 1993; 77: 489–491.

9. Hess R, Woo G. Vision through cataracts. Invest Ophthalmol Vis Sci 1978; 17: 428–435.

10. Paulsson LE, Sjostrand J. Contrast sensitivity in the presence of a glare light. Theoretical concepts and preliminary clinical studies. Invest Ophthalmol Vis Sci 1980; 19: 401–406.

11. Skalka HW. Arden grating test in evaluating "early" posterior subcapsular cataracts. South Med J 1981; 74: 1368–1370.

12. Abrahamsson M, Sjostrand J. Impairment of contrast sensitivity function (CSF) as a measure of disability glare. Invest Ophthalmol Vis Sci 1986; 27: 1131–1136.

13. Hard AL, Abrahamsson M, Sjostrand J. A new glare test based on low contrast letters—evaluation in cataract patients. Acta Ophthalmol 1990; 68: 145–150.

14. Lasa MS, Datiles MB 3rd, Podgor MJ, Magno BV. Contrast and glare sensitivity. Association with the type and severity of the cataract. Ophthalmology 1992; 99: 1045–1049.

15. Rochtchina E, Mitchell P, Coroneo M, Wang JJ, Cumming RG. Lower nasal distribution of cortical cataract: the Blue Mountains Eye Study. Clin Exp Ophthalmol 2001; 29: 111–115.

16. Klein BE, Klein R, Linton KL. Prevalence of age-related lens opacities in a population. The Beaver Dam Eye Study. Ophthalmology 1992; 99: 546–552.

17. Schein OD, West S, Munoz B, Vitale S, Maguire M, Taylor HR et al. Cortical lenticular opacification: distribution and location in a longitudinal study. Invest Ophthalmol Vis Sci 1994; 35: 363–366.

18. Brown NA. The morphology of cataract and visual performance. Eye 1993; 7: 63–67.

19. Drews-Bankiewicz MA, Caruso RC, Datiles MB, Kaiser-Kupfer MI. Contrast sensitivity in patients with nuclear cataracts. Arch Ophthalmol 1992; 110: 953–959.

20. Elliott DB, Gilchrist J, Whitaker D. Contrast sensitivity and glare sensitivity changes with three types of cataract morphology: are these techniques necessary in a clinical evaluation of cataract? Ophthal Physiol Opt 1989; 9: 25–30.

21. Ariyasu RG, Lee PP, Linton KP, LaBree LD, Azen SP, Siu AL. Sensitivity, specificity, and predictive values of screening tests for eye conditions in a clinic-based population. Ophthalmology 1996; 103: 1751–1760.

22. Adamsons IA, Vitale S, Stark WJ, Rubin GS. The association of postoperative subjective visual function with acuity, glare, and contrast sensitivity in patients with early cataract. Arch Ophthalmol 1996; 114: 529–536.

23. Ivers RQ, Optom B, Macaskill P, Cumming RG, Mitchell P. Sensitivity and specificity of tests to detect eye disease in an older population. Ophthalmology 2001; 108: 968–975.

24. Elliott DB, Situ P. Visual acuity versus letter contrast sensitivity in early cataract. Vision Res 1998; 38: 2047–2052.

25. Miyajima H, Katsumi O, Wang GJ. Contrast visual acuities in cataract patients. I. Comparison with normal subjects. Acta Ophthalmol (Copenh) 1992; 70: 44–52.

26. Rouhiainen P, Rouhiainen H, Salonen JT. Contrast sensitivity in different types of early lens opacities. Acta Ophthalmol Scand 1996; 74: 379–383.

27. Panchapakesan J, Cumming RG, Mitchell P. Reproducibility of the Wisconsin cataract grading system in the Blue Mountains Eye Study. Ophthalmic Epidemiol 1997; 4: 119–126.

28. Johnson M, Choy D. On the definition of age-related norms for visual function testing. Appl Opt 1987; 26: 1449–1454.

29. Lasa MS, Datiles MB 3rd, Podgor MJ, Magno BV. Contrast and glare sensitivity. Association with the type and severity of the cataract. Ophthalmology 1992; 99: 1045–1049.

30. Ivers RQ, Mitchell P, Cumming RG. Visual function tests, eye disease and symptoms of visual disability: a population-based assessment. Clin Exp Ophthalmol 2000; 28: 41–47.


Cite this article

Chua, B., Mitchell, P. & Cumming, R. Effects of cataract type and location on visual function: The Blue Mountains Eye Study. Eye 18, 765–772 (2004). https://doi.org/10.1038/sj.eye.6701366
