Abstract

Active cocaine abusers have a diminished neural response to errors, particularly in the anterior cingulate cortex, which is thought to be critical to error processing. The inability to detect errors, or to adjust performance following them, has been linked to clinical symptoms including the loss of insight and perseverative behavior. We investigated the cognitive implications of this diminished error-related activity, using response inhibition tasks that required error awareness and performance adaptation. Twenty-one active cocaine users (six female subjects, mean age=40.3) and 22 non-drug using adults (six female subjects, mean age=39.9) participated. The results indicated that cocaine users consistently demonstrated poorer inhibitory control, a deficit accompanied by reduced awareness of errors. Adaptation of post-error reaction times did not differ between groups, although a different measure of adaptive behavior, exerting inhibitory control on the trial immediately after failing to inhibit, was significantly poorer in the cocaine using sample. In summary, cocaine users demonstrated a diminished capacity for monitoring their behavior, but were able to perform post-error adjustment to processes not already suffering an underlying deficit. These difficulties are consistent with previous reports of cocaine-related hypoactivity in the neural system underlying cognitive control, and highlight the potential for cognitive dysfunction to manifest as behavioral deficits that likely contribute to the maintenance of drug dependence.

INTRODUCTION

A critical aspect of executive control is the ability to detect and correct errors in our ongoing cognitive performance. The processing of errors serves an adaptive function, signalling to an individual that task performance was either incorrect or insufficient, and that the intervention of other attention or control processes would potentially be advantageous. Although the study of error detection, and performance monitoring more generally, is of interest because of relevance to a broad range of other processes in cognition, it has to an extent been driven by neurophysiological and behavioral evidence of error processing dysfunction in a range of clinical conditions.

A repeated finding has been the identification of discordant neural activity in the anterior cingulate cortex (ACC) during cognitive errors. Persons with schizophrenia, ADHD, Alzheimer's disease, and various disorders of drug addiction (eg, cocaine, heroin, and alcohol) show diminished ACC responses to errors (Bates et al, 2002; Forman et al, 2004; Kaufman et al, 2003; Mathalon et al, 2003; Ridderinkhof et al, 2002), whereas obsessive-compulsive disorder patients have elevated responses in this region (Gehring et al, 2000). Current evidence suggests that the neural response to errors involves a network of regions (Ridderinkhof et al, 2004), which consistently involves the ACC (Gehring and Fencsik, 2001). There remains much debate in the literature about the role these cortical regions play in error processing (for a review see Ridderinkhof et al, 2004), in particular whether their responsiveness is error specific, or whether they respond to certain properties of the stimuli used in cognitive paradigms, such as the amount of response conflict engendered by the stimulus (owing to competition between potential responses) (Carter et al, 1998).

One mechanism by which the neural response to errors has been hypothesized to contribute to ongoing cognitive control is by engaging the top–down control of attention (MacDonald et al, 2000; Ullsperger and von Cramon, 2001). Three recent studies have provided brain–behavior relationships to support such a hypothesis (Garavan et al, 2002; Gehring et al, 1993; Kerns et al, 2004), indicating that strategic processes following an error, namely adapting response speeds on the trials immediately following an error, correlated with greater activity in the ACC region during the error trial. Implementation of the increased cognitive control was related to greater dorsolateral prefrontal cortex (dPFC) activity. Typically, these results have been explained using the conflict monitoring hypothesis, which suggests that ACC activation, irrespective of success or failure, is related to the extent of response conflict: conditions in which multiple responses compete for the control of action.

The aim of the present study was to examine the cognitive implications of diminished error-related activity in cocaine users. Previous studies have indicated that cocaine users have a hypoactive error-related neural response (Kaufman et al, 2003; Kubler et al, 2005), particularly in the ACC region. It remains unclear how this hypoactivity might contribute to the more general cognitive control problem identified in this population (Bolla et al, 2004; Di Sclafani et al, 2002; Fillmore and Rush, 2002; Goldstein et al, 2001), which suggests a broader dysfunction in fronto-parietal top–down control networks. Two error-related processes were chosen to examine the potential influence of a hypoactive error-related neural response: error awareness and post-error cognitive control. Previous research suggests that the ACC is active during errors irrespective of awareness, whereas activity in prefrontal and parietal regions appears to differentiate awareness of an error (Hester et al, 2005; Nieuwenhuis et al, 2001). Post-error cognitive control, which has typically been studied by examining post-error slowing during cognitive control tasks (eg, Stroop task, Go/NoGo task, and Flanker task), has been characterized as a reciprocal relationship between anterior cingulate and dorsolateral prefrontal cortices, where ACC detects the requirement for, and the dPFC implements, greater cognitive control.

Given the previous findings of error-related ACC hypoactivity in cocaine users, and the evidence from control subjects that the level of ACC activity is positively related to post-error increases in cognitive control, we predicted that our cocaine user sample would have greater difficulty modulating cognitive control. Our predictions for error awareness in cocaine users were less straightforward. The evidence from past studies suggests that while the ACC is active during both aware and unaware errors, its level of activity does not predict awareness of an error. Awareness appears to be related more to fronto-parietal activation, regions which have also been shown to be hypoactive in cocaine users (Hester and Garavan, 2004), although specific examinations of users' error-related processing have not indicated fronto-parietal hypoactivity (Kaufman et al, 2003). We therefore predicted that conscious awareness of errors would not be significantly different in cocaine users when compared to matched healthy controls.

MATERIALS AND METHODS

Subjects

Twenty-two non-drug using (six female subjects, mean age=39.9, range=26–51) and 21 active cocaine using participants (six female subjects, mean age=40.3, range=22–48) were included in the current study. Educational attainment for the two groups was not significantly different (controls: 12.7 years, users: 11.3, F(1,41)=3.80, p>0.05). The numerical difference may be the result of abrogated schooling that often accompanies drug use, and which also results in underestimation of intellect (Chatterji, 2006). Participants were fully informed of the nature of the research and provided written consent for their involvement in accordance with the Institutional Review Board of the Medical College of Wisconsin (MCW). Participants were recruited via the General Clinical Research Center (GCRC) at MCW, where staff psychiatrists screened individuals who responded to advertisements seeking active users of cocaine to volunteer for a range of different studies conducted at MCW examining the effects of cocaine use. The Structured Clinical Interview for DSM-IV was conducted to ensure that all participants were right-handed and had no current or past history of neurological or psychiatric disorders, dependence on any psychoactive substance other than cocaine (for user participants only), or nicotine. Urine samples were obtained from all participants at least 1 h before testing, with all non-drug participants returning negative tests for all 96 CNS-reactive drug substances tested for, and active cocaine users returning positive tests for cocaine or its metabolites, indicating that they had used cocaine within the past 72 h. Self-report from participants indicated that the time since last use was 45 h (range 12–60 h). User participants who were positive for any drug (on the urine screen) other than cocaine, nicotine, or marijuana were excluded. 
Thirteen of the cocaine user sample reported occasional use of cannabis, with 17 days being the average duration since last use and none had consumed in the 24 h before cognitive testing. The acute effects of cannabis intoxication on the cognitive performance of occasional users are short lived, peaking at 2 h post-consumption, and lasting up to 8 h, but are not present after 24 h (Curran et al, 2002; Fant et al, 1998), and ‘light’ (once per week) use of cannabis has not been associated with decrements in cognitive test performance (Pope et al, 2001). Twenty-two participants (17 cocaine users and five control) reported regular use of tobacco (M=11.1 cigarettes per day), and all participants from both groups reported regular use of alcohol. Participants were excluded for present or past dependence on alcohol, and recorded zero blood alcohol levels before testing.

Behavioral Tasks

EAT

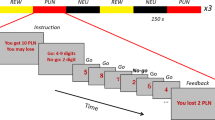

To examine conscious recognition of errors we administered the Error Awareness Task (EAT) (see Figure 1) (Hester et al, 2005), a motor Go/NoGo response inhibition task in which subjects make errors of commission of which they are either aware (aware errors) or unaware (unaware errors). The EAT presents a serial stream of single color words in congruent fonts, with the word presented for 900 ms followed by a 600 ms inter-stimulus interval. Subjects were trained to respond to each of the words with a single ‘Go trial’ button press, and withhold this response when either of two different circumstances arose. The first was if the same word was presented on two consecutive trials (Repeat NoGo), and the second was if the word and font of the word did not match (Stroop NoGo). By having competing types of response inhibition rules we aimed to vary the strength of stimulus–response relationships, whereby representations of rules competitively suppress one another such that the more prepotent rule would suppress the weaker rule and so produce a significant number of errors, a small proportion of which may go unnoticed owing to focussing primarily on the prepotent rule. In particular, we aimed to capitalize on the overlearned human behavior of reading the word, rather than the color of the letters (the Stroop effect), and so predispose subjects to monitor for the Repeat, rather than the Stroop, NoGos. To indicate ‘error awareness’ subjects were trained to press the Go trial button twice on the trial following any commission errors. Four blocks of 225 trials were administered to subjects, distributing 50 Repeat NoGo events and 50 Stroop NoGo events pseudo-randomly throughout the serial presentation of 800 Go trials, for the purpose of mixing frequent responses and infrequent response inhibitions to maintain response prepotency.

Figure 1. The Error Awareness Task (EAT).
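The two NoGo rules of the EAT can be illustrated with a short sketch. This is not the authors' presentation code: the function name `classify_eat_trials`, the `(word, font_color)` representation, and the choice to give the Stroop rule precedence when both rules apply are assumptions made purely for illustration. The final lines check the block arithmetic reported for the task (four blocks of 225 trials).

```python
from typing import List, Tuple

def classify_eat_trials(trials: List[Tuple[str, str]]) -> List[str]:
    """Label each (word, font_color) trial as 'go', 'repeat_nogo' or 'stroop_nogo'.

    Assumption: if a trial is both incongruent and a repeat, it is labeled
    'stroop_nogo'. The paper does not state how such trials were handled.
    """
    labels = []
    previous_word = None
    for word, font_color in trials:
        if word != font_color:
            labels.append("stroop_nogo")   # word and font color do not match
        elif word == previous_word:
            labels.append("repeat_nogo")   # same word on two consecutive trials
        else:
            labels.append("go")            # congruent, non-repeated word
        previous_word = word
    return labels

# Hypothetical five-trial stream
stream = [("red", "red"), ("blue", "blue"), ("blue", "blue"),
          ("green", "red"), ("red", "red")]
eat_labels = classify_eat_trials(stream)

# Trial counts reported in the text
total_trials = 4 * 225
go_trials, repeat_nogo, stroop_nogo = 800, 50, 50
counts_consistent = total_trials == go_trials + repeat_nogo + stroop_nogo
```

In this toy stream the third trial is a Repeat NoGo and the fourth a Stroop NoGo; all other trials require a Go response.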

BAT

Subjects completed a second motor inhibition Go-NoGo task, the Behavior Adaptation Task (BAT) (see Figure 2), in which the letters X and Y were presented serially in an alternating pattern at 1 Hz. Subjects were asked to make a button response for each letter in the sequence but to withhold their responses when the alternation order was interrupted. Stimulus duration was 800 ms followed by a 200-ms fixation point. Stimuli were presented in two different conditions, Single Lure (SL) and Double Lure (DL). In the SL condition the 80 NoGo stimuli (lures) were always followed by a Go stimulus. In the DL condition there were 44 single lures and 18 double lures, with the double lures requiring two successive response inhibitions (eg, the seventh and eighth stimuli in the sequence shown in Figure 2). The aim of this design was to examine post-error behavior for single lures in both the SL and the DL conditions. In the DL condition only, NoGo lures were occasionally followed by another NoGo lure, which we reasoned should induce post-error (and post-lure) behavioral changes such as response slowing; the advantage of such post-error slowing is reduced in the SL condition, in which a NoGo lure was always followed by a Go trial.

Figure 2. The Behavior Adaptation Task (BAT).

The experiment was conducted in six different blocks of 314 trials each, three blocks of the SL condition and three of the DL condition. Each block had a duration of 5 min and 30 s. The order of the conditions was randomized across subjects.
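A minimal sketch of how lures in the BAT letter stream might be identified is given below. It assumes that a lure is any letter that repeats the immediately preceding letter (ie, an interruption of the X/Y alternation) and that a double lure is a run of two such repeats; the function names and the example sequence are hypothetical and are not taken from the task software.

```python
from typing import List, Tuple

def label_bat_trials(letters: str) -> List[str]:
    """Label each letter 'go' or 'lure'; a lure repeats the preceding letter (assumption)."""
    labels = []
    for i, letter in enumerate(letters):
        if i > 0 and letter == letters[i - 1]:
            labels.append("lure")   # alternation interrupted: withhold response
        else:
            labels.append("go")     # alternation intact: respond
    return labels

def count_lures(labels: List[str]) -> Tuple[int, int]:
    """Return (single_lures, double_lures) by counting runs of consecutive lures."""
    singles = doubles = 0
    run = 0
    for label in labels + ["go"]:   # trailing 'go' closes any final run
        if label == "lure":
            run += 1
        else:
            if run == 1:
                singles += 1
            elif run >= 2:
                doubles += 1
            run = 0
    return singles, doubles

# Hypothetical sequence in which the seventh and eighth stimuli form a double lure
bat_labels = label_bat_trials("XYXYXYYYX")
single_count, double_count = count_lures(bat_labels)
```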

RESULTS

EAT

Performance indices for both control and user subjects are presented in Table 1. The scores for a number of measures were not normally distributed, and non-parametric statistical tests have been used where appropriate. Control subjects' inhibitory control, as measured by NoGo accuracy, was better than that of cocaine users, but this difference was not significant, F(1,42)=2.0, p=0.164. Comparing Stroop and Repeat NoGo performance separately showed significant group differences for the latter, Mann–Whitney U (z=−2.38, p=0.017), but not the former, F(1,42)=0.314, p=0.579. Although Stroop NoGo errors were more common than Repeat NoGo errors when comparing across both groups with a repeated measures t-test, t(42)=9.25, p<0.0001, group differences in the awareness of errors were evident for Repeat NoGo errors, U(z=−2.31, p=0.021), but not Stroop errors, F(1,42)=0.809, p=0.374. Control participants were aware of over 85% of Repeat NoGo errors in comparison to 71% for cocaine users (see Figure 3).

Control participants responded significantly faster to Go trials than cocaine users, F(1,42)=5.88, p=0.020, although the faster reaction times of controls during NoGo errors were not significant, F(1,42)=2.96, p=0.092. Go RTs were significantly faster than error RTs for both groups; however, this comparison was confounded by the response pattern of many participants, who, after making the initial erroneous button press response during the NoGo trial, responded again during this trial. Owing to technical limitations we were not able to obtain separate reaction times for each response, and while removal of the double responses does not alter the result, it remains unclear how such responses might have influenced error RTs.

A measure of post-Aware-error slowing was calculated by subtracting the Go trial response time that immediately preceded an error from the second Go trial response time that followed an error. The second trial after an error was used because of the technical difficulty with calculating reaction times from double responses. Mean scores for post-Aware-error slowing indicated no significant change in RTs following an aware error for controls; however, cocaine users showed significant slowing (33 ms, t(20)=−2.23, p=0.037). We have previously observed faster RT following errors with similar tasks, which we hypothesized was an adaptation to the task stimulus presentation ratio as subjects learn that NoGo events are widely spaced. Further support for this hypothesis includes significantly faster RT following STOPS for both groups in the current study (Post-STOP trial RT minus Pre-STOP trial: cocaine users=−73 ms, t(20)=7.95, p<0.001; control=−91 ms, t(21)=8.13, p<0.001). In contrast, both groups showed a trend towards slowing after unaware errors (post-unaware-error Go trial RT minus pre-unaware-error Go trial: cocaine users=19 ms, t(20)=−1.1, p=0.284; control=22 ms, t(21)=−2.04, p=0.05), a result observed previously with this task (Hester et al, 2005). Neither the post-STOP nor post-unaware-error effects differed between users and controls.
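The post-error difference score described above can be sketched as follows. The `Trial` record, the function name `post_error_slowing`, and the example data are hypothetical; the sketch also assumes that a commission error is any NoGo trial with a recorded response, and it does not distinguish aware from unaware errors. Positive values indicate slowing.

```python
from statistics import mean
from typing import List, NamedTuple, Optional

class Trial(NamedTuple):
    kind: str             # "go" or "nogo"
    rt: Optional[float]   # reaction time in ms; None if no response was made

def post_error_slowing(trials: List[Trial], lag: int = 2) -> Optional[float]:
    """Mean of (RT on the lag-th trial after an error) minus (RT on the trial before it).

    lag=2 mirrors the text: the second trial after the error is used because the
    first post-error trial carries the double 'awareness' response.
    """
    diffs = []
    for i, trial in enumerate(trials):
        is_commission_error = trial.kind == "nogo" and trial.rt is not None
        if not is_commission_error:
            continue
        if i - 1 < 0 or i + lag >= len(trials):
            continue                      # no usable neighbor trials
        before, after = trials[i - 1], trials[i + lag]
        if (before.kind == "go" and after.kind == "go"
                and before.rt is not None and after.rt is not None):
            diffs.append(after.rt - before.rt)
    return mean(diffs) if diffs else None

# Hypothetical trial stream: one commission error (index 1) and one successful STOP (index 5)
example = [Trial("go", 400.0), Trial("nogo", 350.0), Trial("go", 380.0),
           Trial("go", 440.0), Trial("go", 410.0), Trial("nogo", None),
           Trial("go", 390.0), Trial("go", 395.0)]
slowing_ms = post_error_slowing(example)
```

Here the single error is flanked by a 400 ms pre-error Go trial and a 440 ms second post-error Go trial, so the score is 40 ms of slowing.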

In summary, cocaine users displayed poorer inhibitory control (more commission errors for Repeat NoGos) and poorer error awareness (poorer awareness of Repeat NoGo errors). However, response speed slowing following errors (aware or unaware) or STOPS did not appear to be compromised (indeed, users showed significantly greater post-error slowing following aware errors relative to controls).

BAT

Performance indices for both control and user subjects are presented in Table 2. Given the significant correlation between NoGo performance for single lures in SL and DL conditions and first lure of a double lure in the DL condition (ranging between r=0.66 and 0.82), an average BAT inhibition score was created. Control subjects' BAT inhibition performance was better than that of cocaine users, F(1,42)=4.31, p=0.04. No group difference was identified for the second lure of a double lure, U(z=−0.71, p=0.43). A ‘post-error task adaptation’ coefficient was derived for double lures, specifically examining those occasions where participants failed to inhibit their response to the first lure, by calculating the proportion of second lures on which participants successfully inhibited or ‘adapted’ their performance. The results indicated that control participants adapted their performance following an error on 78% of occasions, which was significantly more than cocaine users, who adapted post-error performance on only 66% of trials, U(z=−2.096, p=0.036).
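The ‘post-error task adaptation’ coefficient lends itself to a short worked example. The sketch below assumes each double lure is coded as a pair of booleans (whether the first and second lures were successfully inhibited); the function name and the data are hypothetical.

```python
from typing import List, Optional, Tuple

def adaptation_coefficient(double_lures: List[Tuple[bool, bool]]) -> Optional[float]:
    """Proportion of second lures inhibited, restricted to double lures whose
    first lure produced a commission error (first_inhibited is False)."""
    second_after_error = [second for first, second in double_lures if not first]
    if not second_after_error:
        return None   # no first-lure errors, so the coefficient is undefined
    return sum(second_after_error) / len(second_after_error)

# Hypothetical participant: (first_inhibited, second_inhibited) for five double lures
lures = [(False, True), (False, True), (False, False), (True, True), (False, True)]
coefficient = adaptation_coefficient(lures)
```

In this example four first lures were errors, and three of them were followed by a successful inhibition, giving a coefficient of 0.75. Double lures whose first lure was correctly inhibited do not enter the calculation.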

Two separate 2 condition (single, double lure) × 2 group (controls, cocaine users) repeated measures ANOVAs examined Go RT and Error of Commission (EOC) RT. Go RT did not significantly differ across groups, F(1,41)=0.029, p>0.05, or conditions, F(1,41)=0.571, p>0.05, and the interaction between condition and group was also not significant, F(1,41)=1.01, p>0.05. The same pattern of nonsignificant results was observed for EOC RT.

Two RT difference scores, post-EOC RT and Post-Stop RT, were also calculated. Post-EOC RT was calculated by subtracting the RT for the trial following an EOC from the RT for the trial immediately before an EOC. Only single lures in the SL and DL conditions were used to calculate this difference score. To test whether post-error slowing occurred, one-sample t-tests against zero for each group, during each condition, were conducted. The results indicated that both controls: SL: 60 ms, t(20)=−4.37, p<0.01; DL: 97 ms, t(20)=−7.97, p<0.01, and cocaine users: SL: 56 ms, t(21)=−5.62, p<0.01; DL: 86 ms, t(21)=−5.57, p<0.01, demonstrated significant post-error slowing. A repeated measures ANOVA indicated that post-error slowing significantly differed across conditions, F(1,41)=12.13, p<0.01, with slowing in the DL condition significantly greater than for the SL condition, but did not show a significant effect of group (p>0.05), or interaction, F(1,41)=0.622, p>0.05.
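For readers unfamiliar with the test used here, a one-sample t-test against zero can be computed as in the sketch below. The per-participant difference scores are invented for illustration and are not data from this study; only the standard formula t = (mean − μ) / (SD / √n), with n − 1 degrees of freedom, is assumed. The sign of t depends on the direction in which the difference score is calculated.

```python
from math import sqrt
from statistics import mean, stdev
from typing import List, Tuple

def one_sample_t(values: List[float], mu: float = 0.0) -> Tuple[float, int]:
    """Return (t statistic, degrees of freedom) for a one-sample t-test against mu."""
    n = len(values)
    standard_error = stdev(values) / sqrt(n)   # stdev uses the n - 1 denominator
    t = (mean(values) - mu) / standard_error
    return t, n - 1

# Hypothetical post-error difference scores (ms) for five participants
scores = [50.0, 70.0, 60.0, 80.0, 40.0]
t_stat, df = one_sample_t(scores)
```

With these invented scores the mean is 60 ms and t is approximately 8.49 on 4 degrees of freedom; a group of 22 participants would yield 21 degrees of freedom.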

To examine post-STOP behavior, one-sample t-tests against zero for each group, during each condition, were conducted. The results indicated that during the SL condition both controls: 71 ms, t(21)=4.52, p<0.01; and cocaine users: 48 ms, t(20)=2.86, p<0.01; demonstrated significant post-STOP decreases in RT. The DL condition showed a different pattern, with cocaine users' post-STOP RT significantly slowing: 39 ms, t(21)=−2.19, p<0.05, and control participants not significantly different from zero: −2 ms, t(20)=−0.14, p>0.05. A repeated measures ANOVA indicated that post-STOP behavior significantly differed across conditions, F(1,41)=55.21, p<0.01, with slowing in the DL condition and speeding up for the single lure condition, but did not show a significant effect of group, F(1,41)=2.42, p>0.05, or interaction, F(1,41)=0.48, p>0.05.

In summary, no group differences in any of the RT measures were observed. Moreover, there were no group differences in response time adaptation (ie, post-error or post-STOP slowing). However, both an inhibitory control score and a performance adaptation score (the likelihood of making two successive commission errors on the double lures) were poorer in users than in controls. Although this last finding might suggest impaired performance adaptation in users, the number of double lure errors may not be a pure measure of adaptation, as successful performance may also depend on the ability to withhold a prepotent response (a process demonstrated above to be impaired for repeat trials in users). Double condition ‘adaptation’ was not significantly related to either measure of inhibition accuracy from the EAT (r=0.13–0.27), or single condition accuracy (r=0.27, p=0.08), but was related to double condition single lure accuracy (r=0.41, p<0.01), and double lure 1 accuracy (r=0.36, p=0.01). No relationship was observed between double condition adaptation and the awareness measures from the EAT (r=0.12–0.14). Similarly, no relationship was observed with single condition post-error slowing (r=0.09); however, a significant correlation was seen between the magnitude of post-error slowing in the double lure condition and double condition adaptation (r=0.34, p=0.02). On the whole, this pattern of correlations demonstrates that this performance adaptation measure very likely reflects inhibitory abilities in addition to behavioral adaptation abilities and that the poorer scores of users are not necessarily indicative of compromised post-error adaptation.

Relationship between Cocaine Use Behavior and Cognitive Task Performance

The EAT and BAT provide numerous measures of cognitive control, including response inhibition (percentage of successful inhibitions for Stroop and Repeat NoGos from EAT and the composite NoGo score from BAT), error awareness (summing Stroop and Repeat error awareness scores in the EAT), post-error slowing (post-unaware errors in the EAT and single lure trials in the DL condition) and inhibition adaptation (DL condition). NoGo accuracy and error awareness indices from the EAT task generally showed negative correlations with use behavior but these effects were not significant. Significant relationships were identified between use behavior and BAT performance, with NoGo accuracy (r=−0.39) and post-error adaptation (r=−0.49) significantly correlated with years of cocaine use. Weekly spending on cocaine also negatively correlated with post-error adaptation (r=−0.38, p=0.08), though this correlation did not reach the significance threshold. Post-error slowing did not significantly relate to any of the self-report measures of cocaine use.

DISCUSSION

The results of the present study suggest that while cocaine users show a range of deficits in comparison to matched controls, a number of dissociations exist between deficits and retained cognitive performance. Cocaine users consistently demonstrated poorer inhibitory control, a deficit that was accompanied by reduced awareness of their errors when compared to matched controls. Although we had predicted post-error adaptation deficits on the basis of hypoactive ACC activity in the cocaine using population, we could find no evidence of deficits in post-error reaction times, a behavior that has previously been shown to correlate with error-related ACC activity. Interestingly, a different measurement of post-error adaptation behavior: exerting inhibitory control on the trial immediately after failing to inhibit, was significantly poorer in the cocaine using sample, suggesting a dissociation between these forms of behavior.

Inhibitory Control

Results for the EAT and BAT measures of inhibitory control demonstrated poorer performance in cocaine users; with the exception of Stroop NoGo performance in the EAT, cocaine user participants' performance was worse than that of control participants on all indices of inhibitory control.

The general pattern of poorer inhibitory control in cocaine users is consistent with the extant literature (Bolla et al, 1999; Fillmore and Rush, 2002; Hester and Garavan, 2004), although intact performance has also been reported (Bolla et al, 2004; Goldstein et al, 2001; Hoff et al, 1996). It is possible that some of the cause of inconsistency lies with sampling power, primarily the number of observations, as both current tasks indicate a significant cocaine-related deficit when inhibitory performance is averaged across within-task conditions.

Previous findings of inhibitory deficits in cocaine users have been associated with significantly faster response speeds (Fillmore and Rush, 2002; Kaufman et al, 2003), a relationship that has also been observed in control samples (Bellgrove et al, 2004). The faster response speeds are thought to reflect diminished attention to the task, or an impulsive response style, both of which would be consistent with the deficits observed in cocaine users. Interestingly however, results from both of the current tasks indicate that inhibitory control deficits persisted in the absence of response speed differences.

Error Awareness

Cocaine users explicitly recognized fewer of their inhibitory errors than control participants when performing the EAT. Again, the pattern of performance indicated differences between experimental conditions, with awareness of Repeat errors, but not Stroop lure errors, showing significant group differences. The interaction between group and condition appeared to be driven by poorer awareness of cocaine users on Repeat errors, but not Stroop errors. Repeat lures require sustained attention to the processing of trial sequence, whereas Stroop lures require phasic detection of the incongruency between word and font color. The pattern of results observed here may indicate that performance monitoring of cocaine users is particularly poor for tasks that require sustained attention, or that place demands on the updating process typically associated with working memory (Jansma et al, 2000). We have previously shown that cocaine users find inhibitory control under increased working memory demands particularly difficult (Hester and Garavan, 2004), partly due to the inability to modulate ACC activity in response to these demands. Although the working memory demands of the EAT task are minimal, certainly in comparison to n-back tasks that have also demonstrated cognitive impairment in cocaine users (Verdejo-Garcia et al, 2006), they may have been sufficient to interfere with the process of explicit error awareness. For example, even minimal increases in working memory demands have been shown to deleteriously influence other executive processes such as selective attention (de Fockert et al, 2001), and inhibitory control (Bunge et al, 2001), potentially owing to placing demands on shared neural resources (Klingberg, 1998).

Although this relationship requires further study, links between working memory and error awareness are of particular pertinence to drug abuse. Research suggests that cue-related cocaine craving involves the activation of a network of cortical regions involved in the engagement of attention, and the subsequent ruminations also involve the fronto-parietal network seen in WM rehearsal (Childress et al, 1999; Garavan et al, 2000; Grant et al, 1996; Kilts et al, 2001; Maas et al, 1998). Although speculative, a link between WM demands, cocaine craving and poor error awareness may help explain why cocaine users' self-monitoring, or insight into their own behavior, is particularly poor during craving for the drug (Miller and Gold, 1994). The reduced awareness of errors by cocaine users has not previously been reported, though animal research has alluded to this type of deficit with tasks examining post-error responses to cognitive task performance (Gendle et al, 2003, 2004; Morgan et al, 2002).

Post-Error Adaptation

Contrary to expectation, cocaine users showed intact post-error slowing of response times in both the single and double lure conditions of the BAT. The magnitude and pattern of post-error slowing did not differ significantly between the groups on any measure derived from the BAT. Post-error slowing is a common phenomenon typically seen in experimental paradigms that require fast responses from a multiple-choice set of alternatives. However, RT slowing does not necessarily confer a direct benefit to post-error performance, particularly for tasks that emphasize speed over accuracy. For this reason, the benefit to performance of post-error slowing was specifically manipulated within the BAT, by introducing a double lure condition where 50% of NoGo lure trials were immediately followed by a second consecutive NoGo lure. This manipulation proved successful, with both groups showing significantly greater post-error slowing for the double lure condition when compared to the single lure condition.

In contrast to the post-error slowing result, cocaine users did show significantly less post-error adaptation of inhibitory control performance. During the double lure condition, a significantly smaller proportion of first lure errors were followed by successful inhibition on the subsequent second lure of double lures. This result was surprising given that cocaine users showed significant levels of post-error slowing, particularly during the double lure condition, which suggested an ability to detect errors and modify behavior accordingly. The intercorrelations between measures on the BAT and EAT indicated that our derived measure of post-error adaptation was related to both inhibitory control performance and error awareness, but showed no relationship to the post-error slowing measures. Consequently, the dissociation between deficient post-error adaptation of inhibitory control and intact post-error slowing may have resulted from cocaine users' impairment in inhibitory control, whereby the magnitude of slowing following errors (which was equivalent to that of control participants) was not sufficient to overcome their significantly poorer inhibitory control.

We had predicted, on the basis of previous studies demonstrating a relationship between diminished error-related ACC responses and decreased post-error slowing (de Bruijn et al, 2004; Kerns et al, 2005; Ridderinkhof et al, 2002), that cocaine users, who have previously shown this diminished error-related ACC response (Kaufman et al, 2003), would have impaired post-error slowing. Given the intact post-error slowing of the current cocaine-using sample, we must consider whether any significant methodological or sample differences between the current study and those that informed the hypothesis might account for the unexpected finding. One obvious explanation is that the current sample of cocaine users may not have a significantly diminished ACC response to errors. In the absence of neuroimaging results we cannot rule out this explanation. However, given the similarities between the current sample and that of Kaufman et al (2003) in terms of both lifetime and recent cocaine use behavior, as well as demographic characteristics, there appears to be no direct evidence to support it. In addition, ACC hypoactivity appears to be a general feature of drug abusers, with findings in opiate (Forman et al, 2004), cannabis (Gruber and Yurgelun-Todd, 2005), and methamphetamine (London et al, 2005) samples. Furthermore, this sample does show impairment in other ACC-related cognitive functions, such as inhibitory control and error awareness. Although we do not have neuroimaging data from the BAT, it is based on the Go/NoGo format, which has previously shown a relationship between post-error slowing and error-related ACC activity (Garavan et al, 2002), replicating a relationship demonstrated with other cognitive tasks such as the flanker (Gehring et al, 1993) and Stroop tasks (Kerns et al, 2004, 2005). 
Despite this evidence, some studies have failed to demonstrate a relationship between error-related ACC activity and post-error slowing (Gehring and Fencsik, 2001), or have associated different neural signatures with post-error slowing, such as the ERP error positivity (Pe) waveform (Hajcak et al, 2003). Studies examining the diminished error-related ACC response of older adults have also failed to show that it bears any relationship to post-error slowing (Themanson et al, 2005; West and Moore, 2005), and studies examining drug-related increases (Riba et al, 2005a; Tieges et al, 2004) or decreases (Easdon et al, 2005; Riba et al, 2005b) in the ACC response to errors have likewise failed to show a relationship to post-error slowing. It is intriguing that those studies examining within-subject relations between ACC activity and post-error slowing have found significant correlations (eg, Gehring et al, 1993; Kerns et al, 2005), whereas the above-mentioned studies using between-group comparisons have not. Between-group comparisons may be influenced by other independent variables that distinguish the groups (for example, anatomical variability), which, while not directly related to performance monitoring, could potentially mask the ACC/post-error slowing relationship seen at the single-subject level.

The present result of intact post-error slowing and deficient post-error adaptation is remarkably consistent with two previous reports from the neuropsychological literature. Gehring and Knight (2000) examined a group of six brain-injured patients with focal lesions within the lateral prefrontal cortex, finding that response correction, but not post-error slowing, was significantly different from that of age-matched controls. ERP data from the brain-injured sample indicated a ‘normal’ error-related negativity (ERN) waveform; however, an ERN-like waveform of similar magnitude was also present on correct trials. Gehring and Knight (2000) suggested that the inability of the ERN signal to differentiate errors from correct trials argued against the models of executive control that postulated signalling by the ACC of the PFC, or the reverse, for exertion of greater cognitive control. They argued instead for a more complex model, in which the ACC detected properties of the stimuli that required information held in the prefrontal cortices for interpretation, and potentially also for implementation of corrective behavior. Swick and Turken (2002) presented a single case, RN, who had an extensive left hemisphere ACC lesion that was associated with impaired correction but intact post-error slowing when performing the Stroop task. ERP data from this patient indicated a similar result to Gehring and Knight's patients, with the ERN waveform unable to be distinguished from the equivalent negative ERP waveform during correct trials. In comparison to a control sample, both the corrected errors and overall errors ERN signals were diminished, suggesting that the detection and/or correction of errors could not be directly related to the magnitude of the ERN.

Theoretical and computational models of error processing also offer some predictions as to the relationship between error-related neural processes and post-error behavior. The reinforcement learning model (Holroyd and Coles, 2002; Holroyd et al, 2005; Nieuwenhuis et al, 2002, 2004) argues that errors are detected primarily by the basal ganglia, which compare known stimulus–response relationships to stimuli perceived and responses made. Although a full description of this complex model is beyond the scope of this paper, it does suggest that the ERN is the result of the basal ganglia signalling to the mesencephalic dopamine system, which in turn disinhibits motor neurons in the ACC. The ACC is thought to use this information to improve ongoing performance. The model predicted, as has been shown experimentally, that within individuals, larger ERN responses were associated with greater post-error slowing. Holroyd has argued that the size of the ERN, and indirectly the magnitude of post-error slowing, are linked to the frequency of targets. Frequent targets are typically answered correctly, hence the stimulus attains a large positive value. Consequently, errors on such frequent targets result in a large value change, from very good to very bad. A Go/NoGo task is not specifically considered by this model, though it appears reasonable to predict that NoGo errors would represent errors on frequent stimuli.
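The frequency argument can be illustrated with a deliberately simplified sketch. The code below uses a basic delta-rule value update rather than the temporal-difference model of Holroyd and Coles (2002), and every name and parameter in it (the learning rate, a reward of +1 for a correct response, and a reward of -1 for an error) is an assumption chosen purely for illustration. It shows only that the more often a stimulus has been answered correctly, the higher its learned value, and hence the larger the negative prediction error when an error finally occurs.

```python
from typing import Iterable


def learn_value(outcomes: Iterable[float], alpha: float = 0.1, v0: float = 0.0) -> float:
    """Delta-rule value estimate after a sequence of outcomes.

    Each outcome is a reward (assumed +1.0 for a correct response).
    alpha is an assumed learning rate; v0 is the starting value.
    """
    value = v0
    for reward in outcomes:
        value += alpha * (reward - value)  # move value toward the outcome
    return value


def error_prediction_error(value: float, error_reward: float = -1.0) -> float:
    """Prediction error produced when an error (assumed reward -1.0)
    occurs on a stimulus whose current learned value is `value`."""
    return error_reward - value


# A frequently encountered stimulus (50 correct responses) versus a
# rarely encountered one (5 correct responses).
frequent_value = learn_value([1.0] * 50)
rare_value = learn_value([1.0] * 5)

frequent_pe = error_prediction_error(frequent_value)
rare_pe = error_prediction_error(rare_value)

# The error on the frequent stimulus yields the more negative signal.
print(frequent_pe < rare_pe)
```

Under these assumptions the error on the frequent stimulus produces a prediction error close to -2, whereas the error on the rare stimulus produces one closer to -1.4, which is the sense in which the change goes "from very good to very bad".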

Unfortunately, neither this model nor others attempting to explain error-related neural and behavioral processes (Botvinick et al, 2001; Braver et al, 2002) have as yet attempted to explain the specific results obtained by either Gehring and Knight (2000) or Swick and Turken (2002). The deficient error correction observed in those studies (and the lower level of performance adaptation in the current study) appears consistent with the modelling from Holroyd and co-workers, as presumably diminished ACC activity is indicative of dysfunction in the cortical ‘interpreter’ of mesencephalic dopamine signals from the basal ganglia. However, the intact post-error slowing suggests that this behavioral change is not as closely linked to the ERN as suggested by the Holroyd model. It is possible that post-error slowing is linked indirectly to the ERN, or more directly to other error-related neural activity such as the Pe waveform. Although the Pe waveform has been localized to the rostral ACC region (Herrmann et al, 2004; Mathewson et al, 2005), other studies, including those that attempted to manipulate the awareness (or unawareness) of errors, have identified both prefrontal and parietal regions contributing to the Pe or error awareness (Brazdil et al, 2002; Nieuwenhuis et al, 2001). It appears increasingly likely that whereas certain regions are consistently linked with error-related neural activity, a network of regions contributes to post-error processes such as response slowing and performance adaptation. Neural dysfunction in part(s) of the network may therefore not disable post-error processes, but render them somewhat less effective, or produce subtle dissociations of the type seen in the present study. 
Alternatively, ACC activity may have a threshold-type relationship to post-error processes (Yeung et al, 2004), whereby a certain level of activity is sufficient to begin the cascade of error detection, post-error slowing and/or post-error adaptation, but the overall level of activity is not tightly coupled to these processes.

The proposed role of dopamine and the mesencephalic dopamine system in error processing may also have implications for cocaine users. Cocaine is believed to exert its reinforcing effects by blocking the re-uptake of dopamine and increasing its concentration in dopamine receptor-rich regions such as the ventral striatum and ACC (Koob and Bloom, 1988; Kuhar et al, 1991). Repeated exposure to this hyper-dopaminergic state has been suggested to account for decreased dopamine receptor levels in chronic users, and consequently, decreased metabolism in the ACC region, as this region has a dense concentration of these receptors (Volkow et al, 1991, 1999). Holroyd and co-workers argue that it is the phasic increases (during success) or decreases (during failure) in dopaminergic activity that lead to modulation of neuronal firing in the ACC, detection of errors and the post-error changes to cognitive behavior. Although speculative, this hypothesis appears consistent with both the results of the present study, which indicate deficits in error detection and (some) post-error adaptive changes of behavior following chronic cocaine use, and previous work demonstrating increased error-related ACC activity in drug-naïve participants following the acute administration of amphetamines (de Bruijn et al, 2004). What is particularly intriguing is that both studies demonstrate a relationship between apparent dopaminergic levels, ACC activity and self-reported assessments of performance, but not objective measures such as post-error slowing. De Bruijn et al (2004) found that participants administered amphetamines rated their performance as significantly better than during a placebo condition; however, no difference was evident in post-error slowing or other measures of behavior (ie, inhibition and conflict adaptation). 
Although these results appear to suggest a role for dopamine in subjective awareness of cognitive performance, further research is clearly required to test the relationship between dopamine, cingulate activity, error-related brain activity, and behavior.

The error awareness and adaptation deficits detected in cocaine users are of direct consequence to overall cognitive functioning, and potentially have wider implications for the addiction process. Deficits in experimental measures of error awareness have been shown to relate to failures in remediating everyday lapses of cognitive performance (Giovannetti et al, 2002; Hart et al, 1998). Similarly, dysfunctional neural responses to errors in other clinical groups have been shown to relate to their general symptom profile. For example, patients with schizophrenia have diminished levels of ACC activity in response to errors (Kerns et al, 2005; Mathalon et al, 2002), with the level of dysfunction relating to the severity of disorganization symptoms (ie, formal thought disorder, inappropriate affect, and bizarre behavior) (Bates et al, 2002; Berman et al, 1997; Frith and Done, 1989; Liddle et al, 1992). Alzheimer's disease has also been associated with a progressive deterioration in error awareness (Cahn et al, 1997; Neils-Strunjas et al, 1998) and the neural response to errors (Mathalon et al, 2003).

Although a relationship between error-related neural and behavioral processes and the maintenance of drug abuse has not been established (Garavan and Stout, 2005), these deficits are indicative of dysfunction in the system responsible for executive or cognitive control (Posner and Rothbart, 1998; Ridderinkhof et al, 2004). Dysfunctional cognitive control has been highlighted as critical to the maintenance of drug addiction (Lyvers, 2000), particularly in relation to impulse control and attentional biases to drug stimuli. For example, higher levels of attentional bias towards drug-related stimuli have been shown to relate to both diminished cognitive control and poorer treatment outcomes for both cocaine (Carpenter et al, 2005) and alcohol abusers (Cox et al, 2002). The present results may help in specifying the particular cognitive control deficits of current cocaine users.

References

Bates AT, Kiehl KA, Laurens KR, Liddle PF (2002). Error-related negativity and correct response negativity in schizophrenia. Clin Neurophysiol 113: 1454–1463.

Bellgrove MA, Hester R, Garavan H (2004). The functional neuroanatomical correlates of response variability: evidence from a response inhibition task. Neuropsychologia 42: 1910–1916.

Berman I, Viegner B, Merson A, Allan E, Pappas D, Green AI (1997). Differential relationships between positive and negative symptoms and neuropsychological deficits in schizophrenia. Schizophr Res 25: 1–10.

Bolla K, Ernst M, Kiehl K, Mouratidis M, Eldreth D, Contoreggi C et al (2004). Prefrontal cortical dysfunction in abstinent cocaine abusers. J Neuropsychiat Clin Neurosci 16: 456–464.

Bolla KI, Rothman R, Cadet JL (1999). Dose-related neurobehavioral effects of chronic cocaine use. J Neuropsychiat Clin Neurosci 11: 361–369.

Botvinick MM, Braver TS, Barch DM, Carter CS, Cohen JD (2001). Conflict monitoring and cognitive control. Psychol Rev 108: 624–652.

Braver TS, Cohen JD, Barch DM (2002). The role of prefrontal cortex in normal and disordered cognitive control: a cognitive neuroscience perspective. In: Stuss DT, Knight RT (eds). Principles of Frontal Lobe Function. Oxford University Press: London. pp 428–447.

Brazdil M, Roman R, Falkenstein M, Daniel P, Jurak P, Rektor I (2002). Error processing—evidence from intracerebral ERP recordings. Exp Brain Res 146: 460–466.

Bunge SA, Ochsner KN, Desmond JE, Glover GH, Gabrieli JD (2001). Prefrontal regions involved in keeping information in and out of mind. Brain 124: 2074–2086.

Cahn DA, Salmon DP, Bondi MW, Butters N, Johnson SA, Wiederholt WC et al (1997). A population-based analysis of qualitative features of the neuropsychological test performance of individuals with dementia of the Alzheimer type: implications for individuals with questionable dementia. J Int Neuropsychol Soc 3: 387–393.

Carpenter KM, Schreiber E, Church S, McDowell D (2005). Drug Stroop performance: relationships with primary substance of use and treatment outcome in a drug-dependent outpatient sample. Addict Behav 31: 174–181.

Carter CS, Braver TS, Barch DM, Botvinick MM, Noll D, Cohen JD (1998). Anterior cingulate cortex, error detection, and the online monitoring of performance. Science 280: 747–749.

Chatterji P (2006). Illicit drug use and educational attainment. Health Econ 15: 489–511.

Childress AR, Mozley PD, McElgin W, Fitzgerald J, Reivich M, O'Brien CP (1999). Limbic activation during cue-induced cocaine craving. Am J Psychiatry 156: 11–18.

Cox WM, Hogan LM, Kristian MR, Race JH (2002). Alcohol attentional bias as a predictor of alcohol abusers' treatment outcome. Drug Alcohol Depend 68: 237–243.

Curran HV, Brignell C, Fletcher S, Middleton P, Henry J (2002). Cognitive and subjective dose-response effects of acute oral Delta-sup-9-tetrahydrocannabinol (THC) in infrequent cannabis users. Psychopharmacology (Berlin) 164: 61–70.

de Bruijn ER, Hulstijn W, Verkes RJ, Ruigt GS, Sabbe BG (2004). Drug-induced stimulation and suppression of action monitoring in healthy volunteers. Psychopharmacology (Berlin) 177: 151–160.

de Fockert JW, Rees G, Frith CD, Lavie N (2001). The role of working memory in visual selective attention. Science 291: 1803–1806.

Di Sclafani V, Tolou-Shams M, Price LJ, Fein G (2002). Neuropsychological performance of individuals dependent on crack-cocaine, or crack-cocaine and alcohol, at 6 weeks and 6 months of abstinence. Drug Alcohol Depend 66: 161–171.

Easdon C, Izenberg A, Armilio ML, Yu H, Alain C (2005). Alcohol consumption impairs stimulus- and error-related processing during a Go/No-Go Task. Brain Res Cogn Brain Res 25: 873–883.

Fant RV, Heishman SJ, Bunker EB, Pickworth WB (1998). Acute and residual effects of marijuana in humans. Pharmacol Biochem Behav 60: 777–784.

Fillmore MT, Rush CR (2002). Impaired inhibitory control of behavior in chronic cocaine users. Drug Alcohol Depend 66: 265–273.

Forman SD, Dougherty G, Casey BJ, Siegle G, Braver T, Barch D et al (2004). Opiate addicts lack error-dependent activation of rostral anterior cingulate. Biol Psychiatry 55: 531–537.

Frith CD, Done DJ (1989). Experiences of alien control in schizophrenia reflect a disorder in the central monitoring of action. Psychol Med 19: 359–363.

Garavan H, Pankiewicz J, Bloom A, Cho JK, Sperry L, Ross TJ et al (2000). Cue-induced cocaine craving: neuroanatomical specificity for drug users and drug stimuli. Am J Psychiatry 157: 1789–1798.

Garavan H, Ross TJ, Murphy K, Roche RA, Stein EA (2002). Dissociable executive functions in the dynamic control of behavior: inhibition, error detection, and correction. Neuroimage 17: 1820–1829.

Garavan H, Stout JC (2005). Neurocognitive insights into substance abuse. Trends Cogn Sci 9: 195–201.

Gehring WJ, Fencsik DE (2001). Functions of the medial frontal cortex in the processing of conflict and errors. J Neurosci 21: 9430–9437.

Gehring WJ, Goss B, Coles M, Meyer D, Donchin E (1993). A neural system for error detection and compensation. Psychol Sci 4: 385–390.

Gehring WJ, Himle J, Nisenson LG (2000). Action-monitoring dysfunction in obsessive-compulsive disorder. Psychol Sci 11: 1–6.

Gehring WJ, Knight RT (2000). Prefrontal-cingulate interactions in action monitoring. Nat Neurosci 3: 516–520.

Gendle MH, Strawderman MS, Mactutus CF, Booze RM, Levitsky DA, Strupp BJ (2003). Impaired sustained attention and altered reactivity to errors in an animal model of prenatal cocaine exposure. Brain Res Dev Brain Res 147: 85–96.

Gendle MH, White TL, Strawderman M, Mactutus CF, Booze RM, Levitsky DA et al (2004). Enduring effects of prenatal cocaine exposure on selective attention and reactivity to errors: evidence from an animal model. Behav Neurosci 118: 290–297.

Giovannetti T, Libon DJ, Hart T (2002). Awareness of naturalistic action errors in dementia. J Int Neuropsychol Soc 8: 633–644.

Goldstein RZ, Volkow ND, Wang GJ, Fowler JS, Rajaram S (2001). Addiction changes orbitofrontal gyrus function: involvement in response inhibition. Neuroreport 12: 2595–2599.

Grant S, London ED, Newlin DB, Villemagne VL, Liu X, Contoreggi C et al (1996). Activation of memory circuits during cue-elicited cocaine craving. Proc Natl Acad Sci USA 93: 12040–12045.

Gruber SA, Yurgelun-Todd DA (2005). Neuroimaging of marijuana smokers during inhibitory processing: a pilot investigation. Brain Res Cogn Brain Res 23: 107–118.

Hajcak G, McDonald N, Simons RF (2003). To err is autonomic: error-related brain potentials, ANS activity, and post-error compensatory behavior. Psychophysiology 40: 895–903.

Hart T, Giovannetti T, Montgomery MW, Schwartz MF (1998). Awareness of errors in naturalistic action after traumatic brain injury. J Head Trauma Rehabil 13: 16–28.

Herrmann MJ, Rommler J, Ehlis AC, Heidrich A, Fallgatter AJ (2004). Source localization (LORETA) of the error-related-negativity (ERN/Ne) and positivity (Pe). Brain Res Cogn Brain Res 20: 294–299.

Hester R, Foxe JJ, Molholm S, Shpaner M, Garavan H (2005). Neural mechanisms involved in error processing: a comparison of errors made with and without awareness. Neuroimage 27: 602–608.

Hester R, Garavan H (2004). Executive dysfunction in cocaine addiction: evidence for discordant frontal, cingulate, and cerebellar activity. J Neurosci 24: 11017–11022.

Hoff AL, Riordan H, Morris L, Cestaro V, Wieneke M, Alpert R et al (1996). Effects of crack cocaine on neurocognitive function. Psychiatry Res 60: 167–176.

Holroyd CB, Coles MG (2002). The neural basis of human error processing: reinforcement learning, dopamine, and the error-related negativity. Psychol Rev 109: 679–709.

Holroyd CB, Yeung N, Coles MG, Cohen JD (2005). A mechanism for error detection in speeded response time tasks. J Exp Psychol Gen 134: 163–191.

Jansma JM, Ramsey NF, Coppola R, Kahn RS (2000). Specific versus nonspecific brain activity in a parametric N-back task. Neuroimage 12: 688–697.

Kaufman JN, Ross TJ, Stein EA, Garavan H (2003). Cingulate hypoactivity in cocaine users during a GO/NOGO task as revealed by event-related fMRI. J Neurosci 23: 7839–7843.

Kerns JG, Cohen JD, MacDonald III AW, Cho RY, Stenger VA, Carter CS (2004). Anterior cingulate conflict monitoring and adjustments in control. Science 303: 1023–1026.

Kerns JG, Cohen JD, MacDonald III AW, Johnson MK, Stenger VA, Aizenstein H et al (2005). Decreased conflict- and error-related activity in the anterior cingulate cortex in subjects with schizophrenia. Am J Psychiatry 162: 1833–1839.

Kilts CD, Schweitzer JB, Quinn CK, Gross RE, Faber TL, Muhammad F et al (2001). Neural activity related to drug craving in cocaine addiction. Arch Gen Psychiatry 58: 334–341.

Klingberg T (1998). Concurrent performance of two working memory tasks: potential mechanisms of interference. Cereb Cortex 8: 593–601.

Koob GF, Bloom FE (1988). Cellular and molecular mechanisms of drug dependence. Science 242: 715–723.

Kubler A, Murphy K, Garavan H (2005). Cocaine dependence and attention switching within and between verbal and visuospatial working memory. Eur J Neurosci 21: 1984–1992.

Kuhar MJ, Ritz MC, Boja JW (1991). The dopamine hypothesis of the reinforcing properties of cocaine. Trends Neurosci 14: 299–302.

Liddle PF, Friston KJ, Frith CD, Hirsch SR, Jones T, Frackowiak RS (1992). Patterns of cerebral blood flow in schizophrenia. Br J Psychiatry 160: 179–186.

London ED, Berman SM, Voytek B, Simon SL, Mandelkern MA, Monterosso J et al (2005). Cerebral metabolic dysfunction and impaired vigilance in recently abstinent methamphetamine abusers. Biol Psychiatry 58: 770–778.

Lyvers M (2000). ‘Loss of control’ in alcoholism and drug addiction: a neuroscientific interpretation. Exp Clin Psychopharmacol 8: 225–249.

Maas LC, Lukas SE, Kaufman MJ, Weiss RD, Daniels SL, Rogers VW et al (1998). Functional magnetic resonance imaging of human brain activation during cue-induced cocaine craving. Am J Psychiatry 155: 124–126.

MacDonald III AW, Cohen JD, Stenger VA, Carter CS (2000). Dissociating the role of the dorsolateral prefrontal and anterior cingulate cortex in cognitive control. Science 288: 1835–1838.

Mathalon DH, Bennett A, Askari N, Gray EM, Rosenbloom MJ, Ford JM (2003). Response-monitoring dysfunction in aging and Alzheimer's disease: an event-related potential study. Neurobiol Aging 24: 675–685.

Mathalon DH, Fedor M, Faustman WO, Gray M, Askari N, Ford JM (2002). Response-monitoring dysfunction in schizophrenia: an event-related brain potential study. J Abnorm Psychol 111: 22–41.

Mathewson KJ, Dywan J, Segalowitz SJ (2005). Brain bases of error-related ERPs as influenced by age and task. Biol Psychol 70: 88–104.

Miller NS, Gold MS (1994). Dissociation of ‘conscious desire’ (craving) from and relapse in alcohol and cocaine dependence. Ann Clin Psychiatry 6: 99–106.

Morgan RE, Garavan HP, Mactutus CF, Levitsky DA, Booze RM, Strupp BJ (2002). Enduring effects of prenatal cocaine exposure on attention and reaction to errors. Behav Neurosci 116: 624–633.

Neils-Strunjas J, Shuren J, Roeltgen D, Brown C (1998). Perseverative writing errors in a patient with Alzheimer's disease. Brain Lang 63: 303–320.

Nieuwenhuis S, Holroyd CB, Mol N, Coles MG (2004). Reinforcement-related brain potentials from medial frontal cortex: origins and functional significance. Neurosci Biobehav Rev 28: 441–448.

Nieuwenhuis S, Ridderinkhof KR, Blom J, Band G, Kok A (2001). Error-related brain potentials are differentially related to awareness of response errors: evidence from an antisaccade task. Psychophysiology 38: 752–760.

Nieuwenhuis S, Ridderinkhof KR, Talsma D, Coles MG, Holroyd CB, Kok A et al (2002). A computational account of altered error processing in older age: dopamine and the error-related negativity. Cogn Affect Behav Neurosci 2: 19–36.

Pope Jr HG, Gruber AJ, Hudson JI, Huestis MA, Yurgelun-Todd D (2001). Neuropsychological performance in long-term cannabis users. Arch Gen Psychiatry 58: 909–915.

Posner MI, Rothbart MK (1998). Attention, self-regulation and consciousness. Philos Trans R Soc Lond B Biol Sci 353: 1915–1927.

Riba J, Rodriguez-Fornells A, Morte A, Munte TF, Barbanoj MJ (2005a). Noradrenergic stimulation enhances human action monitoring. J Neurosci 25: 4370–4374.

Riba J, Rodriguez-Fornells A, Munte TF, Barbanoj MJ (2005b). A neurophysiological study of the detrimental effects of alprazolam on human action monitoring. Brain Res Cogn Brain Res 25: 554–565.

Ridderinkhof KR, de Vlugt Y, Bramlage A, Spaan M, Elton M, Snel J et al (2002). Alcohol consumption impairs detection of performance errors in mediofrontal cortex. Science 298: 2209–2211.

Ridderinkhof KR, Ullsperger M, Crone EA, Nieuwenhuis S (2004). The role of the medial frontal cortex in cognitive control. Science 306: 443–447.

Swick D, Turken AU (2002). Dissociation between conflict detection and error monitoring in the human anterior cingulate cortex. Proc Natl Acad Sci USA 99: 16354–16359.

Themanson JR, Hillman CH, Curtin JJ (2005). Age and physical activity influences on action monitoring during task switching. Neurobiol Aging 27: 1335–1345.

Tieges Z, Ridderinkhof KR, Snel J, Kok A (2004). Caffeine strengthens action monitoring: evidence from the error-related negativity. Brain Res Cogn Brain Res 21: 87–93.

Ullsperger M, von Cramon DY (2001). Subprocesses of performance monitoring: a dissociation of error processing and response competition revealed by event-related fMRI and ERPs. Neuroimage 14: 1387–1401.

Verdejo-Garcia A, Bechara A, Recknor EC, Perez-Garcia M (2006). Executive dysfunction in substance dependent individuals during drug use and abstinence: an examination of the behavioral, cognitive and emotional correlates of addiction. J Int Neuropsychol Soc 12: 405–415.

Volkow ND, Fowler JS, Wang GJ (1999). Imaging studies on the role of dopamine in cocaine reinforcement and addiction in humans. J Psychopharmacol (Oxford) 13: 337–345.

Volkow ND, Fowler JS, Wolf AP, Hitzemann R, Dewey S, Bendriem B et al (1991). Changes in brain glucose metabolism in cocaine dependence and withdrawal. Am J Psychiatry 148: 621–626.

West R, Moore K (2005). Adjustments of cognitive control in younger and older adults. Cortex 41: 570–581.

Yeung N, Cohen JD, Botvinick MM (2004). The neural basis of error detection: conflict monitoring and the error-related negativity. Psychol Rev 111: 931–959.

Acknowledgements

This research was supported by USPHS Grants DA14100, DA018685 and GCRC M01 RR00058, ARC Grant DP0556602 (RH) and IRCSET Grant PD/2004/29 (CSF). The assistance of Dr Jacqueline Kaufman and Veronica Dixon is gratefully acknowledged.

Cite this article

Hester, R., Simões-Franklin, C. & Garavan, H. Post-Error Behavior in Active Cocaine Users: Poor Awareness of Errors in the Presence of Intact Performance Adjustments. Neuropsychopharmacol 32, 1974–1984 (2007). https://doi.org/10.1038/sj.npp.1301326
