Abstract

According to the second law of thermodynamics, for every transformation performed on a system which is in contact with an environment of fixed temperature, the average extracted work is bounded by the decrease of the free energy of the system. However, in a single realization of a generic process, the extracted work is subject to statistical fluctuations which may allow for probabilistic violations of the previous bound. We are interested in enhancing this effect, i.e. we look for thermodynamic processes that maximize the probability of extracting work above a given arbitrary threshold. For any process obeying the Jarzynski identity, we determine an upper bound for the work extraction probability that depends also on the minimum amount of work that we are willing to extract in case of failure, or on the average work we wish to extract from the system. Then we show that this bound can be saturated within the thermodynamic formalism of quantum discrete processes composed by sequences of unitary quenches and complete thermalizations. We explicitly determine the optimal protocol which is given by two quasi-static isothermal transformations separated by a finite unitary quench.

Introduction

In classical thermodynamics1 the (Helmholtz) free energy of a system at thermal equilibrium is defined as F := U − TS, where U is the internal energy, T is the temperature and S is the entropy. Whenever the environment is characterized by a fixed and unique temperature T, in every process connecting two states having free energy Fin and Ffin respectively, the work W done by the system is upper bounded by the free energy reduction

$$ W \le F_{\mathrm{in}} - F_{\mathrm{fin}} =: -\Delta F. \tag{1} $$

The previous inequality is a direct manifestation of the second law of thermodynamics. Indeed for a cyclic process ΔF = 0 and the bound (1) states that no positive work can be extracted from a single heat bath. However, according to the microscopic theory of statistical mechanics1, the work done by a system in a given transformation is non-deterministic and can present statistical fluctuations2,3,4,5. For macroscopic systems, like a gas in contact with a moving piston, these fluctuations are usually negligible and one can replace all random variables with their averages, recovering the thermodynamic bound (1). For sufficiently “small” systems, i.e. those in which the number of degrees of freedom is limited and the energies involved are of the order of kBT, fluctuations are important and for each repetition of a given protocol the system can produce a different amount of work, which can be described as a random variable with an associated probability distribution3,4,5. A further source of difficulty in the description of “small” systems, like nano-scale devices, molecules, atoms, electrons, etc., is that quantum effects are often non-negligible and quantum fluctuations can also affect the work extraction process6,7,8,9.

The properties and the constraints characterizing the work probability distribution of a given process are captured by the so called fluctuation theorems which can be defined both for classical2,5,10,11,12 and quantum systems13,14,15,16. These theorems can be seen as generalizations of the second law for processes characterized by large statistical fluctuations. Indeed it can be shown11,12 that the expectation value of the work distribution always satisfies the bound (1), while for a single-shot realization of the protocol it is in principle possible to extract a larger amount of work at the price of succeeding with a small probability3,4,12,17,18.

Our aim is to identify the optimal protocols for maximizing the probability of extracting work above a given threshold Λ, arbitrarily larger than the bound (1). Unlike standard thermodynamics, in which the optimal procedures are usually identified with the quasi-static (reversible) transformations saturating the inequality (1), the problem we are considering requires fluctuations in order to probabilistically violate the second law. Consequently, in this case optimality will require some degree of irreversibility. In our analysis we shall focus on processes obeying the Jarzynski identity11,13,14,15, which include all those transformations where a system originally at thermal equilibrium evolves under an externally controlled, time-dependent Hamiltonian, as well as proper concatenations of similar transformations. In this context, as a first step we identify an upper bound for the probability of work extraction above the threshold Λ which depends on the minimum amount of work Wmin that we are willing to extract in case of failure of the procedure. We also identify the class of optimal protocols that enable one to saturate such a bound. These consist of two quasi-static transformations separated by a single, abrupt modification of the Hamiltonian (unitary quench), the associated work distribution being characterised by only two possible outcomes: one arbitrarily above the bound (1) (success) and one below (failure). Explicit examples are presented in the context of discrete thermal processes19,20 and in the context of one-molecule Szilard-like heat engines21.

In the second part of the paper we focus instead on the upper bound for the probability of work extraction above a given threshold Λ which applies to all those processes that ensure a fixed value μ of the average extracted work. Also in this case we present explicit protocols which enable one to saturate the bound: it turns out that they belong to the same class as the optimal protocols presented in the first part of the manuscript (i.e. two quasi-static transformations separated by a single unitary quench).

Work Extraction Above Threshold Under Minimal Work Constraint

In 199711, motivated by molecular biology experiments, C. Jarzynski derived an identity characterizing the probability distribution P(W) of the work done by a system which is initially in a thermal state of temperature T and is subject to a process interpolating from Hin to Hfin. The process can be a Hamiltonian transformation or, more generally, an open dynamics obeying the requirement of microreversibility10. The identity is the following:

$$ \langle e^{\beta W} \rangle := \int dW\, P(W)\, e^{\beta W} = e^{-\beta \Delta F}, \tag{2} $$

where $\beta := 1/(k_B T)$ and ΔF = Ffin − Fin, with Fin, Ffin being the free energies associated to the canonical thermal states with Hamiltonian Hin and Hfin respectively. Notice that, while Fin corresponds to the actual free energy of the initial state, Ffin is not directly related to the final state, which may be out of equilibrium, but only to the final Hamiltonian (in all the processes considered in this work, however, the initial and final states are always thermal and Ffin is also the actual free energy of the system). Notice also that, by a simple convexity argument (Jensen's inequality) applied to the exponential function in Eq. (2), one gets the inequality

$$ \langle W \rangle \le -\Delta F, \tag{3} $$

which is the counterpart of (1) for non-deterministic processes.

For quantum systems, because of the intrinsic uncertainties characterizing quantum states, identifying a proper definition of work is still a matter of research6,9,22,23,24. However, if we assume that the energy of the system is measured before and after a given unitary process, the work extracted during the process is operationally well defined as the decrease of the measured energy. Also in this quantum scenario, it can be shown13,14,15 that the work distribution obeys the Jarzynski identity (2).

Among all (unitary or non-unitary) processes interpolating from an initial Hamiltonian Hin to a final Hamiltonian Hfin and fulfilling (2), we are interested in maximizing the probability of extracting work above a given arbitrary threshold Λ, i.e. in computing the quantity

$$ \max_{\text{processes}} P(W \ge \Lambda), \tag{4} $$

where

$$ P(W \ge \Lambda) := \int_{\Lambda}^{\infty} dW\, P(W). \tag{5} $$

This problem is particularly interesting and non-trivial only when the threshold is beyond the limit (1) imposed by the second law, i.e. when Λ > −ΔF. Indeed for lower values of Λ, it is known that the probability (4) can be maximized to 1 by an arbitrary thermodynamically reversible (quasi-static) process, deterministically extracting W = −ΔF. Exploiting statistical fluctuations, larger values of work can be extracted3,4,12,17,18. But what are the corresponding probabilities and the associated optimal processes?

To tackle this problem we start by considering those processes which, besides fulfilling (2), also satisfy the constraint

$$ P(W) = 0 \quad \text{for all } W < W_{\min}, \tag{6} $$

with Wmin an assigned value that is smaller than or equal to both Λ (a consistency condition, Λ being the work threshold above which we would like to operate) and −ΔF (by construction, the latter being an upper bound to the average work of the process, see Eq. (3)). This corresponds to setting a lower limit on the amount of work that we are willing to extract in the worst-case scenario. The unconstrained scenario is recovered by setting Wmin → −∞, whereas taking Wmin = 0 selects those processes where, in all statistical realizations, no work is ever provided to the system.

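Under the above hypothesis the following inequality can be established

$$ P(W \ge \Lambda) \le \frac{e^{-\beta \Delta F} - e^{\beta W_{\min}}}{e^{\beta \Lambda} - e^{\beta W_{\min}}}, \tag{7} $$

which in the unrestricted regime Wmin → −∞ yields

$$ P(W \ge \Lambda) \le e^{-\beta(\Delta F + \Lambda)}, \tag{8} $$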

that was already demonstrated in ref. 12. Again we stress that both bounds (7) and (8) are relevant only for Λ > −ΔF, while for Λ ≤ −ΔF they can be trivially replaced by P (W ≥ Λ) ≤ 1.

The proof of the general bound (7) follows straightforwardly from the identity (2) and the definitions of Λ and Wmin. Indeed, we can split the integral appearing in (2) as the sum of two terms that can be independently bounded as follows:

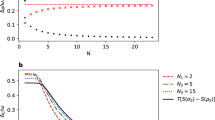

Equation (7) finally follows by solving the resulting inequality for P(W ≥ Λ). We summarize the bounds given in this paragraph in Fig. 1.
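The dependence of the bound on Wmin can be explored numerically. The following is a minimal sketch, not part of the original derivation: the function name `work_probability_bound` and the parameter values (β = 1, −ΔF = 1, Λ = 2) are illustrative assumptions. It evaluates the right-hand side of (7) and shows that it approaches the unconstrained bound (8) as Wmin decreases.

```python
import math

def work_probability_bound(threshold, w_min, delta_f, beta=1.0):
    """Upper bound (7) on P(W >= threshold); assumes w_min <= min(threshold, -delta_f)."""
    if threshold <= -delta_f:
        return 1.0  # trivial regime: the bound is replaced by 1
    if w_min == -math.inf:
        return math.exp(-beta * (delta_f + threshold))  # unconstrained bound (8)
    numerator = math.exp(-beta * delta_f) - math.exp(beta * w_min)
    denominator = math.exp(beta * threshold) - math.exp(beta * w_min)
    return numerator / denominator

# Illustrative parameters (assumptions): beta = 1, -delta_f = 1, threshold = 2.
for w_min in (0.0, -1.0, -5.0, -math.inf):
    print(w_min, work_probability_bound(2.0, w_min, delta_f=-1.0))
```

Lowering Wmin, i.e. accepting a larger loss in case of failure, increases the allowed success probability, up to the limit set by (8).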

Figure 1: Contour plot of the optimal probability of Eq. (12) as a function of Λ and Wmin, for a fixed value of β and −ΔF = 1.

If Λ ≤ −ΔF the probability of success is equal to 1 independently of Wmin. For Λ ≥ −ΔF the bound is non-trivial and is given by Eq. (7). Values of Wmin larger than Λ or larger than −ΔF need not be considered.

Attainability of the bound (7)

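By close inspection of the derivation (9) it is clear that the only way to saturate the inequality (7) is by means of processes whose work distribution is the convex combination of two delta functions centered respectively at W = Wmin and W = Λ, i.e.

$$ P(W) = (1 - p)\, \delta(W - W_{\min}) + p\, \delta(W - \Lambda), \tag{10} $$

with p = P(W ≥ Λ) equal to the term on the right-hand side of Eq. (7), i.e.

$$ p = \frac{e^{-\beta \Delta F} - e^{\beta W_{\min}}}{e^{\beta \Lambda} - e^{\beta W_{\min}}}. \tag{11} $$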

Yet it is not obvious whether such transformations exist, nor how one could implement them while still obeying the Jarzynski identity. To address this question, in the following we present two different schemes, both capable of fulfilling these requirements, hence proving that the following identity holds:

$$ \max_{\text{processes}} P(W \ge \Lambda) = \frac{e^{-\beta \Delta F} - e^{\beta W_{\min}}}{e^{\beta \Lambda} - e^{\beta W_{\min}}} \tag{12} $$

(see Fig. 1 for a contour plot of this optimal probability). The first example is based on a specific theoretical framework (the discrete quantum process approach) introduced in ref. 20 for modeling thermodynamic transformations applied to quantum systems. While the attainability of (7) does not require us to consider a full quantum treatment (only the presence of discrete energy exchanges being needed, not quantum coherence), the use of this technique turns out to be useful as it provides a simplified, yet fully exhaustive description of the involved transformations. The second example is instead fully classical and it is based on an idealized one-molecule Szilard-like heat engine.
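As a consistency check on the two-delta structure described above, the sketch below builds the distribution with weight p on W = Λ and 1 − p on W = Wmin and verifies numerically that it satisfies the Jarzynski identity. The helper names and the parameter values are illustrative assumptions, not taken from the paper.

```python
import math

def two_point_distribution(threshold, w_min, delta_f, beta=1.0):
    """Return [(work value, probability), ...] for the saturating two-delta distribution."""
    p = (math.exp(-beta * delta_f) - math.exp(beta * w_min)) / (
        math.exp(beta * threshold) - math.exp(beta * w_min)
    )
    return [(w_min, 1.0 - p), (threshold, p)]

def exponential_average(dist, beta=1.0):
    """Compute <exp(beta * W)> for a discrete work distribution."""
    return sum(prob * math.exp(beta * w) for w, prob in dist)

# Illustrative parameters (assumptions): beta = 1, -delta_f = 1, threshold = 2, w_min = 0.
dist = two_point_distribution(threshold=2.0, w_min=0.0, delta_f=-1.0)
mean_work = sum(prob * w for w, prob in dist)
print(exponential_average(dist), math.exp(1.0), mean_work)
```

The average work of this distribution stays below −ΔF, as required by (3), even though the successful outcome lies above it.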

Optimal transformations by discrete quantum processes

Following the approach presented in refs 19 and 20, in this section we consider protocols composed of the concatenation of only two types of operations: discrete unitary quenches (DUQs) and discrete thermalizing transformations (DTTs). A DUQ applied to a system described by an input density matrix ρ and Hamiltonian H is an arbitrary change of the latter which does not affect the former, i.e.

$$ (\rho, H) \xrightarrow{\ \mathrm{DUQ}\ } (\rho, H'), \tag{13} $$

H′ being the final Hamiltonian of the system. A DTT instead is a complete thermalization towards a Gibbs state of temperature T without changing the system Hamiltonian, i.e.

$$ (\rho, H) \xrightarrow{\ \mathrm{DTT}\ } \left(\frac{e^{-\beta H}}{Z},\, H\right), \tag{14} $$

with $\beta = 1/(k_B T)$ the inverse temperature and Z := Tr[e−βH] the associated partition function. Operationally, a DUQ can be implemented by an instantaneous change of the Hamiltonian realized while keeping the system thermally isolated. A DTT instead can be obtained by weakly coupling the system with the environment for a sufficiently long time. The advantage of introducing such elementary processes is that the energy exchanged during a DUQ and a DTT can be thermodynamically interpreted as work and heat respectively, without the ambiguity typical of continuous transformations in which the Hamiltonian and the state are changed simultaneously. On the other hand, as shown in refs 19 and 20, continuous transformations can be well approximated by a sequence of infinitesimal DUQs and DTTs.

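In order to show that Eq. (7) can be saturated we then focus on an N-long sequence of alternating DUQs and DTTs operated at the same temperature T and connecting an input Gibbs state $\rho_0$ to a final Gibbs state $\rho_N$, with $\rho_j := e^{-\beta H_j}/Z_j$ and $Z_j := \mathrm{Tr}[e^{-\beta H_j}]$, via the following steps (an example for N = 2 is shown in Fig. 2):

$$ (\rho_0, H_0) \xrightarrow{\mathrm{DUQ}} (\rho_0, H_1) \xrightarrow{\mathrm{DTT}} (\rho_1, H_1) \xrightarrow{\mathrm{DUQ}} \cdots \xrightarrow{\mathrm{DUQ}} (\rho_{N-1}, H_N) \xrightarrow{\mathrm{DTT}} (\rho_N, H_N), \tag{15} $$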

where for j = 0, 1, ···, N, Hj represents the Hamiltonian of the system at the end of the DUQ of the j-th step and where, for ease of notation, we set H0 := Hin and HN := Hfin. As a further assumption we shall also restrict the analysis to those cases where all the Hj entering the sequence (the initial and the final one included) mutually commute, i.e. [Hj, Hj+1] = 0. Accordingly the action of each DUQ corresponds to a simple shift of the energy levels without changing the corresponding eigenstates:

$$ H_j = \sum_k E_k^{(j)}\, |k\rangle\langle k| \;\longrightarrow\; H_{j+1} = \sum_k E_k^{(j+1)}\, |k\rangle\langle k|. \tag{16} $$

Also, since all the Gibbs states $e^{-\beta H_j}/Z_j$ are diagonal in the same energy basis $\{|k\rangle\}$, quantum coherence will not play any role in the process, meaning that the results we obtain are directly applicable to classical models. This is not a strong limitation since, as we are going to show, thermodynamically optimal processes saturating (7) are already obtainable within this limited set of semi-classical operations.

To determine the probability distribution of work for a generic sequence (15), observe that in the j-th step work can be extracted from (or injected into) the system only during the associated DUQ20. Here the state is described by the density matrix $e^{-\beta H_{j-1}}/Z_{j-1} = \sum_k p_k^{(j-1)} |k\rangle\langle k|$, with

$$ p_k^{(j-1)} := \frac{e^{-\beta E_k^{(j-1)}}}{Z_{j-1}} \tag{17} $$

being the probability of finding it in the k-th energy eigenstate, whose energy passes from $E_k^{(j-1)}$ to $E_k^{(j)}$ during the quench. When this happens the system acquires an $E_k^{(j)} - E_k^{(j-1)}$ increment of internal energy, corresponding to an amount $E_k^{(j-1)} - E_k^{(j)}$ of work production (the system being thermally isolated during each DUQ). Accordingly the probability distribution of the work done by the system during the j-th step can be expressed as the sum of a collection of Dirac delta functions:

$$ P_j(W_j) = \sum_k p_k^{(j-1)}\, \delta\big(W_j - E_k^{(j-1)} + E_k^{(j)}\big). \tag{18} $$

At the next step the system first thermalizes via a DTT which, independently of the previous history of the process, brings it into the Gibbs state $e^{-\beta H_j}/Z_j$, and then undergoes a new DUQ that produces an extra amount of work Wj+1 whose statistical distribution Pj+1(Wj+1) can be expressed as in Eq. (18) by replacing j with j + 1. The total work W extracted during the whole transformation (15) can finally be computed by summing all the Wj’s, the resulting probability distribution being

$$ P(W) = \int dW_1 \cdots dW_N\; P_1(W_1) \cdots P_N(W_N)\; \delta\Big(W - \sum_{j=1}^{N} W_j\Big), \tag{19} $$

which can be easily shown to satisfy the Jarzynski identity (2) (see section Methods) and hence the inequality (7) which follows from it.
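The claim can be checked by brute force for small sequences. The sketch below is an illustration under stated assumptions: the function names, the list-of-levels representation and the numerical energy values are hypothetical. It enumerates all outcomes of a sequence of commuting quenches, each followed by a complete thermalization, and compares the exponential average of the work with e−βΔF.

```python
import math
from itertools import product

def free_energy(energies, beta=1.0):
    """Free energy -ln(Z)/beta of the Gibbs state with the given energy levels."""
    return -math.log(sum(math.exp(-beta * e) for e in energies)) / beta

def work_distribution(levels, beta=1.0):
    """levels[j][k] is the energy of level k in Hamiltonian H_j (j = 0..N).

    Returns a dict mapping total extracted work to its probability.
    """
    steps = []
    for before, after in zip(levels, levels[1:]):
        z = sum(math.exp(-beta * e) for e in before)
        # Quench: level k is occupied with Gibbs weight and yields work E_before - E_after.
        steps.append([(e0 - e1, math.exp(-beta * e0) / z) for e0, e1 in zip(before, after)])
    dist = {}
    for outcome in product(*steps):  # steps are independent thanks to the thermalizations
        work = sum(w for w, _ in outcome)
        prob = math.prod(p for _, p in outcome)
        dist[work] = dist.get(work, 0.0) + prob
    return dist

# Hypothetical two-level example with N = 2 quenches.
levels = [[0.0, 1.0], [0.0, 0.3], [0.0, 2.0]]
dist = work_distribution(levels)
lhs = sum(p * math.exp(w) for w, p in dist.items())
rhs = math.exp(-(free_energy(levels[-1]) - free_energy(levels[0])))
print(lhs, rhs)
```

Each quench contributes a factor Zj/Zj−1 to the exponential average, so the product telescopes to the ratio of the final and initial partition functions.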

It is a basic result of thermodynamics and statistical mechanics that the inequality (1) can be saturated by isothermal transformations in which the system is changed very slowly, in such a way that its state always remains in equilibrium with the bath1. These operations are usually called quasi-static or reversible. In the framework of discrete quantum processes, quasi-static transformations can be obtained in the limit of infinitely many steps, N → ∞, while keeping fixed the initial and final Hamiltonian of the sequence. Indeed, as shown in refs 19 and 20, by interpolating between the initial and final Hamiltonian with a sequence of infinitesimal changes (e.g. fulfilling the constraint $\|H_j - H_{j-1}\| = O(1/N)$), each followed by a complete thermalization, one can saturate the bound (1). In terms of the probability distribution (19) one can easily show19 that in the quasi-static limit we obtain a delta function centered at −ΔF = Fin − Ffin, i.e.

$$ P(W) = \delta(W + \Delta F), \tag{20} $$

which means that for every realization of the process the work extracted is the maximum allowed by the second law (1), with negligible fluctuations. This can be understood from the fact that the total work W is the sum of N independent random variables Wj and therefore we expect the fluctuations around the mean 〈W〉 to decay as $1/\sqrt{N}$. Moreover, since the Jarzynski identity (2) must hold, the only possible value for the mean of an infinitely sharp distribution is 〈W〉 = −ΔF.
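The sharpening of the work distribution can be illustrated for a two-level system whose excited energy is moved in N equal steps, each followed by a complete thermalization. This is a sketch under assumptions: the linear interpolation, the function name and the energy values are illustrative choices, not prescribed by the text.

```python
import math

def work_mean_and_variance(e_in, e_fin, n_steps, beta=1.0):
    """Mean and variance of the total extracted work for n_steps equal quenches."""
    delta = (e_fin - e_in) / n_steps
    mean = 0.0
    variance = 0.0
    for j in range(n_steps):
        e = e_in + j * delta  # excited energy before the j-th quench
        p1 = math.exp(-beta * e) / (1.0 + math.exp(-beta * e))  # population of |1>
        # The quench extracts -delta with probability p1 and 0 otherwise.
        mean += -delta * p1
        variance += delta**2 * p1 * (1.0 - p1)  # independent steps: variances add
    return mean, variance

def free_energy(e1, beta=1.0):
    return -math.log(1.0 + math.exp(-beta * e1)) / beta

# Hypothetical values: lower the excited level from 2 to 0 at beta = 1.
minus_delta_f = free_energy(2.0) - free_energy(0.0)
for n in (10, 100, 1000, 10000):
    print(n, work_mean_and_variance(2.0, 0.0, n), minus_delta_f)
```

The variance shrinks proportionally to 1/N, so the standard deviation decays as 1/√N while the mean approaches −ΔF from below.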

We can now come back to our original problem of determining the maximum probability of extracting an amount of work larger than an arbitrary value Λ, for fixed values of the initial and final Hamiltonians Hin and Hfin.

If the threshold is below the free energy decrease of the system, i.e. if Λ ≤ −ΔF, the problem is trivial. In this case a quasi-static transformation interpolating between the initial and final Hamiltonian is optimal. Indeed, as expressed in Eq. (20), the work extracted in the process is deterministically equal to −ΔF, which is not smaller than Λ. Formally, integrating (20), we have that for a quasi-static process

$$ P(W \ge \Lambda) = \begin{cases} 1 & \text{for } \Lambda \le -\Delta F, \\ 0 & \text{for } \Lambda > -\Delta F. \end{cases} \tag{21} $$

The cumulative work extraction probability (21) shows that, although quasi-static processes are optimal for Λ ≤ −ΔF, they are absolutely useless for Λ > −ΔF where the probability drops to zero. Then, if we want to explore the region Λ > −ΔF which is beyond the limit imposed by the second law, it is clear that we have to exploit statistical fluctuations typical of non-equilibrium processes.

Consider next the case of a threshold larger than the decrease of free energy, Λ > −ΔF. To identify an optimal process saturating (7) it is sufficient to focus on the simplest scenario of a two-level system with energy eigenstates |0〉 and |1〉 and such that the eigenvalue associated with |0〉 is always zero, i.e. $E_0^{(j)} = 0$ for all j. Accordingly each Hamiltonian and corresponding Gibbs state can be expressed in terms of a single real parameter $E_1^{(j)}$:

$$ H_j = E_1^{(j)}\, |1\rangle\langle 1|, \qquad \frac{e^{-\beta H_j}}{Z_j} = \frac{|0\rangle\langle 0| + e^{-\beta E_1^{(j)}}\, |1\rangle\langle 1|}{1 + e^{-\beta E_1^{(j)}}}. \tag{22} $$

In this way the generic process described in (15) is completely characterized by assigning a sequence of N + 1 parameters $\{E_1^{(0)}, E_1^{(1)}, \dots, E_1^{(N)}\}$ arbitrarily interpolating between the initial value $E_1^{(0)} = E_{\mathrm{in}}$ and the final value $E_1^{(N)} = E_{\mathrm{fin}}$.

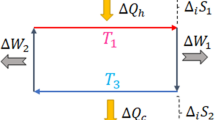

Let us then focus on the protocol composed by the following three steps and summarized in Fig. 3:

Scheme of the optimal process saturating the bound (7), valid for a two-level system with Hamiltonian H = E1|1〉〈1|.

The process is divided into three steps: (a) a quasi-static transformation where the value of E1 varies from Ein to Ea, (b) a Hamiltonian quench from Ea to Eb followed by a thermalization, (c) a final quasi-static transformation from Eb to Efin.

(a) perform a quasi-static transformation from the initial value Ein to the value Ea to be fixed later on:

$$ E_{\mathrm{in}} \to E_{\mathrm{in}} + \delta_a \to E_{\mathrm{in}} + 2\delta_a \to \cdots \to E_a, \tag{23} $$

with $\delta_a := (E_a - E_{\mathrm{in}})/N_a$ being a small increment which we shall send to zero while sending the associated number of steps $N_a$ to infinity;

(b) apply a finite DUQ from Ea to another arbitrary value Eb > Ea, also to be fixed later on, followed by a complete thermalization:

$$ E_a \to E_b; \tag{24} $$

(c) perform a quasi-static transformation from Eb to the desired final value Efin:

$$ E_b \to E_b + \delta_c \to E_b + 2\delta_c \to \cdots \to E_{\mathrm{fin}}, \tag{25} $$

where, as in step (a), $\delta_c := (E_{\mathrm{fin}} - E_b)/N_c$ is a small increment which we shall send to zero by sending the associated number of steps $N_c$ to infinity.

In the limit of infinitely many steps in both quasi-static transformations, since the initial and final configurations are fixed, the only free parameters of this protocol are Ea and Eb, and they will affect the final probability distribution of the work done by the system. In particular, according to Eq. (20), the work extracted in the two quasi-static transformations (a) and (c) is deterministically given by the corresponding free energy reductions, i.e. the quantities

$$ W_a = F(E_{\mathrm{in}}) - F(E_a), \qquad W_c = F(E_b) - F(E_{\mathrm{fin}}), \tag{26} $$

respectively, with $F(E) := -\beta^{-1} \ln(1 + e^{-\beta E})$. The work extracted in the intermediate operation (b) instead is Wb = 0 if the system is in the state |0〉 (this happens with probability $p = 1/(1 + e^{-\beta E_a})$), while it is equal to the negative quantity Wb = Ea − Eb if the system is in the state |1〉 (which happens with probability 1 − p). Accordingly the total work W = Wa + Wb + Wc is a convex combination of two delta functions:

$$ P(W) = p\, \delta(W - W_{\max}) + (1 - p)\, \delta(W - W_{\min}), \tag{27} $$

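where

$$ W_{\max} = W_a + W_c, \tag{28} $$

$$ W_{\min} = W_a + W_c + E_a - E_b. \tag{29} $$

Equation (27) is of the form required to saturate (7), see Eq. (10). Indeed from Eqs (28) and (29) it is easy to check that all values of Wmax > −ΔF and Wmin < −ΔF can be obtained by properly choosing Ea and Eb, with Eb > Ea. Moreover the function that gives Wmin and Wmax from Ea and Eb is a bijection, which can be inverted to obtain:

$$ E_a = \beta^{-1} \ln \frac{e^{-\beta \Delta F} - e^{\beta W_{\min}}}{e^{\beta W_{\max}} - e^{-\beta \Delta F}}, \tag{30} $$

$$ E_b = E_a + W_{\max} - W_{\min}, \tag{31} $$

and hence

$$ p = \frac{1}{1 + e^{-\beta E_a}} = \frac{e^{-\beta \Delta F} - e^{\beta W_{\min}}}{e^{\beta W_{\max}} - e^{\beta W_{\min}}}, \tag{32} $$

the positivity of this expression being guaranteed by the ordering

$$ W_{\min} < -\Delta F < W_{\max}, \tag{33} $$

which naturally follows from Eq. (3). Equations (10) and (11) are finally obtained from (27) and (32) by simply taking Wmax = Λ.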
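The construction can be tested numerically: given a target pair (Λ, Wmin), one solves for the quench endpoints Ea and Eb and then recomputes the two work outcomes and the success probability from the protocol itself. The sketch below is an illustration under assumptions: the function names are hypothetical, the inversion formula is the one obtained by requiring Wmax = Λ together with Eb − Ea = Wmax − Wmin, and the numerical values are arbitrary.

```python
import math

def free_energy(e1, beta=1.0):
    """Free energy of the two-level system H = e1 |1><1| (ground energy fixed to 0)."""
    return -math.log(1.0 + math.exp(-beta * e1)) / beta

def optimal_quench(threshold, w_min, e_in, e_fin, beta=1.0):
    """Quench endpoints (e_a, e_b); assumes w_min < -delta_f < threshold."""
    a = math.exp(-beta * (free_energy(e_fin, beta) - free_energy(e_in, beta)))
    e_a = math.log((a - math.exp(beta * w_min)) / (math.exp(beta * threshold) - a)) / beta
    return e_a, e_a + threshold - w_min

def protocol_outcomes(e_in, e_a, e_b, e_fin, beta=1.0):
    """Return ((w_success, p), (w_failure, 1 - p)) for the three-step protocol."""
    w_a = free_energy(e_in, beta) - free_energy(e_a, beta)   # quasi-static step (a)
    w_c = free_energy(e_b, beta) - free_energy(e_fin, beta)  # quasi-static step (c)
    p = 1.0 / (1.0 + math.exp(-beta * e_a))                  # population of |0> before the quench
    return (w_a + w_c, p), (w_a + w_c + e_a - e_b, 1.0 - p)

# Hypothetical values: E_in = 2, E_fin = 0, threshold 1, minimum work 0, beta = 1.
e_a, e_b = optimal_quench(threshold=1.0, w_min=0.0, e_in=2.0, e_fin=0.0)
(w_success, p), (w_failure, q) = protocol_outcomes(2.0, e_a, e_b, 0.0)
print(e_a, e_b, w_success, w_failure, p)
```

With these inputs the recomputed outcomes reproduce the requested threshold and minimum work, and p coincides with the right-hand side of (7).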

From the above analysis it is evident that optimal processes saturating (7) can be obtained only for transformations that are quasi-static apart from a single finite DUQ, which introduces a single probabilistic dichotomy in the final work distribution as required by Eq. (10). For a two-level system, we have just shown that they are characterized by the two parameters Ea and Eb (the values of Ein and Efin being fixed by the initial and final Hamiltonians). However their choice is also completely determined by the desired maximum and minimum work values entering (7): Λ and Wmin. Therefore we conclude that, for a two-level system, the optimal process presented here is unique up to global shifts of the energy levels (which we have fixed by imposing $E_0^{(j)} = 0$). This however is no longer the case when operating on d-level systems with d ≥ 3, for which multiple optimal protocols are allowed (see section Methods for details).

Optimal transformations by one-molecule Szilard-like heat engine

In this section we present a second example of a process which allows us to saturate the bound (7). At variance with the one introduced in the previous section, the model we analyze here is fully classical, even though slightly exotic, as it assumes the existence of an ideal gas composed of a single particle (the same trick adopted in ref. 21). As shown in Fig. 4, such a classical particle is placed in a box divided into two chambers by a wall, in which a little door can be opened, allowing the particle to move from one side to the other. The right edge of the box is connected to a piston that can extract mechanical work, and the whole system is in contact with a heat bath of temperature T.

In the initial and final configurations the door is open, the only difference being the position of the piston. The free energy difference can be computed from the relation F = U − TS and the fact that the entropy depends logarithmically on the volume1:

$$ \Delta F = F_{\mathrm{fin}} - F_{\mathrm{in}} = -k_B T \ln \frac{V_{\mathrm{fin}}}{V_{\mathrm{in}}}. \tag{34} $$

We will show that, in order to saturate the inequality (7) with the above initial and final conditions, the optimal protocol is the following (see Fig. 5):

Scheme of the optimal process saturating the bound (7) for a one particle perfect gas.

The process is divided in three steps: (a) a reversible isothermal expansion from Vin to Va in which the door is open, (b) a reversible isothermal compression between Va and Vb in which the door is closed, (c) a reversible isothermal expansion to the final volume Vfin in which the door is open.

(a) keeping the door open, perform a reversible isothermal expansion from the volume Vin to the volume Va to be fixed later on;

(b) after closing the door, do a reversible isothermal compression from the volume Va to the volume Vb, then open the door and let the system thermalize;

(c) perform a reversible isothermal expansion from the volume Vb to the volume Vfin.

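The work extracted during the isothermal expansions (a) and (c) is

$$ W_a = \beta^{-1} \ln \frac{V_a}{V_{\mathrm{in}}}, \qquad W_c = \beta^{-1} \ln \frac{V_{\mathrm{fin}}}{V_b}. \tag{35} $$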

To compute the work extracted in the compression, on the other hand, we have to distinguish two cases:

1. The particle is in the left side: the compression requires no work.

2. The particle is in the right side: the work extracted is the negative quantity

$$ W_b = \beta^{-1} \ln \frac{V_b - V_L}{V_a - V_L}, \tag{36} $$

where VL is the constant volume of the left chamber.

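Now, observing that the probability of the particle being in the left chamber is just equal to the ratio between VL and Va, i.e. p = VL/Va, the work distribution of the process can be expressed as:

$$ P(W) = p\, \delta(W - W_{\max}) + (1 - p)\, \delta(W - W_{\min}), \tag{37} $$

with

$$ W_{\max} = \beta^{-1} \ln \frac{V_a V_{\mathrm{fin}}}{V_{\mathrm{in}} V_b}, \qquad W_{\min} = W_{\max} + \beta^{-1} \ln \frac{V_b - V_L}{V_a - V_L}. \tag{38} $$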

Notice also that from Eqs (34), (35), (36) and (38) we can cast p as in Eq. (32), indeed

$$ p = \frac{V_L}{V_a} = \frac{1 - \frac{V_a (V_b - V_L)}{V_b (V_a - V_L)}}{\frac{V_a}{V_b} - \frac{V_a (V_b - V_L)}{V_b (V_a - V_L)}} = \frac{e^{-\beta \Delta F} - e^{\beta W_{\min}}}{e^{\beta W_{\max}} - e^{\beta W_{\min}}}, \tag{39} $$

where the second equality can be obtained with a little algebra and the last one follows by multiplying both the numerator and the denominator by $e^{-\beta \Delta F} = V_{\mathrm{fin}}/V_{\mathrm{in}}$. Then, as in the case of the discrete quantum process analyzed in the previous section, this protocol saturates the inequality (7) by simply setting Wmax = Λ, the values of Va and Vb being uniquely fixed by the relations:

$$ V_a = V_L\, \frac{e^{\beta W_{\max}} - e^{\beta W_{\min}}}{e^{-\beta \Delta F} - e^{\beta W_{\min}}}, \tag{40} $$

$$ V_b = V_a\, e^{-\beta (W_{\max} + \Delta F)}, \tag{41} $$

whose positivity is guaranteed once again by the ordering (33).
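The same kind of numerical check can be carried out for the one-molecule engine. The sketch below is illustrative only: the function names and the volumes are assumptions, and the intermediate volumes are obtained from p = VL/Va together with the requirement that the successful branch extracts exactly the threshold.

```python
import math

def szilard_volumes(threshold, w_min, v_in, v_fin, v_left, beta=1.0):
    """Intermediate volumes (v_a, v_b) and success probability p = v_left / v_a."""
    a = v_fin / v_in  # equals exp(-beta * delta_f) for the one-particle ideal gas
    p = (a - math.exp(beta * w_min)) / (math.exp(beta * threshold) - math.exp(beta * w_min))
    v_a = v_left / p
    v_b = v_a * a * math.exp(-beta * threshold)
    return v_a, v_b, p

def szilard_outcomes(v_in, v_a, v_b, v_fin, v_left, beta=1.0):
    """Work extracted when the particle is trapped on the left (success) or right (failure)."""
    w_success = (math.log(v_a / v_in) + math.log(v_fin / v_b)) / beta
    w_failure = w_success + math.log((v_b - v_left) / (v_a - v_left)) / beta
    return w_success, w_failure

# Hypothetical geometry: V_in = 1, V_fin = 2, V_L = 0.8, threshold 1, minimum work 0.
v_a, v_b, p = szilard_volumes(threshold=1.0, w_min=0.0, v_in=1.0, v_fin=2.0, v_left=0.8)
w_success, w_failure = szilard_outcomes(1.0, v_a, v_b, 2.0, 0.8)
print(v_a, v_b, p, w_success, w_failure)
```

For this choice the left chamber fits inside the compressed volume (VL < Vb < Va), so the protocol is geometrically admissible; other parameter choices may not be, and the sketch does not check this.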

The protocol presented here clearly shares strong similarities with the two-level model presented in the previous section. Indeed the two reversible isothermal expansions in which the particle is free to go through the door can be put in formal correspondence with the two quasi-static transformations of Fig. 3. Analogously the intermediate compression of Fig. 5 corresponds to the finite DUQ of the quantum model. Notice finally that, since in the ideal gas model the closing of the door at stage (b) is a reversible operation, it looks as if we were extracting work in a reversible way above the threshold −ΔF, a fact which is impossible1. This however is not the case, since the thermalization that follows the opening of the door after the compression makes the process globally irreversible, and the probabilistic exceeding of −ΔF is fully justified.

Work Extraction Above Threshold Under Average Work Constraint

In the previous section we derived a bound for the probability P(W ≥ Λ) when the minimum extracted work Wmin is fixed. We are now going to solve the same problem with a different constraint, fixing the average extracted work instead of the minimum, i.e. replacing Eq. (6) with the condition

$$ \langle W \rangle = \int dW\, P(W)\, W = \mu, \tag{42} $$

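with μ ≤ Λ being a fixed value. As we shall see in the following, this problem admits optimal processes which have the same dichotomic structure as the optimal solutions one gets when imposing the constraint on the minimal work production. To be more precise, Eqs (10) and (11) still provide the optimal solutions, with Wmin set so as to fulfil Eq. (42), i.e. by solving the following transcendental equation

$$ \mu = \frac{\Lambda \left(e^{-\beta \Delta F} - e^{\beta W_{\min}}\right) + W_{\min} \left(e^{\beta \Lambda} - e^{-\beta \Delta F}\right)}{e^{\beta \Lambda} - e^{\beta W_{\min}}}. \tag{43} $$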

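Notice that μ is strictly increasing in Wmin for every fixed Λ (see Fig. 6), so there exists one and only one value Wmin[Λ, μ] that solves equation (43). We can then conclude that the optimal probability in this case is given by the function

$$ \max_{\text{processes}} P(W \ge \Lambda) = \frac{e^{-\beta \Delta F} - e^{\beta W_{\min}[\Lambda, \mu]}}{e^{\beta \Lambda} - e^{\beta W_{\min}[\Lambda, \mu]}}, \tag{44} $$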

which we have plotted in Fig. 7 for fixed values of ΔF and β.
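Since the average work is monotonic in Wmin, the transcendental equation can be solved by simple bisection. The following sketch is one possible way to do this numerically, not the authors' procedure: the function names, the bracketing strategy and the parameter values (β = 1, −ΔF = 1, Λ = 2, μ = 0.5) are assumptions made for illustration.

```python
import math

def success_probability(w_min, threshold, delta_f, beta=1.0):
    """Weight of the successful outcome in the two-delta distribution."""
    a = math.exp(-beta * delta_f)
    return (a - math.exp(beta * w_min)) / (math.exp(beta * threshold) - math.exp(beta * w_min))

def mean_work(w_min, threshold, delta_f, beta=1.0):
    """Average work of the two-delta distribution supported on w_min and threshold."""
    p = success_probability(w_min, threshold, delta_f, beta)
    return p * threshold + (1.0 - p) * w_min

def solve_w_min(mu, threshold, delta_f, beta=1.0, iterations=200):
    """Bisection for the w_min giving average work mu; assumes mu <= -delta_f < threshold."""
    hi = -delta_f  # at this point p = 0 and the mean equals -delta_f >= mu
    step = 1.0
    while mean_work(hi - step, threshold, delta_f, beta) > mu:
        step *= 2.0  # widen the bracket until the mean drops below mu
    lo = hi - step
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if mean_work(mid, threshold, delta_f, beta) < mu:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

w_min = solve_w_min(mu=0.5, threshold=2.0, delta_f=-1.0)
p_opt = success_probability(w_min, 2.0, -1.0)
print(w_min, p_opt)
```

The resulting success probability lies below the unconstrained bound (8), as expected, because fixing the average work limits how negative the failure outcome can be.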

Figure 7: Contour plot of the optimal probability of Eq. (44) for a fixed value of β and −ΔF = 1, as a function of μ and Λ.

By definition μ cannot exceed −ΔF, and the problem for Λ ≤ −ΔF becomes trivial, so we excluded those regions from the plot.

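To prove the above results we adopt the Lagrange multiplier technique to study the stationary points of Eq. (4) under the constraint (42). Also, to avoid technicalities, we find it convenient to discretize the probability distribution, a trick which allows us to impose the positivity of P(W) by parametrizing it as $q^2(W)$ with q(W) being an arbitrary function. Accordingly the Lagrangian of the problem can be written in this way:

$$ \mathcal{L} = \sum_W q^2(W)\, \Theta(W - \Lambda) - \lambda_J \Big[\sum_W q^2(W)\, e^{\beta W} - e^{-\beta \Delta F}\Big] - \lambda_\mu \Big[\sum_W q^2(W)\, W - \mu\Big] - \lambda_1 \Big[\sum_W q^2(W) - 1\Big], \tag{45} $$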

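where λJ, λμ and λ1 are the Lagrange multipliers that enforce, respectively, the Jarzynski identity (2), the average constraint (42) and the normalization. Differentiating with respect to q(W) we hence obtain the following Lagrange equation:

$$ q(W) \left[\Theta(W - \Lambda) - \lambda_J e^{\beta W} - \lambda_\mu W - \lambda_1\right] = 0, \tag{46} $$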

with Θ(W) being the Heaviside step function. The above identity must hold for all W; accordingly, the supports of q(W) and of the function in the square brackets must be complementary. The latter vanishes in at most three points (say W1, W2 and W3), only one of which (say W3) lies above the threshold Λ. This property is easy to verify, since the zeroes are the crossing points between an exponential function and a piecewise linear function. Thus we can suppose that q(W), and hence the corresponding probability distribution P (W) = q²(W), is nonzero only on these selected points. Denoting by p1, p2 and p3 the values assumed by P (W) at W1, W2 and W3, we can then express the problem constraints as follows

Among all possible solutions of these last equations we finally have to select those which provide the maximum value of the probability of extracting work above the threshold Λ. Remembering that only W3 can be larger than or equal to Λ, this corresponds to selecting the solution with the largest value of p3. To solve this last problem we resort once more to the Lagrange multiplier technique, now under the Karush-Kuhn-Tucker (KKT) conditions25, to enforce the non-negativity of W3 − Λ. Accordingly the new Lagrangian is now

with

The KKT conditions are necessary (even if not sufficient) for a point to be a constrained maximum and they allow two kinds of solutions:

1. The maximum lies in the interior of the region described by the inequality constraint. Then W3 ≠ Λ and from the conditions (51) we obtain η = 0. In this case the Lagrange equations relative to Wi (i = 1, 2, 3) are:

then either W1 = W2 = W3 > Λ or, if one or more of the pi are equal to zero, the Wk for k ≠ i are all equal. These solutions have to be rejected, because they fail to satisfy the constraint (48).

2. The maximum lies on the boundary of the inequality constraint, so that W3 = Λ. From the conditions (51), η can be different from 0; then, although the Lagrange equations for W1 and W2 are still given by Eq. (52), the one for W3 is not. Thus either W1 = W2 ≤ Λ = W3 or one of p1 and p2 vanishes. In both cases the support of the work distribution reduces to two points.

We conclude that in order to maximize P (W ≥ Λ) when the average extracted work μ is fixed, the distribution must be nonzero at only two values, Λ and a smaller one that we call Wmin. That is, as anticipated at the beginning of the section, it must have the form (10), yielding Eq. (44) as the optimal probability of work extraction above threshold.
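The conclusion above can be probed numerically with a brute-force search. The sketch below restricts the work values to a finite grid, enumerates every three-point support (the largest support allowed by the argument in the text), solves the three linear constraints (normalization, Jarzynski identity, average work) for the weights, and keeps the feasible distribution with the largest weight at or above Λ. The grid, the parameter values and the helper names are illustrative assumptions, and W is again taken to be extracted work with ⟨exp(βW)⟩ = exp(−βΔF).

```python
import itertools
import math

beta, delta_f = 1.0, -1.0   # illustrative values, with -delta_f = 1
lam, mu = 2.0, 0.0          # threshold and fixed average work
jarzynski_rhs = math.exp(-beta * delta_f)


def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def weights(support):
    """Solve the three constraints for the weights on a three-point support."""
    rows = [
        [1.0 for _ in support],                  # normalization
        [math.exp(beta * w) for w in support],   # Jarzynski identity
        [w for w in support],                    # average work
    ]
    rhs = [1.0, jarzynski_rhs, mu]
    d = det3(rows)
    solution = []
    for k in range(3):  # Cramer's rule: replace column k by the right-hand side
        m = [row[:] for row in rows]
        for r in range(3):
            m[r][k] = rhs[r]
        solution.append(det3(m) / d)
    return solution


grid = [-6.0 + 0.25 * k for k in range(41)]   # work values from -6 to 4
best_p, best_support = 0.0, None
for support in itertools.combinations(grid, 3):
    p = weights(support)
    if min(p) < -1e-12:
        continue  # negative weight: not a probability distribution
    success = sum(pk for pk, w in zip(p, support) if w >= lam)
    if success > best_p:
        best_p = success
        best_support = [(w, pk) for w, pk in zip(support, p) if pk > 1e-9]

print("best success probability on the grid:", round(best_p, 4))
print("support (W, P):", best_support)
```

On a coarse grid the value of Wmin selected by the average-work constraint is generally not a grid point, so the search may split the low-work weight between neighbouring grid values. As the grid is refined, one would expect the result to approach the two-point form described in the text.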

Discussion

In this paper we studied single-shot thermodynamic processes, focusing on the specific task of probabilistically extracting more work than what is allowed by the second law of thermodynamics. We found that for all processes obeying the Jarzynski identity there exists an upper bound (7) to the work extraction probability, which depends on how large the desired violation is, on the minimum work that we are willing to extract in case of failure, and on the free energy difference between the final and initial states. Moreover, within the formalism of discrete quantum processes, we have shown that the bound can be saturated and we determined the corresponding optimal protocols. Analogous results have also been obtained when replacing the constraint on the minimal work with a constraint on the average work extracted during the process.

With our analysis we hope to contribute to the still largely unexplored regime3,4,12,17,18 in which statistical fluctuations are regarded not as a problem but as an advantage of microscopic thermodynamics, in the sense that they can be artificially enhanced in order to accomplish tasks which are otherwise impossible in the thermodynamic limit. With respect to standard thermodynamics, in this regime we should adopt a completely different paradigm for judging what is a “good” process. Indeed quasi-static processes are usually considered optimal since they are reversible, they do not produce excess entropy, they allow one to reach the Carnot efficiency, etc. On the other hand, as we have shown in this work, in specific regimes in which fluctuations are “useful” the perspective is reversed and non-equilibrium processes become operationally optimal.

Our findings could be experimentally demonstrated in any classical or quantum thermodynamics experiment involving large work fluctuations. Currently promising experimental scenarios include organic molecules26,27, NMR systems28,29, electronic circuits30,31, trapped ions32 and colloidal particles33. In all these contexts, the effort has so far mainly focused on the verification of quantum thermodynamic principles and fluctuation theorems. We believe that similar experimental settings can be easily optimized in order to maximize the probability of work extraction, approximately realizing the ideal processes proposed in this work.

Methods

Jarzynski identity for the discrete process

Here we show that the discrete process described by the sequence (15) satisfies the Jarzynski identity (2). Ultimately this is a direct consequence of the fact that each single DUT + DTT process fulfills such relation13. Indeed from Eq. (19) we can write

where in the last identities we used Eq. (17) and the fact that the partition function Zj of the Gibbs state is connected to its Helmholtz free energy Fj via the identity

Zj = exp(−βFj).
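The telescoping argument can be checked numerically on a small example. The sketch below is a simplified illustration, not the paper's general construction: it assumes that every unitary step is a quench that only shifts the energy levels, so that a system found in level i yields the extracted work E_i(before) − E_i(after), and that each quench is followed by a complete thermalization. The spectra and function names are hypothetical.

```python
import itertools
import math


def gibbs(energies, beta):
    """Gibbs populations and partition function for a list of energy levels."""
    boltzmann = [math.exp(-beta * e) for e in energies]
    z = sum(boltzmann)
    return [b / z for b in boltzmann], z


def work_distribution(hamiltonians, beta):
    """Enumerate all trajectories of a quench + thermalization sequence.

    hamiltonians[j] lists the energy levels after step j (index 0 = initial).
    Assumption: each quench only shifts the levels, so a system found in
    level i yields extracted work E_i(before) - E_i(after).
    Returns a list of (total extracted work, probability) pairs.
    """
    steps = []
    for before, after in zip(hamiltonians, hamiltonians[1:]):
        populations, _ = gibbs(before, beta)
        steps.append(
            [(eb - ea, p) for eb, ea, p in zip(before, after, populations)]
        )
    outcomes = []
    for path in itertools.product(*steps):
        work = sum(w for w, _ in path)
        prob = math.prod(p for _, p in path)
        outcomes.append((work, prob))
    return outcomes


beta = 1.0
hamiltonians = [[0.0, 1.0], [0.0, 0.4], [0.2, 1.5], [0.0, 2.0]]  # illustrative
outcomes = work_distribution(hamiltonians, beta)

lhs = sum(p * math.exp(beta * w) for w, p in outcomes)   # <exp(beta * W)>
_, z_in = gibbs(hamiltonians[0], beta)
_, z_fin = gibbs(hamiltonians[-1], beta)
rhs = z_fin / z_in   # equals exp(-beta * delta_F), since Z = exp(-beta * F)
print(lhs, rhs)
```

Because the thermalization after each quench erases the memory of the previous level, the average of exp(βW) factorizes into a product of ratios of consecutive partition functions, which is why only the initial and final partition functions survive.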

Optimal processes for discrete transformation with d > 2 level systems

In the main text we have shown that a two-level system is already enough to achieve the maximum work extraction probability dictated by the bound (7). However, for experimental reasons, one may be forced to work with a system characterized by d > 2 energy levels, and we may be interested in determining the optimal processes in this context. In the main text we noticed that the presence of only one quench is a necessary condition to saturate the bound (7). That reasoning holds for systems of arbitrary dimension, since Eq. (10) is independent of the dimension. Thus, when looking for an optimal process involving a d-dimensional system, we have to slightly modify the procedure described in the main text, although the structure remains the same. Explicitly:

1. Perform a quasi-static transformation that brings all the initial energy eigenvalues of the system to their final values, except one of them (say the m-th one), which is instead brought to the value Ea, to be fixed later on, i.e.

2. Apply a finite DUQ which moves the m-th level from Ea to a value Eb > Ea followed by a complete thermalization of the system.

3. Perform a quasi-static transformation that brings Eb to its final value; in this way the system reaches the final configuration.

As in the two-level case discussed in the main text, the distribution of work is given by the sum of two delta-function terms, Eq. (27), the only difference being the value of the probability p0, which is now given by

while the difference of the free energies associated with the intermediate steps of the protocol which enters Eq. (28) is now expressed as

We can then calculate Ea and Eb from Eqs (28), (29) and (56) obtaining:

Then, computing the success probability with Eq. (55), we find that p has exactly the same form as in the d = 2 case, i.e. Eq. (32). As a final remark we notice that the protocol saturating the bound is not unique for d ≥ 3, because in this case the system has multiple degrees of freedom. For example, there are different equivalent choices of the energy level Em; moreover, other optimal protocols involving multi-level quenches could exist.
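The three-step protocol above can be sketched numerically for a given choice of Ea and Eb. The code below does not reproduce the paper's closed-form expressions for Ea and Eb. It only assumes that a quasi-static isothermal step extracts exactly the free energy decrease, that the quench costs Eb − Ea when the m-th level is occupied and nothing otherwise, and that W denotes extracted work. The spectra, the values of Ea and Eb, and the names used (for instance `p_occupied`) are hypothetical.

```python
import math


def free_energy(energies, beta):
    """Helmholtz free energy F = -(1/beta) * ln Z of a Gibbs state."""
    return -math.log(sum(math.exp(-beta * e) for e in energies)) / beta


def protocol_work_distribution(e_in, e_fin, m, e_a, e_b, beta):
    """Two-point work distribution of the three-step protocol.

    Assumptions (not taken from the paper's equations): a quasi-static
    isothermal step extracts exactly the free energy decrease, and the quench
    costs e_b - e_a only when the system occupies level m.
    """
    levels_a = list(e_fin)
    levels_a[m] = e_a            # after step 1: all levels final except m
    levels_b = list(e_fin)
    levels_b[m] = e_b            # after the quench of step 2
    f_in, f_a = free_energy(e_in, beta), free_energy(levels_a, beta)
    f_b, f_fin = free_energy(levels_b, beta), free_energy(e_fin, beta)
    quasi_static = (f_in - f_a) + (f_b - f_fin)   # deterministic part
    z_a = sum(math.exp(-beta * e) for e in levels_a)
    p_occupied = math.exp(-beta * e_a) / z_a      # level m populated at quench
    return [
        (quasi_static, 1.0 - p_occupied),            # quench costs nothing
        (quasi_static - (e_b - e_a), p_occupied),    # quench costs e_b - e_a
    ]


beta = 1.0
e_in = [0.0, 0.5, 1.0]    # illustrative three-level spectrum
e_fin = [0.0, 0.2, 0.3]
outcomes = protocol_work_distribution(e_in, e_fin, m=2, e_a=0.1, e_b=3.0, beta=beta)

delta_f = free_energy(e_fin, beta) - free_energy(e_in, beta)
jarzynski = sum(p * math.exp(beta * w) for w, p in outcomes)
print(outcomes)
print(jarzynski, math.exp(-beta * delta_f))
```

In this sketch the larger of the two work values always exceeds −ΔF, because raising a level increases the free energy, while the smaller one falls below it. The two printed numbers on the last line should coincide, reflecting the Jarzynski identity for the whole protocol.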

Additional Information

How to cite this article: Cavina, V. et al. Optimal processes for probabilistic work extraction beyond the second law. Sci. Rep. 6, 29282; doi: 10.1038/srep29282 (2016).

References

Huang, K. Statistical Mechanics, 2nd ed. (Wiley, 1987).

Bochkov, G. N. & Kuzovlev, Y. E. General theory of thermal fluctuations in nonlinear systems. Zh. Eksp. Teor. Fiz 72, 238–243 (1977).

Evans, D. J., Cohen, E. G. D. & Morriss, G. P. Probability of second law violations in shearing steady states. Physical Review Letters 71, 2401 (1993).

Wang, G. M., Sevick, E. M., Mittag, E., Searles, D. J. & Evans, D. J. Experimental demonstration of violations of the second law of thermodynamics for small systems and short time scales. Physical Review Letters 89, 050601 (2002).

Esposito, M., Harbola, U. & Mukamel, S. Nonequilibrium fluctuations, fluctuation theorems and counting statistics in quantum systems. Reviews of Modern Physics 81, 1665 (2009).

Horodecki, M. & Oppenheim, J. Fundamental limitations for quantum and nanoscale thermodynamics. Nature communications 4 (2013).

Vinjanampathy, S. & Anders, J. Quantum thermodynamics. arXiv preprint arXiv:1508.06099 (2015).

Goold, J., Huber, M., Riera, A., del Rio, L. & Skrzypczyk, P. The role of quantum information in thermodynamics—a topical review. arXiv preprint arXiv:1505.07835 (2015).

Skrzypczyk, P., Short, A. J. & Popescu, S. Work extraction and thermodynamics for individual quantum systems. Nature communications 5 (2014).

Crooks, G. E. Path-ensemble averages in systems driven far from equilibrium. Physical review E 61, 2361 (2000).

Jarzynski, C. Nonequilibrium equality for free energy differences. Physical Review Letters 78, 2690 (1997).

Jarzynski, C. Equalities and inequalities: irreversibility and the second law of thermodynamics at the nanoscale. Annu. Rev. Condens. Matter Phys. 2, 329–351 (2011).

Tasaki, H. Jarzynski relations for quantum systems and some applications. arXiv preprint cond-mat/0009244 (2000).

Esposito, M. & Mukamel, S. Fluctuation theorems for quantum master equations. Physical Review E 73, 046129 (2006).

Campisi, M., Hänggi, P. & Talkner, P. Colloquium: Quantum fluctuation relations: Foundations and applications. Reviews of Modern Physics 83, 771 (2011).

Åberg, J. Fully quantum fluctuation theorems. arXiv preprint arXiv:1601.01302 (2016).

Alhambra, Á. M., Oppenheim, J. & Perry, C. What is the probability of a thermodynamical transition? arXiv preprint arXiv:1504.00020 (2015).

Yunger Halpern, N., Garner, A. J., Dahlsten, O. C. & Vedral, V. Unification of fluctuation theorems and one-shot statistical mechanics. New Journal of Physics 17, 095003 (2015).

Åberg, J. Truly work-like work extraction via a single-shot analysis. Nature communications 4 (2013).

Anders, J. & Giovannetti, V. Thermodynamics of discrete quantum processes. New Journal of Physics 15, 033022 (2013).

Szilard, L. Über die Entropieverminderung in einem thermodynamischen System bei Eingriffen intelligenter Wesen. Zeitschrift für Physik 53, 840–856 (1929).

Gallego, R., Eisert, J. & Wilming, H. Defining work from operational principles. arXiv preprint arXiv:1504.05056 (2015).

Roncaglia, A. J., Cerisola, F. & Paz, J. P. Work measurement as a generalized quantum measurement. Physical review letters 113, 250601 (2014).

Talkner, P., Lutz, E. & Hänggi, P. Fluctuation theorems: Work is not an observable. Physical Review E 75, 050102 (2007).

Boyd, S. & Vandenberghe, L. Convex optimization (Cambridge university press, 2004).

Collin, D. et al. Verification of the Crooks fluctuation theorem and recovery of RNA folding free energies. Nature 437, 231–234 (2005).

Liphardt, J., Dumont, S., Smith, S. B., Tinoco, I. & Bustamante, C. Equilibrium information from nonequilibrium measurements in an experimental test of Jarzynski’s equality. Science 296, 1832–1835 (2002).

Toyabe, S., Sagawa, T., Ueda, M., Muneyuki, E. & Sano, M. Experimental demonstration of information-to-energy conversion and validation of the generalized Jarzynski equality. Nature Physics 6, 988–992 (2010).

Batalhão, T. B. et al. Experimental reconstruction of work distribution and study of fluctuation relations in a closed quantum system. Physical review letters 113, 140601 (2014).

Saira, O. et al. Test of the Jarzynski and Crooks fluctuation relations in an electronic system. Physical review letters 109, 180601 (2012).

Pekola, J. P. Towards quantum thermodynamics in electronic circuits. Nature Physics 11, 118–123 (2015).

An, S. et al. Experimental test of the quantum Jarzynski equality with a trapped-ion system. Nature Physics 11, 193–199 (2015).

Bérut, A. et al. Experimental verification of Landauer’s principle linking information and thermodynamics. Nature 483, 187–189 (2012).

Acknowledgements

The authors are grateful to J. Anders and M. Campisi for useful discussions. This work is partially supported by the EU Collaborative Project TherMiQ (grant agreement 618074) and by the ERC Advanced Grant no. 321122 SouLMan.

Author information

Contributions

V.C., A.M. and V.G. equally contributed to the derivation and writing of this work.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Cavina, V., Mari, A. & Giovannetti, V. Optimal processes for probabilistic work extraction beyond the second law. Sci Rep 6, 29282 (2016). https://doi.org/10.1038/srep29282


DOI: https://doi.org/10.1038/srep29282
