Abstract

In this work we aim to highlight a close analogy between cooperative behaviors in chemical kinetics and cybernetics; this is realized by using a common language for their description, namely mean-field statistical mechanics. First, we perform a one-to-one mapping between paradigmatic behaviors in chemical kinetics (i.e., non-cooperative, cooperative, ultra-sensitive, anti-cooperative) and in mean-field statistical mechanics (i.e., paramagnetic, high and low temperature ferromagnetic, anti-ferromagnetic). Interestingly, the statistical mechanics approach allows a unified, broad theory for all scenarios and, in particular, the Michaelis-Menten, Hill and Adair equations are consistently recovered. This framework is then tested against experimental biological data with an overall excellent agreement. One step forward, we consistently read the whole mapping from a cybernetic perspective, highlighting deep structural analogies between the above-mentioned kinetics and fundamental building blocks in electronics (i.e. operational amplifiers, flashes, flip-flops), so as to build a clear bridge linking biochemical kinetics and cybernetics.


Introduction

Cooperativity is one of the most important properties of molecular interactions in biological systems and it is often invoked to account for collective features in binding phenomena.

In order to investigate and predict the effects of cooperativity, chemical kinetics proved to be a fundamental tool and, also due to its broadness over several fields of biosciences, a number of cooperativity quantifiers (e.g. Hill coefficient1, Koshland cooperativity test2, global dissociation quotient3, weak and strong fine tunings4, etc.), apparently independent or distinct, have been introduced. However, a clear, unified, theoretical scheme where all cooperative behaviors can be framed would be of great importance, especially in biotechnology research5,6 and for scientists dealing with interdisciplinary applications7,8. To this aim, statistical mechanics offers a valuable approach as, from its basic principles, it aims to figure out collective phenomena, possibly overlooking the details of the interactions to focus on the key features. Indeed, the way to a statistical mechanics description of reaction kinetics has already been paved through theoretical models based on linear Ising chains9, spin lattices with nearest-neighbor interactions10, transfer matrix theory9,10 and structural probabilistic approaches11.

In this work we expand such statistical mechanics picture toward a mean-field perspective12 by assuming that the interactions among the system constituents are not limited by any topological or spatial constraint, but are implicitly taken to be long-ranged, as in a system that remains spatially homogeneous. This approach is naturally consistent with the rate-equation picture, typical of chemical kinetics investigations and whose validity is restricted to the case of vanishing correlations13,14 and requires a sufficiently high spatial dimension or the presence of an effective mixing mechanism (hence, ultimately, long-range interactions). In general, in the mean-field limit, fluctuations naturally decouple from the volume-averaged quantities and can be treated as negligible noise.

By adopting a mean-field approach, we abandon a direct spatial representation of binding structures and we introduce a renormalization of the effective couplings. The reward lies in a resulting unique model exhibiting a rich phenomenology (e.g. phase transitions), which low-dimensional models typically lack, while still admitting an exact solution. In particular, we obtain an analytical expression for the saturation function which is successfully compared with recent experimental findings, taken from different (biological) contexts to check robustness. Furthermore, from this theory basic chemical kinetics equations (e.g. Michaelis-Menten, Hill and Adair equations) are recovered as special cases.

Further, there is a deep theoretical motivation underlying the development of a mean-field statistical mechanics approach to chemical kinetics: it can be used to code collective behavior of biosystems into a cybernetic framework. In fact, cybernetics, meant as the science dedicated to the understanding of self-organization and emergent communication among the constituents of a system, can be naturally described via (mean-field) statistical mechanics15,16,17. Thus, the latter provides a shared formalism which allows one to automatically translate chemical kinetics into cybernetics and vice versa. In this perspective, beyond theoretical interest, at least two concrete benefits may stem from our investigation. First, in the field of biotechnologies, logical gates have already been obtained through biological hardware (see e.g. Refs. 5,6) and for their proper functioning signal amplification turns out to be crucial. In this paper, cooperativity in reaction kinetics is mapped into amplification in electronics, hence offering a solid scaffold for biological amplification theory.

Second, as statistical mechanics has been successfully applied in the world of computing (for instance in neural networks18, machine learning19 or complex satisfiability20), its presence in the theory of biological processors could be of relevant interest. In particular, we discuss how to map ultra-sensitive kinetics to logical switches and how to read anti-cooperative kinetics as the basic ingredient for memory storage in biological flip-flops, whose interest resides in several biological machineries such as gene regulatory networks21, riboswitches22, synaptic switches23, autopoietic systems24 and more25,26,27.

To summarize, a rigorous, promising link between cybernetics and collective biological systems can be established via statistical mechanics and this point will be sketched and corroborated by means of several examples throughout this paper, which is structured as follows:

First, we review the main concepts, facts and methods from both chemical kinetics and statistical mechanics perspectives. Then, we develop a proper theoretical framework able to bridge statistical mechanics and chemical kinetics; since the former can also serve as a proper tool for describing and investigating cybernetics, chemical kinetics and cybernetics become, as a syllogism, related as well. The agreement of our framework with real data, carefully extracted from recent biological research and covering the various standard behaviors in chemical kinetics, is also successfully checked. Finally, results and outlooks are discussed.

Results

Collective behaviors in chemical kinetics

Many polymers and proteins exhibit cooperativity, meaning that their ligands bind in a non-independent way: if, upon a ligand binding, the probability of further binding (by other ligands) is enhanced, like in the paradigmatic case of hemoglobin9, the cooperativity is said to be positive; vice versa, there is negative cooperativity when the binding of more ligands is inhibited28, as for instance in some insulin receptors29,30 and most G-protein coupled receptors23,31. Several mechanisms can be responsible for this effect: for example, if two neighboring docking sites on a polymer can bind charged ions, the electrostatic attraction/repulsion may be the cause of a positive/negative cooperativity. However, the most common case is that the binding of a ligand somehow modifies the structure of the hosting molecule, influencing the binding over the other sites and this is the so-called allosteric mechanism32.

Let us now formalize such behavior by considering a hosting molecule P that can bind N identical molecules S on its structure; calling $P_j$ the complex of a molecule P with $j \in [0, N]$ molecules attached, the reactions leading to the chemical equilibrium are the following

$$S + P_{j-1} \rightleftharpoons P_j, \qquad j = 1, \dots, N,$$

hence the time evolution of the concentration of the unbound protein $P_0$ is ruled by

$$\frac{d[P_0]}{dt} = -K_+^{(1)} [P_0][S] + K_-^{(1)} [P_1],$$

where $K_+^{(1)}$, $K_-^{(1)}$ are, respectively, the forward and backward rate constants for the state j = 1 and their ratios define the association constant $K^{(1)} = K_+^{(1)}/K_-^{(1)}$ and the dissociation constant $1/K^{(1)} = K_-^{(1)}/K_+^{(1)}$. Focusing on the steady state we get, iteratively,

$$K^{(j)} = \frac{[P_j]}{[P_{j-1}][S]}, \qquad j = 1, \dots, N.$$

Unfortunately, measuring $[P_j]$ is not an easy task and one usually introduces, as a convenient experimental observable, the average number $\bar{S}$ of substrates bound to the protein as

$$\bar{S} = \frac{[P_1] + 2[P_2] + \dots + N[P_N]}{[P_0] + [P_1] + \dots + [P_N]} = \frac{K^{(1)}[S] + 2K^{(1)}K^{(2)}[S]^2 + \dots + N K^{(1)} \cdots K^{(N)}[S]^N}{1 + K^{(1)}[S] + K^{(1)}K^{(2)}[S]^2 + \dots + K^{(1)} \cdots K^{(N)}[S]^N}, \tag{2}$$

which is the well-known Adair equation3, whose normalized expression defines the saturation function $Y = \bar{S}/N$. In a non-cooperative system, one expects independent and identical binding sites, whose steady states can be written as (explicitly only for j = 1 and j = 2 for simplicity)

$$N K_+ [P_0][S] = K_- [P_1], \qquad (N-1) K_+ [P_1][S] = 2 K_- [P_2],$$

where $K_+$ and $K_-$ are the rates for binding and unbinding on any arbitrary site. Being $K \equiv K_+/K_-$ the intrinsic association constant, we get

$$K^{(1)} = \frac{[P_1]}{[P_0][S]} = N K, \qquad K^{(2)} = \frac{[P_2]}{[P_1][S]} = \frac{N-1}{2} K,$$

and, in general, $K^{(j)} = (N - j + 1)K/j$. Plugging this expression into the Adair equation (2) we get

$$Y = \frac{\bar{S}}{N} = \frac{K[S]}{1 + K[S]}, \tag{7}$$

which is the well-known Michaelis-Menten equation3.
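The reduction of the Adair form to the Michaelis-Menten law can be checked numerically. The following is a minimal sketch, assuming the Adair saturation is written in terms of stepwise association constants $K^{(j)}$ and that the non-cooperative constants are $K^{(j)} = (N - j + 1)K/j$; the function names `adair_saturation` and `noncooperative_constants` are illustrative and do not come from the original text.

```python
def adair_saturation(s, assoc):
    """Saturation Y = S_bar / N from the Adair form.

    s     : free substrate concentration [S]
    assoc : stepwise association constants [K1, K2, ..., KN]
    """
    n = len(assoc)
    weight = 1.0  # [P_j]/[P_0], starting from j = 0
    num = 0.0     # sum over j of j * [P_j]/[P_0]
    den = 1.0     # sum over j of [P_j]/[P_0]
    for j, k in enumerate(assoc, start=1):
        weight *= k * s
        num += j * weight
        den += weight
    return num / (n * den)


def noncooperative_constants(n, k):
    """Stepwise constants for n independent, identical sites."""
    return [(n - j + 1) * k / j for j in range(1, n + 1)]


# Illustrative values (not experimental data)
N, K, S = 4, 2.5, 0.3
y_adair = adair_saturation(S, noncooperative_constants(N, K))
y_mm = K * S / (1.0 + K * S)
print(y_adair, y_mm)
```

Under these assumptions the two printed values coincide up to rounding, whatever the number of sites N.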

If interaction among binding sites is expected, the kinetics becomes far less trivial. Let us first sketch the limit case where intermediate steps can be neglected, that is

$$P_0 + N S \rightleftharpoons P_N,$$

then

$$Y = \frac{K[S]^N}{1 + K[S]^N}.$$

More generally, one can allow for a degree of sequentiality and write

$$Y = \frac{K[S]^{n_H}}{1 + K[S]^{n_H}},$$

which is the well-known Hill equation3, where $n_H$, referred to as the Hill coefficient, represents the effective number of substrates which are interacting, such that for $n_H = 1$ the system is said to be non-cooperative and the Michaelis-Menten law is recovered; for $n_H > 1$ it is cooperative; for $n_H \gg 1$ it is ultra-sensitive; for $n_H < 1$ it is anti-cooperative.

From a practical point of view, given experimental data for Y([S]), one measures nH as the slope of log(Y/(1 − Y)) versus log [S].
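As an illustration of this measurement, the sketch below estimates the Hill coefficient as the least-squares slope of log(Y/(1 − Y)) against log [S]. It assumes the Hill form Y = K[S]^nH/(1 + K[S]^nH) and uses synthetic, noise-free points; the helper names `hill` and `hill_coefficient` are hypothetical.

```python
import math


def hill(s, k, n_h):
    """Hill saturation Y = K s^n_H / (1 + K s^n_H)."""
    x = k * s ** n_h
    return x / (1.0 + x)


def hill_coefficient(concentrations, saturations):
    """Least-squares slope of log(Y / (1 - Y)) versus log(s)."""
    xs = [math.log(s) for s in concentrations]
    ys = [math.log(y / (1.0 - y)) for y in saturations]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


# Synthetic points generated from the Hill equation itself (not real data)
conc = [0.1, 0.3, 1.0, 3.0, 10.0]
sat = [hill(s, 2.0, 2.5) for s in conc]
print(hill_coefficient(conc, sat))
```

With real data the Hill plot is only approximately linear, so in practice the slope is usually read off near Y = 1/2.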

Mean-field statistical mechanics

One of the best known statistical mechanics models is the mean-field Ising model, namely the Curie-Weiss model33. It describes the macroscopic behavior of a magnetic system microscopically represented by N binary spins, labeled by i = 1, 2, …, N and whose state is denoted by σi = ±1. In the presence of an external field h and being J the N × N symmetric matrix encoding the pairwise interactions among spins, the (extensive, macroscopic) internal energy associated to the configuration {σ} = {σ1, σ2, …, σN} is defined as

$$E(\{\sigma\}; J, h) = -\frac{1}{N} \sum_{i<j} J_{ij} \sigma_i \sigma_j - \sum_{i=1}^{N} h_i \sigma_i. \tag{11}$$

It is easy to see that the spin configurations leading to a lower energy are those where spins are aligned with the pertaining field, i.e. σihi > 0, and pairs (i, j) associated to positive (negative) coupling Jij are parallel (anti-parallel), i.e. σiσj = 1 (σiσj = −1). Notice that, in eq. 11, we implicitly assumed that any arbitrary spin possibly interacts with any other. This is a signature of the mean-field approach which, basically, means that interactions among spins are long-range and/or that the time-scale of reactions is longer than the typical time for particles to diffuse, in such a way that each spin/particle actually sees any other. We stress that the mean-field approximation also implies that the probability distribution P({σ}) for the whole configuration is factorized into the product of the distributions for each single constituent, namely $P(\{\sigma\}) = \prod_{i=1}^{N} P_i(\sigma_i)$, analogously to classical chemical kinetic prescriptions10.

For the analytic treatment of the system it is convenient to adopt a mesoscopic description where the phase space, made of all the $2^N$ possible distinct spin configurations, undergoes a coarse-graining and is divided into a collection of sets, each representing a mesoscopic state of a given energy $E_k$ (we dropped the dependence on the parameters J, h to lighten the notation). In this way, all the microscopic states belonging to the k-th set share the same value of energy $E_k$, calculated according to (11). In order to describe the macroscopic behavior of the system through its microscopic degrees of freedom, we introduce a statistical ensemble $\rho = \{\rho_k\}$, meant as the probability distribution over these sets; consequently, $\rho_k \geq 0$ and $\sum_k \rho_k = 1$ must be fulfilled. Accordingly, the internal energy and the entropy read as

$$E(\rho) = \sum_k \rho_k E_k, \qquad S(\rho) = -K_B \sum_k \rho_k \log \rho_k,$$

where $K_B$ is the Boltzmann constant, hereafter set equal to 1. Being β > 0 the inverse absolute temperature of the system, we define the free-energy

$$F(\rho) = E(\rho) - \beta^{-1} S(\rho). \tag{13}$$

Notice that minimizing F amounts to seeking, at the same time, a low E and a high S, hence it provides a definition for the thermodynamic equilibrium. As a consequence, from eq. 13 we calculate the derivative with respect to the probability distribution and require $\partial F/\partial \rho_k = 0$ under the normalization constraint; the solution, referred to as $\bar{\rho}_k$, reads as

$$\bar{\rho}_k = \frac{e^{-\beta E_k}}{Z},$$

and it is called the Maxwell-Boltzmann distribution. The normalization condition implies $Z = \sum_k e^{-\beta E_k}$ and this quantity is called “partition function”. We therefore have

$$F(\bar{\rho}) = -\frac{1}{\beta} \log Z.$$

In general, given a function f({σ}), its thermal average is $\langle f \rangle = Z^{-1} \sum_{\{\sigma\}} f(\{\sigma\})\, e^{-\beta E(\{\sigma\})}$.

As this system is expected to display two different behaviors, an ordered one (at low temperature) and a disordered one (at high temperature), we introduce the magnetization $m = \frac{1}{N} \sum_{i=1}^{N} \sigma_i$, which provides a primary description for the macroscopic behavior of the system. In particular, it works as the “order parameter” and it characterizes the onset of order at the phase transition between the two possible regimes. More precisely, as the parameters β, J, h are tuned (here for simplicity $J_{ij} > 0$, $\forall\, i, j$), the system can be either disordered (i.e. paramagnetic), where spins are randomly oriented and 〈m〉 = 0, or ordered (i.e. ferromagnetic), where spins are consistently aligned and 〈m〉 ≠ 0. The phase transition, separating regions where one state prevails against the other, is a consequence of the collective microscopic interactions.

In a uniform system where $J_{ij} = J$, $\forall\, i \neq j$, $J_{ii} = 0$ and $h_i = h$, all spins display the same expected value, i.e. 〈σi〉 = 〈σ〉, $\forall\, i$, which also corresponds to the average magnetization 〈m〉. Remarkably, in this case the free energy of the system can be expressed through 〈m〉 by a straightforward calculation18 that yields

$$\frac{F(\beta, J, h)}{N} = \frac{J}{2} \langle m \rangle^2 - \frac{1}{\beta} \log 2 - \frac{1}{\beta} \log \cosh\left[\beta \left(J \langle m \rangle + h\right)\right],$$

whose extremization w.r.t. 〈m〉 ensures again that thermodynamic principles hold and it reads off as

$$\langle m \rangle = \tanh\left[\beta \left(J \langle m \rangle + h\right)\right], \tag{17}$$

which is the celebrated Curie-Weiss self-consistency. By simply solving eq. 17 (e.g. graphically or numerically) the macroscopic behavior can be inferred. Before proceeding, we fix β = 1, without loss of generality as it can be reabsorbed trivially by rescaling h → βh and J → βJ.

In the non-interacting case (J = 0), eq. 17 gives 〈m(J = 0, h)〉 = tanh(h), which resembles an input-output relation for the system. When interactions are present (J > 0), one can see that the solution of eq. 17 crucially depends on J. Of course, for h > 0, 〈m(J = 0, h)〉 < 〈m(J > 0, h)〉, due to cooperation among spins, and, more remarkably, there exists a critical value Jc such that when J ≥ Jc the typical sigmoidal response encoded by 〈m(J, h)〉 possibly becomes a step function (a true discontinuity is realized only in the thermodynamic limit N → ∞, while at finite N the curve gets severely steep but still continuous). Hence, to summarize, the Curie-Weiss model exhibits two phases: a small-coupling phase where the system behaves paramagnetically and a strong-coupling phase where it behaves ferromagnetically. The ferromagnetic states are two, characterized by positive and negative magnetization, according to the sign of the external field.
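The numerical solution of the self-consistency mentioned above can be sketched as follows. The sketch assumes the Curie-Weiss form m = tanh(J m + h) at β = 1 and solves it by plain fixed-point iteration, started from the fully aligned state with the sign of the field so that the branch selected by h is followed; the function name `magnetization` and the parameter values are illustrative only.

```python
import math


def magnetization(J, h, tol=1e-12, max_iter=100_000):
    """Solve m = tanh(J*m + h) (beta = 1) by fixed-point iteration.

    The iteration starts from the fully aligned state with the sign of h,
    so for strong coupling it converges to the branch selected by the field.
    """
    m = 1.0 if h >= 0 else -1.0
    for _ in range(max_iter):
        m_new = math.tanh(J * m + h)
        if abs(m_new - m) < tol:
            return m_new
        m = m_new
    return m


# Weak coupling: smooth, sigmoidal response to the field
for h in (-0.5, -0.1, 0.1, 0.5):
    print("J=0.5", h, magnetization(0.5, h))

# Strong coupling: the response jumps when the field changes sign
for h in (-0.01, 0.01):
    print("J=2.0", h, magnetization(2.0, h))
```

Fixed-point iteration is chosen here only for brevity; it slows down near the critical coupling, where a bracketing root finder would be a more robust choice.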

Statistical mechanics and chemical kinetics

In this section we develop the first part of our formal bridge and show how the Curie-Weiss model can be looked at from a biochemical perspective. We will start from the simplest case of independent sites and later, when dealing with interacting sites, we will properly generalize this model in order to consistently include both positive and negative cooperativity.

The simplest framework: non interacting sites

Let us consider an ensemble of elements (e.g. identical macromolecules, homo-allosteric enzymes, a catalyst surface), whose interacting sites are overall N and labelled as i = 1, 2, …, N. Each site can bind one smaller molecule (e.g. of a substrate) and we call α the concentration of the free molecules ([S] in standard chemical kinetics language). We associate to each site an Ising spin such that when the ith site is occupied σi = +1, while when it is empty σi = −1. A configuration of the elements is then specified by the set {σ}.

First, we focus on non-collective systems, where no interaction between binding sites is present, while we model the interaction between the substrate and the binding site by an “external field” h meant as a proper measure for the concentration of free-ligand molecules, hence h = h(α). One can consider a microscopic interaction energy given by

$$E(\{\sigma\}; h) = -h \sum_{i=1}^{N} \sigma_i. \tag{18}$$

Note that writing $h \sum_i \sigma_i$ instead of $\sum_i h_i \sigma_i$ implicitly assumes homogeneity and thermalized reactions: each site displays the same coupling with the substrate and they all had the time to interact with the substrate.

We can think of h as the chemical potential for the binding of substrate molecules on sites: when it is positive, molecules tend to bind to diminish energy, while when it is negative, bound molecules tend to leave occupied sites. The chemical potential can be expressed as the logarithm of the concentration of binding molecules and one can assume that the concentration is proportional to the ratio of the probability of having a site occupied with respect to that of having it empty. In this simple case and in all mean-field approaches33, the probability of each configuration is the product of the single independent probabilities of each site to be occupied and, applying the Maxwell-Boltzmann distribution $P(\sigma_i = \pm 1) \propto e^{\pm h}$, one finds

$$\alpha \propto \frac{P(\sigma_i = +1)}{P(\sigma_i = -1)} = e^{2h},$$

and we can pose

$$h = \frac{1}{2} \log \alpha.$$

The mean occupation number (close to the magnetization in statistical physics) reads off as

$$\langle S \rangle = \sum_{i=1}^{N} \frac{1 + \langle \sigma_i \rangle}{2} = N\, \frac{1 + \langle m \rangle}{2}.$$

Therefore, using ergodicity to shift $\bar{S}$ into 〈S〉 (see eq. 7), the saturation function can be written as

$$Y(\alpha) = \frac{\langle S \rangle}{N} = \frac{1 + \langle m \rangle}{2}.$$

By using eq. 17 we get

$$Y(\alpha) = \frac{1}{2} \left[1 + \tanh(h)\right] = \frac{e^{2h}}{1 + e^{2h}},$$

which, substituting 2h = log α, recovers the Michaelis-Menten behavior $Y(\alpha) = \alpha/(1 + \alpha)$, consistently with the assumption of no interaction among binding sites (J = 0) in eq. 18.

A refined framework: two-sites interactions

We now focus on pairwise interactions and, seeking a general scheme, we replace the fully-connected network of the original Curie-Weiss model by a complete bipartite graph: sites are divided in two groups, referred to as A and B, whose sizes are NA and NB (N = NA + NB), respectively. Each site in A (B) is linked to all sites in B (A), but no link within the same group is present. With this structure we mirror dimeric interactions [Note: For the sake of clarity, we introduced the simplest bipartite structure, which naturally maps dimeric interactions, but one can straightforwardly generalize to the case of an n-mer by an n-partite system and of course values of ρA ≠ ρB can be considered too. We did not perform these extensions because we wanted to recover the broadest phenomenology with the smallest number of parameters, namely J, α only.], where a ligand belonging to one group interacts in a mean-field way with ligands in the other group (cooperatively or competitively depending on the sign of the coupling, see below) and they both interact with the substrate. As a result, given the parameters J and h, the energy associated to the configuration {σ} turns out to be

Some remarks are in order now. First, we stress that in eq. 23 the sums run over all the binding sites. As we will deal with the thermodynamic limit (N → ∞), this does not imply that we model macromolecules of infinite length, which is somehow unrealistic. Rather, we consider N as the total number of binding sites, localized even on different macromolecules and the underlying mean-field assumption implies that binding sites belonging to the same group are all equivalent, despite some may correspond to the bulk and others to the boundaries of the pertaining molecule; such differences can be reabsorbed in an effective renormalization of the couplings. In this way the system, as a whole, can exhibit (anti) cooperative effects, as for instance shown experimentally in34.

Moreover, for the sake of clarity, in the following we will assume that couplings between sites belonging to different groups are all the same and equal to J and, similarly, hi = h, for any i. This homogeneity assumption allows us to focus on the simplest cooperative effects and can be straightforwardly relaxed.

We also notice that this two-groups model can mimic both cooperative and non-cooperative systems but, while for the former case bipartition is somehow redundant as qualitatively analogous results are obtained by adopting a fully-connected structure, for the latter case the underlying competitive interactions intrinsically require a bipartite structure.

Now, the two groups are assumed as equally populated, i.e., NA = NB = N/2, such that their relative densities are ρA = NA/N = ρB = NB/N = 1/2. The order parameter can be trivially extended as

and, according to statistical mechanics prescriptions, we minimize the free energy coupled to the cost function (23) and we get, in the thermodynamic limit, the following self-consistencies

Through eqs. 25 and 26, the number of occupied sites can be computed as

from which we get the overall binding isotherm

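From eqs. 25–28 one can see that Y (α) fulfills the following free-energy minimum condition

$$Y(\alpha; J) = \frac{1}{2} \left\{1 + \tanh\left[J\,(2Y - 1) + \frac{1}{2} \log \alpha\right]\right\}. \tag{29}$$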

This expression returns the average fraction of occupied sites corresponding to the equilibrium state for the system. We are now going to study separately the two cases of positive (J > 0) and negative (J < 0) cooperativity.

The cooperative case: Chemical kinetics

When J > 0 interacting units tend to “imitate” each other. In this ferromagnetic context one can prove that the bipartite topology does not induce any qualitative effects: results are the same (under a proper rescaling) as for the Curie-Weiss model; indeed, in this case one can think of bipartition as a particular dilution of the previous fully-connected scheme and we know that (pathological cases apart) dilution does not affect the physical scenario35,36,37,38.

Differently from low-dimensional systems such as linear Ising chains, the Curie-Weiss model admits sharp (possibly discontinuous in the thermodynamic limit) transitions from an empty (〈mA〉 = 〈mB〉 = 0) to a completely filled (〈mA〉 = 〈mB〉 = 1) configuration as the field h is tuned. More precisely, eqs. 25 and 26 describe a transition at α = 1 and such a transition is second order (Y changes continuously, but its derivative may diverge) when J is smaller than the critical value Jc = 1, while it is first order (Y has a discontinuity) when J > Jc. The latter case is remarkable as a discontinuous behavior is experimentally well evidenced and at the basis of the so-called ultra-sensitive chemical switches39.

On the other hand, when J → 0, the interaction term disappears and we expect to recover Michaelis-Menten kinetics. In fact, eq. 29 can be rewritten as

$$Y(\alpha; J) = \frac{\alpha\, e^{2J(2Y - 1)}}{1 + \alpha\, e^{2J(2Y - 1)}}, \tag{30}$$

which, for J = 0, recovers the Michaelis-Menten equation Y (α) = α/(1 + α) [Note: We do not lose generality when obtaining eq. (7) in this form rather than as Y (α) = α/(K + α) (which is the more familiar MM expression), because the latter can be rewritten as $Y(\alpha) = K^{-1}\alpha/(1 + K^{-1}\alpha)$, i.e. it is recovered by shifting $\alpha \to \alpha K^{-1}$ and h → [log(α/K)]/2.]. In this case there is no signature of phase transition as Y (α) is continuous together with all its derivatives.

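In general, the Hill coefficient can be obtained as the slope of Y (α) in eq. 29 at the symmetric point Y = 1/2, namely

$$n_H = \left. \frac{1}{Y(1 - Y)} \frac{\partial Y}{\partial \log \alpha} \right|_{Y = 1/2} = \frac{1}{1 - J}, \tag{31}$$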

where the role of J is clear: a large J, i.e. J close to 1, implies a strong cooperativity and vice versa.
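The dependence of the Hill coefficient on J can be checked numerically. The sketch below assumes that the self-consistent isotherm takes the form Y = α e^{2J(2Y−1)}/(1 + α e^{2J(2Y−1)}) and that nH = 1/(1 − J), which is the form consistent with the values nH = 1/2, 1, 2 quoted in this paper for J = −1, 0, 1/2. It is restricted to J < 1, where the solution is unique and bisection is safe; the function names are hypothetical.

```python
import math


def saturation(alpha, J, tol=1e-12):
    """Solve Y = x / (1 + x), with x = alpha * exp(2J(2Y - 1)), by bisection.

    Assumed form of the isotherm; valid for J < 1, where the root is unique.
    """
    def g(y):
        x = alpha * math.exp(2.0 * J * (2.0 * y - 1.0))
        return y - x / (1.0 + x)

    lo, hi = 0.0, 1.0  # g(lo) < 0 < g(hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def hill_slope(J, eps=1e-4):
    """Slope of log(Y/(1-Y)) versus log(alpha) at alpha = 1, i.e. at Y = 1/2."""
    def logit(alpha):
        y = saturation(alpha, J)
        return math.log(y / (1.0 - y))

    return (logit(math.exp(eps)) - logit(math.exp(-eps))) / (2.0 * eps)


for J in (-1.0, 0.0, 0.5):
    print(J, hill_slope(J), 1.0 / (1.0 - J))
```

Bisection is used instead of direct iteration of the self-consistency because the latter can oscillate for negative J.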

One step forward, as the whole theory is now described through the functions appearing in the self-consistency, we can expand them obtaining polynomials at all the desired orders, more typical of the standard route of chemical kinetics. In particular, expanding eq. 30 at the first order in J we obtain

which is nothing but the Adair equation (eq. 2) as far as we set  and we rescale

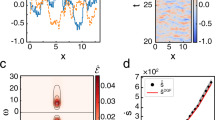

and we rescale  . These results and, in particular, the expression in eq. 30 are shown in complete generality in figs. 1 and 2.

. These results and, in particular, the expression in eq. 30 are shown in complete generality in figs. 1 and 2.

Figure 1: Theoretical predictions of typical binding isotherms obtained from the statistical mechanics approach (see eq. 28) are shown versus the substrate concentration α (main plot) and versus the logarithm of the concentration (inset). Different colors refer to different systems (hence different coupling strengths J), as explained by the legend. In particular, as J is varied, all the expected behaviors emerge: ultra-sensitive for J = 2, cooperative for J = 1/2, anti-cooperative for J = −1/2, non-cooperative for J = 0.

Figure 2: Velocity of reactions versus substrate concentration α. These plots have been included to show the full agreement between our theoretical outcomes and the results presented in the celebrated paper by Levitzki and Koshland (see fig. 4 in Ref. 50). Different values of nH = 1/2, 1, 2, corresponding to J = −1, 0, 1/2, are shown in different colors. Note that for this analysis there is complete proportionality between the reaction rates v and the saturation curves Y due to the law of mass action50.

The expression in eq. 30 can also be used to fit experimental data for saturation versus substrate concentration. Indeed, through an iterative fitting procedure, required by the self-consistent nature of our theoretical expression, we can derive an estimate for the parameter J and, from this, evaluate the Hill coefficient through eq. 31. As shown in fig. 3, fits are successful for several sets of experimental data, taken from different fields of biotechnology. The Hill coefficients derived in this way and the related estimates found in the literature are also in excellent agreement.
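One possible way to organize such a fit is sketched below; it is not the authors' procedure, only an illustration. It assumes the isotherm Y = α e^{2J(2Y−1)}/(1 + α e^{2J(2Y−1)}) and the relation nH = 1/(1 − J), scans a grid of couplings J < 1 (so ultra-sensitive fits are excluded) and keeps the one with the smallest squared error. The data points are synthetic, generated from the model itself, and all names are hypothetical.

```python
import math


def saturation(alpha, J, tol=1e-12):
    """Bisection solution of Y = x/(1+x), x = alpha*exp(2J(2Y-1)), for J < 1."""
    def g(y):
        x = alpha * math.exp(2.0 * J * (2.0 * y - 1.0))
        return y - x / (1.0 + x)

    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def fit_coupling(alphas, observed, j_min=-2.0, j_max=0.99, steps=299):
    """Grid search for the J that minimizes the sum of squared residuals."""
    best_j, best_err = j_min, float("inf")
    for i in range(steps + 1):
        J = j_min + (j_max - j_min) * i / steps
        err = sum((saturation(a, J) - y) ** 2 for a, y in zip(alphas, observed))
        if err < best_err:
            best_j, best_err = J, err
    return best_j


# Synthetic "measurements" generated with J = 0.5 (not experimental data)
alphas = [0.1, 0.3, 0.6, 1.0, 1.7, 3.0, 10.0]
observed = [saturation(a, 0.5) for a in alphas]

J_fit = fit_coupling(alphas, observed)
n_H = 1.0 / (1.0 - J_fit)
print(J_fit, n_H)
```

Each evaluation of the model requires solving the self-consistency again, which is why the fit is iterative; a grid search keeps the sketch short, and a proper minimizer could replace it.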

Figure 3: These plots show comparison between data from recent experiments (symbols) and best-fits through statistical mechanics (lines). Data refer to non-cooperative and positive-cooperative systems22,51 (left panel) and an ultra-sensitive system52 (right panel). For the latter we report two fits: the dashed line is the result obtained by constraining the system to be cooperative but not ultra-sensitive (that is, J ≤ 1), while the solid line is the best fit yielding J ~ 1.1, hence a “first order phase transition” in the language of statistical mechanics. The relative goodness of the two fits confirms an ultra-sensitive behavior. The tables at the bottom present the value of J derived from the best fit and the resulting nH; the estimate of the Hill coefficient taken from the literature is also shown.

Cooperative kinetics and cybernetics: Amplifiers and comparators

Having formalized cooperativity through statistical mechanics, we now want to perform a further translation in cybernetic terms. In particular, we focus on the electronic realization of cybernetics because this is probably the most practical and known branch. We separate the small coupling case (J < Jc, cooperative kinetics) from the strong coupling case (J > Jc, ultra-sensitive kinetics) and we mirror them to, respectively, the saturable operational amplifier and the analog-to-digital converter40. The plan is to compare the saturation curves (binding isotherms) in chemical kinetics with self-consistencies in statistical mechanics and transfer functions in electronics so as to reach a unified description for these systems.

Before proceeding, we recall a few basic concepts. The core of electronics is the operational amplifier, namely a solid-state integrated circuit (transistor) which uses feed-back regulation to set its functions. In fig. 4 we show the simplest representation for operational amplifiers: there are two signal inputs (one positive received (+) and one negative received (−)), two voltage supplies (Vsat, −Vsat) and an output (Vout). An ideal amplifier is the “linear” approximation of the saturable one and essentially assumes that the voltage at the input collectors (Vsat and −Vsat) is always at the same value so that no current flows inside the transistor, namely, retaining the obvious symbols of fig. 4, i+ = i− = 0 (Ref. 40). Obtaining its transfer function is straightforward as we can apply Kirchhoff's current law at the node 1, where the incoming currents must balance, hence i1 + i2 + i− = 0. Then, assuming R1 = 1 Ω (without loss of generality as only the ratio R2/R1 matters), in the previous equation we can pose i1 = −V−, i2 = (Vout − V−)/R2 and i− = 0 (because the amplifier is ideal). We can further note that V− = V+ and V+ = Vin so as to rewrite Kirchhoff's law as 0 = −Vin + (Vout − Vin)/R2, by which the transfer function reads off as

$$V_{out} = G\, V_{in} = (1 + R_2)\, V_{in},$$

where G = 1 + R2 is called “gain” of the amplifier.

Figure: Several sets of experimental data (symbols)53 are fitted by eq. 30 with minus sign (solid line). The values of J corresponding to the best fits are shown in the table together with the related estimates for nH according to eq. 31. The estimates for nH obtained via standard Hill fit are also shown.

Therefore, as long as R2 > 0, the gain is larger than one and the circuit amplifies the input (R2 < 0 is actually thermodynamically forbidden, suggesting that anti-cooperativity, from a cybernetic perspective, must be accounted for by the inverter configuration40, see next section).
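The transfer function derived above can be illustrated with a short sketch. The ideal gain G = 1 + R2/R1 follows from the Kirchhoff balance in the text; the saturable version below simply clips the ideal output at ±Vsat, which is a modelling assumption made here for illustration rather than a description of a real device. Function names and numerical values are hypothetical.

```python
def ideal_amplifier(v_in, r2, r1=1.0):
    """Ideal non-inverting amplifier: V_out = (1 + R2/R1) * V_in."""
    gain = 1.0 + r2 / r1
    return gain * v_in


def saturable_amplifier(v_in, r2, v_sat, r1=1.0):
    """Ideal response clipped at the supply voltages +/- V_sat (assumed hard clipping)."""
    v_out = ideal_amplifier(v_in, r2, r1)
    return max(-v_sat, min(v_sat, v_out))


# With R1 = 1 and R2 = 3 the gain is G = 4; the output saturates at V_sat = 12
for v_in in (-10.0, -1.0, 0.5, 1.0, 10.0):
    print(v_in, ideal_amplifier(v_in, 3.0), saturable_amplifier(v_in, 3.0, 12.0))
```

The clipped response plays the same role as the bounded tanh in the self-consistency: linear amplification for small inputs and a plateau once the output reaches its upper bound.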

Let us emphasize some structural analogies with ferromagnetic behaviors and cooperative kinetics. First, we notice that all these systems “saturate”. Indeed, it is very intuitive to see that by applying a magnetic field h > 0 to a collection of spins, they will (at least partially, depending on the noise level) align with the field, resulting in 〈m(h)〉 > 0. However, once the field reaches the critical value h* such that 〈m(h*)〉 = 1, any further increase in the strength of the field (i.e. any h > h*) will produce no variations in the output of the system as all the spins are already aligned. Similarly, in reaction kinetics, once all the ligands of a given protein have bound to the substrate, any further growth in the substrate concentration will produce no net effect on the system. In the same way, given an arbitrary operational amplifier supplied with Vsat, its output voltage will be a function of the input voltage Vin. However, there exists a critical input Vin* such that Vout = Vsat, and when the input is larger than Vin* no further amplification is possible; the amplifier is then said to be “saturated”. Of course, the sigmoidal shape of the hyperbolic tangent is not accounted for by ideal amplifiers, yet for real amplifiers ±Vsat are upper bounds for the growth, hence recovering the expected behavior, as shown in the plot of fig. 6l [Note: One may notice that the outlined amplifier is linear, while in chemical kinetics usually slopes are sigmoidal on lin-log plots. This is only a technical point and to obtain the logarithmic amplifier it is enough to substitute R2 with a diode to use the exponential scale of the latter40.]. Moreover, we notice that the transfer function is an input/output relation, exactly as the equation for the order parameter m. In fact, the latter, for small values of the coupling J (so as to mirror the ideal amplifier), can be written as (see eq. 17)

$$\langle m \rangle \sim (1 + J)\,h. \qquad (34)$$

Thus, the external signal Vin is replaced by the external field h and the voltage Vout is replaced by the magnetization 〈m〉. By comparing eq. 33 and eq. 34 we see that R2 plays the role of J and, consistently, if R2 = 0 the retroaction is lost (see fig. 3) and amplification is no longer possible. This is perfectly coherent with the statistical mechanics perspective, where, if J = 0, spins do not mutually interact and no feed-back is allowed.

Analogously, in the chemical kinetics scenario, the Hill coefficient can be written as nH = 1/(1 − J) ~ 1 + J, in the limit of small J (namely for J < Jc = 1, which is indeed the case under investigation). Therefore, again, we see that if J = 0 there is no amplification and the kinetics returns the Michaelis-Menten scenario, while for positive J we obtain amplification and a cooperative behavior. This leads to the conceptual equivalence

$$n_H = \frac{1}{1 - J} \sim 1 + J \;\Longleftrightarrow\; G = 1 + R_2,$$

hence the Hill coefficient in chemical kinetics plays the role of the gain in electronics. This implicitly allows a quantitative comparison between amplification in electronics and in biological devices.
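The correspondence between the small-field response and nH = 1/(1 − J) can be checked numerically. The sketch below assumes the standard mean-field (Curie-Weiss) self-consistency m = tanh(Jm + h), which is our reading of the small-J expansion above rather than a verbatim copy of eq. 17; the function names are hypothetical.

```python
import math


def magnetization(h, J, iterations=500):
    """Solve m = tanh(J*m + h) by fixed-point iteration.

    Assumes 0 <= J < 1, where the map is a contraction and the
    iteration converges to the unique solution.
    """
    m = 0.0
    for _ in range(iterations):
        m = math.tanh(J * m + h)
    return m


def small_field_gain(J, h=1e-4):
    """Ratio m/h for a weak field, to be compared with 1/(1 - J)."""
    return magnetization(h, J) / h


if __name__ == "__main__":
    for J in (0.0, 0.1, 0.2, 0.5):
        print(J, small_field_gain(J), 1.0 / (1.0 - J), 1.0 + J)
```

For J = 0 the response has unit slope (no amplification), while for 0 < J < 1 the slope follows 1/(1 − J), which reduces to 1 + J only when J is small.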

One step forward, if J > Jc the equation 〈m(h)〉 becomes discontinuous in statistical mechanics just like the corresponding (ultra-sensitive) saturation curve in chemical kinetics: The analogy with cybernetics can still be pursued, but with analog-to-digital converters (ADCs), which are the corresponding limits of operational amplifiers.

The ADC, roughly speaking a switch, takes a continuous, i.e. analogue, input and has discrete (dichotomic in its basic implementation) states as outputs. The simplest ADCs, namely flash converters, are built through cascades of voltage comparators40. A voltage comparator is sketched in fig. 6h and it simply “compares” the incoming voltage values between the negative input and the positive one as follows: let us use the ground (V = 0) as the negative input, serving as a reference value (to mirror one to one the equivalence with chemical kinetics or statistical mechanics we deal with only one input, namely the substrate concentration α in the former and the magnetic field h in the latter). Then, if Vin is positive the output will be Vsat > 0; vice versa, if Vin is negative, the output will be −Vsat < 0, as reported in the plot in fig. 6m, representing the ADC transfer function.

An ADC is simply an operational amplifier in an open loop (i.e. R2 = ∞), hence its theoretical gain is infinite. Coherently, this corresponds to values of J → 1 that imply a theoretical divergence in the Hill coefficient, while, practically, reactions are referred to as “ultra-sensitive” already at finite values of nH. Consistently, as J → 1 the curve 〈m(h)〉 starts to develop a discontinuity at h = 0 (see Fig. 6, panels d, e, f), marking the onset of a first order phase transition. As a last remark, although we do not analyze these systems in the frequency domain in this first paper, we highlight that, when using time-dependent fields, for instance oscillatory input signals, full structural consistency is preserved, as all these systems display hysteresis effects at high enough frequencies of the input signal.
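The comparator and its relation to a saturable amplifier of increasing gain can be illustrated with a minimal Python sketch. The function names, the hard clipping, and the convention chosen at exactly zero input (which the description above leaves unspecified) are assumptions made for illustration only.

```python
def comparator(v_in, v_sat=1.0, v_ref=0.0):
    """Voltage comparator: +V_sat above the reference, -V_sat otherwise.

    The value returned at v_in == v_ref is an arbitrary convention.
    """
    return v_sat if v_in > v_ref else -v_sat


def saturating_amplifier(v_in, gain, v_sat=1.0):
    """Linear amplifier with hard clipping at +/- V_sat (assumed model)."""
    return max(-v_sat, min(v_sat, gain * v_in))


if __name__ == "__main__":
    v_in = 0.01
    for gain in (2.0, 50.0, 1e3, 1e6):
        # For any fixed non-zero input, a large enough gain saturates
        # the output, reproducing the comparator response.
        print(gain, saturating_amplifier(v_in, gain), comparator(v_in))
```

For any fixed non-zero input the clipped amplifier coincides with the comparator once the gain is large enough, which is the sense in which the open-loop configuration acts as a switch.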

The anti-cooperative case: Chemical kinetics and cybernetics

We can now extend the previous scheme to the description of a negative-cooperative system by simply taking a negative coupling J < 0. Hence, eqs. 25 and 26 still hold and we can analogously reconstruct Y (α) = (〈mA〉 + 〈mB〉)/N versus α; the theoretical outcomes are still shown in figs. 1 and 2, and are fitted against experimental results as shown in the plots of fig. 5.

Figure 6. This figure summarizes all the analogies described in the paper. In the first row, pictures of three biological systems exhibiting cooperativity are shown, namely Mitogen-activated protein kinase 14 (positive cooperativity, panel (a)), Ca2+ calmodulin dependent protein kinases II (ultra-sensitive cooperativity, panel (b)) and Synaptic Glutamate receptors (negative cooperativity, panel (c)). The related saturation curves (binding isotherms) are shown in the second row (panels (d), (e) and (f), respectively), where symbols with the relative error-bars stand for real data taken from41,52,54 respectively and lines are best fits performed through the analytical expression in eq. 28, obtained from statistical mechanics. The related best-fit parameters are J = 0.14, J = 1.16, J = 0.29, respectively. Notice that in panel (d) it is possible to see clearly the “saturation” phenomenon, as the first and the last experimental points are far from the linear fit (red line), while they are perfectly accounted for by the hyperbolic tangent predicted by statistical mechanics (green line), whose correspondence with saturation in electronics is represented in panel (l). Notice further that in panel (e) we compared the ultra-sensitive fit (solid line) with a simple cooperative fit (dashed line): at small substrate concentration the latter does not match, within its variance, the data points (so accurately measured that error bars are not reported), while the former is in perfect agreement with the data points. In the third row we sketch the cybernetic counterparts, i.e., the operational amplifier (panel (g)), represented as an inverted flip-flop mirroring the symmetry by which we presented the statistical mechanics framework (the standard amplifier is shown in fig. 3), the analog-to-digital converter (panel (h)) and the flip-flop (panel (i)). The (theoretical) transfer functions corresponding to the circuits are finally shown in the fourth row (panels (l), (m) and (n), respectively) for visual comparison with the second one.

Again, it is easy to check that there are two possible behaviors depending on the interaction strength J. If J < Jc, the two partial fractions nA, nB are always equal, but when the interaction is larger than Jc, the two partial fractions are different in a region where the chemical potential log α is around zero, as shown in fig. 1. In this region, due to the strong interaction and small chemical potential, it is more convenient for the system to fill sites on one subsystem and keep fewer ligand molecules on the other subsystem. The critical value of the chemical potential |log α| depends on the interaction strength: it vanishes when the average interaction equals Jc and grows from this value on. The region where the two fractions are different corresponds, in the magnetic models, to the anti-ferromagnetic phase. When J < Jc, the binding isotherm, plotted as a function of the logarithm of concentration, has a form resembling the Michaelis-Menten curve, even if anti-cooperativity is at work. Conversely, when J > Jc, in the region around α = 1 the curve has a concavity with an opposite sign with respect to the Michaelis-Menten one. In particular, there is a plateau around α = 1, which can be interpreted as the inhibition of the system, once it is half filled, towards further occupation.

Finally, in order to complete our analogy to electronics, let us consider the simplest bistable flip-flop40, built through two saturable operational amplifiers as sketched in fig. 6i, such that the output of one of the two amplifiers is used as the inverted input of the other amplifier, tuned by a resistor. This configuration, encoded in statistical mechanics by negative couplings among groups, makes the amplifiers reciprocally inhibiting (and indeed they are called “inverters” in this configuration) because, for instance, a large output from the first amplifier (say A) induces a fall in the second amplifier (say B) and vice versa. Since each amplifier pushes the other into the opposite state, there exist two stable stationary configurations (one amplifier with positive output and the other with negative output, and vice versa). Thus, it is possible to assign a logical 0 (or 1) to one state and a logical 1 (or 0) to the other, which can be regarded respectively as low versus high concentration of bound ligands in chemical kinetics, negative versus positive magnetization in ferromagnetic systems, and low versus high output voltage of flip-flops in electronics. In this way, as the flip-flop can serve as an information storage device (in fact, the information (1 bit) is encoded by the output itself), the same feature holds also for the other systems. The two flip-flop transfer functions (one for each inverter) are also shown in fig. 6n, where the two (opposite) sigmoidal shapes are displayed versus the input voltage.
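The bistability of two mutually inhibiting inverters can be illustrated with a toy relaxation dynamics. In the sketch below each inverter is modelled, as an assumption, by a saturating response −Vsat·tanh(gain·v/Vsat), and the pair is relaxed with a simple Euler scheme; the model, the parameter values and the function names are illustrative and are not taken from the circuit of fig. 6i.

```python
import math


def inverter(v, gain, v_sat=1.0):
    """Saturating inverter: large positive input gives output near -V_sat."""
    return -v_sat * math.tanh(gain * v / v_sat)


def settle(a, b, gain=3.0, dt=0.05, steps=4000):
    """Relax two cross-coupled inverters from the initial outputs (a, b).

    Assumed dynamics: each output relaxes towards the inverted output
    of the other amplifier (explicit Euler integration).
    """
    for _ in range(steps):
        da = -a + inverter(b, gain)
        db = -b + inverter(a, gain)
        a, b = a + dt * da, b + dt * db
    return a, b


def stored_bit(a):
    """Read the stored bit from the sign of amplifier A's output."""
    return 1 if a > 0 else 0


if __name__ == "__main__":
    print(settle(0.2, 0.1))             # A ends high, B ends low
    print(settle(0.1, 0.2))             # A ends low, B ends high
    print(settle(0.2, 0.1, gain=0.5))   # weak coupling: no bistability
```

With gain larger than one the pair latches into one of two opposite states selected by the initial imbalance, whereas for weak coupling both outputs relax to zero and no bit can be stored.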

Still in fig. 6, those behaviors are compared with experimental data from biochemical anti-cooperativity and their statistical-mechanics best fits with an overall remarkable agreement.

Possible extensions: Heterogeneity and multiple binding sites

Another point worth highlighting is the number of potential and straightforward extensions included in the statistical-mechanics modeling. In fact, as the literature on mean-field statistical-mechanics models is huge, once self-consistencies are properly mapped into the saturation curves, one can perturb, generalize, or adjust the initial energy (cost) function and check the resulting effects.

As an example, we discuss chemical heterogeneity, which has been shown by recent experiments41,42 to play a crucial role in equilibrium reaction rates. To include this feature in our theory we can replace the homogeneous field term in eq. 18 with a site-dependent one, with hi drawn from, e.g., a Gaussian distribution depending on a parameter a, such that for a → 1 homogeneous chemical kinetics is recovered, while for a → 0 we get a standard Gaussian distribution for the heterogeneity.

We fitted data from41 through the self-consistencies obtained by either fixing a = 1, or by taking a as a free parameter; the results obtained are in strong agreement with the original ones. In particular, the authors in41 found a ratio R between the “real” Hill coefficient (assuming heterogeneity) and the standard (homogeneous) one of R ~ 0.53, while theoretically we found R ~ 0.57 (and a ~ 0.3).

Further, we notice that nH decreases as |a − 1| grows, namely the higher the degree of inhomogeneity within the system, the smaller nH, in agreement with several recent experimental findings42,43.
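The qualitative trend, a smaller apparent nH for a more heterogeneous sample, can be reproduced with a deliberately simplified sketch. Here we assume non-interacting binding sites whose log-affinities are shifted by evenly spaced offsets (a deterministic stand-in for the random fields hi, not the Gaussian form used in the fits), and we estimate the apparent Hill coefficient as the slope of log[Y/(1 − Y)] versus log α at half saturation. All names and parameter values are hypothetical.

```python
import math


def saturation(log_alpha, offsets):
    """Average occupancy of independent sites with shifted log-affinities."""
    total = sum(1.0 / (1.0 + math.exp(-(log_alpha + h))) for h in offsets)
    return total / len(offsets)


def apparent_hill(offsets, eps=1e-4):
    """Slope of logit(Y) versus log(alpha) at log(alpha) = 0.

    For offsets symmetric around zero, log(alpha) = 0 is the
    half-saturation point, where the Hill coefficient is usually read.
    """
    def logit(y):
        return math.log(y / (1.0 - y))

    upper = logit(saturation(eps, offsets))
    lower = logit(saturation(-eps, offsets))
    return (upper - lower) / (2.0 * eps)


def symmetric_offsets(spread, n=101):
    """Evenly spaced offsets in [-spread, +spread] (assumed heterogeneity)."""
    return [spread * (2.0 * k / (n - 1) - 1.0) for k in range(n)]


if __name__ == "__main__":
    for spread in (0.0, 1.0, 2.0):
        print(spread, apparent_hill(symmetric_offsets(spread)))
```

The homogeneous sample returns a unit slope, and the slope decreases monotonically as the spread of the offsets grows, which is the sense in which heterogeneity can mask cooperativity in a standard Hill fit.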

As a last example of possible extension, we also briefly discuss the multiple binding site case, which can be simply encoded, at least within the cooperative case, by considering an interaction energy of the P-spin type, which results in multiple discontinuities for the binding isotherms, as happens for instance when considering surfactants binding onto a polymer gel44,45, where the affinity of the surfactants to the gel is cooperatively altered by a conformational change of the polymer chains (and actually these systems show hysteresis with respect to the surfactant concentration, which is another typical feature of “ferromagnetism”).

Discussion

In this work, we describe collective behaviors in chemical kinetics through mean-field statistical mechanics. Stimulated by the successes of the latter in formalizing classical cybernetic subjects, such as neural networks in artificial intelligence15,16,18 or NP-completeness problems in logic46,47,48, we successfully tested the statistical mechanics framework as a common language to read, from a cybernetic perspective, chemical kinetic reactions, whose complex features are at the very basis of several biological devices.

In particular, we introduced an elementary class of models able to mimic possibly heterogeneous systems covering all the main chemical kinetics behaviors, namely ultra-sensitive, cooperative, anti-cooperative and non-cooperative reactions. Predictions yielded by such a theoretical frame have been tested against experimental data taken from biological systems (e.g. nervous system, plasma, bacteria), finding overall excellent agreement. Furthermore, we showed that our analytical results recover all the standard chemical kinetics, e.g. Michaelis-Menten, Hill and Adair equations, as particular cases of this broader theory and confer on these a strong and simple physical background. Due to the presence of a first order phase transition in statistical mechanics, we offer a simple prescription to define a reaction as ultra-sensitive: its best fit is achieved through a discontinuous function, whose extremization through other routes is not simple since, e.g., least squares cannot be applied due to the discontinuity itself.

It is worth noticing that, although we developed mean-field techniques, hence neglecting any spatial structure, we get a direct mapping between statistical-mechanics and chemical kinetics formulas, in such a way that we can derive from the former a simple estimate for the Hill coefficient, namely for the “effective number” of interacting binding sites, in full agreement with experimental data and standard approaches.

One step forward, toward a unifying cybernetic perspective, we described a conceptual and practical mapping between kinetics of ultra-sensitive, cooperative and anti-cooperative reactions, with the behavior of analog-to-digital converters, saturable amplifiers and flip-flops respectively, highlighting how statistical mechanics can act as a common language between electronics and biochemistry. Remarkably, saturation curves in chemical kinetics mirror transfer functions of these three fundamental electronic devices which are the very bricks of robotics.

The bridge built here inspires and makes feasible several challenges and improvements in biotechnology research. For instance, we can now decompose complex reactions into a sequence of elementary ones (modularity property5) and map the latter into an ensemble of interacting spin systems to investigate potentially hidden properties of the latter such as self-organization and computational capabilities (as already done adopting spin-glass models of neural networks49). Moreover, we can gain further insights into the development of better performing biological processing hardware, which currently performs more poorly than its silicon-made counterparts. Indeed, from our equivalence between Hill and gain coefficients the greater power of electronic devices is clear, as G can range over several orders of magnitude, while in chemical kinetics Hill coefficients higher than nH ~ 10 are difficult to find. This, in turn, may contribute to developing a biological amplification theory whose fruition is at the very basis of biological computations6,7,8.

We believe that this is an important, intermediary, brick in the multidisciplinary research scaffold of biological complexity.

Lastly, we remark that this is only a first step: Analyzing, within this perspective, more structured biological networks as for instance the cytokine one at extracellular level or the metabolic one at intracellular level is still an open point and requires extending the mean-field statistical mechanics of glassy systems (i.e. frustrated combinations of ferro and antiferro magnets), on which we plan to report soon.

Change history

11 July 2014

A correction has been published and is appended to both the HTML and PDF versions of this paper. The error has not been fixed in the paper.

References

Hill, A. V. The possible effects of the aggregation of the molecules of haemoglobin on its oxygen dissociation curve. J. Physiol. 40 (1910).

Koshland Jr, D. E. The structural basis of negative cooperativity: receptors and enzymes. Curr. Opin. Struc. Biol. 6, 757–761 (1996).

House, J. Principles of chemical kinetics. (Elsevier Press, 2007).

Marangoni, A. Enzyme Kinetics: A Modern Approach. (Wiley Press, 2002).

Seelig, G., Soloveichik, D., Zhang, D. & Winfree, E. Enzyme-free nucleic acid logic circuits. Science 314, 1585–1589 (2006).

Zhang, D., Turberfield, A., Yurke, B. & Winfree, E. Engineering entropy-driven reactions and networks catalyzed by DNA. Science 318, 1121–1125 (2007).

Berry, G. & Boudol, G. The chemical abstract machine. Theoretical computer science 96, 217–248 (1992).

Păun, G. Computing with membranes. Journal of Computer and System Sciences 61, 108–143 (2000).

Thompson, C. J. Mathematical Statistical Mechanics. (Princeton University Press, 1972).

Chay, T. & Ho, C. Statistical mechanics applied to cooperative ligand binding to proteins. Proc. Natl. Acad. Sci. 70, 3914–3918 (1973).

Wyman, J. & Phillipson, P. A probabilistic approach to cooperativity of ligand binding by a polyvalent molecule. Proc. Natl. Acad. Sci. 71, 3431–3434 (1974).

Di Biasio, A., Agliari, E., Barra, A. & Burioni, R. Mean-field cooperativity in chemical kinetics. Theor. Chem. Acc. 131, 1104–1117 (2012).

ben Avraham, D. & Havlin, S. Diffusion and Reactions in Fractals and Disordered Systems. (Cambridge University Press, 2000).

van Kampen, N. G. Stochastic Processes in Physics and Chemistry. (North-Holland Personal Library, 2007).

Mezard, M., Parisi, G. & Virasoro, A. Spin glass theory and beyond. (World Scientific, 1987).

Hopfield, J. & Tank, D. Computing with neural circuits: A model. Science 233, 625–633 (1986).

Amit, D., Gutfreund, H. & Sompolinsky, H. Statistical mechanics of neural networks near saturation. Annals of Physics 173, 30–67 (1987).

Amit, D. Modeling brain function. (Cambridge University Press, 1987).

Bishop, C. & Nasser, N. Pattern recognition and machine learning. (Springer, New York, 2006).

Mezard, M. & Montanari, A. Information, Physics and Computation. (Oxford University Press, 2009).

Martelli, C. et al. Identifying essential genes in Escherichia coli from a metabolic optimization principle. Proc. Natl. Acad. Sci. 106, 2607–2611 (2009).

Mandal, M. et al. A glycine-dependent riboswitch that uses cooperative binding to control gene expression. Science 306, 275–279 (2004).

Rovira, X. et al. Modeling the binding and function of metabotropic glutamate receptors. The Journal of Pharmacology and Experimental Therapeutics 325, 443–456 (2008).

Barra, A. & Agliari, E. A statistical mechanics approach to autopoietic immune networks. J. Stat. Mech. P07004 (2010).

Li, Z. et al. Dissecting a central flip-flop circuit that integrates contradictory sensory cues in C. elegans feeding regulation. Nature Communications 3, 776–781 (2011).

Bonnet, J. et al. Amplifying genetic logic gates. Science 340, 599–603 (2013).

Chang, H. et al. A unique series of reversibly switchable fluorescent proteins with beneficial properties for various applications. Proc. Natl. Acad. Sci. (2012).

Levitzki, A. & Koshland Jr, D. E. Negative cooperativity in regulatory enzymes. Proc. Natl. Acad. Sci. 62, 1121–1128 (1969).

De Meyts, P. et al. Insulin interactions with its receptors: experimental evidence for negative cooperativity. Biochem. Biophys. Res. Commun. 55, 154–161 (1973).

De Meyts, P., Bianco, A. R. & Roth, J. Site-site interactions among insulin receptors. characterization of the negative cooperativity. Journ. Biol. Chem. 251, 1877–1888 (1976).

Kinazeff, J. et al. Closed state of both binding domains of homodimeric mglu receptors is required for full activity. Nature Structural and Molecular Biology 11, 706–713, (2004).

Rebek, J. et al. Allosteric effects in organic chemistry: Binding cooperativity in a model of subunit interactions. Journ. Amer. Chem. Soc. 25, 7481–7488 (1985).

Ellis, R. S. Entropy, large deviations and Statistical Mechanics. (Springer Press, 2005).

Ackers, G. K., Doyle, M. L., Myers, D. & Daugherty, M. A. Molecular code for cooperativity in hemoglobin. Science 255, 54–63 (1992).

Agliari, E., Barra, A. & Camboni, F. Criticality in diluted ferromagnet. J. Stat. Mech. P10003 (2008).

Agliari, E. & Barra, A. A Hebbian approach to complex-network generation. Europhys. Lett. 94, 10002 (2011).

Barra, A. & Agliari, E. A statistical mechanics approach to Granovetter theory. Physica A 391, 3017–3026 (2012).

Agliari, E., Burioni, R. & Sgrignoli, P. A two-populations Ising model on diluted random graphs. J. Stat. Mech. P07021 (2010).

Germain, R. The art of the probable: system control in the adaptive immune system. Science 293, 240–246 (2001).

Millman, J. & Grabel, A. Microelectronics. (McGraw Hill, 1987).

Solomatin, S. V., Greenfeld, M. & Herschlag, D. Implications of molecular heterogeneity for the cooperativity of biological macromolecules. Nature Structural & Molecular Biology 18, 732–734 (2011).

Macdonald, J. L. & Pike, L. J. Heterogeneity in EGF-binding affinities arises from negative cooperativity in an aggregating system. Proc. Natl. Acad. Sci. 105, 112–117 (2008).

Solomatin, S. S., Greenfeld, M. & Herschlag, D. Implications of molecular heterogeneity for the cooperativity of biological macromolecules. Nature Structural & Molecular Biology 18, 732–734 (2011).

Murase, Y., Onda, T., Tsujii, K. & Tanaka, T. Discontinuous Binding of Surfactants to a Polymer Gel Resulting from a Volume Phase Transition. Macromolecules 32, 8589–8594 (1999).

Chatterjee, A. P. & Gupta-Bhaya, P. Multisite interactions in the lattice gas model: shapes of binding isotherm of macromolecules. Molecular Physics 89, 1173–1179 (1996).

Martin, O. C., Monasson, R. & Zecchina, R. Statistical mechanics methods and phase transitions in optimization problems. Theoretical Computer Science 265, 3–67 (2001).

Macrae, N. John von Neumann: The scientific genius who pioneered the modern computer, game theory, nuclear deterrence and much more (American Mathematical Society, 1999).

Sourlas, N. Statistical mechanics and the travelling salesman problem. Europhys. Lett. 2, 919 (1986).

Agliari, E., Barra, A., Galluzzi, A., Guerra, F. & Moauro, F. Multitasking associative networks. Phys. Rev. Lett. 109, 268101 (2012).

Levitzki, A. & Koshland Jr, D. E. Negative cooperativity in regulatory enzymes. Proc. Natl. Acad. Sci. 62, 1121–1128 (1969).

Chao, L. H. et al. Intersubunit capture of regulatory segments is a component of cooperative camkii activation. Nature Structural & Molecular Biology 17, 264–272 (2010).

Bradshaw, M., Kubota, Y., Meyer, T. & Schulman, H. An ultrasensitive Ca2+/calmodulin-dependent protein kinase ii-protein phosphatase 1 switch facilitates specificity in postsynaptic calcium signaling. Proc. Natl. Acad. Sci. 100, 10512–10517 (2003).

Bolognesi, M. et al. Anticooperative ligand binding properties of recombinant ferric Vitreoscilla homodimeric hemoglobin: A thermodynamic, kinetic and x-ray crystallographic study. J. Mol. Biol. 291, 637–650 (1999).

Suzuki, Y., Moriyoshi, E., Tsuchiya, D. & Jingami, H. Negative Cooperativity of Glutamate Binding in the Dimeric Metabotropic Glutamate Receptor Subtype 1*. The Journal of Biological Chemistry 279, 35526–35534 (2004).

Acknowledgements

This work is supported by the FIRB grant RBFR08EKEV. Sapienza Università di Roma, INdAM through GNFM and INFN are acknowledged too for partial financial support.

Author information

Contributions

The bridge between chemical kinetics and statistical mechanics has been built by all the authors. The next bridge to cybernetics has been built by A.B. E.A., A.D.B. and G.U. performed data analysis and produced all the plots. E.A., A.B. and R.B. wrote the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/3.0/

About this article

Cite this article

Agliari, E., Barra, A., Burioni, R. et al. Collective behaviours: from biochemical kinetics to electronic circuits. Sci Rep 3, 3458 (2013). https://doi.org/10.1038/srep03458

DOI: https://doi.org/10.1038/srep03458
