Abstract

Inhomogeneous temporal processes, like those appearing in human communications, neuron spike trains and seismic signals, consist of high-activity bursty intervals alternating with long low-activity periods. In recent studies such bursty behavior has been characterized by a fat-tailed inter-event time distribution, while temporal correlations were measured by the autocorrelation function. However, these characteristic functions cannot fully characterize temporally correlated heterogeneous behavior. Here we show that the distribution of the number of events in a bursty period serves as a good indicator of the dependencies, leading to the universal observation of a power-law distribution for a broad class of phenomena. We find that the correlations in these quite different systems can be commonly interpreted by memory effects and described by a simple phenomenological model, which displays temporal behavior qualitatively similar to that in real systems.

Introduction

In nature there are various phenomena, from earthquakes1 to sunspots2 and neuronal activity3, that show temporally inhomogeneous sequences of events, in which the overall dynamics is determined by aggregate effects of competing processes. This happens also in human dynamics as a result of individual decision making and of various kinds of correlations with one's social environment. These systems can be characterized by intermittent switching between periods of low activity and high-activity bursts4,5,6, which can appear as a collective phenomenon similar to processes seen in self-organized criticality7,8,9,10,11,12,13,14,15,16. In contrast with such self-organized patterns, intermittent switching can be detected at the individual level as well (see Fig. 1), as seen for single neuron firings or for earthquakes at a single location17,18,19,20,21, where the famous Omori's law22,23 describes the temporal decay of aftershock rates at a given spot.

Activity of single entities with color-coded inter-event times.

(a) Sequence of earthquakes with magnitude larger than two at a single location (South of Chishima Island, 8th–9th October 1994). (b) Firing sequence of a single neuron (from a rat's hippocampus). (c) Outgoing mobile phone call sequence of an individual. The shorter the time between consecutive events, the darker the color.

Further examples of bursty behavior at the individual level have been observed in the digital records of human communication activities through different channels4,24,25,26,27,28. Over the last few years different explanations have been proposed for the origin of inhomogeneous human dynamics4,24,29, including explanations at the single event level30, and for the impact of circadian and weekly fluctuations31. Moreover, by using the novel technology of Radio Frequency IDs, heterogeneous temporal behavior was observed in the dynamics of face-to-face interactions32,33. This was explained by a reinforcement dynamics34,35 driving the decision making process at the single entity level.

For systems with discrete event dynamics it is usual to characterize the observed temporal inhomogeneities by the inter-event time distribution, P(tie), where $t_{ie} = t_{i+1} - t_i$ denotes the time between consecutive events. A broad P(tie)3,25,36 reflects large variability in the inter-event times and denotes heterogeneous temporal behavior. Note that P(tie) alone tells nothing about the presence of correlations, which are usually characterized by the autocorrelation function, A(τ), or by the power spectral density. However, for temporally heterogeneous signals of independent events with fat-tailed P(tie), the Hurst exponent, like the autocorrelation function, can falsely indicate correlations37 (see Supplementary Information). To understand the mechanisms behind these phenomena, it is important to know whether there are true correlations in these systems. Hence for systems showing fat-tailed inter-event time distributions, there is a need to develop new measures that are sensitive to correlations but insensitive to fat tails.

In this paper we define a new measure that is capable of detecting whether temporal correlations are present, even in the case of heterogeneous signals. By analyzing the empirical datasets of human communication, earthquake activity and neuron spike trains, we observe universal features induced by temporal correlations. In the analysis we establish a close relationship between the observed correlations and memory effects and propose a phenomenological model that implements memory driven correlated behavior.

Results

Correlated events

A sequence of discrete temporal events can be interpreted as a time-dependent point process, X(t), where $X(t_i) = 1$ at each time step $t_i$ when an event takes place, otherwise $X(t_i) = 0$. To detect bursty clusters in this binary event sequence we have to identify those events we consider correlated. The smallest temporal scale at which correlations can emerge in the dynamics is between consecutive events. If only X(t) is known, we can assume two consecutive actions at $t_i$ and $t_{i+1}$ to be related if they follow each other within a short time interval, $t_{i+1} - t_i \le \Delta t$ (refs 30, 38). For events with duration $d_i$ this condition is slightly modified: $t_{i+1} - (t_i + d_i) \le \Delta t$.

This definition allows us to detect bursty periods, defined as a sequence of events where each event follows the previous one within a time interval Δt. By counting the number of events, E, that belong to the same bursty period, we can calculate their distribution P(E) in a signal. For a sequence of independent events, P(E) is uniquely determined by the inter-event time distribution P(tie) as follows:

$$P(E = n) = \left( \int_0^{\Delta t} P(t_{ie})\, dt_{ie} \right)^{n-1} \left( 1 - \int_0^{\Delta t} P(t_{ie})\, dt_{ie} \right) \qquad (1)$$

for n > 0. Here the integral $\int_0^{\Delta t} P(t_{ie})\, dt_{ie}$ defines the probability of drawing an inter-event time tie ≤ Δt randomly from an arbitrary distribution P(tie). The first term of (1) gives the probability that we do so independently n−1 consecutive times, while the second term gives the probability that the nth draw yields tie > Δt, so that the evolving train size becomes exactly E = n. If the measured time window is finite (which is always the case here), the integral $\int_0^{\Delta t} P(t_{ie})\, dt_{ie} = a$, where a < 1, and the asymptotic behaviour appears like $P(E = n) \sim a^{n-1}$, a general exponential form (for related numerical results see SI). Consequently for any finite independent event sequence the P(E) distribution decays exponentially even if the inter-event time distribution is fat-tailed. Deviations from this exponential behavior indicate correlations in the timing of the consecutive events.
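The train-detection rule and the independent-event baseline can be sketched in a few lines of Python. This is a minimal illustration and not the authors' code: the function names are hypothetical, events are assumed to be instantaneous (no durations) and sorted in time, and `independent_prediction` encodes the geometric form a^(n−1)(1−a) described in the text for independent events.

```python
from collections import Counter


def train_sizes(event_times, dt):
    """Sizes E of bursty trains: maximal runs of events whose consecutive
    gaps are all <= dt. An isolated event counts as a train of size 1.
    Assumes event_times is a sorted list of instantaneous events."""
    sizes = []
    current = 1
    for prev, nxt in zip(event_times, event_times[1:]):
        if nxt - prev <= dt:
            current += 1           # event continues the current train
        else:
            sizes.append(current)  # gap too long: close the train
            current = 1
    if event_times:
        sizes.append(current)      # close the last open train
    return sizes


def train_size_distribution(sizes):
    """Empirical P(E) as a dict {E: probability}."""
    counts = Counter(sizes)
    total = len(sizes)
    return {e: c / total for e, c in sorted(counts.items())}


def independent_prediction(a, n):
    """P(E = n) for independent events, where a is the probability that
    an inter-event time is <= dt (hypothetical helper name)."""
    return a ** (n - 1) * (1 - a)
```

For example, `train_sizes([0, 1, 2, 10, 11, 30], 2)` returns `[3, 2, 1]`: the gaps 1 and 1 form a train of three events, the gap of 8 breaks it, and the final event is isolated. Comparing `train_size_distribution` of real data against `independent_prediction` is one way to look for the deviation from exponential decay discussed above.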

Bursty sequences in human communication

To check the scaling behavior of P(E) in real systems we focused on outgoing events of individuals in three selected datasets: (a) a mobile-call dataset from a European operator; (b) text message records from the same dataset; (c) email communication sequences26 (for detailed data description see Methods). For each of these event sequences the distribution of inter-event times measured between outgoing events is shown in Fig. 2 (left bottom panels) and the estimated power-law exponent values are summarized in Table 1. To explore the scaling behavior of the autocorrelation function, we took averages over 1,000 randomly selected users with a maximum time lag of $\tau = 10^6$. In Fig. 2.a and b (right bottom panels) strong temporal correlation can be observed for mobile communication sequences (for exponents see Table 1). The power-law behavior in A(τ) appears after a short period denoting the reaction time through the corresponding channel and lasts up to 12 hours, capturing the natural rhythm of human activities. For emails in Fig. 2.c (right bottom panels) long-term correlations are detected up to 8 hours, which reflects a typical office hour rhythm (note that the dataset includes internal email communication of university staff).

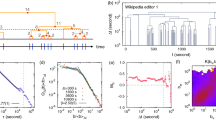

The characteristic functions of human communication event sequences.

The P(E) distributions with various Δt time-window sizes (main panels), P(tie) distributions (left bottom panels) and average autocorrelation functions (right bottom panels) calculated for different communication datasets. (a) Mobile-call dataset: the scale-invariant behavior was characterized by power-law functions (for the exponent values see Table 1). (b) Almost the same exponents were estimated for short message sequences. (c) Email event sequence. A gap in the tail of A(τ) in panel (c) appears due to logarithmic binning and slightly negative correlation values. Empty symbols denote the corresponding calculation results on independent sequences. Lanes labeled with s, m, h and d denote seconds, minutes, hours and days, respectively.

The broad shapes of the P(tie) and A(τ) functions confirm that human communication dynamics is inhomogeneous and displays non-trivial correlations up to finite time scales. However, after destroying event-event correlations by shuffling inter-event times in the sequences (see Methods), the autocorrelation functions still show a slow power-law-like decay (empty symbols in the bottom right panels), indicating spurious dependencies. This clearly demonstrates the inability of A(τ) to characterize correlations in heterogeneous signals (for further results see SI). A more effective measure of such correlations is provided by P(E). Calculating this distribution for various Δt windows, we find that P(E) shows the following scale-invariant behavior

$$P(E) \sim E^{-\beta} \qquad (2)$$

for each of the event sequences, as depicted in the main panels of Fig. 2. Consequently P(E) captures strong temporal correlations in the empirical sequences and is remarkably different from the P(E) calculated for independent events, which, as predicted by (1), shows exponential decay (empty symbols in the main panels).

Exponential behavior of P(E) was also expected from results published in the literature assuming human communication behavior to be uncorrelated29,30,39. However, the observed scaling behavior of P(E) offers direct evidence of correlations in human dynamics, which can be responsible for the heterogeneous temporal behavior. These correlations induce long bursty trains in the event sequence rather than short bursts of independent events.

We have found that the scaling of the P(E) distribution is quite robust against changes in Δt for an extended regime of time-window sizes (Fig. 2). In addition, the measurements performed on the mobile-call sequences indicate that the P(E) distribution remains fat-tailed also when it is calculated for users grouped by their activity. Moreover, the observed scaling behavior of the characteristic functions remains similar if we remove daily fluctuations (for results see SI). These analyses together show that the detected correlated behavior is neither an artifact of the averaging method nor attributable to variations in activity levels or circadian fluctuations.

Bursty periods in natural phenomena

As discussed above, temporal inhomogeneities are present in the dynamics of several natural phenomena, e.g. in recurrent seismic activities at the same location19,20,21 (for details see Methods and SI). The broad distribution of inter-earthquake times in Fig. 3.a (right top panel) demonstrates the temporal inhomogeneities. The characterizing exponent value γ = 0.7 is in qualitative agreement with the results in the literature23, as γ = 2−1/p where p is the Omori decay exponent22,23. At the same time the long tail of the autocorrelation function (right bottom panel) indicates long-range temporal correlations. Counting the number of earthquakes belonging to the same bursty period with window sizes Δt = 2…32 hours, we obtain a broad P(E) distribution (see Fig. 3.a main panel), as observed earlier in communication sequences, but with a different exponent value β = 2.5 (see Table 1). This exponent value agrees with known seismic properties, as it can be derived as β = b/a + 1, where a denotes the productivity law exponent40, while b comes from the well-known Gutenberg-Richter law41. Note that the presence of long bursty trains in earthquake sequences was already attributed to long temporal correlations by measurements using conditional probabilities42,43.

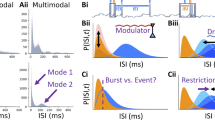

The characteristic functions of event sequences of natural phenomena.

The P(E) distributions of correlated event numbers with various Δt time-window sizes (main panels), P(tie) distributions (right top panels) and average autocorrelation functions (right bottom panels). (a) One-station records of Japanese earthquake sequences from 1985 to 1998. The functional behavior is characterized by the fitted power-law functions (for the corresponding exponents see Table 1). Inter-event times for P(tie) were counted with 10 second resolution. (b) Firing sequences of single neurons with 2 millisecond resolution (for the corresponding exponents see Table 1). Empty symbols denote the calculation results on independent sequences. Lanes labeled with ms, s, m, h, d and w denote milliseconds, seconds, minutes, hours, days and weeks, respectively.

Another example of naturally occurring bursty behavior is provided by the firing patterns of single neurons (see Methods). The recorded neural spike sequences display correlated and strongly inhomogeneous temporal bursty behavior, as shown in Fig. 3.b. The distributions of the length of neural spike trains are found to be fat-tailed and indicate the presence of correlations between consecutive bursty spikes of the same neuron.

Memory process

In each studied system (communication of individuals, earthquakes at a given location, or neurons) qualitatively similar behaviour was detected: the single entities either performed low-frequency random events or passed through longer correlated bursty cascades. While these phenomena are very different in nature, there could be some similarity in their mechanisms. We think that this common feature is a threshold mechanism.

From this point of view the case of human communication data seems problematic. In fact, generally no accumulation of stress is needed for an individual to make a phone call. However, according to the Decision Field Theory of psychology44, each decision (including initiation of communication) is a threshold phenomenon, as the stimulus of an action has to reach a given level for it to be chosen from the enormously large number of possible actions.

As for earthquakes and neuron firings it is well known that they are threshold phenomena. For earthquakes the bursty periods at a given location are related to the relaxation of accumulated stress after reaching a threshold7,8,9. In case of neurons, the firings take place in bursty spike trains when the neuron receives excitatory input and its membrane potential exceeds a given potential threshold45. The spikes fired in a single train are correlated since they are the result of the same excitation and their firing frequency is coding the amplitude of the incoming stimuli46.

The correlations taking place between consecutive bursty events can be interpreted as a memory process, allowing us to calculate the probability that the entity will perform one more event within a Δt time frame after it has executed n events previously in the actual cascade. This probability can be written as:

$$p(n) = \frac{\sum_{E=n+1}^{\infty} P(E)}{\sum_{E=n}^{\infty} P(E)} \qquad (3)$$

Therefore the memory function, p(n), gives a different representation of the distribution P(E). The p(n) functions calculated for the mobile call sequence are shown in Fig. 4.a for trains detected with different window sizes. Note that in empirical sequences, for train sizes smaller than the longest train, it is possible to have p(n) = 1, since the corresponding probability can be P(E = n) = 0. At the same time, due to the finite size of the data sequence, the length of the longest bursty train is limited such that p(n) shows a finite cutoff.
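As an illustration, the memory function can be estimated directly from a list of detected train sizes. The sketch below assumes that p(n) is the ratio of the number of trains with more than n events to the number with at least n events; the function name is hypothetical and the code is not taken from the original study.

```python
def memory_function(sizes):
    """Estimate p(n) = P(E > n) / P(E >= n) from observed train sizes.

    Returns a dict {n: p(n)} for n = 1 .. max(sizes)."""
    if not sizes:
        return {}
    max_size = max(sizes)
    # at_least[n] will hold the number of trains with size >= n
    at_least = [0] * (max_size + 2)
    for e in sizes:
        at_least[e] += 1
    for n in range(max_size, 0, -1):
        at_least[n] += at_least[n + 1]  # cumulative count from the tail
    return {n: at_least[n + 1] / at_least[n] for n in range(1, max_size + 1)}
```

With `sizes = [1, 1, 2, 4]` this yields `{1: 0.5, 2: 0.5, 3: 1.0, 4: 0.0}`. The value p(3) = 1 arises because no train of size 3 was observed, and p(4) = 0 is the finite cutoff at the longest train, matching the two finite-size effects noted above.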

Empirical and fitted memory functions of the mobile call sequence.

(a) Memory function calculated from the mobile call sequence using different Δt time windows. (b) 1−p(n) complement of the memory function measured from the mobile call sequence with Δt = 600 seconds and fitted with the analytical curve defined in equation (4) with ν = 2.971. Grey symbols are the original points, while black symbols denote the same function after logarithmic binning. (c) P(E) distributions measured in real and in modeled event sequences.

We can use the memory function to simulate a sequence of correlated events. If the simulated sequence satisfies the scaling condition in (2), we can derive the corresponding memory function by substituting (2) into (3), leading to:

$$p(n) = \left( \frac{n}{n+1} \right)^{\nu} \qquad (4)$$

with the scaling relation (see SI):

$$\beta = \nu + 1 \qquad (5)$$

In order to check whether (5) holds for real systems and whether the memory function in (4) correctly describes the memory in real processes, we compare it to a memory function extracted from an empirical P(E) distribution. We selected the P(E) distribution of the mobile call dataset with Δt = 600 seconds and derived the corresponding p(n) function. The complement of the memory function, 1−p(n), is presented in Fig. 4.b, where we show the original function with strong finite size effects (grey dots) and the same function after logarithmic binning (black dots).
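The link between a memory function of the form $p(n) = (n/(n+1))^{\nu}$ and a power-law P(E) can be made explicit with a short calculation. The following is a sketch under that assumed form, not a reproduction of the derivation in the SI; it uses the fact that a train reaches size n only if each of the first n−1 continuation decisions succeeds, so the product telescopes.

```latex
\begin{align}
\sum_{E=n}^{\infty} P(E) &= \prod_{k=1}^{n-1} p(k)
  = \prod_{k=1}^{n-1} \left( \frac{k}{k+1} \right)^{\nu} = n^{-\nu}, \\
P(E = n) &= n^{-\nu} - (n+1)^{-\nu}
  = n^{-\nu} \left[ 1 - \left( 1 + \tfrac{1}{n} \right)^{-\nu} \right]
  \approx \nu \, n^{-(\nu + 1)} \qquad (n \gg 1).
\end{align}
```

Comparing the last line with $P(E) \sim E^{-\beta}$ gives $\beta = \nu + 1$ in the large-n limit, so a fitted ν translates directly into a predicted train-size exponent.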

Taking equation (4), we fit the theoretical memory function to the log-binned empirical results using the least-squares method with only one free parameter, ν. We find that the best fit offers an excellent agreement with the empirical data (see Fig. 4.b and also Fig. 4.a) with ν = 2.971 ± 0.072. This would indicate $\beta = \nu + 1 \approx 3.971$ through (5), close to the approximate value of β obtained from directly fitting the empirical P(E) distributions in the main panel of Fig. 2.a (for fits of other datasets see SI). In order to validate whether our approximation is correct we take the theoretical memory function p(n) of the form (4) with parameter ν = 2.971 and generate bursty trains of $10^8$ events. As shown in Fig. 4.c, the scaling of the P(E) distribution obtained for the simulated event trains is similar to the empirical function, demonstrating the validity of the chosen analytical form for the memory function.
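Generating train sizes from a memory function can be sketched as follows. The code assumes the form $p(n) = (n/(n+1))^{\nu}$ for the memory function and draws each continuation decision independently; the function names and the seed handling are illustrative choices, and the sample sizes used here are far smaller than the $10^8$ events quoted in the text.

```python
import random


def sample_train_size(nu, rng):
    """Grow one train: after n events, add another with probability
    (n / (n + 1)) ** nu, otherwise stop and return the size n."""
    n = 1
    while rng.random() < (n / (n + 1)) ** nu:
        n += 1
    return n


def sample_train_sizes(nu, count, seed=0):
    """Draw `count` independent train sizes with a reproducible seed."""
    rng = random.Random(seed)
    return [sample_train_size(nu, rng) for _ in range(count)]
```

Under this assumed form the probability that a train has at least n events is $n^{-\nu}$, so for ν = 2.971 a fraction of about $1 - 2^{-2.971}$ of all trains should consist of a single event. A histogram of the sampled sizes can then be compared with an empirical P(E).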

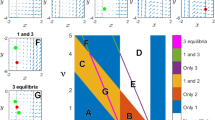

Schematic definition and numerical results of the model study.

(a) P(E) distributions of the synthetic sequence after logarithmic binning with window sizes Δt = 1…1024. The fitted power-law function has an exponent β = 3.0. (b) Transition probabilities of the reinforcement model with memory. (c) Logarithmically binned inter-event time distribution of the simulated process, generated with an imposed maximum inter-event time. The corresponding exponent value is γ = 1.3. (d) The average logarithmically binned autocorrelation function with a maximum lag $\tau_{max} = 10^4$. The function can be characterized by an exponent α = 0.7. Simulation results were averaged over 1000 independent realizations with parameters µA = 0.3, µB = 5.0, ν = 2.0, π = 0.1 and $T = 10^9$. For the calculation we chose a maximum inter-event time that is large enough not to influence short temporal behavior, yet improves the program performance considerably.

Model study

As the systems we analysed are of quite different nature, from physics (earthquakes) to social (human communication) and biological (neuron spikes) systems, finding a single mechanistic model to describe them all is impossible. Therefore, our goal is not to reproduce in detail our observations for the different systems but to identify minimal conditions or common characteristics that may play a role in all of their dynamics and are sufficient to reproduce the observed overall temporal behaviour. Here we define a phenomenological model which integrates the features discussed above and study how they are related to each other.

Reinforcement dynamics with memory

We assume that the investigated systems can be described with a two-state model, where an entity can be in a normal state A, executing independent events with longer inter-event times, or in an excited state B, performing correlated events with higher frequency, corresponding to the observed bursts. To induce the inter-event times between the consecutive events we apply a reinforcement process based on the assumption that the longer the system waits after an event, the larger the probability that it will keep waiting. Such dynamics shows strongly heterogeneous temporal features as discussed in refs 34, 35. For our two-state model system we define a process where the generation of the actual inter-event time depends on the current state of the system. The inter-event times are induced by the reinforcement functions that give the probability to wait one time unit longer after the system has already waited time tie since the last event. These functions are defined as

$$f_{A,B}(t_{ie}) = \left( \frac{t_{ie}}{t_{ie} + 1} \right)^{\mu_{A,B}} \qquad (6)$$

where µA and µB control the reinforcement dynamics in state A and B, respectively. These functions follow the same form as the previously defined memory function in (4) and satisfy the corresponding scaling relation in (5). If $\mu_A \ll \mu_B$, the characteristic inter-event times in states A and B become fairly different, which induces further temporal inhomogeneities in the dynamics. The actual state of the system is determined by the transition probabilities shown in Fig. 5.b, where, to introduce correlations between consecutive excited events performed in state B, we utilize the memory function defined in equation (4).

To be specific, the model is defined as follows: first the system performs an event in a randomly chosen initial state. If the last event was in the normal state A, it waits for a time induced by fA(tie), after which it switches to excited state B with probability π and performs an event in the excited state, or with probability 1−π stays in the normal state A and executes a new normal event. In the excited state the inter-event time for the actual event comes from fB(tie) after which the system decides to execute one more excited event in state B with a probability p(n) that depends on the number n of excited events since the last event in normal state. Otherwise it switches back to a normal state with probability 1−p(n). Note that a similar model without memory was already defined in the literature47.
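The verbal definition above can be turned into a short simulation. The sketch below rests on several assumptions that are not spelled out in the text: the reinforcement and memory functions are taken to have the form (x/(x+1))^exponent, the wait after an excited event is drawn from the state-B function regardless of which state follows, and waits are capped at a hypothetical `t_max`. Instead of stepping one time unit at a time, it uses inverse-transform sampling, which gives the same distribution for the number of steps.

```python
import math
import random


def sample_wait(mu, rng, t_max):
    """Integer wait t >= 1 with P(t >= m) = m ** (-mu), which is what
    repeated 'wait one more unit' decisions with probability
    (m / (m + 1)) ** mu produce. Waits above t_max collapse onto t_max."""
    u = 1.0 - rng.random()        # uniform on (0, 1]
    log_t = -math.log(u) / mu     # log of u ** (-1 / mu), overflow-safe
    if log_t >= math.log(t_max):
        return t_max
    return min(t_max, int(math.exp(log_t)))


def simulate_two_state(n_events, mu_a, mu_b, nu, pi, t_max, seed=0):
    """Return (times, states) for n_events >= 1 events of the two-state
    model; states are 'A' (normal) or 'B' (excited)."""
    rng = random.Random(seed)
    state = rng.choice("AB")                  # random initial state
    n_excited = 1 if state == "B" else 0      # excited events in this train
    t = 0
    times, states = [t], [state]
    while len(times) < n_events:
        if state == "A":
            t += sample_wait(mu_a, rng, t_max)
            state = "B" if rng.random() < pi else "A"
            n_excited = 1 if state == "B" else 0
        else:
            t += sample_wait(mu_b, rng, t_max)
            # memory function: continue the train with probability p(n)
            if rng.random() < (n_excited / (n_excited + 1)) ** nu:
                n_excited += 1
            else:
                state, n_excited = "A", 0
        times.append(t)
        states.append(state)
    return times, states
```

A call such as `simulate_two_state(10000, 0.3, 5.0, 2.0, 0.1, 10**5)` uses the parameter values quoted in the caption of Fig. 5 for µA, µB, ν and π; the cap and the number of events are arbitrary illustrative choices. The resulting `times` can be analysed with the same train-detection and inter-event-time statistics applied to the empirical data.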

The numerical results predicted by the model are summarized in Fig. 5 and Table 1. We find that the inter-event time distribution in Fig. 5.c reflects strong inhomogeneities as it takes the form of a scale-free function with an exponent value γ = 1.3, satisfying the relation γ = µA + 1. As a result of the heterogeneous temporal behavior with memory involved, we detected spontaneously evolving long temporal correlations, as the autocorrelation function shows a power-law decay. Its exponent α = 0.7 (see Fig. 5.d) also satisfies the relation α + γ = 2 (see SI). The P(E) distribution also shows fat-tailed behavior for each investigated window size ranging from Δt = 1 to $2^{10}$ (see Fig. 5.a). The overall signal here is an aggregation of correlated long bursty trains and uncorrelated single events. This explains the weak Δt dependence of P(E) for larger window sizes, where more independent events are merged with events of correlated bursty cascades, which induces a deviation of P(E) from the expected scale-free behavior. The P(E) distributions can be characterized by an exponent β = 3.0, in agreement with the analytical result in (5), which confirms the presence of correlated bursty cascades. In addition, even if we fix the values of β and γ, the α exponent satisfies the condition α < γ < β, an inequality observed in empirical data (see Table 1).
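The exponent relations quoted above can be checked by direct substitution of the simulation parameters µA = 0.3 and ν = 2.0. The short calculation below takes relation (5) to read β = ν + 1, which is the reading implied by the reported values, and should be seen as a consistency check rather than a derivation.

```latex
\begin{align}
\gamma &= \mu_A + 1 = 0.3 + 1 = 1.3, \\
\alpha &= 2 - \gamma = 2 - 1.3 = 0.7, \\
\beta  &= \nu + 1 = 2.0 + 1 = 3.0, \\
\alpha &< \gamma < \beta \quad \Longleftrightarrow \quad 0.7 < 1.3 < 3.0 .
\end{align}
```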

Discussion

In the present study we introduced a new measure, the number of correlated events in bursty cascades, which detects correlations and heterogeneity in temporal sequences. It offers a better characterization of correlated heterogeneous signals, capturing a behavior that cannot be observed from the inter-event time distribution and the autocorrelation function. The discussed strongly heterogeneous dynamics was documented in a wide range of systems, from human dynamics to natural phenomena. The time evolution of these systems was found to be driven by temporal correlations that induced scale-invariant distributions of the burst lengths. This scale-free feature holds for each studied system and can be characterized by different system-dependent exponents, indicating a new universal property of correlated temporal patterns emerging in complex systems.

We found that the bursty trains can be explained in terms of memory effects, which can account for the heterogeneous temporal behavior. In order to better understand the dynamics of temporally correlated bursty processes at single entity level we introduced a phenomenological model that captures the common features of the investigated empirical systems and helps us understand the role they play during the temporal evolution of heterogeneous processes.

Methods

Data processing

To study correlated human behavior we selected three datasets containing time-stamped records of communication through different channels for a large number of individuals. For each user we extract the sequence of outgoing events, as we are interested in the correlated behavior of single entities. The datasets we have used are as follows: (a) A mobile-call dataset from a European operator covering ~$325 \times 10^6$ voice call records of ~$6.5 \times 10^6$ users during 120 days48. (b) Text message records from the same dataset consisting of $125.5 \times 10^6$ events between the same number of users. Note that to consider only trusted social relations these events were executed between users who mutually called each other at least one time during the examined period. Consecutive text messages of the same user with waiting times smaller than 10 seconds were considered as a single multipart message49, so that the P(tie) and A(τ) functions do not take values smaller than 10 seconds in Fig. 2.b. (c) Email communication sequences of 2,997 individuals including $20.2 \times 10^4$ events during 83 days26. From the email sequence the multicast emails (consecutive emails sent by the same user to many others with inter-event time 0) were removed in order to study temporally separated communication events of individuals.

To study earthquake sequences we used a catalog that includes all earthquake events in Japan with magnitude larger than two between 1st July 1985 and 31st December 1998 (ref. 50). We considered each recorded earthquake as a unique event regardless of whether it was a main-shock or an after-shock. For the single station measurement we collected a time-ordered list of earthquakes with epicenters detected in the same region7,21 (for other event collection methods see SI). The resulting data consists of 198,914 events at 238 different regions.

The utilized neuron firing sequences consist of 31,934 outgoing firing events of 1,052 single neurons, which were collected with 2 millisecond resolution from rat hippocampal slices using fMCI techniques51,52.

Random shuffling of inter-event times of real sequences

To generate independent event sequences from real data in Fig. 2 and 3 (empty symbols) we randomly shuffled the inter-event times of entities (persons, locations and neurons), allowing tie values to be exchanged between any entities but keeping the original event number unchanged for each of them. This way the original inter-event time and node strength distributions remain unchanged, but we obtain null-model sequences in which all temporal correlations are destroyed. The aim of this calculation was twofold: to show on real data that the autocorrelation function still scales as a power law after temporal correlations are removed, and that the P(E) distribution decays exponentially for uncorrelated signals. The presented P(E) distributions were calculated with one Δt window size to demonstrate this behaviour.
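The shuffling procedure can be sketched as follows. This is one possible reading of the description: all inter-event times are pooled across entities, shuffled, and dealt back so that each entity keeps its original number of events. Keeping each entity's first event time fixed is an assumption made here for concreteness, and the function name is hypothetical.

```python
import random


def shuffle_inter_event_times(sequences, seed=0):
    """Null model: pool the inter-event times of all entities, shuffle
    them, and rebuild each entity's sequence with its original number of
    events. Each input sequence is a sorted list of event times."""
    rng = random.Random(seed)
    gaps_per_entity = [
        [b - a for a, b in zip(seq, seq[1:])] for seq in sequences
    ]
    pool = [g for gaps in gaps_per_entity for g in gaps]
    rng.shuffle(pool)                      # destroys gap-to-gap ordering

    shuffled, pos = [], 0
    for seq, gaps in zip(sequences, gaps_per_entity):
        if not seq:
            shuffled.append([])
            continue
        new_seq = [seq[0]]                 # keep the first event time
        for g in pool[pos:pos + len(gaps)]:
            new_seq.append(new_seq[-1] + g)
        pos += len(gaps)
        shuffled.append(new_seq)
    return shuffled
```

Because the pooled gaps are only permuted, the overall inter-event time distribution and the event count of every entity are preserved exactly, while any dependence between consecutive gaps is removed.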

References

Corral, Á. Long-Term Clustering, Scaling and Universality in the Temporal Occurrence of Earthquakes. Phys. Rev. Lett. 92, 108501 (2004).

Wheatland, M. S. & Sturrock, P. A. The Waiting-Time Distribution of Solar Flare Hard X-ray Bursts. Astrophys. J. 509, 448 (1998).

Kemuriyama, T. et al. A power-law distribution of inter-spike intervals in renal sympathetic nerve activity in salt-sensitive hypertension-induced chronic heart failure. BioSystems 101, 144–147 (2010).

Barabási, A.-L. The origin of bursts and heavy tails in human dynamics. Nature 435, 207–211 (2005).

Oliveira, J. G. & Barabási, A.-L. Human dynamics: Darwin and Einstein correspondence patterns. Nature 437, 1251 (2005).

Barabási, A.-L. Bursts: The Hidden Pattern Behind Everything We Do (Dutton Books, 2010).

Bak, P., Christensen, K., Danon, L. & Scanlon, T. Unified Scaling Law for Earthquakes. Phys. Rev. Lett. 88, 178501 (2002).

Bak, P. How Nature Works: The Science of Self-Organized Criticality (Copernicus, Springer-Verlag New York, 1996).

Jensen, H. J. Self-Organized Criticality: Emergent Complex Behavior in Physical and Biological Systems (Cambridge University Press, Cambridge, 1996).

Paczuski, M., Maslov, S. & Bak, P. Avalanche dynamics in evolution, growth and depinning models. Phys. Rev. E. 53, 414 (1996).

Zapperi, S., Lauritsen, K. B. & Stanley, H. E. Self-Organized Branching Processes: Mean-Field Theory for Avalanches. Phys. Rev. Lett. 75, 4071 (1995).

Beggs, J. M. & Plenz, D. Neuronal Avalanches in Neocortical Circuits. J. Neurosci. 23, 11167–11177 (2003).

Lippiello, E., de Arcangelis, L. & Godano, C. Influence of Time and Space Correlations on Earthquake Magnitude. Phys. Rev. Lett. 100, 038501 (2008).

de Arcangelis, L., Godano, C., Lippiello, E. & Nicodemi, M. Universality in Solar Flare and Earthquake Occurrence. Phys. Rev. Lett. 96, 051102 (2006).

Brunk, G. G. Self organized criticality: A New Theory of Political Behaviour and Some of Its Implications. B. J. Pol. S. 31, 427–445 (2001).

Ramos, R. T., Sassi, R. B. & Piqueira, J. R. C. Self-organized criticality and the predictability of human behavior. New. Id. Psy. 29, 38–48 (2011).

Kepecs, A. & Lisman, J. Information encoding and computation with spikes and bursts. Network: Comput. Neural. Syst. 14, 103–18 (2003).

Grace, A. A. & Bunney, B. S. The control of firing pattern in nigral dopamine neurons: burst firing. J. Neurosci. 4, 2877–2890 (1984).

Smalley, R. F. et al. A fractal approach to the clustering of earthquakes: Applications to the seismicity of the New Hebrides. Bull. Seism. Soc. Am. 77, 1368–1381 (1987).

Udias, A. & Rice, J. Statistical analysis of microearthquake activity near San Andreas geophysical observatory, Hollister, California. Bull. Seism. Soc. Am. 65, 809–827 (1975).

Zhao, X. et al. A non-universal aspect in the temporal occurrence of earthquakes. New. J. Phys. 12, 063010 (2010).

Omori, F. On the aftershocks of earthquakes. J. Coll. Sci. Imp. Univ. Tokyo 7, 111–216 (1894).

Utsu, T. A statistical study of the occurrence of aftershocks. Geophys. Mag. 30, 521–605 (1961).

Vázquez, A. et al. Modeling bursts and heavy tails in human dynamics. Phys. Rev. E 73, 036127 (2006).

Goh, K.-I. & Barabási, A.-L. Burstiness and memory in complex systems. Europhys. Lett. 81, 48002 (2008).

Eckmann, J., Moses, E. & Sergi, D. Entropy of dialogues creates coherent structures in e-mail traffic. Proc. Natl. Acad. Sci. (USA) 101, 14333–14337 (2004).

Pica Ciamarra, M., Coniglio, A. & de Arcangelis, L. Correlations and Omori law in spamming. Europhys. Lett. 84, 28004 (2008).

Ratkiewicz, J., Fortunato, S., Flammini, A., Menczer, F. & Vespignani, A. Characterizing and Modeling the Dynamics of Online Popularity. Phys. Rev. Lett. 105, 158701 (2010).

Malmgren, R. D. et al. A Poissonian explanation for heavy tails in e-mail communication. Proc. Natl. Acad. Sci. 105, 18153–18158 (2008).

Wu, Y. et al. Evidence for a bimodal distribution in human communication. Proc. Natl. Acad. Sci. 107, 18803–18808 (2010).

Jo, H.-H., Karsai, M., Kertész, J. & Kaski, K. Circadian pattern and burstiness in mobile phone communication. New J. Phys. 14, 013055 (2012).

Cattuto, C., Van den Broeck, W., Barrat, A., Colizza, V., Pinton, J. F. & Vespignani, A. Dynamics of person-to-person interactions from distributed RFID sensor networks. PLoS ONE 5, e11596 (2010).

Takaguchi, T. et al. Predictability of conversation partners. Phys. Rev. X 1, 011008 (2011).

Stehlé, J., Barrat, A. & Bianconi, G. Dynamical and bursty interactions in social networks. Phys. Rev. E 81, 035101 (2010).

Zhao, K., Stehlé, J., Bianconi, G. & Barrat, A. Social network dynamics of face-to-face interactions. Phys. Rev. E 83, 056109 (2011).

Saichev, A. & Sornette, D. Universal Distribution of Interearthquake Times Explained. Phys. Rev. Lett. 97, 078501 (2006).

Hansen, A. & Mathiesen, J. Survey of Scaling Surface in Modelling Critical and Catastrophic Phenomena in Geoscience pp 93–110 Series: Lecture Notes in Physics, Vol. 705 (Springer, 2006).

Turnbull, L., Dian, E. & Gross, G. The string method of burst identification in neuronal spike trains. J. Neurosci. Meth. 145, 23–35 (2005).

Anteneodo, C., Malmgren, R. D. & Chialvo, D. R. Poissonian bursts in e-mail correspondence. Eur. Phys. J. B 75, 389–394 (2010).

Helmstetter, A. Is Earthquake Triggering Driven by Small Earthquakes? Phys. Rev. Lett. 91, 058501 (2003).

Gutenberg, B. & Richter, C. F. Seismicity of the Earth and Associated Phenomena (Princeton Univ. Press., Princeton, 1949).

Bunde, A., Eichner, J. F., Kantelhardt, J. W. & Havlin, S. Long-Term Memory: A Natural Mechanism for the Clustering of Extreme Events and Anomalous Residual Times in Climate Records. Phys. Rev. Lett. 94, 048701 (2005).

Livina, V. N., Havlin, S. & Bunde, A. Memory in the Occurrence of Earthquakes. Phys. Rev. Lett. 95, 208501 (2005).

Busemeyer, J. R. & Townsend, J. T. Decision Field Theory: A dynamic cognition approach to decision making. Psychological Review 100, 432–459 (1993).

Nicholls, J. G., Martin, A. R., Wallace, B. G. & Fuchs, P. A. From Neuron to Brain (4th ed. Sinauer Associates Inc., 1991).

Kandel, E., Schwartz, J. & Jessell, T. M. Principles of Neural Science (Elsevier, New York, 1991).

Kleinberg, J. Bursty and Hierarchical Structure in Streams. Data Mining and Knowledge Discovery 7, 373–397 (2003).

Karsai, M. et al. Small but slow world: How network topology and burstiness slow down spreading. Phys. Rev. E. 83, 025102(R) (2011).

Kovanen, L. Structure and dynamics of a large-scale complex network. Master's thesis, Aalto University (Helsinki, 2009).

Japan University Network Earthquake Catalog File, Earthquake Research Institute, University of Tokyo.

Ikegaya, Y. et al. Synfire chains and cortical songs: Temporal modules of cortical activity. Science 304, 559–564 (2004).

Takahashi, N. et al. Watching neuronal circuit dynamics through functional multineuron calcium imaging (fMCI). Neurosci. Res. 58, 219–25 (2007).

Acknowledgements

We thank J. Saramäki, H.-H. Jo, M. Kivelä, C. Song, D. Wang, L. de Arcangelis and I. Kovács for comments and useful discussions. Financial support from the EU's FP7 FET-Open ICTeCollective Project No. 238597 and from TEKES (FiDiPro) is acknowledged.

Author information

Contributions

All authors designed the research and participated in the writing of the manuscript. MK analysed the empirical data and did the numerical calculations. MK and JK completed the corresponding analytical derivations.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/3.0/

About this article

Cite this article

Karsai, M., Kaski, K., Barabási, AL. et al. Universal features of correlated bursty behaviour. Sci Rep 2, 397 (2012). https://doi.org/10.1038/srep00397

DOI: https://doi.org/10.1038/srep00397
