Abstract

We used a novel computerized decision-making task to compare the decision-making behavior of chronic amphetamine abusers, chronic opiate abusers, and patients with focal lesions of orbital prefrontal cortex (PFC) or dorsolateral/medial PFC. We also assessed the effects of reducing central 5-hydroxytryptamine (5-HT) activity using a tryptophan-depleting amino acid drink in normal volunteers. Chronic amphetamine abusers showed sub-optimal decisions (correlated with years of abuse), and deliberated for significantly longer before making their choices. The opiate abusers exhibited only the second of these behavioral changes. Importantly, both sub-optimal choices and increased deliberation times were evident in the patients with damage to orbitofrontal PFC but not other sectors of PFC. Qualitatively, the performance of the subjects with lowered plasma tryptophan was similar to that associated with amphetamine abuse, consistent with recent reports of depleted 5-HT in the orbital regions of PFC of methamphetamine abusers. Overall, these data suggest that chronic amphetamine abusers show similar decision-making deficits to those seen after focal damage to orbitofrontal PFC. These deficits may reflect altered neuromodulation of the orbitofrontal PFC and interconnected limbic-striatal systems by both the ascending 5-HT and mesocortical dopamine (DA) projections.


Main

The ascending mesostriatal and mesocortical dopamine (DA) systems are widely believed to provide an important modulatory influence on the cognitive functions supported by the prefrontal cortex (PFC) and its associated striatal structures. Thus, a wealth of evidence involving patient populations such as those with Parkinson's disease (Bowen et al. 1975; Downes et al. 1989) and schizophrenia (Park and Holzman 1992), as well as non-human primates (Brozoski et al. 1979; Sawaguchi and Goldman-Rakic 1991), indicates that altered dopaminergic function is associated with some of the cognitive impairments typically seen after damage to PFC (e.g., Milner 1964; Owen et al. 1991; Freedman and Oscar-Berman 1996). Further evidence that these cognitive changes are the consequence of specifically altered dopaminergic modulation of the PFC and striatum, and possibly of the “functional loops” operating between the two (Alexander et al. 1986), is seen in experimental demonstrations that at least some of these impairments can be ameliorated by pharmacological agents acting on the mesostriatal and mesocortical DA pathways (Daniel et al. 1991; Lange et al. 1992; see Arnsten 1997 for review).

The existence of such neuropsychological changes raises the possibility that subjects with a history of chronic abuse of stimulant drugs, such as amphetamine and cocaine, will themselves display the kinds of cognitive deficits previously demonstrated in patients with PFC or striatal damage. Consistent with this possibility, there is now considerable evidence that chronic administration of amphetamine can induce enduring reductions in monoamine levels in the striatum and PFC of non-human primates and rats, and that, under at least some schedules of administration, neurotoxic effects (i.e., permanent axonal or nerve terminal damage) can occur (Seiden et al. 1975; Hotchkiss and Gibb 1980; Ricaurte et al. 1980, 1984; Wagner et al. 1980; see Seiden and Ricaurte 1987 for review; Ryan et al. 1990; Melega et al. 1996; Gibb et al. 1994; Villemagne et al. 1998). Moreover, recent work has now extended this evidence by demonstrating reduced levels of both DA in the striatum and 5-hydroxytryptamine (5-HT) in the orbitofrontal cortex of human methamphetamine abusers (Wilson et al. 1996a). Although chronic cocaine administration does not appear to have specifically neurotoxic consequences (Ryan et al. 1988; Wilson et al. 1991), moderate reductions in striatal DA have been found in post-mortem cocaine abusers (Wilson et al. 1996b). Moreover, studies using ligand-binding PET techniques in detoxified cocaine abusers have demonstrated reduced striatal DA (Volkow et al. 1997) and D2 receptor availability, with the latter of these correlating with reduced orbitofrontal metabolism (Volkow et al. 1988, 1991, 1993).

DECISION MAKING AND ORBITOFRONTAL CORTEX

Of the neuropsychological tasks impaired by changes in dopaminergic function, those that fall under the rubric of “executive function” seem especially sensitive (see Robbins et al. 1994 for review). Such tasks typically involve a requirement to organize or control a variety of component cognitive processes, such as those involving the maintenance of short-term information or the modulation of cognitive set, in order to complete more composite cognitive operations relating to deferred or distant goals. In recent years, attention has begun to move away from the role of the dorsolateral areas of PFC in supporting these executive functions toward the more mysterious contributions of the orbital, ventromedial regions (e.g., Eslinger and Damasio 1985; Saver and Damasio 1991). The scientific challenge posed by patients who have sustained damage to these areas is that they are more likely to exhibit difficulties in social cognition and in the decisions of everyday life, in the absence of the marked cognitive deficits more frequently shown by patients with damage to dorsal regions of PFC.

Although historically it has proved difficult to isolate well-defined cognitive deficits in orbital PFC patients, innovative experiments recently conducted by Bechara and colleagues have now demonstrated a consistent deficit in the decision-making cognition of patients with ventromedial PFC lesions, a deficit that is essentially absent in patients with dorsolateral or dorsomedial PFC damage (Bechara et al. 1994, 1996, 1998; see Damasio 1996 for review). These advances suggest that it may also be possible to probe more effectively the orbitofrontal dysfunction in patients with established neuropsychiatric disorders and, additionally, begin to investigate the neuroanatomical and neurochemical interactions with orbital PFC that collectively mediate decision-making cognition.

DECISION MAKING AND MONOAMINE NEUROTRANSMITTER SYSTEMS

Clinical experience indicates that drug abusers display many of the behavioral traits previously associated with patients who have suffered damage to orbital PFC (cf. Eslinger and Damasio 1985). Thus, abusers often show a lack of personal and social judgment, a reduced concern for the consequences of their actions, and difficulties with decision making. With this in mind, we set out to test the following hypothesis: if chronic amphetamine administration is associated with altered monoaminergic modulation of orbital PFC and its associated limbic structures (including, for example, the ventral striatum and amygdala), then amphetamine abusers should show the same deficits in decision-making cognition as orbital PFC patients (e.g., Bechara et al. 1994).

Before describing our decision-making paradigm, we first mention two additional comparisons. The first concerns the generality of any putative cognitive deficits found in chronic amphetamine abusers. It remains possible that abusers of other substances, such as alcohol or barbiturates, may show similar or different patterns of cognitive impairment. We addressed this issue in the present study by including an age- and verbal IQ-matched sample of chronic opiate abusers as a positive control group. Chronic opiate abusers are a particularly appropriate control group because, while opiates probably exert their reinforcing effects via their action on the mesolimbic DA system (see Koob and Bloom 1988; DiChiara and Imperato 1988; Wise and Rompré 1989), there is little evidence that they produce enduring changes in DA transmission or cellular neurotoxicity.

The second issue concerns the possible role of the ascending 5-HT projection systems in the decision-making behavior of both drug-abusing and other patient groups. Several studies suggest that repeated amphetamine treatment, especially in its methylated form, produces enduring changes in 5-HT as well as DA transmission (Seiden et al. 1975; Preston et al. 1985; Fukui et al. 1989; Woolverton et al. 1989; Axt and Molliver 1991). Indeed, it has been suggested that those limbic regions innervated by 5-HT projections are especially sensitive to the effects of repeated amphetamine administration (Ricaurte et al. 1980; Seiden and Ricaurte 1987), raising the possibility that one mechanism for altered decision making associated with chronic amphetamine abuse in humans might be altered serotonergic modulation of the ventral PFC and its interconnected structures (Groenewegen et al. 1997). Moreover, a wealth of clinical evidence indicates that sociopathy involving altered decision making and impulsive, aggressive behaviors is associated with reduced 5-HT metabolites in CSF (e.g., Linnoila et al. 1983; Virkkunen et al. 1994). We explored the role of 5-HT in decision making using a tryptophan-depleting procedure in young, non-drug-abusing subjects, compared with an age- and verbal IQ-matched control group.

BEHAVIORAL INDICES OF DECISION MAKING

The paradigm developed by Bechara and colleagues requires subjects to sample repeatedly from four decks of cards and, after each selection, to receive a given amount of reward in facsimile money. Unknown to the subjects, two of the decks produce large “payouts” but also occasional larger penalties, whereby reward is handed back to the experimenter. By contrast, the two remaining decks produce small rewards but only occasional smaller penalties so that, over the course of the test, subjects will maximize their reward by drawing cards consistently from these more conservative decks. The core result is that patients with damage to the ventromedial, but not the dorsolateral or dorsomedial, sectors of PFC persist in drawing cards from the high payout/high penalty decks despite the ultimately punishing consequences of this behavior (see Damasio 1996 for review).
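The asymmetry between the two kinds of deck can be made concrete with a little arithmetic. The sketch below uses hypothetical payoff values chosen only for illustration (100 per card with heavy penalties for the high-payout decks, 50 per card with light penalties for the conservative decks) and hypothetical deck labels A to D; these are assumptions, not the schedule used by Bechara and colleagues.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deck:
    name: str                  # hypothetical deck label
    reward_per_card: int       # facsimile money won on every draw (assumed value)
    penalty_per_10_cards: int  # total money handed back per 10 draws (assumed value)


def net_per_10_cards(deck: Deck) -> int:
    """Net gain (positive) or loss (negative) over 10 draws from one deck."""
    return 10 * deck.reward_per_card - deck.penalty_per_10_cards


# Illustrative schedule only: two high payout/high penalty decks and
# two small payout/small penalty decks.
DECKS = [
    Deck("A", reward_per_card=100, penalty_per_10_cards=1250),
    Deck("B", reward_per_card=100, penalty_per_10_cards=1250),
    Deck("C", reward_per_card=50, penalty_per_10_cards=250),
    Deck("D", reward_per_card=50, penalty_per_10_cards=250),
]

if __name__ == "__main__":
    for deck in DECKS:
        print(deck.name, net_per_10_cards(deck))
```

Under these assumed values, every block of 10 draws loses 250 from decks A and B but gains 250 from decks C and D, so a subject drawn to the larger per-card payouts ends up worse off overall.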

One attractive feature of this task is that it models an important aspect of the real-life decisions that patients with ventromedial PFC lesions find so difficult. Typically, these decisions involve choices between actions associated with differing magnitudes of reward and punishment under conditions in which the underlying contingencies relating actions to relevant outcomes remain hidden. Accordingly, the contingencies of Bechara's card-gambling task are also hidden in order to capture this basic uncertainty. However, this feature of the task makes it difficult to assess how an individual patient's pattern of choices might alter across a range of well-defined and clearly presented contingencies representing more or less favorable opportunities to earn reward. Consequently, the precise behavioral character of the decision-making deficits shown by patients with orbitofrontal lesions remains severely underspecified. For example, little is known about the extent to which impaired decision making in such patients is independent of other hypothesized behavioral changes associated with orbitofrontal damage, such as disinhibition and disrupted “impulse control.”

In this experiment, we introduce a novel decision-making task in which subjects make choices or decisions between contingencies that are presented in a readily comprehensible visual format, in order to assess the degree to which deficits in decision making shown by orbital PFC patients, and possibly chronic drug abusers, are sensitive to the quality of information available about the likely identity of the reinforced response. Several dependent measures are collected simultaneously including the speed of decision making (as reflected in the deliberation times associated with deciding between the available response options); the quality of decision making (as reflected in the propensity of the subject to choose the most adaptive response), and finally, the willingness of subjects to put at “risk” some of their already accumulated reward in the hope of earning yet more reinforcement. In this way, we hope to improve the characterization of decision-making deficits in patients with orbital PFC damage, chronic drug abusers, and normal volunteers who have undergone a low-tryptophan challenge.

To anticipate the results, we found significant parallels between the pattern of choices exhibited by chronic amphetamine abusers, chronic opiate abusers, and patients with focal lesions of the orbital PFC. In particular, chronic amphetamine abusers showed a pattern of decision making that was especially similar to that seen after orbital PFC damage. Moreover, the possibility that this might be mediated by serotonergic dysregulation within the orbital PFC was highlighted by the additional finding that aspects of the amphetamine abusers’ decision-making deficits were modeled by acutely reduced tryptophan in normal volunteers. In general, the deficits were not associated with increased “impulsivity”, but were indicative of difficulties in resolving competing choices.

METHODS

This study was approved by the local research ethics committee, and all subjects gave informed consent.

Amphetamine Users

Eighteen patients were recruited from the Cambridge Drug and Dependency Unit (CDDU) on the basis of the following criteria: amphetamine as the major drug of abuse for at least 3 years; satisfaction of the DSM-IV criteria for amphetamine dependence; no history of alcohol dependence syndrome; no acute intoxication or physical illness; no history of major psychiatric illness (e.g., affective disorders); and ongoing attendance at the CDDU with a co-worker. Duration of abuse ranged from 3 to 29 years (mean = 13.4 ± 1.7). Eight patients were using “street” amphetamines (est. mean dose per day = 1.6 ± 0.4 g), while 10 were receiving regular prescriptions of Dexedrine (mean dose per day = 36.5 ± 9.1 mg). All patients reported at least some use of other substances. In particular, use of cannabis was especially common (16 patients), along with use of opiates (10 patients). Use of MDMA and alcohol was more limited (7 and 5 patients, respectively). Benzodiazepines were not used extensively (2 patients). Finally, 10 patients reported intravenous injection as their preferred method of administering amphetamine. Demographic and psychometric information is shown in Table 1. Particular care was taken to avoid testing any amphetamine abuser whose last intake of amphetamine was within 12 h of the testing session (as indicated by self-report, the individual's behavior, and an assessment by an expert clinician who also checked for any signs of withdrawal dysphoria).

Opiate Users

Thirteen opiate abusers, also recruited from the CDDU, participated in the study. The selection criteria were similar to those adopted for the amphetamine abusers: opiates, usually heroin, as the major drug of abuse for at least 3 years; no history of alcohol dependence syndrome; no acute intoxication or physical illness; no history of major psychiatric illness (e.g., affective disorders); and ongoing attendance at the CDDU with a co-worker. Duration of abuse ranged from 4 to 30 years (mean = 13.9 ± 2.5; see Table 1). Only 3 patients were currently using “street” heroin (est. mean dose per day = 0.3 ± 0.1 g), while 10 patients were receiving regular prescriptions of methadone (mean dose per day = 39.5 ± 9.4 mg). All patients reported at least some use of cannabis. However, abuse of alcohol was quite limited (only 2 patients), as was use of amphetamine and of MDMA (1 patient each). As with the amphetamine abusers, benzodiazepines were not heavily used (3 patients). Finally, 10 patients reported intravenous injection as their preferred method of administering opiates. Other demographic and psychometric data are shown in Table 1. As with the amphetamine abusers, careful assessment makes it unlikely that any of the selected opiate abusers were tested while acutely intoxicated or experiencing withdrawal dysphoria.

Frontal Patients

Twenty patients with focal damage to the frontal lobes participated (see Table 1). Thirteen of these had undergone surgery for intractable epilepsy or tumor, while 6 had sustained their lesions through stroke. One patient had an unoperated right-sided oligodendroglioma. Of the epilepsy patients, there were 3 cases each of left-sided and right-sided resection. Of the tumor patients, there were 2 cases of left-sided meningioma removal, 1 case of right-sided astrocytoma removal, 1 case of right-sided haemangioma removal, and 2 cases of meningioma removal affecting the olfactory groove; one of these involved bilateral cortical excision, while the other involved right-sided excision with some cortical damage on the left. Finally, one patient had undergone craniopharyngioma removal through a right-sided fronto-temporal approach. In all cases, cortical loss was confined to the frontal lobes.

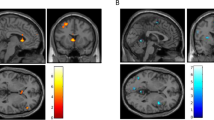

For the purposes of the study, the patients were divided into two groups of 10. In one group (ORB-PFC patients), cortical damage extended into the orbital sectors of the PFC, while in the other group (DL/M-PFC patients), damage was confined to more dorsolateral or dorsomedial areas. The two groups were reasonably well matched in terms of the mean number of years since lesion (see Table 1). Descriptions of the location and extent of individual cortical lesions are set out below.

ORB-PFC Patients

Patient 1: cortical loss involving most of the inferior left prefrontal region, including the gyrus rectus and orbital gyri, but sparing the middle frontal gyrus on the lateral surface and the cingulate gyrus on the medial surface.

Patient 2: cortical loss limited to posterior orbitolateral PFC on the right. The medial surface, and ventral parts of the lateral surface, are preserved.

Patient 3: cortical loss in left orbital PFC, involving the gyrus rectus medially and extending laterally along the entire extent of the orbital surface. There is also an additional lesion centered on the posterior operculum on the left.

Patient 4: damage predominantly to right ventromedial sectors of PFC adjacent to the olfactory groove, extending into inferior lateral cortex.

Patient 5: cortical loss in right inferior medial regions, extending into the frontal pole. The anterior horn of the ventricle is slightly deformed, with some displacement of the head of the caudate nucleus.

Patient 6: cortical damage involving the anterior/posterior extent of left ventromedial PFC and extending dorsally to the level of the genu.

Patient 7: extensive damage to right orbital PFC, extending to the region of the medial frontal gyrus and, more laterally, the middle frontal gyrus.

Patient 8: loss of inferior medial and polar cortex on the left, incorporating the gyrus rectus on the medial surface and extending back to the operculum on the lateral surface.

Patient 9: limited damage to inferior left PFC, incorporating the anterior half of the gyrus rectus and the orbital gyri. There is slight cortical loss in the most anterior area of the pole on the medial surface.

Patient 10: extensive bilateral damage to ventromedial areas of PFC, extending to below the cingulate gyri medially and incorporating lateral orbital cortex.

DL/M-PFC Patients

Patient 11: limited damage in the anterior part of the right sylvian region. Superior lateral and anterior aspects of the PFC, including the orbital cortex, are unaffected.

Patient 12: large area of cortical loss from right superior dorsolateral PFC, including and extending forward from the precentral gyrus. Medial and frontopolar areas are spared.

Patient 13: cortical damage within posterior lateral PFC on the left, centered on the inferior frontal gyrus.

Patient 14: cortical loss in posterior right PFC, just above the sylvian fissure and within the region of the inferior frontal gyrus. Orbitolateral cortex is unaffected.

Patient 15: extensive damage to right superior lateral and medial prefrontal regions, completely sparing the frontal pole anteriorly but including the superior operculum posteriorly.

Patient 16: cortical damage in the medial portion of right PFC, beginning at the body of the corpus callosum and extending upward into superior medial cortex. Damage extends further through the white matter.

Patient 17: large area of left-sided damage, extending from the central sulcus to anterior superior cortex. On the medial surface, the lesion extends to the body of the corpus callosum but no further; on the lateral surface, it is confined to superior areas.

Patient 18: extensive cortical damage on the right, beginning just anterior to the central sulcus and incorporating superior medial and lateral areas. Damage extends to the level of the genu on the medial surface. Polar and orbital cortices are spared.

Patient 19: loss of cortical tissue in right dorsolateral areas, extending to the superior operculum. All medial, cingulate, and orbital gyri are preserved.

Patient 20: loss of cortex in left superior lateral areas anterior to the precentral gyrus. Damage extends inferiorly to the middle frontal gyrus.

Control Subjects and Matching Groups

Twenty-six healthy volunteers were recruited as control subjects. This group was selected to match closely the drug abuser groups in terms of both age and premorbid verbal IQ, as assessed using the National Adult Reading Test (NART; Nelson 1982). Exclusion criteria included any history of neurological or psychiatric morbidity, head injury, or substance abuse. One-way analyses of variance (ANOVAs) including the amphetamine abusers, opiate abusers, and control subjects showed no significant differences in age (df = 2, 54; F < 1) or verbal IQ (df = 2, 54; F = 1.8). Mean duration of abuse was also closely matched between the amphetamine and opiate abusers (13.4 ± 1.7 vs 13.9 ± 2.5 years; df = 1, 29; F < 1). Because both groups of frontal lobe patients tended to be somewhat older than the control subjects, their performance was assessed in two ways. First, one set of analyses compared the ORB-PFC patients, the DL/M-PFC patients, and the main group of controls, with age and verbal IQ included as covariates. Second, sub-groups of control subjects were selected to serve as specific controls for the ORB-PFC and DL/M-PFC patient groups separately (see Table 1). One-way ANOVAs confirmed that there were no significant differences in age or verbal IQ between either group of frontal lobe patients and its respective control group (df = 1, 20; all Fs < 1).
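For readers less familiar with the matching analyses, the sketch below shows how a one-way ANOVA F ratio and its degrees of freedom are obtained from the standard textbook formulas. It is an illustration, not the software used in the study, and the example scores are hypothetical; only the group sizes (18 amphetamine abusers, 13 opiate abusers, 26 controls) come from the text, and they reproduce the df = 2, 54 reported above.

```python
from statistics import fmean


def one_way_anova(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for k independent groups of scores."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    means = [fmean(g) for g in groups]
    grand_mean = fmean([x for g in groups for x in g])
    # Between-groups sum of squares: squared deviation of each group mean from
    # the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-groups sum of squares: squared deviation of each score from its group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return f_ratio, df_between, df_within


# Hypothetical scores with the group sizes used above (18, 13, and 26 subjects).
example = [[float(i % 3) for i in range(n)] for n in (18, 13, 26)]
print(one_way_anova(example)[1:])  # → (2, 54)
```

The same function applied to two groups of 18 and 13 gives df = 1, 29, as in the comparison of abuse duration.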

Tryptophan-Depleted Subjects

Table 1 also details the mean ages and premorbid verbal IQs of the subject groups who participated in the tryptophan depletion experiment. There were no significant differences in either measure between the subjects who underwent tryptophan depletion and those who did not (df = 1, 29; both Fs < 1). Details of the composition of the low-tryptophan drink can be found in Young et al. (1985). Plasma total and free tryptophan levels were both significantly reduced in the experimental group compared with the placebo group (df = 1, 29; total: F = 96.0, p < .0001; free: F = 156.9, p < .0001). Thus, levels of plasma total and free tryptophan were 27.6 ± 2.5 μg/ml and 1.2 ± 0.07 μg/ml, respectively, in the placebo group, but only 2.4 ± 0.4 μg/ml and 0.12 ± 0.03 μg/ml in the experimental group.
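Expressed as a proportion, the depletion was substantial. The short calculation below uses only the group means reported above; the helper name is illustrative.

```python
def percent_reduction(placebo: float, depleted: float) -> float:
    """Reduction of the depleted-group mean relative to the placebo-group mean, in %."""
    return 100.0 * (placebo - depleted) / placebo


total = percent_reduction(27.6, 2.4)  # total plasma tryptophan, μg/ml
free = percent_reduction(1.2, 0.12)   # free plasma tryptophan, μg/ml
print(f"total: {total:.1f}%  free: {free:.1f}%")  # → total: 91.3%  free: 90.0%
```

That is, mean total and free plasma tryptophan in the depleted group were roughly 91% and 90% lower than in the placebo group.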

Decision-Making Task

Figure 1 shows a typical display from the decision-making task. The subject was told that the computer had hidden, on a random basis, a yellow token inside one of the red or blue boxes arrayed at the top of the screen and that he/she had to decide whether this token was hidden inside a red box or blue box. The subject indicated this decision by touching either the response panel marked “RED” or the response panel marked “BLUE.” After making this initial choice, the subject attempted to increase a total points score, shown in green ink toward the left-hand side of the display, by placing a “bet” on this choice being correct. The available bets appeared in a sequence, one after another, centered in the box positioned toward the right-hand side of the display. Each bet was displayed for a period of 5 s before being replaced by its successor, and the subject could select any bet by touching the box in which the sequence appeared. Immediately following such a selection, one of the red or blue boxes opened to reveal the location of the token, accompanied by either a “You win!” message and a short rising musical scale, or a “You lose!” message and a low tone. If the subject chose the correct color, the bet placed was added to the total points score; if the subject chose the wrong color, the bet was subtracted. The subject was instructed to treat the points as being valuable and to accumulate as many as possible during the test. However, no monetary significance was attached to the total points accumulated by the end of the task.
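The scoring rule for a single trial can be sketched as follows. The function `play_trial`, its parameters, and the use of Python's `random.Random` to place the token uniformly among the boxes are assumptions for illustration; the article does not describe the task software at this level of detail.

```python
import random


def play_trial(n_red: int, n_blue: int, choice: str, bet: int, total: int,
               rng: random.Random) -> tuple[bool, int]:
    """Hide the token in one of n_red + n_blue boxes at random, then settle the bet.

    Returns (won, new_total): the bet is added to the total if the chosen
    colour ("RED" or "BLUE") hides the token and subtracted otherwise.
    """
    # Every box is equally likely, so P(token in a red box) = n_red / (n_red + n_blue).
    token_colour = "RED" if rng.randrange(n_red + n_blue) < n_red else "BLUE"
    won = choice == token_colour
    new_total = total + bet if won else total - bet
    return won, new_total


# Example: bet 50 of 100 points on red when 9 of the 10 boxes are red.
print(play_trial(9, 1, "RED", 50, 100, random.Random(1)))
```

With 9 red boxes and 1 blue box, a red choice wins on about 90% of trials in this sketch, which is what makes such a display a favorable opportunity to bet.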

The subject performed the task in two separate conditions. In one condition (ascending), the first bet offered was small but was replaced by larger and larger bets until the subject made a selection. In the other condition (descending), the first bet offered was large but was replaced by smaller and smaller bets. Each bet represented a fixed percentage of the current total points score, although this was never made explicit to the subject. Five bets were offered on each trial so that, in the ascending condition, the order of available bets was as follows: 5%, 25%, 50%, 75%, and 95%. (The bet shown in Figure 1 constitutes a bet of 50% of the current points total.) In the descending condition, this order was reversed. In both conditions, each bet was presented with a short tone whose pitch corresponded to the size of the bet: higher tones accompanied larger bets and lower tones accompanied smaller bets. If the subject failed to select a bet by the end of a sequence, the last bet was chosen automatically.
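The bet-offer schedule described above can be sketched as below. The helper names are hypothetical, and two details are assumptions not stated in the text: response time is measured from the onset of the first offer, and bets are rounded to whole points.

```python
from typing import Optional

BET_PERCENTAGES = (5, 25, 50, 75, 95)  # offer order in the ascending condition
OFFER_DURATION_S = 5.0                 # each bet stays on screen for 5 s


def bet_sequence(condition: str) -> tuple[int, ...]:
    """Order in which bet percentages are offered in each condition."""
    if condition == "ascending":
        return BET_PERCENTAGES
    if condition == "descending":
        return BET_PERCENTAGES[::-1]
    raise ValueError(f"unknown condition: {condition!r}")


def selected_percentage(condition: str, response_time_s: Optional[float]) -> int:
    """Percentage on offer when the subject touches the bet box.

    response_time_s is measured from the onset of the first offer (an assumption);
    None means no response, so the last bet in the sequence is taken automatically.
    """
    sequence = bet_sequence(condition)
    if response_time_s is None:
        return sequence[-1]
    index = min(int(response_time_s // OFFER_DURATION_S), len(sequence) - 1)
    return sequence[index]


def bet_points(percentage: int, total: int) -> int:
    """Points staked for a given percentage of the current total (rounding assumed)."""
    return round(total * percentage / 100)
```

Under this sketch, a subject who always responds during the first 5-s offer stakes 5% of the total in the ascending condition but 95% in the descending condition.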

Both the ascending and descending conditions consisted of four sequences of nine displays. At the start of each sequence, the subject was given 100 points and asked to increase this total as much as possible. If a subject's score fell to just one point, the current sequence ended and the next began. The order of presentation of the two conditions was counterbalanced across all subject groups. The same set of fixed, pseudo-random sequences was presented to all subject groups.
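The points trajectory within one sequence can be sketched as below. `run_sequence` is a hypothetical helper, and two details are assumptions: bets are rounded to whole points, and any total of one point or less ends the sequence.

```python
TRIALS_PER_SEQUENCE = 9  # nine displays per sequence
STARTING_POINTS = 100    # points given at the start of each sequence


def run_sequence(trials: list[tuple[int, bool]]) -> list[int]:
    """Return the running points total after each trial of one sequence.

    Each trial is (bet_percentage, won). The bet is a percentage of the current
    total, rounded to whole points (an assumption). The sequence ends early once
    the total falls to 1 point or below.
    """
    total = STARTING_POINTS
    history = []
    for bet_percentage, won in trials[:TRIALS_PER_SEQUENCE]:
        bet = round(total * bet_percentage / 100)
        total = total + bet if won else total - bet
        history.append(total)
        if total <= 1:
            break  # score has collapsed: move on to the next sequence
    return history


print(run_sequence([(50, True), (50, False)]))  # → [150, 75]
```

Because each bet is a percentage of the current total, a run of large losing bets exhausts the points quickly: two consecutive 95% losses take 100 points down to zero in this sketch.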

As discussed at the beginning of this article, three features of this task are important. First, by manipulating the ratio of red and blue boxes from trial to trial, it was possible to examine a subject's decision-making behavior over a variety of differentially weighted contingencies. For example, some ratios (e.g., 9 red : 1 blue) presented two contingencies that were quite unequal in terms of the probabilities associated with their respective outcomes. In contrast, other ratios (e.g., 4 red : 6 blue) presented contingencies that were more balanced. Thus, a subject's choice of contingency, speed of choice, and size of bet were expected to differ as a function of the ratio of red/blue boxes. Second, by allowing subjects to determine for themselves how much of their points score they wished to bet after each red/blue decision, we could assess individual willingness to place already-accumulated reinforcement at risk in the hope of acquiring more reward. For example, one might suppose that a ratio of 9 red : 1 blue represented an opportunity to bet more points on a red decision in order to gain more reward, while a ratio of 6 blue : 4 red represented a situation in which more conservative behavior might be appropriate. Finally, offering the bets in both an ascending and a descending order made it possible to distinguish merely impulsive behavior from genuine risk seeking (Miller 1992). If a subject were impulsive, in the sense of being unable to withhold manual responses to the sequence of bets as they were presented, then he/she would have chosen early bets in both the ascending and descending sequences. However, if a subject were actively risk seeking, then he/she would have chosen late bets in the ascending condition but early bets in the descending condition. Thus, a large difference between the mean percentage bet in these two conditions indicated impulsivity, whereas a small difference, with large bets in both conditions, indicated risk seeking.
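The contrast between the two bet orders can be summarized with a single difference score. The sketch below is illustrative: the function name and the example bet lists are hypothetical, and taking the signed difference (descending minus ascending) is an assumption, since the text does not specify a sign convention.

```python
from statistics import fmean


def bet_order_summary(ascending_bets: list[float],
                      descending_bets: list[float]) -> dict[str, float]:
    """Mean percentage bet per condition and their difference (descending - ascending)."""
    ascending_mean = fmean(ascending_bets)
    descending_mean = fmean(descending_bets)
    return {
        "ascending": ascending_mean,
        "descending": descending_mean,
        "difference": descending_mean - ascending_mean,
    }


# Hypothetical subject who always responds to the first offer (impulsive pattern).
impulsive = bet_order_summary([5, 5, 5], [95, 95, 95])
# Hypothetical subject who waits for large bets when ascending and takes them
# immediately when descending (risk-seeking pattern).
risk_seeking = bet_order_summary([95, 75, 95], [95, 95, 75])
print(impulsive["difference"], risk_seeking["difference"])
```

The impulsive profile yields a difference of 90 percentage points, whereas the risk-seeking profile yields a difference near zero while keeping the mean bet high in both conditions.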

The data analyses centered around three main measures:

- Speed of decision making: how long the subject takes to decide which color of box is hiding the token, as measured by the mean deliberation time.
- Quality of decisions: one particularly ineffective tactic in this task is to bet points repeatedly on the less likely of the two possible outcomes (i.e., to choose the color with fewer boxes as the one hiding the yellow token). We therefore measured the proportion of trials on which the subject chose the more likely outcome (i.e., the color with more boxes).
- Risk adjustment: the rate at which a subject increases the percentage of the available points put at risk in response to more favorable ratios of red:blue boxes (e.g., 9 red : 1 blue vs 4 red : 6 blue).
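The three measures could be computed from trial-level records along the following lines. The `Trial` record and all function names are hypothetical, and operationalizing risk adjustment as a least-squares slope of bet size on the majority-box count is an assumption; the text defines it only as a rate of increase.

```python
from statistics import fmean
from typing import NamedTuple


class Trial(NamedTuple):
    n_red: int              # number of red boxes (out of 10)
    n_blue: int             # number of blue boxes (out of 10)
    choice: str             # "RED" or "BLUE"
    deliberation_ms: float  # time taken to choose a colour
    bet_percentage: float   # percentage of current points staked


def chose_most_likely(t: Trial) -> bool:
    """True if the colour with more boxes was chosen (ratios are never 5:5)."""
    majority = "RED" if t.n_red > t.n_blue else "BLUE"
    return t.choice == majority


def speed_of_decision(trials: list[Trial]) -> float:
    """Mean deliberation time in ms."""
    return fmean(t.deliberation_ms for t in trials)


def quality_of_decisions(trials: list[Trial]) -> float:
    """Proportion of trials on which the more likely colour was chosen."""
    return sum(chose_most_likely(t) for t in trials) / len(trials)


def risk_adjustment(trials: list[Trial]) -> float:
    """Least-squares slope of percentage bet against the majority-box count (6 to 9).

    Assumption: only trials with a most-likely choice are used; this slope is one
    possible operationalization, not necessarily the one used in the study.
    """
    pairs = [(max(t.n_red, t.n_blue), t.bet_percentage)
             for t in trials if chose_most_likely(t)]
    x_bar = fmean(x for x, _ in pairs)
    y_bar = fmean(y for _, y in pairs)
    sxx = sum((x - x_bar) ** 2 for x, _ in pairs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in pairs)
    return sxy / sxx
```

A subject whose bets rise steeply from the 6:4 to the 9:1 ratios gets a large positive slope; a subject who stakes the same percentage regardless of the ratio gets a slope near zero.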

RESULTS

Initially, the principal measures obtained from the decision-making task were subjected to repeated-measures ANOVAs with the following between- and within-subject factors: group (amphetamine abusers vs opiate abusers vs control subjects, or ORB-PFC patients vs DL/M-PFC patients vs control subjects); order of condition (ascending/descending vs descending/ascending); condition (ascending vs descending); decision (red vs blue); and ratio (6:4 vs 7:3 vs 8:2 vs 9:1). Additional analyses are described in the text. The mean deliberation times (i.e., the speed of decision making) were log10-transformed in those instances where there was marked heterogeneity of variance, while the proportions of trials on which subjects chose the most likely outcome (i.e., the quality of decision making) were arcsine-transformed, as is appropriate for proportions, whose variance depends on the mean (Howell 1987). However, the data shown in the figures always represent untransformed values. Where the additional assumption of homogeneity of covariance (sphericity) in repeated-measures ANOVA was violated, as assessed using Mauchly's sphericity test, the degrees of freedom against which the F-ratio was tested were multiplied by the Greenhouse-Geisser epsilon (see Howell 1987).
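The transformations and the sphericity correction can be sketched as below. This is not the statistical package used in the study: the arcsine transform is assumed to be the common arcsine square-root form (the text does not give the exact formula), and epsilon is estimated with the standard Greenhouse-Geisser formula applied to the covariance matrix of the repeated measures. All function names are illustrative.

```python
import math


def log10_transform(times_ms: list[float]) -> list[float]:
    """Log10 transform for deliberation times (all values must be positive)."""
    return [math.log10(t) for t in times_ms]


def arcsine_transform(proportions: list[float]) -> list[float]:
    """Arcsine square-root (angular) transform for proportions in [0, 1]."""
    return [math.asin(math.sqrt(p)) for p in proportions]


def greenhouse_geisser_epsilon(cov: list[list[float]]) -> float:
    """Greenhouse-Geisser epsilon from the k x k covariance matrix of k repeated measures.

    Ranges from 1 / (k - 1) (maximal violation of sphericity) to 1 (sphericity holds).
    """
    k = len(cov)
    grand_mean = sum(sum(row) for row in cov) / k**2
    diag_mean = sum(cov[i][i] for i in range(k)) / k
    row_means = [sum(row) / k for row in cov]
    numerator = k**2 * (diag_mean - grand_mean) ** 2
    denominator = (k - 1) * (
        sum(v**2 for row in cov for v in row)
        - 2 * k * sum(m**2 for m in row_means)
        + k**2 * grand_mean**2
    )
    return numerator / denominator


def corrected_df(df_effect: float, df_error: float, epsilon: float) -> tuple[float, float]:
    """Multiply both degrees of freedom by epsilon before evaluating the F-ratio."""
    return df_effect * epsilon, df_error * epsilon
```

For example, if epsilon were 0.5 for the four-level ratio factor, an effect tested on df = 3, 153 would instead be evaluated against df = 1.5, 76.5, making the test more conservative.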

Speed of Decision Making

Drug Abusers vs Controls

Figure 2a shows the mean deliberation times associated with deciding which color of box is hiding the yellow token, as a function of the ratio of red and blue boxes. In general, mean deliberation times were significantly longer at the less favorable ratios compared to the more favorable ratios, suggesting that the difficulty of the decisions was increased when the display provided only poor information about the rewarded response (df = 3, 153; F = 3.8; p < .05). Notably, both groups of drug abusers showed large and significant increases in the time needed to make their decisions (df = 2, 51; F = 7.3; p < .005), so that the mean deliberation times were only 2683 ms for the controls, but 3670 ms for the chronic amphetamine abusers, and 3766 ms for the chronic opiate abusers. In the particular case of the amphetamine abusers, this deficit was especially marked at the less favorable ratios of red and blue boxes in the descending condition, reflected in a significant three-way interaction between group, condition, and ratio (df = 6, 153; F = 4.0; p < .005).
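To put the group differences in relative terms, the short calculation below uses only the mean deliberation times reported above; the dictionary and function names are illustrative.

```python
# Mean deliberation times (ms) reported above for each group.
MEAN_DELIBERATION_MS = {"controls": 2683, "amphetamine": 3670, "opiate": 3766}


def percent_increase(group: str, baseline: str = "controls") -> float:
    """Relative increase in mean deliberation time over the baseline group, in %."""
    base = MEAN_DELIBERATION_MS[baseline]
    return 100.0 * (MEAN_DELIBERATION_MS[group] - base) / base


for name in ("amphetamine", "opiate"):
    print(f"{name}: +{percent_increase(name):.1f}%")
# → amphetamine: +36.8%
# → opiate: +40.4%
```

In other words, on average the amphetamine and opiate abusers deliberated roughly 37% and 40% longer than the control subjects.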

Figure 2. Speed of decision making. Mean deliberation times (ms) associated with deciding which color of box is hiding the yellow token, as a function of the ratio of red and blue boxes. (a) Amphetamine abusers vs opiate abusers vs control subjects; (b) ORB-PFC patients vs DL/M-PFC patients vs controls; (c) low-tryptophan subjects vs placebo subjects.

Finally, all subjects took more time to make their decisions on the first occasion that they completed the task than on the second (i.e., in the ascending condition for subjects whose order of conditions was ascending/descending, and in the descending condition for those whose order was descending/ascending), yielding a significant two-way interaction between order and condition (df = 1, 51; F = 30.1; p < .0001). However, the three-way interaction of these two factors with group was not significant (df = 2, 51; F < 1), indicating that the longer deliberation times of the drug abuser groups were not simply confined to conditions in which the task was novel or unpracticed.

Frontal Patients vs Controls

Analysis of the performance of the two groups of frontal lobe patients and the controls revealed that both age and verbal IQ were significant covariates of subjects’ deliberation times (regression: df = 2, 38; F = 4.7; p < .05; age: t = 2.2; p < .05; NART: t = −2.8; p < .01). However, after the contributions of these factors to the overall variance were removed, it was the ORB-PFC patients, and not the DL/M-PFC patients, who showed increases in deliberation times similar to those of the drug abuser groups (df = 2, 38; F = 6.9; p < .005). Thus, the mean time needed to decide the color of box hiding the yellow token was only 2755 ms for the DL/M-PFC patients but 4215 ms for the ORB-PFC patients. Although deliberation times in general were significantly increased at the less favorable ratios of red and blue boxes (df = 3, 120; F = 3.9; p < .05), there was no consistent evidence that this trend was more pronounced in the ORB-PFC patients than in the DL/M-PFC patients and control subjects (df = 6, 120; F < 1.5). Finally, separate comparisons of the ORB-PFC and DL/M-PFC patients with their respective sub-groups of matched controls confirmed the significant increase in deliberation times shown by the former but not the latter group (see Table 2).

Low Tryptophan vs Placebo

There was no significant difference in the overall deliberation times for the low-tryptophan group compared to the placebo group (2865 vs 2605 ms; df = 1, 27; F < 1). However, the performance of the low-tryptophan group was characterized by marked variability on this measure, with some subjects showing large increases in the time needed to make their decisions, and some showing marked reductions. Overall, as Figure 2c shows, the low-tryptophan group tended to take longer to make their decisions than the placebo group at the more favorable ratios of red and blue boxes, reflected in a near-significant two-way interaction between group and ratio (df = 3, 81; F = 2.3; p = .09).

In summary, both the amphetamine and opiate abusers, and the ORB-PFC patients, showed large and significant increases in the deliberation times associated with deciding which color of box was hiding the yellow token. The low-tryptophan subjects showed a trend toward similar increases in deliberation times. By contrast, the deliberation times of the DL/M-PFC patients were not significantly changed.

Quality of Decision Making

Drug Abusers vs Controls

Figure 3a shows the percentage of trials on which subjects chose the most likely of the two possible outcomes (i.e., the color with the greater number of boxes), as a function of the ratio of red and blue boxes. Averaging over group, the percentage of choices of the most likely outcome increased significantly at the more favorable compared to the less favorable ratios (6:4, 82%; 7:3, 92%; 8:2, 95%; and 9:1, 94%; df = 2, 153; F = 18.1; p < .0001). However, the amphetamine abusers showed a consistent tendency to make such optimal choices less often than either the control subjects or the opiate abusers (85% vs 95% and 92%; df = 2, 51; F = 3.4; p < .05). Moreover, this tendency was independent of the relative probabilities of the two outcomes, so that the two-way interaction between group and ratio was not significant (df = 4, 153; F < 1; see Figure 3a). In contrast, while the opiate abusers also chose the most likely outcome slightly less often than the controls, this small effect was due to the behavior of one subject who was not representative of the group as a whole.
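The quality-of-choice measure can be illustrated with a short Python sketch. It assumes a hypothetical trial record of (number of red boxes, number of blue boxes, chosen color); the study's actual data format is not described here. A choice counts as optimal when it names the majority color, and percentages are pooled across the two arrangements of each ratio (e.g., 6 red : 4 blue and 4 red : 6 blue both count as 6:4).

```python
from collections import defaultdict


def majority_color(n_red, n_blue):
    """Return the color with more boxes; 5:5 ties fall outside the four ratios analyzed."""
    if n_red == n_blue:
        raise ValueError("equal numbers of red and blue boxes")
    return "red" if n_red > n_blue else "blue"


def percent_optimal_by_ratio(trials):
    """Percentage of trials on which the majority color was chosen, per ratio.

    trials: iterable of (n_red, n_blue, choice) tuples, choice being "red" or "blue".
    """
    hits = defaultdict(int)
    counts = defaultdict(int)
    for n_red, n_blue, choice in trials:
        ratio = f"{max(n_red, n_blue)}:{min(n_red, n_blue)}"
        counts[ratio] += 1
        hits[ratio] += choice == majority_color(n_red, n_blue)
    # Lexical order of these labels runs from least (6:4) to most (9:1) favorable.
    return {ratio: 100.0 * hits[ratio] / counts[ratio] for ratio in sorted(counts)}


example = [(6, 4, "red"), (4, 6, "red"), (9, 1, "red"), (1, 9, "blue")]
print(percent_optimal_by_ratio(example))  # {'6:4': 50.0, '9:1': 100.0}
```

The toy trials are illustrative only; in the second 6:4 trial the minority color is chosen, so that ratio scores 50%.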

Finally, all subjects chose the most likely outcome less often on the first occasion that they completed the task compared to the second (df = 1, 51; F = 11.2; p < .005). However, this effect was amplified for the amphetamine abusers (80% vs 91%) compared to the controls (94% vs 96%) and opiate abusers (91% vs 93%), indicating that the deficit in the quality of decision making shown by the amphetamine abusers was markedly worse when the task was novel or unpracticed (df = 2, 51; F = 4.6; p < .05).

Frontal Patients vs Controls

In the comparisons involving the frontal lobe patients and the controls, only premorbid verbal IQ tended to covary with the extent to which subjects chose the most likely of the two possible outcomes (regression: df = 2, 38; F = 2.5; p = .09; NART: t = 2.0; p = .05; age: t = −.04). However, Figure 3b shows that while the pattern of choices shown by the DL/M-PFC patients and the controls was extremely similar across the range of ratios of red and blue boxes, the pattern of choices shown by the ORB-PFC patients was characterized by a significant reduction in the choice of the most likely outcome (97%, 95% vs 85%; df = 2, 38; F = 4.2, p < .05). Furthermore, this behavior did not appear to be modulated by the quality of information available about which response was likely to be rewarded; thus, the two-way interaction between group and ratio was not significant (df = 4, 120; F < 1). However, as with the chronic amphetamine abusers, the ORB-PFC patients showed a significantly greater reduction in the choice of the most likely outcome on the first occasion that they completed the task compared to the second (81% vs 89%) than did the DL/M-PFC patients (97% vs 96%) and the controls (94% vs 96%), suggesting that the quality of choice of the ORB-PFC patients was especially impaired when the task was novel and unpracticed (df = 2, 40; F = 5.5, p < .01).

Finally, additional comparison with the age- and IQ-matched controls confirmed the poorer quality of decision making shown by the ORB-PFC patients and not the DL/M-PFC patients, and the greater magnitude of this deficit on the first administration of the task compared to the second (see Table 2).

Low Tryptophan vs Placebo

Like the amphetamine abusers and unlike the opiate abusers, normal, healthy young volunteers with acutely reduced tryptophan chose the most likely of the two available outcomes significantly less often than the placebo group (89% vs 96%; df = 1, 27; F = 9.7; p < .005). Moreover, as with the amphetamine abusers, this change in the quality of decision making was not modulated in any way by the changing ratios of colored boxes (see Figure 3c), so that the two-way interaction between group and ratio was not significant (df = 2, 81; F = 1.1).

To summarize, the amphetamine abusers, the ORB-PFC patients, and the low-tryptophan group chose the more likely outcome significantly less often than their controls. By contrast, the opiate abusers and the DL/M-PFC patients showed a pattern of choices that was very similar to that of the control subjects.

Risk Adjustment

Drug Abusers vs Controls

Figure 4a shows the percentage of their total points score that subjects were prepared to risk in order to earn more points, as a function of the ratio of red and blue boxes. Note that this analysis is restricted to trials on which subjects did in fact choose the most likely outcome, because only on such trials can we assess subjects’ sensitivity to the different opportunities to earn reward represented by the different ratios.
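A minimal sketch of this restriction, assuming a hypothetical trial record of (number of red boxes, number of blue boxes, chosen color, percentage of points bet): trials on which the less likely color was chosen are discarded before the mean bet is computed for each ratio. The record layout and function name are illustrative assumptions, not the study's software.

```python
from collections import defaultdict
from statistics import mean


def mean_bet_by_ratio(trials):
    """Mean percentage of points bet per ratio, using only optimal-choice trials.

    trials: iterable of (n_red, n_blue, choice, percent_bet) tuples; the four
    analyzed ratios (6:4 to 9:1) never contain equal numbers of red and blue boxes.
    """
    bets = defaultdict(list)
    for n_red, n_blue, choice, percent_bet in trials:
        majority = "red" if n_red > n_blue else "blue"
        if choice != majority:
            continue  # less likely outcome chosen: excluded from risk adjustment
        ratio = f"{max(n_red, n_blue)}:{min(n_red, n_blue)}"
        bets[ratio].append(percent_bet)
    return {ratio: mean(values) for ratio, values in sorted(bets.items())}


example = [(7, 3, "red", 50.0), (3, 7, "blue", 70.0), (7, 3, "blue", 95.0), (9, 1, "red", 75.0)]
print(mean_bet_by_ratio(example))  # {'7:3': 60.0, '9:1': 75.0}
```

Here the 95% bet is dropped because it accompanied a choice of the minority color, so it says nothing about how the subject scaled bets to a favorable ratio.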

Irrespective of group, subjects increased the percentage bet as a function of the ratio of red and blue boxes at a relatively constant rate (6:4, 45%; 7:3, 57%; 8:2, 71%; 9:1, 79%; df = 1, 153; F = 112.7; p < .0001), with post-hoc tests revealing that all pair-wise differences were significant (Newman-Keuls, p < .01). Moreover, all subjects placed larger bets in the descending compared with the ascending condition (72% vs 54%; df = 1, 51; F = 57.5; p < .0001), but slightly smaller bets on the first compared with the second occasion that they completed the task (60% vs 65%; df = 1, 51; F = 4.5; p < .05).

Despite the deficits shown by the amphetamine and opiate abusers in deliberation times and quality of decision making, their overall size of bet did not differ significantly from that of the controls (amphetamine abusers, 58%; opiate abusers, 67%; controls, 63%; df = 2, 51; F < 2.5). Nor was there any suggestion of reduced or increased bets at any particular ratio of red and blue boxes; the two-way interaction between group and ratio was also not significant (df = 3, 153; F < 1). Moreover, there was no indication of impulsivity in the selection of bets, since neither group of drug abusers consistently chose earlier bets in both the ascending and the descending conditions. Thus, the two-way interaction between group and condition was not significant (amphetamine abusers/ascending, 50%; amphetamine abusers/descending, 66%; opiate abusers/ascending, 55%; opiate abusers/descending, 80%; controls/ascending, 56%; controls/descending, 70%; df = 2, 51; F < 2).

Frontal Patients vs Controls

Overall, the ORB-PFC patients placed significantly smaller bets than the DL/M-PFC patients and controls (48% vs 58% and 63%; df = 2, 38; F = 6.8; p < .005), while both groups of frontal lobe patients showed smaller increases in the size of their bets in response to more favorable ratios of red and blue boxes (see Figure 4b; df = 3, 120; F = 2.9; p < .05). Given this conservative pattern of behavior, it is unsurprising that there was no evidence of impulsive responding, in that neither group of frontal lobe patients consistently chose earlier bets in both the ascending and descending conditions. Consequently, the two-way interaction between group and condition was not significant (ORB-PFC patients/ascending, 43%; ORB-PFC patients/descending, 52%; DL/M-PFC patients/ascending, 48%; DL/M-PFC patients/descending, 67%; controls/ascending, 56%; controls/descending, 70%; df = 2, 40; F < 1). Further comparisons with their matched sub-groups of controls confirmed that the overall size of bet was significantly reduced in the ORB-PFC patients and that both the ORB-PFC and the DL/M-PFC patients showed smaller increases in the size of their bets as a function of the ratio of red and blue boxes (see Table 2).

Low Tryptophan vs Placebo

There were no significant differences between the low-tryptophan and placebo subjects in the overall percentage of points put at risk (62% vs 66%; df = 1, 27; F < 1.0). Nor was there any indication that the low-tryptophan subjects risked more or less of their points at the more favorable ratios of red and blue boxes (see Figure 4c; df = 2, 81; F < 1.5). Finally, the low-tryptophan subjects showed no impulsivity in the selection of bets, that is, no consistent tendency to choose earlier bets in both the ascending and the descending conditions (df = 1, 27; F < 1).

In summary, the amphetamine abusers, the opiate abusers, and the low-tryptophan subjects all increased the size of their bets in response to more favorable ratios of red and blue boxes at the same rate as controls. By contrast, both groups of frontal lobe patients showed smaller increases in their bets at the more favorable ratios, and the ORB-PFC patients also bet less overall.

Correlational Analyses

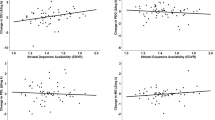

To assess whether there was any statistical association between the performance measures of the decision-making task and the demographic or clinical variables of the drug abuser groups, bivariate correlational analyses were performed with the following variables: age; verbal IQ; years of abuse; deliberation times; percentage choice of the most likely outcome; and percentage of points risked on each decision. In both the amphetamine and opiate abusers, age was positively correlated with years of abuse (r = 0.62; p = .006 and r = 0.97; p < .001, respectively). In addition, percentage choice of the most likely outcome was negatively correlated with years of abuse in the amphetamine abusers (r = −0.57; p = .01; see Figure 5a) but not in the opiate abusers (r = 0.17; p > .50; Figure 5b), suggesting that poorer quality of decision making is associated with prolonged abuse of amphetamines but not opiates. There were no significant correlations involving the increased deliberation times shown by the drug abuser groups. In the tryptophan-depleted subjects, mean deliberation times were negatively correlated with percentage choice of the most likely outcome (r = −0.62; p = .01), indicating that the subjects who took longest to make their decisions tended to be those who most often chose the less likely of the two outcomes. (The placebo group showed only a trend toward a similar association, r = 0.46; p = .07.) Finally, there were no significant correlations between plasma levels of free or total tryptophan and any behavioral measure within the low-tryptophan group.
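The text does not name the correlation coefficient; assuming these are Pearson product-moment correlations, the sketch below computes r and the corresponding t statistic on n − 2 degrees of freedom, from which a two-tailed p-value can be read off a t table or obtained from a statistics package (for instance, SciPy's `scipy.stats.pearsonr` returns r and p together). The function names are hypothetical and the input values are toy numbers, not data from this study.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_for_r(r, n):
    """t statistic for H0: rho = 0, on n - 2 degrees of freedom (requires |r| < 1)."""
    return r * math.sqrt((n - 2) / (1 - r * r))


years = [2, 4, 6, 8, 10]                 # toy values, not study data
percent_optimal = [98, 95, 90, 88, 80]   # toy values, not study data
r = pearson_r(years, percent_optimal)
print(round(r, 3), round(t_for_r(r, len(years)), 2))
```

A negative r here corresponds to the pattern reported for the amphetamine abusers: fewer optimal choices with more years of abuse.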

Summary

To summarize, the principal results are as follows:

Both chronic amphetamine and opiate abusers showed increased deliberation times similar to those shown by the ORB-PFC patients but not the DL/M-PFC patients.

The ORB-PFC patients and the amphetamine abusers showed poorer quality of decision making with a marked tendency to choose the less likely of two possible outcomes (i.e., the least optimal of the available responses). In both cases, the deficit was worse on the first occasion they completed the task compared to the second. The opiate abusers and the DL/M-PFC patients were unimpaired.

In the amphetamine abusers, but not the opiate abusers, the quality of decision making declined with years of abuse (a significant negative correlation); deliberation times were not related to years of abuse.

Tryptophan depletion in normal, young volunteers, producing reduced levels of central 5-HT, induced the same increased tendency to choose the less probable of the two outcomes as that shown here by the amphetamine abusers and ORB-PFC patients, together with a trend toward increased deliberation times.

The amphetamine and opiate abusers, and low-tryptophan subjects, increased the proportion of their total reward put at risk in response to more favorable ratios of red and blue boxes similarly to controls. Both the ORB-PFC and the DL/M-PFC patients showed a reduced rate of increase.

DISCUSSION

These data demonstrate significant similarities and differences between the decision-making behavior of chronic drug abusers and patients with focal damage in orbital and superior regions of the PFC. Specifically, both the chronic amphetamine and opiate abusers, and the patients with orbital PFC lesions, took significantly longer than age- and IQ-matched controls to predict which of two mutually exclusive outcomes would occur in a decision-making task. Thus, both drug abuser groups share with orbital PFC patients a specific behavioral change in situations requiring choice between competing courses of action or response. Moreover, the amphetamine, but not opiate, abusers also shared with the orbital PFC patients a significantly increased tendency to choose the less likely outcome, and thereby, attempt to earn reward on the basis of the least favorable of available response options. This behavior was reliably more prevalent in subjects with longer histories of abuse, and could be induced in normal, healthy volunteers by a plasma tryptophan-depleting procedure. Before discussing the theoretical implications of these results for our understanding of the neuroanatomical, neurochemical, and cognitive bases of decision-making function, we consider some interpretative issues.

The above deficits cannot be attributed to impaired comprehension of the task, or of the meaning of the ratios of red and blue boxes, because the rates at which both groups of drug abusers increased the proportion of their accumulated reward risked, or bet, on more favorable ratios (i.e., their “risk-adjustment” functions) were the same as their controls, indicating that they were equally sensitive to the available opportunities to earn reinforcement. This is particularly significant in the cases of the amphetamine abusers and tryptophan-depleted subjects because it appears that their reduced quality of choice (i.e., their selective tendency to choose the least likely of the two outcomes) represented a course of action that these subjects understood would probably lead to punishment. In this specific sense, these findings may constitute experimental demonstrations of genuine risk-taking behavior.

A second issue concerns the extent to which we can differentially attribute the behavioral changes seen here to the use of amphetamines or opiates in groups of subjects who also reported at least some use of other substances of abuse. In fact, the wider drug-taking profiles of the two drug abuser groups were remarkably similar. Thus, both groups reported quite extensive use of cannabis but more restricted use of alcohol, MDMA, and benzodiazepines, indicating that the principal difference between the two groups was indeed their major drug of abuse. Moreover, a high proportion of the amphetamine abusers also reported at least some use of opiates (16 out of 18 patients), increasing the likelihood that the cognitive impairments shown selectively by these patients were linked to their predominant use of amphetamines. This impression is further reinforced by the significant statistical association found between these impairments and years of abuse, a clinical variable that was closely matched between the amphetamine and opiate abusers.

Finally, we consider the possibility that the changes seen in our drug abuser groups represent only the acute effects of recent drug intake as opposed to the effects of chronic use. We believe that this is unlikely for three reasons. First, considerable care was taken to test only those subjects who complied with the request to refrain from taking drugs during the 12 h before the testing session (as indicated by self-report, the patient's behavior, and an assessment by the principal clinician well acquainted with the particular individual). Second, the finding that the quality of the amphetamine abusers’ choices showed a significant and negative association with years of abuse suggests that at least some aspects of their decision making were driven not by recent intake so much as by factors relating to the extent of previous use. Third, we have found that administration of the mixed catecholamine indirect agonist methylphenidate (Ritalin) has no significant effects on the decision making of young, healthy, nondrug-abusing volunteers at doses (40 mg) that significantly altered performance on several other cognitive tasks (R. D. Rogers et al. unpublished observations), suggesting that acute increases in central catecholamine activity do not simply mimic the effects of chronic amphetamine abuse.

Decision Making and Chronic Administration of Amphetamine and Opiates

It is widely agreed that both amphetamines and opiates achieve their reinforcing effects by increasing the activity of the mesolimbic DA system (Koob and Bloom 1988; DiChiara and Imperato 1988; Wise and Rompré 1989), which projects not only to the nucleus accumbens, but to extensive areas of the PFC as well as the amygdala (Thierry et al. 1973). Thus, the increased deliberation times shown by both the chronic amphetamine and opiate abusers in our decision-making task are consistent with altered neuromodulation of the circuitry incorporating ventral areas of PFC, ventral striatum, and amygdala, associated with the abuse of these substances. Moreover, the absence of any relationship between this particular impairment and years of abuse suggests that this deficit does not necessarily reflect an enduring or cumulative disruption of this circuitry. Additionally, in the case of opiates, it is possible that chronic abuse may directly alter opioid receptor functions in the PFC itself (Mansour et al. 1988).

In contrast to these changes in the speed of decision making, the second type of impairment—the increased tendency to choose the least likely of the two outcomes, and thereby attempt to earn reward on the basis of the least favorable responses—was confined to the amphetamine abusers and patients with focal lesions of orbital PFC. Thus, amphetamine abuse seems to be associated with additional changes in the quality, as opposed to merely the speed, of decision making that might also be indicative of orbitofrontal cortical dysfunction. The additional finding that this aspect of the chronic amphetamine abusers’ deficit was also successfully mimicked by acute reductions in plasma tryptophan in normal, healthy volunteers strengthens the possibility that decision-making cognition is especially sensitive to disrupted functioning of the ascending monoaminergic projection systems, possibly involving changes in the modulation of the PFC and its associated limbic-striatal circuitry. However, further research will need to address the possibility that reduced tryptophan is also able to interfere with decision-making function through altering serotonergic modulation of more posterior cortical sites that mediate as yet unspecified cognitive processes involved in performance of the task.

The significant relationship between the reduced quality of choice shown by the amphetamine abusers and their years of abuse is suggestive of a cumulative process of disrupted neuromodulation associated with prolonged stimulant abuse. Consistent with this possibility, experimental evidence does suggest that extended administration of amphetamines can produce enduring changes in monoaminergic function in the striatum and PFC of rats and non-human primates (Seiden et al. 1975; Ricaurte et al. 1980, 1984; Wagner et al. 1980; Ryan et al. 1990; Gibb et al. 1994; Melega et al. 1996; Woolverton et al. 1989). Moreover, recent work has now demonstrated reduced post-mortem levels of DA in the striatum and 5-HT in the orbitofrontal cortex of human methamphetamine abusers (Wilson et al. 1996a). However, considerable care should be taken in attributing such changes to a process of cellular neurotoxicity (i.e., axon or nerve terminal destruction) as opposed to enduring, but ultimately reversible, changes in neuromodulation of the corticostriatal targets of the monoaminergic systems. In general, neurotoxicity tends to be associated with either continuous amphetamine administration (e.g., via osmotic pumps) or very high and repeated dosages, and to show considerable inter-species variability (Robinson and Becker 1986; Seiden and Ricaurte 1987). In this context, it is interesting to note that while Wilson et al. (1996a) found reduced levels of DA and DA transporter in the striatum of human methamphetamine abusers, levels of other presynaptic markers such as DOPA-decarboxylase, dihydroxyphenylacetic acid, and vesicular monoamine transporter were at control levels, suggesting that the functional changes associated with amphetamine abuse in humans may be confined to specific compartments and regulatory mechanisms within DA neurones. On the other hand, Villemagne et al. (1998) have recently reported that repeated administration of amphetamine to baboons, at doses comparable with those used recreationally by humans, led to enduring reductions in DA and a wider range of presynaptic markers, including those not affected in Wilson et al.’s sample of human methamphetamine users. In summary, it seems likely that the particular consequences of prolonged amphetamine abuse for presynaptic monoaminergic function are heavily dependent on the precise schedule and dosage regimen (Robinson and Becker 1986; Seiden and Ricaurte 1987).

Of course, one problem in attempting to link the cognitive deficits described here to underlying changes in neuromodulatory function associated with chronic substance abuse is the difficulty of establishing the direction of any causal relationship. While it is certainly plausible that chronic abuse of amphetamines results in altered monoaminergic modulation of ventral PFC, leading to changes in decision making, it is important to acknowledge that the true causal relationship might be reversed, so that altered monoaminergic neuromodulation actually antedates, and itself predisposes subjects to, substance abuse. Although the correlation between the deficits of the amphetamine abusers and their years of abuse argues against this latter possibility, we acknowledge that clinical evidence of the kind presented here cannot resolve this issue alone, and needs to be supplemented with prospective studies or animal models of the cognitive sequelae of extended administration of stimulant and opiate drugs. For the moment, we restrict ourselves to demonstrating the association between chronic abuse of amphetamines and opiates and altered decision-making cognition, in the context of information relating to the known neurochemical consequences of chronic amphetamine administration and the neuroanatomical bases of executive function, including decision-making cognition.

Finally, it is also important to point out the likely co-existence of various personality factors that might contribute both to individual differences on tasks of this kind and to vulnerability to substance abuse itself. For example, researchers have linked impulsive personality characteristics, and personality disorder, to a pattern of multi-substance abuse (McCown 1988, 1990; Lacey and Evans 1986; Nace et al. 1983) while, more recently, it has been suggested that subjects whose personalities tend toward “high sensation-seeking” and “low harm-avoidance” may preferentially abuse stimulants as opposed to other classes of drugs (Piazza et al. 1989, 1991, 1993; see Cloninger 1994 for theoretical review). Research is needed to explore these possibilities by incorporating independent measures of the contribution of such personality factors to performance on decision-making tasks (e.g., as they relate to choices of less favorable task contingencies) and their association with abuse of different substances. In short, altered cognition associated with chronic drug abuse is likely to reflect the interaction of a range of influences, including genetic factors relating to pre-morbid personality and behavior (Cloninger et al. 1981; Cloninger 1987; Linnoila et al. 1989) as well as transient and enduring changes in neuromodulation.

Decision Making and Orbitofrontal Cortical Lesions

The present results also provide important information about the decision-making deficits associated with focal damage of orbital PFC. Research conducted by Damasio, Bechara, and colleagues, using a card “gambling” task (see beginning of this article), has highlighted a pattern of sub-optimal decision making under conditions in which information about the underlying task contingencies, that might be used to guide the selection of an adaptive response, is not represented explicitly to the subject. By contrast, the task used here was deliberately constructed to provide explicit, and variable, information about the likely identity of the rewarded response, in the form of the different ratios of red and blue boxes. Some ratios (e.g., 9 red : 1 blue) gave a strong indication of the likely reinforced response, while others less so (e.g., 4 red : 6 blue). The results indicate that, regardless of the quality of this existing contextual information, the patients with orbital PFC damage took considerably longer to make their decisions, and were more likely to make poor choices compared to age- and IQ-matched controls. By contrast, patients with dorsolateral or dorsomedial lesions were unimpaired.

Of particular interest is the observation that the choice of the most likely outcome by the ORB-PFC patients showed a similar increase from ratios of 6:4 (in which the identity of the rewarded response is relatively uncertain) toward ratios of 9:1 (in which it is much more certain) to that seen in the non-brain-damaged controls. Thus, the probabilities relating candidate responses to likely outcomes appear to have affected the choices of the orbitofrontal patients and the controls in similar ways. Instead, the deficit of the ORB-PFC patients manifested itself as an apparent failure to choose the most adaptive response on around 10% of the trials, quite independently of the precise probabilities relating responses to outcomes offered at any one time. Similarly, the increase in deliberation times shown by these patients did not diminish relative to controls as the ratio of colored boxes became more favorable, significant deficits in deliberation time still being apparent at ratios of 9:1. Thus, these data confirm that the impairment in decision making following orbitofrontal lesions reflects a failure to resolve effectively between two competing response options, and suggest that uncertainty about the likely outcomes is not the only factor that modulates the magnitude of this deficit (see Bechara et al. 1996).

It is also noticeable that both the ORB-PFC and DL/M-PFC patients risked significantly less of their accumulated reward than controls at the more favorable ratios of red and blue boxes, suggesting a pattern of conservative behavior that, superficially, seems at odds with evidence indicating that orbital PFC damage is associated with reckless, risk-taking decisions (e.g., Bechara et al. 1994). The reasons for this unexpected finding remain unclear, but we suggest two possibilities. It may be that both groups of PFC patients were simply less sensitive than the controls to the opportunities to earn reward represented by the different ratios of red and blue boxes and, for this reason, failed to take advantage of the more favorable ratios by increasing the size of their bets. Such a deficit is reminiscent of evidence suggesting that patients with prefrontal damage have difficulties in calibrating appropriate quantitative responses on the basis of visually representable information (cf., “cognitive estimates”; Shallice and Evans 1978). However, a more likely possibility is that this more conservative behavior reflects a loss of confidence in their decisions secondary to other, more fundamental impairments in cognition associated with damage to orbital or superior sectors of PFC. It is also important to note that there is little evidence that the decision-making deficits associated with orbitofrontal damage reflect disruption to a unitary construct such as “impulse-control” or inhibition. Rather, the present results dissociate the difficulty in resolving competing choices shown by orbital PFC patients from other, more complex aspects of decision-making function (e.g., risk adjustment) that might be impaired by damage to different PFC sectors. Further research is needed to resolve this issue.

Monoamines, Orbital PFC, and the Cognitive Basis of Decision Making

Recently, it has been proposed that the decision making of normal, healthy individuals involves the interaction of two separate sources of information (Bechara et al. 1997). One source provides “declarative knowledge” about the properties of the situation at hand, while the second provides “dispositional knowledge” about previous emotional states experienced as a consequence of the various candidate responses or actions. This latter (affective) information is used to bias the reasoning processes that culminate in decisions in favor of more advantageous or adaptive responses. In fact, it is likely that declarative knowledge about a situation, retrieved from limbic diencephalic structures, is supplemented by more associative knowledge, possibly mediated in part by the neostriatum, relating to the probabilities of relevant outcomes given certain events or actions (e.g., Knowlton et al. 1996). Nevertheless, affective information relating to visceral, somatic states connected with appetitive and aversive outcomes needs to be brought to bear on the evaluative processes of decision making, and the neural circuitry associated with orbital PFC is well suited for this purpose (Bechara et al. 1996).

In the context of this “somatic marker hypothesis,” it may be significant that the amphetamine abusers and the tryptophan-depleted subjects showed marked impairments in the quality of their decision making. It has been suggested that the somatic information evoked by candidate response options is mediated in part by activation of the central monoaminergic projections (Damasio 1994; Bechara et al. 1997). If so, the tendency of these subjects to choose the least optimal response options may reflect disrupted activity in these monoaminergic systems. Further research therefore needs to address the possibility that altered activity within the neurochemically differentiated arousal systems of the reticular core modulates the cognitive functions mediated by orbitofrontal PFC and its interconnected limbic structures, which collectively support decision-making cognition.

Finally, acutely reduced tryptophan produced marked impairments in the quality of decision making, as well as a tendency toward slower deliberation. This finding is particularly important given the large body of clinical evidence that reduced 5-HT function is associated with aggressive, impulsive, and risk-taking behaviors (Linnoila et al. 1983; Virkkunen et al. 1994), as well as with at least some forms of substance abuse (Fishbein et al. 1988; Linnoila et al. 1989). Additional experimental evidence also suggests that efficient 5-HT function is important for social adjustment and the attainment of social position (see Raleigh et al. 1996 for review). Much of the impetus for recent research on the neurobiological basis of decision-making deficits stems from their apparent co-occurrence with such failures in social cognition and with acquired sociopathy (Damasio 1996). For this reason, research on the relationship between this aspect of executive function and 5-HT should be especially fruitful.

References

Alexander GE, DeLong MR, Strick PL . (1986): Parallel organisation of functionally segregated circuits linking the basal ganglia and cortex. Annu Rev Neurosci 9: 357–381

Arnsten AFT . (1997): Catecholamine regulation of the prefrontal cortex. J Psychopharm 11: 151–162

Axt KJ, Molliver ME . (1991): Immunocytochemical evidence for methamphetamine-induced serotonergic axon loss in the rat brain. Synapse 9: 302–313

Bechara A, Damasio AR, Damasio H, Anderson SW . (1994): Insensitivity to future consequences following damage to human prefrontal cortex. Cognition 50: 7–15

Bechara A, Tranel D, Damasio H, Damasio AR . (1996): Failure to respond autonomically to anticipated future outcomes following damage to prefrontal cortex. Cerebral Cortex 6: 215–225

Bechara A, Damasio H, Tranel D, Damasio AR . (1997): Deciding advantageously before knowing the advantageous strategy. Science 275: 1293–1295

Bechara A, Damasio H, Tranel D, Anderson SW . (1998): Dissociation of working memory from decision-making within human prefrontal cortex. J Neurosci 18 (1): 428–437

Bowen FP, Kamienny MA, Burns M, Yahr MD . (1975): Parkinsonism: Effects of levodopa on concept formation. Neurology 25: 701–704

Brozoski TJ, Brown R, Rosvold HE, Goldman PS . (1979): Cognitive deficit caused by regional depletion of dopamine in prefrontal cortex of rhesus monkeys. Science 205: 929–931

Cloninger CR, Bohman M, Sigvardsson S . (1981): Inheritance of alcohol abuse. Arch Gen Psychiatry 38: 861–868

Cloninger CR . (1987): Neurogenetic adaptive mechanisms in alcoholism. Science 236: 410–416

Cloninger CR . (1994): Temperament and personality. Curr Opin Neurobiol 4: 266–273

Damasio AR . (1994): Descartes’ Error. New York, Grosset/Putnam

Damasio AR . (1996): The somatic marker hypothesis and the possible functions of the prefrontal cortex. Phil Trans R Soc Lond B 351: 1413–1420

Daniel DG, Weinberger DR, Jones DW, Zigun JR, Coppola R, Handel S, Bigelow LB, Goldberg TE, Berman KF, Kleinman JE . (1991): The effect of amphetamine on regional cerebral blood flow during cognitive activation in schizophrenia. J Neurosci 11: 1907–1917

DiChiara G, Imperato A . (1988): Drugs abused by humans preferentially increase synaptic dopamine concentrations in the mesolimbic system of freely moving rats. Proc Natl Acad Sci 85: 5274–5278

Downes JJ, Roberts AC, Sahakian BJ, Evenden JL, Morris RG, Robbins TW . (1989): Impaired extra-dimensional shift performance in medicated and unmedicated Parkinson's disease: Evidence for a specific attentional dysfunction. Neuropsychologia 27: 1329–1343

Eslinger PJ, Damasio AR . (1985): Severe disturbance of higher cognition after bilateral frontal lobe ablation: Patient EVR. Neurology 35: 1731–1741

Fishbein DH, Lozovsky D, Jaffe JH . (1988): Impulsivity, aggression, and neuroendocrine response to serotonergic stimulation in substance abusers. Biol Psychiatry 25: 1049–1066

Freedman M, Oscar-Berman M . (1996): Bilateral frontal lobe disease and selective delayed response deficits in humans. Behav Neurosci 100: 337–342

Fukui K, Nakajima T, Kariyama H, Kashiba A, Kato N, Tohyama I, Kimura H . (1989): Selective reduction of serotonin immunoreactivity in some forebrain regions of rats induced by acute methamphetamine treatment: Quantitative morphometric analysis by serotonin immunocytochemistry. Brain Res 482: 198–203

Gibb JW, Hanson GR, Johnson M . (1994): Neurochemical mechanisms of toxicity. In Cho AK, Segal DS (eds), Amphetamine and its Analogs. San Diego, Academic

Groenewegen HJ, Wright CI, Uylings HBM . (1997): The anatomical relationships of the prefrontal cortex with limbic structures and the basal ganglia. J Psychopharm 11: 99–106

Hotchkiss AJ, Gibb JW . (1980): Long-term effects of multiple doses of methamphetamine on tryptophan hydroxylase and tyrosine hydroxylase activity in rat brain. J Pharmacol Exp Ther 214: 257–262

Howell DC . (1987): Statistical Methods for Psychology, 2nd ed. Boston, Duxbury Press

Knowlton BJ, Mangels JA, Squire LR . (1996): A neostriatal habit learning system in humans. Science 273: 1399–1402

Koob GF, Bloom FE . (1988): Cellular and molecular mechanisms of drug dependence. Science 242: 715–723

Lacey JH, Evans CHD . (1986): The impulsivist: A multi-impulsive personality disorder. Br J Addiction 81: 641–649

Lange KW, Robbins TW, Marsden CD, James M, Owen AM, Paul GM . (1992): L-Dopa withdrawal in Parkinson's disease selectively impairs performance in tests sensitive to frontal lobe dysfunction. Psychopharmacology 107: 394–404

Linnoila M, Virkkunen M, Scheinin M, Nuutila A, Rimon R, Goodwin FK . (1983): Low cerebrospinal fluid 5-hydroxyindoleacetic acid concentration differentiates impulsive from nonimpulsive violent behavior. Life Sci 33: 2609–2614

Linnoila M, DeJong J, Virkkunen M . (1989): Family history of alcoholism in violent offenders and impulsive fire setters. Arch Gen Psychiatry 46: 613–616

McCown W . (1988): Multi-impulsive personality disorder and multiple substance abuse: Evidence from members of self-help groups. Br J Addiction 83: 431–432

McCown W . (1990): The effect of impulsivity and empathy on abstinence of poly-substance abusers: A prospective study. Br J Addiction 85: 635–637

Mansour A, Khachaturian H, Lewis ME, Akil H, Watson SJ . (1988): Anatomy of CNS opioid receptors. Trends Neurosci 11: 308–314

Melega WP, Quintana J, Raleigh MJ, Stout DB, Yu DC, Lin KP, Huang SC, Phelps ME . (1996): 6-[18F]fluoro-L-DOPA-PET studies show partial reversibility of long-term effects of chronic amphetamine in monkeys. Synapse 22: 63–69

Miller LA . (1992): Impulsivity, risk-taking, and the ability to synthesize fragmented information after frontal lobectomy. Neuropsychologia 30: 69–79

Milner B . (1964): Some effects of frontal lobectomy in man. In Warren JM, Akert K (eds), The Frontal Granular Cortex and Behavior. New York, McGraw-Hill

Nace EP, Saxon JJ, Shore N . (1983): A comparison of borderline and nonborderline alcoholic patients. Arch Gen Psychiatry 40: 54–56

Nelson HE . (1982): National Adult Reading Test (NART) Test Manual. Windsor (UK), NFER-Nelson

Owen AM, Roberts AC, Polkey CE, Sahakian BJ, Robbins TW . (1991): Extra-dimensional versus intra-dimensional set shifting performance following frontal lobe excision, temporal lobe excision or amygdalo-hippocampectomy in man. Neuropsychologia 29: 993–1006

Park S, Holzman PS . (1992): Schizophrenics show spatial working memory deficits. Arch Gen Psychiatry 49: 975–982

Piazza PV, Deminière J-M, Le Moal M, Simon H . (1989): Factors that predict individual vulnerability to amphetamine self-administration. Science 245: 1511–1513

Piazza PV, Maccari S, Deminière J-M, Le Moal M, Mormède P, Simon H . (1991): Corticosterone levels determine individual vulnerability to amphetamine self-administration. Proc Natl Acad Sci 88: 2088–2092

Piazza PV, Deroche V, Deminière J-M, Maccari S, Le Moal M, Simon H . (1993): Corticosterone in the range of stress-induced levels possesses reinforcing properties: Implications for sensation-seeking behaviors. Proc Natl Acad Sci 90: 11738–11742

Preston KL, Wagner GC, Schuster CR, Seiden LS . (1985): Long-term effects of repeated methylamphetamine administration on monoamine neurons in the rhesus monkey brain. Brain Res 338: 243–248

Raleigh M, McGuire M, Melega W, Cherry S, Huang S-C, Phelps M . (1996): Neural mechanisms supporting social decisions in simians. In Damasio AR (ed), Neurobiology of Decision-making. Berlin, Heidelberg, Springer-Verlag

Ricaurte GA, Schuster CR, Seiden LS . (1980): Long-term effects of repeated methylamphetamine administration on dopamine and serotonin neurons in the rat brain: A regional study. Brain Res 193: 153–163

Ricaurte GA, Schuster CR, Seiden LS . (1984): Further evidence that amphetamines produce long-lasting dopamine neurochemical deficits by destroying dopamine nerve fibers. Brain Res 303: 359–364

Robbins TW, Roberts AC, Owen AM, Sahakian BJ, Everitt BJ, Muir J, De Salvia M, Tovée M . (1994): Monoaminergic-dependent cognitive functions of the prefrontal cortex in monkeys and man. In Thierry AM (ed), Motor and Cognitive Functions of the Prefrontal Cortex. Berlin, Heidelberg, Springer-Verlag

Robinson TE, Becker JB . (1986): Enduring changes in brain and behavior produced by chronic amphetamine administration: A review and evaluation of animal models of amphetamine psychosis. Brain Res Rev 11: 157–198

Ryan LJ, Martone ME, Linder JC, Groves PM . (1988): Cocaine, in contrast to d-amphetamine, does not cause axonal terminal degeneration in neostriatum and agranular cortex of Long-Evans rats. Life Sci 43: 1403–1409

Ryan LJ, Linder JC, Martone ME, Groves PM . (1990): Histological and ultrastructural evidence that d-amphetamine causes degeneration in neostriatum and frontal cortex of rats. Brain Res 518: 67–77

Saver JL, Damasio AR . (1991): Preserved access and processing of social knowledge in a patient with acquired sociopathy due to ventromedial frontal damage. Neuropsychologia 29: 1241–1249

Sawaguchi T, Goldman-Rakic PS . (1991): D1 dopamine receptors in prefrontal cortex: Role in working memory. Science 252: 947–950

Seiden LS, Fischman MW, Schuster CR . (1975): Long-term methamphetamine induced changes in brain catecholamines in tolerant rhesus monkeys. Drug Alcohol Depend 1: 215–219

Seiden LS, Ricaurte GA . (1987): Neurotoxicity of methamphetamine and related drugs. In Meltzer H (ed), Psychopharmacology: The Third Generation of Progress. New York, Raven Press

Shallice T, Evans ME . (1978): The involvement of the frontal lobes in cognitive estimation. Cortex 14: 294–303

Thierry AM, Blanc G, Sobel A, Stinus L, Glowinski J . (1973): Dopamine terminals in the rat cortex. Science 182: 499–501

Villemagne V, Yuan J, Wong DF, Dannals RF, Hatzidimitriou G, Mathews WB, Hayden TR, Musachio J, McCann UD, Ricaurte GA . (1998): Brain dopamine neurotoxicity in baboons treated with doses of methamphetamine comparable to those recreationally abused by humans: Evidence from [11C]WIN-35,428 positron emission tomography studies and direct in vitro determinations. J Neurosci 18 (1): 419–433

Virkkunen M, Rawlings R, Tokola R, Poland RE, Guidotti A, Nemeroff C, Bissette G, Kalogeras K, Karonen SL, Linnoila M . (1994): CSF biochemistries, glucose metabolism, and diurnal activity rhythms in alcoholic, violent offenders, fire setters, and healthy volunteers. Arch Gen Psychiatry 51: 20–27

Volkow ND, Mullani N, Gould KL, Adler S, Krajewski K . (1988): Cerebral blood flow in chronic cocaine users: A study with positron emission tomography. Br J Psychiatry 152: 648–651

Volkow ND, Fowler JS, Wolf AP, Hitzemann R, Dewey SL, Bendriem B, Alpert R, Hoff A . (1991): Changes in brain glucose metabolism in cocaine dependence and withdrawal. Am J Psychiatry 148: 621–627

Volkow ND, Fowler JS, Wang GJ, Hitzemann R, Logan J, Schlyer DJ, Dewey SL, Wolf AP . (1993): Decreased dopamine D2 receptor availability is associated with reduced frontal metabolism in cocaine abusers. Synapse 14: 169–177

Volkow ND, Wang GJ, Fowler JS, Logan J, Gatley SJ, Hitzemann R, Chen AD, Dewey SL, Pappas N . (1997): Decreased striatal dopaminergic responsiveness in detoxified cocaine-dependent subjects. Nature 386: 830–833

Wagner GC, Ricaurte GA, Seiden LS, Schuster CR, Miller RJ, Westley J . (1980): Long-lasting depletions of striatal dopamine and loss of dopamine uptake sites following repeated administration of methamphetamine. Brain Res 181: 151–160

Wilson JM, Nobrega JN, Carroll ME, Niznik HB, Shannak K, Lac ST, Pristupa ZB, Dixon LM, Kish SJ . (1991): Heterogeneous subregional binding patterns of 3H-WIN 35,428 and 3H-GBR 12,935 are differentially regulated by chronic cocaine self-administration. J Neurosci 14: 2966–2979

Wilson JM, Kalasinsky KS, Levey AI, Bergeron C, Reiber G, Anthony RM, Schmunk GA, Shannak K, Haycock JW, Kish SJ . (1996a): Striatal dopamine nerve terminal markers in human chronic methamphetamine users. Nature Medicine 2: 699–703

Wilson JM, Levey AI, Bergeron C, Kalasinsky KS, Ang L, Peretti F, Adams VI, Smialek J, Anderson WR, Shannak K, Deck J, Niznik HB, Kish SJ . (1996b): Striatal dopamine, dopamine transporter, and vesicular monoamine transporter in chronic cocaine users. Ann Neurol 40: 428–439

Wise RA, Rompré PP . (1989): Brain dopamine and reward. Ann Rev Psychol 40: 191–225