Abstract

Boolean satisfiability1 (k-SAT) is one of the most studied optimization problems, as an efficient (that is, polynomial-time) solution to k-SAT (for k≥3) implies efficient solutions to a large number of hard optimization problems2,3. Here we propose a mapping of k-SAT into a deterministic continuous-time dynamical system with a unique correspondence between its attractors and the k-SAT solution clusters. We show that beyond a constraint density threshold, the analog trajectories become transiently chaotic4,5,6,7, and the boundaries between the basins of attraction8 of the solution clusters become fractal7,8,9, signalling the appearance of optimization hardness10. Analytical arguments and simulations indicate that the system always finds solutions for satisfiable formulae even in the frozen regimes of random 3-SAT (ref. 11) and of locked occupation problems12 (considered among the hardest algorithmic benchmarks), a property partly due to the system’s hyperbolic4,13 character. The system finds solutions in polynomial continuous time, but at the expense of exponential fluctuations in its energy function.


Main

Boolean satisfiability1 (k-SAT, k≥3) is the quintessential constraint-satisfaction problem, lying at the basis of many decision, scheduling, error-correction and computational applications. k-SAT is in NP (refs 1, 2, 3), that is, its solutions are efficiently (polynomial-time) checkable, but no efficient (polynomial-time) algorithms are known to compute those solutions. If such algorithms were found for k-SAT, all NP problems would be efficiently computable, because k-SAT is NP-complete2,3.

In k-SAT we are given N Boolean variables $\{x_1,\dots,x_N\}$, $x_i\in\{0,1\}$, and M clauses (constraints), each clause being the disjunction (OR, denoted $\vee$) of k variables or their negations ($\overline{x}_i$). One has to find an assignment of the variables such that all clauses (called collectively a formula) are satisfied (TRUE=‘1’). When the number of constraints is small, it is easy to find solutions, whereas for too many constraints it is easy to decide that the formula is unsatisfiable (UNSAT). Deciding satisfiability in the ‘intermediate range’, however, can be very hard: the worst-case complexity of all known algorithms for k-SAT is exponential in N.
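To make the definitions concrete, here is a minimal Python sketch, assuming a DIMACS-like signed-integer encoding of clauses (+i for $x_i$, −i for $\overline{x}_i$, 1-based indices); the encoding, the function names and the toy formula are illustrative, not taken from the paper. Checking a given assignment is cheap (linear in the size of the formula), whereas the brute-force search enumerates all $2^N$ assignments.

```python
from itertools import product

# Hypothetical encoding: a clause is a tuple of non-zero ints, +i for x_i and -i for NOT x_i
# (1-based variable indices, as in the DIMACS CNF convention).

def clause_satisfied(clause, x):
    """A clause (OR of literals) is TRUE if at least one of its literals is TRUE."""
    return any(x[abs(lit)] == (1 if lit > 0 else 0) for lit in clause)

def formula_satisfied(formula, x):
    """A formula is satisfied only if all of its clauses are (checkable in linear time)."""
    return all(clause_satisfied(c, x) for c in formula)

def brute_force_solutions(formula, n):
    """Enumerate all 2**n assignments: exponential in n."""
    solutions = []
    for bits in product((0, 1), repeat=n):
        x = {i + 1: b for i, b in enumerate(bits)}
        if formula_satisfied(formula, x):
            solutions.append(x)
    return solutions

formula = [(1, 2, -3), (-1, 3, 2), (-2, -3, 1)]  # toy 3-SAT formula, N=3, M=3
for x in brute_force_solutions(formula, 3):
    print(x)
```

Only the exhaustive search grows exponentially here; verifying a candidate stays cheap, which is exactly the NP property described above.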

Inspired by the mechanisms of information processing in biological systems, analog computing has received increasing interest from both theoretical14,15,16 and engineering communities17,18,19,20,21. Although the theoretical possibility of efficient computation using chaotic dynamical systems has been shown previously15, nonlinear dynamical systems theory has not been exploited for NP-complete problems, even though, as shown previously19,20,21, k-SAT can be formulated as a continuous global optimization problem19, and even cast as an analog dynamical system20,21.

Here we present a continuous-time dynamical system for k-SAT, with dynamics rather different from previous approaches. Let us introduce the continuous variables19 $s_i\in[-1,1]$, such that $s_i=-1$ if the $i$th variable ($x_i$) is FALSE and $s_i=1$ if it is TRUE. We define $c_{mi}=1$ for the direct form ($x_i$), $c_{mi}=-1$ for the negated form ($\overline{x}_i$) and $c_{mi}=0$ for the absence of the $i$th variable from clause $m$. Defining the constraint function

$$K_m(s)=2^{-k}\prod_{i=1}^{N}\left(1-c_{mi}s_i\right)$$

corresponding to clause $m$, we have $K_m\in[0,1]$, and $K_m=0$ if and only if clause $m$ is satisfied. The goal is to find a solution $s^*$ with $s_i^*\in\{-1,1\}$ to $E(s^*)=0$, where $E$ is the energy function $E(s)=\sum_{m=1}^{M}K_m^2(s)$. If such an $s^*$ exists, it is a global minimum of $E$ and a solution to the k-SAT problem. However, finding $s^*$ by direct minimization of $E(s)$ will typically fail owing to non-solution attractors trapping the search dynamics. To avoid such traps, we define a modified energy function $V(s,a)=\sum_{m=1}^{M}a_mK_m^2(s)$, using auxiliary variables $a_m\in(0,\infty)$ similar to Lagrange multipliers20,21. Let us denote by $\mathcal{H}_N$ the continuous domain $[-1,1]^N$. Its boundary is the $N$-hypercube $\mathcal{Q}_N$ with vertex set $\mathcal{V}_N=\{-1,1\}^N$. The set of solutions for a given k-SAT formula, called the solution space, occupies a subset of $\mathcal{V}_N$. Solution clusters are formed by solutions that can be connected through single-variable flips, always staying within satisfying assignments22. Clearly, $V\ge0$ in $\Omega=\mathcal{H}_N\times(0,\infty)^M$, and for any $a\in(0,\infty)^M$ a vertex $s\in\mathcal{V}_N$ satisfies $V(s,a)=0$ if and only if it is a k-SAT solution. We now introduce a continuous-time dynamical system on $\Omega$ through:

$$\frac{ds_i}{dt}=-\left(\nabla_sV(s,a)\right)_i=\sum_{m=1}^{M}2a_mc_{mi}K_{mi}(s)K_m(s),\qquad i=1,\dots,N,\tag{1a}$$

$$\frac{da_m}{dt}=a_mK_m(s),\qquad m=1,\dots,M,\tag{1b}$$

where $\nabla_s$ is the gradient operator with respect to $s$ and $K_{mi}=K_m/(1-c_{mi}s_i)$. The initial conditions for $s$ are arbitrary, $s(0)\in\mathcal{H}_N$; however, for $a$ they have to be strictly positive, $a_m(0)>0$ (for example, $a_m(0)=1$). The k-SAT solutions $s^*\in\mathcal{V}_N$ are fixed points of (1), for any $a\in(0,\infty)^M$. The k-SAT solution clusters are spanning, piecewise compact, connected sets in $\mathcal{Q}_N$, and every point in them is a fixed point of (1) (Supplementary Section SA). System (1) has a number of key properties (see Supplementary Information). First, the dynamics in $s$ stays confined to $\mathcal{H}_N$. Second, the k-SAT solutions $s^*\in\mathcal{V}_N$ are attractive fixed points of (1). In particular, every point $s$ from the orthant of a k-SAT solution $s^*$ with the property $|s|^2\ge N-1+(k-1)^2/(k+1)^2$ is guaranteed to flow into the attractor corresponding to $s^*$. Third, there are no limit cycles. Fourth, for satisfiable formulae the only fixed-point attractors of the dynamics are the global minima of $V$, with $V=0$. Note that, in principle, the projection of the dynamics onto $\mathcal{H}_N$ could be stuck at some point $s$ while $da/dt\neq0$ indefinitely. This does not happen here, as shown in Supplementary Section SE. Moreover, analytical arguments supported by simulations indicate that the trajectory will leave any domain that does not contain solutions (see the discussion in Supplementary Section SE1). Note that the constraint functions (hence their satisfiability) depend directly only on the location of the trajectory in $\mathcal{H}_N$, $K_m=K_m(s)$, and not on the auxiliary variables. The dynamics in $a$-space is simple expansion, and for this reason the features of the full phase space $\Omega$ lie within its projection onto $\mathcal{H}_N$. One can actually eliminate the auxiliary variables from the equations entirely by first solving (1b) to give $a_m(t)=a_m(0)\exp\left(\int_0^tK_m(s(\tau))\,d\tau\right)$ and then inserting it into (1a).
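The equations of system (1) can be sketched in pure Python as follows. This is a minimal illustration under stated assumptions, not the authors' solver: it uses forward Euler with a fixed step (the Methods use an adaptive fifth-order scheme), clips $s$ to $[-1,1]$ as a purely numerical safeguard, and applies a hypothetical stopping rule that declares success once the vertex of the current orthant, $\mathrm{sign}(s)$, satisfies every clause. The matrix `c` holds the $c_{mi}$ defined above, and $K_{mi}$ is computed as the product with factor $i$ omitted, which equals $K_m/(1-c_{mi}s_i)$ wherever that division is defined. All function names are illustrative.

```python
def K(c_m, s, k):
    """Constraint function K_m(s) = 2**-k * prod_i (1 - c_mi * s_i)."""
    prod = 1.0
    for cmi, si in zip(c_m, s):
        prod *= 1.0 - cmi * si
    return prod / 2**k

def K_omit(c_m, s, k, i):
    """K_mi: the same product with factor i left out (= K_m / (1 - c_mi s_i) when defined)."""
    prod = 1.0
    for j, (cmj, sj) in enumerate(zip(c_m, s)):
        if j != i:
            prod *= 1.0 - cmj * sj
    return prod / 2**k

def V(c, s, a, k):
    """Modified energy V(s, a) = sum_m a_m K_m(s)**2."""
    return sum(a_m * K(c_m, s, k) ** 2 for c_m, a_m in zip(c, a))

def rhs(c, s, a, k):
    """Right-hand sides of (1a) and (1b)."""
    Ks = [K(c_m, s, k) for c_m in c]
    ds = [sum(2 * a[m] * c[m][i] * K_omit(c[m], s, k, i) * Ks[m] for m in range(len(c)))
          for i in range(len(s))]
    da = [a[m] * Ks[m] for m in range(len(c))]
    return ds, da

def orthant_satisfies(c, s, k):
    """Hypothetical stopping rule: the vertex sign(s) of the current orthant has every K_m = 0."""
    vertex = [1.0 if si > 0 else -1.0 for si in s]
    return all(K(c_m, vertex, k) == 0.0 for c_m in c)

def integrate(c, s0, a0, k, dt=0.01, t_max=100.0):
    """Forward-Euler sketch of (1); returns the final (s, a, t)."""
    s, a, t = list(s0), list(a0), 0.0
    while t < t_max and not orthant_satisfies(c, s, k):
        ds, da = rhs(c, s, a, k)
        s = [min(1.0, max(-1.0, si + dt * d)) for si, d in zip(s, ds)]  # numerical safeguard
        a = [am + dt * d for am, d in zip(a, da)]  # a_m only grows, since K_m >= 0
        t += dt
    return s, a, t

# Toy 3-SAT formula: (x1 OR x2 OR NOT x3), (NOT x1 OR x2 OR x3), (x1 OR NOT x2 OR NOT x3)
c = [[1, 1, -1], [-1, 1, 1], [1, -1, -1]]
s, a, t = integrate(c, s0=[-0.1, 0.2, 0.3], a0=[1.0, 1.0, 1.0], k=3)
print([1 if si > 0 else 0 for si in s], round(t, 2))
```

A finite-difference comparison of `rhs` against $-\partial V/\partial s_i$ is a quick way to confirm that (1a) is indeed the negative gradient of $V$.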

Another fundamental feature of (1) is that it is deterministic: for a given formula f, any initial condition generates a unique trajectory, and any set from  has a unique pre-image arbitrarily back in time. Hence, the characteristics of the solution space are reflected in the properties of the invariant sets7 of the dynamics (1) within the hypercube

has a unique pre-image arbitrarily back in time. Hence, the characteristics of the solution space are reflected in the properties of the invariant sets7 of the dynamics (1) within the hypercube  . The deterministic nature of (1) allows us to define basins of attractions of solution clusters by colouring every point in

. The deterministic nature of (1) allows us to define basins of attractions of solution clusters by colouring every point in  according to which cluster the trajectory flows to, if started from there. These basins fill

according to which cluster the trajectory flows to, if started from there. These basins fill  up to a set of zero (Lebesgue) measure, which forms the basin boundary7, from where the dynamics (by definition) cannot flow to any of the attractors. A k-SAT formula fcan be represented as a hypergraph

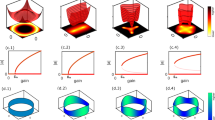

up to a set of zero (Lebesgue) measure, which forms the basin boundary7, from where the dynamics (by definition) cannot flow to any of the attractors. A k-SAT formula fcan be represented as a hypergraph  (or equivalently, a factor graph) in which nodes are variables and hyperedges are clauses connecting the nodes/variables in the clause. Pure literals are those that participate in one or more clauses but always in the same form (direct or negated); hence, they can always be chosen such as to satisfy those clauses. The core of G(f) is the subgraph left after sequentially removing all of the hyperedges having pure literals23. For simple formulae (such as those without a core), the dynamics of (1) is laminar flow and the basin boundaries form smooth, non-fractal sets (Figs 1a,c and 2, top two rows). Adding more constraints

(or equivalently, a factor graph) in which nodes are variables and hyperedges are clauses connecting the nodes/variables in the clause. Pure literals are those that participate in one or more clauses but always in the same form (direct or negated); hence, they can always be chosen such as to satisfy those clauses. The core of G(f) is the subgraph left after sequentially removing all of the hyperedges having pure literals23. For simple formulae (such as those without a core), the dynamics of (1) is laminar flow and the basin boundaries form smooth, non-fractal sets (Figs 1a,c and 2, top two rows). Adding more constraints  develops a core, the spin equations (1a) become mutually coupled, and the trajectories may become chaotic (Fig. 1b, Supplementary Section SF and Fig. S8) and the basin boundaries fractal7,8,9 (Figs 1d, 2 and Supplementary Fig. S4). Therefore, as the constraint density α=M/N is increased within predefined ensembles of formulae (random k-SAT, occupation problems, k-XORSAT and so on) a sharp change to chaotic behaviour is expected at a chaotic transition point αχ, where a chaotic core appears with non-zero statistical weight in the ensemble as

develops a core, the spin equations (1a) become mutually coupled, and the trajectories may become chaotic (Fig. 1b, Supplementary Section SF and Fig. S8) and the basin boundaries fractal7,8,9 (Figs 1d, 2 and Supplementary Fig. S4). Therefore, as the constraint density α=M/N is increased within predefined ensembles of formulae (random k-SAT, occupation problems, k-XORSAT and so on) a sharp change to chaotic behaviour is expected at a chaotic transition point αχ, where a chaotic core appears with non-zero statistical weight in the ensemble as  . As an example, let us consider 3-XORSAT. In this case, owing to its inherently linear nature, it is actually better to work directly with the parity check equations as constraints, instead of their conjunctive normal form. The chaotic core here is a small finite hypergraph, and thus αχ coincides with the so-called dynamical transition point αd computed exactly in ref. 24 (see Supplementary Section SG and Fig. S4). Note, a core can be non-chaotic, and thus the existence of a core is only a necessary condition for the appearance of chaos and in general the two transitions might not coincide. Further increasing the number of constraints (within any formula ensemble) unsatisfiability appears at the threshold value αs>αχ beyond which almost all formulae are unsatisfiable (UNSAT regime)11,12,22,24,25,26,27,28. The closer α is to αs, the harder it is to find solutions, and beyond the so-called freezing transition point αf<αs (called the frozen regime) all known algorithms take exponentially long times or simply fail to find solutions11,12. A variable is frozen if it takes on the same value for all solutions within a cluster, and a cluster is frozen if an extensive number of its variables are frozen. In the frozen regime all clusters are frozen and they are also far apart (

. As an example, let us consider 3-XORSAT. In this case, owing to its inherently linear nature, it is actually better to work directly with the parity check equations as constraints, instead of their conjunctive normal form. The chaotic core here is a small finite hypergraph, and thus αχ coincides with the so-called dynamical transition point αd computed exactly in ref. 24 (see Supplementary Section SG and Fig. S4). Note, a core can be non-chaotic, and thus the existence of a core is only a necessary condition for the appearance of chaos and in general the two transitions might not coincide. Further increasing the number of constraints (within any formula ensemble) unsatisfiability appears at the threshold value αs>αχ beyond which almost all formulae are unsatisfiable (UNSAT regime)11,12,22,24,25,26,27,28. The closer α is to αs, the harder it is to find solutions, and beyond the so-called freezing transition point αf<αs (called the frozen regime) all known algorithms take exponentially long times or simply fail to find solutions11,12. A variable is frozen if it takes on the same value for all solutions within a cluster, and a cluster is frozen if an extensive number of its variables are frozen. In the frozen regime all clusters are frozen and they are also far apart ( Hamming distance)11,12. For random 3-SAT (clauses chosen uniformly at random for fixed α)

Hamming distance)11,12. For random 3-SAT (clauses chosen uniformly at random for fixed α)  (ref. 27),

(ref. 27),  (ref. 28) and all known local search algorithms become exponential or fail beyond α=4.21 (ref. 29), and survey-propagation25-based algorithms fail beyond α=4.25 (ref. 28). As the frozen regime is very thin in random 3-SAT, the so-called locked occupation problems (LOPs) have been introduced12. In LOPs all clusters are formed by exactly one solution; hence, they are completely frozen and the frozen regime extends from the clustering (dynamical) transition point ℓdto the satisfiability threshold ℓs, and thus it is very wide12. An example LOP is random ‘+1-in-3-SAT’ (ref. 12), made of constraints that have no negated variables and a constraint is satisfied only if exactly one of its variables is 1 (TRUE). In +1-in-3-SAT

(ref. 28) and all known local search algorithms become exponential or fail beyond α=4.21 (ref. 29), and survey-propagation25-based algorithms fail beyond α=4.25 (ref. 28). As the frozen regime is very thin in random 3-SAT, the so-called locked occupation problems (LOPs) have been introduced12. In LOPs all clusters are formed by exactly one solution; hence, they are completely frozen and the frozen regime extends from the clustering (dynamical) transition point ℓdto the satisfiability threshold ℓs, and thus it is very wide12. An example LOP is random ‘+1-in-3-SAT’ (ref. 12), made of constraints that have no negated variables and a constraint is satisfied only if exactly one of its variables is 1 (TRUE). In +1-in-3-SAT  ,

,  and beyond ℓd all known algorithms have exponential search times or fail to find solutions (here ℓ=3M/N).

and beyond ℓd all known algorithms have exponential search times or fail to find solutions (here ℓ=3M/N).
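The core and the formula ensembles described above can be sketched in a few lines of Python. Assumptions, labelled as such: clauses use a signed-integer encoding (+i for $x_i$, −i for $\overline{x}_i$); the random 3-SAT generator draws $M=\mathrm{round}(\alpha N)$ clauses on 3 distinct, uniformly chosen variables, negating each literal independently with probability 1/2 (the usual convention, not a detail stated in the text); and all function names are hypothetical. `core` implements the sequential removal of hyperedges containing pure literals.

```python
import random

# Clauses use the signed-integer convention: +i for x_i, -i for NOT x_i (1-based).

def random_3sat(n, alpha, seed=None):
    """Random 3-SAT: M = round(alpha * n) clauses, each on 3 distinct variables chosen
    uniformly at random, each literal negated independently with probability 1/2."""
    rng = random.Random(seed)
    m = round(alpha * n)
    return [tuple(v if rng.random() < 0.5 else -v for v in rng.sample(range(1, n + 1), 3))
            for _ in range(m)]

def core(formula):
    """Repeatedly delete every clause that contains a pure literal until none is left;
    the remaining clauses (hyperedges) form the core."""
    clauses = list(formula)
    while True:
        signs = {}
        for clause in clauses:
            for lit in clause:
                signs.setdefault(abs(lit), set()).add(lit > 0)
        pure = {v for v, sg in signs.items() if len(sg) == 1}
        kept = [cl for cl in clauses if not any(abs(lit) in pure for lit in cl)]
        if len(kept) == len(clauses):
            return kept
        clauses = kept

def plus_one_in_three_ok(clause, x):
    """+1-in-3-SAT constraint (no negations): satisfied iff exactly one variable is 1 (TRUE)."""
    return sum(x[v] for v in clause) == 1

f = random_3sat(n=200, alpha=4.25, seed=0)
print(len(f), len(core(f)))
```

Removing a clause can make further literals pure, which is why the peeling loop repeats until no clause changes.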

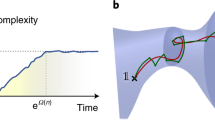

a,b, Five closely started sample trajectories projected onto $(s_1,s_2,s_3)$, for a 3-SAT formula with N=200, α=3 (a) and for a hard formula with N=200, α=4.25 (b). The colour indicates the energy E (colour bar) at a given point of the trajectory. Whereas for easy formulae the trajectories exhibit laminar flow, for hard formulae they quickly separate, showing chaotic evolution. c,d, Taking a small 3-XORSAT instance with N=15 (see Supplementary Section SG), we fix a random initial condition for all $s_i$ except $s_1$ and $s_2$, which are varied on a 400×400 grid, and we colour each point according to the solution it flows to, for γ=0.6 (c; instance shown in Supplementary Fig. S4e) and for γ=0.8 (d; instance shown in Supplementary Fig. S4f).

For a random 3-SAT instance with N=50 we vary α by successively adding new constraints. Fixing a random initial condition for $s_i$, $i\ge3$, we vary only $s_1$ and $s_2$ on a 400×400 grid, and we colour each point according to the solution (first column) or solution cluster (second column) it flows to. Each colour in a given column represents a solution or a solution cluster, respectively; colours in different columns are independent. The third column shows the analog search time t needed to find a solution (see colour bar) starting from the corresponding grid point. Maps are presented for α=3.5, 3.7, 3.9, 4.1, 4.16, 4.2 and 4.24. Easy formulae are characterized by smooth basin boundaries and short search times. Note that only the solutions (and clusters) that reveal themselves in the $(s_1,s_2)$ plane are visible; others might not be seen. For hard formulae the boundaries and the search-time maps become fractal.

As chaos is present for satisfiable formulae, that is, when system (1) has attracting fixed points, it is necessarily of transient type. Transient chaos4,5,6,7 is ubiquitous in systems with many degrees of freedom such as fluid turbulence30. It appears as the result of homoclinic/heteroclinic intersections of the invariant manifolds of hyperbolic (unstable) fixed points of (1) lying within the basin boundary7,8,9, leading to complex (fractal) foliations of the phase space (see Supplementary Section SF). We observed the prevalence of transient chaos in the whole region αχ<α<αs for all of the problem classes we studied. Interestingly, the velocity fluctuations of trajectories in the chaotic regime are qualitatively similar to those of fluid parcels in turbulent flows as shown in Supplementary Section SK. Our findings indicate that chaotic behaviour may be a generic feature of algorithms searching for solutions in hard optimization problems, corroborating previous observations10 using a heuristic algorithm based on iterated maps.

In the following we show results on random 3-SAT and +1-in-3-SAT formulae in the frozen regime; however, the same conclusions hold for the other ensembles that we tested. To investigate the complexity of computation by the flow (1), we monitored the fraction of problems $p(t)$ not solved by continuous time $t$, as a function of $N$ and $\alpha$. Figure 3a,c shows that even in the frozen phase, the fraction of problems unsolved by time $t$ decays exponentially with $t$, that is, by a law $p(t)=r\,e^{-\lambda(N)t}$. The decay rate obeys $\lambda(N)=bN^{-\beta}$, with $\beta\approx1.6$ in both cases (Fig. 3b,d). From these two equations, the continuous time $t(p,N)$ needed to solve a fixed $(1-p)$th fraction of random formulae (or, equivalently, to miss solving the $p$th fraction of them) is

$$t(p,N)=b^{-1}N^{\beta}\ln\frac{r}{p},\tag{2}$$

indicating that the continuous time needed to find solutions scales as a power law with $N$. Equation (2) also implies power-law scaling for almost all hard instances in the $N\to\infty$ limit (Supplementary Section SH).
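Equation (2) follows by solving $p=r\,e^{-\lambda(N)t}$ for $t$ and substituting $\lambda(N)=bN^{-\beta}$. The sketch below (standard-library Python) fits $b$ and $\beta$ by linear least squares in log–log coordinates and then evaluates Eq. (2). The function names and the synthetic decay rates are illustrative assumptions, not the authors' data or fitting procedure.

```python
import math

def fit_power_law(ns, rates):
    """Least-squares fit of log(lambda) = log(b) - beta * log(N); returns (b, beta)."""
    xs = [math.log(n) for n in ns]
    ys = [math.log(r) for r in rates]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return math.exp(my - slope * mx), -slope

def solve_time(p, n, r, b, beta):
    """Eq. (2): continuous time by which all but a fraction p of the formulae are solved."""
    return n ** beta * math.log(r / p) / b

# Synthetic decay rates generated from lambda(N) = 2 N**-1.6 (illustrative, not measured data).
ns = [20, 40, 80, 160]
rates = [2.0 * n ** -1.6 for n in ns]
b, beta = fit_power_law(ns, rates)
print(b, beta)
print(solve_time(p=0.01, n=100, r=1.0, b=b, beta=beta))
```

At fixed $p$, doubling $N$ multiplies $t(p,N)$ by $2^{\beta}$, the signature of power-law rather than exponential scaling.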

The length in $\mathcal{H}_N$ of the corresponding continuous trajectories also scales as a power law with $N$ (Supplementary Fig. S7b and Section SJ). However, this does not mean that the algorithm itself has polynomial cost, as the energy function $V$ can have exponentially large fluctuations. Because the numerical integration runs on a digital machine, it approximates the continuous trajectory with discrete points. Monitoring the fraction of formulae left unsolved as a function of the number of discretization steps $n_{\rm step}$ in the frozen phase, we find exponential behaviour for $n_{\rm step}(p,N)$ (Supplementary Sections SI, SJ and Fig. S6). The difference between the continuous- and discrete-time complexities is due to the wildly fluctuating nature of the chaotic trajectories in the frozen phase (see Fig. 1b and Methods). Compounding this, we also observe the appearance of the Wada property7,8 in the basin boundaries (Fig. 4). A fractal basin boundary has the Wada property if its points are simultaneously on the boundary of at least three colours/basins. (An amusing method that creates such sets uses four Christmas ball ornaments7.) Although the Wada property does not affect the true (mathematical) analog trajectories, owing to numerical errors it may switch the numerical trajectories between basins. As the clusters are far apart, the switched trajectory will flow towards another cluster into a practically opposing region of $\mathcal{H}_N$ until it may come close again to the basin boundary, and so on, partially randomizing the trajectory in $\mathcal{H}_N$.

a, The fraction of problems $p(t)$ not yet solved by continuous time $t$ for 3-SAT at α=4.25, for N=20, 30, 40, 50, 60, 80, 100, 125 and 150 (colours). Averages were taken over $10^5$ instances for each N. For each instance the dynamics was started from one random initial condition. Black continuous lines show the decay $p(t)=r\exp(-\lambda(N)t)$. b, The decay rate follows $\lambda(N)=bN^{-\beta}$, with β≈1.66. c, The fraction of problems $p(t)$ unsolved by time $t$ for +1-in-3-SAT at ℓ=2.34, for N=20, 25, 30, 35, 40, 50, 60, 70 and 80. For each instance the dynamics was started in parallel from 10 random initial conditions; averages were taken over $10^4$ instances for each N. Black continuous lines show the same exponential decay as in a. d, The decay rate shows the same behaviour as in b, with exponent β≈1.68.

Basin boundaries are shown for +1-in-3-SAT for an instance with N=30 and ℓ=2.28. Fixing a random initial condition for all $s_i$, $i\ge3$, we vary only $s_1$ and $s_2$ on a 200×400 grid and colour the points according to which of three different solutions they flow to. Successive magnifications illustrate the Wada property: the points on the basin boundaries are simultaneously on the boundary of all three basins, implying that large enough magnifications will contain all three colours (although the blue–green boundary seems void of red in the third panel, panels four and five show that red is actually present).

We conjecture that the power-law scaling of the continuous search times (2) is due in part to a generic property of the dynamical system (1), namely that it is hyperbolic4,6,13 or near-hyperbolic. It has been shown that for hyperbolic systems the trajectories escape from regions far away from the attractors to the attractors at an exponential rate, for almost all initial conditions4,6,13. That is, the fraction of trajectories still searching for a solution after time $t$ decays as $e^{-\kappa t}$ (Supplementary Fig. S9), where κ is the escape rate. Thus, $\kappa^{-1}$ can be considered a measure of hardness for a given formula. When taken over an ensemble at a given α, this property generates the exponential decay of $p(t)$ with an average escape rate λ.
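Given a sample of escape times (the continuous times at which trajectories started from different initial conditions reach a solution), κ and the hardness measure $\kappa^{-1}$ can be estimated. A minimal sketch, assuming the escape times are exactly exponentially distributed (no initial transient), so that the maximum-likelihood estimate is $\hat\kappa=n/\sum_j t_j$; this estimator, the function names and the synthetic data are our assumptions, not the procedure behind Supplementary Fig. S9.

```python
import math
import random

def escape_rate_mle(escape_times):
    """Maximum-likelihood estimate of kappa for exponentially distributed escape times."""
    return len(escape_times) / sum(escape_times)

def survival_fraction(escape_times, t):
    """Empirical fraction of trajectories still searching (not yet escaped) at time t."""
    return sum(1 for te in escape_times if te > t) / len(escape_times)

def model_survival(kappa, t):
    """Hyperbolic-escape prediction: the fraction still searching decays as exp(-kappa t)."""
    return math.exp(-kappa * t)

# Synthetic escape times drawn with kappa = 0.5 (illustrative only, not simulation data).
rng = random.Random(0)
times = [rng.expovariate(0.5) for _ in range(20000)]
kappa = escape_rate_mle(times)
print(kappa, 1 / kappa)  # estimated escape rate and the corresponding hardness measure
```

With real escape times, a transient before the exponential regime would bias this estimator; fitting the slope of $\ln$ of the survival fraction over a late time window is a common alternative.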

The form of the energy function V incorporates the influence of all of the clauses at all times, and in this sense (1) is a non-local search algorithm. As shown before, the auxiliary variables can be eliminated; however, they give a convenient interpretation of the dynamics. Namely, one can think of them as providing extra dimensions along which the trajectories escape from local wells, and their form (1b) provides positive feedback that guarantees their escape. Clearly, these equations are not unique, and other forms based on the same principles may work just as well.

Methods

To simulate (1), we use a fifth-order adaptive Cash–Karp Runge–Kutta method with monitoring of the local truncation error to ensure accuracy. To keep the numerical trajectory within a tube of small, preset thickness around the true analog trajectory in $\Omega$ (Supplementary Fig. S5), the Runge–Kutta algorithm occasionally carries out an exponentially large number of discretization steps $n_{\rm step}$. However, this happens only for hard formulae, when the analog trajectory has wild, chaotic fluctuations. For easy formulae, both $p(t)$ and $p(n_{\rm step})$ decay exponentially, as shown in Supplementary Fig. S6a, inset.
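For reference, here is a minimal sketch of an adaptive Cash–Karp Runge–Kutta integrator of the kind named above, using the standard Cash–Karp coefficients: the fifth-order solution is propagated, and its difference from the embedded fourth-order solution serves as the local truncation-error estimate. The absolute tolerance, the step-size controller (safety factor 0.9, step changes limited to between 0.1× and 5×) and the function names are assumptions; the paper does not specify these settings. Counting accepted and rejected steps gives a discrete cost analogous to $n_{\rm step}$.

```python
import math

# Cash-Karp coefficients (standard tableau, e.g. as tabulated in Numerical Recipes).
A = [[],
     [1/5],
     [3/40, 9/40],
     [3/10, -9/10, 6/5],
     [-11/54, 5/2, -70/27, 35/27],
     [1631/55296, 175/512, 575/13824, 44275/110592, 253/4096]]
C = [0.0, 1/5, 3/10, 3/5, 1.0, 7/8]
B5 = [37/378, 0.0, 250/621, 125/594, 0.0, 512/1771]               # fifth-order weights
B4 = [2825/27648, 0.0, 18575/48384, 13525/55296, 277/14336, 1/4]  # embedded fourth order

def cash_karp_step(f, t, y, h):
    """One step; returns the fifth-order update and the local error estimate y5 - y4."""
    ks = []
    for i in range(6):
        yi = [yj + h * sum(A[i][q] * ks[q][j] for q in range(i)) for j, yj in enumerate(y)]
        ks.append(f(t + C[i] * h, yi))
    y5 = [yj + h * sum(B5[q] * ks[q][j] for q in range(6)) for j, yj in enumerate(y)]
    y4 = [yj + h * sum(B4[q] * ks[q][j] for q in range(6)) for j, yj in enumerate(y)]
    return y5, [u - v for u, v in zip(y5, y4)]

def integrate_adaptive(f, t0, y0, t1, tol=1e-8, h=1e-2):
    """Integrate dy/dt = f(t, y) from t0 to t1, accepting a step only if max|error| <= tol.
    Returns (y(t1), accepted steps, rejected steps)."""
    t, y, accepted, rejected = t0, list(y0), 0, 0
    while t < t1:
        h = min(h, t1 - t)
        y_new, err = cash_karp_step(f, t, y, h)
        err_max = max(abs(e) for e in err) or 1e-300  # avoid division by zero
        if err_max <= tol:
            t, y, accepted = t + h, y_new, accepted + 1
        else:
            rejected += 1
        # Classic controller: safety factor 0.9, exponent 1/5, change limited to [0.1x, 5x].
        h *= min(5.0, max(0.1, 0.9 * (tol / err_max) ** 0.2))
    return y, accepted, rejected

y, accepted, rejected = integrate_adaptive(lambda t, y: [-y[0]], 0.0, [1.0], 1.0)
print(y[0], math.exp(-1.0), accepted, rejected)
```

Applied to system (1), `f` would return the concatenated right-hand sides of (1a) and (1b); along chaotic trajectories the controller shrinks `h` repeatedly, which is how the step count can grow much faster than the continuous time.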

References

Cook, S. in Proc. Third Ann. Symp. Theory of Computing 151–158 (ACM, 1971).

Fortnow, L. The status of the P versus NP problem. Commun. ACM 52, 78–86 (2009).

Garey, M. R. & Johnson, D. S. Computers and Intractability; A Guide to the Theory of NP-Completeness (W. H. Freeman, 1990).

Kadanoff, L. P. & Tang, C. Escape from strange repellers. Proc. Natl Acad. Sci. 81, 1276–1279 (1984).

Tél, T. & Lai, Y-C. Chaotic transients in spatially extended systems. Phys. Rep. 460, 245–275 (2008).

Lai, Y-C. & Tél, T. Transient Chaos: Complex Dynamics on Finite-Time Scales (Springer, 2011).

Ott, E. Chaos in Dynamical Systems 2nd edn (Cambridge Univ. Press, 2002).

Nusse, H. E. & Yorke, J. A. Basins of attraction. Science 271, 1376–1380 (1996).

Grebogi, C., Ott, E. & Yorke, J. A. Basin boundary metamorphoses: Changes in accessible boundary orbits. Physica D 24, 243–262 (1987).

Elser, V., Rankenburg, I. & Thibault, P. Searching with iterated maps. Proc. Natl Acad. Sci. 104, 418–423 (2007).

Achlioptas, D. & Ricci-Tersenghi, F. Random formulas have frozen variables. SIAM J. Comput. 39, 260–280 (2009).

Zdeborová, L. & Mézard, M. Locked constraint satisfaction problems. Phys. Rev. Lett. 101, 078702 (2008).

Cvitanović, P., Artuso, R., Mainieri, R., Tanner, G. & Vattay, G. Chaos: Classical and Quantum (Niels Bohr Institute, 2010) ChaosBook.org/version13.

Branicky, M. IEEE Workshop on Physics and Computation 265–274 (IEEE Computer Society Press, 1994).

Siegelmann, H. T. Computation beyond the Turing limit. Science 268, 545–548 (1995).

Moore, C. Recursion theory on the reals and continuous-time computation. Theor. Comput. Sci. 162, 23–44 (1996).

Liu, S-C., Kramer, J., Indiveri, G., Delbruck, T. & Douglas, R. Analog VLSI: Circuits and Principles (MIT Press, 2002).

Chua, L. O. & Roska, T. Cellular Neural Networks and Visual Computing: Foundations and Applications (Cambridge Univ. Press, 2005).

Gu, J., Gu, Q. & Du, D. On optimizing the satisfiability (SAT) problem. J. Comput. Sci. Technol. 14, 1–17 (1999).

Nagamatu, M. & Yanaru, T. On the stability of Lagrange programming networks for satisfiability problems of propositional calculus. Neurocomputing 13, 119–133 (1996).

Wah, B. W. & Chang, Y-J. Trace-based methods for solving nonlinear global optimization and satisfiability problems. J. Glob. Opt. 10, 107–141 (1997).

Achlioptas, D., Coja-Oghlan, A. & Ricci-Tersenghi, F. On the solution-space geometry of constraint satisfaction problems. Random Struct. Algorithms 38, 251–268 (2011).

Molloy, M. Cores in random hypergraphs and Boolean formulas. Random Struct. Algorithms 27, 124–135 (2005).

Mézard, M., Ricci-Tersenghi, F. & Zecchina, R. Two solutions to diluted p-spin models and XORSAT problems. J. Stat. Phys. 111, 505–533 (2003).

Mézard, M., Parisi, G. & Zecchina, R. Analytic and algorithmic solution of random satisfiability problems. Science 297, 812–815 (2002).

Achlioptas, D., Naor, A. & Peres, Y. Rigorous location of phase transitions in hard optimization problems. Nature 435, 759–764 (2005).

Mertens, S., Mézard, M. & Zecchina, R. Threshold values of random k-SAT from the cavity method. Random Struct. Algorithms 28, 340–373 (2006).

Parisi, G. Some remarks on the survey decimation algorithm for k-satisfiability. http://arxiv.org/abs/cs/0301015 (2003).

Seitz, S., Alava, M. & Orponen, P. Focused local search for random 3-satisfiability. J. Stat. Mech. P06006 (2005).

Hof, B., de Lozar, A., Kuik, D. J. & Westerweel, J. Repeller or attractor? Selecting the dynamical model for the onset of turbulence in pipe flow. Phys. Rev. Lett. 101, 214501 (2008).

Acknowledgements

We thank T. Tél and L. Lovász for valuable discussions and for a critical reading of the manuscript.

Author information


Contributions

M.E-R. and Z.T. conceived and designed the research and contributed analysis tools equally. M.E-R. carried out all simulations, collected and analysed all of the data and Z.T. wrote the paper.


Ethics declarations

Competing interests

The authors declare no competing financial interests.

Supplementary information

Supplementary Information

Supplementary Information (PDF 2565 kb)


About this article

Cite this article

Ercsey-Ravasz, M., Toroczkai, Z. Optimization hardness as transient chaos in an analog approach to constraint satisfaction. Nature Phys 7, 966–970 (2011). https://doi.org/10.1038/nphys2105


DOI: https://doi.org/10.1038/nphys2105
