Abstract

Recently it has been shown that the control energy required to control a dynamical complex network is prohibitively large when there are only a few control inputs. Most methods to reduce the control energy have focused on where, in the network, to place additional control inputs. Here, in contrast, we show that by controlling the states of a subset of the nodes of a network, rather than the state of every node, while holding the number of control signals constant, the energy required to control a portion of the network can be reduced substantially. The energy requirements decay exponentially as the number of target nodes is reduced, suggesting that large networks can be controlled by a relatively small number of inputs as long as the target set is appropriately sized. We validate our conclusions in model and real networks to arrive at an energy scaling law to better design control objectives regardless of system size, energy restrictions, state restrictions, input node choices and target node choices.


Introduction

Recent years have witnessed increased interest from the scientific community regarding the control of complex dynamical networks1,2,3,4,5,6,7,8,9,10,11,12,13,14. Some common types of networks examined throughout the literature are power grids15,16, communication networks17,18, gene regulatory networks19, neuronal systems20,21, food webs22 and social systems23. We define networks as being composed of two components: the nodes that constitute the individual members of the network and the edges that describe the coupling or information sharing between nodes24. Particular attention has been paid to our ability to control these networks6,8,9,10,11,12,14,25. A network is deemed controllable if a set of appropriate control signals can drive the network from an arbitrary initial condition to any final condition in finite time. If a network is controllable, a control signal that achieves such a goal is not necessarily unique.

One important metric to characterize these control signals is the energy that each one requires. From optimal control theory, we can define the control action that, for a given distribution of the control input signals, satisfies both the initial and final conditions and minimizes the energy required to perform the task26. The energy associated with this minimum energy control action is a theoretical lower bound: any alternative control signal that accomplishes the same task requires at least as much energy. Knowledge of the minimum control energy is therefore crucial to understand how expensive it can be to control a given network. The minimum energy framework has recently been examined in refs 27, 28, which have shown that, depending on the underlying network structure, the distribution of the control input signals, the desired final state and other parameters, the energy to control a network may lie on a distribution that spans many orders of magnitude. In this paper, we focus on reducing the energy that is maximal with respect to the choice of the initial and final states, that is, the 'worst-case' energy. We note that in real applications involving large complex networks, achieving control over all of the network nodes is often infeasible27,28 and ultimately unnecessary.

One possible method to reduce the required energy was investigated in ref. 29, where additional control signals were added in optimal locations in the network according to each node's distance from the current set of control signals. In refs 30, 31, the minimum dominating set (MDS) of the underlying graph of a network is determined and each node in the MDS is assumed capable of generating an independent signal along each of its outgoing edges. As every node not in the MDS is only one edge away from a node in the MDS, and each edge from an MDS node to a non-MDS node represents a unique control signal, the control energy will be relatively small. In this paper, for the first time, we adjust the control goal to affect only a subset of the network nodes, chosen as the targets of the control action, and consider the effect of this choice on the required control energy. This type of target control action is typically what is needed in applications in gene regulatory networks32, financial networks33 and social systems34.

Our first main contribution is determining how the energy scales with the cardinality of the target set. In particular, we find that the minimum control energy to control a portion of the complex network decays exponentially as the number of targets is decreased. Previous work27,28 has only investigated the control energy for complex networks when the target set coincides with the set of all nodes. We also look at the energetic relation between the number of targets and other network parameters such as the number of inputs and the amount of time allocated for the control action. Our second main contribution is showing that target control is applicable to other control actions generated with respect to other cost functions. Target control has received recent attention in refs 14, 35, which examined methods to choose a minimal set of independent control signals necessary to control just the targets. Here a target control signal is examined that is optimal with respect to a general quadratic cost function that appears often in the control of many real systems.

Results

Problem formulation

Complex networks consist of two parts: a set of nodes with their interconnections, which represent the topology of the network, and the dynamics that describe the time evolution of the network nodes. First, we summarize the definitions needed to describe a network. We define $\mathcal{V}=\{v_i\}$, $i=1,\dots,n$, to be the set of $n$ nodes that constitute a network. The adjacency matrix is a real, square $n\times n$ matrix, $A$, which has non-zero elements $a_{ij}$ if node $i$ receives a signal from node $j$. For each node $i$ we count the number of receiving connections, called the in-degree $k_i^{\mathrm{in}}$, and the number of outgoing connections, called the out-degree $k_i^{\mathrm{out}}$. The average in-degree and the average out-degree of a network coincide, because every edge contributes one incoming and one outgoing connection; we denote their common value by $k_{av}$. One common way to characterize the topology of a network is by its degree distribution. Often the in-degree and out-degree distributions of networks that appear in science and engineering applications are scale-free, that is, $p(k)\sim k^{-\gamma}$, where $k$ is either the in-degree or the out-degree with corresponding exponents $\gamma_{\mathrm{in}}$ and $\gamma_{\mathrm{out}}$, and most often $2\le\gamma\le3$ (ref. 36).
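As a small illustration of these definitions, the sketch below counts in- and out-degrees from a weighted adjacency matrix using the convention that $a_{ij}\neq0$ when node $i$ receives a signal from node $j$. The helper name `degrees` and the toy weights are hypothetical, and excluding the diagonal (self-regulation) from the count is our assumption. The example also shows that the average in-degree and the average out-degree coincide.

```python
import numpy as np


def degrees(A):
    """Return (k_in, k_out) for a weighted adjacency matrix A.

    Convention: A[i, j] != 0 means node i receives a signal from node j.
    Diagonal entries (self-regulation) are not counted as edges (assumption).
    """
    off_diag = (A != 0) & ~np.eye(A.shape[0], dtype=bool)
    k_in = off_diag.sum(axis=1)    # row i: signals received by node i
    k_out = off_diag.sum(axis=0)   # column j: signals sent by node j
    return k_in, k_out


# Toy 4-node example with hypothetical weights: edges 0->1, 0->2, 1->2, 2->3, 3->0
A = np.zeros((4, 4))
A[1, 0] = 0.5
A[2, 0] = -1.2
A[2, 1] = 0.8
A[3, 2] = 1.0
A[0, 3] = 0.3
np.fill_diagonal(A, -2.0)          # self-loops, ignored by the degree count

k_in, k_out = degrees(A)
k_av = k_in.mean()                 # equals k_out.mean(): each edge is counted once in each
# k_in = [1 1 2 1], k_out = [2 1 1 1], k_av = 1.25
print(k_in, k_out, k_av)
```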

While most dynamical networks that arise in science and engineering are governed by nonlinear differential equations, the fundamental differences between individual networks and the uncertainty of their precise dynamics make any substantial overarching conclusions difficult6,35,36. Nonetheless, linear controllers have proven to be adequate in many applications by approximating nonlinear systems as linear systems in local regions of the n-dimensional state space37. We examine linear dynamical systems, as they are a necessary first step to understanding how target control may benefit nonlinear systems. The linear time-invariant network dynamics are

$$\dot{x}(t) = A x(t) + B u(t), \qquad y(t) = C x(t), \qquad (1)$$

where x(t)=[x1(t),…,xn(t)]T is the n × 1 time-varying state vector, u(t)=[u1(t),…,um(t)]T is the m × 1 time-varying external control input vector and y(t)=[y1(t),…,yp(t)]T is the p × 1 time-varying vector of outputs, or targets. The n × n matrix A={aij} is the adjacency matrix described previously, the n × m matrix B defines the nodes in which the m control input signals are injected, and the p × n matrix C expresses the relations between the states that are designated as the outputs. In addition, the diagonal values of A, aii, i=1,…,n, which represent self-regulation, such as birth/death rates in food webs, station keeping in vehicle consensus, degradation of cellular products and so on, are chosen to be unique at each node (see proposition 1 in ref. 38). These diagonal values are chosen to also guarantee that A is Hurwitz so the system in equation (1) is internally stable. We restrict ourselves to the case when B (C) has linearly independent columns (rows) with a single non-zero element, that is, each control signal is injected into a single node (defined as an input node) and each output is drawn from a single node (defined as a target node). Our particular choice of the matrix C is consistent with target control, as our goal is to individually control each one of the target nodes. Our selection of the matrix B is due to our assumption that different network nodes may be selectively affected by a particular control signal, for example, a drug interacting with a specific node in a protein network. Note that in today’s information-rich world, a main technological limitation is not generating control signal, but rather placing actuators at the input nodes; hence our assumption that each actuator is driven by an independent control signal is sound14. We define  as the subset of target nodes and

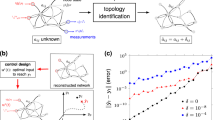

as the subset of target nodes and  as the number of target nodes. A small sample schematic is shown in Fig. 1a, which demonstrates the graphical layout of our problem emphasizing the graph structure and the role of input nodes and targets. Here by an input node, we mean a node that directly receives one and only one control input such as nodes 1 and 2 in Fig. 1a. The explicit equation for the time evolution of the outputs is

as the number of target nodes. A small sample schematic is shown in Fig. 1a, which demonstrates the graphical layout of our problem emphasizing the graph structure and the role of input nodes and targets. Here by an input node, we mean a node that directly receives one and only one control input such as nodes 1 and 2 in Fig. 1a. The explicit equation for the time evolution of the outputs is

Figure 1. (a) A sample network with seven nodes and colour-coded input signals (blue) and output sensors (pink). Note that each control input is directly connected to a single node, and each output sensor receives the state of a single node. Nodes directly connected to the pink outputs are target nodes, that is, they have a prescribed final state that we wish to achieve in finite time, tf. The corresponding vector y(tf) is defined in terms of the states as well. Nodes directly receiving a signal from a blue node are called input nodes and the remaining nodes are neither input nodes nor target nodes. (b) We examine a three-node network where every node is a target node (pink nodes) and one node receives a control input (blue). The edge weights are shown and the self-loop magnitude is k=1. (c) The state evolution is shown where the initial condition is the origin and the final state for each target node is yi(tf)=1, i=1,2,3. (d) The square of the magnitude of the control input is also shown. The energy, or the control effort, is found by integrating the square of the magnitude of the control input over the time horizon. For this case, E=∫|u(t)|² dt≈382 (a.u.). (e) The same network as in b but now only one node is declared a target node. (f) The state evolution is shown where the initial condition remains the origin but the final condition is only defined for y3(tf)=1. (g) The square of the magnitude of the control input is also shown. Note the different vertical axis scale as compared to d. For the second case, E=∫|u(t)|² dt≈66.3 (a.u.).

where we are free to choose $u(t)$ such that it satisfies the prescribed initial state, $x(t_0)=x_0$, and the desired final output, $y(t_f)=y_f$. Note that if we set $C=I_n$, where $I_n$ is the $n\times n$ identity matrix, then $y(t)=x(t)$.
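The structural assumptions on $A$, $B$ and $C$ can be made concrete with a short NumPy sketch. It is only an illustration: the helpers `random_network` and `selector` are hypothetical, the random directed graph is an Erdős–Rényi-style stand-in rather than the static model used later, and Hurwitz stability is enforced here by making each diagonal entry strictly more negative than the sum of the absolute off-diagonal weights in its row (a Gershgorin argument), which is one possible choice and not necessarily the authors'.

```python
import numpy as np


def random_network(n, k_av, rng):
    """Hypothetical helper: random directed graph with mean degree about k_av,
    uniform edge weights in [-1, 1], and distinct diagonal entries chosen so that
    A is Hurwitz (every Gershgorin disc lies in the open left half-plane)."""
    mask = rng.random((n, n)) < k_av / (n - 1)
    np.fill_diagonal(mask, False)
    A = np.where(mask, rng.uniform(-1.0, 1.0, (n, n)), 0.0)
    radius = np.abs(A).sum(axis=1)                  # Gershgorin radius of row i
    np.fill_diagonal(A, -(radius + rng.uniform(1.0, 2.0, n)))
    return A


def selector(nodes, n):
    """Matrix whose rows are the versors e_i^T of the listed nodes."""
    S = np.zeros((len(nodes), n))
    S[np.arange(len(nodes)), nodes] = 1.0
    return S


rng = np.random.default_rng(0)
n = 8
A = random_network(n, k_av=2.5, rng=rng)
inputs = [0, 3, 5, 6]          # illustrative input nodes (m = 4)
targets = [1, 2, 7]            # illustrative target nodes (p = 3)
B = selector(inputs, n).T      # n x m: column k injects u_k into one input node
C = selector(targets, n)       # p x n: row i reads the state of one target node

x = rng.standard_normal(n)
y = C @ x                      # y = C x picks out the target states
```

Because each column of $B$ and each row of $C$ is a versor, $Cx$ simply reads off the target states, and choosing all nodes as targets ($C=I_n$) recovers $y=x$.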

The minimum energy control input, well known from linear systems theory39, minimizes the cost function $J=\frac{1}{2}\int_{t_0}^{t_f} u^T(t)\,u(t)\,dt$ and satisfies an arbitrary initial condition and an arbitrary final condition if the system is controllable. A similar control input is optimal when the final condition is imposed on only some of the states, that is, on the target nodes (see the derivation in Supplementary Note 2)

$$u(t) = B^T e^{A^T(t_f-t)} C^T \left(C W C^T\right)^{-1} \left(y_f - C e^{A(t_f-t_0)} x_0\right). \qquad (3)$$

The real, symmetric, positive semidefinite matrix $W=\int_{t_0}^{t_f} e^{A(t_f-\tau)} B B^T e^{A^T(t_f-\tau)}\,d\tau$ is the controllability Gramian. Note that in deriving equation (3) we must assume that the triplet $(A, B, C)$ is output controllable, which is the case if and only if $\mathrm{rank}\,[CB \;|\; CAB \;|\; \cdots \;|\; CA^{n-1}B]=p$. If the triplet is output controllable, the matrix $CWC^T$ is invertible39,40. This suggests that while the entire network may not be controllable (that is, with $C=I_n$, $W$ is singular), for a given $B$ (of the form described above) there may be a controllable subspace (subset of nodes) within the network. Moreover, every subspace of the controllable subspace is also controllable. In the following discussions we proceed under the assumption that the pair $(A, B)$ is controllable by following the methodology in ref. 38, and focus on the effect that the choice of the matrix $C$ has on the control energy.
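The Gramian and the output-controllability test can be evaluated numerically. The sketch below assumes SciPy and computes the finite-horizon Gramian with Van Loan's block matrix exponential, a standard technique that may differ from the numerical method used for the figures; the 3-node chain and the helper names `gramian` and `output_controllable` are illustrative.

```python
import numpy as np
from scipy.linalg import expm


def gramian(A, B, T):
    """Controllability Gramian W = int_0^T e^{A s} B B^T e^{A^T s} ds.

    This equals the W of the text with T = t_f - t_0 (substitute s = t_f - tau).
    Van Loan's method: the upper-right block of expm([[-A, BB^T], [0, A^T]] T)
    is G = int_0^T e^{-A(T-s)} B B^T e^{A^T s} ds, so W = e^{A T} G.
    """
    n = A.shape[0]
    H = np.block([[-A, B @ B.T],
                  [np.zeros((n, n)), A.T]])
    G = expm(H * T)[:n, n:]
    W = expm(A * T) @ G
    return 0.5 * (W + W.T)  # symmetrize away round-off


def output_controllable(A, B, C):
    """Rank test: rank [CB | CAB | ... | CA^{n-1}B] == p."""
    n = A.shape[0]
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    return np.linalg.matrix_rank(C @ np.hstack(blocks)) == C.shape[0]


# Illustrative 3-node chain 0 -> 1 -> 2 (A[i, j] != 0: node i receives from node j),
# with distinct negative diagonal entries so that A is Hurwitz.
A = np.array([[-1.0, 0.0, 0.0],
              [1.0, -2.0, 0.0],
              [0.0, 1.0, -3.0]])
B = np.array([[1.0], [0.0], [0.0]])                  # input node 0
W = gramian(A, B, T=1.0)

print(output_controllable(A, B, np.eye(3)))          # all nodes as targets
B_end = np.array([[0.0], [0.0], [1.0]])              # input at node 2 only
print(output_controllable(A, B_end, np.array([[1.0, 0.0, 0.0]])))  # target node 0
```

In the second call node 0 cannot be reached from node 2, so the rank test fails even though the triplet is well defined.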

We also consider a more general linear-quadratic optimal control problem, that is, we attempt to minimize a quadratic cost function that applies a weight to the states, $x(t)$, and the control inputs, $u(t)$. This type of cost function is applied in a variety of science and engineering applications such as medical treatments or biological systems41,42, consensus or synchronization of distributed agents43,44,45, networked systems46, social interactions47 and many more:

$$J = \frac{1}{2}\int_{t_0}^{t_f} \left( x^T(t) Q x(t) + u^T(t) R u(t) + 2 x^T(t) M u(t) \right) dt, \qquad (4)$$

subject to the dynamics in equation (1), $x(t_0)=x_0$ and $y(t_f)=y_f$.

The n × n matrix Q applies a weight to the states and the m × m matrix R applies a weight to the control inputs. The n × m matrix M allows for mixed-term weights, which may arise for specially designed trajectories, optimization of human motion or other physical constraints48,49,50,51. We restrict the cost function matrix Q to be symmetric positive semidefinite and the matrix R to be symmetric positive definite. We derive a closed-form expression for the optimal control input associated with equation (4) using the property that the Hamiltonian system that arises during the solution (derived in Supplementary Note 4) can be decoupled

The symmetric matrix $S$ is the solution to the continuous-time algebraic Riccati equation

$$A^T S + S A - (S B + M) R^{-1} (B^T S + M^T) + Q = 0,$$

and the other matrices are defined as

The derivation of equations (5) and (6) is detailed in Supplementary Note 4.
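For the quadratic cost, the Riccati matrix $S$ can be obtained with SciPy, whose `solve_continuous_are` accepts the cross weight through its `s` argument and solves $A^TS+SA-(SB+M)R^{-1}(B^TS+M^T)+Q=0$. The sketch below only solves and checks this equation on an assumed 3-node example with $Q=\zeta I_n$ and a small, hypothetical mixed weight $M$; it does not reproduce the closed-form optimal control of equations (5) and (6).

```python
import numpy as np
from scipy.linalg import solve_continuous_are

# Illustrative data (assumed): 3-node chain, one input at node 0,
# Q = zeta * I_n as in the simulations, R = I_m, small mixed weight M.
A = np.array([[-1.0, 0.0, 0.0],
              [1.0, -2.0, 0.0],
              [0.0, 1.0, -3.0]])
B = np.array([[1.0], [0.0], [0.0]])
zeta = 1.0
Q = zeta * np.eye(3)
R = np.eye(1)
M = 0.1 * B                      # n x m cross weight (hypothetical choice)

# With s=M, SciPy solves A^T S + S A - (S B + M) R^{-1} (B^T S + M^T) + Q = 0
# and returns the stabilizing solution.
S = solve_continuous_are(A, B, Q, R, s=M)

residual = A.T @ S + S @ A - (S @ B + M) @ np.linalg.solve(R, B.T @ S + M.T) + Q
K = np.linalg.solve(R, B.T @ S + M.T)   # gain R^{-1}(B^T S + M^T) associated with S
closed_loop = A - B @ K
```

Checking the residual directly guards against mixing up sign conventions for the cross term, which differ between references.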

Optimal energy and worst case direction

The energy associated with an arbitrary control input $u(t)$, such as equation (3) or (5), while only targeting the nodes in $\mathcal{T}$, is defined as $E(p)=\int_{t_0}^{t_f} u^T(t)\,u(t)\,dt$. Note that $E(p)$ also depends on which $p$ nodes are in the target set $\mathcal{T}$, that is, there is a distribution of values of $E(p)$ over all target node sets of size $p$. The energy $E(p)$ is a measure of the 'effort' that must be provided to achieve the control goal. In the subsequent definitions and relations, when a variable is a function of $p$, we more specifically mean it is a function of a specific target set of size $p$, of which there are $\binom{n}{p}$ possible sets. We can define the energy when the control input is of the form in equation (3) as

$$E(p) = \beta^T W_p^{-1} \beta, \qquad (7)$$

where the vector $\beta=y_f-Ce^{A(t_f-t_0)}x_0$ is the control manoeuvre and $W_p=CWC^T$ is the $p\times p$ symmetric, real, non-negative definite output controllability Gramian. Note that when $C$ is defined as above, that is, its rows are linearly independent versors, the reduced Gramian $W_p$ is a $p$-dimensional principal submatrix of $W$. A small, three-node example of the benefits of target control is shown in Fig. 1b–g. In the first scenario (Fig. 1b–d) each node has a prescribed final state ($p=n=3$) and in the second scenario (Fig. 1e–g) only a single node is targeted ($p=1$). The energy is calculated for each scenario by integrating the curves in Fig. 1d,g, from which we find that $E(3)=382$ and $E(1)=66.3$. Even though the second scenario has one-third of the targets, the energy is reduced by a factor of almost six (compare also the different scales on the y axis of Fig. 1d,g). We denote the eigenvalues of $W_p$ as $\mu_i^{(p)}$, $i=1,\dots,p$, which are ordered such that $0<\mu_1^{(p)}\le\mu_2^{(p)}\le\cdots\le\mu_p^{(p)}$ when the triplet $(A, B, C)$ is output controllable. Denoting the Euclidean norm of the vector by $\|\beta\|$, the Min–Max theorem provides bounds on equation (7), from which we define the 'worst-case' (or maximum) energy. The bounds are functions of the extremal eigenvalues of $W_p$

$$\frac{\|\beta\|^2}{\mu_p^{(p)}} \le E(p) \le \frac{\|\beta\|^2}{\mu_1^{(p)}}. \qquad (8)$$
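Equations (2), (3), (7) and (8) can be cross-checked on a small example. The sketch below assumes a 3-node chain with input node 0 and targets {1, 2}, builds $W$ and $W_p=CWC^T$ by quadrature, applies $u(t)=B^Te^{A^T(t_f-t)}C^T(CWC^T)^{-1}\beta$, and compares the integrated energy with $\beta^TW_p^{-1}\beta$. All numerical values and the helper `integrate` are illustrative.

```python
import numpy as np
from scipy.linalg import expm
from scipy.integrate import quad_vec

# Illustrative 3-node chain 0 -> 1 -> 2, input node 0, targets {1, 2} (assumed values).
A = np.array([[-1.0, 0.0, 0.0],
              [1.0, -2.0, 0.0],
              [0.0, 1.0, -3.0]])
B = np.array([[1.0], [0.0], [0.0]])
C = np.array([[0.0, 1.0, 0.0],
              [0.0, 0.0, 1.0]])
t0, tf = 0.0, 2.0
x0 = np.zeros(3)
yf = np.array([1.0, 1.0])


def integrate(f):
    """Integrate an array-valued f(t) over [t0, tf] (flattened for quad_vec)."""
    shape = np.shape(f(t0))
    val, _ = quad_vec(lambda t: np.ravel(f(t)), t0, tf, epsabs=1e-14, epsrel=1e-12)
    return val.reshape(shape)


# Gramian W = int e^{A(tf-t)} B B^T e^{A^T(tf-t)} dt and output Gramian Wp = C W C^T.
W = integrate(lambda t: expm(A * (tf - t)) @ B @ B.T @ expm(A.T * (tf - t)))
Wp = C @ W @ C.T
beta = yf - C @ expm(A * (tf - t0)) @ x0        # control manoeuvre
nu = np.linalg.solve(Wp, beta)                  # (C W C^T)^{-1} beta


def u(t):
    """Minimum-energy target control, equation (3)."""
    return B.T @ expm(A.T * (tf - t)) @ C.T @ nu


# Output at tf from equation (2), energy by quadrature, and energy from equation (7).
y_tf = C @ expm(A * (tf - t0)) @ x0 + integrate(lambda t: C @ expm(A * (tf - t)) @ B @ u(t))
E_quad = float(integrate(lambda t: u(t) @ u(t)))
E_formula = float(beta @ nu)
mu = np.linalg.eigvalsh(Wp)                      # ascending: mu_1 <= mu_2
lower, upper = beta @ beta / mu[-1], beta @ beta / mu[0]   # bounds of equation (8)
print(y_tf, E_quad, E_formula, lower, upper)
```

Only the two target states are steered to $y_f$; the state of node 0 at $t_f$ is left free, which is where the energy saving of target control comes from.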

The upper extreme of the control energy over all control manoeuvres of unit norm, $\|\beta\|=1$, is $E_{\max}(p)=1/\mu_1^{(p)}$, which is what we call the 'worst-case' energy. For an arbitrary vector $\beta$, which can be represented as a linear combination $\beta=\sum_{i=1}^{p}c_iv_i$ of the orthonormal eigenvectors $v_i$ of $W_p$, the energy can be written as a weighted sum of the inverse eigenvalues, $E(p)=\sum_{i=1}^{p}c_i^2/\mu_i^{(p)}$, which includes the worst-case energy as the $i=1$ term. Moreover, for the large scale-free networks that are of interest in applications, typically $\mu_1^{(p)}\ll\mu_j^{(p)}$, $j=2,\dots,p$, and, unless $\beta$ is nearly orthogonal to $v_1$, $E_{\max}(p)$ provides the approximate order of the energy required to move the system in any arbitrary direction of state space. This is demonstrated with an example in Supplementary Note 5.
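The eigen-decomposition argument can be illustrated with a hypothetical output Gramian whose smallest eigenvalue is much smaller than the others. In this sketch, which assumes NumPy and a synthetic $W_p$ rather than one computed from a network, a unit-norm manoeuvre with a sizeable component along $v_1$ has an energy almost entirely due to the term $c_1^2/\mu_1^{(p)}$. The helper `energy_terms` is illustrative.

```python
import numpy as np


def energy_terms(Wp, beta):
    """Split E = beta^T Wp^{-1} beta into the terms c_i^2 / mu_i, where
    c_i = v_i^T beta in the orthonormal eigenbasis of the symmetric Wp."""
    mu, V = np.linalg.eigh(Wp)          # ascending: mu_1 <= ... <= mu_p
    c = V.T @ beta
    return mu, c**2 / mu


# Hypothetical output Gramian with one very small eigenvalue (mu_1 << mu_j).
rng = np.random.default_rng(0)
Qo, _ = np.linalg.qr(rng.standard_normal((4, 4)))    # random orthonormal basis
Wp = Qo @ np.diag([1e-6, 0.5, 1.0, 2.0]) @ Qo.T

mu, V = np.linalg.eigh(Wp)
beta = 0.6 * V[:, 0] + 0.8 * V[:, 1]                 # unit-norm manoeuvre, mixed direction
mu, terms = energy_terms(Wp, beta)
E = terms.sum()
E_max = 1.0 / mu[0]                                  # worst-case energy for ||beta|| = 1
print(E, E_max, terms[0] / E)                        # the i = 1 term carries almost all of E
```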

We investigate how the selection of the target nodes affects $E_{\max}(p)=1/\mu_1^{(p)}$, the inverse of the smallest eigenvalue of the output Gramian. To better understand the role of the number of target nodes on the worst-case energy, we consider an iterative process by which we start from the case when every node is in the target set, $\mathcal{T}_n=\mathcal{V}$, and progressively remove nodes. Say $\mu_j^{(i+1)}$ ($\mu_j^{(i)}$) is an eigenvalue of $W_{i+1}$ ($W_i$) before (after) removal of a target node. By Cauchy's interlacing theorem we have that

$$\mu_j^{(i+1)} \le \mu_j^{(i)} \le \mu_{j+1}^{(i+1)}, \qquad j=1,\dots,i. \qquad (9)$$

In particular, from (9) we note that $\mu_1^{(i+1)}\le\mu_1^{(i)}$, indicating that the smallest eigenvalue cannot decrease after removal of a target node. This implies that the maximum energy satisfies $E_{\max}(i)\le E_{\max}(i+1)$ for all $i$ such that $1\le i\le n-1$.
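Cauchy interlacing and the resulting monotonicity of $E_{\max}$ are easy to verify numerically. The sketch below assumes a random symmetric positive definite matrix as a stand-in for the Gramian $W_n$ (not one computed from a network), removes target nodes in random order by taking principal submatrices, and checks both the interlacing inequalities and that $E_{\max}(p)=1/\mu_1^{(p)}$ never increases as targets are removed. The helper `interlaces` is hypothetical.

```python
import numpy as np


def interlaces(W_big, W_small, tol=1e-12):
    """Check Cauchy interlacing mu_j(big) <= mu_j(small) <= mu_{j+1}(big)."""
    a = np.linalg.eigvalsh(W_big)      # ascending, length i + 1
    b = np.linalg.eigvalsh(W_small)    # ascending, length i
    return bool(np.all(a[:-1] <= b + tol) and np.all(b <= a[1:] + tol))


rng = np.random.default_rng(1)
n = 12
X = rng.standard_normal((n, 3 * n))
W = X @ X.T / (3 * n)                  # hypothetical SPD stand-in for the Gramian W_n

targets = list(range(n))               # T_n = V
mu1 = [np.linalg.eigvalsh(W)[0]]       # mu_1^{(n)}
ok = True
for node in rng.permutation(n)[:-1]:   # remove n - 1 targets in random order
    W_before = W[np.ix_(targets, targets)]
    targets.remove(node)
    W_after = W[np.ix_(targets, targets)]
    ok = ok and interlaces(W_before, W_after)
    mu1.append(np.linalg.eigvalsh(W_after)[0])
E_max = 1.0 / np.array(mu1)            # E_max(n), E_max(n-1), ..., E_max(1)
print(ok, E_max[0], E_max[-1])
```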

Energy scaling with reduction of target space

We would like to determine the rate of increase of $\mu_1^{(p)}$ as $p$ decreases, which is not obvious from equation (9). At each step $p$, $\mathcal{T}_p$ contains $p$ nodes in the target set (such that $\mathcal{T}_p\subset\mathcal{T}_{p+1}$ and $p$ decreases from $n-1$ to 1), and the output controllability Gramian is partitioned such that $W_p$ is a principal submatrix of $W_{p+1}$,

$$W_{p+1} = \begin{bmatrix} w_{11} & w^T \\ w & W_p \end{bmatrix}, \qquad (10)$$

where the first row and column correspond to the node removed at step $p$.

We let the matrix $\tilde{W}_{p+1}$ be the matrix $W_{p+1}$ except that the first row of $W_{p+1}$ in equation (10) has been replaced with zeros, so that the spectrum of $\tilde{W}_{p+1}$ consists of 0 and the eigenvalues of $W_p$. We define $\ell_p$ to be the left eigenvector of $\tilde{W}_{p+1}$ associated with $\mu_1^{(p)}$, the smallest eigenvalue of $W_p$, and $r_{p+1}$ to be the right eigenvector associated with the smallest eigenvalue of $W_{p+1}$, $\mu_1^{(p+1)}$. The relation between two consecutive values, $\mu_1^{(p)}$ and $\mu_1^{(p+1)}$, can be expressed linearly as $\mu_1^{(p)}=\eta_p\,\mu_1^{(p+1)}$, where $\eta_p=1-[\ell_p]_1[r_{p+1}]_1/(\ell_p^Tr_{p+1})$ (assuming $\ell_p^Tr_{p+1}\neq0$), as follows from left-multiplying $W_{p+1}r_{p+1}=\mu_1^{(p+1)}r_{p+1}$ by $\ell_p^T$ and using $W_{p+1}=\tilde{W}_{p+1}+e_1e_1^TW_{p+1}$. The notation $[a]_1$ denotes the first entry of a vector $a$. By equation (9), $\eta_p\ge1$. Each value of $\eta_p$ exactly quantifies the rate of increase at each step of the specific process and also relates the maximum energies, $E_{\max}(p+1)=\eta_p\,E_{\max}(p)$. We can also relate any two target sets of size $k$ and $j$ such that $1\le k<j\le n$ and $\mathcal{T}_k\subset\mathcal{T}_j$,

$$E_{\max}(j) = \bar{\eta}_{k,j}^{\,j-k}\, E_{\max}(k), \qquad (11)$$

where $\bar{\eta}_{k,j}=\left(\prod_{i=k}^{j-1}\eta_i\right)^{1/(j-k)}$ is the geometric mean of $\eta_i$, $i=k,\dots,j-1$, which is independent of the order of the nodes chosen to be removed between $\mathcal{T}_k$ and $\mathcal{T}_j$, because the product telescopes to $E_{\max}(j)/E_{\max}(k)$. To define a network characteristic parameter $\eta$, we average equation (11) over many possible choices of the target sets $\mathcal{T}_k$ and $\mathcal{T}_j$, where we have selected $k=n/10$ and $j=n$

where the symbol $\langle\cdot\rangle$ indicates an average over many possible choices of $n/10$ nodes for the target set. By applying equation (12) to equation (11) and by setting $k=n/10$ and $j=p>k$ (for an extended discussion see Supplementary Note 3), we obtain the scaling equation used throughout the simulations

The linear relationship between the logarithm of the worst-case energy and $p/n$ is shown in Figs 2, 3, 4, where $p/n$ is decreased from 1 (the target set $\mathcal{T}_n=\mathcal{V}$) to 0.1 (the target set consists of 10% of the nodes drawn randomly from the set of all nodes). Further details of the scaling law and its relation to the spectral characteristics of the output controllability Gramian can be found in Supplementary Note 3, and the practical calculation can be found in the Methods section. For the simulations in Figs 2, 3, 4, 5, 6, 7, around 50% of the nodes are chosen to be input nodes (which we have verified yields a controllable pair $(A, B)$).
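The step relation $\mu_1^{(p)}=\eta_p\,\mu_1^{(p+1)}$ and the telescoping product behind equation (11) can be checked with a few lines of NumPy. The sketch below uses $\eta_p=1-[\ell_p]_1[r_{p+1}]_1/(\ell_p^Tr_{p+1})$, obtained by left-multiplying $W_{p+1}r_{p+1}=\mu_1^{(p+1)}r_{p+1}$ by the left eigenvector $\ell_p$ of $\tilde{W}_{p+1}$ (the matrix $W_{p+1}$ with its first row set to zero) for the eigenvalue $\mu_1^{(p)}$. It assumes a random symmetric positive definite matrix in place of the Gramian, always removes the node in the first row and column, and requires $\ell_p^Tr_{p+1}\neq0$; `eta_step` is a hypothetical helper.

```python
import numpy as np


def eta_step(W_big):
    """Return (eta_p, mu_1^{(p)}, mu_1^{(p+1)}) when the node in the first
    row/column of W_big = W_{p+1} is removed (partition of equation (10))."""
    W_small = W_big[1:, 1:]                        # W_p
    w = W_big[1:, 0]                               # off-diagonal column w
    mu_small, V_small = np.linalg.eigh(W_small)
    mu_big, V_big = np.linalg.eigh(W_big)
    r = V_big[:, 0]                                # right eigenvector for mu_1^{(p+1)}
    l_tail = V_small[:, 0]                         # eigenvector of W_p for mu_1^{(p)}
    # Left eigenvector of W~_{p+1} (first row zeroed) for the eigenvalue mu_1^{(p)}.
    l = np.concatenate(([l_tail @ w / mu_small[0]], l_tail))
    eta = 1.0 - l[0] * r[0] / (l @ r)
    return eta, mu_small[0], mu_big[0]


rng = np.random.default_rng(2)
n = 10
X = rng.standard_normal((n, 2 * n))
W = X @ X.T / (2 * n)                              # hypothetical SPD stand-in for W_n

etas, ratios = [], []
W_cur = W
while W_cur.shape[0] > 1:                          # p + 1 = n, n - 1, ..., 2
    eta, mu_p, mu_p1 = eta_step(W_cur)
    etas.append(eta)
    ratios.append(mu_p / mu_p1)                    # direct ratio for comparison
    W_cur = W_cur[1:, 1:]

E_max_n = 1.0 / np.linalg.eigvalsh(W)[0]
E_max_1 = 1.0 / W[-1, -1]                          # the last remaining 1 x 1 Gramian
eta_bar = np.prod(etas) ** (1.0 / (n - 1))         # geometric mean in equation (11)
print(np.allclose(etas, ratios), eta_bar ** (n - 1), E_max_n / E_max_1)
```

The product of the step factors depends only on the initial and final target sets, which is why the geometric mean in equation (11) does not depend on the removal order.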

Figure 2. (a) The maximum control energy is computed for model networks constructed with the static model and the Erdős–Rényi (ER) model while varying the target node fraction. For the static model, four different power-law exponents are used. The average degree of each model network is kav=2.5 and its size is n=500. The input node fraction is nd=0.5, chosen such that the pair (A, B) is controllable. Further aspects like edge weights and values along the diagonal of the adjacency matrix are discussed in the Methods section. Each set of target nodes is chosen randomly from the nodes in the network. Each point represents the mean value of the control energy taken over 50 realizations. The error bars represent one s.d. Note the linear growth of the logarithm of the control energy. The slopes of these curves are the values of η corresponding to each set of parameters. A linear fit curve is provided in grey. Also, as γ grows, that is, as the scale-free models become more homogeneous, the slope approaches that of the Erdős–Rényi model. (b) The same study as in a except that kav=8.0. The same behaviour is seen, but note the difference in scales of the vertical axis. Each point is the mean over 50 realizations, and error bars represent one s.d. (c) The study in a and b is performed for more values of kav, and the value of η is computed for each curve.

Figure 3. Besides the average degree and power-law exponent that describe the underlying graph of the network (Fig. 2), there are other parameters that can affect the control energy, such as the time horizon and the number of designated input nodes. (a) The time horizon, defined as tf−t0, is varied for networks constructed using the static model with the following properties: n=500; γin=γout=3.0; kav=5.0; and nd=0.5. As we choose t0=0, the time horizon is equivalent to just tf. The main plot shows how the log of the maximum control energy changes with target node fraction, p/n. Each point represents the mean over 50 realizations, and error bars represent one s.d. The inset shows how η changes with the time horizon. We see a sharp increase as the time horizon decreases. (b) We also investigate how η varies with the number of input nodes. The same class of network is examined as in a: n=500; γin=γout=3.0; and kav=5.0. For both simulations, nodes are randomly and independently chosen to be in each target set. We see that η grows as the number of input nodes decreases, as shown in the inset.

Figure 4. (a) We compute the maximum control energy required for the s420st circuit network and the TM metabolic network for increasing target node fraction, p/n. Each point represents the mean of 50 realizations where each realization is a specific choice of the nodes in the target node set. Error bars represent one s.d. (b) The same analysis performed for the Carpinteria food web, the protein structure 1 network and a Facebook forum network. Each point represents the mean of 50 realizations where each realization is a specific choice of the nodes in the target node set. Error bars represent one s.d. For both a and b, the linear behaviour exists only when the target fraction increases beyond p/n=0.1. (c) We numerically compute values of η for real data sets (compiled in Supplementary Table 1) for comparison when nd=0.45 or larger. The values of η are plotted against each network's average degree, as the degree distribution that best describes the degree sequence may or may not be scale-free. Nonetheless, we see a similar trend that low average-degree networks have a larger value of η, as demonstrated in Fig. 2c. Also worth noting is that networks from the same class (as defined in the legend) tend to have similar values of η.

Figure 5. Probability density functions (PDF) of the distribution of η for a selection of real networks that have undergone DPR, with nd=0.45. (a) RHS (ref. 59) from social. (b) s420st (ref. 53) from circuit. (c) TP-met (ref. 54) from metabolic. (d) North Euro Grid (ref. 60) from infrastructure. (e) Carpinteria (ref. 55) from food web. (f) The corresponding P values are listed in the table. The vertical lines mark the value of ηreal, which corresponds to the original network.

Figure 6. We demonstrate that for both model networks and real data sets, increasing ζ (where the state weight matrix is Q=ζIn) does not significantly increase the average energy. (a) The static model is used to generate model networks with parameters n=300 and kav=5.0, where nd=0.5. Note that the order of magnitude, here represented on a linear scale with respect to the logarithm of the energy, is approximately constant. Each point is averaged over 50 iterations of model networks and final desired states, which have Euclidean norm equal to one. (b) Two real networks are also examined and the average energy is computed. Each point is the mean over 50 realizations where each realization represents a choice of final condition such that the final condition has Euclidean norm equal to one. For both studies, error bars represent one s.d.

Figure 7. We construct a single model network using the static model52 with the parameters n=300, nd=0.25 (that is, n/4 input nodes), γ=2.7 and kav=5. The energy scaling is examined for the general quadratic cost function. We compute η for different values of ζ such that the state weight matrix is Q=ζIn. The values of η for ζ=0, 1 and 10 are η=13.46, 13.66 and 13.53, respectively. Each point is averaged over 50 iterations of target node sets. The simulations are performed with the initial condition set to the origin and the final condition chosen randomly such that ||yf||=1. Error bars represent one s.d.

The exponential decay of the energy as p/n decreases has immediate practical relevance, as it indicates that large networks, which may require a very large amount of energy to fully control27, will require much less energy even when a significant portion of the network is controlled. However, the rate of this exponential decrease, η, is network-specific. We compute the value of η for 50 scale-free model networks, constructed with the static model in ref. 52 for specific parameters kav, the average degree, and γin=γout=γ, the power-law exponents of the in- and out-degrees, and take the mean over the realizations. We observe in Fig. 2 that η varies with both of the network parameters γ and kav. A large value of η indicates that target control is highly beneficial for that particular network, that is, the average energy required to control a portion of that network is much lower when the size of the target set is reduced. In Fig. 2a,b, the exponentially increasing value of the worst-case energy $E_{\max}(p)$ is shown with respect to the size of the target set normalized by the size of the network, p/n, for various values of γin=γout=γ when kav=2.5 and 8.0, respectively. The bars in Fig. 2a,b are one s.d. over the 50 realizations each point represents; in other words, when p nodes are in the target set $\mathcal{T}_p$, $E_{\max}(p)$ is likely to lie between those bars. The decrease of η as γ and kav increase for scale-free networks is displayed in Fig. 2c. Overall, we see that η is largest for sparse, non-homogeneous networks (that is, low kav and low γ), which are also the 'hardest' to control, that is, they have the largest worst-case energy when all of the nodes are targeted. This indicates that target control should be particularly beneficial when applied to metabolic interaction networks and protein structures, some of which are symmetric and which are known to have low values of γ (ref. 36), as seen in Fig. 4c, where both classes of networks are shown to have large values of η.

The effects of other parameters on η are examined in Fig. 3. Figure 3a displays sample curves of the worst-case energy versus p/n for shorter and longer time horizons (tf−t0). The inset shows how η increases as the time horizon (tf−t0) decreases. As (tf−t0) approaches zero from the right, η increases sharply, which shows the increased benefit of target control as the time horizon is reduced. Figure 3b examines how the worst-case energy changes for various numbers of input nodes, expressed as a fraction nd of the total number of nodes in the network. The inset collects values of η for different values of nd; η increases as the number of input nodes is decreased. The effects of the time horizon28 and of the number of input nodes27 on the control energy have been discussed in the literature for the case in which all the nodes are targeted.

Comparing the results in both panels of Fig. 3 with those in Fig. 2 shows that the parameters differ in how strongly they affect the control energy. Shortening the time horizon by four orders of magnitude from the nominal value tf=1 (used in Fig. 2) doubled the value of η. Decreasing the number of input nodes from n/2 (the number used in Fig. 2) to only n/5 also roughly doubled η. In comparison, increasing the heterogeneity of the network, by decreasing the power-law exponent γ from 3 to slightly above 2, increased η 10- to 20-fold. Of the parameters examined, the underlying topology, as described by the power-law exponent, therefore plays the largest role in determining the energy scaling.

We also analyse data sets collected from various fields in science and engineering. The aim is to study how the worst-case energy changes with the size of the target set for networks with more realistic structures. We are particularly interested in whether these networks display different target controllability properties from the model networks analysed. To this end, we consider different classes of networks, such as food webs, infrastructure networks, metabolic networks and social interactions. The name, source and some important properties of each data set are collected in Supplementary Note 8. For each network we draw edge weights and diagonal values from uniform distributions, as described in the Methods section below. Overall, Fig. 4c shows a relationship between the average degree kav and η similar to that for the model networks in Fig. 2c. The real data sets that have a large worst-case energy when all of the nodes are targeted also tend to have the largest values of η, which measures the rate of improvement with target control. Note that η varies little within each class of networks (for example, food webs, infrastructure, metabolic networks and social interactions, as seen in Fig. 4c). This suggests that networks within the same class have similar structure. Fields of study in which networks tend to have a large η would benefit the most from control laws that target only some of the elements of the network.

For an arbitrary network, η cannot be accurately determined from a single value of the worst-case energy. A network with a large worst-case energy when every node is targeted can have a much smaller worst-case energy than other networks when only a small portion of it is controlled. Indeed, Fig. 4a,b shows that the energy curves of two different real networks may cross at some target fraction p/n. Specifically, in Fig. 4a, when every node is targeted (p/n=1), the s420st (ref. 53) circuit has a larger maximum energy than the TM-met54 metabolic network. However, when p/n is smaller than 0.6, it requires, on average, more energy to control a portion of the TM-met network than an equivalent portion of the s420st network. The same type of behaviour is seen in Fig. 4b among three networks: the food web Carpinteria55, the protein interaction network prot_struct_1 (ref. 53) and the social network Facebook forum56. In summary, one can estimate the value of η from the average degree of the network. Determining the worst-case energy, however, also requires at least one point along the energy curve for a specific cardinality of the target set (as in Fig. 4a,b).
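The crossing of energy curves follows directly from the log-linear scaling. The sketch below assumes, for illustration only, that each curve obeys log10 E ≈ η·(p/n) + β, where β is a network-specific intercept. The helper names and the numbers in the usage lines are hypothetical; they are not values read from Fig. 4. The sketch shows why η alone fixes only the slope. One measured point pins down β, and two curves with different slopes meet at p/n = (β2 − β1)/(η1 − η2).

```python
import math


def predict_log10_energy(eta, anchor_frac, anchor_log10_energy, frac):
    """Extrapolate log10 of the worst-case energy along a log-linear curve.

    Assumes log10 E(p) = eta * (p / n) + beta; one known point
    (anchor_frac, anchor_log10_energy) fixes the intercept beta.
    """
    return anchor_log10_energy + eta * (frac - anchor_frac)


def crossing_fraction(eta1, beta1, eta2, beta2):
    """Target fraction p/n at which two log-linear energy curves intersect.

    Returns None when the slopes are equal (parallel curves never cross).
    """
    if math.isclose(eta1, eta2):
        return None
    return (beta2 - beta1) / (eta1 - eta2)


# Hypothetical pair: network 1 is costlier at p/n = 1 (15 vs 13 in log10)
# but has the steeper slope, so the two curves must cross below p/n = 1.
x = crossing_fraction(20.0, -5.0, 10.0, 3.0)
print(f"curves cross at p/n = {x:.2f}")
print(predict_log10_energy(20.0, 1.0, 15.0, 0.5))  # network 1 at p/n = 0.5
print(predict_log10_energy(10.0, 1.0, 13.0, 0.5))  # network 2 at p/n = 0.5
```

In this made-up example, network 2 needs more energy than network 1 below the crossing. This mirrors the qualitative TM-met versus s420st behaviour described above.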

For several real networks, Figure 5 compares the value of η of each original network with the values of η for an ensemble of randomized networks. Each randomized network is generated by rewiring the real network's connectivity while preserving the degrees of its nodes (see Methods). For all the real networks examined, η is larger than the values obtained for the randomized versions, to a statistically significant level. We conclude that the potential advantage of applying target control to real networks is higher than for networks derived from random connections, such as the static model52 that we have used to construct our model networks.

We compute the energy for the optimal control input associated with the cost function in equation (4). The control consists of two parts: a term that is proportional to the states and a term that is of a similar form to equation (3).

Note that, when R=Im, the integral in the third line of equation (14) is a quadratic form that scales exponentially with the cardinality of the target set. The other two terms are functions of the state trajectory, which is not appreciably altered by the number of targeted nodes. We thus expect similar energy scaling behaviour for the cost function in equation (4) with Q≠On × n and M≠On × m.

In some applications a cost on the states may be beneficial, as it can substantially alter the state trajectories (see the example in Supplementary Note 4). In the following simulations, to limit the number of variables, we set the mixed-term weight matrix M=On × m. We also set the state weight matrix Q=ζIn, that is, a diagonal matrix with the constant real value ζ on the diagonal. In Fig. 6a, model networks with different scale-free exponents γ are considered. In Fig. 6b, two real networks are optimally controlled with respect to the cost function in equation (4): the IEEE 118 bus test grid (https://www.ee.washington.edu/research/pstca/pf118/pg_tca118bus.htm) and the Florida everglades foodweb (http://vlado.fmf.uni-lj.si/pub/networks/data/). The approximate maximum energy (computed by numerically integrating equation (4)) is determined for increasing values of the scalar ζ. As ζ increases in Fig. 6a,b, the maximum energy remains of approximately the same order of magnitude. It depends mainly on the triplet (A, B, C), with little effect from the matrix Q.

Finally, we offer evidence connecting two energy scalings: the scaling law derived for the minimum energy optimal control problem, and the scaling of the control signal that solves the general quadratic cost problem, equation (4). Figure 7 shows that, as ζ increases, neither the order of magnitude of the maximum energy nor its rate of increase η with the size of the target set changes significantly. Here we compute η for a single model network while increasing ζ in the diagonal state weight matrix Q=ζIn. This suggests that η computed for a network under the minimum energy formulation can be used to approximate η when the cost function also includes a quadratic term in the states.

Discussion

This paper discusses a framework to optimally control a portion of a complex network for assigned initial and final conditions and given sets of input nodes and target nodes. We provide an analytic solution to this problem in terms of a reduced Gramian matrix Wp, whose dimension equals the number of target nodes one attempts to control. We show that, for a fixed number of input nodes, the energy required to control a portion of the network decreases exponentially as the cardinality of the target set is reduced. As a result, even controlling a significant number of nodes requires much less energy than targeting every node. The energy reduction, expressed as the rate η, is largest for networks that are heterogeneous (small power-law exponent γ in a scale-free degree distribution) and sparse (small kav). It is also largest for short time horizons and few control inputs. These networks typically have especially large control energy demands, so target control is most beneficial for the networks that are most difficult to control. Our simulations on model networks show that these parameters do not have equal effects. The energy scaling depends most strongly on the underlying structure of the network, which can increase η by as much as 20-fold with all other parameters held constant. By comparison, two other changes each only doubled the value of η: shortening the time horizon by four orders of magnitude, and reducing the number of input nodes from 50% to 20% of the nodes.

Potential applications of target control are numerous, from local tasks among networked robots to economic policies designed to affect only specific sectors. Data sets from the literature in many fields also show the reduced energy requirements afforded by target control. The networks that describe metabolic interactions and protein structures have some of the largest values of η, suggesting that target control would be most beneficial in those fields.

We have also considered a linear-quadratic optimal control problem (in terms of the objective function (4)) applied to dynamical complex networks. We show that the scaling factor η for a network with control parameters nd and tf remains nearly the same whether the control is optimal with respect to the minimum energy control input as in equation (3) or is optimal with respect to the quadratic cost function as in equation (5). The observed decrease of the control energy over many orders of magnitude indicates a strong potential impact of this research in applications where control over the entire network is not necessarily required.

Methods

Model networks

In our analyses, similar to ref. 27, we assume that the networks have stable dynamics. The scale-free model networks we consider throughout the paper and the Supplementary Information are constructed with the static model52. Erdős–Rényi graphs correspond to the static model with all nodal weights equal, that is, the limit in which the power-law exponent approaches infinity. Edge weights are drawn from a uniform distribution between 0.5 and 1.5. Distinct values δi, drawn from a uniform distribution between −1 and 1, are placed on the diagonal so that the eigenvalues of the adjacency matrix are all distinct. The weighted adjacency matrix A is stabilized with a value ɛ such that each diagonal entry of A is aii=δi+ɛ, where i=1,…, n. The value ɛ is chosen such that the largest real part among the eigenvalues of A is equal to −1. The matrix B is constructed by choosing which nodes in the network receive an independent control signal. The matrices B (C) are composed of m (p) versors, that is, standard basis vectors, as columns (rows). The reduced controllability Gramian Wp can be calculated from the eigendecomposition of the state matrix, A=VΛV−1, as

$$W_p = C\,V\left[\left(V^{-1}BB^{T}V^{-T}\right)\circ Y\right]V^{T}C^{T},$$

where V−T denotes the transpose of the inverse of V and ∘ denotes the Hadamard (element-wise) product. Note that V must be invertible (so that A is diagonalizable), that is, the n eigenvectors of A must be linearly independent. The matrix Y has elements

$$Y_{ij} = \frac{e^{(\lambda_i+\lambda_j)(t_f-t_0)}-1}{\lambda_i+\lambda_j}, \qquad i, j = 1,\ldots,n,$$

where λi is the ith diagonal entry of Λ. Because the eigenvalues of A are distinct, its eigenvectors are linearly independent and the inverse of V exists. Because the eigenvalues all have negative real parts, λi+λj≠0, so Yij is finite for every i, j=1,…, n.
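The construction above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' code, which used Matlab with Advanpix. All helper names are hypothetical. The sketch makes the following assumptions:

- The directed static model draws sources and destinations with probability proportional to w_i = i^(−α), where α = 1/(γ−1).
- In-degree weights are an independently permuted copy of the out-degree weights.
- kav is read as the number of directed edges per node.
- A[i, j] ≠ 0 encodes an edge j → i.

The Gramian helper evaluates the eigendecomposition formula for Wp in double precision only.

```python
import numpy as np


def static_model_adjacency(n, kav, gamma, rng):
    """Directed static-model graph; entry [i, j] = 1 encodes an edge j -> i."""
    alpha = 1.0 / (gamma - 1.0)                  # gamma = 1 + 1/alpha
    w = np.arange(1, n + 1, dtype=float) ** (-alpha)
    w_out = w / w.sum()                          # out-degree weights
    w_in = rng.permutation(w_out)                # in-degree weights (assumption)
    n_edges = int(round(kav * n))                # kav = edges per node (assumption)
    adj = np.zeros((n, n))
    count = 0
    while count < n_edges:
        src = rng.choice(n, p=w_out)
        dst = rng.choice(n, p=w_in)
        if src != dst and adj[dst, src] == 0:    # no self-loops, no multi-edges
            adj[dst, src] = 1.0
            count += 1
    return adj


def weighted_stable_matrix(adj, rng):
    """Edge weights U(0.5, 1.5), diagonal delta_i ~ U(-1, 1), then shift by eps."""
    n = adj.shape[0]
    A = adj * rng.uniform(0.5, 1.5, size=(n, n))
    A += np.diag(rng.uniform(-1.0, 1.0, size=n))
    eps = -1.0 - np.max(np.linalg.eigvals(A).real)   # largest Re(lambda) -> -1
    return A + eps * np.eye(n)


def versors(indices, n):
    """Matrix whose rows are the standard basis vectors e_k^T, k in indices."""
    S = np.zeros((len(indices), n))
    S[np.arange(len(indices)), indices] = 1.0
    return S


def reduced_gramian(A, B, C, T):
    """W_p = C V [(V^-1 B B^T V^-T) o Y] V^T C^T with A = V diag(lam) V^-1."""
    lam, V = np.linalg.eig(A)
    Vinv = np.linalg.inv(V)
    s = lam[:, None] + lam[None, :]              # lambda_i + lambda_j (nonzero)
    Y = (np.exp(s * T) - 1.0) / s                # T = tf - t0
    inner = (Vinv @ B @ B.T @ Vinv.T) * Y        # Hadamard product
    W = (V @ inner @ V.T).real                   # imaginary parts cancel
    Wp = C @ W @ C.T
    return 0.5 * (Wp + Wp.T)                     # remove round-off asymmetry


rng = np.random.default_rng(0)
A = weighted_stable_matrix(static_model_adjacency(100, 2.5, 2.5, rng), rng)
B = versors(np.arange(0, 100, 2), 100).T         # every other node is an input
C = versors([0, 2, 4, 6, 8], 100)                # five target nodes
Wp = reduced_gramian(A, B, C, T=1.0)
print(Wp.shape, np.linalg.eigvalsh(Wp)[0])       # size and smallest eigenvalue
```

Note that V.T, not the conjugate transpose, appears in the formula. This is because e^{A^T t} = V^{−T} e^{Λt} V^T holds even when V is complex, so the imaginary parts cancel in the product.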

Choosing input nodes

When determining a set of input nodes that guarantees network controllability, the methods of ref. 6, derived from structural controllability, are often applied. The networks we are concerned with have distinct diagonal elements in the adjacency matrix. For such networks, structural controllability implies that the network can be controlled with a single control input attached to every node in the network (see theorem 1 and its proof in ref. 38). Cowan et al.38 consider an adjacency matrix with distinct elements along the main diagonal. They state that such a matrix can be controlled with a single control input attached to the power-dominating set (PDS) of the underlying graph. The PDS is the smallest set of nodes from which all other nodes can be reached, that is, there is at least one directed path from the nodes in the PDS to every other node in the network. Unlike ref. 38, we compute an overestimate of the PDS, which retains the property that all other nodes in the network are reachable, and attach a unique control input to each node in the set. We then add further randomly chosen nodes to the set of input nodes until there are m input nodes, where m is a predefined integer less than n. Thus, with m input nodes there are m control inputs (see the sample network in Fig. 1a).
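One possible way to realise the "overestimate of the PDS" step is a greedy reachability cover. Nodes with zero in-degree must receive an input, and any node not yet reached is added, in order of increasing in-degree, until every node is reachable. This heuristic and the helper names are hypothetical illustrations; the source does not specify how its overestimate was computed. The sketch assumes that A[i, j] ≠ 0 denotes an edge j → i. It should be applied to the unweighted off-diagonal adjacency, since the diagonal of A holds the δi+ɛ terms.

```python
from collections import deque

import numpy as np


def reachable_from(adj, source):
    """Set of nodes reachable from `source`; adj[i, j] != 0 means an edge j -> i."""
    seen = {source}
    queue = deque([source])
    while queue:
        j = queue.popleft()
        for i in np.flatnonzero(adj[:, j]):     # successors of node j
            i = int(i)
            if i not in seen:
                seen.add(i)
                queue.append(i)
    return seen


def reachability_cover(adj):
    """Greedy set of nodes from which every node is reachable (an overestimate
    of the minimum such set). Zero in-degree nodes are processed first, since
    they can only be reached by attaching an input to them directly."""
    n = adj.shape[0]
    indeg = (adj != 0).sum(axis=1)              # row i counts edges into i
    cover, reached = [], set()
    for k in sorted(range(n), key=lambda v: indeg[v]):
        if k not in reached:
            cover.append(k)
            reached |= reachable_from(adj, k)
    return cover


def choose_input_nodes(adj, m, rng):
    """Reachability cover plus randomly chosen extra nodes, m inputs in total."""
    cover = reachability_cover(adj)
    if len(cover) > m:
        raise ValueError(f"need at least {len(cover)} input nodes, got m={m}")
    in_cover = set(cover)
    rest = [k for k in range(adj.shape[0]) if k not in in_cover]
    extra = rng.choice(rest, size=m - len(cover), replace=False)
    return sorted(cover + [int(k) for k in extra])


def input_matrix(inputs, n):
    """n x m matrix B whose columns are the versors of the input nodes."""
    B = np.zeros((n, len(inputs)))
    B[inputs, np.arange(len(inputs))] = 1.0
    return B
```

For example, in a graph with edges 0 → 1 → 2 and 4 → 3, the cover is [0, 4]. Choosing m = 3 then adds one node drawn at random from {1, 2, 3}.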

Practical computation of η

Here we provide additional details on how Figs 2, 3 and 4, which show the exponential scaling of the energy with the cardinality of the target set, were generated. For large networks, computing the mean over all possible sets of target nodes is computationally expensive. Instead, we approximate η by computing the mean value of the logarithm of the worst-case energy for the sample values p=n/10, 2n/10, …, n. For each p, we randomly choose p nodes to form a target set and compute the inverse of the smallest eigenvalue of Wp. In each simulation, we compute the mean and s.d. of the logarithm of the smallest eigenvalue of Wp over typically 50 iterations. Plotting these values against p/n shows that a linear model is appropriate. We therefore compute a linear least-squares best fit to the data, whose slope provides the estimate of η. The linear fit provides a good approximation of the logarithm of the worst-case energy, as shown in Figs 2, 3 and 4.
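The sampling procedure above can be sketched as follows. This is a double-precision NumPy illustration, not the authors' multiprecision Matlab code. It assumes n is a multiple of 10 and that the energy is measured in log10, and it repeats the eigendecomposition formula for the Gramian. Because every target matrix C has versor rows, Wp = C W C^T is simply the principal submatrix W[idx, idx]. The full Gramian is therefore computed once, and each target set only requires a small symmetric eigenvalue problem. For networks with few inputs, the smallest eigenvalue can drop to round-off level in double precision. The sketch raises an error in that case rather than returning a meaningless fit; this is the regime the Numerical controllability subsection handles with extra precision.

```python
import numpy as np


def full_gramian(A, B, T):
    """Finite-horizon controllability Gramian from A = V diag(lam) V^-1."""
    lam, V = np.linalg.eig(A)
    Vinv = np.linalg.inv(V)
    s = lam[:, None] + lam[None, :]
    Y = (np.exp(s * T) - 1.0) / s
    W = (V @ ((Vinv @ B @ B.T @ Vinv.T) * Y) @ V.T).real
    return 0.5 * (W + W.T)


def estimate_eta(A, B, T=1.0, samples=50, rng=None):
    """Fit mean log10(1/mu_min(W_p)) against p/n for p = n/10, 2n/10, ..., n."""
    rng = np.random.default_rng() if rng is None else rng
    n = A.shape[0]
    W = full_gramian(A, B, T)
    fracs, means, stds = [], [], []
    for p in range(n // 10, n + 1, n // 10):
        logs = []
        for _ in range(samples):
            idx = rng.choice(n, size=p, replace=False)   # random target set
            mu_min = np.linalg.eigvalsh(W[np.ix_(idx, idx)])[0]
            if mu_min <= 0:
                raise FloatingPointError("W_p is numerically singular")
            logs.append(-np.log10(mu_min))               # log10 worst-case energy
        fracs.append(p / n)
        means.append(np.mean(logs))
        stds.append(np.std(logs))
    eta, beta = np.polyfit(fracs, means, 1)              # least-squares line
    return eta, beta, np.array(fracs), np.array(means), np.array(stds)
```

A typical call is `eta, beta, fracs, means, stds = estimate_eta(A, B)`, with A and B built as described under Model networks. The returned means and stds correspond to the points and error bars of plots like Figs 2, 3 and 4.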

Degree-preserving randomization

We test whether the value of η measured for the real networks depends only on the average degree kav and the degree distribution (scale-free, exponential and so on), or whether other factors play a role. To do so, we measure η for randomized versions of the real networks. We use degree-preserving randomization (DPR) to ensure that each randomized network has the same average degree and the same degree sequence as the original. The randomization 'rewires' the edges of the network by randomly choosing two edges and swapping their receiving nodes. The process is repeated for a prescribed number of iterations, until the networks are sufficiently rewired. We compare each real network with its rewired counterparts in terms of their measured values of η. Let ηreal denote the value of η for an original network derived from a data set listed in Supplementary Table 1. In every case, ηreal deviates significantly from the distribution of η for the DPR networks. The corresponding P values are listed in Fig. 5. This disparity indicates that the real networks have structural features that are not captured by the randomly rewired versions. Furthermore, in all cases ηreal is greater than any η obtained from the DPR networks, so target control is more beneficial for the original networks than for their randomized counterparts.
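Below is a minimal sketch of the swap step. It assumes an unweighted adjacency matrix with A[i, j] ≠ 0 for an edge j → i; weights can be redrawn afterwards, as described under Model networks. Each accepted swap turns the edges a → b and c → d into a → d and c → b, so every out-degree and in-degree is unchanged. Swaps that would create a self-loop or a duplicate edge are rejected. The function names and the attempt cap are assumptions for illustration. The one-sided empirical P value is likewise only one common choice; the source does not state how its P values were computed.

```python
import numpy as np


def degree_preserving_rewire(adj, n_swaps, rng, max_attempts=None):
    """Rewire a directed 0/1 adjacency (adj[i, j] = 1 for j -> i) by edge swaps.

    Each accepted swap replaces a -> b and c -> d with a -> d and c -> b, which
    leaves every in-degree and out-degree unchanged.
    """
    dst, src = np.nonzero(adj)
    edges = list(zip(src.tolist(), dst.tolist()))      # (source, destination)
    present = set(edges)
    max_attempts = 100 * n_swaps if max_attempts is None else max_attempts
    done = attempts = 0
    while done < n_swaps and attempts < max_attempts:
        attempts += 1
        e1, e2 = rng.choice(len(edges), size=2, replace=False)
        (a, b), (c, d) = edges[e1], edges[e2]
        if a == d or c == b or (a, d) in present or (c, b) in present:
            continue                                   # would add a self-loop or duplicate
        present -= {(a, b), (c, d)}
        present |= {(a, d), (c, b)}
        edges[e1], edges[e2] = (a, d), (c, b)
        done += 1
    rewired = np.zeros_like(adj)
    for s, t in edges:
        rewired[t, s] = 1
    return rewired


def empirical_p_value(eta_real, eta_null):
    """One-sided P value: share of randomized networks with eta >= eta_real."""
    eta_null = np.asarray(eta_null, dtype=float)
    return (1 + np.sum(eta_null >= eta_real)) / (1 + eta_null.size)
```

Rejecting invalid swaps, rather than repairing them, keeps the graph simple. The cost is that some attempts are wasted, which is why the loop counts attempts separately from accepted swaps.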

Numerical controllability

Recent literature on the control of complex networks has discussed the importance of recognizing the difference between theoretically controllable and numerically controllable networks. The issue arises in Gramian-based control schemes because the condition number of the Gramian can be very large for certain 'barely' controllable systems. These are systems whose control inputs only just satisfy analytic controllability criteria. Sun and Motter57 found a second transition after a system (A, B) becomes analytically controllable, which they named the numerical controllability transition. We acknowledge the importance of this second transition. In this article, however, we use multiprecision arithmetic so that we can examine trends even with a relatively small number of control inputs. In that regime, some networks would not be numerically controllable in double precision. Here, the Matlab toolbox Advanpix58 allows the eigendecomposition of the Gramian W to be computed to arbitrary precision. Let μi and vi be the ith eigenvalue and eigenvector of W, respectively. The average residual error, using Advanpix, is

Typical values of a used throughout this paper are 100–200.

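We also use Advanpix when computing the energy for the general quadratic cost function in equation (14). To approximate the integral, we use Legendre–Gauss (LG) quadrature, which approximates the integral of a function f over [t0, tf] as

$$\int_{t_0}^{t_f} f(t)\,dt \approx \frac{t_f-t_0}{2}\sum_{i=1}^{L} w_i\, f\!\left(\frac{t_f-t_0}{2}\,\tau_i+\frac{t_f+t_0}{2}\right),$$

where τi are the LG points on [−1, 1] and wi are the corresponding weights.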

We choose L=50 and compute the necessary LG weights wi and LG points τi, i=1,…, 50.
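The LG rule can be sketched with NumPy's `numpy.polynomial.legendre.leggauss`, which returns the points τi and weights wi on [−1, 1]. This sketch uses double precision instead of Advanpix. The integrand in the usage example is not equation (14). It is the standard minimum-energy control for a hypothetical diagonal system (A = diag(a), B = I, x0 = 0), chosen because its energy has the closed form xf^T W^−1 xf, which the quadrature can be checked against.

```python
import numpy as np


def lg_integrate(f, t0, tf, L=50):
    """Approximate the integral of f over [t0, tf] with L-point Legendre-Gauss."""
    tau, w = np.polynomial.legendre.leggauss(L)    # points/weights on [-1, 1]
    half = 0.5 * (tf - t0)
    t = half * tau + 0.5 * (tf + t0)               # map points to [t0, tf]
    return half * sum(wi * f(ti) for wi, ti in zip(w, t))


# Hypothetical check: minimum-energy control of x' = diag(a) x + u from x0 = 0.
a = np.array([-1.0, -2.0, -3.0])
xf = np.array([1.0, -0.5, 0.25])
T = 1.0
W = (np.exp(2 * a * T) - 1.0) / (2 * a)            # diagonal of the Gramian


def u(t):
    # u(t) = B^T e^{A^T (T - t)} W^{-1} xf with B = I and diagonal A
    return np.exp(a * (T - t)) * xf / W


energy_quad = lg_integrate(lambda t: u(t) @ u(t), 0.0, T)
energy_exact = np.sum(xf ** 2 / W)                 # xf^T W^{-1} xf
print(energy_quad, energy_exact)
```

With L = 50 the rule is exact for polynomials up to degree 99. This is why smooth exponential integrands like the one above agree with the closed form to near machine precision.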

Data availability

The codes used to obtain the results in this study are available from the authors on reasonable request.

Additional information

How to cite this article: Klickstein, I. et al. Energy scaling of targeted optimal control of complex networks. Nat. Commun. 8, 15145 doi: 10.1038/ncomms15145 (2017).

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

Sorrentino, F., di Bernardo, M., Garofalo, F. & Chen, G. Controllability of complex networks via pinning. Phys. Rev. E 75, 046103 (2007).

Mikhailov, A. S. & Showalter, K. Introduction to focus issue: design and control of self-organization in distributed active systems. Chaos 18, 026101 (2008).

Yu, W., Chen, G., Lu, J. & Kurths, J. Synchronization via pinning control on general complex networks. SIAM J. Control Optim. 51, 1395–1416 (2013).

Tang, Y., Gao, H., Kurths, J. & Fang, J.-A. Evolutionary pinning control and its application in UAV coordination. IEEE Trans. Ind. Inf. 8, 828–838 (2012).

Wang, X. F. & Chen, G. Pinning control of scale-free dynamical networks. Physica A 310, 521–531 (2002).

Liu, Y.-Y., Slotine, J.-J. & Barabási, A.-L. Controllability of complex networks. Nature 473, 167–173 (2011).

Liu, Y.-Y., Slotine, J.-J. & Barabási, A.-L. Reply to: Few inputs can reprogram biological networks. Nature 478, E4–E5 (2011).

Ruths, J. & Ruths, D. Control profiles of complex networks. Science 343, 1373–1376 (2014).

Summers, T. H. & Lygeros, J. Optimal sensor and actuator placement in complex dynamical networks. IFAC Proc. Vol. 47, 3784–3789 (2014).

Wang, B., Gao, L. & Gao, Y. Control range: a controllability-based index for node significance in directed networks. J. Stat. Mech.: Theory Exp. 2012, P04011 (2012).

Nepusz, T. & Vicsek, T. Controlling edge dynamics in complex networks. Nat. Phys. 8, 568–573 (2012).

Yuan, Z., Zhao, C., Di, Z., Wang, W.-X. & Lai, Y.-C. Exact controllability of complex networks. Nat. Commun. 4, 2447 (2013).

Müller, F.-J. & Schuppert, A. Few inputs can reprogram biological networks. Nature 478, E4 (2011).

Iudice, F. L., Garofalo, F. & Sorrentino, F. Structural permeability of complex networks to control signals. Nat. Commun. 6, 8349 (2015).

Arianos, S., Bompard, E., Carbone, A. & Xue, F. Power grid vulnerability: a complex network approach. Chaos 19, 013119 (2009).

Pagani, G. A. & Aiello, M. The power grid as a complex network: a survey. Physica A 392, 2688–2700 (2013).

Onnela, J.-P. et al. Analysis of a large-scale weighted network of one-to-one human communication. New J. Phys. 9, 179 (2007).

Kwak, H., Lee, C., Park, H. & Moon, S. in Proceedings of the 19th International Conference on World Wide Web 591–600 (ACM, 2010).

Palsson, B. Systems Biology (Cambridge Univ. Press, 2015).

Sporns, O. Structure and function of complex brain networks. Dialogues Clin. Neurosci. 15, 247–262 (2013).

Papo, D., Buldú, J. M., Boccaletti, S. & Bullmore, E. T. Complex network theory and the brain. Phil. Trans. R. Soc. B 369, 20130520 (2014).

Allhoff, K. T. & Drossel, B. When do evolutionary food web models generate complex networks? J. Theor. Biol. 334, 122–129 (2013).

Lerman, K. & Ghosh, R. Information contagion: an empirical study of the spread of news on Digg and Twitter social networks. ICWSM 10, 90–97 (2010).

Newman, M. E. Networks: An Introduction (Oxford Univ. Press, 2010).

Gao, X.-D., Wang, W.-X. & Lai, Y.-C. Control efficacy of complex networks. Sci. Rep. 6, 28037 (2016).

Kailath, T. Linear Systems Vol. 156 (Prentice-Hall, 1980).

Yan, G. et al. Spectrum of controlling and observing complex networks. Nat. Phys. 11, 779–786 (2015).

Yan, G., Ren, J., Lai, Y.-C., Lai, C.-H. & Li, B. Controlling complex networks: how much energy is needed? Phys. Rev. Lett. 108, 218703 (2012).

Chen, Y.-Z., Wang, L.-Z., Wang, W.-X. & Lai, Y.-C. Energy scaling and reduction in controlling complex networks. R. Soc. Open Sci. 3, 160064 (2016).

Nacher, J. C. & Akutsu, T. Minimum dominating set-based methods for analyzing biological networks. Methods 102, 57–63 (2016).

Wuchty, S. Controllability in protein interaction networks. Proc. Natl Acad. Sci. USA 111, 7156–7160 (2014).

Yang, K., Bai, H., Ouyang, Q., Lai, L. & Tang, C. Finding multiple target optimal intervention in disease-related molecular network. Mol. Syst. Biol. 4, 228 (2008).

Galbiati, M., Delpini, D. & Battiston, S. The power to control. Nat. Phys. 9, 126–128 (2013).

Klemm, K., Eguíluz, V. M., Toral, R. & San Miguel, M. Nonequilibrium transitions in complex networks: a model of social interaction. Phys. Rev. E 67, 026120 (2003).

Gao, J., Liu, Y.-Y., D’Souza, R. M. & Barabási, A.-L. Target control of complex networks. Nat. Commun. 5, 5415 (2014).

Albert, R. & Barabási, A.-L. Statistical mechanics of complex networks. Rev. Mod. Phys. 74, 47 (2002).

Slotine, J.-J. et al. Applied Nonlinear Control Vol. 1991 (Prentice-Hall, 1991).

Cowan, N. J., Chastain, E. J., Vilhena, D. A., Freudenberg, J. S. & Bergstrom, C. T. Nodal dynamics, not degree distributions, determine the structural controllability of complex networks. PLoS ONE 7, e38398 (2012).

Rugh, W. J. Linear System Theory Vol. 2 (Prentice Hall, 1996).

Murota, K. & Poljak, S. Note on a graph-theoretic criterion for structural output controllability. IEEE Trans. Autom. Control 35, 939–942 (1990).

Stengel, R. F., Ghigliazza, R., Kulkarni, N. & Laplace, O. Optimal control of innate immune response. Optimal Control Appl. Methods 23, 91–104 (2002).

Chang, Y. H. & Tomlin, C. in 50th IEEE Conference on Decision and Control and European Control Conference 3706–3711 (IEEE, 2011).

Cao, Y. & Ren, W. Optimal linear-consensus algorithms: an LQR perspective. IEEE Trans. Syst. Man Cybern. B Cybern. 40, 819–830 (2010).

Cosby, J. A., Shtessel, Y. B. & Bordetsky, A. in 2012 American Control Conference (ACC) 2830–2835 (IEEE, 2012).

Mosebach, A. & Lunze, J. in Control Conference (ECC), 2014 European 208–213 (IEEE, 2014).

Galván-Guerra, R. & Azhmyakov, V. in Industrial Technology (ICIT), 2010 IEEE International Conference on 1759–1764 (IEEE, 2010).

Bloembergen, D., Sahraei, B. R., Bou-Ammar, H., Tuyls, K. & Weiss, G. in ECAI 2014 105–110 (IOS Press, 2014).

Bernstein, D. S. Matrix Mathematics: Theory, Facts, and Formulas (Princeton Univ. Press, 2009).

Priess, M. C., Conway, R., Choi, J., Popovich, J. M. & Radcliffe, C. Solutions to the inverse LQR problem with application to biological systems analysis. IEEE Trans. Control Syst. Technol. 23, 770–777 (2015).

Chen, C., Fan, T. & Wang, B. Inverse optimal control of hyperchaotic finance system. World J. Model. Simul. 10, 83–91 (2014).

Ali, U., Yan, Y., Mostofi, Y. & Wardi, Y. in 2015 American Control Conference 2930–2935 (IEEE, 2015).

Goh, K.-I., Kahng, B. & Kim, D. Universal behavior of load distribution in scale-free networks. Phys. Rev. Lett. 87, 278701 (2001).

Milo, R. et al. Superfamilies of evolved and designed networks. Science 303, 1538–1542 (2004).

Jeong, H., Tombor, B., Albert, R., Oltvai, Z. N. & Barabási, A.-L. The large-scale organization of metabolic networks. Nature 407, 651–654 (2000).

Lafferty, K. D., Hechinger, R. F., Shaw, J. C., Whitney, K. & Kuris, A. M. in Disease Ecology: Community Structure and Pathogen Dynamics 119–134 (Oxford University Press, 2006).

Opsahl, T. Triadic closure in two-mode networks: redefining the global and local clustering coefficients. Social Netw. 35, 159–167 (2013).

Sun, J. & Motter, A. E. Controllability transition and nonlocality in network control. Phys. Rev. Lett. 110, 208701 (2013).

Advanpix LLC. Multiprecision Computing Toolbox for Matlab v.3.8.3 http://www.advanpix.com (2015).

Freeman, L. C., Webster, C. M. & Kirke, D. M. Exploring social structure using dynamic three-dimensional color images. Social Netw. 20, 109–118 (1998).

Menck, P. J., Heitzig, J., Kurths, J. & Schellnhuber, H. J. How dead ends undermine power grid stability. Nat. Commun. 5, 3969 (2014).

Acknowledgements

We gratefully acknowledge support from the National Science Foundation through NSF grant CMMI-1400193, NSF grant CRISP-1541148 and from the Office of Naval Research through award No. N00014-16-1-2637. We thank Franco Garofalo, Francesco Lo Iudice, Jorge Orozco, Elvia Beltran Ruiz, Jens Lorenz and Andrea L’Afflitto for insightful conversations.

Author information

Contributions

A.S., I.K. and F.S. formulated the problem statement; A.S. and I.K. performed the mathematical analysis and numerical simulations; A.S., I.K. and F.S. wrote the paper; F.S. supervised the research.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Supplementary information

Supplementary Information

Supplementary Figures, Supplementary Tables, Supplementary Notes and Supplementary References. (PDF 511 kb)

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Klickstein, I., Shirin, A. & Sorrentino, F. Energy scaling of targeted optimal control of complex networks. Nat Commun 8, 15145 (2017). https://doi.org/10.1038/ncomms15145

DOI: https://doi.org/10.1038/ncomms15145
