Abstract

Wavefront sensing is a set of techniques providing efficient means to ascertain the shape of an optical wavefront or its deviation from an ideal reference. Owing to its wide dynamical range and high optical efficiency, the Shack–Hartmann wavefront sensor is nowadays the most widely used of these sensors. Here we show that it actually performs a simultaneous measurement of position and angular spectrum of the incident radiation and, therefore, when combined with tomographic techniques previously developed for quantum information processing, the Shack–Hartmann wavefront sensor can be instrumental in reconstructing the complete coherence properties of the signal. We confirm these predictions with an experimental characterization of partially coherent vortex beams, a case that cannot be treated with the standard tools. This seems to indicate that classical methods employed hitherto do not fully exploit the potential of the registered data.

Introduction

Light is a major carrier of information about the universe around us, from the smallest to the largest scale. Three-dimensional objects emit radiation that can be viewed as complex wavefronts shaped by diverse features, such as refractive index, density or temperature of the emitter. These wavefronts are specified by both their amplitude and phase; yet, as conventional optical detectors measure only (time-averaged) intensity, information on the phase is discarded. This information turns out to be valuable for a variety of applications, such as optical testing1, image recovery2, displacement and position sensing3, beam control and shaping4,5,6, as well as active and adaptive control of optical systems7, to mention but a few.

Actually, there exists a diversity of methods for wavefront reconstruction, each one with its own pros and cons8. Such methods can be roughly classified into three categories: (a) interferometric methods based on the superposition of two beams with a well-defined relative phase; (b) methods based on the measurement of the wavefront slope or wavefront curvature and (c) methods based on the acquisition of images followed by the application of an iterative phase-retrieval algorithm9. Notwithstanding the enormous progress that has already been made, practical and robust wavefront sensing still stands as an unresolved and demanding problem10.

The time-honoured example of the Shack–Hartmann (S–H) wavefront sensor surely deserves a special mention11: its wide dynamical range, high optical efficiency, white-light capability and ability to use continuous or pulsed sources make this setup an excellent solution in numerous applications.

The operation of the S–H sensor appeals to intuition, giving the overall impression that the underlying theory is obvious12. Indeed, it is often understood in an oversimplified geometrical-optics framework, which is much the same as assuming full coherence of the detected signal. This is by no means a complete picture: even in the simplest instance of beam propagation, the coherence features turn out to be indispensable13.

It has been recently suggested14 that S–H sensing can be reformulated in a concise quantum notation. This is more than an academic curiosity, because it immediately calls for the application of the methods of quantum state reconstruction15. Accordingly, one can verify right away that wavefront sensors may open the door to an assessment of the mutual coherence function, which conveys full information on the signal.

In this paper, we report the first experimental measurement of the coherence properties of an optical beam with a S–H sensor. To that end, we have prepared several coherent and incoherent superpositions of vortex beams. Our strategy can efficiently disclose that information, whereas the common S–H operation fails in the task.

Results

S–H wavefront sensing

The working principle of the S–H wavefront sensor can be elaborated with reference to Fig. 1. An incoming light field is divided into a number of sub-apertures by a microlens array that creates focal spots, registered in a camera, typically a charge-coupled device (CCD). The deviation of the spot pattern from a reference measurement allows the local direction angles to be derived, which in turn enables the reconstruction of the wavefront. In addition, the intensity distribution within the detector plane can be obtained by integration and interpolation between the foci.

Unfortunately, this naive picture breaks down when the light is partially coherent, because the very notion of a single wavefront becomes somewhat ambiguous: the signal has to be conceived as a statistical mixture of many wavefronts16. To circumvent this difficulty, we observe that these sensors provide a simultaneous detection of position and angular spectrum (that is, directions) of the incident radiation. In other words, the S–H is a pertinent example of a simultaneous unsharp position and momentum measurement, a question of fundamental importance in quantum theory and about which much has been discussed17,18,19.

Rephrasing the S–H operation in a quantum parlance will prove pivotal for the remaining discussion. Let ρ be the coherence matrix of the field to be analysed. Using an obvious Dirac notation, we can write G(x′,x′′)=‹x′|ρ|x′′›=Tr(ρ|x′›‹x′′|), where |x› is a vector describing a point-like source located at x and Tr is the matrix trace. Thereby, the mutual coherence function G(x′,x′′) appears as the position representation of the coherence matrix. As a special case, the intensity distribution across a transversal plane becomes I(x)=Tr(ρ|x›‹x|). Moreover, a coherent beam of complex amplitude U(x), can be assigned to a ket |U›, such that U(x)=‹x|U›.

To simplify, we restrict the discussion to one dimension, denoted by x. If the setup is illuminated with a coherent signal U(x), and the ith microlens is displaced by Δxi from the S–H axis, this microlens sees the field U(x−Δxi)=‹x|exp(−iΔxiP)|U›, where P is the momentum operator. This field is truncated and filtered by the aperture (or pupil) function A(x)=‹x|A› and Fourier transformed by the microlens before being detected by the CCD camera. All this can be accounted for in the form

$$U'(\Delta x_i,\Delta p_j)=\langle A|\exp(-i\Delta p_j X)\exp(-i\Delta x_i P)|U\rangle=\langle\pi_{ij}|U\rangle, \qquad (1)$$

where X is the position operator and we have assumed that the jth pixel is angularly displaced from the axis by Δpj. The intensity measured at the jth pixel behind the ith lens is then governed by a Born-like rule

$$I(\Delta x_i,\Delta p_j)=\mathrm{Tr}\left(\rho\,|\pi_{ij}\rangle\langle\pi_{ij}|\right), \qquad (2)$$

with |πij›=exp(iΔxiP)exp(iΔpjX)|A›. As a result, each pixel performs a projection on the position- and momentum-displaced aperture state, as anticipated before.

Some special cases of those aperture states are particularly appealing. For point-like microlenses, A(x)→δ(x) and |πij›→|x=Δxi› (that is, a position eigenstate): they produce broad diffraction patterns and information about the transversal momentum is lost. Conversely, for very large microlenses, A(x)→1 and |πij›→|p=Δpj› (that is, a momentum eigenstate): they provide a sharp momentum measurement with the corresponding loss of position sensitivity. A most interesting situation is when one uses a Gaussian approximation14; now A(x)=exp(−x²/2), which implies |πij›→|αij›, that is, a coherent state of amplitude αij=Δxi+iΔpj. This means that the measurement in this case projects the signal on a set of coherent states and hence yields a direct sampling of the Husimi distribution20 Q(α)=‹α|ρ|α›.
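The Gaussian case can be checked numerically. The following is a minimal sketch, not the authors' code: it samples the Born-like rule on a dimensionless one-dimensional grid, with the grid, the helper names `aperture_state` and `pixel_intensity`, and the sign and phase conventions of the displacements all being assumptions made for illustration.

```python
import numpy as np

# Dimensionless 1D grid (an assumption of this sketch, not an experimental value).
x = np.linspace(-12.0, 12.0, 2401)
dx = x[1] - x[0]

def aperture_state(delta_x, delta_p):
    """Gaussian aperture exp(-x^2/2) centred at delta_x with mean momentum delta_p,
    normalised on the grid (hypothetical helper)."""
    psi = np.exp(-(x - delta_x) ** 2 / 2) * np.exp(1j * delta_p * x)
    return psi / np.sqrt(np.sum(np.abs(psi) ** 2) * dx)

def pixel_intensity(fields, weights, delta_x, delta_p):
    """Born-like rule <pi|rho|pi> for rho = sum_n w_n |U_n><U_n| sampled on the grid."""
    pi = aperture_state(delta_x, delta_p)
    overlaps = [np.sum(np.conj(pi) * u) * dx for u in fields]
    return float(sum(w * abs(o) ** 2 for w, o in zip(weights, overlaps)))

# Two Gaussian beams at x = -2 and x = +2: coherent sum versus incoherent mixture.
left, right = aperture_state(-2.0, 0.0), aperture_state(2.0, 0.0)
coherent = (left + right) / np.sqrt(2)
q_coherent = pixel_intensity([coherent], [1.0], 0.0, 0.0)
q_mixture = pixel_intensity([left, right], [0.5, 0.5], 0.0, 0.0)
print(q_coherent, q_mixture)
```

In this toy case the two signals have nearly the same intensity profile, yet the value sampled at the phase-space origin differs by a factor of two, which is the kind of coherence signature the projection onto displaced aperture states makes accessible.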

This quantum analogy provides quite a convenient description of the signal: different choices of CCD pixels and/or microlenses can be interpreted as particular phase-space operations21.

S–H tomography

Unlike the Gaussian profiles discussed before, in a realistic setup the microlens apertures do not overlap. If we introduce the operators Πij=|πij›‹πij|, the measurements describing two pixels belonging to distinct apertures are compatible, [Πij, Πi′j′]=0 for i≠i′, which renders the scheme informationally incomplete22. Signal components passing through distinct apertures are never recombined, so the mutual coherence of those components cannot be determined.

Put differently, the method cannot discriminate signals comprised of sharply localized non-overlapping components. Nevertheless, these problematic modes do not set any practical restriction. As a matter of fact, spatially bounded modes (that is, with vanishing amplitude outside a finite area) have an unbounded Fourier spectrum and so, an unlimited range of transversal momenta. Such modes cannot thus be prepared with finite resources and they must be excluded from our considerations: for all practical purposes, the S–H performs an informationally complete measurement and any practically realizable signal can be characterized with the present approach.

To proceed further in this matter, we expand the signal as a finite superposition of a suitable spatially unbounded computational basis (depending on the actual experiment, one should use plane waves, Laguerre–Gauss beams and so on). If that basis is labelled by |k› (k=1,…, d, with d being the dimension), the complex amplitudes are ‹x|k›=ψk(x). Therefore, the coherence matrix ρ and the measurement operators Πij are given by d × d non-negative matrices. A convenient representation of Πij can be obtained directly from equation (2), viz,

$$(\Pi_{ij})_{mn}=\langle m|\pi_{ij}\rangle\langle\pi_{ij}|n\rangle=\psi_{m,i}^{*}(\Delta p_j)\,\psi_{n,i}(\Delta p_j), \qquad (3)$$

where ψm,i(x) is the complex amplitude at the CCD plane of the ith lens generated by the incident mth basis mode ψm.

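This idea can be illustrated with the simple yet relevant example of square microlenses: A(x)=rect(x). We decompose the signal in a discrete set of plane waves ψk(x)=exp(−ipkx), parameterized by the transverse momenta pk. This is just the Fraunhofer diffraction on a slit, and the measurement matrix is

$$(\Pi_{ij})_{mn}=\mathrm{sinc}\!\left(\frac{\Delta p_j+p_m}{2}\right)\mathrm{sinc}\!\left(\frac{\Delta p_j+p_n}{2}\right)\exp\!\left[i(p_n-p_m)\Delta x_i\right],\qquad \mathrm{sinc}(u)=\frac{\sin u}{u}. \qquad (4)$$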

The smallest possible search space consists of two plane waves (which is equivalent to a single-qubit tomography). By considering different pixels j belonging to the same aperture i, linear combinations of only three out of the four Pauli matrices can be generated from equation (4). For example, a lens placed on the S–H axis (Δxi=0) fails to generate σy and at least one more lens with a different Δxi needs to be added to the setup to make the tomography complete.
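The single-qubit statement can be illustrated with a small numerical check. This is a sketch under stated assumptions: unit-width square lenses, the slit-diffraction amplitude written with the sign conventions of the displaced aperture states above, and illustrative values for the momenta, pixel positions and lens shifts. The function names are hypothetical.

```python
import numpy as np

PAULI = [
    np.eye(2, dtype=complex),
    np.array([[0, 1], [1, 0]], dtype=complex),
    np.array([[0, -1j], [1j, 0]], dtype=complex),
    np.array([[1, 0], [0, -1]], dtype=complex),
]

def pixel_operator(lens_shift, pixel_momentum, momenta):
    """Rank-one operator for one pixel behind one unit-width square lens."""
    p = np.asarray(momenta, dtype=float)
    # Slit-diffraction amplitude of each plane wave; np.sinc(t) = sin(pi t)/(pi t).
    amp = np.exp(1j * p * lens_shift) * np.sinc((pixel_momentum + p) / (2 * np.pi))
    return np.outer(np.conj(amp), amp)

def pauli_coefficients(op):
    """Real coefficients of op in the basis (identity, sigma_x, sigma_y, sigma_z)."""
    return np.array([np.real(np.trace(op @ s)) / 2 for s in PAULI])

def pauli_rank(lens_shifts, pixel_momenta, momenta):
    """Number of linearly independent Pauli combinations generated by the pixels."""
    rows = [pauli_coefficients(pixel_operator(s, q, momenta))
            for s in lens_shifts for q in pixel_momenta]
    return int(np.linalg.matrix_rank(np.array(rows)))

momenta = [-1.0, 1.0]                 # two plane waves (illustrative values)
pixels = np.linspace(-4.0, 4.0, 9)    # pixel momenta behind each lens (illustrative)
print(pauli_rank([0.0], pixels, momenta))       # on-axis lens only
print(pauli_rank([0.0, 1.0], pixels, momenta))  # on-axis lens plus a shifted lens
```

With the on-axis lens alone every pixel operator is real, so the σy coefficient vanishes and only three independent combinations appear; the shifted lens supplies the missing one.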

This argument can be easily extended: the larger the search space, the more microlenses must be used. In this example, the maximum number of independent measurements generated by the S–H detection is (2M+1)d−3M, for M lenses. A d-dimensional signal—a spatial qudit—can be characterized with about M~d/2 microlenses. This should be compared with the d quadratures required for the homodyne reconstruction of a photonic qudit23,24.
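The estimate M~d/2 follows from a simple count. As a worked sketch, assume that all d² real parameters of the coherence matrix must be covered by the independent measurements quoted above; this is a necessary condition only and says nothing about conditioning.

```latex
\begin{align}
(2M+1)\,d - 3M \;\ge\; d^{2}
\quad&\Longrightarrow\quad
M \;\ge\; \frac{d(d-1)}{2d-3},\\
d=2:&\quad M \ge 2,\\
d=7:&\quad M \ge \tfrac{42}{11}\approx 3.8 \;\Rightarrow\; M=4,\\
d\gg 1:&\quad M \gtrsim \tfrac{d}{2}.
\end{align}
```

The case d=2 reproduces the single-qubit example: one lens gives 3 independent measurements, and a second lens is needed to reach 4.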

Experiment

We have validated our method with vortex beams25,26. Consider the one-parameter family of modes specified by the orbital angular momentum ℓ, Vℓ(r, ϕ)=‹r, ϕ|Vℓ›∝exp(iℓϕ), where (r, ϕ) are cylindrical coordinates. In our experiment, the partially coherent signal

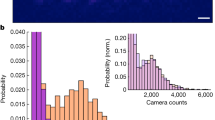

was created; that is, modes V−3 and V−6 are coherently superposed, while V3 is incoherently mixed. Figure 2 sketches the experimental layout used to generate equation (5). Imperfections of the setup and sensor noise cause the actually generated state to differ from the true state. Calibration and signal intensity scans are presented in Fig. 3.

Figure 2: Two independent laser sources, a He–Ne laser at 633 nm (He–Ne) and a laser diode at 635 nm (LD), are coupled into single-mode fibres (SMF) by fibre couplers (FC). After collimation (CO), they are transformed into vortex beams by two different techniques. The first beam, representing a coherent superposition of two vortex modes, is prepared by a digital hologram imprinted in a spatial light modulator (SLM). Unwanted diffraction orders are filtered by an aperture stop (AS), placed in a 4f system. The second beam is modulated by a vortex phase mask (PM) and represents a single vortex mode with an opposite phase orientation with respect to the first beam. Both beams are incoherently mixed in a beam splitter (BS) and finally detected in a S–H sensor (S–H).

Figure 3: Rescaled 8-bit data from seven microlenses arranged in a hexagonal geometry (81 × 81-pixel region) are displayed in both panels. (a) Data of the plane wave used for calibration; (b) data of the partially coherent vortex beam in equation (5). Green squares enclose the data used for the reconstruction. The intensity from the central microlens vanishes owing to the presence of a phase singularity.

The coherence matrix of the true state is expanded in the seven-dimensional (7D) space spanned by the modes Vℓ, with ℓ ∈ {−9, −6, −3, 0, +3, +6, +9}. The resulting matrix elements are plotted in Fig. 4.

Figure 4: Real ℜ and imaginary ℑ parts of the coherence matrix for the true state ρtrue (upper panel) and for the reconstructed ρ (lower panel). The reconstruction space is spanned by vortex modes with ℓ ∈ {−9, −6, −3, 0, +3, +6, +9}. The nonzero values of ρ−6,−3 and ρ−3,−6 describe coherences between the modes |V−6› and |V−3› and the phase shift π between them. The very small values of ρ3,−6, ρ3,−3, ρ−6,3 and ρ−3,3 come from the incoherent mixing of |V3› and |V−3− V−6›. The fidelity of the reconstructed coherence matrix is F=0.98.

To reconstruct the state we use a maximum likelihood algorithm27,28, whose results are summarized in Fig. 4. The main features of ρtrue are nicely displayed, which is also confirmed by the high fidelity of the reconstructed state, $F(\rho_{\mathrm{true}},\rho)=\mathrm{Tr}\sqrt{\sqrt{\rho_{\mathrm{true}}}\,\rho\,\sqrt{\rho_{\mathrm{true}}}}=0.98$. The off-diagonal elements detect the coherence between modes, whereas the diagonal ones give the amplitude ratios between them. The reconstruction errors are mainly due to the difference between the true and the actually generated state.
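For readers who want to evaluate such a figure of merit themselves, here is a minimal sketch of a fidelity between two coherence matrices. It assumes the square-root (Uhlmann-type) form Tr√(√ρ_a ρ_b √ρ_a); some authors quote the square of this quantity, and the source text does not settle which convention applies. The helper names are hypothetical.

```python
import numpy as np

def psd_sqrt(m):
    """Square root of a positive semidefinite Hermitian matrix via eigendecomposition."""
    m = (m + m.conj().T) / 2                 # remove rounding-level asymmetry
    vals, vecs = np.linalg.eigh(m)
    vals = np.clip(vals, 0.0, None)          # clip tiny negative eigenvalues
    return (vecs * np.sqrt(vals)) @ vecs.conj().T

def fidelity(rho_a, rho_b):
    """Tr sqrt( sqrt(rho_a) rho_b sqrt(rho_a) ) for unit-trace matrices."""
    s = psd_sqrt(rho_a)
    return float(np.real(np.trace(psd_sqrt(s @ rho_b @ s))))

pure = np.array([[1, 0], [0, 0]], dtype=complex)
mixed = np.eye(2, dtype=complex) / 2
print(fidelity(pure, pure), fidelity(pure, mixed))
```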

To the best of our knowledge, this is the first experimental measurement of coherence properties with a wavefront sensor. The procedure outperforms the standard S–H operation, both in terms of dynamical range and resolution, even for fully coherent beams. For example, high-order vortex beams with strongly helical wavefronts are very difficult to analyse with standard wavefront sensors, whereas they pose no difficulty for our proposed approach.

The dynamical range and the resolution of the S–H tomography are delimited by the choice of the search space {|k›} and can be quantified by the singular spectrum29 of the measurement matrix Πij. For the data in Fig. 4, the singular spectrum (which is the analogue of the modulation transfer function in wave optics) is shown in Fig. 5. Depending on the threshold, around 20 out of the total of 49 modes spanning the space of 7 × 7 coherence matrices can be discriminated. The modes outside this field of view are mainly those with significant intensity contributions out of the rectangular regions of the CCD sensor. Further improvements can be expected by exploiting the full CCD area and/or using a CCD camera with more resolution, at the expense of more computational resources for data post-processing.

Figure 5: The singular spectrum {Skk} of the data in Fig. 4 (here, sorted and normalized to the largest singular value) quantifies the sensitivity of the tomography setup to the normal modes of the problem (see Methods). The relative strengths of the singular values correspond to the relative measuring accuracy of those modes. The dynamical range (or field of view) can be defined as the set of normal modes with singular values exceeding a given threshold.

3D imaging

Once the feasibility of the S–H tomography has been proven, we illustrate its utility with an experimental demonstration of 3D imaging (or digital propagation) of partially coherent fields.

As is well known16, knowledge of the transverse intensity distribution at an input plane is, in general, not sufficient for calculating the transverse profile at other output planes. Propagation requires the explicit form of the mutual coherence function Gin at the input to determine Iout:

$$I_{\mathrm{out}}(x)=\iint h(x,x')\,G_{\mathrm{in}}(x',x'')\,h^{*}(x,x'')\,\mathrm{d}x'\,\mathrm{d}x''. \qquad (6)$$

Here x′ (x′′) and x are the coordinates parameterizing the input and output planes, respectively, and h(x, x′) the response function accounting for propagation.
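A discretized version of this propagation rule makes the role of coherence explicit. The sketch below assumes the sampled form I_out[i] = Σ h[i,a] G_in[a,b] h*[i,b]; the two-point source and the phase-only kernel with unit scaling are hypothetical choices for illustration, not the response function of the experiment.

```python
import numpy as np

def propagate_intensity(h, g_in, dx=1.0):
    """Output intensity from a sampled mutual coherence function:
    I_out[i] = sum_{a,b} h[i,a] G_in[a,b] conj(h[i,b]) dx^2."""
    return np.real(np.einsum("ia,ab,ib->i", h, g_in, np.conj(h))) * dx ** 2

# Two point sources at x' = -1 and x' = +1 with equal intensity.
x_in = np.array([-1.0, 1.0])
x_out = np.linspace(-3.0, 3.0, 7)
h = np.exp(-1j * np.outer(x_out, x_in))   # hypothetical Fraunhofer-type kernel

g_coherent = np.ones((2, 2), dtype=complex)   # fully coherent pair
g_incoherent = np.eye(2, dtype=complex)       # same intensities, no coherence

print(propagate_intensity(h, g_coherent))     # fringes
print(propagate_intensity(h, g_incoherent))   # flat profile
```

Both inputs have identical intensity at the input plane, yet only the coherent one produces interference fringes at the output, which is why the intensity alone does not suffice.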

The dependence of the far-field intensity on the beam coherence properties is evidenced in Fig. 6 for coherent, partially coherent and incoherent superpositions of vortex beams.

Figure 6: We have considered different mixtures of the modes |V4›, |V−4› and |V0› and calculated the associated intensity distribution as a Fraunhofer diffraction pattern. (a) Fully coherent superposition |V4+V−4+0.4V0›‹V4+V−4+0.4V0|; (b) incoherent mixture |V4›‹V4|+|V−4›‹V−4|+0.4|V0›‹V0|; and (c) partially coherent mixture |V4+V−4›‹V4+V−4|+0.4|V0›‹V0|.
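The three mixtures can be written as 3 × 3 coherence matrices and compared through their purity Tr(ρ²). This is an illustrative sketch: it assumes the vortex modes are orthonormal, orders the basis as (V4, V−4, V0), and normalizes each matrix to unit trace; the helper names are hypothetical.

```python
import numpy as np

def normalised(m):
    """Rescale a coherence matrix to unit trace."""
    m = np.asarray(m, dtype=complex)
    return m / np.real(np.trace(m))

def purity(rho):
    """Tr(rho^2): 1 for a fully coherent signal, smaller otherwise."""
    return float(np.real(np.trace(rho @ rho)))

v_all = np.array([1.0, 1.0, 0.4])    # V4 + V-4 + 0.4 V0
v_pair = np.array([1.0, 1.0, 0.0])   # V4 + V-4
v_zero = np.array([0.0, 0.0, 1.0])   # V0

rho_a = normalised(np.outer(v_all, v_all))                                  # (a) coherent
rho_b = normalised(np.diag([1.0, 1.0, 0.4]))                                # (b) incoherent
rho_c = normalised(np.outer(v_pair, v_pair) + 0.4 * np.outer(v_zero, v_zero))  # (c) partial

for name, rho in (("a", rho_a), ("b", rho_b), ("c", rho_c)):
    print(name, purity(rho))
```

The off-diagonal entries are what distinguish the three cases, and they are exactly the quantities an intensity-only measurement cannot supply.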

Once the coherence matrix is reconstructed, the forward/backward spatial propagation can be obtained using tools of diffraction theory and, consequently, the full 3D spatial intensity distribution can be computed. In particular, the intensity profile at the focal plane of an imaging system can be predicted from the S–H measurements. This has been experimentally confirmed, as sketched in Fig. 7. We prepared the partially coherent superposition |V4+V−4›‹V4+V−4|+k|V0›‹V0| and characterized it by the S–H tomography method. The reconstructed coherence function (upper left) was digitally propagated to the focal plane of a lens and the intensity distribution at this plane was calculated (upper right) and compared with the actual CCD scan in the same plane (lower right). Excellent agreement between the predicted and measured distributions was found.

Figure 7: The prediction of the far-field intensity distribution is compared with a direct intensity measurement. The partially coherent vortex beam |V4+V−4›‹V4+V−4|+k|V0›‹V0| was generated (with a beam diameter of 4.9 mm) with a fixed parameter k (unknown before the reconstruction). Upper, middle and lower panels correspond to the S–H tomography, standard S–H measurement and direct intensity measurement, respectively. Upper left: real and imaginary parts of the reconstructed ρ in the 7D space spanned by the vortices Vℓ with ℓ ∈ {−6, −4, −2, 0, 2, 4, 6}. Upper right: calculated far-field intensity distribution Iρ based on the reconstructed ρ propagated to the focal plane of the lens (f=500 mm). Middle left: intensity distribution (in arbitrary units) and wavefront as measured by the standard S–H sensor. Middle right: calculated far-field intensity distribution Istd using the standard S–H wavefront reconstruction and the transport of intensity equation included in the sensor (HASO). Bottom left: schematic picture of the direct intensity measurement at the lens focal plane. Bottom right: the result of the direct intensity measurement ICCD at the focal plane with a CCD camera.

We emphasize that the standard S–H operation fails in this kind of application30. Indeed, we measured the intensity and wavefront of the target vortex superposition with a standard S–H sensor (middle left) and propagated the measured intensity to the focal plane using the transport of intensity equation31,32 (middle right). To quantify the result, we compute the normalized correlation coefficient $C(I_a,I_b)=\sum_{i,j}I_aI_b\big/\sqrt{\sum_{i,j}I_a^{2}\,\sum_{i,j}I_b^{2}}$, where the sums run over the pixel indices i, j, of the measured intensity with the prediction: the result, C(Istd, ICCD)=0.47, confirms the inability of the standard S–H to cope with the coherence properties of the signal. This has to be compared with the result for the S–H tomography: C(Iρ, ICCD)=0.89, which supports its advantages.
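A normalized correlation of this kind is straightforward to compute for two images sampled on the same pixel grid. The sketch below assumes the form Σ I_a I_b / √(Σ I_a² Σ I_b²) with no mean subtraction; the function name and the test images are hypothetical.

```python
import numpy as np

def normalized_correlation(i_a, i_b):
    """Sum(Ia*Ib) / sqrt(Sum(Ia^2) * Sum(Ib^2)) over all pixels of two equal-shape images."""
    a = np.asarray(i_a, dtype=float)
    b = np.asarray(i_b, dtype=float)
    if a.shape != b.shape:
        raise ValueError("images must be resampled to the same pixel grid")
    return float(np.sum(a * b) / np.sqrt(np.sum(a ** 2) * np.sum(b ** 2)))

# Toy images: a ring-like pattern and a brighter copy of it.
yy, xx = np.mgrid[-16:17, -16:17]
ring = np.exp(-((np.hypot(xx, yy) - 8.0) ** 2) / 8.0)
print(normalized_correlation(ring, 3.0 * ring))
```

Because the coefficient is insensitive to an overall intensity scale, it compares the shapes of the two distributions, which is the relevant question when detectors with different gains are involved.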

Discussion

We have demonstrated a non-trivial coherence measurement with a S–H sensor. This goes further than the standard analysis and constitutes a substantial leap ahead that might trigger potential applications in many areas. Such a breakthrough would not have been possible without reinterpreting the S–H operation as a simultaneous unsharp measurement of position and momentum. This immediately allows one to set a fundamental limit in the experimental accuracy33.

Moreover, although the S–H has been the thread for our discussion, it is not difficult to extend the treatment to other wavefront sensors. For example, let us consider the recent results for temperature deviations of the cosmic microwave background34. The anisotropy is mapped as spots on the sphere, representing the distribution of directions of the incoming radiation. To get access to the position distribution, the detector has to be moved and, in principle, such a scanning brings information about the position and direction simultaneously: the position of the measured signal before detection is delimited by the scanning aperture, whereas the direction the signal comes from is revealed by the detector placed at the focal plane. When the aperture moves, it scans the field repeatedly at different positions. This could be an excellent chance to investigate the coherence properties of the relic radiation. To the best of our knowledge, this question has not been posed yet. Quantum tomography is especially germane for this task.

Finally, let us stress that classical estimation theory has already been applied to the raw S–H image data, offering improved accuracy, but at a greater computational cost35,36. However, the protocol used here can be implemented in a very easy, compact way, without any numerical burden.

Methods

Partially-coherent beam preparation

Two independent vortex beams were created in the setup of Fig. 2 with two laser sources of nearly the same wavelength: a He–Ne (633 nm) and a diode laser (635 nm). The output beams were spatially filtered by coupling them into single-mode fibres. The power ratio between the modes was controlled by changing the coupling efficiency. The resulting modes were transformed into vortex beams by different methods.

The state |V−3− V−6› was realized using a digital hologram prepared with an amplitude spatial light modulator (OPTO SLM), with a resolution of 1,024 × 768 pixels. The hologram was then illuminated by a reference plane wave produced by placing the output of a single-mode fibre at the focal plane of a collimating lens. The diffraction spectrum involves several orders, of which only one contains useful information. To filter out the unwanted orders, a 4f-optical processor, with a 0.3-mm circular aperture stop placed at the rear focal plane of the second lens, was used. The resulting coherent vortex beam is then realized at the focal plane of the third lens.

The second beam |V3› was obtained through a plane-wave phase profile modulation by a special vortex phase mask (RPC Photonics). Finally, the field in equation (5) was prepared by mixing the two vortex modes in a beam splitter.

During the state preparation, special care was taken to reduce any deviation between the true and target states. This involved minimizing aberrations as well as imperfections of the spatial light modulator, resulting in distortions of the transmitted wavefront.

S–H detection

The S–H measurement involved a Flexible Optical array of 128 microlenses arranged in a hexagonal pattern. Each microlens has a focal length of 17.9 mm and a hexagonal aperture of 0.3 mm. The signal at the focal plane of the array is detected by a uEye CCD camera with a resolution of 640 × 480 pixels, each pixel being 9.9 μm × 9.9 μm in size. Because of microlens array imperfections, CCD–microlens misalignment and aberrations of the 4f processor (aberrations of the collimating optics are negligible), calibration of the detector must be carried out. The holographic part of the setup provided this calibration wave. S–H data from the calibration wave and the partially coherent beam are shown in Fig. 3. The beam axis position in the microlens array coordinates was adjusted with a Gaussian mode. The detection noise is mainly due to the background light, which is filtered out before reconstruction.
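The quoted hardware parameters fix the sampling scales of the detection. As a rough worked estimate, assuming the 0.3 mm figure is the full aperture width D and taking the He–Ne wavelength λ=633 nm:

```latex
\begin{align}
\delta\theta &\approx \frac{9.9\,\mu\mathrm{m}}{17.9\,\mathrm{mm}} \approx 5.5\times10^{-4}\ \mathrm{rad}
  && \text{(angle subtended by one pixel)},\\
N_{\mathrm{pix}} &\approx \frac{0.3\,\mathrm{mm}}{9.9\,\mu\mathrm{m}} \approx 30
  && \text{(pixels across one lenslet)},\\
\frac{\lambda f}{D} &\approx \frac{633\,\mathrm{nm}\times 17.9\,\mathrm{mm}}{0.3\,\mathrm{mm}} \approx 38\,\mu\mathrm{m} \approx 3.8\ \text{pixels}
  && \text{(diffraction scale of a focal spot)}.
\end{align}
```

On these numbers each focal spot is spread over several pixels, so the pixels behind one lenslet sample its diffraction pattern rather than a single centroid.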

Reconstruction

The reconstruction was done in the 7D space spanned by the Vℓ modes with ℓ ∈ {−9, −6, −3, 0, +3, +6, +9}. All in all, 49 real parameters had to be reconstructed. The data come from CCD areas belonging to seven microlenses around the beam axis; each one of them comprises 11 × 11 pixels, which means 847 data samples altogether. An iterative maximum likelihood algorithm27,28 was applied to estimate the true coherence matrix of the signal.
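To give a flavour of iterative maximum likelihood estimation, here is a minimal sketch of the generic RρR fixed-point iteration on a toy qubit problem. It is not the authors' implementation: it assumes a measurement whose operators sum to the identity, whereas the S–H operators do not, so the actual scheme requires the generalization described in the cited references. All names and the toy data are hypothetical.

```python
import numpy as np

def ml_reconstruct(povm, freqs, n_iter=500):
    """Generic R-rho-R maximum-likelihood iteration for a complete POVM."""
    d = povm[0].shape[0]
    rho = np.eye(d, dtype=complex) / d            # start from the maximally mixed state
    for _ in range(n_iter):
        probs = [max(float(np.real(np.trace(rho @ p))), 1e-15) for p in povm]
        r = sum((f / q) * p for f, q, p in zip(freqs, probs, povm))
        rho = r @ rho @ r
        rho = (rho + rho.conj().T) / 2            # keep the estimate Hermitian
        rho = rho / np.real(np.trace(rho))        # renormalise to unit trace
    return rho

# Toy qubit example: six-outcome measurement built from the Pauli eigenprojectors.
identity = np.eye(2, dtype=complex)
sigma = [np.array([[0, 1], [1, 0]], dtype=complex),
         np.array([[0, -1j], [1j, 0]], dtype=complex),
         np.array([[1, 0], [0, -1]], dtype=complex)]
povm = [(identity + sign * s) / 6 for s in sigma for sign in (1, -1)]

rho_true = (identity + 0.3 * sigma[0] + 0.2 * sigma[1] + 0.4 * sigma[2]) / 2
freqs = [float(np.real(np.trace(rho_true @ p))) for p in povm]   # noiseless data
rho_est = ml_reconstruct(povm, freqs)
print(np.round(rho_est, 4))
```

The update keeps the estimate positive semidefinite by construction, which is the main practical advantage over a direct linear inversion of the data.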

Dynamical range and resolution

The errors of the S–H tomography can be quantified by evaluating the covariances of the parameters of the reconstructed coherence matrix ρ. In the absence of systematic errors, the Cramér–Rao lower bound37,38 can be employed to that end. In practice, a simpler approach based on the singular spectrum analysis29 works pretty well.

Let us decompose the d × d coherence matrix ρ (d is just the dimension of the search space) and the measurement operators Πij in an orthonormal matrix basis Γk (k=1, …, d²) [Tr(ΓkΓl)=δkl], namely

$$\rho=\sum_k r_k\,\Gamma_k,\qquad \Pi_{ij}=\sum_k p_k^{ij}\,\Gamma_k, \qquad (7)$$

so that the Born-like rule (2) can be recast as a system of linear equations

$$I_{ij}=\sum_k p_k^{ij}\,r_k. \qquad (8)$$

On using a single index α to label all possible microlens/CCD–pixel combinations α≡{i, j}, equation (8) can be concisely expressed in the matrix form

$$\mathbf{I}=\mathbf{P}\,\mathbf{r}, \qquad (9)$$

where I is the vector of measured data, r is the vector of coherence-matrix parameters and $P_{\alpha k}=p_k^{\alpha}$ is the tomography matrix.

Obviously, for ill-conditioned measurements, the reconstruction errors will be larger and vice versa. By applying a singular-value decomposition to the measurement matrix P=USV†, equation (9) takes the diagonal form

$$\mathbf{I}'=\mathbf{S}\,\mathbf{r}', \qquad (10)$$

where r′=V†r and I′=U†I are the normal modes of the problem and the corresponding transformed data, respectively. The singular values Skk are the eigenvalues associated with the normal modes, so the relative sensitivity of the tomography to different normal modes is given by the relative sizes of the corresponding singular values. With the help of equations (9) and (10), the errors are readily propagated from the detection I to the reconstruction r.

Drawing an analogy between equation (10) and the filtering by a linear spatially invariant system, the singular spectrum Skk and the sum of the singular values ΣkSkk are the discrete analogues of the modulation transfer function and the maximum of the point spread function, respectively. Hence we define the dynamical range (or field of view) of the S–H tomography as the set of normal modes with singular values exceeding a given threshold. The sum of the singular values then describes the overall performance of the S–H tomography setup. When some of the singular values are zero, the tomography is not informationally complete and the search space must be readjusted.
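The singular-spectrum analysis can be sketched in a few lines. The example below builds a tomography matrix from a set of measurement operators expanded in an orthonormal Hermitian basis and counts the normal modes above a threshold. The basis construction, the threshold value and the toy qubit operators are assumptions chosen for illustration, not the matrices of the experiment.

```python
import numpy as np

def hermitian_basis(d):
    """Orthonormal Hermitian matrices Gamma_k with Tr(Gamma_k Gamma_l) = delta_kl."""
    basis = []
    for m in range(d):
        e = np.zeros((d, d), dtype=complex)
        e[m, m] = 1.0
        basis.append(e)
    for m in range(d):
        for n in range(m + 1, d):
            sym = np.zeros((d, d), dtype=complex)
            sym[m, n] = sym[n, m] = 1 / np.sqrt(2)
            asym = np.zeros((d, d), dtype=complex)
            asym[m, n], asym[n, m] = -1j / np.sqrt(2), 1j / np.sqrt(2)
            basis += [sym, asym]
    return basis

def tomography_matrix(operators, basis):
    """P[alpha, k] = Tr(Pi_alpha Gamma_k), real for Hermitian operators."""
    return np.array([[np.real(np.trace(op @ g)) for g in basis] for op in operators])

def singular_spectrum(operators, d, threshold=1e-3):
    """Normalised singular values and the number exceeding the threshold."""
    s = np.linalg.svd(tomography_matrix(operators, hermitian_basis(d)), compute_uv=False)
    s_rel = s / s[0]
    return s_rel, int(np.sum(s_rel > threshold))

identity = np.eye(2, dtype=complex)
sigma = [np.array([[0, 1], [1, 0]], dtype=complex),
         np.array([[0, -1j], [1j, 0]], dtype=complex),
         np.array([[1, 0], [0, -1]], dtype=complex)]
full_set = [(identity + sign * s) / 6 for s in sigma for sign in (1, -1)]
z_only = [(identity + sigma[2]) / 2, (identity - sigma[2]) / 2]

print(singular_spectrum(full_set, 2))   # all 4 normal modes accessible
print(singular_spectrum(z_only, 2))     # only 2 of 4: informationally incomplete
```

When the count falls short of d², as for the second set, the missing normal modes lie outside the field of view and the search space would have to be readjusted.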

Far-field intensity

In the experiment on 3D imaging, the partially coherent vortex beam |V4+V−4›‹V4+V−4|+k|V0›‹V0| was generated, where k was a parameter governing the degree of spatial coherence. To this end, a coherent mixture |V4+V−4›‹V4+V−4| was realized by the digital-holography part of the setup, whereas the zero-order vortex beam |V0› was prepared by removing the spiral phase mask. The output diameter of the beam was set to 4.9 mm.

The measurement was done in three steps. First, the S–H sensor (see Fig. 2) was replaced by a lens of 500 mm focal length and the far-field intensity was detected at its rear focal plane with a CCD camera (Olympus F-View II, 1376 × 1032 pixels, 6.45 μm × 6.45 μm each). Second, the same vortex superposition was subjected to the S–H tomography using the S–H sensor (Flexible Optical), with the coherence matrix reconstructed in the 7D subspace spanned by the vortices Vℓ with ℓ ∈ {−6, −4, −2, 0, +2, +4, +6}. Once ρ was reconstructed, the far-field intensity was computed using equation (6), where the focusing is described by the Fraunhofer diffraction response function. The predicted intensity was found to be in excellent agreement with the direct sampling by the Olympus CCD camera. Finally, the Flexible Optical S–H sensor was replaced by a HASO3 S–H detector. The intensity and wavefront of the prepared vortex beam were measured and the far-field intensity was computed by resorting to the transport of intensity performed by the HASO software. Resampling was done to match the resolution of the HASO output to the resolution of the Olympus CCD camera.

Additional information

How to cite this article: Stoklasa, B. et al. Wavefront sensing reveals optical coherence. Nat. Commun. 5:3275 doi: 10.1038/ncomms4275 (2014).

References

Malacara, D. (ed.) Optical Shop Testing 3rd ed Wiley (2007).

Dai, G.-M. Wavefront Optics for Vision Correction SPIE (2008).

Ares, J., Mancebo, T. & Bará, S. Position and displacement sensing with Shack-Hartmann wave-front sensors. Appl. Opt. 39, 1511–1520 (2000).

Katz, O., Small, E., Bromberg, Y. & Silberberg, Y. Focusing and compression of ultrashort pulses through scattering media. Nat. Photonics 5, 372–377 (2011).

McCabe, D. J. et al. Spatio-temporal focusing of an ultrafast pulse through a multiply scattering medium. Nat. Commun. 2, 447 (2011).

Mosk, A. P., Lagendijk, A., Lerosey, G. & Fink, M. Controlling waves in space and time for imaging and focusing in complex media. Nat. Photonics 6, 283–292 (2012).

Tyson, R. K. Principles of Adaptive Optics 3rd ed CRC (2011).

Geary, J. M. Introduction to Wavefront Sensors SPIE (1995).

Luke, D. R., Burke, J. V. & Lyon, R. G. Optical wavefront reconstruction: theory and numerical methods. SIAM Rev. 44, 169–224 (2002).

Campbell, H. I. & Greenaway, A. H. Wavefront sensing: from historical roots to the state-of-the-art. EAS Publications 22, 165–185 (2006).

Platt, B. C. & Shack, R. S. History and principles of Shack-Hartmann wavefront sensing. J. Refract. Surg. 17, S573–S577 (2001).

Primot, J. Theoretical description of Shack-Hartmann wave-front sensor. Opt. Commun. 222, 81–92 (2003).

Mandel, L. & Wolf, E. Optical Coherence and Quantum Optics Cambridge Univ. Press (1995).

Hradil, Z., Řeháček, J. & Sánchez-Soto, L. L. Quantum reconstruction of the mutual coherence function. Phys. Rev. Lett. 105, 010401 (2010).

Paris, M. G. A. & Řeháček, J. (eds) Quantum State Estimation Vol. 649 of Lecture Notes in Physics Springer (2004).

Goodman, J. W. Introduction to Fourier Optics 3rd ed Roberts (2005).

Arthurs, E. & Kelly, J. L. J. On the simultaneous measurement of a pair of conjugate observables. Bell Syst. Tech. J. 44, 725–729 (1965).

Stenholm, S. Simultaneous measurement of conjugate variables. Ann. Phys. 218, 197–198 (1992).

Raymer, M. G. Uncertainty principle for joint measurement of noncommuting variables. Am. J. Phys. 62, 986–993 (1994).

Husimi, K. Some formal properties of the density matrix. Proc. Phys. Math. Soc. Jpn 22, 264–314 (1940).

Lvovsky, A. I. & Raymer, M. G. Continuous-variable optical quantum-state tomography. Rev. Mod. Phys. 81, 299–322 (2009).

Busch, P. & Lahti, P. The determination of the past and the future of a physical system in quantum mechanics. Found. Phys. 19, 633–678 (1989).

Leonhardt, U. & Munroe, M. Number of phases required to determine a quantum state in optical homodyne tomography. Phys. Rev. A 54, 3682–3684 (1996).

Sych, D., Řeháček, J., Hradil, Z., Leuchs, G. & Sánchez-Soto, L. L. Informational completeness of continuous-variable measurements. Phys. Rev. A 86, 052123 (2012).

Molina-Terriza, G., Torres, J. P. & Torner, L. Twisted photons. Nat. Phys. 3, 305–310 (2007).

Torres, J. & Torner, L. (eds) Twisted Photons: Applications of Light with Orbital Angular Momentum Wiley-VCH (2011).

Hradil, Z., Mogilevtsev, D. & Řeháček, J. Biased tomography schemes: an objective approach. Phys. Rev. Lett. 96, 230401 (2006).

Řeháček, J., Hradil, Z., Bouchal, Z., Čelechovský, R., Rigas, I. & Sánchez-Soto, L. L. Full tomography from compatible measurements. Phys. Rev. Lett. 103, 250402 (2009).

Bogdanov, Y. I. et al. Statistical estimation of the quality of quantum-tomography protocols. Phys. Rev. A 84, 042108 (2011).

Schäfer, B. & Mann, K. Determination of beam parameters and coherence properties of laser radiation by use of an extended Hartmann–Shack wave-front sensor. Appl. Opt. 41, 2809–2817 (2002).

Teague, M. R. Deterministic phase retrieval: a Green’s function solution. J. Opt. Soc. Am. A 73, 1434–1441 (1983).

Roddier, F. Wavefront sensing and the irradiance transport equation. Appl. Opt. 29, 1402–1403 (1990).

Appleby, D. M. Concept of experimental accuracy and simultaneous measurements of position and momentum. Int. J. Theor. Phys. 37, 1491–1509 (1998).

Hinshaw, G. et al. Five-year Wilkinson microwave anisotropy probe observations: data processing, sky maps, and basic results. Astrophys. J. Suppl. Ser. 180, 225–245 (2009).

Cannon, R. C. Global wave-front reconstruction using Shack-Hartmann sensors. J. Opt. Soc. Am. A 12, 2031–2039 (1995).

Barrett, H. H., Dainty, C. & Lara, D. Maximum-likelihood methods in wavefront sensing: stochastic models and likelihood functions. J. Opt. Soc. Am. A 24, 391–414 (2007).

Cramér, H. Mathematical Methods of Statistics Princeton University Press (1946).

Rao, C. R. Linear Statistical Inference and Its Applications Wiley (1973).

Acknowledgements

This work was supported by the Technology Agency of the Czech Republic (Grant TE01020229), the Czech Ministry of Industry and Trade (Grant FR-TI1/364), the IGA Projects of the Palacký University (Grants PRF_2012_005 and PRF_2013_019) and the Spanish MINECO (Grant FIS2011-26786).

Author information

Contributions

The experiment was conceived by J.R., Z.H. and L.L.S.-S. and carried out by B.S. The numerical reconstruction was performed by B.S., whereas J.R. and L.M. provided theoretical analysis. Z.H. and J.R. supervised the project. The theoretical part of manuscript was written by L.L.S.-S. and J.R. and the experimental part by B.S. and L.L.S.-S., with input and discussions from all other authors.

Ethics declarations

Competing interests

The authors declare no competing financial interests.
