Abstract

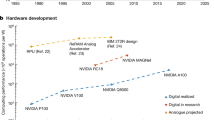

Neuromorphic computing draws inspiration from the brain to provide computing technology and architecture with the potential to drive the next wave of computer engineering [1–13]. Such brain-inspired computing also provides a promising platform for the development of artificial general intelligence [14,15]. However, unlike conventional computing systems, which have a well-established computer hierarchy built around the concept of Turing completeness and the von Neumann architecture [16–18], there is currently no generalized system hierarchy or understanding of completeness for brain-inspired computing. This affects the compatibility between software and hardware, impairing the programming flexibility and development productivity of brain-inspired computing. Here we propose ‘neuromorphic completeness’, which relaxes the requirement for hardware completeness, and a corresponding system hierarchy, which consists of a Turing-complete software-abstraction model and a versatile abstract neuromorphic architecture. Using this hierarchy, various programs can be described in a uniform representation and transformed into an equivalent executable on any neuromorphic-complete hardware; that is, the hierarchy ensures programming-language portability, hardware completeness and compilation feasibility. We implement a software toolchain to support the execution of different types of program on various typical hardware platforms, demonstrating the advantages of our system hierarchy, including a new system-design dimension introduced by neuromorphic completeness. We expect that our study will enable efficient and compatible progress in all aspects of brain-inspired computing systems, facilitating the development of various applications, including artificial general intelligence.


Data availability

The example applications that we used are publicly available, as described in the text and the relevant references. The experimental setups for demonstration and measurements are detailed in the text and the relevant references. Other data that support the findings of this study are available from the corresponding authors on reasonable request.

Code availability

The code used for the software toolchain and the demonstration neural networks is available from the corresponding authors on reasonable request.

References

Waldrop, M. The chips are down for Moore’s law. Nature 530, 144–147 (2016).

Kendall, J. D. & Kumar, S. The building blocks of a brain-inspired computer. Appl. Phys. Rev. 7, 011305 (2020).

Zhang, B., Shi, L. P. & Song, S. Creating more intelligent robots through brain-inspired computing. Science 354 (Spons. Suppl.), 4–9 (2016).

Roy, K., Jaiswal, A. & Panda, P. Towards spike-based machine intelligence with neuromorphic computing. Nature 575, 607–617 (2019).

Chen, Y. et al. DianNao family: energy-efficient hardware accelerators for machine learning. Commun. ACM 59, 105–112 (2016).

Jouppi, N. P. et al. In-datacenter performance analysis of a tensor processing unit. In Proc. 44th Annu. Int. Symp. Computer Architecture 1–12 (IEEE, 2017).

Schemmel, J. et al. A wafer-scale neuromorphic hardware system for large-scale neural modeling. In Proc. 2010 IEEE Int. Symp. Circuits and Systems 1947–1950 (IEEE, 2010).

Merolla, P. A. et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 345, 668–673 (2014).

Furber, S. B. et al. The SpiNNaker project. Proc. IEEE 102, 652–665 (2014).

Davies, M. et al. Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018).

Benjamin, B. V. et al. Neurogrid: a mixed-analog-digital multichip system for large-scale neural simulations. Proc. IEEE 102, 699–716 (2014).

Friedmann, S. et al. Demonstrating hybrid learning in a flexible neuromorphic hardware system. IEEE Trans. Biomed. Circuits Syst. 11, 128–142 (2017).

Neckar, A. et al. Braindrop: a mixed-signal neuromorphic architecture with a dynamical systems-based programming model. Proc. IEEE 107, 144–164 (2019).

Pei, J. et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 572, 106–111 (2019).

Goertzel, B. Artificial general intelligence: concept, state of the art, and future prospects. J. Artif. Gen. Intell. 5, 1–48 (2014).

Turing, A. M. On computable numbers, with an application to the Entscheidungsproblem. Proc. Lond. Math. Soc. 2, 230–265 (1937).

Eckert, J. P. Jr & Mauchly, J. W. Automatic High-speed Computing: A Progress Report on the EDVAC. Report No. W-670-ORD-4926 (Univ. Pennsylvania, 1945).

von Neumann, J. First draft of a report on the EDVAC. IEEE Ann. Hist. Comput. 15, 27–75 (1993).

Aimone, J. B., Severa, W. & Vineyard, C. M. Composing neural algorithms with Fugu. In Proc. Int. Conf. Neuromorphic Systems 1–8 (ACM, 2019).

Lagorce, X. & Benosman, R. Stick: spike time interval computational kernel, a framework for general purpose computation using neurons, precise timing, delays, and synchrony. Neural Comput. 27, 2261–2317 (2015).

Aimone, J. B. et al. Non-neural network applications for spiking neuromorphic hardware. In Proc. 3rd Int. Worksh. Post Moores Era Supercomputing 24–26 (IEEE–TCHPC, 2018).

Sawada, J. et al. TrueNorth ecosystem for brain-inspired computing: scalable systems, software, and applications. In Proc. Int. Conf. High Performance Computing, Networking, Storage and Analysis 130–141 (IEEE, 2016).

Rowley, A. G. D. et al. SpiNNTools: the execution engine for the SpiNNaker platform. Front. Neurosci. 13, 231 (2019).

Rhodes, O. et al. sPyNNaker: a software package for running PyNN simulations on SpiNNaker. Front. Neurosci. 12, 816 (2018).

Lin, C. K. et al. Programming spiking neural networks on Intel’s Loihi. Computer 51, 52–61 (2018).

Davison, A. P. et al. PyNN: a common interface for neuronal network simulators. Front. Neuroinform. 2, 11 (2009).

Bekolay, T. et al. Nengo: a Python tool for building large-scale functional brain models. Front. Neuroinform. 7, 48 (2014).

Hashmi, A., Nere, A., Thomas, J. J. & Lipasti, M. A case for neuromorphic ISAs. In ACM SIGARCH Computer Architecture News Vol. 39, 145–158 (ACM, 2011).

Schuman, C. D. et al. A survey of neuromorphic computing and neural networks in hardware. Preprint at https://arxiv.org/abs/1705.06963 (2017).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Poggio, T. & Girosi, F. Networks for approximation and learning. Proc. IEEE 78, 1481–1497 (1990).

Esmaeilzadeh, H., Sampson, A., Ceze, L. & Burger, D. Neural acceleration for general-purpose approximate programs. IEEE Micro 33, 16–27 (2013).

Mead, C. & Ismail, M. Analog VLSI Implementation of Neural Systems Ch. 5–6 (Springer, 1989).

Strukov, D. B., Snider, G. S., Stewart, D. R. & Williams, R. S. The missing memristor found. Nature 453, 80–83 (2008).

Prezioso, M. et al. Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521, 61–64 (2015).

Ji, Y. et al. FPSA: a full system stack solution for reconfigurable ReRAM-based NN accelerator architecture. In Proc. 24th Int. Conf. Architectural Support for Programming Languages and Operating Systems 733–747 (ACM, 2019).

Tuma, T., Pantazi, A., Le Gallo, M., Sebastian, A. & Eleftheriou, E. Stochastic phase-change neurons. Nat. Nanotechnol. 11, 693–699 (2016).

Negrov, D. et al. An approximate backpropagation learning rule for memristor based neural networks using synaptic plasticity. Neurocomputing 237, 193–199 (2016).

Maass, W. Networks of spiking neurons: the third generation of neural network models. Neural Netw. 10, 1659–1671 (1997).

Leshno, M. et al. Multilayer feedforward networks with a nonpolynomial activation function can approximate any function. Neural Netw. 6, 861–867 (1993).

Dennis, J. B., Fosseen, J. B. & Linderman, J. P. Data flow schemas. In Int. Symp. Theoretical Programming 187–216 (Springer, 1974).

Jagannathan, R. Coarse-grain dataflow programming of conventional parallel computers. In Advanced Topics in Dataflow Computing and Multithreading 113–129 (IEEE, 1995).

Zhang, W. & Yang, Y. A survey of mathematical modeling based on flocking system. Vibroengineering PROCEDIA 13, 243–248 (2017).

Hennessy, J. & Patterson, D. A new golden age for computer architecture. Commun. ACM 62, 48–60 (2019).

Deng, L. et al. Tianjic: a unified and scalable chip bridging spike-based and continuous neural computation. IEEE J. Solid-State Circuits 55, 2228–2246 (2020).

Dong, X. et al. NVSim: a circuit-level performance, energy, and area model for emerging nonvolatile memory. IEEE Trans. Comput. Aided Des. Integrated Circ. Syst. 31, 994–1007 (2012).

Cong, J. & Xiao, B. mrFPGA: a novel FPGA architecture with memristor-based reconfiguration. In 2011 IEEE/ACM Int. Symp. Nanoscale Architectures 1–8 (IEEE, 2011).

Luu, J. et al. VTR 7.0: next generation architecture and CAD system for FPGAs. ACM Trans. Reconfig. Technol. Syst. 7, 6 (2014).

Reynolds, C. W. Flocks, herds and schools: a distributed behavioral model. In Proc. 14th Annu. Conf. Computer Graphics and Interactive Techniques 25–34 (ACM, 1987).

Bajec, I. L., Zimic, N. & Mraz, M. The computational beauty of flocking: boids revisited. Math. Comput. Model. Dyn. Syst. 13, 331–347 (2007).

Parker, C. XBoids, GPL version 2 licensed. http://www.vergenet.net/~conrad/boids/download (2002).

Aslan, S., Niu, S. & Saniie, J. FPGA implementation of fast QR decomposition based on Givens rotation. In Proc. 55th Int. Midwest Symp. Circuits and Systems 470–473 (IEEE, 2012).

Acknowledgements

This work was partly supported by Beijing Academy of Artificial Intelligence (No. BAAI2019ZD0403), NSFC (No. 61836004), Brain-Science Special Program of Beijing under grant Z181100001518006, Beijing National Research Center for Information Science and Technology, Beijing Innovation Center for Future Chips, Tsinghua University and Tsinghua University-China Electronics Technology HIK Group Co. Joint Research Center for Brain-inspired Computing, JCBIC.

Author information


Contributions

Y.Z., P.Q., Y.J. and W. Zhang proposed the idea for the brain-inspired hierarchy. Y.Z. was in charge of the overall design. P.Q. proposed the ideas for the POG and the proof of its Turing completeness. Y.J. proposed the ideas for neuromorphic completeness, the EPG, the basic execution primitives and the constructive proof of its neuromorphic completeness. W. Zhang proposed the ideas for the ANA and the mapping from the EPG to it. P.Q. performed the experiment on the GPU. Y.J. performed the experiment on the FPSA. W. Zhang and G.W. performed the experiments on Tianjic. Y.J. and W. Zhang performed the experiments on the Boid model and QR decomposition for the revision. G.G. gave advice on the theory of architecture and hierarchy. L.S. was in charge of the Tianjic work and proposed the idea to bridge dual-driven brain-inspired computing with artificial general intelligence. G.G., S.S., G.L., W.C., W. Zheng, F.C., J.P., R.Z., M.Z. and L.S. contributed to the analysis and interpretation of results. All authors contributed to the discussion of the design principle of the brain-inspired hierarchy. Y.Z., L.S., P.Q. and R.Z. revised the manuscript, with input from all authors. Y.Z. and L.S. supervised the project.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature thanks Oliver Rhodes and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

Extended Data Fig. 1 POG.

a, Two sample operators: the left is a single mathematical operation and the right is a simple algorithm that consists of several computation operations. b, A sample operator graph for rectified linear units (ReLU). c, Parameter updater. More details are provided in Supplementary Information section 3.3.1. d, Main control-flow operators: conditional decider, conditional merger, true gate and false gate. e, Control-flow operator graphs of the branch and the loop. f, Synapse operator: it is enabled when any of the inputs arrive. i, input; o, output; T/F, true/false branch; P, parameter of the operator; P′, the new value of P; OGT/F, operator graph of the true/false branch; Body, operator graph of the loop body; Cond, operator graph of the loop condition.
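The control-flow operators in panels d and e can be illustrated with a small token-passing sketch. This is a hypothetical Python model, not the authors' POG implementation: a token is a value on a graph edge, `None` stands for "no token on this edge", and all function names (`conditional_decider`, `true_gate`, `false_gate`, `conditional_merger`, `relu`, `while_loop`) are illustrative. It expresses ReLU as a branch (one possible graph, not necessarily the one drawn in panel b) and the loop pattern of panel e, with Cond steering each token either into Body or out of the loop.

```python
from typing import Callable, Optional

# Hypothetical token model: a token is a value on an edge; None = no token.
Token = Optional[float]


def conditional_decider(x: float, cond: Callable[[float], bool]) -> bool:
    """Emit a Boolean control token computed from the incoming data token."""
    return cond(x)


def true_gate(x: Token, ctrl: bool) -> Token:
    """Pass the data token through only if the control token is True."""
    return x if ctrl else None


def false_gate(x: Token, ctrl: bool) -> Token:
    """Pass the data token through only if the control token is False."""
    return None if ctrl else x


def conditional_merger(t: Token, f: Token, ctrl: bool) -> Token:
    """Forward the token from the branch selected by the control token."""
    return t if ctrl else f


def relu(x: float) -> Token:
    """ReLU written as a branch: OG_T is the identity, OG_F emits 0."""
    c = conditional_decider(x, lambda v: v > 0)
    t_out = true_gate(x, c)                   # OG_T: identity
    f_in = false_gate(x, c)
    f_out = None if f_in is None else 0.0     # OG_F: constant zero
    return conditional_merger(t_out, f_out, c)


def while_loop(x0: float,
               cond: Callable[[float], bool],
               body: Callable[[float], float]) -> Token:
    """Loop pattern: the decider (Cond) steers the token to Body or the exit."""
    v = x0                                    # entry token selected by the merger
    while True:
        c = conditional_decider(v, cond)
        to_body = true_gate(v, c)
        to_exit = false_gate(v, c)
        if to_exit is not None:               # False branch leaves the loop
            return to_exit
        v = body(to_body)                     # Body output is fed back
```

For example, `relu(-2.0)` returns `0.0`, and `while_loop(1.0, lambda v: v < 100, lambda v: 2 * v)` doubles the token until the condition fails and returns `128.0`. The Python `while` statement only emulates firing order sequentially; in a real dataflow graph each operator fires as soon as its input tokens arrive.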

Extended Data Fig. 2 Bicycle driving and tracking task.

a–e, POGs of the five neural network examples. Conv2d, two-dimensional convolution operator; MatMul, matrix multiplication operator; LIF, operator of the leaky integrate-and-fire (LIF) neuron model; Norm, normalization operator; W, b, weight and bias parameters for the corresponding operator. f, The overall relationships between these networks.
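The caption does not specify the dynamics behind the LIF operator. As a point of reference only, the sketch below assumes a common discrete-time leaky integrate-and-fire update with a multiplicative leak `decay`, a firing threshold `v_th` and a hard reset to `v_reset`; these parameter names and default values are hypothetical, not taken from the paper.

```python
from typing import List, Tuple


def lif_step(v: float, i_in: float, decay: float = 0.9,
             v_th: float = 1.0, v_reset: float = 0.0) -> Tuple[float, int]:
    """One assumed discrete-time LIF update; returns (new potential, spike)."""
    v = decay * v + i_in          # leak the old potential, integrate the input
    if v >= v_th:                 # fire once the threshold is reached
        return v_reset, 1         # hard reset after a spike
    return v, 0


def lif_run(inputs: List[float], decay: float = 0.9,
            v_th: float = 1.0, v_reset: float = 0.0) -> List[int]:
    """Drive one neuron with an input sequence and record its spike train."""
    v = v_reset
    spikes: List[int] = []
    for i_in in inputs:
        v, s = lif_step(v, i_in, decay, v_th, v_reset)
        spikes.append(s)
    return spikes
```

With a constant input of 0.5, the potential climbs to 0.5, then 0.95, then about 1.355, so `lif_run([0.5] * 5)` yields `[0, 0, 1, 0, 0]`: the neuron fires on the third step, resets and starts integrating again.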

Extended Data Fig. 4 The experiment using QR decomposition.

a, Pseudocode for QR decomposition by Givens rotation. b, The heuristic search algorithm applied to QR decomposition. Each step shows the approximators that have been evaluated so far and the current priority queue. The number on each node represents the cost of that approximator. The red triangle marks the current strategy. At each step, the first tree in the queue is selected (green rectangle) and its leaves are replaced by the root approximator. Dashed circles represent approximators that do not need to be constructed and evaluated. The whole procedure explores only four of the 16 possible strategies. In this case the final strategy happens to be the best, but in general this algorithm is not guaranteed to produce the optimal result.
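The pseudocode of panel a is not reproduced in this text. For reference, a plain-Python sketch of the standard Givens-rotation QR decomposition follows; the function name `givens_qr` and the list-of-lists layout are illustrative assumptions, the loop order may differ from the authors' pseudocode, and the approximator search of panel b is not modelled.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def givens_qr(a: Matrix) -> Tuple[Matrix, Matrix]:
    """QR decomposition of an m x n matrix by Givens rotations.

    Returns (Q, R) with Q orthogonal (m x m) and R upper triangular (m x n).
    """
    m, n = len(a), len(a[0])
    r = [[float(v) for v in row] for row in a]                 # becomes R
    q = [[float(i == j) for j in range(m)] for i in range(m)]  # accumulates Q
    for j in range(n):
        for i in range(m - 1, j, -1):       # zero r[i][j], bottom row first
            x, y = r[i - 1][j], r[i][j]
            if y == 0.0:
                continue                     # already zero, no rotation needed
            h = math.hypot(x, y)
            c, s = x / h, y / h
            # Apply G = [[c, s], [-s, c]] to rows i-1 and i of R.
            for k in range(n):
                ri1, ri = r[i - 1][k], r[i][k]
                r[i - 1][k] = c * ri1 + s * ri
                r[i][k] = -s * ri1 + c * ri
            # Q <- Q G^T, so that A = Q R holds after every rotation.
            for k in range(m):
                qi1, qi = q[k][i - 1], q[k][i]
                q[k][i - 1] = c * qi1 + s * qi
                q[k][i] = -s * qi1 + c * qi
    return q, r
```

For example, `givens_qr([[3.0, 1.0], [4.0, 2.0]])` needs a single rotation with c = 0.6 and s = 0.8 and gives R ≈ [[5.0, 2.2], [0.0, 0.4]]. Each rotation touches only two rows, which is one reason the Givens variant is popular for hardware implementations of QR decomposition.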

Supplementary information

Supplementary Information

This file includes mathematical proofs, a detailed introduction to each part of our hierarchy, detailed experiment descriptions and analysis, and an exhaustive discussion of the work. It also includes Supplementary Figures 1–11, Supplementary Tables 1–3 and additional references.


About this article

Cite this article

Zhang, Y., Qu, P., Ji, Y. et al. A system hierarchy for brain-inspired computing. Nature 586, 378–384 (2020). https://doi.org/10.1038/s41586-020-2782-y

