Early-career researchers and those from minority communities report a lack of fairness in grant-allocation processes. Credit: Getty

Earlier this month, the British Academy, the United Kingdom’s national academy for humanities and social sciences, introduced an innovative process for awarding small research grants. The academy will use the equivalent of a lottery to decide between funding applications that its grant-review panels consider to be equal on other criteria, such as the quality of research methodology and study design.

Using randomization to decide between grant applications is relatively new. The British Academy is one of a small group of funders trialling it, a movement led by the Volkswagen Foundation in Germany, the Austrian Science Fund and the Health Research Council of New Zealand. The Swiss National Science Foundation (SNSF) has arguably gone the furthest: in late 2021, it decided to use randomization in all tiebreaker cases across its entire grant portfolio of around 880 million Swiss francs (US$910 million).

Swiss funder draws lots to make grant decisions

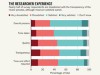

Other funders should now consider following in these footsteps. It is becoming clear that randomization is a fairer way to allocate grants when applications are too close to call, as a study from the Research on Research Institute in London shows (see go.nature.com/3s54tgw). Adopting it would go some way towards assuaging concerns about the lack of fairness when grants are allocated using peer review, concerns voiced especially by early-career researchers and by those from historically marginalized communities.

The British Academy/Leverhulme small-grants scheme distributes around £1.5 million (US$1.7 million) each year in grants of up to £10,000 each. These are valuable despite their relatively small size, especially for researchers starting out. The academy’s grants can be used only for direct research expenses, but small grants are also typically used to fund conference travel or to purchase computer equipment or software. Funders also use them to spot promising research talent for future (or larger) schemes. For these reasons and more, small grants are competitive — the British Academy says it is able to fund only 20–30% of applications in each funding round.

The academy’s problem is that its grant reviewers say that twice as many applications as it can fund pass the quality threshold, but the academy lacks the money to say yes to them all. So it is forced to choose whom to fund and whom to reject, a process prone to human biases. Deciding whom to fund by entering tiebreaker applicants into a lottery is one way to reduce unfairness. The fix isn’t perfect: studies show that biases still exist during grant review1,2. But biases, such as favouring more senior researchers, people with recognizable names or people at better-known institutions, are more likely to creep in and influence the final decision when cases are too close to call.

It is good to see research-informed innovation in grant-giving. Even a decade ago, lotteries would have been unlikely to enter the conversation. That they have now is in large part down to research, and in particular to findings from studies of research funding. Funders must monitor the impact of their changes, assessing in particular whether lotteries have increased the diversity of applicants or changed reviewer workload. At the same time, researchers (and funders) need to test other models for grant allocation. One such model is what researchers call ‘egalitarian’ funding, in which grants are distributed more equally and less competitively3.

Innovating, testing and evaluating are all crucial to reducing bias in grant-giving. Using lotteries to decide tiebreaker cases is a promising start.