Timothy Brown was the first person with HIV to be successfully treated with transplants of HIV-resistant stem cells. A case study published in Nature replicated this result in another patient, showing that Brown’s cure was not an anomaly. Credit: T.J. Kirkpatrick/Getty

The Berlin Institute of Health last year launched an initiative with the words, “Publish your NULL results — Fight the negative publication bias! Publish your Replication Study — Fight the replication crises!”

The institute is offering its researchers €1,000 (US$1,085) for publishing either the results of replication studies — which repeat an experiment — or a null result, in which the outcome is different from that expected. But Twitter, it seems, took more notice than the thousands of eligible scientists at the translational-research institute. The offer to pay for such studies has so far attracted only 22 applicants — all of whom received the award.

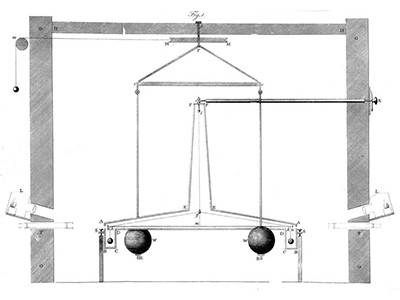

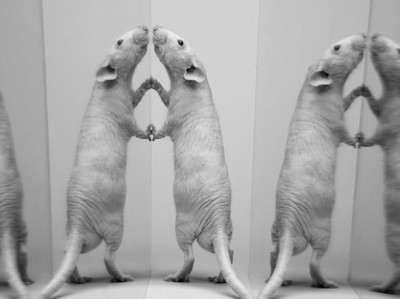

Replication studies and null results are important. How else could researchers be sure that some experimental drugs fail to relieve disease symptoms in mice? Or that current experimental tests of dark-matter theories are drawing a blank¹? But publishing this work is not always a priority for researchers, funders or editors, and that must change.


Two strategies can be used to encourage the publication of such work. First, institutions should actively encourage their researchers, through both words and actions. Aside from offering cash upfront, the Berlin Institute of Health has an app and advisers to help researchers to work out which journals, preprint servers and other outlets they should be contacting to publish replication studies and data. The app includes information on estimated publication fees and timelines, as well as formatting and peer-review requirements.

Second, more journals need to emphasize to the research community the benefits of publishing replications and null results. Researchers who report null results can help to steer grant money towards more fruitful studies. Replications are also invaluable for establishing what is necessary for reliable measurements, such as those of fundamental constants. And wider airing of null results will, eventually, prompt communities to revise their theories to better accommodate reality.

At Nature, replication studies are held to the same high standards as all our published papers. We welcome the submission of studies that provide insights into previously published results, those that can move a field forwards, and those that might provide evidence of a transformative advance. We also encourage the research community as a whole to view the publication of such work as having real value.

For example, it had been thought that the presence of bacteria in the human placenta caused complications in pregnancy such as pre-eclampsia. However, a paper published last year found no evidence for the presence of a microbiome in the placenta², suggesting that researchers might need to look elsewhere to understand such conditions.


Null results are also an essential counterweight to the scientific focus on positive findings, which can cause conclusions to be accepted too readily. This is what happened when repeated studies suggested that a variant called 5-HTTLPR, which affects the expression of the serotonin transporter gene, was a major contributor to depression. For many, it took a comprehensive null study to overturn this entrenched assumption³.

By contrast, confirmatory replications give researchers confidence that work is reliable and worth building on. This was the case when Nature published a case study describing someone with HIV who went into remission after receiving transplants of HIV-resistant stem cells. That person, who did not wish to be identified and has come to be known as the ‘London patient’, was not the first to be freed of the virus in this way. That was Timothy Brown, who was treated in Berlin, but the work with the London patient showed that Brown’s cure was not an anomaly⁴.

Not all null results and replications are equally important or informative, but, as a whole, they are undervalued. If researchers assume that replications or null results will be dismissed, then it is our role as journals to show that this is not the case. At the same time, more institutions and funders must step up and support replications — for example, by explicitly making them part of evaluation criteria. We can all do more. Change cannot come soon enough.
