Abstract

We construct temporal networks from time series by unfolding the temporal information into an additional topological dimension of the networks. This allows us to introduce memory entropy analysis to unravel the memory effect within the considered signal. We find distinct patterns in the entropy growth rate of the aggregate network at different memory scales for time series with different dynamics, ranging from white noise, 1/f noise and autoregressive processes to periodic and chaotic dynamics. Interestingly, an exponential scaling emerges in the memory entropy analysis of chaotic time series. We demonstrate that the memory exponent can successfully characterize bifurcation phenomena and differentiate the human cardiac system in healthy and pathological states. Moreover, we show that betweenness preference analysis of these temporal networks can further characterize dynamical systems and separate distinct electrocardiogram recordings. Our work explores the memory effect and betweenness preference in temporal networks constructed from time series data, providing a new perspective for understanding the underlying dynamical systems.


Introduction

Characterizing and unveiling evolutionary mechanisms from experimental time series is a fundamental problem that has attracted continuous interest over several decades. Going beyond conventional nonlinear techniques such as the Lyapunov exponent1, symbolic dynamics approaches2,3, and surrogate methods4,5, much attention has focused on understanding the dynamics of time series from the complex network perspective6,7,8,9,10,11,12,13,14. Depending on the definition of nodes and links, existing transformation methods can be broadly classified into four categories: proximity networks6,7,8,9, visibility graphs10, recurrence networks11,12,13, and transition networks14. Many studies have shown that complex network measures provide an effective tool for characterizing dynamics6, identifying invariant substructures7,8,9,10, and describing the organization of attractor structure11,12,13,14. In this sense, network science offers an alternative perspective on the dynamical properties of experimental time series.

Generally, previous studies have mainly focused on static network representations of time series. Even within static representations, a growing body of work characterizes and explores the time-varying nature of dynamical systems15,16,17,18. Nonetheless, temporal networks describe adaptive systems more naturally than static ones19,20,21,22. Indeed, it has been shown that the temporal network perspective, via features such as accessibility behavior22, the betweenness preference phenomenon23 and causality-driven characteristics24, helps us better understand the dynamics of real systems, which can deviate significantly from what static network models predict. In particular, causality-driven characteristics provide an important advance and allow us to uncover the effect of low-order memory on diffusion in temporal networks25. More significantly, the memory effect plays a key role in accurately understanding real systems, ranging from traffic prediction25 and epidemic spreading26 to information search27.

In this paper, we propose a methodology for transforming time series into temporal networks by encoding temporal information into an additional topological dimension of the graph, which describes the “lifetime” of edges. We then introduce the memory entropy technique to reveal the memory effect within different types of time series: white noise, 1/f noise, autoregressive (AR) processes, and periodic to chaotic dynamics. We find that time series with different underlying dynamics exhibit distinct memory effects, which in turn can be used to characterize and classify the underlying dynamics. Interestingly, an exponential scaling behavior exists for chaotic signals, and the memory exponent is consistent with the largest Lyapunov exponent. We show that the memory exponent is capable of detecting and characterizing bifurcation phenomena. Application to human electrocardiogram (ECG) data recorded during sinus rhythm (SR), ventricular fibrillation (VF), and ventricular tachycardia (VT) shows that the memory exponent can accurately characterize and classify the healthy and pathological states of the heart. Moreover, we find that betweenness preference analysis can further reveal essential differences among distinct chaotic systems and differentiate the human cardiac system under distinct states (i.e., SR, VF, and VT).

Results

From time series to temporal networks

We start from the construction of the temporal network from a dynamical system. Let $\{\vec{x}_t\}_{t=1}^{n}$ be a trajectory flow Γ of a dynamical system in m-dimensional phase space. We first partition the whole space into N non-overlapping cells of equal size. For instance, the phase space shown in Fig. 1(a) is partitioned into 8 cells. Treating each cell as a node, we obtain a temporal network representation GT of a given time series as follows: a link between two nodes is “active” only during a particular “lifetime”. Specifically, a time-stamped edge (υ, ω; t) between nodes υ and ω exists whenever the trajectory flow Γ performs a transition from cell υ to cell ω at time stamp t. As illustrated in Fig. 1(b), the time-stamped edge (c, e; 1) means that Γ hops from cell c to cell e at time stamp t = 1. Similarly, the transition from cell e to cell d at time stamp t = 2 is represented by the time-stamped edge (e, d; 2). This definition of links differs from that of previous static network representations, in which edges exist during the whole time window6,7,8,9,10,11,12,13,14. That is, in a static representation a time-stamped edge (υ, ω; t) exists for all time stamps t, whereas in a temporal network representation it occurs only at some time stamps t. Based on the time-stamped edges (υ, ω; t) ∈ E, we construct a temporal network from a time series by unfolding the temporal information t into an additional topological dimension. This construction ensures a one-to-one mapping between a (coarse-grained) time series and a temporal network; hence, a temporal network typically represents a unique time series. Note that the first step in constructing temporal networks from time series is to partition the phase space into cells. Ideally, each cell would be infinitesimal; however, such a partition is usually impractical because observed time series have finite length. Instead, our objective is to ensure that distinct states of an attractor are distinguishable while similar states are grouped into the same cell. The ordinal partition method28 is one example of this type of partition.
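The construction of time-stamped edges can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' code: it assumes a regular grid with `bins` equal-size cells per axis spanning the bounding box of the trajectory (so N = bins^m), keeps a step that stays inside one cell as a self-loop, and uses the hypothetical helper names `cell_index`, `temporal_network`, and `henon`.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]
TimedEdge = Tuple[int, int, int]  # (source cell v, target cell w, time stamp t)


def cell_index(p: Point, lo: Sequence[float], hi: Sequence[float], bins: int) -> int:
    """Index of the grid cell containing p, using `bins` equal-size cells per axis."""
    idx = 0
    for x, a, b in zip(p, lo, hi):
        width = (b - a) or 1.0  # guard against a constant coordinate
        k = int((x - a) / width * bins)
        k = min(max(k, 0), bins - 1)  # the upper boundary belongs to the last cell
        idx = idx * bins + k
    return idx


def temporal_network(traj: Sequence[Point], bins: int) -> List[TimedEdge]:
    """Time-stamped edges (v, w, t): the trajectory moves from cell v to cell w at step t.

    Time stamps start at 1, as in the (c, e; 1) example. Staying in the same
    cell yields a self-loop (an assumption; the text does not specify this case).
    """
    m = len(traj[0])
    lo = [min(p[i] for p in traj) for i in range(m)]
    hi = [max(p[i] for p in traj) for i in range(m)]
    cells = [cell_index(p, lo, hi, bins) for p in traj]
    return [(cells[t], cells[t + 1], t + 1) for t in range(len(cells) - 1)]


def henon(n: int, a: float = 1.4, b: float = 0.3, skip: int = 1000) -> List[Point]:
    """Hénon map trajectory in its standard chaotic regime, transient discarded."""
    x, y = 0.0, 0.0
    out: List[Point] = []
    for k in range(skip + n):
        x, y = 1.0 - a * x * x + y, b * x
        if k >= skip:
            out.append((x, y))
    return out


edges = temporal_network(henon(2000), bins=10)  # N = 10 * 10 = 100 cells
print(edges[:3])
```

A bounding-box grid is the simplest partition to write down; an ordinal partition would only change how `cell_index` assigns labels, while the edge list is built the same way.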


(a) The phase space is partitioned into 8 hypercubes. The trajectory flow thereby creates time-stamped edges in (b) the associated temporal network GT. (c) The null aggregate network G(0) and (d) the one-step memory network G(1) constructed from GT. The line width represents the number of transitions between two nodes.

The memory entropy analysis of different dynamics

After constructing the temporal network GT from a given time series, we build a null aggregate network G(0), in which a directed edge (υ, ω) from node υ to ω exists whenever a time-stamped edge (υ, ω; t) appears in GT for some t, and the weight w(υ, ω) counts how many times the directed edge (υ, ω) occurs in GT (see Fig. 1(c)). From G(0), we construct the consecutive memory network G(τ) = (V(τ), E(τ)), determined by the memory factor τ. Specifically, each node $v \in V(\tau)$ represents a possible τ-step path in G(0), such that $v = \{e_1, e_2, \ldots, e_\tau\}$, where $\{e_1, \ldots, e_\tau\}$ is a set of consecutive edges in G(0). By consecutive edges we mean edges that form a path respecting the edge directions of G(0), i.e., the target of each edge is the source of the next. Edges E(τ) in G(τ) are defined by all possible paths of length 2τ + 1 in G(0). For example, Fig. 1(d) shows the one-step memory network G(1) constructed from the temporal network GT. We then apply the memory entropy (ME) approach (see Methods) to the consecutive memory network G(τ) to reveal how memory shapes the dynamics. Here, we investigate networks constructed from different types of time series, including white noise, 1/f noise, an AR model, periodic signals, and chaotic signals. For each prototypical system, the length of the time series is n = 2 × 10^4 (after discarding the first 4000 points). We find that temporal networks generated from different types of time series show distinct memory behaviors (see Fig. 2(a) and (b)). Specifically, for white noise and 1/f noise, the entropy growth rate drops sharply to zero within two and three memory scales, respectively, whereas for the AR(3) model it decreases monotonically over a finite range of memory scales (τ ≤ 6) and then stabilizes at zero. For a periodic signal (e.g., the Hénon map in a periodic regime), by contrast, the entropy growth rate remains constant for all memory scales. The entropy growth rates of chaotic signals, such as the logistic map, the Ikeda map, the Rössler system, and the Hénon map, decrease monotonically over the whole memory scale window. We call this behavior the memory effect of the time series. This observation suggests that the memory effect plays an important role in shaping dynamical systems, comparable to its importance in the real systems reported in ref. 25.
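The null aggregate network and the memory networks can be sketched from the sequence of visited cells. The precise ME estimator is defined in the Methods; as a stand-in, this sketch assumes that the entropy growth rate is the entropy rate (in bits) of a random walk on the weighted G(τ), with each node weighted by its empirical share of outgoing transitions. Following the text, a node of G(τ) is a τ-step path (τ + 1 consecutive cells) and an edge is an observed path of length 2τ + 1 in G(0), which reduces to G(0) itself for τ = 0. The names `memory_network` and `entropy_rate` are hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, Hashable, Sequence, Tuple

Path = Tuple[Hashable, ...]
Edge = Tuple[Path, Path]


def memory_network(cells: Sequence[Hashable], tau: int) -> Dict[Edge, int]:
    """Weighted edges of the tau-order memory network G(tau) (assumed construction).

    A node is a tau-step path, i.e. tau + 1 consecutive cells; an edge joins two
    consecutive, non-overlapping tau-step paths, so it spans a path of length
    2 * tau + 1 in G(0). Weights count how often each such path is observed.
    For tau = 0 this is the null aggregate network G(0) with weights w(v, w).
    """
    span = 2 * tau + 2  # number of cells on a path of length 2 * tau + 1
    weights: Counter = Counter()
    for t in range(len(cells) - span + 1):
        window = tuple(cells[t:t + span])
        weights[(window[:tau + 1], window[tau + 1:])] += 1
    return dict(weights)


def entropy_rate(weights: Dict[Edge, int]) -> float:
    """Entropy rate (bits) of a random walk on a weighted directed network.

    Each node is weighted by its empirical share of outgoing transitions
    (an assumption standing in for the Methods definition).
    """
    out: Dict[Path, int] = defaultdict(int)
    for (u, _), w in weights.items():
        out[u] += w
    total = sum(out.values())
    h = 0.0
    for (u, _), w in weights.items():
        p = w / out[u]  # transition probability along this edge
        h -= (out[u] / total) * p * math.log2(p)
    return h


cells = [0, 1, 2] * 100  # a period-3 cell sequence
print([entropy_rate(memory_network(cells, tau)) for tau in range(4)])  # -> [0.0, 0.0, 0.0, 0.0]
```

Every τ-step path of a periodic sequence has a single successor, so this estimate is zero at every memory scale; a sequence with branching transitions gives a positive value that can only shrink as longer paths disambiguate the next step.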

The entropy growth rate H of a τ-order aggregate network as a function of the order τ for (a) white noise, 1/f noise, the AR(3) model $s_n = 0.8s_{n-1} - 0.5s_{n-2} + 0.7s_{n-3} + \varepsilon_n$ ($\varepsilon_n$ is generated from white noise), and the Hénon map in a periodic regime; and (b) chaotic time series, i.e., the Hénon map, the Ikeda map $x_{n+1} = 1 + 0.9(x_n\cos t_n - y_n\sin t_n)$, $y_{n+1} = 0.9(x_n\sin t_n - y_n\cos t_n)$, where the phase $t_n$ is a function of $x_n$ and $y_n$, the logistic map $x_{n+1} = 4x_n(1 - x_n)$, and the Rössler system $\dot{x} = -(y + z)$, $\dot{y} = x + 0.2y$, $\dot{z} = 0.2 + z(x - 5.7)$. Here the partition is N = 2500, 1600, 900, and 100 for the Hénon map, the Ikeda map, the logistic map, and the Rössler system, respectively. The entropy growth rate H as a function of τ for the Hénon map in the chaotic regime (c) for different time delays l and (d) after quadratic and cubic nonlinear transformations of the phase space. The insets show the corresponding phase portraits.

These findings can be explained by the evolution processes of these distinct systems. For white noise, which is generated by a random process, a weak one-step memory exists because of the combined effect of the finite data length and the coarse graining in the temporal network transformation. Unlike white noise, 1/f noise contains temporal correlations, which result in two-step memory behavior. For the AR(3) model, the state at time t depends on the previous three states; it therefore shows memory over more time steps than white noise and 1/f noise. In contrast, a periodic trajectory repeats itself in phase space, so it carries no memory effect and its entropy growth rate stays constant. For a chaotic attractor, however, the skeleton is made up of an infinite number of unstable periodic orbits (UPOs)29. The trajectory of a chaotic system typically hops among these UPOs, resulting in a markedly different dependence of the entropy growth rate on the memory scale.

Remarkably, for a chaotic system an approximately exponential relation emerges between H(τ) and τ, i.e., H(τ) ∝ exp(−ρτ), as illustrated in Fig. 2(b). We thus define ρ as the memory exponent of a dynamical system. This scaling behavior can be anticipated from the encounter probability Φ(k) of an orbit of period k in a chaotic system. It is known that the trajectory usually approaches a UPO and stays near it for a certain time interval until it is captured by the stable manifold of another UPO, and so on. For any periodic orbit of period k, the main dependence of Φ(k) on k can be approximated by (refs 30 and 31)

$$\Phi(k) \propto \exp(-Qk), \qquad (1)$$

where Q = λ + m1 − m0, and λ, m1 and m0 are the largest Lyapunov exponent, the metric entropy and the topological entropy of the chaotic attractor, respectively. At each memory scale τ, the memory effect is determined by the behavior of τ-step consecutive paths in the null aggregate network G(0), which is largely dominated by the encounter probability Φ(k) in phase space. The memory effect of a chaotic time series therefore follows the same exponential behavior as Eq. (1). Moreover, we find memory exponents of 0.09, 0.658, 0.509, and 0.402 for the Rössler system, the logistic map, the Ikeda map, and the Hénon map, respectively, in good agreement with the largest Lyapunov exponents λ reported in refs 1 and 32. These empirical findings suggest a strong connection between the memory exponent ρ and the largest Lyapunov exponent λ for chaotic time series. Indeed, both quantities characterize the trajectory evolution of dynamical systems. In a chaotic system with a larger λ, infinitesimally close trajectories separate more rapidly, so the correlation between nearby trajectories is lost more quickly. The memory effect analysis captures this: the entropy growth rate H(τ) decreases more rapidly, which in turn yields a larger memory exponent ρ, and vice versa.
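Given H(τ) at several memory scales, ρ in H(τ) ∝ exp(−ρτ) can be estimated as minus the least-squares slope of ln H against τ. The sketch below is one plausible fitting procedure, not necessarily the one behind the reported exponents; `memory_exponent` is a hypothetical name. If log10 H is fitted instead, the slope must be multiplied by −ln 10 to obtain ρ.

```python
import math
from typing import Sequence


def memory_exponent(taus: Sequence[int], H: Sequence[float]) -> float:
    """Estimate rho in H(tau) ~ exp(-rho * tau) by least squares on ln H.

    Points with H <= 0 are skipped because their logarithm is undefined.
    """
    pts = [(t, math.log(h)) for t, h in zip(taus, H) if h > 0]
    if len(pts) < 2:
        raise ValueError("need at least two positive H values to fit a slope")
    n = len(pts)
    mean_t = sum(t for t, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in pts)
    sxx = sum((t - mean_t) ** 2 for t, _ in pts)
    return -sxy / sxx  # minus the slope of ln H versus tau


taus = [0, 1, 2, 3, 4, 5]
H = [2.0 * math.exp(-0.4 * t) for t in taus]  # synthetic exponential decay
print(round(memory_exponent(taus, H), 6))  # -> 0.4
```

A constant, positive H gives ρ = 0, matching the behavior expected for periodic signals; in practice one would restrict the fit to the τ range where log H is visibly linear.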

Since the random processes (white noise and 1/f noise) have no attractor structure, we derive their temporal networks directly from the scalar time series. For the other dynamical systems, we construct the temporal networks from the original phase space points. The results are essentially unchanged when the phase space is instead reconstructed from the x-coordinate, as shown in Fig. 2(c) for the Hénon map in the chaotic regime: the profiles of H versus τ for different time delays l are similar to those of the original phase points in Fig. 2(b). Moreover, the memory exponent remains essentially invariant under nonlinear transformations of phase space. Fig. 2(d) shows profiles of H versus τ computed from the same chaotic Hénon time series as in Fig. 2(b), but passed through quadratic and cubic nonlinear transformations of the phase space; the results are almost identical to those from the original data in Fig. 2(b).
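For scalar observations, the phase space reconstruction with embedding dimension m and time delay l is the standard time-delay embedding. A minimal sketch follows, using the hypothetical name `delay_embed`; its output can be passed to any partition of phase space, such as a regular grid of cells.

```python
from typing import List, Sequence, Tuple


def delay_embed(x: Sequence[float], m: int, l: int) -> List[Tuple[float, ...]]:
    """Delay vectors (x[i], x[i + l], ..., x[i + (m - 1) * l]) for every valid i."""
    if m < 1 or l < 1:
        raise ValueError("m and l must be positive")
    n = len(x) - (m - 1) * l
    if n <= 0:
        raise ValueError("series too short for this (m, l)")
    return [tuple(x[i + k * l] for k in range(m)) for i in range(n)]


# Reconstruct a 2-D phase space from the x-coordinate of the Hénon map.
xs = []
x, y = 0.0, 0.0
for k in range(1100):
    x, y = 1.0 - 1.4 * x * x + y, 0.3 * x
    if k >= 100:  # discard the transient
        xs.append(x)
points = delay_embed(xs, m=2, l=1)
print(len(points))  # -> 999
```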

The memory entropy analysis of bifurcation phenomena

Furthermore, we show that the memory exponent ρ can be used to characterize bifurcation phenomena in dynamical systems. We select the logistic map $x_{n+1} = \mu x_n(1 - x_n)$ as a benchmark and vary μ ∈ [3.5, 4] with step size Δμ = 0.001. For each μ, we record 10^4 time points after discarding the leading 4000 observations (to eliminate transients). Since the example is a one-dimensional map33,34, we reconstruct the phase space of each recording with embedding dimension m = 2 and time delay l = 1, and perform the ME analysis with N = 900. As shown in Fig. 3(a), the memory exponent ρ is sensitive to dynamical transitions in the logistic map. The pattern of ρ versus μ not only locates the periodic windows but also reflects the intensity of the chaotic behavior. In particular, in the chaotic regimes the trend closely follows that of the largest Lyapunov exponent λ calculated with the TISEAN software package35, with a correlation coefficient of r = 0.93. Meanwhile, ρ = 0 in the periodic regimes, as expected. These results indicate that the memory exponent ρ is an effective alternative metric for characterizing different dynamical regimes. Next, we add observational Gaussian noise of moderate level (signal-to-noise ratio, SNR = 28.5 dB) to the recording for each μ. The periodic windows are then obscured in the bifurcation diagram (Fig. 3(b)). Nonetheless, the memory exponent ρ still detects the periodic windows, whereas the largest Lyapunov exponent λ fails in the same scenario, giving λ > 0 for most of these windows (Fig. 3(b)). The memory exponent ρ is therefore more robust to observational noise than λ in this example.
Note that we use the same embedding parameters (m = 2 and l = 1) to calculate both ρ and λ.
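The data for the noisy bifurcation experiment can be generated roughly as follows. We assume the SNR is the ratio of signal variance to noise variance in decibels, SNR = 10 log10(σ_s²/σ_n²), a common convention that the text does not state explicitly; the function names and the initial condition x0 = 0.4 are hypothetical. Each noisy series would then be embedded with m = 2, l = 1 and passed to the ME analysis.

```python
import math
import random
from typing import List


def logistic_series(mu: float, n: int, skip: int = 4000, x0: float = 0.4) -> List[float]:
    """Iterate x_{k+1} = mu * x_k * (1 - x_k), discarding `skip` transient points."""
    x = x0
    out = []
    for k in range(skip + n):
        x = mu * x * (1.0 - x)
        if k >= skip:
            out.append(x)
    return out


def add_noise(x: List[float], snr_db: float, rng: random.Random) -> List[float]:
    """Add zero-mean Gaussian noise with variance var(x) / 10**(snr_db / 10)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    sigma = math.sqrt(var / 10 ** (snr_db / 10))
    return [v + rng.gauss(0.0, sigma) for v in x]


mus = [round(3.5 + 0.001 * i, 3) for i in range(501)]  # mu from 3.5 to 4.0, step 0.001
rng = random.Random(42)
clean = logistic_series(3.9, 10_000)  # one chaotic value of mu as an example
noisy = add_noise(clean, 28.5, rng)
```

Rounding the grid of μ values avoids accumulated floating-point error pushing the last value slightly above 4, where the logistic map would leave [0, 1].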

(a) The bifurcation diagram, the largest Lyapunov exponent λ and the memory exponent ρ for the logistic map in the range 3.5 ≤ μ ≤ 4. (b) The influence of additive Gaussian noise (SNR = 28.5 dB) on the bifurcation diagram, the largest Lyapunov exponent λ and the memory exponent ρ. For each μ, the same embedding parameters (m = 2 and l = 1) are used to obtain ρ and λ in both situations (with and without Gaussian noise).

The betweenness preference phenomena of chaotic time series

The previous ME analysis provides only a part of the temporal network perspective of an observed time series. We further employ the betweenness preference to extract more interesting features. The betweenness preference characterizes the preferential connection of nodes in a temporal sense23. Specifically, first derive a betweenness preference matrix Bυ(t) from a constructed temporal network GT, whose entries  = 1(0) if the path (ω1, υ; t) is (not) followed by the path (υ, ω2; t + 1). Second, summarize the

= 1(0) if the path (ω1, υ; t) is (not) followed by the path (υ, ω2; t + 1). Second, summarize the  over all t to obtain a time-aggregated betweenness preference matrix Bυ such that

over all t to obtain a time-aggregated betweenness preference matrix Bυ such that  . Finally, calculate the betweenness preference measure Iυ for the node υ as follows:

. Finally, calculate the betweenness preference measure Iυ for the node υ as follows:

where  ,

,  , and

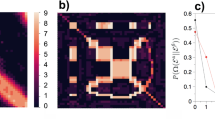

, and  . In this context, a trivial result is that Iυ = 0 for the temporal network constructed from periodic time series. In contrast, for chaotic time series, the resultant temporal network presents a pronounced amount of betweenness preference distributions shown in Fig. 4. These interesting distribution patterns are related to the evolution behaviors of distinct dynamical systems. In fact, it is well known that the skeleton of a chaotic attractor is made up of an infinite number of unstable periodic orbits29. The trajectory will typically switch or jump among these UPOs with different preferences in a temporal sense, something which is captured well by the betweenness preference analysis. Specifically, for the logistic map and the Hénon Map, the distribution of betweenness preference is heterogeneous with a relatively high probability of I = 0. This characteristics implies that the movement of their trajectories has a weak correlation in phase space. In contrast, the betweenness preference distribution for the Ikeda map is rather homogeneous and broad, meaning that the motion of the trajectory among different UPOs is highly correlated. However, none of them matches that of the Rössler system, which lies somewhere between heterogeneous and homogeneous as illustrated in Fig. 4(d). The result hints at the existence of a biased preference for its trajectory evolution in phase space. Moreover, for better illustration, we further reflect the spatial distribution of the betweenness preference shown in Fig. 5. We find high values of I near the tips of the attractor in phase space, and low values almost filled the whole phase space regions. Interestingly, there are pronounced regions with rather few isolated points of high I in phase space. Clearly, they play an important role in linking the evolution of trajectory among different regions in phase space in a temporal sense. 
Although we do not provide a complete explanation of these interesting features, the betweenness preference analysis allows to uncover the preference behavior of trajectory evolution of dynamical systems.

. In this context, a trivial result is that Iυ = 0 for the temporal network constructed from periodic time series. In contrast, for chaotic time series, the resultant temporal network presents a pronounced amount of betweenness preference distributions shown in Fig. 4. These interesting distribution patterns are related to the evolution behaviors of distinct dynamical systems. In fact, it is well known that the skeleton of a chaotic attractor is made up of an infinite number of unstable periodic orbits29. The trajectory will typically switch or jump among these UPOs with different preferences in a temporal sense, something which is captured well by the betweenness preference analysis. Specifically, for the logistic map and the Hénon Map, the distribution of betweenness preference is heterogeneous with a relatively high probability of I = 0. This characteristics implies that the movement of their trajectories has a weak correlation in phase space. In contrast, the betweenness preference distribution for the Ikeda map is rather homogeneous and broad, meaning that the motion of the trajectory among different UPOs is highly correlated. However, none of them matches that of the Rössler system, which lies somewhere between heterogeneous and homogeneous as illustrated in Fig. 4(d). The result hints at the existence of a biased preference for its trajectory evolution in phase space. Moreover, for better illustration, we further reflect the spatial distribution of the betweenness preference shown in Fig. 5. We find high values of I near the tips of the attractor in phase space, and low values almost filled the whole phase space regions. Interestingly, there are pronounced regions with rather few isolated points of high I in phase space. Clearly, they play an important role in linking the evolution of trajectory among different regions in phase space in a temporal sense. 
Although we do not provide a complete explanation of these interesting features, the betweenness preference analysis allows to uncover the preference behavior of trajectory evolution of dynamical systems.
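Betweenness preference can be computed directly from the sequence of visited cells, since every consecutive triple (ω1, υ, ω2) corresponds to the time-stamped edges (ω1, υ; t) and (υ, ω2; t + 1). The sketch assumes the mutual-information form of Iυ from ref. 23 with base-2 logarithms and uses the hypothetical name `betweenness_preference`; it is an illustration, not the authors' implementation.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, Hashable, Sequence


def betweenness_preference(cells: Sequence[Hashable]) -> Dict[Hashable, float]:
    """I_v (bits) for every node v that has both a predecessor and a successor.

    B_v[(w1, w2)] counts the time stamps at which edge (w1, v; t) is followed by
    (v, w2; t + 1); I_v is the mutual information between w1 and w2 under the
    normalized matrix P_v = B_v / sum(B_v).
    """
    B: Dict[Hashable, Counter] = defaultdict(Counter)
    for t in range(1, len(cells) - 1):
        B[cells[t]][(cells[t - 1], cells[t + 1])] += 1
    result = {}
    for v, counts in B.items():
        total = sum(counts.values())
        p_src: Counter = Counter()  # marginal over predecessors w1
        p_dst: Counter = Counter()  # marginal over successors w2
        for (w1, w2), c in counts.items():
            p_src[w1] += c / total
            p_dst[w2] += c / total
        result[v] = sum(
            (c / total) * math.log2((c / total) / (p_src[w1] * p_dst[w2]))
            for (w1, w2), c in counts.items()
        )
    return result


print(betweenness_preference([0, 1, 2] * 20))  # periodic: -> {1: 0.0, 2: 0.0, 0: 0.0}
# Node 0 is entered from 1 or 2 and always exits back the same way: I_0 = 1 bit.
print(betweenness_preference([1, 0, 1, 3, 2, 0, 2, 3] * 10)[0])
```

The second example shows how a spike at I = 1 can arise: a node traversed along two equally frequent routes whose exit is fully determined by the entry.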

The temporal network perspective of human ECG data

Finally, we apply the temporal network analysis to human ECG to reveal the memory effect and betweenness preference within the human cardiac system. The human ECG recordings were collected from patients in a Coronary Care Unit and an adjacent Cardiology Ward and cover three different states: SR, VT, and VF, as described in ref. 36. Each recording is 10,000 data points (20 s) long. We applied the same calculations as for the previous time series to these physiological signals. The entropy growth rate H decreases exponentially with the memory scale τ (see Fig. 6(a) for three representative subjects), consistent with chaotic systems. These findings suggest that the human cardiac system has chaotic characteristics, in line with several previous results5,6. Meanwhile, the slopes of log10H versus τ differ among the types of ECG recordings in Fig. 6(a). This is further supported by the memory exponent ρ shown in Fig. 6(b), where each type of ECG recording lies in a different range of ρ. Hence the ME analysis reveals how the memory effect differs across the types of human ECG recordings. For the SR group, the cardiac system behaves regularly and repetitively; the memory effect therefore has less influence on the function of the cardiac system, resulting in a smaller memory exponent ρ. In contrast, for the VF group, the cardiac system is under extreme physiological stress and shows more complex, irregular behavior37, and the memory effect seemingly affects it more strongly. Since VT usually lies between regular and extremely disordered cardiac rhythm, its memory exponent ρ falls in between, as expected.
Hence, the ME approach provides a useful tool for characterizing the human cardiac system from a perspective beyond conventional nonlinear dynamics.

(a) The entropy growth rate H of the τ-order aggregate network as a function of the memory scale τ for temporal networks generated from representative SR, VT, and VF recordings. (b) Values of the memory exponent ρ for the SR, VT, and VF groups. Here we choose the embedding dimension m = 2, the time delay l = 15, and the partition N = 300 for these physiological time series. (c) The entropy growth rate H as a function of τ for the representative SR recording with different embedding dimensions m. (d) Betweenness preference distributions for the representative SR, VT, and VF recordings.

Since the recordings are non-stationary and noisy, we choose the embedding dimension m = 2 based on an information-theoretic criterion38. Although this embedding dimension is substantially smaller than one would reasonably expect, it suffices to classify different states from their trajectories39. Loosely speaking, embedding is akin to “unfolding” the dynamics, albeit along different axes. The results are essentially unchanged when the embedding dimension is increased slightly. Taking the representative SR recording as an example, the profiles of H versus τ for different embedding dimensions m show a similar trend (Fig. 6(c)). To assess the statistical significance of the ME analysis on real-world data, future work could adopt the surrogate data method as a null hypothesis model4: first, generate an ensemble of surrogate data with the desired property from the observed time series; then compute the memory effect for both the original time series and the surrogates; finally, assess the statistical significance of the ME analysis with a surrogate test.
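The surrogate test outlined above can be sketched as follows. As an illustrative assumption we use the simplest surrogate, a random shuffle, which preserves the amplitude distribution but destroys all temporal order (the null hypothesis of independent noise); other surrogate types4 would replace `shuffle_surrogates`. The statistic is passed in as a function, so an ME quantity such as H at a chosen τ, or ρ, could be plugged in; here a lag-1 autocorrelation stands in for it. All names are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence

Statistic = Callable[[Sequence[float]], float]


def shuffle_surrogates(x: Sequence[float], n_surr: int, rng: random.Random) -> List[List[float]]:
    """Random-shuffle surrogates: same values as x, temporal order destroyed."""
    surrogates = []
    for _ in range(n_surr):
        s = list(x)
        rng.shuffle(s)
        surrogates.append(s)
    return surrogates


def surrogate_p_value(x: Sequence[float], statistic: Statistic,
                      n_surr: int = 99, seed: int = 0) -> float:
    """One-sided rank p-value: is statistic(x) larger than under the null?"""
    rng = random.Random(seed)
    s0 = statistic(x)
    exceed = sum(statistic(s) >= s0 for s in shuffle_surrogates(x, n_surr, rng))
    return (1 + exceed) / (1 + n_surr)


def lag1_autocorr(x: Sequence[float]) -> float:
    """Lag-1 autocorrelation, a stand-in for an ME-based statistic."""
    n = len(x)
    mean = sum(x) / n
    num = sum((x[i] - mean) * (x[i + 1] - mean) for i in range(n - 1))
    den = sum((v - mean) ** 2 for v in x)
    return num / den


signal = [math.sin(0.1 * i) for i in range(500)]
print(surrogate_p_value(signal, lag1_autocorr))  # -> 0.01
```

With 99 surrogates the smallest attainable p-value is 0.01, reached when no surrogate matches the original statistic; the one-sided direction would need to be chosen to match whichever ME quantity is tested.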

The temporal network view of the ECG recordings enhances our understanding of the human cardiac system. Beyond the memory effect, betweenness preference analysis reveals significant differences among the SR, VT, and VF recordings. Taking the representative SR, VT, and VF recordings as examples (Fig. 6(d)), the recordings exhibit remarkably distinct distribution patterns. The distribution for the SR recording shows a relatively high probability of I = 0, whereas this probability is much smaller for the VF and VT recordings. This clear difference is related to the distinct behaviors of the human cardiac system in healthy and pathological states; in particular, the cardiac system tends to show weak correlation in the healthy state. We also notice a spike at I = 1 for the SR and VF recordings, a feature almost absent for the VT recording. Although we do not provide a complete explanation for these distinct characteristics, betweenness preference analysis does allow one to classify and characterize the human cardiac system from a new perspective.

Discussion

In summary, we develop a transformation from time series to temporal networks by unfolding temporal information into an additional topological dimension. For the transformed temporal network, we first introduce the ME analysis to characterize the memory effect within the observed signal. We find that the memory effect can accurately differentiate various types of time series, including white noise, 1/f noise, AR processes, and periodic and chaotic time series. Interestingly, an exponential scaling behavior emerges for a chaotic signal, and the memory exponent is in good agreement with the largest Lyapunov exponent. Moreover, this memory exponent is capable of detecting bifurcation phenomena and characterizing real systems, for example, human ECG recordings from the SR, VT and VF states. We further adopt the betweenness preference analysis to characterize and classify dynamical systems and to separate the states of the human cardiac system. Our work highlights the role of the memory effect and betweenness preference in characterizing various dynamical systems. In the future, other statistics recently developed for temporal networks could be applied after our transformation to shed further light on the dynamics of time series.

We note that the ME analysis, which is based on the entropy rate of the consecutive memory network as a function of the memory scale, appears to be related to the K2 entropy40,41 and to Markov models42. However, these methods approach the problem from different angles and with different intentions. Markov models used to characterize dynamical systems usually assume that the current state provides all the information needed to predict future states, so that the history is redundant. Although such models have been applied successfully to prediction, especially of financial time series, they may fail to capture the high-order correlations underlying dynamical systems. The K2 entropy, in turn, is a classic dynamical invariant that quantifies how chaotic a signal is40,41. In this respect, the ME analysis plays a role similar to that of the K2 entropy, but the two quantities are obtained through different routes: the K2 entropy is usually estimated by varying the embedding dimension40 or from recurrence plots41, whereas the ME analysis is computed within the temporal network paradigm. The advantage of the temporal network representation is that it yields a series of high-order memory networks, whose properties can reveal high-order features of an observed signal that are not accessible with the previous techniques.

Finally, there is a long tradition of using symbolic dynamics to characterize time series, of which refs 43, 44 and 45 are now classic examples. The motivation for our technique is similar: the dynamics is partitioned and we look for transitions. Moreover, both the ME analysis and symbolic dynamics rely on dynamical entropies to quantify the “memory” stored in a time series, and their results are closely related to the Kolmogorov-Sinai entropy, which quantifies the order and randomness of an observed time series46. The two approaches are therefore similar but not identical; in fact, we believe that our temporal network method builds on and extends the traditional idea of symbolic dynamics. There is, however, a major difference between them. The methods in refs 43, 44 and 45 treat the evolution of a dynamical system as a single Markov chain, whereas the memory effect analysis is based on the entropy rates of consecutive memory networks constructed from the temporal network. Furthermore, as noted above, other properties of these memory networks can bring new insights into high-order features of dynamical systems that are absent from symbolic dynamics techniques. Nonetheless, traditional symbolic dynamics may prove helpful in developing the theoretical basis of the ME analysis in the future. Indeed, the memory effect is only one of the features made accessible by the temporal network representation. With our transformation from time series to temporal networks, other statistics and tools from the temporal network literature can be adopted to reveal further features of time series, for example, the betweenness preference analysis shown in Fig. 5.
In addition, although we have focused here on the memory effect of dynamical systems, the ME analysis presented in this paper should also prove useful for characterizing real systems that are described by temporal networks, for example, lotteries, traffic systems, and football games. This would be another interesting application of our memory effect analysis.
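To make the link with symbolic dynamics concrete, the sketch below estimates the entropy rate of a symbol sequence from differences of Shannon block entropies, h_n = H_{n+1} - H_n, which converge to the entropy rate as n grows45 (and, for a generating partition, to the Kolmogorov-Sinai entropy). The test system is an assumption chosen for illustration and is not taken from this paper: the logistic map x → 4x(1 - x) with the binary partition at x = 0.5, which is generating and for which the Kolmogorov-Sinai entropy is ln 2 nats, i.e. 1 bit per iteration. The function names are hypothetical.

```python
import math
from collections import Counter


def block_entropy(symbols, n):
    """Shannon entropy (bits) of the length-n blocks of a symbol sequence."""
    blocks = Counter(tuple(symbols[i:i + n]) for i in range(len(symbols) - n + 1))
    total = sum(blocks.values())
    return -sum((c / total) * math.log2(c / total) for c in blocks.values())


def entropy_rate_estimate(symbols, n):
    """h_n = H_{n+1} - H_n: uncertainty of the next symbol given n previous ones."""
    return block_entropy(symbols, n + 1) - block_entropy(symbols, n)


# Symbolic dynamics of the logistic map x -> 4x(1 - x), partitioned at x = 0.5
x, symbols = 0.3141, []
for _ in range(20000):
    x = 4.0 * x * (1.0 - x)
    symbols.append(0 if x < 0.5 else 1)

print(entropy_rate_estimate(symbols, 3))
```

Longer blocks require more data: the number of distinct blocks grows as 2^n for a binary partition, so n should stay well below log2 of the series length.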

Methods

The memory entropy analysis of a temporal network

After deriving the temporal network GT from an observed time series, we introduce memory entropy to reveal the role of memory in shaping its dynamics. First, we build a null aggregate network G(0) in which a directed edge (υ, ω) from node υ to ω exists whenever a time-stamped edge (υ, ω; t) emerges in GT for some t, and the weight w(υ, ω) counts how many times the directed edge (υ, ω) occurs in the temporal network GT. Second, we construct the consecutive memory network G(τ) = (V(τ), E(τ)), controlled by the memory factor τ. Specifically, each node $\vec{v}_i \in V^{(\tau)}$ represents a possible τ-step path in the network G(0) such that

$$\vec{v}_i = (\omega_{i1}, \omega_{i2}, \ldots, \omega_{i(\tau+1)}),$$

where $\{(\omega_{ik}, \omega_{i(k+1)})\}_{k=1}^{\tau}$ is a set of consecutive edges in G(0). Edges E(τ) in G(τ) are defined by all possible paths of length 2τ + 1 in G(0), and we use $w(\vec{v}_i, \vec{v}_j)$ to measure how frequently the τ-step paths $\vec{v}_i$ and $\vec{v}_j$ emerge together in GT, i.e., how often $\vec{v}_j$ immediately follows $\vec{v}_i$. More precisely, writing the (2τ + 1)-step path formed by $\vec{v}_i$ followed by $\vec{v}_j$ as $(\omega_{i1}, \ldots, \omega_{i(2\tau+2)})$, with $\omega_{i(\tau+1+k)} = \omega_{jk}$ for $k = 1, \ldots, \tau+1$, the weight $w(\vec{v}_i, \vec{v}_j)$ is given by

$$w(\vec{v}_i, \vec{v}_j) = \sum_{t} \prod_{k=1}^{2\tau+1} \delta_{(\omega_{ik},\, \omega_{i(k+1)};\, t+k-1)},$$

where $\delta_{(\omega_{ik},\, \omega_{i(k+1)};\, t)} = 1$ (0) if the time-stamped edge $(\omega_{ik}, \omega_{i(k+1)}; t)$ exists (does not exist) in the temporal network GT. Third, for the memory network G(τ), we define the transition probability $T(\vec{v}_i \to \vec{v}_j)$ from node $\vec{v}_i$ to node $\vec{v}_j$ as follows:

$$T(\vec{v}_i \to \vec{v}_j) = \frac{w(\vec{v}_i, \vec{v}_j)}{\sum_{k} w(\vec{v}_i, \vec{v}_k)}.$$
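The construction of G(τ) and its transition probabilities can be sketched in a few lines. This is an illustrative reading of the definitions above, not the authors' implementation, and it rests on two assumptions: the temporal network GT is a single trajectory whose time-stamped edges are (x_t, x_{t+1}; t) at consecutive integer times, and an edge of G(τ) joins two τ-step paths that occur back to back, so that the joint (2τ + 1)-step path is a window of 2τ + 2 consecutive states. For τ = 0 this reduces to the weighted null aggregate network G(0). The function names are hypothetical.

```python
from collections import Counter, defaultdict


def memory_network(seq, tau):
    """Edge weights w(v_i, v_j) of the consecutive memory network G(tau).

    Assumes G_T is a single trajectory: seq[t] -> seq[t+1] is the edge at time t.
    A node is a tau-step path (tau + 1 consecutive states); an edge joins two
    tau-step paths occurring back to back, i.e. a window of 2*tau + 2 states.
    """
    length = tau + 1
    weights = Counter()
    for t in range(len(seq) - 2 * length + 1):
        window = tuple(seq[t:t + 2 * length])
        weights[(window[:length], window[length:])] += 1
    return weights


def transition_probabilities(weights):
    """T(v_i -> v_j) = w(v_i, v_j) / sum_k w(v_i, v_k)."""
    out_strength = defaultdict(int)
    for (v_i, _), w in weights.items():
        out_strength[v_i] += w
    T = defaultdict(dict)
    for (v_i, v_j), w in weights.items():
        T[v_i][v_j] = w / out_strength[v_i]
    return T
```

For the sequence `[0, 1, 0, 2] * 4 + [0]`, node (0,) of G(0) moves to (1,) or (2,) with probability 1/2 each, whereas every transition of G(1) is deterministic: the extra memory removes the uncertainty about the next step.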

From the above transition probability, we calculate the entropy growth rate H(τ) as

$$H(\tau) = -\sum_{i} \pi_i \sum_{j} T(\vec{v}_i \to \vec{v}_j) \log T(\vec{v}_i \to \vec{v}_j),$$

where $\pi_i$ is the ith component of the stationary distribution. The entropy growth rate measures the uncertainty of the next step of the jumps given the current state, weighted by the stationary distribution25,47. In this sense, the less effect memory has on the trajectory flow Γ, the more the entropy growth rate will decrease in the high-order memory network G(τ). Finally, we plot the entropy growth rate H(τ) as a function of the memory scale τ. We call this the memory entropy analysis of a temporal network.
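Given a row-stochastic transition matrix over the nodes of G(τ), the entropy growth rate can be sketched as below. The stationary distribution π is taken as the left eigenvector of the transition matrix for eigenvalue 1, which assumes that the chain is irreducible; an empirical memory network may contain dangling nodes (for example, the last path of a finite trajectory) that need separate treatment. The logarithm base 2 (bits) and the function name are assumptions.

```python
import numpy as np


def entropy_growth_rate(T):
    """H = -sum_i pi_i sum_j T_ij log2 T_ij for a row-stochastic matrix T.

    pi is the stationary distribution (left eigenvector of T for eigenvalue 1);
    this assumes the chain is irreducible so that pi is unique.
    """
    T = np.asarray(T, dtype=float)
    eigvals, eigvecs = np.linalg.eig(T.T)
    k = np.argmin(np.abs(eigvals - 1.0))  # eigenvalue closest to 1
    pi = np.real(eigvecs[:, k])
    pi = pi / pi.sum()  # normalize (also fixes an arbitrary sign)
    log_T = np.zeros_like(T)
    np.log2(T, out=log_T, where=T > 0)  # convention: 0 * log 0 = 0
    return float(-np.sum(pi[:, None] * T * log_T))
```

For T = [[0.5, 0.5], [1, 0]], the stationary distribution is π = (2/3, 1/3) and H = 2/3 bit: only the first state has an uncertain next step.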

Additional Information

How to cite this article: Weng, T. et al. Memory and betweenness preference in temporal networks induced from time series. Sci. Rep. 7, 41951; doi: 10.1038/srep41951 (2017).

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

Kantz, H. & Schreiber, T. Nonlinear Time Series Analysis (Cambridge University Press, Cambridge, UK, 2004).

Grassberger, P., Kantz, H. & Moenig, U. On the symbolic dynamics of the Hénon map. J. Phys. A: Math. Gen. 22, 5217–5230 (1989).

Grassberger, P., Schreiber, T. & Schaffrath, C. Nonlinear time sequence analysis. Int. J. Bifurc. Chaos 1, 521–547 (1991).

Theiler, J., Eubank, S., Longtin, A., Galdrikian, B. & Farmer, J. D. Testing for nonlinearity in time series: the method of surrogate data. Physica D 58, 77–94 (1992).

Small, M., Yu, D. J. & Harrison, R. G. Surrogate test for pseudoperiodic time series data. Phys. Rev. Lett. 87, 188101 (2001).

Zhang, J. & Small, M. Complex network from pseudoperiodic time series: Topology versus dynamics. Phys. Rev. Lett. 96, 238701 (2006).

Zhao, Y., Weng, T. F. & Ye, S. K. Geometrical invariability of transformation between a time series and a complex network. Phys. Rev. E 90, 012804 (2014).

Gao, Z. K. et al. Multi-frequency complex network from time series for uncovering oil-water flow structure. Sci. Rep. 5, 8222 (2015).

Gao, Z. K., Fang, P. C., Ding, M. S. & Jin, N. D. Multivariate weighted complex network analysis for characterizing nonlinear dynamic behavior in two-phase flow. Exp. Therm. Fluid Sci. 60, 157–164 (2015).

Lacasa, L., Luque, B., Ballesteros, F., Luque, J. & Nuño, J. C. From time series to complex networks: The visibility graph. Proc. Natl. Acad. Sci. USA 105, 4972–4975 (2008).

Donner, R. V. et al. Recurrence networks–a novel paradigm for nonlinear time series analysis. New J. Phys. 12, 033025 (2010).

Donner, R. V. et al. Recurrence-based time series analysis by means of complex network methods. Int. J. Bifurc. Chaos 21, 1019–1046 (2011).

Donner, R. V. et al. The geometry of chaotic dynamics — a complex network perspective. Eur. Phys. J. B 84, 653–672 (2011).

Campanharo, A. S., Sirer, M. I., Malmgren, R. D., Ramos, F. M. & Amaral, L. A. N. Duality between time series and networks. PLoS ONE 6, e23378 (2011).

Donges, J. F. et al. Nonlinear detection of paleoclimate-variability transitions possibly related to human evolution. Proc. Natl. Acad. Sci. USA 108, 20422–20427 (2011).

Iwayama, K. et al. Characterizing global evolutions of complex systems via intermediate network representations. Sci. Rep. 2, 423 (2012).

Gao, X. Y. et al. Transmission of linear regression patterns between time series: From relationship in time series to complex networks. Phys. Rev. E 90, 012818 (2014).

Eroglu, D. et al. See-saw relationship of the Holocene East Asian-Australian summer monsoon. Nat. Commun. 7, 12929 (2016).

Perra, N., Goncalves, B., Pastor-Satorras, R. & Vespignani, A. Activity driven modeling of time varying networks. Sci. Rep. 2, 469 (2012).

Perra, N. et al. Random walks and search in time-varying networks. Phys. Rev. Lett. 109, 238701 (2012).

Holme, P. & Saramäki, J. Temporal networks. Phys. Rep. 519, 97–125 (2012).

Lentz, H. H. K., Selhorst, T. & Sokolov, I. M. Unfolding accessibility provides a macroscopic approach to temporal networks. Phys. Rev. Lett. 110, 118701 (2013).

Pfitzner, R., Scholtes, I., Garas, A., Tessone, C. J. & Schweitzer, F. Betweenness preference: Quantifying correlations in the topological dynamics of temporal networks. Phys. Rev. Lett. 110, 198701 (2013).

Scholtes, I. et al. Causality-driven slow-down and speed-up of diffusion in non-Markovian temporal networks. Nat. Commun. 5, 5024 (2014).

Rosvall, M., Esquivel, A. V., Lancichinetti, A., West, J. D. & Lambiotte, R. Memory in network flows and its effects on spreading dynamics and community detection. Nat. Commun. 5, 4630 (2014).

Belik, V., Geisel, T. & Brockmann, D. Natural human mobility patterns and spatial spread of infectious diseases. Phys. Rev. X 1, 011001 (2011).

Singer, P., Helic, D., Taraghi, B. & Strohmaier, M. Detecting memory and structure in human navigation patterns using Markov chain models of varying order. PLoS ONE 9, e102070 (2014).

McCullough, M., Small, M., Stemler, T. & Iu, H. H. Time lagged ordinal partition networks for capturing dynamics of continuous dynamical systems. Chaos 25, 053101 (2015).

Hunt, B. R. & Ott, E. Optimal periodic orbits of chaotic systems. Phys. Rev. Lett. 76, 2254–2257 (1996).

Pei, X., Dolan, K., Moss, F. & Lai, Y. C. Counting unstable periodic orbits in noisy chaotic systems: A scaling relation connecting experiment with theory. Chaos 8, 853–860 (1998).

Dhamala, M., Lai, Y. C. & Kostelich, E. J. Detecting unstable periodic orbits from transient chaotic time series. Phys. Rev. E 61, 6485–6489 (2000).

Brown, R., Bryant, P. & Abarbanel, H. D. I. Computing the Lyapunov spectrum of a dynamical system from an observed time series. Phys. Rev. A 43, 2787–2806 (1991).

Marwan, N., Wessel, N., Meyerfeldt, U., Schirdewan, A. & Kurths, J. Recurrence plot based measures of complexity and their application to heart rate variability data. Phys. Rev. E 66, 026702 (2002).

Eroglu, D. et al. Entropy of weighted recurrence plots. Phys. Rev. E 90, 042919 (2014).

Hegger, R., Kantz, H. & Schreiber, T. Practical implementation of nonlinear time series methods: The TISEAN package. Chaos 9, 413–435 (1999).

Small, M. et al. Uncovering nonlinear structure in human ECG recordings. Chaos Solitons Fractals 13, 1755–1762 (2002).

Narayanan, K., Govindan, R. B. & Gopinathan, M. S. Unstable periodic orbits in human cardiac rhythms. Phys. Rev. E 57, 4594–4603 (1998).

Small, M. & Tse, C. K. Optimal embedding parameters: a modelling paradigm. Physica D 194, 283–296 (2004).

Sarvestani, R. R., Boostani, R. & Roopaei, M. VT and VF classification using trajectory analysis. Nonlinear Analysis 71, e55–e61 (2009).

Grassberger, P. & Procaccia, I. Estimation of the Kolmogorov entropy from a chaotic signal. Phys. Rev. A 28, 2591–2593 (1983).

Thiel, M., Romano, M. C., Read, P. L. & Kurths, J. Estimation of dynamical invariants without embedding by recurrence plots. Chaos 14, 234–243 (2004).

Dias, J. G., Vermunt, J. K. & Ramos, S. Clustering financial time series: New insights from an extended hidden Markov model. Eur. J. Oper. Res. 243, 852–864 (2015).

Grassberger, P. Toward a quantitative theory of self-generated complexity. Int. J. Theor. Phys. 25, 907–938 (1986).

Freund, J., Ebeling, W. & Rateitschak, K. Self-similar sequences and universal scaling of dynamical entropies. Phys. Rev. E 54, 5561–5566 (1996).

Crutchfield, J. P. & Feldman, D. P. Regularities unseen, randomness observed: Levels of entropy convergence. Chaos 13, 25–54 (2003).

Farmer, J. D. Chaotic attractors of an infinite dimensional dynamical system. Physica D 4, 366–393 (1982).

Gómez-Gardeñes, J. & Latora, V. Entropy rate of diffusion processes on complex networks. Phys. Rev. E 78, 065102(R) (2008).

Acknowledgements

J.Z. is supported by National Science Foundation of China NSFC 61573107 and 61104143, and Special Funds for Major State Basic Research Projects of China (2015CB856003). M.S. is supported by an Australian Research Council Future Fellowship (FT110100896). This research has been supported, in part, by General Research Fund 26211515 from the Research Grants Council of Hong Kong, and Innovation and Technology Fund ITS/369/14FP from the Hong Kong Innovation and Technology Commission.

Author information


Contributions

T.F. Weng, J. Zhang, M. Small and P. Hui designed the research, performed the research, and wrote the manuscript. R. Zheng analyzed data and performed research. All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Weng, T., Zhang, J., Small, M. et al. Memory and betweenness preference in temporal networks induced from time series. Sci Rep 7, 41951 (2017). https://doi.org/10.1038/srep41951


DOI: https://doi.org/10.1038/srep41951
