Abstract

Fourier ptychographic microscopy (FPM) is a novel computational coherent imaging technique for high space-bandwidth product imaging. Mathematically, Fourier ptychographic (FP) reconstruction can be implemented as a phase retrieval optimization process, in which we only obtain low-resolution intensity images corresponding to the sub-bands of the sample’s high-resolution (HR) spatial spectrum and aim to retrieve the complex HR spectrum. In real setups, the measurements always suffer from various degradations such as Gaussian noise, Poisson noise, speckle noise and pupil location error, which can severely impair the reconstruction. To efficiently address these degradations, in this paper we propose a novel FP reconstruction method under a gradient descent optimization framework. The technique uses Poisson maximum likelihood for better signal modeling and the truncated Wirtinger gradient for effective error removal. Results on both simulated data and real data captured using our laser-illuminated FPM setup show that the proposed method outperforms other state-of-the-art algorithms. We have also released our source code for non-commercial use.


Introduction

Fourier ptychographic microscopy (FPM) is a novel computational coherent imaging technique for high space-bandwidth product (SBP) imaging1,2. This technique sequentially illuminates the sample at different incident angles and correspondingly captures a set of low-resolution (LR) images of the sample. Assuming that the incident light is a plane wave and the imaging system acts as a low-pass filter, the LR images captured under different incident angles correspond to different bands of the sample’s spatial spectrum, as shown in Fig. 1. By stitching these spectrum bands together in Fourier space, a large field-of-view (FOV), high-resolution (HR) image of the sample can be obtained. As a reference, the synthetic numerical aperture (NA) of the FPM setup reported in ref. 1 is ~0.5 and its FOV reaches ~120 mm², which greatly improves on the throughput of existing microscopes. FPM has been widely applied in 3D imaging3,4, fluorescence imaging5,6, mobile microscopy7,8 and high-speed in vitro imaging9.

Mathematically, Fourier ptychographic (FP) reconstruction can be implemented as a typical phase retrieval optimization process, which needs to recover a complex function given the intensity measurements of its linear transforms. Specifically, we only obtain the LR intensity images corresponding to the sub-bands of the sample’s HR spatial spectrum and aim to retrieve the complex HR spatial spectrum. Conventional FPM1,2 uses the alternating projection (AP) algorithm10,11, which alternately imposes constraints in spatial space (the captured intensity images) and Fourier space (the pupil function) to stitch the LR sub-spectra together. AP is easy to implement and fast to converge, but it is sensitive to measurement noise and system errors arising from numerous factors, such as a low signal-to-noise ratio due to short camera exposure times12 and incorrect sub-sampling of the HR spatial spectrum due to misalignment of the imaging system. To tackle measurement noise, Bian et al.13 proposed a method termed Wirtinger flow optimization for Fourier ptychography (WFP), which uses a gradient descent scheme and Wirtinger calculus14 to minimize the intensity errors between the estimated LR images and the corresponding measurements. WFP is robust to Gaussian noise and produces better reconstructions in low-exposure imaging scenarios, and thus can largely decrease image acquisition time. However, it needs careful initialization since the optimization is non-convex. Based on semidefinite programming (SDP) convex optimization for phase retrieval15,16, Horstmeyer et al. modeled FP reconstruction as a convex optimization problem17. This method guarantees a global optimum, but it converges slowly, which makes it impractical in real applications. Recently, Yeh et al.18 tested different objective functions (intensity based, amplitude based and Poisson maximum likelihood) under a gradient-descent optimization scheme for FP reconstruction. The results show that the amplitude-based and Poisson maximum-likelihood objective functions produce better results than the intensity-based objective function. To address LED misalignment, the authors also added a simulated annealing algorithm into each iteration to search for the optimal pupil locations.

Although the above methods offer various options for FP reconstruction, each has its own limitations. AP and WFP are limited to handling Gaussian noise; as shown in the following experiments, they cannot deal well with Poisson noise, pupil location error or speckle noise, the last of which is common when the light source is highly spatially and temporally coherent (such as a laser)12. The Poisson Wirtinger Fourier ptychographic reconstruction (PWFP) technique described in ref. 18 performs better than the other methods, but it still produces aberrant reconstructions when the measurements are corrupted with Gaussian noise, and it needs much more running time because of the simulated annealing algorithm incorporated to deal with LED misalignment.

In this paper, we propose a novel FP reconstruction method termed truncated Poisson Wirtinger Fourier ptychographic reconstruction (TPWFP) to efficiently handle the above-mentioned measurement noise and pupil location error. The technique incorporates a Poisson maximum-likelihood objective function and the truncated Wirtinger gradient19 into a gradient-descent optimization framework. The advantages of TPWFP lie in three aspects:

- The Poisson maximum-likelihood objective function better describes the Poisson statistics of photon detection by an optical sensor in real imaging systems, and thus produces better results in real applications.

- The truncated gradient prevents outliers from degrading the reconstruction, which provides better descent directions and enhanced robustness to various sources of error such as Gaussian noise and pupil location error.

- No matrix lifting or global search is required for optimization, resulting in faster convergence and lower computational cost.

To demonstrate the effectiveness of TPWFP, we test it against the aforementioned algorithms on both simulated data and real data captured using a laser-illuminated FPM setup12. Both the simulations and real experiments show that TPWFP outperforms other state-of-the-art algorithms in the imaging scenarios involving Poisson noise, Gaussian noise, speckle noise and pupil location error.

Methods

As stated before, TPWFP incorporates a Poisson maximum-likelihood objective function and the truncated Wirtinger gradient into a gradient descent optimization framework for FP reconstruction. Next, we introduce this technique in detail.

Image formation of FPM

FPM is a coherent imaging system. It requires the illumination to be coherent1, or partially coherent20, in order to capture multiple images of limited spatial bandwidth information in different regions of a sample’s spatial spectrum. For a relatively thin sample21, different spatial spectrum regions can be accessed by angularly varying coherent illumination. Under the assumption that the light incident on a sample is a plane wave, we can describe the light field transmitted from the sample as $\phi(x, y)\, e^{jx 2\pi \sin\theta_x/\lambda}\, e^{jy 2\pi \sin\theta_y/\lambda}$, where ϕ is the sample’s complex spatial map, (x, y) are the 2D spatial coordinates, j is the imaginary unit, λ is the wavelength of illumination and θx and θy are the incident angles as shown in Fig. 1. The light field is then Fourier transformed to the pupil plane as it travels through the objective lens, and is subsequently low-pass filtered by the aperture. This process can be represented as $P(k_x, k_y)\, \mathcal{F}\{\phi(x, y)\, e^{jx 2\pi \sin\theta_x/\lambda}\, e^{jy 2\pi \sin\theta_y/\lambda}\}$, where P(kx, ky) is the pupil function for low-pass filtering, (kx, ky) are the 2D spatial frequency coordinates in the pupil plane and $\mathcal{F}$ is the Fourier transform operator. Afterwards, the light field is Fourier transformed again as it passes through the tube lens to the imaging sensor. Since real imaging sensors can only capture light’s intensity, the image formation of FPM follows:

$$I = \left| \mathcal{F}^{-1}\left\{ P(k_x, k_y)\, \mathcal{F}\left\{ \phi(x, y)\, e^{jx 2\pi \sin\theta_x/\lambda}\, e^{jy 2\pi \sin\theta_y/\lambda} \right\} \right\} \right|^2 = \left| \mathcal{F}^{-1}\left\{ P(k_x, k_y)\, \Phi\!\left(k_x - \frac{2\pi \sin\theta_x}{\lambda},\, k_y - \frac{2\pi \sin\theta_y}{\lambda}\right) \right\} \right|^2, \tag{1}$$

where I is the captured image, $\mathcal{F}^{-1}$ is the inverse Fourier transform operator and Φ is the spatial spectrum of the sample. A visual explanation of the image formation process is diagrammed in Fig. 1.

Because $\mathcal{F}^{-1}$ is linear and the pupil filtering $P(k_x, k_y)\, \Phi(k_x - 2\pi\sin\theta_x/\lambda,\, k_y - 2\pi\sin\theta_y/\lambda)$ is also a linear operation on Φ that passes only a finite bandwidth of the HR spatial spectrum through the pupil function, we can rewrite the above image formation of FPM as

$$b = |Az|^2, \tag{2}$$

where $b \in \mathbb{R}^m$ denotes the ideal captured images (all the captured I under different angular illuminations, stacked in vector form), $A \in \mathbb{C}^{m \times n}$ is the corresponding linear transform matrix incorporating the Fourier transform and the low-pass filtering, $z \in \mathbb{C}^n$ is the sample’s HR spectrum (Φ in vector form), and $|\cdot|^2$ is taken element-wise. This is a standard phase retrieval problem22, where b and A are known and z is what we aim to recover.
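To make the forward model concrete, the sketch below simulates one LR image from a given HR spectrum: it crops the sub-band selected by one LED's incident angle, applies an ideal binary pupil, and takes the squared modulus of the inverse DFT. It is a minimal pure-Python illustration, not the authors' released code. The function names `idft2` and `lr_intensity` are hypothetical, the sub-band center is assumed to fall exactly on the spectrum's pixel grid, and a naive O(m⁴) DFT stands in for an FFT so that the example has no dependencies.

```python
import cmath

def idft2(X):
    """Naive 2D inverse DFT of an m x m list of lists (O(m^4); for illustration only)."""
    m = len(X)
    out = [[0j] * m for _ in range(m)]
    for x in range(m):
        for y in range(m):
            acc = 0j
            for u in range(m):
                for v in range(m):
                    acc += X[u][v] * cmath.exp(2j * cmath.pi * (u * x + v * y) / m)
            out[x][y] = acc / (m * m)
    return out

def lr_intensity(spectrum, center, m, pupil_radius):
    """One low-resolution image I = |F^-1{P * Phi(shifted)}|^2.

    spectrum     : HR spectrum Phi as a list of lists, zero frequency at the array center
    center       : (row, col) of the sub-band selected by one LED's incident angle
                   (assumed to lie exactly on the pixel grid)
    m            : side length of the cropped sub-band, which is also the LR image size
    pupil_radius : radius (in spectrum pixels) of the ideal binary pupil P
    """
    r0, c0 = center[0] - m // 2, center[1] - m // 2
    h = m // 2
    band = [[0j] * m for _ in range(m)]
    for u in range(m):
        for v in range(m):
            du, dv = u - h, v - h
            if du * du + dv * dv <= pupil_radius ** 2:  # binary pupil: 1 inside, 0 outside
                band[u][v] = spectrum[r0 + u][c0 + v]
    # ifftshift: move the band's zero frequency from (h, h) to index (0, 0) before the IDFT.
    band = [[band[(u + h) % m][(v + h) % m] for v in range(m)] for u in range(m)]
    field = idft2(band)
    return [[abs(f) ** 2 for f in row] for row in field]  # the sensor records intensity only
```

For example, with an 8 × 8 spectrum whose only nonzero entry sits at the center of the selected 4 × 4 sub-band, every pixel of the simulated LR image has the same intensity. Because cropping, pupil masking and the inverse DFT are all linear in the spectrum, evaluating `lr_intensity` for every LED is one way to realize b = |Az|² without ever storing A as a matrix; a practical implementation would use an FFT library instead of `idft2`.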

Poisson maximum-likelihood objective function

Here we assume that the number of photons detected at each detector unit follows a Poisson distribution, which is consistent with the independent nature of random individual photon arrivals at the imaging sensor23. Note that although the Poisson distribution approaches a Gaussian distribution for large photon counts according to the central limit theorem, most of the captured images in FPM are dark-field images under oblique illumination (most dark-field pixels’ values are only 0.5–5% of the camera’s full bit depth; see the exemplar captured image in Fig. 1), and are thus better described by the Poisson distribution. The reason for this stark difference in signal strength between bright-field and dark-field images is that, for natural samples such as cells, most of the energy in their spatial spectrum is concentrated in the low-frequency regions24. Increasing the exposure time and using the HDR technique1 could increase the signal-to-noise ratio (SNR) and accommodate the dramatic difference in signal strength between bright-field and dark-field images, but most fast image acquisition setups, such as our laser-illuminated FPM system12, necessitate using the same exposure level for all images. In summary, the signal’s Poisson probability model can be represented as

$$c_i \sim \mathrm{Poisson}(b_i), \quad i = 1, \dots, m, \tag{3}$$

where $b_i = |a_i z|^2$ is the ith latent signal (pixel) in b, $a_i$ is the ith row of A, and $c_i$ is the corresponding measurement. Thus, the probability mass function of $c_i$ is

$$P(c_i) = \frac{b_i^{c_i}\, e^{-b_i}}{c_i!}, \tag{4}$$

where e is Euler’s number and $c_i!$ is the factorial of $c_i$.

Based on maximum-likelihood estimation theory, and assuming that the measurements are independent of each other, the reconstruction turns into maximizing the joint probability of all the measurements $c_1, \dots, c_m$, namely

$$\max_{z} \prod_{i=1}^{m} \frac{b_i^{c_i}\, e^{-b_i}}{c_i!}. \tag{5}$$

Taking the negative logarithm of this objective turns the maximization into the minimization of

$$L = \sum_{i=1}^{m} \left[ b_i - c_i \log b_i + \log(c_i!) \right]. \tag{6}$$

Since $c_1, \dots, c_m$ are the experimental measurements and thus constant in the optimization process, we omit the last term in L (namely $\log(c_i!)$). Then, by replacing $b_i$ with $|a_i z|^2$, we obtain the objective function of TPWFP as

$$\min_{z}\; L(z) = \sum_{i=1}^{m} \left( |a_i z|^2 - c_i \log |a_i z|^2 \right). \tag{7}$$
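The objective can be evaluated directly for a small, explicitly stored matrix A. The sketch below is a minimal pure-Python version, assuming A is given as a list of rows a_i of complex numbers and c as a list of photon counts; `forward` and `poisson_objective` are hypothetical names, and the small constant `eps` that guards against log(0) is our addition rather than part of the model.

```python
import math

def forward(A, z):
    """Ideal measurements b_i = |a_i z|^2 for every row a_i of A."""
    return [abs(sum(a * x for a, x in zip(row, z))) ** 2 for row in A]

def poisson_objective(A, z, c, eps=1e-12):
    """L(z) = sum_i ( |a_i z|^2 - c_i * log|a_i z|^2 ).

    This is the Poisson negative log-likelihood with the constant log(c_i!) dropped;
    eps only guards against log(0) and is an implementation choice, not part of the model.
    """
    return sum(b - ci * math.log(b + eps) for b, ci in zip(forward(A, z), c))
```

Adding back the dropped sum of log(c_i!) recovers the exact Poisson negative log-likelihood. Each term |a_i z|² − c_i log|a_i z|² is smallest when |a_i z|² = c_i (its derivative with respect to |a_i z|² is 1 − c_i/|a_i z|²), which is why minimizing the objective pulls every predicted intensity toward its measured count.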

Truncated Wirtinger gradient

As stated before, we use the gradient-descent optimization scheme. Based on Wirtinger calculus14, we obtain the gradient of L(z) as

$$\nabla L(z) = \sum_{i=1}^{m} 2\, \frac{|a_i z|^2 - c_i}{|a_i z|^2}\, a_i^H a_i z, \tag{8}$$

where $a_i^H$ is the conjugate transpose of $a_i$.

To prevent measurement noise from degrading the optimization, we apply a pixel-wise thresholding operation to ∇L(z) before using it to update z in each iteration. Similar to ref. 19, the thresholding constraint is defined as

$$\left| c_i - |a_i z|^2 \right| \le a_h \cdot \frac{1}{m} \sum_{l=1}^{m} \left| c_l - |a_l z|^2 \right| \cdot \frac{|a_i z|}{\|z\|}. \tag{9}$$

Here $a_h$ is a predetermined parameter specified by users, $\left| c_i - |a_i z|^2 \right|$ is the difference between the ith measurement (pixel) and its reconstruction, $\frac{1}{m} \sum_{l=1}^{m} \left| c_l - |a_l z|^2 \right|$ is the mean of all the differences, and $|a_i z| / \|z\|$ stands for the relative value of the linear transformation, which is used to eliminate the scaling effect. Intuitively, the thresholding indicates that if one measurement is far from its reconstruction, it is labeled as an outlier and omitted from the gradient in the current iteration. Note that the thresholding is signal dependent, which is beneficial for accurate detection of outliers.

Thus, for each index i, its corresponding measurement is used in Eq. (8) only when it meets the thresholding criterion in Eq. (9). In the following, we use ξ to denote the set of indices that meet the thresholding constraint and rewrite the corresponding truncated Wirtinger gradient as

$$\nabla L_{tr}(z) = \sum_{i \in \xi} 2\, \frac{|a_i z|^2 - c_i}{|a_i z|^2}\, a_i^H a_i z. \tag{10}$$

Note that the index set ξ is iteration-variant, meaning that in each iteration we update ξ according to the measurements c and the updated z. This adaptively provides a better descent direction.
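The truncated gradient combines the thresholding rule with the gradient formula: compute every measurement's residual |c_i − |a_i z|²|, keep the indices ξ whose residual stays below a_h times the mean residual times |a_i z|/‖z‖, and accumulate 2(|a_i z|² − c_i)/|a_i z|² · a_i^H a_i z over ξ only. The following is a minimal pure-Python sketch under the same assumptions as a small explicit A (a list of complex rows); the name `truncated_gradient` is hypothetical and `eps`, which avoids division by zero, is our addition. It is meant to mirror the equations, not to be efficient.

```python
import math

def truncated_gradient(A, z, c, a_h=25.0, eps=1e-12):
    """Truncated Wirtinger gradient of L(z) = sum_i |a_i z|^2 - c_i log|a_i z|^2.

    Index i is kept (i in xi) only if
        |c_i - |a_i z|^2| <= a_h * mean_l |c_l - |a_l z|^2| * |a_i z| / ||z||.
    The gradient sums 2 (|a_i z|^2 - c_i) / |a_i z|^2 * a_i^H a_i z over xi.
    With the factor 2, Re(grad[k]) and Im(grad[k]) equal the partial derivatives
    of L with respect to Re(z[k]) and Im(z[k]).
    """
    m = len(A)
    Az = [sum(a * x for a, x in zip(row, z)) for row in A]   # a_i z for every row
    b = [abs(v) ** 2 for v in Az]                             # predicted intensities
    resid = [abs(ci - bi) for ci, bi in zip(c, b)]
    mean_resid = sum(resid) / m
    z_norm = math.sqrt(sum(abs(x) ** 2 for x in z))
    xi = [i for i in range(m) if resid[i] <= a_h * mean_resid * abs(Az[i]) / z_norm]
    grad = [0j] * len(z)
    for i in xi:
        w = 2.0 * (b[i] - c[i]) / (b[i] + eps) * Az[i]       # scalar multiplying a_i^H
        for k, a in enumerate(A[i]):
            grad[k] += a.conjugate() * w
    return grad, xi
```

Setting `a_h=math.inf` keeps every index in this sketch (as long as the residuals and |a_i z| are nonzero), which reduces it to the untruncated Poisson gradient used by PWFP. With a small `a_h`, a single measurement whose residual is far above the mean residual is simply left out of the sum.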

In the gradient descent scheme, we update z in the kth iteration as

$$z^{(k+1)} = z^{(k)} - \mu^{(k+1)}\, \nabla L_{tr}\big(z^{(k)}\big), \tag{11}$$

where μ is the gradient descent step, which is predetermined manually. Here we use a setting similar to ref. 13:

$$\mu^{(k)} = \min\left(1 - e^{-k/k_0},\; \mu_{max}\right). \tag{12}$$

For initialization, we need to predetermine the parameters k0 and μmax. As stated in ref. 14, the basic rule for choosing their values is to make sure that μ(k) is small at the beginning of the iterations for a correct converging direction, and that it gradually increases to μmax for fast convergence. Intuitively, k0 controls the initial value of μ and its rate of increase, while μmax sets the maximum step size. Here we set k0 = 330 and μmax = 0.1, which were validated experimentally and work well in our experiments. This type of gradient-descent step is widely used, since it allows the step to grow gradually and offers an adaptive way to achieve better convergence14,19.
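The step-size schedule is simple enough to tabulate. The sketch below assumes the form μ(k) = min(1 − e^{−k/k0}, μmax) used in Wirtinger flow (ref. 14) and evaluates it with the values k0 = 330 and μmax = 0.1 given in the text; the function name `step_size` is hypothetical.

```python
import math

def step_size(k, k0=330.0, mu_max=0.1):
    """mu(k) = min(1 - exp(-k / k0), mu_max): small at first, then capped at mu_max."""
    return min(1.0 - math.exp(-k / k0), mu_max)

for k in (1, 10, 34, 35, 200):
    print(k, round(step_size(k), 4))
```

With these parameters the schedule starts at μ(1) ≈ 0.003 and saturates at μmax from k = 35 onward, because 1 − e^{−k/330} first exceeds 0.1 when k ≥ 330 ln(10/9) ≈ 34.8. A larger k0 would stretch this warm-up phase over more iterations.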

Algorithm 1: TPWFP algorithm for FP reconstruction.
Input: linear transform matrix A, measurement vector c and initialization z(0).
Output: retrieved complex signal z (the sample’s HR spatial spectrum).

1. k = 0;
2. while not converged do
3.     Update ξ according to Eq. (9);
4.     Update μ(k+1) according to Eq. (12);
5.     Update z(k+1) according to Eq. (11);
6.     k := k + 1;
7. end
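Putting the pieces together, Algorithm 1 can be sketched as below for a small, explicitly stored complex matrix A. This is a minimal pure-Python illustration under stated assumptions, not the released TPWFP code: the function name `tpwfp` is hypothetical, a fixed iteration count replaces the convergence test, and the update is taken as z(k+1) = z(k) − μ(k+1) times the truncated gradient at z(k), with no additional normalization.

```python
import math

def tpwfp(A, c, z0, a_h=25.0, k0=330.0, mu_max=0.1, iters=200, eps=1e-12):
    """Sketch of Algorithm 1 for a generic complex matrix A (rows a_i) and measurements c.

    A fixed iteration count stands in for the 'while not converged' test.
    """
    m = len(A)
    z = list(z0)
    for k in range(iters):
        Az = [sum(a * x for a, x in zip(row, z)) for row in A]
        b = [abs(v) ** 2 for v in Az]
        resid = [abs(ci - bi) for ci, bi in zip(c, b)]
        mean_resid = sum(resid) / m
        z_norm = math.sqrt(sum(abs(x) ** 2 for x in z))
        # Step 3: index set xi of measurements that pass the truncation test
        xi = [i for i in range(m) if resid[i] <= a_h * mean_resid * abs(Az[i]) / z_norm]
        # Step 4: step size mu(k+1) = min(1 - exp(-(k+1)/k0), mu_max)
        mu = min(1.0 - math.exp(-(k + 1) / k0), mu_max)
        # Step 5: z(k+1) = z(k) - mu(k+1) * truncated gradient at z(k)
        grad = [0j] * len(z)
        for i in xi:
            w = 2.0 * (b[i] - c[i]) / (b[i] + eps) * Az[i]
            for j, a in enumerate(A[i]):
                grad[j] += a.conjugate() * w
        z = [x - mu * g for x, g in zip(z, grad)]
    return z
```

For a real FPM problem, A would be applied implicitly with FFTs, cropping and pupil masking rather than stored as a matrix, and z(0) would be the spectrum of the up-sampled, normally illuminated LR image, as described in the following paragraph.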

Based on the above derivations, we summarize the proposed TPWFP algorithm in Alg. 1. For the initialization z(0), similar to ref. 13, we set z(0) as the spatial spectrum of the up-sampled version of the LR image captured under normally incident light. According to ref. 19, the computational complexity of such an optimization algorithm is $O(mn \log(1/\varepsilon))$, where m is the number of measurements, n is the number of signal entries and ε is the relative reconstruction error defined in Eq. (13). This is much lower than the computational complexity of WFP, which is $O(mn^2 \log(1/\varepsilon))$. Note that the source code of TPWFP is available at http://www.sites.google.com/site/lihengbian for non-commercial use.

Results

In this section, we test the proposed TPWFP and three other state-of-the-art algorithms (AP, WFP and PWFP) on both simulated and real captured data to show their pros and cons.

Quantitative metric

To quantitatively evaluate the reconstruction quality, we use the relative error (RE)19 metric, defined as

$$\mathrm{RE}(z, \hat{z}) = \frac{\min_{\varphi \in [0, 2\pi)} \left\| e^{-j\varphi} z - \hat{z} \right\|}{\|\hat{z}\|}. \tag{13}$$

This metric describes the difference between two complex functions z and $\hat{z}$; the minimization over the global phase φ accounts for the fact that phase retrieval can only recover a signal up to a constant phase factor. We use it here to compare the reconstructed HR spatial spectrum with its ground truth in the simulation experiments.
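The relative error compares a reconstruction z with a reference ẑ after removing the unknown global phase, i.e. min over φ of ‖e^{−jφ}z − ẑ‖/‖ẑ‖, with the reference norm in the denominator (the convention assumed here). The minimization has a closed form, so no search over φ is needed. A minimal pure-Python sketch, with the hypothetical function name `relative_error` and ẑ passed as `z_ref`:

```python
import math

def relative_error(z, z_ref):
    """RE = min over phi of ||exp(-j*phi) z - z_ref|| / ||z_ref||.

    Expanding the norm gives ||z||^2 + ||z_ref||^2 - 2 Re(exp(-j*phi) z_ref^H z),
    which is smallest when phi = arg(z_ref^H z); the minimum is then
    ||z||^2 + ||z_ref||^2 - 2 |z_ref^H z|.
    """
    inner = sum(r.conjugate() * x for r, x in zip(z_ref, z))  # z_ref^H z
    nz2 = sum(abs(x) ** 2 for x in z)
    nr2 = sum(abs(r) ** 2 for r in z_ref)
    dist2 = max(nz2 + nr2 - 2.0 * abs(inner), 0.0)            # clamp round-off below zero
    return math.sqrt(dist2) / math.sqrt(nr2)
```

A reconstruction that differs from the reference only by a constant phase factor therefore has RE ≈ 0, while a reconstruction scaled by 2 (or an all-zero reconstruction) has RE = 1.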

Parameters

In the simulation experiments, we simulate an FPM setup with the following hardware parameters: the NA of the objective lens is 0.08 and the corresponding pupil function is an ideal binary function (all ones inside the NA circle and all zeros outside); the distance from the LED plane to the sample plane is 84.8 mm; the distance between adjacent LEDs is 4 mm, and 15 × 15 LEDs are used to provide a synthetic NA of ~0.5; the wavelength of the incident light is 625 nm; and the pixel size of the captured images is 0.2 μm. In addition, we use the ‘Lena’ and ‘Aerial’ images (512 × 512 pixels) from the USC-SIPI image database25 as the latent HR amplitude and phase maps, respectively. The captured LR images have one tenth of the HR image’s pixel count along each dimension and are synthesized based on the image formation model in Eq. (2). We repeat each of the following simulation experiments 20 times and average the evaluations to produce the final results.
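As a quick consistency check on the simulated geometry, the illumination NA of an LED is sin θ = r/√(r² + h²), where r is its lateral distance from the optical axis and h = 84.8 mm is its height, and the synthetic NA is the objective NA plus the largest illumination NA (the same relation the real setup below uses: 0.1 + 0.325 = 0.425). The sketch assumes the 15 × 15 array is centered on the optical axis, which the text does not state explicitly; `illumination_na` is a hypothetical helper.

```python
import math

def illumination_na(dx_mm, dy_mm, height_mm):
    """sin(theta) of the plane wave from an LED at lateral offset (dx, dy) above the sample."""
    r = math.hypot(dx_mm, dy_mm)
    return r / math.hypot(r, height_mm)

# Simulation geometry from the text: 15 x 15 LEDs, 4 mm pitch, 84.8 mm above the sample.
pitch, height, half, na_obj = 4.0, 84.8, 7, 0.08
edge = illumination_na(half * pitch, 0.0, height)            # outermost LED on an axis
corner = illumination_na(half * pitch, half * pitch, height)  # outermost LED on the diagonal
print(f"axis:     NA_ill = {edge:.3f}, synthetic NA = {edge + na_obj:.3f}")
print(f"diagonal: NA_ill = {corner:.3f}, synthetic NA = {corner + na_obj:.3f}")
# prints:
# axis:     NA_ill = 0.314, synthetic NA = 0.394
# diagonal: NA_ill = 0.423, synthetic NA = 0.503
```

Under this simple model, the ~0.5 synthetic NA quoted in the text is reached by the corner LEDs of the grid, whereas along the x and y axes the synthetic NA is about 0.39.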

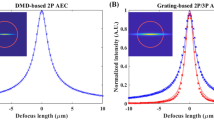

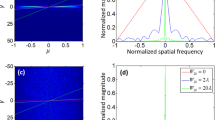

As for the algorithms’ parameters, an important parameter of TPWFP is the threshold ah in Eq. (9). To choose an appropriate ah, we test different values of ah on simulated data corrupted with Gaussian noise, Poisson noise, speckle noise and pupil location error at varying degradation levels, and study its influence on the final reconstruction quality. The results are shown in Fig. 2. Note that the standard deviation (std) here is the ratio between the actual std and the maximum of the ideal measurements b. We use the model c = b(1 + n) to simulate speckle noise, where n is uniformly distributed random noise with zero mean. We simulate the pupil location error by adding Gaussian noise to the incident wave vector of each LED. From the results we can see that both too small and too large values of ah result in worse reconstruction. This is determined by the nature of the truncated gradient. When ah is too small, more informative measurements are incorrectly labeled as outliers and thus contribute nothing to the final reconstruction, resulting in less information in the recovered spatial spectra and blurred reconstructed images, as shown in Fig. 2(a). When ah is too large, measurement noise and system errors are not effectively removed from the reconstructed images. In summary, we set ah = 25 for TPWFP in the following experiments, which produces satisfactory results in different degradation cases. Note that a constant setting is reasonable because the thresholding constraint in Eq. (9) is independent of the degradation model and level. The similar constant settings of such parameters in ref. 19 also support this.

Figure 2. Reconstruction quality (relative error between the reconstructed HR spatial spectrum and its ground truth) under different settings of ah in TPWFP.

(a) Reconstruction results at different iterations under Gaussian noise with a standard deviation (std) of 2e-3. (b) Results under different degradation models (Gaussian noise, Poisson noise, speckle noise and pupil location error) and varying degradation levels, with the iteration number fixed at 200, which is enough for TPWFP to converge.
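The degradation models used in these simulations can be sketched in a few lines. The code below is one possible pure-Python implementation, not the authors' simulation code, and all function names are hypothetical. The Gaussian std is taken relative to max(b), as stated in the text. For speckle, the zero-mean uniform noise n in c = b(1 + n) is parameterized by its own standard deviation, which is our assumption because the text does not say how the speckle level is measured. The Poisson level is set by a hypothetical `photons_at_max` parameter, since the text does not specify how the Poisson noise level was parameterized; counts are drawn with Knuth's multiplication method to avoid third-party dependencies.

```python
import math
import random

def gaussian_noise(b, std_ratio, rng):
    """c = b + n with n ~ N(0, (std_ratio * max(b))^2); std is relative to max(b)."""
    s = std_ratio * max(b)
    return [bi + rng.gauss(0.0, s) for bi in b]

def speckle_noise(b, std, rng):
    """c = b * (1 + n) with n uniform and zero-mean; `std` is the std of n (assumption)."""
    w = math.sqrt(3.0) * std  # a uniform variable on [-w, w] has std w / sqrt(3)
    return [bi * (1.0 + rng.uniform(-w, w)) for bi in b]

def poisson_noise(b, photons_at_max, rng):
    """Photon counts c_i ~ Poisson(b_i * scale), with scale mapping max(b) to photons_at_max.

    Uses Knuth's multiplication method, adequate for the small means of dark-field pixels.
    """
    scale = photons_at_max / max(b)
    counts = []
    for bi in b:
        limit, k, p = math.exp(-bi * scale), 0, 1.0
        while True:
            p *= rng.random()
            if p <= limit:
                break
            k += 1
        counts.append(k)
    return counts

def perturb_wavevectors(kvecs, sigma, rng):
    """Pupil location error: add Gaussian noise to each LED's incident wave vector (kx, ky)."""
    return [(kx + rng.gauss(0.0, sigma), ky + rng.gauss(0.0, sigma)) for kx, ky in kvecs]

rng = random.Random(0)
b = [0.5, 2.0, 10.0]  # toy ideal intensities
print(speckle_noise(b, 0.05, rng), poisson_noise(b, 20.0, rng))
```

Gaussian noise can make some simulated c_i negative; how such values are handled (for example, clipping) is not specified in the text and is left out of this sketch.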

Another parameter for all the algorithms is the iteration number. For each method, we choose an iteration number that ensures convergence, so as to demonstrate its best performance without unnecessarily increasing the running time, for a fair comparison. For AP, 100 iterations are enough, as shown in ref. 1. We set 1000 iterations for WFP according to ref. 13. From Fig. 2(a) we can see that 200 iterations are enough for TPWFP to converge. Since PWFP is a special case of TPWFP with ah = ∞ (no thresholding of the gradient), we set the same iteration number for PWFP.

Simulation experiments

First, we test the four algorithms (AP, WFP, PWFP and TPWFP) on simulated captured images corrupted with Poisson noise, Gaussian noise and speckle noise, respectively, which are the most common types of noise in real imaging setups. The first two are mostly caused by the photoelectric effect and dark current23, while speckle noise is caused by the spatial and temporal coherence of the illumination source (such as a laser). The results are shown in Fig. 3, from which we can see that under small Gaussian noise, WFP outperforms the other three methods. This benefits from its Gaussian noise assumption. In contrast, PWFP and TPWFP only assume a Poisson signal model; thus, they cannot recognize and remove small Gaussian noise. However, when the noise grows larger (see Fig. 3), TPWFP obtains better results than WFP. This is because when the noise is large, WFP cannot extract useful information from the noisy data, while TPWFP recognizes these measurements as outliers using Eq. (9) and directly omits them to avoid their negative influence on the final reconstruction. For Poisson noise and speckle noise, while both PWFP and TPWFP obtain better results than the other methods, TPWFP has only a slight advantage over PWFP. This is because for these kinds of signal-dependent noise, it is hard for the truncated gradient to correctly distinguish noise from latent signals.

Then we apply the four algorithms to simulated data corrupted with pupil location error, which is common in real setups due to LED misalignment or unexpected system errors. The reconstruction results are shown in Fig. 4. From the results we can see that TPWFP significantly outperforms the state-of-the-art methods. This benefits from the nature of the truncated gradient. In the thresholding operation (Eq. (9)), if one measurement (in spatial space) is far from its reconstruction due to pupil location error, we omit this measurement, which carries misaligned information. Thus, we prevent the pupil location error from degrading the final reconstruction.

We list the running time of each method under the current algorithm settings in Table 1. All four algorithms are implemented in Matlab on a computer with an Intel Xeon 2.4 GHz CPU, 8 GB RAM and 64-bit Windows 7. From the table we can see that both PWFP and TPWFP run faster than WFP due to faster convergence19, but they are still more time consuming than AP. Note that TPWFP consumes more time than PWFP, which is caused by the additional thresholding and truncation operations on the gradient.

Real experiment

To further validate the robustness of TPWFP to the above measurement noise and system errors, we run the four algorithms on two real datasets, a USAF target and a red blood cell sample, captured using a laser-illuminated FPM setup12. The red blood cell sample is prepared on a microscope slide stained with the Hema 3 stain set (Wright-Giemsa). The setup consists of a 4f microscope system with a 4×/0.1 NA objective lens (Olympus), a 200 mm focal-length tube lens (Thorlabs) and a 16-bit sCMOS camera (PCO.edge 5.5); as in the simulation experiments, the corresponding pupil function is assumed to be known and to be an ideal binary function. The system is fitted with a circular array of 95 mirror elements providing an illumination NA of 0.325, resulting in a total synthetic NA of 0.425. A 1 W laser with a wavelength of 457 nm is used as the illumination source; its beam is pinhole-filtered, collimated and guided to a pair of Galvo mirrors (Thorlabs GVS212) to be directed to individual mirror elements. The use of a high-power laser allows for a short total capture time of 0.96 seconds, but the high coherence of the laser source introduces speckle artifacts originating from reflective surfaces along the optical path, which manifest themselves as slowly varying fringe patterns. The reconstruction results are shown in Fig. 5. From the results we can see that AP produces intensity fluctuations in the background (see the white background of the USAF target for a clear comparison) and low image contrast (see the reconstructed amplitude of the red blood cell sample). WFP also produces corrugated artifacts due to the speckle noise caused by the laser illumination. Both PWFP and TPWFP obtain better results than AP and WFP, while TPWFP produces results with more image details (see the reconstructed amplitude of the USAF target, especially group 10) and higher image contrast (see the reconstructed phase of the red blood cell sample) than PWFP. To conclude, TPWFP outperforms the other methods with fewer artifacts, higher image contrast and more image details.

Discussion

In this paper, we propose a novel reconstruction method for FPM termed TPWFP, which uses a Poisson maximum-likelihood objective function and the truncated Wirtinger gradient for optimization under a gradient descent framework. Results on both simulated data and real data captured using our laser-illuminated FPM setup show that the proposed method outperforms other state-of-the-art algorithms in the presence of Poisson noise, Gaussian noise, speckle noise and pupil location error.

We note that the proposed TPWFP neither assumes a Poisson noise model nor targets only Poisson noise. Instead, it is the process of photons arriving at the detector that is assumed to be Poisson distributed. The final measurements by the sensor may be corrupted with various noise sources, such as thermal noise in the detector, as well as by system errors such as pupil location error. Also, though both TPWFP and PWFP use the Poisson signal model, the difference between them is the additional gradient truncation in TPWFP. Thus, if the signal is corrupted with noise (of whatever type), the additional gradient truncation in TPWFP can remove outliers and suppress their influence on the reconstruction. In contrast, for PWFP, which has no noise removal procedure, the noise in the measurements propagates to the final reconstruction and largely degrades the algorithm’s performance.

TPWFP can be extended in several ways. First, the pupil function updating procedure of the EPRY-FPM algorithm26 can be incorporated into TPWFP to obtain a corrected pupil function and better reconstruction. Second, faster and more robust optimization schemes, such as the conjugate gradient method27, can be applied in TPWFP to further improve its performance. Third, since the linear transform matrix A can be composed of any kind of linear operation (Fourier transform and low-pass filtering in FPM), TPWFP can be applied to phase retrieval in various linear optical imaging systems, such as conventional ptychography28, multiplexed FP20,29 and fluorescence FP5. Fourth, since TPWFP is much more robust to pupil location error than other methods, it may find wide application in other imaging schemes where precise calibration is unavailable.

In spite of its advantageous performance and wide applicability, TPWFP has two limitations. First, it is still time consuming compared to conventional AP, though it is much faster than WFP. Second, its optimization is non-convex. Although using the up-sampled image captured under normal illumination as the initialization results in satisfactory reconstructions, as demonstrated in the above experiments, there is no theoretical guarantee of convergence to the global optimum.

Additional Information

How to cite this article: Bian, L. et al. Fourier ptychographic reconstruction using Poisson maximum likelihood and truncated Wirtinger gradient. Sci. Rep. 6, 27384; doi: 10.1038/srep27384 (2016).

References

1. Zheng, G., Horstmeyer, R. & Yang, C. Wide-field, high-resolution Fourier ptychographic microscopy. Nat. Photonics 7, 739–745 (2013).

2. Ou, X., Horstmeyer, R., Yang, C. & Zheng, G. Quantitative phase imaging via Fourier ptychographic microscopy. Opt. Lett. 38, 4845–4848 (2013).

3. Dong, S. et al. Aperture-scanning Fourier ptychography for 3D refocusing and super-resolution macroscopic imaging. Opt. Express 22, 13586–13599 (2014).

4. Tian, L. & Waller, L. 3D intensity and phase imaging from light field measurements in an LED array microscope. Optica 2, 104–111 (2015).

5. Dong, S., Nanda, P., Shiradkar, R., Guo, K. & Zheng, G. High-resolution fluorescence imaging via pattern-illuminated Fourier ptychography. Opt. Express 22, 20856–20870 (2014).

6. Chung, J., Kim, J., Ou, X., Horstmeyer, R. & Yang, C. Wide field-of-view fluorescence image deconvolution with aberration-estimation from Fourier ptychography. Biomed. Opt. Express 7, 352–368 (2016).

7. Dong, S., Guo, K., Nanda, P., Shiradkar, R. & Zheng, G. FPscope: a field-portable high-resolution microscope using a cellphone lens. Biomed. Opt. Express 5, 3305–3310 (2014).

8. Phillips, Z. F. et al. Multi-contrast imaging and digital refocusing on a mobile microscope with a domed LED array. PLoS ONE 10, e0124938 (2015).

9. Tian, L. et al. Computational illumination for high-speed in vitro Fourier ptychographic microscopy. Optica 2, 904–911 (2015).

10. Fienup, J. R. Phase retrieval algorithms: a comparison. Appl. Opt. 21, 2758–2769 (1982).

11. Fienup, J. R. Reconstruction of a complex-valued object from the modulus of its Fourier transform using a support constraint. J. Opt. Soc. Am. A 4, 118–123 (1987).

12. Chung, J., Lu, H., Ou, X., Zhou, H. & Yang, C. Wide-field Fourier ptychographic microscopy using laser illumination source. arXiv preprint arXiv:1602.02901 (2016).

13. Bian, L. et al. Fourier ptychographic reconstruction using Wirtinger flow optimization. Opt. Express 23, 4856–4866 (2015).

14. Candès, E., Li, X. & Soltanolkotabi, M. Phase retrieval via Wirtinger flow: theory and algorithms. arXiv preprint arXiv:1407.1065 (2014).

15. Candès, E. J., Strohmer, T. & Voroninski, V. PhaseLift: exact and stable signal recovery from magnitude measurements via convex programming. Commun. Pure Appl. Math. 66, 1241–1274 (2013).

16. Waldspurger, I., d’Aspremont, A. & Mallat, S. Phase recovery, MaxCut and complex semidefinite programming. Math. Program. 1–35 (2012).

17. Horstmeyer, R. et al. Solving ptychography with a convex relaxation. New J. Phys. 17, 053044 (2015).

18. Yeh, L.-H. et al. Experimental robustness of Fourier ptychography phase retrieval algorithms. Opt. Express 23, 33214–33240 (2015).

19. Chen, Y. & Candès, E. J. Solving random quadratic systems of equations is nearly as easy as solving linear systems. arXiv preprint arXiv:1505.05114 (2015).

20. Tian, L., Li, X., Ramchandran, K. & Waller, L. Multiplexed coded illumination for Fourier ptychography with an LED array microscope. Biomed. Opt. Express 5, 2376–2389 (2014).

21. Ou, X., Horstmeyer, R., Zheng, G. & Yang, C. High numerical aperture Fourier ptychography: principle, implementation and characterization. Opt. Express 23, 3472–3491 (2015).

22. Shechtman, Y. et al. Phase retrieval with application to optical imaging: a contemporary overview. IEEE Signal Proc. Mag. 32, 87–109 (2015).

23. Hasinoff, S. W. Photon, Poisson noise. In Computer Vision: A Reference Guide, 608–610 (Springer US, Boston, MA, 2014).

24. Heintzmann, R. Estimating missing information by maximum likelihood deconvolution. Micron 38, 136–144 (2007).

25. Weber, A. G. The USC-SIPI image database version 5. USC-SIPI Rep. 315, 1–24 (1997).

26. Ou, X., Zheng, G. & Yang, C. Embedded pupil function recovery for Fourier ptychographic microscopy. Opt. Express 22, 4960–4972 (2014).

27. Luenberger, D. G. Introduction to Linear and Nonlinear Programming, vol. 28 (Addison-Wesley, Reading, MA, 1973).

28. Maiden, A. M., Rodenburg, J. M. & Humphry, M. J. Optical ptychography: a practical implementation with useful resolution. Opt. Lett. 35, 2585–2587 (2010).

29. Dong, S., Shiradkar, R., Nanda, P. & Zheng, G. Spectral multiplexing and coherent-state decomposition in Fourier ptychographic imaging. Biomed. Opt. Express 5, 1757–1767 (2014).

Acknowledgements

This work was supported by the National Natural Science Foundation of China, Nos 61120106003 and 61327902.

Author information


Contributions

L.B. and J.S. proposed the idea and conducted the experiments. J.C. and X.O. built the setup. L.B., J.S., J.C. and X.O. wrote and revised the manuscript. J.S., C.Y., F.C. and Q.D. supervised the project. All the authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Bian, L., Suo, J., Chung, J. et al. Fourier ptychographic reconstruction using Poisson maximum likelihood and truncated Wirtinger gradient. Sci Rep 6, 27384 (2016). https://doi.org/10.1038/srep27384


DOI: https://doi.org/10.1038/srep27384

