Abstract

Matching methods are common in studies across many disciplines. However, there is limited evidence on how to optimally combine matching with subsequent analysis approaches to minimize bias and maximize efficiency for the quantity of interest. We conducted simulations to compare the performance of a wide variety of matching methods and analysis approaches in terms of bias, variance, and mean squared error (MSE). We then compared these approaches in an applied example of an employment training program. The results indicate that combining full matching with double robust analysis performed best in both the simulations and the applied example, particularly when combined with machine learning estimation methods. To reduce bias, current guidelines advise researchers to select the technique with the best post-matching covariate balance, but this work finds that such an approach does not always minimize mean squared error (MSE). These findings have important implications for future research utilizing matching. To minimize MSE, investigators should consider additional diagnostics, and use of simulations tailored to the study of interest to identify the optimal matching and analysis combination.

Similar content being viewed by others

Introduction

Matching is a common approach to address confounding, particularly in studies that utilize observational data. Matching methods estimate the effect of a treatment, program, or policy by comparing treated (or exposed) units to control (or unexposed) units with similar observed characteristics. This approach is based on the potential outcomes1 and other causal inference frameworks2,3,4. Use of matching has increased as the corresponding methodological groundwork has developed5,6,7. There are many types of matching methods8,9,10,11 that have been used across scientific disciplines, including statistics4, economics7,12, political science and public policy analysis13,14, criminology15,16, education17,18, sociology19,20, psychology and behavioral health21,22, and medicine and public health23.

Matching methods help address differences in the distributions of measured covariates between treated and control groups by improving the balance of these factors, as would be expected in a randomized experiment. They also facilitate comparisons between study groups with good support by encouraging the researcher to only compare covariate subgroups that have both treated and control units, thereby avoiding extrapolation8,9,24. While covariate support, also known as positivity, should be examined in any analysis, this is not an inherent step in many regression-based methods11,25. Matching is also intuitive6,8 and may make fewer assumptions about the forms of the relationships between covariates, treatment, and outcome (e.g., allows for non-linearity) than standard regression analyses8,9. After matching, additional analyses can be applied to improve the estimate of effect.

Despite the large and multidisciplinary literature on matching methods, there is no consensus on how matching should be executed or evaluated. There are many general guidelines—for example, minimum covariate balance thresholds9,26 or weight trimming when support is poor10—some of which contradict one another or are ambiguous. To select among the myriad matching procedures, current guidelines commonly advise researchers to examine the balance of covariates between the treated and control groups and to select the matching approach that achieves the best post-matching balance (see for example11,27). One basis for this recommendation is the logic of a randomized experiment in which covariate balance is used to assess whether randomization has been effective and hence, whether bias is likely to be minimized28. While some suggest that balance may be a good indicator of bias, and existing work has shown its value for that purpose11,29,30,31, it is not known how well this recommendation optimizes mean squared error (MSE), a measure of performance that incorporates both bias and variance. Several previous studies have compared the performance of different matching methods (see for example32,33,34). However, these studies are limited in scope; all are either restricted to propensity score-based methods, study the performance of matching estimators without subsequent analysis, include only a small set of matching methods or analyses, or evaluate performance based on bias alone, not bias, variance, and MSE. It has not been systematically examined how balance measures relate to overall performance (MSE) of effect estimates, when considering a wide range of matching and analysis techniques. Furthermore, no previous studies have included systematic comparisons of matching coupled with double robust or semi-parametric estimation methods, which have shown superior performance in many settings35,36,37.

We conducted simulation studies to compare the performance of a wide range of matching and analysis combinations in estimating the average effect of treatment on the treated (ATT). The ATT is relevant when one is interested in the effect of an exposure among those who are exposed. For example, when studying an employment training program, the effect of the program on the unemployed people who received it would be more relevant than the effects in the general population, most of whom would already be employed. Similarly, with a harmful exposure such as drug use, the effect of drug use on health in those who use drugs is more interpretable than an effect in a general population many of whom have never used drugs.

Simulation-based approaches to compare estimation performance are appealing because the true effect of interest is known and can therefore be compared to estimates generated from different statistical methods under varying conditions. We considered whether covariate balance, assessed using recommended metrics11,31,38,39, identified the matching and analysis combination with the lowest MSE in the treatment effect estimate. Then, using data from a randomized employment training program, we compared the experimental effect estimate to effect estimates generated by applying matching and analysis combinations to data with observational controls drawn from a general population survey.

Methods

Simulated data

We simulated 1,000 data sets of size 1,000, comprised of a continuous outcome Y, a binary indicator of treatment A, and two baseline covariates W1 and W2. In this paper, we use the terms “treated” and “control” to refer to groups we wish to compare, but relevant studies need not involve an explicit treatment as in biomedical research. The simulations were designed to imitate data that could realistically arise in observational settings and to demonstrate the performance of combinations of matching and analysis methods in the presence of treatment effect heterogeneity and confounding of the relationship between the treatment and the outcome. Specifically, W1 and W2 were uniform and normal random variables, respectively. Treatment A was binomial with probability of success dependent on W1 and W1, and outcome Y was random normal with mean dependent on W1, W1, and A. Dependencies included squared terms and multiplicative interactions. The complete data generating mechanisms are presented in the Supporting Information (SI) Text.

Employment program data

As an applied example, we use data originating from LaLonde’s 198640 study of the National Supported Work (NSW) Demonstration, a large-scale employment training program that aimed to increase income levels by providing work experience and counseling to individuals who lacked basic job skills. Applicants were randomly assigned to the NSW program, or to the control group. Data on participants and controls was collected at baseline and at up to four post-baseline time points using surveys and Social Security Administration records. The outcome of interest was real earnings in 1978 and baseline covariates were age, years of education, high school completion, black race, Hispanic ethnicity, marital status, and real earnings in 1974 and 1975.

We used the publicly available dataset constructed by Dehejia and Wahba7, which includes both the experimental data and observational population-based controls. This arrangement allows researchers to compare effect estimates from the randomized data to estimates that might have been generated by comparing outcomes for individuals participating in the program to general population controls (an observational study design), had the randomized trial not been executed. The experimental data include 185 participants and 260 controls. The observational controls were drawn from Westat’s Matched Current Population Survey-Social Security Administration file containing 15,992 general population controls. Additional information on the NSW program and the dataset used in this study are available elsewhere7,40.

Estimation methods

We estimated the average effect of treatment on the treated (ATT) in both the simulated data and applied example, by applying seven matching approaches, three analysis methods, and two estimation approaches (parametric and semi-parametric). The ATT estimand is the average difference between potential outcomes2 for the exposed units under exposure, and the exposed units had they been unexposed. The methods estimate the ATT by comparing the average outcome in the exposed group to the average outcome in a comparison group of unexposed units that has been selected, weighted, or otherwise adjusted to approximate the covariate distribution of the exposed units. The matching and analysis methods, described in greater detail below, relied on estimation of the treatment mechanism, or propensity score25, and the outcome model. We estimated these models in two ways: First, parametrically, by assuming a functional form (main terms only) and applying linear or logistic regression, and second, semi-parametrically, by applying the SuperLearner ensemble machine learning algorithm35. While parametric approaches are standard and far more common in practice, recent evidence suggests that semi-parametric approaches may reduce bias and increase efficiency35,41,42.

When analyzing the simulated data, we assumed parametric model forms that were misspecified given the data generating mechanism. This is because correct specification of the model form is unlikely in applied settings where the true underlying data generating mechanisms are unknown. Hence, the analysis aligns with what is done in practice. It further provides an opportunity to examine potential gains from semi-parametric estimation when the model form is not known.

Matching methods

Using the framing of Ho et al.9, we considered each matching procedure as a form of pre-processing, after which the ATT could be estimated by calculating the difference of mean outcomes between treated and controls units (a “naïve” analysis) or applying further analysis techniques. The matching approaches were: one-to-one greedy nearest neighbor (NN) matching with replacement43; one-to-one optimal nearest neighbor (optimal) matching without replacement44; subclassification (with ten propensity score quantiles in simulations and five quantiles in the applied example)24; full matching4,45; inverse probability of treatment weighting (IPTW)25,46; and genetic matching38. A detailed description of each method can be found in the Supporting Information. We also considered unmatched data.

The matching methods relied on measures of the distance between covariate values in the treatment and control groups. In all cases except genetic matching, this distance metric was the propensity score, estimated parametrically and semi-parametrically, as described above. In the case of genetic matching, the distance measure was the generalized Mahalanobis metric, as recommended38. Results for the parametric and semi-parametric matching approaches were very similar. For parsimony, we present the parametric version in the main text and the results for all tested simulations in SI Tables S1–S3.

Analysis methods

Estimators of the ATT are available that adjust for covariates based on the treatment mechanism, the outcome mechanism, or both (also known as double-robust methods). After a matching approach, which utilizes the treatment mechanism, is applied, an analysis approach is used to compare outcomes in the matched samples. We considered three outcome analyses: a naïve analysis, g-computation46, and targeted minimum loss-based estimation (TMLE)47. G-computation is a maximum likelihood based substitution estimator of the G-formula. It is implemented by using regression to model the outcome as a function of the exposure and covariates. The fitted model is then used to predict the outcome under different exposure scenarios to be compared. To estimate the ATT, we average the difference between the model predictions for all exposed units had they been unexposed and the model predictions for all exposed units had they been exposed. Typically, g-computation relies on a parametric model. TMLE for the ATT is general two-stage efficient substitution estimator. In the first stage, we model the outcome as a function of the exposure and covariates. The second stage is a bias reduction step that iteratively updates the parameter estimates using models of the exposure given covariates (the treatment mechanism). This updating step also makes the estimator double-robust, asymptotically normal, and asymptotically efficient. TMLE is typically implemented with semi-parametric machine learning methods. Treatment and outcome models for TMLE were estimated using parametric and semi-parametric approaches, as described above. For g-computation, we used only parametric estimation, as inference using semi-parametric g-computation has not been established.

Balance and performance metrics

For each matched sample we calculated a large set of balance metrics recommended in the literature11,31,38,39. These included: the mean, median, and maximum absolute standardized mean difference (ASMD) in covariate values between the treated and control groups across all eight covariates, the percent of covariates with ASMD less than 20%, 10%, 5%, and 1%, the ASMD of the prognostic score (a formulation of the disease risk score39), the ASMD of the propensity score, and the absolute mean t-statistic comparing covariate values between the treatment and control groups.

The propensity scores and prognostic scores used to measure balance were distinct from those used to estimate effects. In the simulated data, the propensity scores and prognostic scores used to measure balance were quantified using generalized linear regression and the known treatment and outcome model forms (see SI Text). In the NSW data, the propensity scores and prognostic scores used to measure balance were estimated using main terms logistic regression and SuperLearner. In the NSW applied example, the propensity scores and prognostic scores were indistinguishable across the two estimation procedures, so only the logistic regression results are reported. We also present plots of the distributions of propensity scores by treated and control groups to illustrate the degree of covariate support in the different scenarios25,48.

For each data generating mechanism, we created 1,000 simulated datasets and evaluated the performance of each matching and analysis combination. The primary measure of performance was the mean squared error (MSE = bias2 + variance), where lower MSE indicates a better estimate. We also compared the average percent bias and the variance. We present all three performance measures for each of the estimators considered. To estimate the bias, variance, and MSE of the effect estimates in the applied example and to account for stochastic elements of the matching and machine learning algorithms, we analyzed 500 bootstrapped datasets49,50,51.

Additional information on the simulations, applied example, matching methods, and analyses are available in SI Text. All analyses were conducted in R version 3.2.052. Matching was implemented using the MatchIt package53, and its dependent packages. TMLE was implemented using a modified version of the tmlecte package (to incorporate matching weights) which is available on Github54.

Results

Simulation results

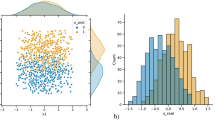

The distributions of the propensity score, by treated and control units, are presented in Fig. 1. The plots correspond to the scenarios of good support (substantial overlap of the propensity scores for treated and control) and poor support (minimal overlap). The probability of treatment ranged between 0.093 and 0.776 in the good support scenario and between <0.001 and >0.999 in the poor support scenario. Distributions and results for the medium support scenario fell in between those of the good and poor support scenarios and are presented in SI Text, SI Fig. S1, and SI Table S2.

(a) Density of estimated propensity scores for treated and control units in good support scenario. (b) Density of estimated propensity scores for treated and control units in poor support scenario. The plots illustrate substantial overlap of the propensity scores for treated and control units in the good support scenario and minimal overlap in the poor support scenario. The probability of treatment ranged between 0.093 and 0.776 in the good support scenario and between <0.001 and >0.999 in the poor support scenario. Distributions and results for the medium support scenario fell in between those of the good and poor support scenarios and are presented in SI Text, SI Fig. S1, and SI Table S2.

Selected balance metrics for the good and poor support scenarios are presented in Table 1 (see SI Table S4 for all support scenarios and balance metrics). Across all of the simulation scenarios, genetic matching consistently resulted in the best covariate balance, according to all balance metrics. However, when combined with analysis methods, genetic matching did not generally result in the ATT estimate with the lowest MSE.

In the good support scenario, many matching and analysis methods performed well and there were few substantive differences between estimators in terms of the MSE (Table 2). Across the 28 methods, 68% had MSE less than 0.01 and 75% were less than 1% biased. In terms of MSE, full matching with parametric TMLE performed best, and five of the top ten methods involved double robust analysis (TMLE with parametric or semi-parametric estimation). Subsequent analysis after matching generally improved performance, compared with matching alone. Several of the top-performers also involved no matching at all. In terms of MSE, full matching, IPTW, subclassification, and optimal matching were higher performing matching methods, while genetic and greedy NN matching were lower performing matching methods. Semi-parametric estimation for TMLE did not substantively improve performance over parametric TMLE. When considering only bias, full, NN, and genetic matching were high performers. The lowest bias was achieved for NN matching paired with semi-parametric double robust analysis.

In the poor support scenario (Table 3), fewer methods performed well. Across the 28 methods, 7% had MSE less than 0.01 and 29% were less than 1% biased. Again, full matching with TMLE was the top-performer in terms of MSE, and the top five methods involved double robust analysis. However, in this scenario, semi-parametric estimation for TMLE notably improved performance over parametric TMLE, except when paired with subclassification. There was again a clear benefit to utilizing matching and subsequent analysis together, as methods with no matching or naïve analysis generally performed poorly. An exception to this pattern was no matching with semi-parametric TMLE, which performed relatively well and had the lowest bias. In contrast with the good support scenario, genetic matching was a mid- to high-performer. The top four methods with the lowest bias involved semi-parametric TMLE, making this approach one of the most consistent performers for bias reduction across all scenarios.

Employment intervention results

As an applied example, we compared the experimental results from the National Supported Work (NSW) employment program to those estimated using observational controls. Based on the experimental data, the NSW program increased real earnings of those in the treatment group by an average of $1,794 (comparison of means). We treat this quantity as the true effect of the program. In analyses combining the experimental treatment group with observational control data, the level of covariate support before matching was extremely poor. The distribution of propensity scores by treated and control units are presented in Fig. 2. Propensity scores ranged between <0.001 and 0.488, and were substantially skewed towards 0 for the control group. These patterns indicate that the baseline characteristics of the control individuals are very different from those who participated in the program; this example is most similar to the poor support simulation scenario.

The plot illustrates extremely poor overlap of the propensity scores for treated and control units in the applied example. Propensity scores ranged between <0.001 and 0.488, and were substantially skewed towards 0 for the control group. These patterns indicate that the baseline characteristics of the control individuals are very different from those who participated in the program; this example is most similar to the poor support simulation scenario.

As in the simulations, genetic matching generally resulted in the best covariate balance in the NSW observational data, but not for all metrics (see Table 4 for summary; see SI Table S5 for all metrics). Six of ten metrics indicated that genetic matching generated the best balance, while three others indicated that IPTW generated the best balance, and one indicated that full matching achieved the best balance (SI Table S5). In cases where genetic matching did not achieve the best balance, the metrics were generally very close to those of the best method. As in simulations, genetic matching did not result in the matching and analysis combination with the lowest MSE.

The unadjusted estimate of the ATT in the NSW observational data was -$8,526, dramatically different from the experimental result of $1,794. The success of matching and analysis combinations in recovering the experimental result varied substantially (see Fig. 3 and SI Table S6). As in the simulations, the methods with the lowest MSE were full matching, genetic matching, optimal matching or IPTW paired with semi-parametric TMLE. While semi-parametric TMLE was involved in the top five performing methods, full, genetic, and optimal matching paired with other analyses also performed well. Subclassification and greedy nearest neighbor matching fared poorly. Interestingly, analyses involving no matching were among both the best and the worst, depending on the analysis with which they were paired. Genetic matching with g-computation was the least biased method. Almost all methods underestimated the experimental result, suggesting a consistent residual bias that may be the result of unmeasured covariates. The confidence intervals for all ATT estimates were wide, and few estimates excluded the null.

Colored points represent point estimates of the effect of treatment on the treated (ATT), with corresponding 95% error bars. The unadjusted estimate of the ATT in the NSW observational data was -$8,526, dramatically different from the experimental result of $1,794 (indicated by the grey line). The success of matching and analysis combinations in recovering the experimental result varied substantially. Almost all methods underestimated the experimental result, suggesting a consistent residual bias that may be the result of unmeasured covariates. The confidence intervals for all ATT estimates were wide, and few estimates excluded the null. IPTW: inverse probability of treatment weighting. TMLE: targeted minimum loss-based estimation. SL: using SuperLearner for semi-parametric estimation.

Discussion

We evaluated the performance of a wide variety of matching and analysis methods in estimating the ATT in a simulation study and applied example. The best-performing method depended on the degree of covariate support. When support was good, many matching and analysis approaches generated estimates with minimal bias and low variance. The high performance of double robust methods without matching (TMLE) indicated that matching may not provide a meaningful advantage when support is good. In contrast, when support was poor, fewer methods performed well, and the benefits of combining matching with double robust analysis, especially when incorporating machine learning for estimation, became more prominent. The combination of full matching and semi-parametric TMLE was the most consistently high-performing method in both the simulations and the applied example. Therefore, we expect this combination would perform well in other scenarios as well. The advantages of these methods in the poor support scenario are of particular interest, because settings of poor support are common in applied, observational research55,56,57.

While we studied many novel matching and analysis combinations that had not been studied previously, some of our findings are consistent with previous research. Schafer and Kang58,59 and Radice and colleagues34 used simulations to compare several different matching and analysis methods, and found that in good support scenarios, many analyses reported similar bias, variance, and MSE, but under poor support, there was significant heterogeneity in performance. Radice and colleagues also found that genetic matching achieved better balance than several other matching methods. At least two other studies29,30 found that full matching resulted in lower bias, but to our knowledge, none have examined the relationship with MSE. Estimators that combine matching with further adjustment60,61,62,63 have also been shown to offer performance benefits in previous research.

Previous research has demonstrated advantages of double robust analysis and semi-parametric estimators32,36,47,64, but few studies have evaluated how machine learning may improve estimates of effect when combined with any matching method38,41,42, let alone a broad range of matching methods. We are the first to systematically combine double robust methods with matching techniques and to consider semi-parametric estimation at every stage. Our results make an important contribution to the literature, because they indicate that these novel combinations may offer additional performance advantages over any individual analytic technique previously assessed. We found that use of the matching method with the best covariate balance does not necessarily lead to the estimator with the best overall performance, as measured by the MSE. Across simulations and an applied example, genetic matching achieved the best balance, but analyses that included genetic matching had larger MSE than the top-performing method in all cases. Two previous studies found that genetic matching achieved both better balance and lower MSE than other propensity score-based methods34,38, but they compared a limited number of estimators, and did not consider matching in combination with any subsequent analysis. We add to a small number of studies that caution against using of covariate balance as the only criteria for selecting a matching approach (see for example28,41,65).

Much of the previous literature on matching emphasized covariate balance for minimizing bias rather than MSE (see for example43,62,66,67,68). Indeed, in our simulations, we found that methods with better covariate balance were generally less biased. If a researcher’s goal is to minimize MSE, further investigation is needed to identify additional diagnostics that are more closely related to the combination of bias and variance. As might be expected, the balance measures we examined had little to do with variance, and hence, were imperfect indicators of MSE. While the balance metrics we considered are common in the matching literature11,31,38,39, most involve comparing single summary measures of univariate distributions. Ideal measures of balance would allow one to compare the full multivariate distributions of the covariates, and ideal diagnostics for MSE would incorporate both balance in multivariate distributions and efficiency of different estimators.

Some matching methods with good balance may not perform as well in terms of MSE due to differences in effective sample size. Because some matching methods drop observations, while others keep all observations and reweight, they may have very different effective sample sizes. Genetic matching is designed to optimize covariate balance, not MSE, and in process may drop observations and thereby sacrifice statistical precision5,9,69. In contrast, full matching retains all observations while maintaining covariate balance that is only slightly worse than that of genetic matching. This tradeoff between balance, bias, and statistical precision must be considered carefully given the specific goals of each unique study.

In this study, we identified full matching combined with double robust analysis and semi-parametric estimation as a high-performing estimator across numerous scenarios. However, it is possible that in other scenarios, the best-performing methods would be different. For example, the performance of parametric models will depend on the degree of model misspecification; in this work, we examined one type of misspecification, but future work should investigate how performance is altered under varying misspecification scenarios. The variable results of previous comparisons of matching methods attest that there is no single approach that best fits all scenarios (see for example38,29,32). Our study, along with others (for example60,61), illustrates the utility of simulation in determining the optimal analysis. In any applied study, the true effect is unknown, but researchers can use simulations to inform the choice of analytic method, supplementing general guidelines, which may be based on scenarios quite different from the one at hand. Ideally, the choice of matching and analysis for every study would be informed by simulation that reflects the setting and study question. The use of simulation to inform methodological choices is not new43,62,66. However, it is rarely used to inform choices in applied research. Fortunately, recognition of the value of simulation studies is increasing, and implementing simulations is becoming increasingly accessible with the release of new R packages70 and web-based software with graphical user interfaces71,72. The use of these tools can help researchers to make more rigorous and tailored decisions about study design and analysis approaches.

Our study has several limitations. We considered a broad range of commonly applied matching techniques, together with an array of analysis methods, with particular focus on incorporation of double robust analysis and semi-parametric estimation approaches. However, these are a subset of the possible matching and analysis combinations one could consider. Likewise, there are other balance metrics or estimands we could have considered. For example, we might have considered coarsened exact matching, augmented IPTW, the joint significance and pseudo R2 for measuring balance, estimators of the average treatment effect (ATE), or estimators for longitudinal data structures. However, the subset we have chosen clearly demonstrates the advantages of semi-parametric and double robust methods not previously examined, and further, illustrates that balance is not the best indicator of estimator performance in terms of MSE. There are no agreed upon best measures of balance11, and those we did examine displayed consistent results. A simulation approach similar to the one we have taken would be well-suited to identify optimal approaches for other matching methods, balance metrics, estimands, or data generating mechanisms.

To estimate variance, we utilized non-parametric bootstrapping. This approach is widely used to approximate the distribution of point estimates and to quantify uncertainty49. However, minimal previous scientific work has examined the validity of bootstrapping for inference for the unique combinations of matching analysis procedures included in this paper. Further methodological development is needed in the areas of variance estimation for estimators involving matching. Simulated data are unlikely to possess the unusual distributional properties sometimes present in real data. For this reason, some simulation studies employ covariates from real data and simulate only the outcome61. However, our simulation results are bolstered by the use of real data from the evaluation of the NSW employment training program; the similarity in results between the simulations and applied example increase our confidence that the simulation results are relevant to real world scenarios.

In this study, we identified optimal combinations of matching and analysis methods for estimates of the ATT. We presented a simulation study and applied example to quantify the performance of a range of combinations of matching and analysis approaches. Our findings demonstrated the superior performance of novel combinations of matching with double robust analysis and semi-parametric estimation. In particular, full matching combined with semi-parametric TMLE was a consistent top-performer, particularly when support was poor. In addition, we concluded that selecting an approach based on the currently recommended balance metrics may not lead to the least biased and most efficient estimate. We call for the development of more sophisticated balance metrics and other diagnostics that better align with performance in terms of MSE and for the increased use of tailored simulation to inform the choice of analytic methods. This would support the systematic selection of methods with better performance, rather than the methods that are most familiar or easy to implement. Such an approach has the potential to improve the rigor and quality of studies across a broad array of disciplines.

Additional Information

How to cite this article: Colson, K. E. et al. Optimizing matching and analysis combinations for estimating causal effects. Sci. Rep. 6, 23222; doi: 10.1038/srep23222 (2016).

References

Rubin, D. B. Bayesian inference for causal effects: the role of randomization. Ann. Stat. 6, 34–58 (1978).

Rubin, D. B. Estimating causal effects of treatments in randomized and nonrandomized studies. J. Educ. Psychol. 66, 688–701 (1974).

Pearl, J. Causality: Models, reasoning and inference applications (Cambridge University press, 2000).

Rosenbaum, P. R. Observational studies (Springer-Verlag, 2002).

Rosenbaum, P. R. & Rubin, D. B. The bias due to incomplete matching. Biometrics 41, 103–116 (1985).

Rosenbaum, P. R. & Rubin, D. B. Constructing a control group using multivariate matched sampling methods that incorporate the propensity score. Am. Stat. 39, 33 (1985).

Dehejia, R. H. & Wahba, S. Causal effects in nonexperimental studies: reevaluating the evaluation of training programs. J. Am. Stat. Assoc. 94, 1053–1062 (1999).

Morgan, S. L. & Harding, D. J. Matching estimators of causal effects: prospects and pitfalls in theory and practice. Sociol. Methods Res. 35, 3–60 (2006).

Ho, D. E., Imai, K., King, G. & Stuart, E. A. Matching as nonparametric preprocessing for reducing model dependence in parametric causal inference. Polit. Anal. 15, 199–236 (2007).

Caliendo, M. & Kopeinig, S. Some practical guidance for the implementation of propensity score matching. J. Econ. Surv. 22, 31–72 (2008).

Stuart, E. A. Matching methods for causal inference: a review and a look forward. Stat. Sci. 25, 1–21 (2010).

Mithas, S., Almirall, D. & Krishnan, M. S. Do CRM systems cause one-to-one marketing effectiveness? Stat. Sci. 21, 223–233 (2006).

Imai, K. Do get-out-the-vote calls reduce turnout? The importance of statistical methods for field experiments. Am. Polit. Sci. Rev. 99, 283–300 (2005).

Gilligan, M. J. & Sergenti, E. J. Do UN interventions cause peace? Using matching to improve causal inference. Q. J. Polit. Sci. 3, 89–122 (2008).

Peters, D. J., Hochstetler, A., DeLisi, M. & Kuo, H.-J. Parolee recidivism and successful treatment completion: comparing hazard models across propensity methods. J. Quant. Criminol. 31, 149–181 (2014).

Hedderman, C. & Jolliffe, D. The impact of prison for women on the edge: paying the price for wrong decisions. Vict. Offenders 10, 152–178 (2015).

Turney, K. & Haskins, A. R. Falling behind? Children’s early grade retention after paternal incarceration. Sociol. Educ. 87, 241–258 (2014).

Kirk, D. S. & Sampson, R. J. Juvenile arrest and collateral educational damage in the transition to adulthood. Sociol. Educ. 86, 36–62 (2013).

Harding, D. J. Counterfactual models of neighborhood effects: the effect of neighborhood poverty on dropping out and teenage pregnancy. Am. J. Sociol. 109, 676–719 (2003).

Kogan, I., Matković, T. & Gebel, M. Helpful friends? Personal contacts and job entry among youths in transformation societies. Int. J. Comp. Sociol. 54, 277–297 (2013).

Ye, Y. & Kaskutas, L. A. Using propensity scores to adjust for selection bias when assessing the effectiveness of Alcoholics Anonymous in observational studies. Drug Alcohol Depend. 104, 56–64 (2009).

Hoffmann, J. P. & Warnick, E. Do family dinners reduce the risk for early adolescent aubstance use? A propensity score analysis. J. Health Soc. Behav. 54, 335–352 (2013).

Cheng, Y. W., Hubbard, A., Caughey, A. B. & Tager, I. B. The association between persistent fetal occiput posterior position and perinatal outcomes: an example of propensity score and covariate distance matching. Am. J. Epidemiol. 171, 656–663 (2010).

Rosenbaum, P. R. & Rubin, D. B. Reducing bias in observational studies using subclassification on the propensity score. J. Am. Stat. Assoc. 79, 516 (1984).

Rosenbaum, P. R. & Rubin, D. B. The central role of the propensity score in observational studies for causal effects. Biometrika 70, 41–55 (1983).

Cochran, W. G. The effectiveness of adjustment by subclassification in removing bias in observational studies. Biometrics 24, 295–313 (1968).

Rubin, D. B. The design versus the analysis of observational studies for causal effects: parallels with the design of randomized trials. Stat. Med. 26, 20–36 (2007).

Arceneaux, K., Gerber, A. S. & Green, D. P. A cautionary note on the use of matching to estimate causal effects: an empirical example comparing matching estimates to an experimental benchmark. Sociol . Methods Res. 39, 256–282 (2010).

Gu, X. S. & Rosenbaum, P. R. Comparison of multivariate matching methods: structures, distances, and algorithms. J. Comput. Graph. Stat. 2, 405–420 (1993).

Stuart, E. A. & Green, K. M. Using full matching to estimate causal effects in nonexperimental studies: examining the relationship between adolescent marijuana use and adult outcomes. Dev. Psychol. 44, 395–406 (2008).

Harder, V. S., Stuart, E. A. & Anthony, J. C. Propensity score techniques and the assessment of measured covariate balance to test causal associations in psychological research. Psychol. Methods 15, 234–249 (2010).

Lunceford, J. K. & Davidian, M. Stratification and weighting via the propensity score in estimation of causal treatment effects: a comparative study. Stat. Med. 23, 2937–2960 (2004).

Austin, P. C. The performance of different propensity score methods for estimating marginal odds ratios. Stat. Med. 26, 3078–3094 (2007).

Radice, R. et al. Evaluating treatment effectiveness in patient subgroups: a comparison of propensity score methods with an automated matching approach. Int. J. Biostat. 8 (2012).

van der Laan, M. J., Polley, E. C. & Hubbard, A. E. Super learner. Stat. Appl. Genet. Mol. Biol. 6 (2007).

van der Laan, M. J. & Rose, S. Targeted learning: causal inference for observational and experimental data (Springer New York, 2011).

Kreif, N., Gruber, S., Radice, R., Grieve, R. & Sekhon, J. S. Evaluating treatment effectiveness under model misspecification: a comparison of targeted maximum likelihood estimation with bias-corrected matching. Stat. Methods Med. Res. 0962280214521341 10.1177/0962280214521341 (2014).

Diamond, A. & Sekhon, J. S. Genetic matching for estimating causal effects: a general multivariate matching method for achieving balance in observational studies. Rev. Econ. Stat. 95, 932–945 (2012).

Stuart, E. A., Lee, B. K. & Leacy, F. P. Prognostic score-based balance measures can be a useful diagnostic for propensity score methods in comparative effectiveness research. J. Clin. Epidemiol. 66, S84–S90.e1 (2013).

LaLonde, R. J. Evaluating the econometric evaluations of training programs with experimental data. Am. Econ. Rev. 76, 604–620 (1986).

Lee, B. K., Lessler, J. & Stuart, E. A. Improving propensity score weighting using machine learning. Stat. Med. 29, 337–346 (2010).

Pirracchio, R., Petersen, M. L. & van der Laan, M. Improving propensity score estimators’ robustness to model misspecification using super learner. Am. J. Epidemiol. 181, 108–119 (2015).

Rubin, D. B. Matching to remove bias in observational studies. Biometrics 29, 159–183 (1973).

Rosenbaum, P. R. Optimal matching for observational studies. J. Am. Stat. Assoc. 84, 1024–1032 (1989).

Rosenbaum, P. R. A characterization of optimal designs for observational studies. J. R. Stat. Soc. Ser. B Methodol. 53, 597–610 (1991).

Hernán, M. A. & Robins, J. M. Estimating causal effects from epidemiological data. J. Epidemiol. Community Health 60, 578–586 (2006).

van der Laan, M. J. & Rubin, D. Targeted maximum likelihood learning. Int. J. Biostat. 2 (2006).

Petersen, M. L., Porter, K. E., Gruber, S., Wang, Y. & van der Laan, M. J. Diagnosing and responding to violations in the positivity assumption. Stat. Methods Med. Res. 21, 31–54 (2012).

Freedman, D. Statistical models: theory and practice (Cambridge University press, 2009).

Bouckaert, R. R. & Frank, E. Advances in knowledge discovery and data mining (eds. Dai, H., Srikant, R. & Zhang, C. ) 3–12 (Springer Berlin Heidelberg, 2004).

Dietterich, T. Machine learning research: four current directions. AI Mag. 18, 97–136 (1997).

R Core Team. R: a language and environment for statistical computing (R Foundation for Statistical Computing, 2015).

Ho, D., Imai, K., King, G. & Stuart, E. MatchIt: nonparametric preprocessing for parametric causal inference. J. Stat. Softw. 42 (2011).

Lendle, S. tmlecte. Available at https://github.com/lendle/tmlecte (Accessed: 15th December 2015).

Leal, C., Bean, K., Thomas, F. & Chaix, B. Are associations between neighborhood socioeconomic characteristics and body mass index or waist circumference based on model extrapolations? Epidemiol. Camb. Mass 22, 694–703 (2011).

Hearst, M. O., Oakes, J. M. & Johnson, P. J. The effect of racial residential segregation on black infant mortality. Am. J. Epidemiol. 168, 1247–1254 (2008).

Ahern, J. et al. Navigating non-positivity in neighbourhood studies: an analysis of collective efficacy and violence. J. Epidemiol. Community Health 67, 159–165 (2013).

Schafer, J. L. & Kang, J. Average causal effects from nonrandomized studies: a practical guide and simulated example. Psychol. Methods 13, 279–313 (2008).

Robins, J., Sued, M., Lei-Gomez, Q. & Rotnitzky, A. Comment: performance of double-robust estimators when ‘inverse probability’ weights are highly variable. Stat. Sci. 22, 544–559 (2007).

Rubin, D. B. & Thomas, N. Combining propensity score matching with additional adjustments for prognostic covariates. J. Am. Stat. Assoc. 95, 573–585 (2000).

Imai, K. & van Dyk, D. A. Causal inference with general treatment regimes. J. Am. Stat. Assoc. 99, 854–866 (2004).

Rubin, D. B. The use of matched sampling and regression adjustment to remove bias in observational studies. Biometrics 29, 185–203 (1973).

Rubin, D. B. Using multivariate matched sampling and regression adjustment to control bias in observational studies. J. Am. Stat. Assoc. 74, 318–328 (1979).

Austin, P. C. The performance of different propensity-score methods for estimating differences in proportions (risk differences or absolute risk reductions) in observational studies. Stat. Med. 29, 2137–2148 (2010).

Groenwold, R. H. H. et al. Balance measures for propensity score methods: a clinical example on beta-agonist use and the risk of myocardial infarction. Pharmacoepidemiol. Drug Saf. 20, 1130–1137 (2011).

Cochran, W. G. & Rubin, D. B. Controlling bias in observational studies: a review. Sankhyā Indian J. Stat . Ser. 1961–2002 35, 417–446 (1973).

Rubin, D. B. Multivariate matching methods that are equal percent bias reducing, I: some examples. Biometrics 32, 109–120 (1976).

Rubin, D. B. Multivariate matching methods that are equal percent bias reducing, II: maximums on bias reduction for fixed sample sizes. Biometrics 32, 121–132 (1976).

Cohen, J. Statistical power analysis for the behavioral sciences (Academic Press, 1988).

Sofrygin, O., van der Laan, M. J. & Neugebauer, R. simcausal R package: conducting transparent reproducible simulation studies of causal effect estimation with complex longitudinal data. Available at https://cran.r-project.org/web/packages/simcausal/vignettes/simcausal_vignette.pdf. (Accessed: 15th December 2015).

Ratanatharathorn, A., Mooney, S. & Rundle, A. Match-o-matic. Available at https://ar3054.shinyapps.io/Match-o-matic. (Accessed: 15th December 2015).

Ahern, J., Zimmerman, S. & Colson, K. E. Study Simulator. Available at http://www.studysimulator.com/. (Accessed: 15th December 2015).

Acknowledgements

This work was supported by the National Institutes of Health Director’s New Innovator Award Program DP2 HD080350, National Institute of Mental Health grant R01MH099010, National Institute of Allergy and Infectious Disease grant 5R01AI074345–07, the Robert Wood Johnson Foundation Health & Society Scholars program, and the UC Berkeley Committee on Research. The authors wish to thank Samuel Lendle and Erin LeDell for their technical support.

Author information

Authors and Affiliations

Contributions

K.E.C., K.E.R., S.C.Z., D.E.G., E.A.S., M.J.V. and J.A. designed the research, discussed the results, and edited the manuscript; K.E.C., K.E.R., S.C.Z., D.E.G. and J.A. performed research; K.E.R., S.C.Z., E.A.S., M.J.V. and J.A. contributed new reagents/analytic tools; K.E.C. analyzed data; and K.E.C. and J.A. wrote the paper.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Colson, K., Rudolph, K., Zimmerman, S. et al. Optimizing matching and analysis combinations for estimating causal effects. Sci Rep 6, 23222 (2016). https://doi.org/10.1038/srep23222

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/srep23222

This article is cited by

-

Empowering women through multifaceted interventions: long-term evidence from a double matching design

Journal of Population Economics (2024)

-

The effect of carbon fertilization on naturally regenerated and planted US forests

Nature Communications (2022)

-

What Can the Millions of Random Treatments in Nonexperimental Data Reveal About Causes?

SN Computer Science (2022)

-

Optimal subset selection for causal inference using machine learning ensembles and particle swarm optimization

Complex & Intelligent Systems (2021)

-

Nearest Neighbour Propensity Score Matching and Bootstrapping for Estimating Binary Patient Response in Oncology: A Monte Carlo Simulation

Scientific Reports (2020)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.