Abstract

Scannerless time-of-flight three-dimensional imaging, the successor to raster scanning three-dimensional imaging, relies on large-scale detector arrays to obtain high pixel resolution; however, manufacturing limitations lead to a bottleneck in imaging resolution. Here, we report a methodology for laser imaging using code division multiple access, which involves three key steps. Optical encoding is carried out for a pulsed laser to generate space-time encoded beams for projection. Optical multiplexing subdivides the backscattered light signals and multiplexes them to a downscaled avalanche-photodiode array. Subpixel decoding decodes the digitized encoded full waveforms and decomposes the features of all subpixels. Accordingly, we design a prototype based on a 64-order encoder and a 4-element avalanche-photodiode array and conduct an outdoor experiment. We demonstrate that the system is capable of obtaining 256 pixels per frame in push-broom mode and reconstruct a three-dimensional image with centimetre-level lateral resolution and range precision at a distance of ∼112 m.

Similar content being viewed by others

Recent years have witnessed remarkable development and extensive applications for light detection and ranging (LIDAR). Currently, incipient point-by-point scanning LIDAR (i.e., whisk-broom LIDAR) is being gradually replaced by scannerless LIDAR for improved instantaneous performance1,2,3,4 (Supplementary Fig. 1 and Supplementary Note 1). Moreover, new laser imaging mechanisms for scannerless LIDAR are continuously emerging5,6,7. Figuratively, while a palm is used to replace a finger for instant sensing of a full view of a target, the optimal management of touch spots for the palm is further investigated for the perception of every detail with increased accuracy. Among existing mechanisms, the time-of-flight (TOF) measurement enables direct, accurate and rapid imaging performance8,9. In general, this mechanism-based system, commonly called flash LIDARs, uses a highly sensitive avalanche photodiode (APD) array to capture backscattered light and a high-speed readout integrated circuit to obtain depth information10,11.

Advances in large-scale focal-plane arrays and their seamless integration into LIDAR systems have effectively enhanced the resolution for laser imaging (Supplementary Table 1). The record for the largest array scale of 320 × 256 will soon be broken, allowing over 80,000 pixels to be retrieved simultaneously without scanning12,13,14. However, the use of the conventional TOF measurement method, whereby imaging resolution relies on the detector scale, hampers potential improvements in imaging performance. This is the reason why the Lincoln Laboratory has only improved the imaging resolution to 256 × 64 over two decades, which is still far less than the expected 300,000-pixel resolution15,16,17,18,19,20. Simply upgrading the detector scale to improve the imaging resolution not only increases the challenges of the hardware design and the cost but also hinders system miniaturization. Therefore, the traditional TOF-based mechanism is in urgent need of innovation via technical means to solve the contradiction between a small detector scale and high resolution.

For years, code-division multiple-access (CDMA) has been commercially successful in the field of mobile communications. By encoding the information from all users within the entire spectrum and time slots of a single channel and distinguishing different users via their own unique codes, CDMA possesses incomparably superior aspects relative to other multiple-access methods21. In this context, effective application of CDMA to the field of optical remote sensing and successful resolution of current problems are unprecedented and significant. Intuitively, it seems inexplicable to claim the existence of similarities between mobile communications and optical remote sensing. In fact, both aspects have extremely similar demands for the breadth and quality of information transmission. Regarding the former, high channel utilization and a lower bit error rate are needed to accommodate more users and ensure undistorted communications with limited base stations. For the latter, a high-pixel resolution and low-range deviation are urgently required for high-resolution and precise optical imaging with limited APD elements. From this perspective, the possibility remains to explore (with great potential) the applications of CDMA to optical remote sensing. The latest research preliminarily provides a scheme for applying the CDMA technique to LIDAR22. However, this application is restricted to scanning LIDAR to discriminate and separate the scanning echo signals under high-frame-rate conditions; the application does not fully implement the CDMA technique for high-resolution imaging of scannerless LIDAR. Therefore, a huge potential remains for optimal application of the CDMA technique to optical imaging.

Here, we present a subpixel three-dimensional (3D) laser imaging system. Substituting pure pulsed laser illumination, we generate pulse-encoded illumination to encode objective pixels using orthogonal codes for their distinguishability. Subsequently, optical multiplexing is operated for subpixelization and multiplexing of these subpixels. Consequently, we compress the transmission channels and thus downscale the required APD array. A subpixel decoding algorithm is specifically proposed and applied to encoded full-waveforms obtained from different APD elements; thus, the range profiles for all subpixels are recovered with high accuracy. Overall, we adopt the CDMA technique to surpass the traditional TOF mechanism with regards to one-to-one correspondence between the detectors and objective pixels and to ultimately realize high-resolution 3D imaging with a downscaled APD array. We conduct an outdoor experiment at a distance of ~112 m and demonstrate that image resolutions of 256 pixels per frame with centimetre-level precision can be realized merely by using a 4-element APD array. As a corollary, massive pixel resolution without scanning not only constitutes the domain of a large detector array but can also be realized accurately with a small one instead.

Results

Experimental configuration

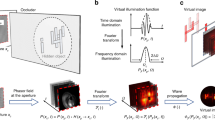

The experimental configuration for a CDMA-based subpixel 3D imaging system is illustrated in Fig. 1. Consistent with a common flash LIDAR, the system uses a beam expander to broaden the illumination range for multi-pixel detection under the scannerless condition and a multi-element APD array to record multi-channel backscattered echoes and resolve the depth information therein. The key change lies in the introduction of an N-order optical encoder and multiplexer into the transmitter and receiver. In virtue of these two devices, our goal is to reconstruct a precise 3D image of the target with a resolution of M × N pixels by using a mere M-element APD array.

CDMA-based subpixel 3D imaging system. Encoded linear beams generated from pulsed lasers by a beam expander and shaper set and an N-order optical encoder are projected onto a multi-story building in a push-broom mode, which illuminates N encoded sections. The backscattered light, collected and multiplexed into M-channel encoded light by a multiplexer, is then captured by an M-element avalanche photodiode (APD) array. The digitized signals are used to reconstruct M × N-pixel-per-frame range and intensity images

To improve the detection efficiency and better the adaptation to moving platforms relative to the whisk-broom system, our system is designed to work in a push-broom mode. The push-broom system produces a linear laser beam with a large instantaneous field of view (FOV) orthogonal to the moving direction, and sweeps up the surface with continuous forward movement23. To this end, in the prototype we designed (Supplementary Fig. 2 and Supplementary Note 2), a 532-nm pulsed laser with a pulse repetition frequency of 10 kHz and a pulse width of 10 ns is expanded and shaped to a linear laser beam with a flat-top energy distribution to uniformly cover a linear region. The lens set we design to realize beam expansion and shaping consists of an aspherical lens, a pair of conical lenses and a cylindrical lens. Herein, the conical lenses, which redistribute energy from the original Gaussian beams, are used to realize uniform detection and thus reduce the loss probability for the pixels. The linear beams are projected and collected, respectively, by the projection and collecting lenses, both of which adopt Schneider Symmar-S camera lenses with a focal length of 210 mm. To track the projection moment, a small fraction of the projection beam is simultaneously selected to trigger the digitizer. A time-to-digital converter module is integrated into the digitizer to implement fine corrections for inherent temporal fluctuations, which contribute to a time precision of dozens of picoseconds. Furthermore, to discard the redundant data, data acquisition is set to start after a fixed flying time and remain in operation within a finite period (Supplementary Figs. 7, 8 and 9 and Supplementary Note 5). The sampling rate is set to 1 Gsps for complete signal retrieval. All the digitized signals are directly transmitted to a computer terminal for subsequent signal processing and image reconstruction.

Optical encoding and multiplexing

These two modules contribute to the core implementation of our overall goal. The traditional CDMA-based mobile communication adopts a Walsh-Hadamard code for orthogonal spread spectrum modulation. A common Hadamard code is a symmetrical square matrix whose coefficients comprise bipolar elements of +1 and −1, with rows (columns) that are mutually orthogonal24. The orthogonal property of Walsh-Hadamard codes enables a laser imaging system to discriminate spatially different pixels by encoding, and compress the transmission channels by multiplexing. Unlike traditional bipolar digital modulation, laser modulation requires unipolar encoding operations, which can be realized by converting the original elements to “1” and “0”, wherein “1” denotes the light pass and “0” light interception. Both states are physically realized by on-off controls for the encoder elements. On the premise of the potential existence of a better encoder design, we designed an applicable disc-style optical encoder, which performs continuous optical encoding by rotation (Supplementary Fig. 4 and Supplementary Note 3). Thus, we generate encoded beams in both temporal and spatial dimensions, which are mapped to a unipolar Walsh-Hadamard-deformed code (Fig. 2a).

Diagrams for the encoding and multiplexing methods. a An N-order optical encoder is used to generate a 2D code with N spatial sections and N time slots by continuous on-off controls for all the encoder elements. The black square indicates the on status for light transmission, and the white square indicates the off status for light interception; these indicators are mapped to “1” and “0”, respectively. b The encoded light is projected onto N sections of a target, represented by s1,s2,…, sN. The current illumination status is denoted by the red-marked time slot in a. Subsequent subpixel detection leads to uniform subdivisions of these sections into \(\left\{ {S_{1,{\kern 1pt} j}} \right\},\left\{ {S_{2,{\kern 1pt} j}} \right\}, \cdots \left\{ {S_{N,{\kern 1pt} j}} \right\}\), where \(j = 1,{\kern 1pt} 2,{\kern 1pt} \cdots M\). The subsections of the same order i within different sections are marked with the same colour. c A multiplexer is used to redistribute and regroup the light beam reflected from the subsections. Thus, M new combinations \(\left\{ {S_{i,{\kern 1pt} 1}} \right\},\left\{ {S_{i,2}} \right\}, \cdots \left\{ {S_{{\kern 1pt} i,{\kern 1pt} M}} \right\}\) appear, where i = 1,2,…N, which are recorded using M avalanche photodiode (APD) elements. The cross-section figure shows that all the M-channel mixed light signals unexceptionally present complete code attributes

Unde r N-order encoding conditions, the encoded projection light divides the illumination region into N sections marked by S1,S2, …, SN. Typically, an N-element APD array is used for range retrieval for N pixels. Benefiting from the unique encoding attribute of each section, a single APD element can theoretically distinguish the respective information from the mixed light after multiplexing. To reach higher resolutions, the N sections are regarded as an assembly of M × N uniform subsections marked by {S1,j},{S2,j},…{SN,j}, where j =1,2, …, M, and the information from all these subsections is intended to be derived (Fig. 2b). Since the subsections from each section share the same code element, we can obtain M sets of encoded full waveforms of mixed light by regrouping the subsections into {Si,1},{Si,2}, …, {Si,M}, where i =1, 2, …, N. Consequently, the M data sets involving information from M × N subpixels can be distinguished by M APD elements. This concept of subpixelization and subpixel multiplexing requires a customized optical design to provide support at the receiver side (Fig. 2c). An N-element micro-prism array is first used to redistribute the backscattered light from all sections. Through convergence by a cylindrical lens, the light from different micro-prism elements is projected and superposed onto the front of an M-element wedge-prism array, thus forming a mixed light band. Each part of the light band corresponding to each wedge-prism element actually contains N subdivided light signals, which are exactly the backscattered light signals from the N subsections and are thus mutually orthogonal. Through total internal reflection in the wedge prisms, the light band is converted into M separate light spots, which are projected onto an M-element APD array via fibre optic bundles. Note that all APD elements operate in linear mode to completely record different multiplexed waveforms (Supplementary Fig. 6 and Supplementary Note 4).

Essentially, the CDMA implementations in our design resemble the CDMA implementations in mobile communications. We can regard the objective subsections as the users, the backscattered light as the user data and the APD elements as the base stations. Similarly, as each base station receives the multiplexed data by modulating bit streams and merging these multiple streams into one, each APD element collects the multiplexed backscattered light by modulating the reflected light signals and multiplexing them into one. Since each base station can distinguish and restore the bit streams transmitted from different users by digital demodulation, each APD element can distinguish and recover the echo signals from different subsections through component decoding.

Subpixel decoding

To clarify the principle of subpixel decoding, we particularize the process of signal formation and processing to the case of a 4-order encoder and one APD element (Fig. 3). The encoded spots, which are generated by a unipolar Walsh-Hadamard-deformed matrix H4, constitute a 2D-encoded light-spot array in temporal and spatial dimensions (Fig. 3a). The time intervals between the time slots are uniformly T = 1/f, where f denotes the pulse repetition frequency of laser emission. The projection points are marked by S1, S2, S3 and S4, with their echo signals assumed as p1, p2, p3 and p4, respectively. These signals, carrying specific depth and intensity information, propagate within four time slots in four channels (Fig. 3b). After being multiplexed, the channels are merged into a single channel, with an accumulated signal called the encoded full waveform, which is composed of four separated parts within four time slots (Fig. 3c). Accordingly, a matrix Y4 = [p1 + p2 + p3 + p4p1 + p3 p1 + p2p1 + p4] can be used to represent the encoded full waveform mathematically. The subsequent goal is to decompose Y4 into four components that approximate the original four components accurately. Multi-path propagation increases the overlapping of multiple pulses, ultimately lowering the effectiveness of traditional decomposition methods25,26. However, subpixel decoding can impart an improvement by using the orthogonal property of the Walsh-Hadamard code. In detail, a bipolar encoding matrix \(\overline {\bf{H}} _{4}\) is used to demodulate the encoded full waveform. The result is \({\mathbf{Y}}_{4}\overline {\bf{H}} _{4}^{\mathbf{T}} = [2p_1\,2p_2\,2p_3\,2p_4]\), which indicates successful decomposition of four components. Since the encoded full waveform is inevitably contaminated by different sources of noise, the decoding results tend to be noisy components \(\left[ {\tilde p_1\,\tilde p_2\,\tilde p_3\,\tilde p_4} \right]\). By using effective noise reduction, the time offsets and amplitudes for all the subpixels can be accurately derived (Fig. 3d).

Schematic diagram for signal formation and processing. Employing 4-order Walsh-Hadamard encoding and a single avalanche photodiode (APD) element, for instance, a four space-time encoded beams are generated by imposing an encoding matrix H4 on linear beams of four time slices and projected onto four objective points. b The backscattered echoes carrying depth and intensity information for the four points are propagated in the four channels. c An encoded full waveform is, thus, acquired by multiplexing the four channels into one and transmitting this channel to an APD element. d By performing a local decoding matrix \(\overline {\bf{H}} _{4}\) on the encoded full waveform, the characteristic waveforms involving the time offsets and amplitudes can be obtained

To demonstrate the universal applicability of subpixel decoding and determine the general construction of decoding matrix, a generalized condition for N-order encoding is further investigated. Initially, the N-order encoding matrix HN can be derived from the lowest-order Walsh-Hadamard-deformed matrix H2 by HN = HN/2⊗H2, where ⊗ denotes the Kronecker product and the order N should be a power of two. The signals diffusely reflected from the N objective points are assumed to be PN = [p1p2…pN]. These pulsed signals propagating within the N time slots are multiplexed into an encoded full waveform, which can be expressed as \({\mathbf{Y}}_{\mathbf{N}} = {\mathbf{P}}_{\mathbf{N}}{\mathbf{H}}_{\mathbf{N}}^{\mathbf{T}}\). After demodulation of an N-order encoding matrix \(\overline {\bf{H}} _{\bf{N}}\) over YN, \({\mathbf{Y}}_{\mathbf{N}}\overline {\bf{H}} _{\mathbf{N}}^{\mathbf{T}} = {\mathbf{P}}_{\mathbf{N}}{\mathbf{H}}_{\mathbf{N}}^{\mathbf{T}}\overline {\bf{H}} _{\mathbf{N}}^{\mathbf{T}} = {\mathbf{P}}_{\mathbf{N}}(\overline {\bf{H}} _{\mathbf{N}}{\mathbf{H}}_{\mathbf{N}})^{\mathbf{T}}\). We conclude that the elements of PN can be decomposed when \(\overline {\bf{H}} _{\mathbf{N}}{\mathbf{H}}_{\mathbf{N}}\) is a diagonal matrix. In this case, the diagonal elements of \(\overline {\bf{H}} _{\mathbf{N}}{\mathbf{H}}_{\mathbf{N}}\) are the coefficients of all decompositions. Actually, the coefficients do not influence the signal-to-noise ratios (SNRs) of all decompositions, thus making no contribution to the extraction of the time offsets and amplitudes. We therefore prefer \(\overline {\bf{H}} _{\mathbf{N}}{\mathbf{H}}_{\mathbf{N}}\) to be an identity matrix with a constant coefficient, i.e., kEN, for uniform distributions. Due to the symmetrical property of HN, \(\overline {\bf{H}} _{\mathbf{N}}\) is also a symmetric matrix that is orthogonal to HN. Since HN is the deformation of an N-order Walsh-Hadamard matrix, we further demonstrate that the satisfactory matrix \(\overline {\bf{H}} _{\mathbf{N}}\) can be obtained simply by changing the first-row first-column element of the Walsh-Hadamard matrix to 1 − N/2 and can thus be entirely described by \(\overline {\bf{H}} _{\mathbf{N}} \,= \,(N/2){\mathbf{H}}_{\mathbf{N}}^{{\mathbf{ - 1}}}\). Therefore, we adopt such an encoding matrix for subpixel decoding of encoded full waveforms to retrieve the echo components of different objective points.

The performance of subpixel decoding is verified by an outdoor experiment (Fig. 4). Thus, our experimental system is used to continuously project encoded laser beams onto a multi-story building with uniform push-broom steps, with the ultimate collection of 85 frames of data (Fig. 4a). As a reference, we present the 20th-frame datum involving four sets of full encoded full waveforms acquired from four APD elements (Fig. 4b). The waveform within the first time slot of each set is an accumulation of 64 subpixels, composing a traditional full waveform (Fig. 4c). Due to extreme approximation between the subpixel depths, it is almost impossible to distinguish the 64 subpixels simply by fitting algorithms, highlighting the outstanding range resolution of subpixel decoding. As per the decoding principle, an encoding matrix is applied to the four waveform sets. As expected, 64 decoding pulses are obtained from each set as characteristic waveforms of 64 subpixels (Fig. 4d). However, the noise contamination on the waveforms hinders precise extraction. Hence, we improved the decoding results by performing wavelet de-noising and five-spot triple smoothing algorithms (Fig. 4e). Eventually, the Levenberg-Marquardt fitting algorithm is used to determine the time offsets and amplitudes of different waveforms27.

Experimental demonstration of subpixel decoding. The system using a 4-element avalanche photodiode (APD) array and a 64-order encoder is operated for detection of a an experimental scene composed of triple windows shown in the dashed black box. Reflective films are pasted on window surfaces to ensure their reflectivity. In push-broom mode, the system collects 85-frame data, of which the 20th-frame datum corresponds to the red-line position. b The plot of this datum involving four sets of encoded full waveforms together with c the magnified plot for the red box in the 4th set is shown. Using subpixel decoding, d four sets of decoding results, each of which contains characteristic waveforms of 64 subpixels, are derived simultaneously. e Their de-noised results are further obtained for accurate subpixel decomposition. The abnormal results are marked by red boxes in d and e

However, certain abnormal results appear for explicable reasons. First, the first subpixel tends to show a lower-SNR characteristic waveform relative to the other subpixels, which is essentially due to the particularity of the first code sequence. In the 64-order decoding matrix, the first code sequence consists of one code element of −31 and 31 code elements of +1, while the other 31 code sequences contain the same quantities of +1 and −1. Therefore, the decoding of the first subpixel magnifies the noise in the first time slot, whereas decoding of the other subpixels mutually counteracts the noise in the different time slots. In addition to the first subpixel, some other subpixels may lose their echo waveforms due to weak reflectivity and background solar irradiance. Overall, these lost subpixels do not influence the retrieval of other detectable pixels and should be discarded for the following 3D imaging. To prevent the occurrence of subpixel loss as much as possible, sufficient output power and favourable ambient conditions should be provided.

3D image reconstruction

Essentially, subpixel decoding aims for accurate subpixel decomposition of the multiplexed signals from each APD element. Subsequently, TOFs t can be obtained by t = Δt + t0, where Δt denotes the decomposed time offsets and t0 denotes the reserved time for the start of data acquisition. Note that the subpixel ranges cannot be directly determined by ct/2, which is actually the flying distance, where c is the light velocity. Due to the large FOV, the flying distances of the linear subpixels do not represent the orthographic ranges relative to the system position and thus should be orthorectified (Supplementary Fig. 5 and Supplementary Note 3). According to the grouping and multiplexing rules of the subpixels (Fig. 2b), the ranges and amplitudes of all subpixels are recombined and spliced into the range and intensity maps of the target, respectively. Following combination of both maps, a 3D point cloud with 256 × 85-pixel resolution is ultimately obtained (Fig. 5a).

Quantitative analysis of the reconstructed three-dimensional (3D) image. a The depth and intensity images are combined into a 3D point cloud. b A close-range photograph of the central window in the objective scene shows six characteristic parts as marked, which await to be investigated. c A side view of the imaging result of b can be obtained by picking out the depth image marked by a white box in a and further filtering this image. Inset: the results of quantitative analysis involving the averages (AVGs) and the root mean square errors (RMSEs) for different layers of c

The visual consistency between the shape of the reconstructed 3D point cloud (Fig. 5a) and the real scene (Fig. 4a) demonstrates the validity of our approach. To further investigate the performance, a quantitative analysis is provided. Figure 5b shows a close-range photograph of the central window in the entire scene, which exhibits six characteristic parts corresponding to different distances to the imaging system: 1. exterior wall, 2. window guardrail, 3. left windowsill, 4. left windowpane, 5. right windowsill and 6. right windowpane. For comparison and evaluation, the corresponding reconstruction result for the central window is extracted from the entire result and further improved by image filtering. The side view of the improved result is shown in Fig. 5c, where six layers can be recognized. A comparative ranging test by a laser rangefinder was implemented to provide a more objective evaluation (Supplementary Fig. 13 and Supplementary Note 7). With the objective data, the averages and the root mean square errors (RMSEs) of the six layers are calculated and presented. Obviously, a minimum distinction of ~4 cm between the exterior wall and the window guardrail can be discriminated. Moreover, the RMSEs show an oscillation of several centimetres, indicating the range precision of our approach. Another non-negligible phenomenon occurs, in which the RMSEs increase with increasing detection distance, because this increase leads to an increasingly severe noise contamination and thus lowers the precision.

In fact, the range precision mainly relies on data acquisition precision and data processing precision. Regarding the former, data acquisition precision can reach ~20 ps by virtue of time-to-digital converter calibration. In terms of the latter, both effects of the denoising and fitting algorithms should be taken into consideration. Although the denoising algorithms can be used to effectively improve the signal quality, the residual error still leads to biased decomposition of the subsequent fitting algorithm. For the fitting algorithm itself, we demonstrate a theoretical precision of ~10−5 ps by application to a simulated noiseless signal with a pulse width of 10 ns and a sampling interval of 1 ns. Therefore, in spite of a centimetre-level practical precision, the range precision of our imaging system can have potentially better precision to the millimetre level under a low-noise condition. Besides range precision, another key indicator—lateral resolution—is considered. The lateral resolution of the different layers can be described by Δlk = 2Lktan(θ/2)/256, where Lk denotes the average distances of the different layers numbered k = 1,2, …, 6 and θ is the FOV for the laser emission. As the FOV of our system is 6.54°, the lateral resolutions of the six layers range from 4.98 to 5.04 cm.

Discussion

We have demonstrated that our subpixel 3D imaging system is capable of realizing high-resolution 3D imaging with millimetre-level range precision merely by using a downscaled APD array under scannerless conditions. The proposed CDMA-based subpixel imaging technique exhibits a laser imaging mechanism, which breaks the traditional dependency of the imaging resolution relying on the use of large-scale detector arrays. Consequently, we successfully overcome the resolution bottleneck arising from the limited manufacturability of large-scale detector arrays, thereby downscaling the required detector array for high-resolution imaging. The detailed contributions are two-fold. First, a collaborative approach of optical encoding and multiplexing is designed. By performing orthogonal codes on different pixels, optical encoding effectively enhances the distinction between pixels and reduces data redundancy. Optical multiplexing completes subpixelization for higher resolution and multiplexing of the subpixels for detector downscaling. Second, the subpixel decoding algorithm is specifically proposed to decode different sets of encoded full waveforms and obtain characteristic parameters of all the subpixels. The algorithm can perform an outstanding range resolution even in the case of extreme overlap of multiple pixels with approximate depths.

In fact, one earlier technique named single-pixel imaging possesses similar advantages with our subpixel imaging technique in reducing the required complexity of detector arrays and enhancing the image resolution7,28,29,30. However, many essential differences exist between them. First, the two techniques are, respectively, the interdisciplinary applications of the compressive sensing (CS) and CDMA to optical imaging. Thus, the former focuses more on data acquisition and processing, while the latter emphasizes data communication. Second, the module design is different. Compared with the CS encoder’s requirement for a programming pseudorandom-code generator, the CDMA encoder does not require the dynamic-data storage space because it uses specific codes, and thus has lower complexity. Moreover, while the CS imager simply uses a lens to project all reflections onto one photon detector, the CDMA multiplexer is able to subdivide the reflections (i.e., subpixelization) and accurately project them onto a multi-element detector array. An appropriate, but not extremely large, scale of detector array can reduce the complexity of encoder and the compromise on the measurement times. Third, their reconstruction solutions and performances are dissimilar. The reconstruction algorithms of CS address the convex optimization problem by means of iteration, while those of CDMA finish exact decomposition by linear demodulation, leading to different levels of complexity. For instance, to detect N pixels, subspace pursuit and orthogonal matching pursuit as two typical algorithms for CS reconstruction can, respectively, contribute to the complexity of O(mNlogK) and O(mNK), where m denotes the measurement times and K signifies the sparsity level31,32. The complexity of the decoding algorithm for CDMA-based reconstruction is O(N), which is far less than the former two. Moreover, the iteration algorithms of CS are sensitive to different parameters, easily producing pathological problems. In terms of accuracy, not arbitrarily small number of measurements for CS can result in accurate image reconstruction, since the practical condition does not always strictly meet the requirement of signal sparsity and measurement matrix. CDMA method does not explore the extreme dimensionality reduction but rather uses an exact demodulation process to ensure a high-range precision; the encoding and decoding matrices are full rank. Therefore, in addition to the same advantage in reducing the detector complexity, the CDMA-based subpixel laser imaging technique is also superior to the CS-based single-pixel imaging technique in the areas of lower complexity of encoding method and reconstruction algorithm, as well as having a higher stability of imaging. Especially, our multiplexing solution contributes to a more flexible high-resolution imaging approach, with minimal design challenges.

Despite the benefits in reduced complexity of the detector array and raised resolution of 3D imaging, some disadvantages should be considered. One trade-off exists between multi-pixel acquisition and high laser power. Since the mono laser source has to supply the required energy for the detection of multiple pixels and the optical encoder results in the light loss, a higher-powered laser is required to ensure the exact acquisition of all pixels. Furthermore, the CDMA-based acquisition of pixels necessitates a trade-off between space and time, just like the single-pixel imager.

Although this paper only presents a prototype customized for push-broom detection with a specific resolution, the proposed methodology of CDMA-based laser imaging can have an evolved application to a planar all-covering mode to realize staring 3D imaging with a larger FOV. From this perspective, as the current record holder, the 320 × 256-element APD array can readily realize 5120 × 4096-pixel (over 20 million pixels) non-scanning imaging when a 256-order encoder is provided. Our ultimate goal—to capture and recover the most extensive and clearest scene with the smallest and most portable laser equipment—is around the corner.

Methods

Optical encoder design

The optical encoder is designed specifically for experimentation. Overall, we adopt a round disc with a symmetrical drilled code distribution. To meet the experimental requirements, more details need to be considered and realized. First, the precision of the hole positions must be sufficient to prevent incorrect multiplexing of the reflections from the appointed subpixels. A larger-sized disc generally provides a higher tolerance for deviation. Therefore, under specified encoding orders, we should deal with the contradiction between the precision and size. Second, auto encoding is indispensable for non-stop detection. We use an electric motor to drive rotation of the encoder disc. Finally, accurate laser shots need to be considered. We use a photoelectric switch to track the arrival of every code sequence and drive the laser emitter to work. A prescribed rotating speed is set to control the laser emission with a pulse repetition frequency of ~10 kHz. Therefore, we aim to provide an applicable encoder for our system, and we do not exclude other (potentially better) encoding approaches. More details are provided in Supplementary Note 3.

Data acquisition

A high-speed digitizer is designed and used for four-channel data acquisition from four APD elements (Throlabs APD210). The digitizer includes an analog-to-digital converter (ADC) chip (E2V EV10AQ190), field-programmable gate array (FPGA) chip (Xilinx Virtex-5 XC5VSX95T) and an Ethernet chip, which control data digitization, data control and data transmission, respectively. The FPGA controls the ADC to work with a digitizing rate of 1 Gsps. The ADC outputs 10-bit digitized data in double data rate (DDR) mode with a clock frequency of 500 MHz. To reduce the amount of empty data and ease the burden for the digitizer, ADC works only within an appointed period by control of the FPGA. For the experiment illustrated in Fig. 4, from the moment of arrival for the trigger signal, the ADC is set to start after 250 clock cycles and stop after another 250 clock cycles. Consequently, the redundant data obtained within a distance of 75 m to the laser emitter are beyond acquisition, which thus effectively improves the work efficiency of the digitizer. More details are provided in Supplementary Note 5.

Integration and visualization

We develop a LabVIEW software platform consisting of two modules for satisfactory integration and visualization. The up-monitor module aims to acquire data from the Ethernet port of the digitizer. The signal processing module includes algorithms of waveform restoration, waveform enhancement, subpixel decoding and fitting decomposition. In combination with the FOV parameters of beams and multiplexing rules of the subpixels, the range and intensity information of subpixels are orthorectified and spliced, thus accomplishing 3D image reconstruction. More details are provided in Supplementary Note 6.

Data availability

The data supporting the findings of this study are available from the corresponding author upon reasonable request.

References

Schwarz, B. LIDAR: mapping the world in 3D. Nat. Photon.4, 429–430 (2010).

McCarthy, A. et al. Long-range time-of-flight scanning sensor based on high-speed time-correlated single-photon counting. Appl. Opt.48, 6241–6251 (2009).

McCarthy, A. et al. Kilometer-range, high resolution depth imaging via 1560 nm wavelength single-photon detection. Opt. Express21, 8904–8915 (2013).

Oh, M. S. et al. Multihit mode direct-detection laser radar system using a Geiger-mode avalanche photodiode. Rev. Sci. Instrum.81, 033109 (2010).

Sun, J. & Wang, Q. 4-D image reconstruction for streak tube imaging lidar. Laser Phys.19, 502–504 (2009).

Zhao, C. et al. Ghost imaging lidar via sparsity constraints. Appl. Phys. Lett.101, 141123 (2012).

Sun, M. J. et al. Single-pixel three-dimensional imaging with time-based depth resolution. Nat. Commun.7, 12010 (2016).

Velten, A. et al. Recovering three-dimensional shape around a corner using ultrafast time-of-flight imaging. Nat. Commun.3, 745 (2012).

Lee, J. et al. Time-of-flight measurement with femtosecond light pulses. Nat. Photon.4, 716 (2010).

Zhou, G. et al. Flash lidar sensor using fiber-coupled APDs. IEEE Sens. J.15, 4758–4768 (2015).

Jo, S. et al. High resolution three-dimensional flash LIDAR system using a polarization modulating Pockels cell and a micro-polarizer CCD camera. Opt. Express24, A1580–A1585 (2016).

De Borniol, E. et al. A 320x256 HgCdTe avalanche photodiode focal plane array for passive and active 2D and 3D imaging. Proc. SPIE8012, 801232 (2011).

Finger, G. et al. Development of high-speed, low-noise NIR HgCdTe avalanche photodiode arrays for adaptive optics and interferometry. Proc. SPIE7742, 77421K (2010).

Hall, D. N. B., Baker, I. & Finger, G. Towards the next generation of L-APD MOVPE HgCdTe arrays: beyond the SAPHIRA 320 x 256. SPIE9915, 99150O (2016).

Albota, M. A. et al. Three-dimensional imaging laser radars with Geiger-mode avalanche photodiode arrays. MIT Linc. Lab. J.13, 351–370 (2002).

Aull, B. F. et al. Geiger-mode avalanche photodiodes for three-dimensional imaging. MIT Linc. Lab. J.13, 335–349 (2002).

Albota, M. A. et al. Three-dimensional imaging laser radar with a photon-counting avalanche photodiode array and microchip laser. Appl. Opt.41, 7671–7678 (2002).

Marino, R. M. & Davis, W. R. Jigsaw: a foliage-penetrating 3D imaging laser radar system. MIT Linc. Lab. J.15, 23–36 (2005).

Dumani, D. Airborne Optical Systems Test Bed (AOSTB). Report by MIT Lincoln Laboratory in Lexington United States (2016). http://www.dtic.mil/get-tr-doc/pdf?AD=AD1033486.

Albota, M. A. et al. The Airborne Optical Systems Testbed (AOSTB). Report by MIT Lincoln Laboratory in Lexington United States (2017). http://www.dtic.mil/get-tr-doc/pdf?AD=AD1033558.

Zhang, J. et al. Quantum internet using code division multiple access. Sci. Rep.3, 2211 (2013).

Fersch, T., Weigel, R. & Koelpin, A. A CDMA modulation technique for automotive time-of-flight LiDAR systems. IEEE Sens. J.17, 3507–3516 (2017).

Gryaznov, N. A., Kuprenyuk, V. I. & Sosnov, E. N. Laser information system for spacecraft rendezvous and docking. J. Opt. Technol.82, 286–290 (2015).

Beauchamp, K. G. Walsh functions and their applications[M]. Academic press, p24-p25 (1975)

Mallet, C. & Bretar, F. Full-waveform topographic lidar: state-of-the-art. ISPRS J. Photogramm. Remote Sens.64, 1–16 (2009).

Hofton, M. A., Minster, J. B. & Blair, J. B. Decomposition of laser altimeter waveforms. IEEE Trans. Geosci. Remote. Sens.38, 1989–1996 (2000).

Xu, F., Li, F. & Wang, Y. Modified Levenberg–Marquardt-based optimization method for LiDAR waveform decomposition. IEEE Geosci. Remote Sens. Lett.13, 530–534 (2016).

Duarte, M. F. et al. Single-pixel imaging via compressive sampling. IEEE Signal Process. Mag.25, 83–91 (2008).

Howland, G. A., Dixon, P. B. & Howell, J. C. Photon-counting compressive sensing laser radar for 3D imaging. Appl. Opt.50, 5917–5920 (2011).

Sun, B. et al. 3D computational imaging with single-pixel detectors. Science340, 844–847 (2013).

Tropp, J. A. & Gilbert, A. C. Signal recovery from random measurements via orthogonal matching pursuit. IEEE Trans. Inf. Theory53, 4655–4666 (2007).

Dai, W. & Milenkovic, O. Subspace pursuit for compressive sensing signal reconstruction. IEEE Trans. Inf. Theory55, 2230–2249 (2009).

Acknowledgements

This work is supported by the Natural Science Foundation of Jiangsu Province of China (Grant No. BK20170813), the Open Fund of Key Laboratory of Radar Imaging and Microwave Photonics of Ministry of Education of China (Grant No. NJ20170001-7) and the Fundamental Research Funds for the Central Universities of China (Grant No. 3082018NS2018029).

Author information

Authors and Affiliations

Contributions

F.X. and Y.-Q.W. supervised the project, designed the prototype and performed the experiment. Y.-Q.W. designed the multiplexing devices. F.X. designed the encoding devices and decoding algorithms, developed the software interface, analysed the presented data, concluded the methodology and wrote the manuscript. X.-F.Z. and C.-Y.W. provided constructive comments and suggestions.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xu, F., Wang, YQ., Zhang, XF. et al. Subpixel three-dimensional laser imaging with a downscaled avalanche photodiode array using code division multiple access. Commun Phys 2, 1 (2018). https://doi.org/10.1038/s42005-018-0096-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s42005-018-0096-2

This article is cited by

-

Topological superconductor candidates PdBi2Te4 and PdBi2Te5 from a generic ab initio strategy

npj Computational Materials (2023)

-

Role of Y3+ on the temperature-dependent magnetic properties of Lu orthoferrite prepared by solution combustion method using a mixture of fuels

Journal of Materials Science: Materials in Electronics (2023)

-

Thermal scanning probe lithography

Nature Reviews Methods Primers (2022)

-

Entropy Generation in the Magnetohydrodynamic Jeffrey Nanofluid Flow Over a Stretching Sheet with Wide Range of Engineering Application Parameters

International Journal of Applied and Computational Mathematics (2022)

-

Effect of Negative Capacitance in Partially Ground Plane based SELBOX FET on Capacitance Matching and SCEs

Silicon (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.