Abstract

Recently, deep neural network (DNN) studies on direction-of-arrival (DOA) estimations have attracted more and more attention. This new method gives an alternative way to deal with DOA problem and has successfully shown its potential application. However, these works are often restricted to previously known signal number, same signal-to-noise ratio (SNR) or large intersignal angular distance, which will hinder their generalization in real application. In this paper, we present a novel DNN framework that realizes higher resolution and better generalization to random signal number and SNR. Simulation results outperform that of previous works and reach the state of the art.

Similar content being viewed by others

Introduction

Direction-of-arrival (DOA) estimation using multiple antenna technologies, such as smart antenna systems, is a very important problem in many applications, including wireless communications, source localization, speech enhancement, robot audition, astronomical observation, radar and sonar1. Signals received by the antenna arrays are down-converted into baseband signals, digitalized, and then used to form a spatial covariance matrix that is the input to most DOA algorithms. There have been long-lasting developments in DOA estimations2. So far, numerous algorithms have been devised to deal with the DOA estimation problem and among them, the subspace based estimation methods such as MUSIC (MUltiple SIgnal Classification) and ESPRIT (Estimation of Signal Parameters via Rotational Invariance Techniques) are well-known for their high resolution capabilities3,4. Performing spectral search, MUSIC can provide accurate DOA estimation at the cost of high computational complexity. Utilizing space rotational invariance, ESPRIT effectively reduces the amount of computations by avoiding spectral search. Besides, many useful modifications of MUSIC and ESPRIT algorithms have also been proposed under different conditions 5,6,7,8,9,10. However, these subspace based algorithms are difficult to implement in real time because of the time-consuming process of spatial covariance matrix eigenvalue decomposition.

Alternatively, machine learning approach based on artificial neural network (ANN) has also shown its potential application in DOA estimation11,12,13,14. Performing only basic mathematical operations and calculating elementary functions, ANNs are much faster than conventional DOA algorithms. By considering DOA estimation as a function approximation problem, ANNs show the abilities to establish the mapping between spatial covariance matrix and directions of source signals. As far as we know, the earliest attempts on ANN based DOA estimation trace back to Radial Basis Function (RBF) neural network15,16. RBF networks are often characterized by one hidden layer, of which each neuron is activated by one RBF (usually Gaussian function). The centers, deviations and weights of these RBFs are trained to fit a mapping between input-output pairs. The RBF networks successfully find the directions of signals, although the result is some rough. Soon, another machine learning method namely support vector regression (SVR) is proposed to deal with this problem17,18. SVR is based on the theory of support vector machine (SVM), which has a rigorous mathematical foundation19. But SVR is only suitable for small set of data and its direction finding performance is barely satisfactory. Then, multilayer perceptron (MLP) neural network is also employed in DOA estimation20,21. MLP neural networks are usually consisted of several hidden layers, of which the neurons are often activated by sigmoid or tanh functions. Due to the depth growth, MLP neural networks can approximate virtually any input-output mapping and are suitable for highly non-linear problems22. As a specialized form of machine learning, deep learning (DL) has achieved great progresses and attracted much attentions in the past few years. Different from traditional neural networks containing several hidden layers, deep neural network (DNN) often has tens or hundreds of hidden layers. With the aid of large amount of labeled data, substantial computing power and DL algorithm breakthrough, deep learning achieves state-of-the-art accuracy and sometimes exceeding human-level performance in the fields of image identification, speech recognition, natural language processing, automatic driving and so on.

Recently, some researchers have introduced deep learning techniques to solve acoustic location23,24,25 and DOA estimation problems26,27,28. There are mainly two ways of thinking about the DNN based DOA problem, and they apply to different situations. In the case of knowing the signal number in advance, the output layer of DNN has the same number of neurons, whose activation values representing the signal angles in the range of [\(-\pi /2,\pi /2\)]. This situation has been studied in Huang et al.’s work26, where the smallest location error of \(0.1^{\circ }\) is realized. Apparently, the most crippling weakness of this method is the priori knowledge of signal number, which severely hinders its generalization and is also the general drawback in many previous related works. The more practical situation is that the signal number is unknown, so the DOA estimation naturally falls into a direction classification problem. In this case, the observation space is separated into N subspaces and the activation values of N neurons in output layer represent the probability of a signal locating in each subspace. This situation has been studied in Liu et al.’s work28, where they propose a two-stage DNN structure that is characterized by a multitask autoencoder and a group of parallel multilayer classifiers. The autoencoder denoises the DNN input and decomposes its components into P spatial subregions, whereas P parallel disconnected MLP concentrate on the direction classifications in each subregion. The autoencoder and parallel classifiers are trained independently and give the DOA estimation collaboratively with error of \(0.5^{\circ }\). However, when signals are located at the edge of subregions, the corresponding DOA estimation aggravate in precision or even disappear in the estimated spectrum. Moreover, only the two-signal scenario is considered in the training process, which results in unsatisfactory performances in the scenarios of different signal number. Apart from the drawbacks mentioned above, most of the previous DNN studies locate sources on very coarse grids with spacing \(5^{\circ }\)23,24 or even \(10^{\circ }\)25. Such coarse segmentations do not meet the precision requirements in most general DOA estimation applications. Besides, in most traditional machine learning and deep learning approaches, source signals are restricted to large angular distances and same signal-to-noise ratios (SNR) in the training and validation stages, which hinders the generalization performance in real application of DOA estimation. At last, the DNN structures in all previous DOA studies are not actually deep, they are more like MLP neural networks. For the general reason that results often get better as DNN becomes deeper, we believe that a much deeper structure may boost the performance of DNN based DOA estimation.

In this paper, we take advantage of deep learning techniques to boost the resolution and generalization of DNN based DOA estimation. It is widely believed that as DNN gets deeper, its representational capacity becomes stronger and a more precise mapping function can be trained. Meanwhile, the residual technique makes it possible to train a very deep neural network in a controllable manner29. By considering DOA estimation as a direction classification problem, we propose a simple DNN architecture that turns out to be much better than ever before. The outline of this paper is as follows: Firstly, the data model, DNN model and training details are given in “Methods” section. Then, we show the detailed simulation results under different situations and compare with related studies in “Results” section. At last, we make the error analysis and conclude with some final remarks in “Discussion” section.

Methods

Data model

Let us consider a linear array composed of L omnidirectional antenna elements. Assume that there are P narrow band uncorrelated signals impinging on the array from directions \(\{\theta _1,\theta _2,...,\theta _P\}\) . Under complex representation, the received signal by the l-th array can be expressed as

where \(s_p(t)\) is the complex envelop of the p-th signal reaching antenna array at time t; \(a_l(\theta _p)=e^{-i(l-1)2\pi d\sin (\theta _p)/\lambda }\) is the steering vector of the p-th signal for the l-th array, with d denoting the spacing between the elements of the array and \(\lambda\) denoting the wave length; \(n_l(t)\) is the background noise of the l-th array, which takes a zero mean complex Gaussian distribution. To avoid spatial aliasing, spacing d is usually set as half of wavelength \(d=\lambda /2\). Equation (1) can be written in a matrix form

where X(t), S(t), N(t) are given by

and

is the array manifold matrix.

In order to perform DOA estimation, spatial covariance matrix R has to be determined, which is computed by the expectation value on snapshots of different time instants.

where noises and signals are assumed to be mutually independent. Without loss of information, the upper right part of the \(L\times L\) Hermitian matrix R can be organized into a vector of

As neural network does not deal directly with complex numbers, we need to extract the real and imaginary parts of each element in b

This real vector is of \(L^2\)-dimension because the diagonal elements of matrix R are purely real numbers. Then the vector \(\bar{b}\) is normalized as

and fed into the input layer of neural network.

DNN model

The architecture of DNN used in the DOA estimation is shown in Fig. 1, which is featured by three residual blocks of the same depth. In each residual block, the linear transformation layer is used to encode data into desired dimension, and the following multilayer residual network (ResNet) is used to achieve good performance. The output layer consists of 180 neurons with sigmoid activation value representing the existence probability of signal at each angle from \(-90^{\circ }\) to \(90^{\circ }\). The reason for choosing larger and larger ResNet blocks is that we naively expect DNN model probe signals more clearly as dimension increases. Actually we have trained a very deep ResNet block with fixed dimension 180, but its simulation result is not so good. For our proposed DNN model here, one can also use more neurons in the output layer to improve the resolution without changing the previous body structure.

In the three-stage pipeline processing, input data Z is encoded into vector of more and more large dimensions. To prohibit overfitting risk and improve the generalization of our DNN model, we use dropout technique between or after residual blocks. In each residual block, linear transformation layer serves only as dimension matching. The following ResNet consist of N residual layers, and each one is composed of two linear sub-layer with hidden ReLU nonlinearity30 in the middle

where \(W_1,W_2\in R^{d_x\times d_x}\) are trainable matrices. We use the residual connections proposed by He et al.29 to ease the training of our DNN. So the output Y of each residual layer is computed by the following equation:

We then apply layer normalization31 after the residual connection to stabilize the activations of neurons.

In the output layer, we use the mean squared error

as the loss function, where \(\mathbf{u} (\theta )\in R^{180}\) is the output of DNN and the corresponding label \(\hat{\mathbf{u }}(\theta ) = \sum _i^P {{\varvec{f}}}(\theta _i)\) is actually the summation of smoothed one-hot vectors, of which the j-th component is set as

with \(|j-i|_s\) indicating the shortest distance between i and j on the periodic interval \([-90,90]\). We find this smooth label technique also helpful to easy the training. Then, we adopt the stochastic gradient decent (SGD) algorithm to optimize the loss function based on the proposed DNN framework.

Training details

As neural networks get very deep, the phenomena of vanishing/exploding gradients can easily take place in the training processes. To prevent this problem, a suitable weight initialization is strictly required and also beneficial to convergence rate and final performance. In our DNN model, bias is initialized to zero and weight matrix is initialized to normal distribution \(W_l\sim N(0,1/d_l^2)\), where \(d_l\) is the input dimension of l-th hidden layer. Lastly, normalization factor is initialized to one in the layer normalization operations.

Learning rate plays a key role in weight updates and convergence performance. If it is too small, the training process converges very slowly and often gets trapped in local minimums. Conversely, if learning rate is too large, the model easily diverges and results in vanishing/exploding gradients phenomena. Here we take a dynamic learning rate strategy formulized by

where s is the latest training step, \(s_w=200k\) and \(s_d=400k\) are the warmup steps and decay-starting step. So the learning rate firstly grow linearly to 0.1, and then keeps unchanged for 200k steps, finally decay quadratically in the rest of training. This dynamic learning strategy turns out to be very efficient in our DNN training processes.

To prohibit overfitting risk, we only take use of dropout technique without L1 or L2 regularization. Dropout is applied to the outputs of second and third residual block with probability 0.5, and also applied to all residual values Res(X) with probability 0.1 in the three residual blocks. No other place is dropout involved. Because the dimension of final output layer equals that of the first residual block, we suspect dropout 0.5 following the first residual block may hinder the resolution performance. Experimental results indeed show performance degradation (not shown here) when this type of dropout is added.

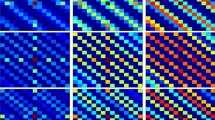

In the training processes, data model based on 8-element uniform linear array spaced half a wavelength is used. Signal impinging angles in the training and validation sets are random in (\(-90^{\circ }\), \(90^{\circ }\)) and (\(-75^{\circ }\), \(75^{\circ }\)), respectively. Besides, the spatial covariance vectors Z in all data sets are obtained from 500 snapshots. In all the training processes for DNN of different depth, we take use of millions of samples, with batch size set as 800 and iterations as 2000k. By introducing powerful TensorFlow, the most time-consuming training process takes about 20 hours on a single GPU Nvidia Quadro P6000. Training progress of the deepest neural network is shown in Fig. 2. One can find model converge steadily and no overfitting take place.

Results

In this section, the performances of DOA estimation based on our proposed DNN framework are investigated. Because the number of signals impinging on the array and their SNR can be varied, we carried out simulations with different settings. All simulation results are obtained from the best run after model converges.

Fixed number of signals with equal SNR (case A)

We first investigate the performance of shallow DNN in a simple situation, which is the case of most previous studies. The training and validation sets are respectively composed of 2000k and 100k samples, carrying the information of 5 random directional signals. To show the generalization of our DNN model, signals in the training set are characterized by integer impinging angles and SNR of 10 dB, whereas in the validation set by continues floating impinging angles (deviating from integer value by 0.35\(^{\circ }\) at most) and SNR of 15 dB. As a consequence, the output signal angles \(\theta _{O}\) of DNN are expected as the nearest integer number of continuous input angles \(\theta _I\). For comparison, we carried out experiments on DNNs of different ResNet block depth \(N = 1, 3, 5\). To be strict, any estimated angle deviating from its real value by \(1^{\circ }\) will be viewed as a false prediction. The corresponding estimation results of precision (P), recall (R) and their harmonic average (F-score) are shown in Tabel. 1. As one can see, the simulation performances get better as DNN becomes deeper. Lastly we state that the number of hidden layers is \(6N+3\) because of three ResNet blocks and linear transformation layers, and the shallowest one is deeper than that of almost all previous ANN studies.

Random number of signals with equal SNR (case B)

Naturally, the number of signals cannot be previously known in most real application. So we also carried out simulations on random number of signals. The training set is composed of 4000k samples, with signal numbers random in [0,7], integer impinging angles and equal SNR of 10 dB. The validation set consists of 4 subsets with different signal numbers 1,3,5,7, respectively. Each subset is composed of 100k samples with floating impinging angles (deviating from integer value by \(0.35^{\circ }\) at most) and equal SNR of 15 dB. The detailed simulation results are displayed in Table. 2. Different from the shallow DNN, a much deeper block depth is required to obtain a satisfactory result, taking the value of 5, 10 and 15 in the simulations. Apart from the improvement as the growth of ResNet depth, one can also find the performances decrease as signals become more. Comparing simulation results of five-signal subset with that of case A, unsurprisingly one can find performance get better when signal is restricted to a fixed number.

Moreover, we show the simulation results under different SNR as in Fig. 3a, where SNR influences are clearly displayed with respect to signal number. Naturally, the best results belong to the 10 dB validation set that is equaled to the training set. Performance declination of 7 dB set is larger than that of 15 dB, which is attributed to relatively increased influence of noises. Finally, we give an illustration of DOA estimation for this case. Six signals of 10 dB are assumed to impinge onto antenna array simultaneously, with initial angles \([-60^{\circ },-59^{\circ },-27^{\circ },-25^{\circ },0^{\circ },10^{\circ }]\). Keeping the angular distances unchanged and evolving the first signal from \(-60^{\circ }\) to \(-10^{\circ }\) with a step of \(1^{\circ }\), we show the direction estimation results in Fig. 3b. Only one false-dismissal and one false-alarm occur for the third and forth signals, resulting in a very high F-score 0.9967 for this example.

Fixed number of signals with random SNR (case C)

Previous works on ANN-based DOA estimation are mainly focused on the condition of equal SNR, so we test the performance of our DNN-based model on the condition of random SNR. The training and validation sets respectively consist of 4000k and 100k samples, both characterized by 4 signals of integer impinging angles and random SNR between 5 and 20 dB. In the simulations, we find the expected signals of small SNR often missed, which causes large recall decrease and is faithfully displayed in Table. 3. Because of the sharp drop compared with case B and limited improvements as the growth of DNN depth, we do not go further on the case of ‘random number and random SNR’. Nevertheless, this result is still acceptable.

Direction estimation resolution

The idea of direction classification is very useful when signal number is unknown, but it has a major defect: the higher resolution required, the larger class number and bigger neural network are needed. To overcome this problem, we take the method of amplitude interpolation to estimate signal directions of non-integer impinging angles, which is expressed as

where \(\theta _I\) is the estimated direction of the I-th peak of neural network output \(\mathbf{u} (\theta )\), and \(u_{I\pm n}\) is the neuron activation amplitude of the n-th neighbour. For example, the distribution of estimation error for case B is shown in Fig. 4a, where the proportion of different error \(\varepsilon\) is counted from 10k samples of two signals with SNR=10 dB. As one can see, almost all errors are smaller than \(0.25^{\circ }\). Statistically, the average and standard derivation of absolute estimation error is \(\mu =0.1^{\circ }\) and \(\sigma =0.06^{\circ }\), respectively. Through this interpolation method, performance of DOA estimation is largely improved.

Alternatively, there is another perspective to investigate estimation error. When impinging angles deviate from integer values by \(\delta =0.5^{\circ }\), the direction classification will be ambiguous. So we expect performance declination of direction classification as \(\delta\) increases gradually from \(0^{\circ }\) to \(0.5^{\circ }\). As is shown in Fig. 4b, F-score of direction classification falls from 1.0 at zero deviation down to 0.5 at middle point. Estimation error starts to pull down F-score greatly when it fills the gap between \(\delta\) and middle point, formulized as \(\delta +\mu +\sigma \approx 0.5^{\circ }\). So we can find the sharp collapse near \(\delta =0.35^{\circ }\), which is in good agreement with the straight estimation error distribution.

Comparison with previous studies

We compare our results with two latest DNN studies on DOA estimations carefully. In Liu et al.’s study28, the training process is restricted to two-signal scenario and the validation process is carried in the situation of big intersignal distance (\(9.4^{\circ }\)). When their result is generalized to three-signal scenario, the estimation errors will grow dramatically even though a larger intersignal distance (\(14^{\circ }\)) is applied. What is worse, because of their subregion technique, DOA estimation performance becomes terrible when signals are located at the edge of subregions. In Huang et al.’s study26, the most crippling weakness is that the signal number must be known in advance, which severely hinders its generalization in real applications. Although our DNN structure is fairly simple, it outperforms that of previous works; see Table. 4. Firstly, our DNN model is suitable for random number of signals, or rather, no priori knowledge of signal number is needed. Secondly, compared with Liu et al.’s work, our model is not troubled by the subregion edge aggravation problem and applicable in a larger spatial region (smaller than Huang et al.’s, but it is good enough in real application). Thirdly, our model keeps a very high resolution with the least number of antenna elements in a generalized condition. Although we just reach the same resolution as Huang et al in our simulation, it can be easily enhanced if we increase the element number of antenna array (this is well known) or enlarge the output size of our DNN model. Lastly, most previous works are restricted to zero or small SNR differences in validation processes, whereas our model is still nearly applicable in the case of large SNR difference. In general, we can safely say that we have reached the state-of-the-art DNN-based DOA estimation.

Discussion

In the previous simulations, the integer-float angle inconsistent between the training and validation sets will hinder the performance of DOA estimations. We find that if the validation set changes to the one of integer angle, the F-score will obtain improvements of at most 2.0 absolute percentages. Apart from that, estimation errors come from three aspects: neighboring signals, large angles and SNR differences.

Firstly, we find that one input signal may split into two output neighbors or two neighboring input signals may fuse into one output. For example, input signal of angle \(50^{\circ }\) sometimes generates prediction of two signals of angle \(50^{\circ }\) and \(51^{\circ }\), which causes the precision decrease. Conversely, input signals of angle \(34^{\circ }\) and \(36^{\circ }\) sometimes fuse into one output of angle \(35^{\circ }\), which causes both precision and recall decrease. As signals become more and more, the probability of neighboring occurrences gets larger. So we can see the performance decrease as the increasement of signal number in the above simulations. Furthermore, in the process of amplitude interpolation estimations, amplitude peaks of neighbouring signals will interact with each other greatly, making our interpolation method inaccurate (maybe there exist other proper amplitude interpolation methods, but the final estimation error will not be greatly reduced).

Secondly, ANNs obtain the abilities to establish the mapping between input features and output predictions after training. In our proposed DNN, the mapping function between input steering vector and output angles can be symbolically written as

To generate the right predictions \(\theta _O=\theta _I\), the mapping function f is expected as arcsine, whose gradient \(f^\prime (\sin \theta _I)=1/\cos \theta _I\) approaches infinite near large angle \(\pm 90^{\circ }\). As for neural networks, it is easy to fit a smooth function but hard to fit a very sharp one. So the mapping function f will deviate from arcsine at large angles and result in prediction errors. That is why we test our DNN model in (\(-75^{\circ }, 75^{\circ }\)) but not the whole interval (\(-90^{\circ }, 90^{\circ }\)).

Lastly, situation of different SNR has larger variable space compared with that of equal SNR, making it difficult to fit a precise mapping function. Furthermore, signals of higher SNR tend to cover up the ones of lower SNR. The extraction of weak signals is a general challenging problem in DOA estimations.

In conclusion, an efficient DNN-based model for superhigh resolution DOA estimation is proposed in this paper. The key advantage of ANN-based models over conventional subspace based methods is the ability to provide accurate DOA estimations almost instantaneously as they avoid complex matrix calculations. Compared with other ANN-based models, our DNN model has much deeper depth and keeps higher resolution in more general conditions, such as random signal number, random SNR and small angular distances. However, this model does not work very well in the condition of large signal number, large SNR difference or neighboring signals. This inspires us that ResNet is not adequate, and some other techniques are still required. For example, stacked autoencoders32,33 or restricted Boltzmann machines34,35 may be useful in signal denoising. Specially, convolutional neural network (CNN)36 may be more efficient to estimate signal directions from spatial covariance matrix R, whose geometry features may be lost in present DNN input Z. A more general DNN-based algorithm of superhigh resolution in the condition of random signal number and random SNR is left for a future work.

References

Johnson, D. H. & Dudgeon, D. E. Array Signal Processing: Concepts and Techniques (Simon & Schuster, New York, NY, 1992).

Krim, H. & Viberg, M. Two decades of array signal processing research: The parametric approach. IEEE Signal Process. Mag. 13, 4 (1996).

Schmidt, R. Multiple emitter location and signal parameter estimation. IEEE Trans. Antennas Propag. 34, 3 (1986).

Roy, R. & Kailath, T. ESPRIT-estimation of signal parameters via rotational invariance techniques. IEEE Trans. Acoust. Speech Signal Process. 37, 7 (1989).

Rao, B. D. & Hari, K. V. S. Performance analysis of Root-Music. IEEE Trans. Acoust. Speech Signal Process. 37, 12 (1989).

Rao, B. D. & Hari, K. V. S. Weighted subspace methods and spatial smoothing: analysis and comparison. IEEE Trans. Signal Process. 41, 2 (1993).

Belloni, F. & Koivunen, V. Unitary root-MUSIC technique for uniform circular array. Proceedings of the 3rd IEEE International Symposium on Signal Processing and Information Technology pp. 451–454 (2003).

Swindlehurst, A. L., Ottersten, B., Roy, R. & Kailath, T. Multiple invariance ESPRIT. IEEE Trans. Signal Process. 40, 4 (1992).

Tayem, N. & Kwon, H. M. Conjugated ESPRIT (C-ESPRIT). IEEE Trans. Antennas Propag. 52, 10 (2004).

Gao, F. & Gershman, A. B. A generalized ESPRIT approach to direction-of-arrival estimation. IEEE Signal Precess. Lett. 12, 3 (2005).

Du, K. L., Lai, A. K. Y., Cheng, K. K. M. & Swamy, M. N. S. Neural methods for antenna array signal processing: a review. Signal Process. 82, 4 (2002).

Wang, M., Yang, S., Wu, S. & Luo, F. A RBFNN approach for DOA estimation of ultra wideband antenna array. Neurocomputing 71, 4–6 (2008).

Jorge, N., Fonseca, G., Coudyser, M., Laurin, J. J. & Brault, J. J. On the design of a compact neural network-based DOA estimation system. IEEE Trans. Antennas Propag. 58, 2 (2010).

Rawat, A., Yadav, R. N. & Shrivastava, S. C. Neural network applications in smart antenna arrays: A review. Int. J. Electron. Commun. 66, 11 (2012).

Southall, H. L., Simmers, J. A. & O’Donnell, T. H. Direction finding in phased arrays with a neural network beamformer. IEEE Trans. Antennas Propag. 43, 12 (1995).

EI Zooghby, A. H., Christodoulou, C. G. & Georgiopoulos, M. A neural network-based smart antenna for multiple source tracking. IEEE Trans. Antennas Propag. 48, 5 (2000).

Pastorino, M. & Randazzo, A. A smart antenna system for direction of arrival estimation based on a support vector regression. IEEE Trans. Antennas Propag. 53, 7 (2005).

Randazzo, A., Abou-Khousa, M. A., Pastorino, M. & Zoughi, R. Direction of arrival estimation based on support vector regression: experimental validation and comparison with MUSIC. IEEE Antennas Wirel. Propag. Lett. 6, 379–382 (2007).

Vapnik, V. Statistical Learning Theory (Wiley, New York, 1998).

Stanković, Z., Milovanović, B. & Donc̆ov, N. Hybrid empirical-neural model of loaded microwave cylindrical cavity. Prog. Electromagn. Res. PIER 83, 257–277 (2008).

Agatonović, M. et al. Application of artificial neural networks for efficient high-resolution 2D DOA estimation. Radioengineering 21, 4 (2012).

Sarevska, M., Milovanović, B. & Stanković, Z. Reliability of Radial Basis Function - Neural Network Smart Antenna. WSEAS International Conference on Communications. Athens (Greece), 2005.

Takeda R. & Komatani, K. Discriminative multiple sound source localization based on deep neural networks using independent location model. Proc. IEEE Spoken Lang. Technol. Workshop(SLT), pp. 603–609 (2016).

Chakrabarty S. & Habets, E. A. P. Broadband DOA estimation using convolutional neural networks trained with noise signals. arXiv:1705.00919.

Adavanne, S., Politis, A. & Virtannen, T. Direction of arrival estimation for multiple sound sources using convolutional recurrent neural network. arXiv:1710.10059.

Huang, H. J., Yang, J., Huang, H., Song, Y. W., & Gui, G. Deep learning for super-resolution channel estimation and DOA estimation based massive MIMO system. IEEE Trans. Veh. Technol. 3, 1 (2018).

Chen, X., Wang, D., Y, J. X. & Wu, Y. A direct position-determination approach for multiple sources based on neural network computation. Sensors 18, 1925 (2018).

Liu, Z. M., Zhang, C. W. & Philip, S. Y. Direction-of-arrival estimation based on deep neural networks with robustness to array imperfections. IEEE Trans. Antennas Propag. 66, 12 (2018).

He, K. M., Zhang, X. Y., Ren, S. Q. & Sun, J. Deep residual learning for image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition, 770–778 (2016).

Nair, V. & Hinton, G. E. Rectified linear units improve restricted boltzmann machines. In Proceedings of the 27th international conference on machine learning (ICML-10), 807-814 (2010).

Ba, J. L., Kiros, J. R. & Hinton, G. E. Layer normalization. arXiv:1607.06450.

Bengio, Y., Courville, A. & Vincent, P. Representation learning: a review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 35, 8 (2013).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning 499–507 (MIT Press, Cambridge, 2016).

Hinton, G. E. & Salakhutdinov, R. R. Reducing the dimensionality of data with neural networks. Science 313, 504 (2006).

Hinton, G. E., Osindero, S. & Teh, T. W. A fast learning algorithm for deep belief nets. Neural Comput. 18, 7 (2006).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning 326–366 (MIT Press, Cambridge, 2016).

Acknowledgements

The author would like to thank Wen Sun for fruitful discussions and introducing to this problem. The author also acknowledges Hai Dong and Kaichen Cao for helpful discussions.

Author information

Authors and Affiliations

Contributions

Wanli Liu developed the DoA estimation algorithm, did all the simulations and wrote the main manuscript.

Corresponding author

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, W. Super resolution DOA estimation based on deep neural network. Sci Rep 10, 19859 (2020). https://doi.org/10.1038/s41598-020-76608-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-76608-y

This article is cited by

-

Low-Complexity DOA Estimation Algorithm based on Real-Valued Sparse Bayesian Learning

Circuits, Systems, and Signal Processing (2024)

-

Deep-Learning Based DOA Estimation in the Presence of Multiplicative Noise

Wireless Personal Communications (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.