Abstract

Randomized controlled trials (RCTs) are widely used in clinical efficacy evaluation studies. Linear regression is a general method for evaluating treatment efficacy in the presence of confounding variables. However, when residuals are not normally distributed, parameter estimation based on ordinary least squares (OLS) is inefficient. This study introduces an exponential squared loss (ESL) model to evaluate treatment effect. The proposed method provides robust estimation for non-normal data. Simulation results show that it outperforms ordinary least squares regression with contaminated data. In a mild cognitive impairment (MCI) efficacy evaluation study of traditional Chinese medicine, our method is applied to construct a linear efficacy evaluation model for the difference in Alzheimer’s disease assessment scale-cognitive (ADAS-cog) scores between the final and baseline records (ADASFA), in the presence of confounding factors and non-normal residuals. The results coincide with the existing medical literature. The proposed method overcomes the limitations imposed by confounding variables and non-normal residuals in RCT efficacy studies. It outperforms OLS in estimation efficiency in situations where the percentage of non-normal contamination reaches 30%. These advantages make it a good method for real-world clinical studies.

Introduction

Mild cognitive impairment (MCI) is a syndrome defined as a cognitive decline that may affect daily activities. The amnesic subtype of MCI carries a high risk of progression to Alzheimer’s disease and could constitute a prodromal stage of this disorder1. The Alzheimer’s disease assessment scale-cognitive (ADAS-cog) subscale measures the progression of MCI in 11 relevant fields, namely spoken language ability, comprehension of spoken language, recall of test instructions, word-finding difficulty, following commands, naming, constructions, ideational praxis, orientation, word recall, and word recognition. Detailed information on the ADAS-cog subscale can be found in Rosen et al.2.

The Institute of Clinical Pharmacology at Xiyuan Hospital conducted a phase III randomized clinical trial to evaluate the efficacy of a traditional Chinese prescription on MCI. The double-blind randomized clinical trial was conducted in eight qualified medical centres across China, with 216 patients allocated to the treatment arm and 108 retained as controls. Two patients dropped out from each arm, resulting in 320 complete observations in the final dataset. Data on the difference between the final and baseline ADAS-cog scores (ADASFA) were recorded for the efficacy study. Previous studies have used ADASFA in efficacy evaluation of MCI or Alzheimer’s disease3,4. The most intuitive idea is to test whether the treatment and control means are equal. However, Morgan and Rubin5 argued that baseline equivalence is not guaranteed even though the allocation is randomized. Imbalance in baseline covariates could confound the statistical test when comparing ADASFA between the two arms. Ten variables, specifically age, height, weight, gender, education, ethnicity, occupation, centre, drug (whether the patient took a drug for MCI in the past three months), and ADAS1 (the baseline record of ADAS-cog6), were recorded as potential covariates. Table 1 shows descriptive statistics of these variables. The exploratory covariance analysis presented in Table 2 indicates that the variables centre and ADAS1 may confound the efficacy evaluation of ADASFA. This implies that a linear regression model should be used in this study rather than a simple statistical test, that is,

$$y={\beta }_{0}+{\beta }_{1}{x}_{1}+\cdots +{\beta }_{p}{x}_{p}+\varepsilon ,$$

where p is the number of covariates. We set a binary variable xj = 1 for the treatment arm and xj = 0 for the control arm, so that the efficacy can be evaluated by the corresponding coefficient βj 7. Ordinary least squares (OLS) is a general parameter estimation method for linear regression, which is the best linear unbiased estimator when assuming independent and identically distributed normal errors:

$$\varepsilon \sim N(0,{\sigma }^{2}).$$

However, the QQ plot in Fig. 1 shows that the MCI dataset may not follow a normal distribution, and a Shapiro-Wilk test (W = 0.9283, p-value = 2.799e-11) suggests the same. The contaminated non-normal part may come from either measurement error or a mixed distribution8, both of which are common in medical studies9,10. This could lead to inefficient efficacy estimation by OLS, since the contaminated part is not addressed11. A more robust estimation method in linear regression is, therefore, required in such studies.

Many robust methods have been discussed in the literature. Bao12 developed a rank-based estimate in linear regression. Wang et al.13 proposed a robust estimation via least absolute deviation, while Wang et al.14 introduced an exponential squared loss (ESL) to select variables robustly. Since the breakdown point of ESL is almost 50%, we adopt it in the MCI efficacy evaluation study. Numerical studies show that the proposed method achieves a more accurate estimation when the dataset contains a large proportion of contamination. Additionally, the estimates are consistent with OLS when contamination proportions are relatively low. Therefore, it can be used as a complementary efficacy evaluation method in real-world clinical studies regardless of whether contamination is present.

Methods

Model

Suppose there are n subjects, denoted as \({\{({x}_{i},{y}_{i})\}}_{i=1}^{n}\) where yi is the outcome and xi = (xi1, …, xip)T is a p-dimensional vector of covariates. A linear regression model is,

$${y}_{i}={x}_{i}^{T}\beta +{\varepsilon }_{i},\,i=1,2,\,\cdots ,\,n,$$

where β is a p-dimensional vector of unknown parameters while εi is independent and identically distributed with some unknown distribution satisfying E(εi) = 0 and εi ╨ xi.

The ESL function has been used in AdaBoost for classification problems with success15. Wang et al.14 expanded the use of the ESL function for robust variables selection. We now use it to estimate parameters in linear regression without sparsity. The ESL function is defined as

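$${{\rm{\Phi }}}_{\gamma }(t)=1-\exp (-{t}^{2}/\gamma ),$$

which is a function of t and γ, where the latter is a tuning parameter. To estimate the model parameters β, the ESL estimator maximizes the objective function

$$\sum _{i=1}^{n}\,\exp \{-{({y}_{i}-{x}_{i}^{T}\beta )}^{2}/\gamma \}.\qquad (1)$$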

The tuning parameter γ controls the degree of robustness and efficiency of the estimator. With a relatively large γ, the proposed estimator gets close to the OLS estimator, while a smaller γ limits the influence of contamination on the estimator. A data-driven procedure that aims for both high robustness and high efficiency is therefore used to select an appropriate γ. The calculation procedure for ESL follows the idea proposed in Wang et al.14:
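The role of γ can be illustrated with a minimal Python sketch. It assumes the ESL function has the form Φγ(t) = 1 − exp(−t²/γ) used by Wang et al.14; the names `esl_loss` and `esl_objective` are illustrative and do not come from the original study.

```python
import math

def esl_loss(t, gamma):
    """Exponential squared loss, assumed form 1 - exp(-t^2 / gamma)."""
    return 1.0 - math.exp(-(t * t) / gamma)

def esl_objective(beta, xs, ys, gamma):
    """Sum of exp(-r_i^2 / gamma) over residuals r_i = y_i - x_i^T beta.

    Maximizing this sum is equivalent to minimizing the total ESL loss.
    """
    total = 0.0
    for x, y in zip(xs, ys):
        r = y - sum(b * v for b, v in zip(beta, x))
        total += math.exp(-(r * r) / gamma)
    return total

# Large gamma: the loss is close to t^2 / gamma, a rescaled squared error.
print(esl_loss(2.0, 1e6) * 1e6)   # close to 4
# Small gamma: the loss contributed by a gross residual is capped at 1.
print(esl_loss(50.0, 1.0))
```

Because the loss is bounded by 1, a single extreme residual cannot dominate the objective, which is the intuition behind the robustness described above.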

1. Find the pseudo outlier set of the sample. Let Dn = {(x1, y1), …, (xn, yn)}. Then, calculate \({r}_{i}({\hat{\beta }}_{n})={y}_{i}-{x}_{i}^{T}{\hat{\beta }}_{n},\,i=1,2,\,\cdots ,\,n\) and \({S}_{n}=1.4826\times {{\rm{median}}}_{i}|{r}_{i}({\hat{\beta }}_{n})-{{\rm{median}}}_{j}({r}_{j}({\hat{\beta }}_{n}))|\). Take the pseudo outlier set as \({D}_{m}=\{({x}_{i},{y}_{i}):|{r}_{i}({\hat{\beta }}_{n})|\ge 2.5{S}_{n}\}\), where m is the cardinality of Dm, and let \({D}_{n-m}={D}_{n}\setminus {D}_{m}\).

2. Update the tuning parameter γ. Let γ be the minimiser of det \((\hat{V}(\gamma ))\) in the set G = {γ: ζ(γ) ∈ (0, 1]}, where \(\zeta (\gamma )=2m/n+(2/n)\sum _{i=m+1}^{n}\,{{\rm{\Phi }}}_{\gamma }\{{r}_{i}({\hat{\beta }}_{n})\}\), \(\hat{V}(\gamma )={\{{\hat{I}}_{1}({\hat{\beta }}_{n})\}}^{-1}\hat{{\rm{\Sigma }}}{\{{\hat{I}}_{1}({\hat{\beta }}_{n})\}}^{-1}\), det (⋅) denotes the determinant operator, and

$$\begin{array}{rcl}{\hat{I}}_{1}({\hat{\beta }}_{n}) & = & \frac{2}{\gamma }\left\{\frac{1}{n}\sum _{i=1}^{n}\,\exp (\,-\,{r}_{i}^{2}({\hat{\beta }}_{n})/\gamma )\left(\frac{2{r}_{i}^{2}({\hat{\beta }}_{n})}{\gamma }-1\right)\right\}\times \left(\frac{1}{n}\sum _{i=1}^{n}\,{x}_{i}{x}_{i}^{T}\right),\\ \hat{{\rm{\Sigma }}} & = & {\rm{cov}}\left\{\exp (\,-\,{r}_{1}^{2}({\hat{\beta }}_{n})/\gamma )\frac{2{r}_{1}^{2}({\hat{\beta }}_{n})}{\gamma }{x}_{1},\,\cdots ,\,\exp (\,-\,{r}_{n}^{2}({\hat{\beta }}_{n})/\gamma )\frac{2{r}_{n}^{2}({\hat{\beta }}_{n})}{\gamma }{x}_{n}\right\}.\end{array}$$

3. Update \({\hat{\beta }}_{n}\). After selecting γ in Step 2, update \({\hat{\beta }}_{n}\) by maximizing (1).
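Step 1 and the constraint function ζ(γ) of Step 2 can be sketched in Python as follows. This is an illustrative sketch rather than the code used in the study: it assumes that a point is flagged when the absolute residual is at least 2.5 Sn and that Φγ(t) = 1 − exp(−t²/γ); the function names and the toy residuals are hypothetical.

```python
import math
from statistics import median

def pseudo_outliers(residuals, cutoff=2.5):
    """Return (S_n, indices flagged as pseudo outliers).

    S_n is 1.4826 times the median absolute deviation of the residuals.
    Assumption: a point is flagged when |r_i| >= cutoff * S_n.
    """
    med = median(residuals)
    s_n = 1.4826 * median([abs(r - med) for r in residuals])
    flagged = [i for i, r in enumerate(residuals) if abs(r) >= cutoff * s_n]
    return s_n, flagged

def zeta(residuals, flagged, gamma):
    """zeta(gamma) = 2m/n + (2/n) * sum of ESL losses over non-flagged points."""
    n, m = len(residuals), len(flagged)
    skip = set(flagged)
    loss_sum = sum(1.0 - math.exp(-(r * r) / gamma)
                   for i, r in enumerate(residuals) if i not in skip)
    return 2.0 * m / n + 2.0 * loss_sum / n

# Toy residuals with one gross outlier (hypothetical values).
residuals = [0.1, -0.2, 0.05, 0.0, -0.1, 8.0]
s_n, flagged = pseudo_outliers(residuals)
print(flagged)  # [5]
print(0.0 < zeta(residuals, flagged, gamma=1.0) <= 1.0)  # True, so gamma = 1 lies in G
```

Only values of γ for which `zeta` falls in (0, 1] are candidates in Step 2; the determinant criterion is then minimized over that set.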

We set the MM estimator16 \({\tilde{\beta }}_{n}\) as the initial estimator. The algorithm is an iterative procedure as shown above. To attain high efficiency, we choose the tuning parameter γ by minimizing the determinant of the asymptotic covariance matrix as in Step 2. Since the calculation of det \((\hat{V}(\gamma ))\) depends on the current estimate \({\hat{\beta }}_{n}\), we update \({\hat{\beta }}_{n}\) in Step 3 and repeat the algorithm until the convergence condition \(\Vert {\hat{\beta }}_{n}^{old}-{\hat{\beta }}_{n}^{new}\Vert < {10}^{-2}\) is satisfied.
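For a fixed γ, the update in Step 3 can be illustrated with a small Python sketch for a single covariate. The optimizer is not specified in the text, so the sketch makes two assumptions: the objective is Σ exp(−ri²/γ), and it is maximized by iteratively reweighted least squares with weights exp(−ri²/γ), whose fixed points satisfy the first-order condition of that objective. The OLS fit stands in for the MM initial estimator, and all names and data are hypothetical.

```python
import math

def weighted_line_fit(xs, ys, ws):
    """Closed-form weighted least squares for y = b0 + b1 * x."""
    sw = sum(ws)
    xbar = sum(w * x for w, x in zip(ws, xs)) / sw
    ybar = sum(w * y for w, y in zip(ws, ys)) / sw
    sxx = sum(w * (x - xbar) ** 2 for w, x in zip(ws, xs))
    sxy = sum(w * (x - xbar) * (y - ybar) for w, x, y in zip(ws, xs, ys))
    b1 = sxy / sxx
    return ybar - b1 * xbar, b1

def esl_line_fit(xs, ys, gamma, init, tol=1e-8, max_iter=200):
    """Reweighting iteration for the ESL objective with gamma held fixed."""
    b0, b1 = init
    for _ in range(max_iter):
        # Points with large residuals receive weights close to zero.
        ws = [math.exp(-((y - b0 - b1 * x) ** 2) / gamma) for x, y in zip(xs, ys)]
        new_b0, new_b1 = weighted_line_fit(xs, ys, ws)
        step = math.hypot(new_b0 - b0, new_b1 - b1)
        b0, b1 = new_b0, new_b1
        if step < tol:
            break
    return b0, b1

# Toy data: y = 1 + 2x, with the last five responses shifted by +50.
xs = [i / 10 for i in range(50)]
ys = [1.0 + 2.0 * x + (50.0 if i >= 45 else 0.0) for i, x in enumerate(xs)]
ols = weighted_line_fit(xs, ys, [1.0] * len(xs))   # pulled away from (1, 2)
esl = esl_line_fit(xs, ys, gamma=10.0, init=ols)   # close to (1, 2)
print(ols, esl)
```

The objective is not concave, so the result depends on the starting value; this is one reason a robust initial estimator such as the MM estimator is preferable to OLS when contamination is heavy.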

Simulations

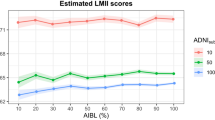

To assess the performance of the introduced method, we conduct numerical studies to compare the bias and mean squared error (MSE) of the estimators from our algorithm (ESL) with those from ordinary least squares (OLS).

We simulate data \({\{({x}_{i},{y}_{i})\}}_{i=1}^{n}\) as follows, where xi = (xi1, xi2, …, xip)T, i = 1, 2, …, n with p = 7 and n = 300. The first six covariates are continuous, that is, xij ~ N(0, 1) for j = 1, 2, …, 6, and xi7 is categorical, taking values in {1, 2, …, 4}. We convert xi7 into three binary variables, denoted as zi1, zi2, zi3, where zij indicates whether xi7 belongs to the j-th category and zi1 = zi2 = zi3 = 0 means that xi7 belongs to the last category. Thus, we have xi = (xi1, xi2, …, xi6, zi1, zi2, zi3)T. Let β = (β0, β1, …, β9)T = (1, 1.2, 1.4, 1.6, 1.8, 2, 2.2, 2.4, 2.6, 2.8)T. The error term of contaminated observations (outliers) follows a t(1) distribution, and the error term of non-outliers follows the standard normal distribution, N(0, 1). The proportions of contamination considered are 10%, 20% and 30%. For each proportion of contamination, the mean, bias, standard deviation (SD), and MSE of the ESL and OLS estimates over 100 replications are reported in Table 3.
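The data-generating design can be sketched in Python as follows. Several details are not stated in the text and are assumptions of this sketch: the categorical covariate is drawn uniformly from its four levels, exactly a fixed fraction of rows is contaminated, and the SD is the sample standard deviation. The t(1) error is drawn as a standard Cauchy variate, which is the same distribution. The names `simulate` and `summarize` are hypothetical.

```python
import math
import random
import statistics

BETA = [1, 1.2, 1.4, 1.6, 1.8, 2, 2.2, 2.4, 2.6, 2.8]  # (beta_0, ..., beta_9)

def simulate(n=300, contamination=0.1, seed=0):
    """Generate one contaminated data set; returns (X, y, contaminated rows)."""
    rng = random.Random(seed)
    outlier_rows = set(rng.sample(range(n), round(n * contamination)))
    X, y = [], []
    for i in range(n):
        continuous = [rng.gauss(0.0, 1.0) for _ in range(6)]
        category = rng.randint(1, 4)  # assumption: uniform over the 4 levels
        dummies = [1.0 if category == j else 0.0 for j in (1, 2, 3)]
        row = [1.0] + continuous + dummies  # leading 1.0 is the intercept
        if i in outlier_rows:
            eps = math.tan(math.pi * (rng.random() - 0.5))  # t(1), i.e. Cauchy
        else:
            eps = rng.gauss(0.0, 1.0)
        X.append(row)
        y.append(sum(b * v for b, v in zip(BETA, row)) + eps)
    return X, y, outlier_rows

def summarize(estimates, truth):
    """Mean, bias, SD and MSE of one coefficient across replications."""
    mean = statistics.mean(estimates)
    sd = statistics.stdev(estimates)
    mse = statistics.mean([(e - truth) ** 2 for e in estimates])
    return mean, mean - truth, sd, mse

X, y, outlier_rows = simulate(contamination=0.1, seed=1)
print(len(X), len(X[0]), len(outlier_rows))  # 300 10 30
```

Repeating `simulate` with 100 different seeds, fitting each data set with both estimators and passing the per-coefficient estimates to `summarize` would give quantities of the kind reported in Table 3.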

Figures 2–4 show error bars of ESL and OLS under the three proportions of contamination, where the triangular points represent the true values of the parameters, the circular points represent the means of the estimators, and the vertical lines represent standard deviations. ‘truej’, ‘eslj’, and ‘olsj’ refer to parameter j for the true value, the ESL estimate, and the OLS estimate, respectively. The error bars for ESL are considerably shorter than those for OLS, which implies that the standard deviation of the ESL estimator is much smaller than that of the OLS estimator and that our method is more robust.

Results

In the MCI study, the linear regression model is conducted as follows:

We include these variables in the model based on our clinical experience and the existing literature. In addition, we transform weight and height into a new variable, BMI, since there are discussions on whether BMI has an effect on MCI. We exclude ethnicity and marital status from our model mainly because these two variables are extremely unbalanced between the treatment and control arms, and also because almost no literature suggests that they have an effect on MCI. Since 11 out of 16 variables are categorical, we do not consider interaction effects. The by-centre descriptive analysis is presented in Tables 4 and 5.

Table 6 shows the parameter estimates using ESL and OLS. The empirical 95% confidence interval is calculated by the bootstrap approach. When the bootstrap confidence interval does not include 0, the corresponding covariate is considered to have a significant effect on the primary outcome. Note that there are some differences between the ESL and OLS estimates. For example, the estimated effects of centre5 and centre8 on ADASFA have opposite signs under the two methods. Given the non-normal residuals, we expect the ESL estimates to be more accurate. From the results, we can conclude that
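The decision rule based on the bootstrap interval can be sketched in Python as follows. The text states only that a bootstrap approach is used, so the percentile interval and the resampling of whole observations are assumptions of this sketch, and the names `bootstrap_ci` and `excludes_zero` are hypothetical. The argument `estimator` stands for any function that maps a resampled data set to a single number, such as one ESL or OLS coefficient.

```python
import math
import random
import statistics

def bootstrap_ci(data, estimator, n_boot=1000, level=0.95, seed=0):
    """Percentile bootstrap interval for estimator(data).

    Each replicate resamples len(data) observations with replacement.
    """
    rng = random.Random(seed)
    n = len(data)
    stats = sorted(
        estimator([data[rng.randrange(n)] for _ in range(n)])
        for _ in range(n_boot)
    )
    alpha = 1.0 - level
    lo_idx = int(math.floor(alpha / 2 * n_boot))
    hi_idx = min(n_boot - 1, int(math.ceil((1 - alpha / 2) * n_boot)) - 1)
    return stats[lo_idx], stats[hi_idx]

def excludes_zero(interval):
    """True when 0 lies outside the interval, i.e. the effect is 'significant'."""
    lo, hi = interval
    return not (lo <= 0.0 <= hi)

# Toy illustration with the sample mean standing in for a regression coefficient.
data = [float(i) for i in range(1, 51)]
ci = bootstrap_ci(data, statistics.mean, n_boot=500, seed=0)
print(ci, excludes_zero(ci))
```

In the regression setting each element of `data` would be one patient's record (covariates and outcome), so that the pairing between outcome and covariates is preserved in every resample.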

(1) ADAS1 and centre 6 have significant influences on ADASFA, since their bootstrap confidence intervals do not contain 0. From a medical viewpoint, a higher ADAS1 means that patients are in a worse health situation, which can have a positive effect on ADASFA.

(2) ESL and OLS both show that ADAS1 has a positive effect on decreasing ADAS-cog. For age, ESL shows no effect on decreasing ADAS-cog because its bootstrap confidence interval contains 0, while OLS shows a negative effect on decreasing ADAS-cog. From a medical viewpoint17, it is verified that age has a significant effect on MCI. Prior work has demonstrated that rates of dementia increase exponentially with age18,19. However, the significant effect of age on MCI does not mean that it also influences the treatment effect.

(3) The ESL group coefficient is −0.141 and its bootstrap confidence interval contains 0. This result makes sense because this project is a non-inferiority trial and the treatment group was not worse than the control group.

(4) ESL shows that centres 3, 6, and 7 have significant effects on the outcome. However, OLS shows that centres 3 and 7 have no significant impact, whereas centre 6 has a significant effect on the outcome. According to Table 4, the average ADAS1 of centre 6 is much lower than that of centres 3 and 7, which implies that patients in centres 3 and 7 are in worse condition. Moreover, patients in different centres may have different non-compliance levels, which may also contribute to the result that some centres have significant effects on the outcome while others do not.

(5) Since we have shown that the data are not normally distributed, we can have greater confidence in the ESL results.

Conclusion

In this paper, we discuss a method to evaluate efficacy in a randomized controlled MCI study. As many covariates may influence the outcome, a linear regression model is considered rather than comparing group means using a t-test or ANOVA. An exponential squared loss function, which is superior to OLS when dealing with non-normal residuals, is introduced in this study. Simulation results show that the ESL model yields more efficient estimation than OLS for non-normal data. The proposed method is also robust for data with outliers. These advantages of the ESL model become more noticeable as the contamination percentage increases. The proposed method does not require the normal distribution assumption, offering new insight into efficacy evaluation for practical researchers.

References

Gauthier, S. et al. Mild cognitive impairment. The Lancet 367, 1262–1270 (2006).

Rosen, W. G., Mohs, R. C. & Davis, K. L. A new rating scale for Alzheimer’s disease. The Am. J. Psychiatry (1984).

Doraiswamy, P. M., Kaiser, L., Bieber, F. & Garman, R. L. The Alzheimer’s Disease Assessment Scale:evaluation of psychometric properties and patterns of cognitive decline in multicenter clinical trials of mild to moderate Alzheimer’s disease. Alzheimer Dis. & Assoc. Disord. 15, 174–183 (2001).

Petersen, R. C. et al. Vitamin E and donepezil for the treatment of mild cognitive impairment. New Engl. J. Medicine 352, 2379–2388 (2005).

Morgan, K. L. & Rubin, D. B. Rerandomization to improve covariate balance in experiments. The Annals Stat. 40, 1263–1282 (2012).

Hong Yan, N. Z. & Yongyong X Medical statistics. People’s Med. Publ (2010).

Jiqian Fang, Y. L. Advanced medical statistics. People’s Med. Publ. House (2002).

Qin, Y. & Priebe, C. E. Maximum Lq-likelihood estimation via the expectation-maximization algorithm: A robust estimation of mixture models. J. Am. Stat. Assoc. 108, 914–928 (2013).

Di Zhang et al. Clinical study of insulin resistance for patients after selective operation in department of general surgery. Chin. J. Bases Clin. Gen. Surg. 9, 951–954 (2010).

Yang Dai, X. Y. J. X. T. Z. Y. S. C. L. L. W. & Lijun, W. To study the effect and safety of lxhxcxiaofeng drug detoxification. Chin. J. Clin. Res. 8, 1087–1089 (2015).

Hekimoglu, S., Erenoglu, R. C. & Kalina, J. J. Outlier detection by means of robust regression estimators for use in engineering science. J. Zhejiang Univ. Sci. 10, 909–921 (2009).

Bao, Y. Research on robust regression analysis and diagnosis method based on rank order. Shanxi Med. Univ. 5, 10 (2003).

Wang, H., Li, G. & Jiang, G. Robust regression shrinkage and consistent variable selection through the LAD-lasso. J. Bus. Econ. Stat. 25, 347–355 (2007).

Wang, X., Jiang, Y., Huang, M. & Zhang, H. Robust variable selection with exponential squared loss. J. Am. Stat. Assoc. 108, 632–643 (2013).

Friedman, J., Hastie, T. & Tibshirani, R. Additive logistic regression: a statistical view of boosting (with discussion and a rejoinder by the authors). The Annals Stat. 28, 337–407 (2000).

Yohai, V. J. High breakdown-point and high efficiency robust estimates for regression. The Annals Stat. 15, 642–656 (1987).

Emre, M., Ford, P. J., Bilgiç, B. & Uç, E. Y. Cognitive impairment and dementia in Parkinson’s disease: practical issues and management. Mov. disorders 29, 663–672 (2014).

Hall, C. B. et al. Dementia incidence may increase more slowly after age 90 results from the Bronx Aging Study. Neurol. 65, 882–886 (2005).

Alzheimer’s Association et al. 2009 Alzheimer’s disease facts and figures. Alzheimer’s & Dementia 5, 234–270 (2009).

Acknowledgements

We thank Wenjing Kong for her suggestions on the writing of this manuscript. This study is partially supported by the Ministry of Science and Technology of the PRC: Significant New Drug Development Construction of Technology Platform used for Original New Drug Research and Development (2012ZX09303-010-002), the Ministry of Education Project of Key Research Institute of Humanities and Social Sciences at Universities (16JJD910002), and the National Natural Science Foundation of China (81774146).

Author information

Contributions

Yang Li, Fang Lu, and Danhui Yi were responsible for the concept and study design; Yang Li, Zhang Zhang, and Qian Feng wrote the main manuscript text; and Zhang Zhang and Qian Feng prepared all the figures and tables. All authors reviewed the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, Y., Zhang, Z., Feng, Q. et al. An efficacy evaluation method for non-normal outcomes in randomized controlled trials. Sci Rep 9, 11393 (2019). https://doi.org/10.1038/s41598-019-47727-y

DOI: https://doi.org/10.1038/s41598-019-47727-y
