Abstract

The approximity of the inferior alveolar nerve (IAN) to the roots of lower third molars (M3) is a risk factor for the occurrence of nerve damage and subsequent sensory disturbances of the lower lip and chin following the removal of third molars. To assess this risk, the identification of M3 and IAN on dental panoramic radiographs (OPG) is mandatory. In this study, we developed and validated an automated approach, based on deep-learning, to detect and segment the M3 and IAN on OPGs. As a reference, M3s and IAN were segmented manually on 81 OPGs. A deep-learning approach based on U-net was applied on the reference data to train the convolutional neural network (CNN) in the detection and segmentation of the M3 and IAN. Subsequently, the trained U-net was applied onto the original OPGs to detect and segment both structures. Dice-coefficients were calculated to quantify the degree of similarity between the manually and automatically segmented M3s and IAN. The mean dice-coefficients for M3s and IAN were 0.947 ± 0.033 and 0.847 ± 0.099, respectively. Deep-learning is an encouraging approach to segment anatomical structures and later on in clinical decision making, though further enhancement of the algorithm is advised to improve the accuracy.

Similar content being viewed by others

Introduction

The removal of the third molar is one of the most frequently performed surgical procedures in oral surgery. In the United States 10 million third molars are removed from approximately 5 million people annually1 Across the past three generations, the worldwide rate of third molar impaction was hovering at around 24.4%2,3.

As with other forms of surgery, the surgical removal of the lower third molars is associated with risk for complications. One of the most distressing complications following the removal of lower third molars is damage to the inferior alveolar nerve (IAN)4. IAN injuries cause temporary, and in certain cases, permanent neurosensory impairments in the lower lip and chin5, with an incidence of 3.5% and 0.91% respectively6. Therefore, preventing damage to the IAN is of utmost importance in the daily clinical practice.

Conventional two-dimensional panoramic radiograph, the orthopantomogram (OPG), is the most commonly used imaging technique to assess third molars and their relationship to the mandibular canal7,8,9,10. Previous studies have demonstrated that certain radiographic features on OPGs, such as darkening of the root, narrowing of the mandibular canal, interruption of the white line, are risk factors for IAN injuries11,12,13. A recent meta-analysis reported that darkening of the roots had a high specificity in predicting IAN injury14. However, the predictive factor was not satisfactory as an evident heterogenicity was seen across all the included studies. Apparently, high intra-observer and inter-observer variability of the radiographic signs exists. Examiners are not always able to identify and assess the specific signs in a reliable way on OPGs8.

Prediction and evaluation modelling methods based on deep-learning have proven to be useful in solving complex multifactorial problems in medicine15. Deep-learning has been applied to identify pulmonary nodes on high-resolution CTs and state-of-the-art performance has been achieved16. Parallel, there may be a high potential for the implementation of deep-learning in the detection of third molars, mandibular canals and the identification of certain radiographic signs for potential IAN injuries. The combined use of deep learning and OPG may allow an improved risk assessment of IAN injuries prior to the removal of third molars.

The aim of this present study was to achieve an automated high-performance segmentation of the third molars, and the inferior alveolar nerves (IAN) on OPG images using deep-learning as a fundamental basis for an improved and more automated risk assessment of IAN injuries.

Results

Lower M3 dentition detection

The lower M3 had a mean DICE of 0.947 (SD = 0.049) for the training data and a DICE of 0.936 (SD = 0.025) for the validation data (Table 1). In the training data one M3 was missed. Although a mean lower M3 was composed of less than 1% of the total OPG pixels, a mean and median sensitivity of 95% was achieved in both the training and validation set (Fig. 3).

IAN detection

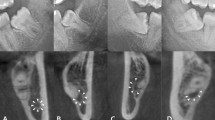

The IAN detection had a mean DICE of 0.768 (SD = 0.119) for the training data and a DICE of 0.805 (SD = 0.108) for the validation data (Table 1). Median values for training and validation data were slightly higher than the mean values, 0.789 and 0.856 respectively. The IAN detection scored the lowest of all the detection networks; a mean DICE of well above 0.75 was obtained. Optical inspection showed three types of position agreements between the automatically and manually segmented IAN: good segmentations had only minor flaws and none of them around the third molar (root) region, while mediocre cases had greater position disagreements and the third molar region was largely unaffected, and the group of poor segmentations showed inaccurate segmentation of IAN in the third molar region (Fig. 4). Considering the optical inspection as well as a sensitivity of 0.838 (SD = 0.132) for training data and 0.847 (SD = 0.099) for validation data, and a median of 0.866 and 0.865 respectively, the automated segmentations by using U-net were mostly satisfactory.

Discussion

Deep learning algorithms are evolving and are being increasingly applied in different medical fields, mainly to detect and segment clinically relevant anatomical structures or pathological changes, such as cancer17, tuberculosis18 or skin lesion19. However, the application of deep-learning in oral and maxillofacial surgery and dentistry is relatively scarce. Only the preliminary use of deep-learning in different topics such as caries detection or automatic dental radiography analysis has been described20.

Deep-learning is consisted of convolutional neural networks that is successfully applied to analyse visual imagery. CNN networks are able to detect and segment certain patterns in a large data set, such as a 2D radiograph or 3D CT scan. CNN can identify a group of pixels or voxels that make up either the contour or the interior of objects of interest. By changing certain characterises of the CNN architecture, the way of automatic segmentation can be adjusted to detect certain voxel patterns in a volume of interest15.

One of the most cited segmentation CNN applied in the medical field is the “U-net”. U-net has a simple and clear architecture and is aimed particularly at segmenting osseous and soft tissue structures. Compared to other CNNs, the accuracy of segmentation U-net is significantly better21. Ronneberger applied U-net to segment the enamel, dentine and pulp on dental radiographs (bitewings) and obtained a mean dice-coefficient of 0.5621. In some cases, a dice-coefficient of 0.70 was obtained, indicating the potential of further improvements of the U-net architecture.

The potential of applying U-net in the automated segmentation of third molars and IAN is investigated in the present study. By changing the U-net architecture and improvements in the training of U-net in performing segmentations, encouraging results have been obtained in both the identification and segmentation of third molars and IAN. However, in some cases, U-net was unable to locate and segment both predetermined anatomical structures satisfactorily. Several factors are associated with the underperformance. Firstly, the lack of contrast on the OPGs between the mandible and the mandibular canal complicated the task of segmentation for both the observer and the CNN. OPGs do not have constant image intensity in the region of the mandibular canals. Secondly, the mandibular canal varies significantly in its shape and location among patients17. Thirdly, since only one segmenting observer and one correcting observer segmented the OPGs only once a certain degree of interobserver and interobserver variability might occur leading to a potentially lower DICE-coefficient. The OPGs were initially scaled and cropped to a lower resolution than the native OPG resulting in a loss of data which can be useful for segmentation. Due to the high DICE-coefficient for both the overall dentition and M3s as well as the use of the near native resolution for the IAN with a lower DICE it seems that the resolution might not be a severe issue on the performance of the segmentation. In case the OPGs would be analysed in full detail it is possible to resort to more powerful PC’s or use the overlap-title strategy as originally used for the U-nets21.

There are several approaches to improve the segmentation of third molars and the IAN in particular. The first method to counteract the variety in shape and boundary on native images is by minimizing the region of interest. It is not necessary for the CNN to segment the complete mandibular canal. The only essential area for the risk assessment is the region of the third molar and its roots. The second suggestion is to increase the training data set with annotated OPGs. Although the applied augmentation due to rotation resulted in a high-performing segmentation in case of third molar, the accuracy for the IAN remained to be lower. The increase of annotated OPGs may lead to a better overall performance21. Thirdly, the use of DICOM file format for OPGs can enhance the segmentations. In this study, the OPGs were exported from the electronic patient files in JPEG files exports, resulting in 256 greyscale values with 8-bit per channel. An alternative to this file format is DICOM, which can natively hold a much larger range of greyscale values. Using these native DICOM files can potentially result in a higher contrast, making the IAN better to segment both manually and automatically. Another approach towards an enhanced IAN segmentation could be the use of cone-beam CT (CBCT) scans instead of OPGs. Among other segmentation techniques U-nets can also be applied to 3D volumetric shapes like CBCT-scans22. The CNN used in this study is specialized in segmentation based on shape. Yet, this CNN has another beneficial characteristic of taking topography into consideration. The problematic point of shape and boundary could also be solved when 3D data sets are provided, as the nerve appears as a circular cross-section in a CBCT scan. It could be possible that the 3D U-nets natively provide good segmentation in CBCT scans or that they yield the same shape and topology properties when used with double inputs. In this way, the anatomical positional relationship between the IAN and M3 could be evaluated. The main drawback of such an approach is the patient´s higher exposition to radiation.

It should be noted that alternative segmentation techniques exists to perform similar segmentations, such as watershed segmentation23, canny based segmentation24 or random forest regression voting constrained local models25, which were introduced as segmentation algorithms for OPGs in the past26,27,28. Since this study is more observational by character, comparative studies are required in the future, in order to the quality of different segmentation methods, especially semantic segmentation29,30, before the clinical implementation.

The encouraging results obtained from the present study in the segmentation of third molars and the IAN, was the first step on the way to a successfully implement deep-learning in daily clinical practice. The exact location and shape of the third molar roots in relation to the mandibular canal has to be determined accurately and in a reproducible way. Also risks patterns need to be identified. Individual risks need to be attributed to each factor and the CNN has to be able to sum up all the risk factors and the associated risks to give one final risk. Nevertheless the usage of deep-learning might not only provide professionals with additional information to optimize treatment planning and risk identification, but also automatically improve dental radiographs by dismissing unnecessary artefacts. The greatest advantage of deep-learning is the improvement in diagnostic without changing the present infrastructure and the wide range of application. For instance, the implementation of deep learning in caries management could enhance early caries detection and optimize the moment of intervention, thereby reduce the increasing global health burden induced by dental caries.

Material and Method

This study was conducted in accordance with the World Medical Association Declaration of Helsinki on medical research ethics. The approval of this study was waived by the Institutional Review Board (CMO Arnhem-Nijmegen) and informed consent were not required as all image data were anonymized and de-identifed prior to analysis (decision no. 2019–5232). All methods were carried out in accordance with relevant guidelines and regulations.

Patients

Orthopantomograms (OPGs) of patients who were admitted at the Department of Oral and Maxillofacial Surgery of Radboud University Nijmegen Medical Centre in 2017 were randomly selected. The inclusion criteria were the presence of at least 12 adjacent teeth with at most one missing element in between, at least one lower third molar and a minimum age of 16. Permanent fixed lingual retention wires and/or fillings are quite common in the (Dutch) population so OPGs containing these were not excluded to give a good societal representation. Blurred and incomplete OPGs were excluded from further analyses.

The collected data were de-identified and anonymized prior to analysis. Patients who signed the opt-out for anonymized research were also excluded conform the institution’s policy. Digital panoramic radiographs were acquired with a Cranex Novus e device (Soredex, Helsinki, Finland), operated at 77 kV and 10 mA, using a CCD sensor. The OPGs were taken with patients standing upright, head supported by the 5-point head support, with the upper and lower incisors biting gently into the bite block. A total of 81 OPGs were analysed.

M3 and IAN detection workflow

A workflow for the M3 detection and nerve segmentation was created using image processing and deep-learning networks (Fig. 1). In depth details of each step are described in the appendix. The core mechanic of the detection revolves around a deep-learning segmentation network called a U-net which is used in the subsequent steps21. The initial step 1 is the image acquisition and data pre-processing. This step prepares the data for standardizing the size and applying contrast enhancement to the OPG (Fig. 1). This is followed by the overall dentition detection (step 2) by providing the pre-processed OPG to a 6-layer deep-learning U-net. The lower M3 detection is executed in step 3 using the same pre-processed OPG from step 2 as well as the result (detected dentition mask) of step 2 by providing these to a specifically designed double input 6-layer U-net. Using the detected third molars in step 3 an automated crop of the original OPG is made in the fourth step 4. A margin extending caudally around the M3 is taken from the OPG to include a part of the IAN. Furthermore, the pre-processed OPG is also used to make a rough (low accuracy) extraction of the IANs in step 5 with a 5-layer U-net. The same cropped area of the original OPG around the third molar as taken in step 4 is also taken of the rough IAN segmentation of step 5 resulting in a cropped IAN for step 6. Finally in step 7 the refined IAN is detected in the crop from step 4 and step 6 using a 6-layer U-net. These steps result in the overall dentition (step 2), the isolated third molars (step 3) and the isolated IANs (step 7) which can be used for further analysis.

Deep-learning network training

Each step holding a Deep-Learning network (Steps 2, 3, 5 and 7) has been trained using annotated data. Training data were obtained by manual segmentations as well as the results of running the manual segmentations through the workflow. The specific training conditions and rules can be found in the Appendix (see supplementary data).

For the training data all present teeth in the maxilla and mandible, and the mandibular canal, were manually segmented one by one on OPGs. Each segmented tooth and mandibular canal was attributed with a distinct colour, and labelled according to the FDI tooth numbering system with Adobe Photoshop CC 2017 (Adobe System Incorporated, San Jose, California) by one observer (SV) (Fig. 2). A second observer (TX) checked the segmentations and made further refinements. The color-coded OPGs images were uniformly scaled and cropped to 2048 by 1024 pixels using Adobe Photoshop CC 2017.

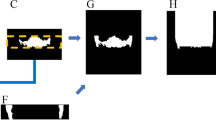

In the next step, all segmented teeth and the IAN were transformed to white and the background to black. In this way different Portable Network Graphics (PNG) files were created for the full dentition, and also separately for the lower third molars and the IAN.

The PNGs with all the segmented teeth were used as the gold standard for the training of the deep-learning network used in step 2 to obtain the full dentition. The PNGs with all the segmented teeth (as input) as well as those with the isolated third molars (as gold standard) were used for the first training of step 3. After the training reached its maximum DICE-coefficient31 using these data, the network was re-trained by using the result from step 2 in case all present M3’s were detected as an additional data input. For step 5 the network was trained using the manually segmented IANs on the whole OPGs resulting in low accuracy segmentations. The results of the low accuracy IANS from step 5 and M3s from step 3 were cropped in respectively step 6 and 4 and trained in step 7 until the maximum DICE- coefficient was achieved.

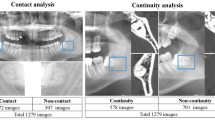

Deep learning training data split

The OPGs and crops were randomly split in a training group (70%) and a validation/test group (30%) prior to the data augmentation. Due to the amount of available scans the validation and test groups were taken as one single group. Data were subsequently checked for unequal distributions of missing third molars and corrected where needed.

Statistical analysis

The segmentations were assessed by determining the overlap between the gold standard and the deep-learning segmentation using DICE coefficient31. The mean, median, standard deviation, and 5–95% percentiles of all the DICE coefficients, Jaccard-indices, sensitivity and specificity were reported for a training and test subset for the M3s (step 3) and the segmented IANs (step 7). The Jaccard index and DICE-coefficient are interchangeable but reported both for convenience32.

Data Availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Friedman, J. W. The prophylactic extraction of third molars: A public health hazard. American Journal of Public Health 97, 1554–1559 (2007).

Carter, K. & Worthington, S. Morphologic and demographic predictors of third molar agenesis: A systematic review and meta-analysis. Journal of Dental Research 94, 886–894 (2015).

Santosh, P. Impacted mandibular third molars: Review of literature and a proposal of a combined clinical and radiological classification. Annals of Medical and Health Sciences Research, https://doi.org/10.4103/2141-9248.160177 (2015).

Hasegawa, T., Ri, S., Umeda, M. & Komori, T. Multivariate relationships among risk factors and hypoesthesia of the lower lip after extraction of the mandibular third molar. In Oral Surgery, Oral Medicine, Oral Pathology, Oral Radiology and Endodontology, https://doi.org/10.1016/j.tripleo.2011.02.013 (2011).

Ghaeminia, H. et al. Clinical relevance of cone beam computed tomography in mandibular third molar removal: A multicentre, randomised, controlled trial. Journal of Cranio-Maxillofacial Surgery 43, 2158–2167 (2015).

Gülicher, D. & Gerlach, K. L. Sensory impairment of the lingual and inferior alveolar nerves following removal of impacted mandibular third molars. International Journal of Oral and Maxillofacial Surgery 30, 306–312 (2001).

Eyrich, G. et al. 3-Dimensional imaging for lower third molars: Is there an implication for surgical removal? Journal of Oral and Maxillofacial Surgery, https://doi.org/10.1016/j.joms.2010.10.039 (2011).

Ghaeminia, H. et al. Position of the impacted third molar in relation to the mandibular canal. Diagnostic accuracy of cone beam computed tomography compared with panoramic radiography. International Journal of Oral and Maxillofacial Surgery 38, 964–971 (2009).

Matzen, L. H., Schou, S., Christensen, J., Hintze, H. & Wenzel, A. Audit of a 5-year radiographic protocol for assessment of mandibular third molars before surgical intervention. Dentomaxillofacial Radiology, https://doi.org/10.1259/dmfr.20140172 (2014).

Sanmartí-Garcia, G., Valmaseda-Castellón, E. & Gay-Escoda, C. Does computed tomography prevent inferior alveolar nerve injuries caused by lower third molar removal? Journal of Oral and Maxillofacial Surgery, https://doi.org/10.1016/j.joms.2011.03.030 (2012).

Ghaeminia, H. et al. The use of cone beam CT for the removal of wisdom teeth changes the surgical approach compared with panoramic radiography: A pilot study. International Journal of Oral and Maxillofacial Surgery, https://doi.org/10.1016/j.ijom.2011.02.032 (2011).

Pinto, P. X., Pinto, P. X., Mommaerts, M. Y., Wreakes, G. & Jacobs, W. V. G. J. A. Immediate postexpansion changes following the use of the transpalatal distractor. Journal of Oral and Maxillofacial Surgery, https://doi.org/10.1053/joms.2001.25823 (2001).

Rood, J. P. & Shehab, B. A. The radiological prediction of inferior alveolar nerve injury during third molar surgery. The British journal of oral & maxillofacial surgery 28, 20–5 (1990).

Liu, W., Yin, W., Zhang, R., Li, J. & Zheng, Y. Diagnostic value of panoramic radiography in predicting inferior alveolar nerve injury after mandibular third molar extraction: A meta-analysis. Australian Dental Journal 60, 233–239 (2015).

Litjens, G. et al. A survey on deep learning in medical image analysis. Medical Image Analysis 42, 60–88 (2017).

Shin, H. C. et al. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Transactions on Medical Imaging 35, 1285–1298 (2016).

Men, K. et al. Deep Deconvolutional Neural Network for Target Segmentation of Nasopharyngeal Cancer in Planning Computed Tomography Images. Frontiers in Oncology 7 (2017).

Foster, B. et al. Segmentation of PET images for computer-aided functional quantification of tuberculosis in small animal models. IEEE Transactions on Biomedical Engineering 61, 711–724 (2014).

Khalid, S. et al. Segmentation of skin lesion using Cohen–Daubechies–Feauveau biorthogonal wavelet. SpringerPlus 5 (2016).

Wang, C. W. et al. A benchmark for comparison of dental radiography analysis algorithms. Medical Image Analysis, https://doi.org/10.1016/j.media.2016.02.004 (2016).

Ronneberger, O., Fischer, P. & Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. Miccai 234–241 https://doi.org/10.1007/978-3-319-24574-4_28 (2015).

Çiçek, Ö., Abdulkadir, A., Lienkamp, S. S., Brox, T. & Ronneberger, O. 3D U-net: Learning dense volumetric segmentation from sparse annotation. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), https://doi.org/10.1007/978-3-319-46723-8_49 (2016).

Li, H., Sun, G., Sun, H. & Liu, W. Watershed algorithm based on morphology for dental X-ray images segmentation. In International Conference on Signal Processing Proceedings, ICSP, https://doi.org/10.1109/ICoSP.2012.6491720 (2012).

Karthikeyan, T. & Manikandaprabhu, P. A novel approach for inferior alveolar nerve (IAN) injury identification using panoramic radiographic image. Biomedical and Pharmacology Journal, https://doi.org/10.13005/bpj/613 (2015).

Vila Blanco, N., Tomás Carmona, I. & Carreira, M. Fully Automatic Teeth Segmentation in Adult OPG Images. Proceedings 2 (2018).

Lira, P., Giraldi, G. & Neves, L. A. Segmentation and Feature Extraction of Panoramic Dental X-Ray Images. IJNCR 1 (2010).

Na’am, J., Harlan, J., Madenda, S. & Wibowo, E. P. The Algorithm of Image Edge Detection on Panoramic Dental X-Ray using Multiple Morphological Gradient (mMG) Method. International Journal on Advanced Science, Engineering and Information Technology, https://doi.org/10.18517/ijaseit.6.6.1480 (2016).

Amer, Y. Y. & Aqel, M. J. An Efficient Segmentation Algorithm for Panoramic Dental Images. In Procedia Computer Science, https://doi.org/10.1016/j.procs.2015.09.016 (2015).

Arbelaez, P. et al. Semantic segmentation using regions and parts. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, https://doi.org/10.1109/CVPR.2012.6248077 (2012).

D. G., L. Distinctive Image Features from. International Journal of Computer Vision, https://doi.org/10.1023/B:VISI.0000029664.99615.94 (2004).

Dice, L. R. Measures of the Amount of Ecologic Association Between Species. Ecology, https://doi.org/10.2307/1932409 (1945).

Taha, A. A. & Hanbury, A. Metrics for evaluating 3D medical image segmentation: Analysis, selection, and tool. BMC Medical Imaging, https://doi.org/10.1186/s12880-015-0068-x (2015).

Author information

Authors and Affiliations

Contributions

Shankeeth Vinayahalingam: Study design, data collection, statistical analysis, writing the article. Tong Xi: Study design, statistical analysis, writing the article, supervision. Thomas Maal: Study design, article review, supervision. Stefaan Bergé: Supervision, writing article, article review. Guido de Jong: Study design, data collection, statistical analysis, writing the article.

Corresponding author

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vinayahalingam, S., Xi, T., Bergé, S. et al. Automated detection of third molars and mandibular nerve by deep learning. Sci Rep 9, 9007 (2019). https://doi.org/10.1038/s41598-019-45487-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-019-45487-3

This article is cited by

-

Patients’ perspectives on the use of artificial intelligence in dentistry: a regional survey

Head & Face Medicine (2023)

-

Automatic classification of 3D positional relationship between mandibular third molar and inferior alveolar canal using a distance-aware network

BMC Oral Health (2023)

-

An artificial intelligence study: automatic description of anatomic landmarks on panoramic radiographs in the pediatric population

BMC Oral Health (2023)

-

ResMIBCU-Net: an encoder–decoder network with residual blocks, modified inverted residual block, and bi-directional ConvLSTM for impacted tooth segmentation in panoramic X-ray images

Oral Radiology (2023)

-

Investigation of the best effective fold of data augmentation for training deep learning models for recognition of contiguity between mandibular third molar and inferior alveolar canal on panoramic radiographs

Clinical Oral Investigations (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.