Abstract

Coronary plaque burden measured by coronary computerized tomography angiography (CCTA), independent of stenosis, is a significant independent predictor of coronary heart disease (CHD) events and mortality. Hence, it is essential to develop comprehensive CCTA plaque quantification beyond existing subjective plaque volume or stenosis scoring methods. The purpose of this study is to develop a framework for automated 3D segmentation of the CCTA vessel wall and quantification of atherosclerotic plaque, independent of the amount of stenosis, while overcoming challenges caused by poor contrast, motion artifacts, severe stenosis, and degradation of image quality. Vesselness, region growing, and two sequential level sets are employed for segmenting the inner and outer wall to prevent artifact-defective segmentation. Lumen and vessel boundaries are joined to create the coronary wall. Curved multiplanar reformation is used to straighten the segmented lumen and wall using the lumen centerline. In-vivo evaluation included CCTA stenotic and non-stenotic plaques from 41 asymptomatic subjects with 122 plaques of different characteristics against the individual and consensus readings of expert readers. Results demonstrate that the framework segmentation performed robustly, providing a reliable working platform for accelerated, objective, and reproducible atherosclerotic plaque characterization beyond subjective assessment of stenosis; it is potentially applicable for monitoring response to therapy.

Introduction

Coronary heart disease (CHD) is the leading cause of death around the world1. The build-up of atherosclerotic plaques often results in severe coronary artery lumen stenosis which, in conjunction with plaque rupture, is the main etiology of angina, myocardial infarction, and sudden death2,3. The severity of the plaque build-up, also known as plaque burden, is multi-faceted, involving the evaluation of coronary artery plaque content, volume, distribution, and lumen stenosis. While stenosis directly affects the flow of blood in the coronary arteries, the spreading and composition of plaque may have a more abrupt and detrimental role in the development of CHD2,4. Small to moderate sized soft lipid-rich plaques can be more harmful than larger hard or calcified ones because of their increased vulnerability to rupture, resulting in thrombosis and sudden disruption of blood flow2,3. Additionally, due to the positive arterial remodeling5 in the early stages of plaque development, the change in lumen cross-sectional area may not be perceived by imaging, thereby concealing the actual size and vulnerability of plaques. In fact, plaques that are vulnerable to rupture are typically lipid-filled and non-stenotic and exist at many locations along the arteries6. Indeed, nearly half of sudden cardiac deaths occur in otherwise asymptomatic patients from culprit plaques that are not flow-limiting7. Therefore, measuring only the degree of stenosis is insufficient for complete assessment of the risk of CHD, particularly in the asymptomatic stages of the disease.

Assessment of plaque burden based on lumen stenosis scoring was driven by the availability of lumen-only data from conventional X-ray coronary angiography. With the advances in CCTA, 3D data now provide more in-depth insight about all components of plaque burden; the CCTA measurements have been shown to be more precise independent predictors of CHD than conventional risk metrics8,9. However, identifying plaque locations and sizes from CCTA is still a tedious manual task that is prone to subjective image interpretation. Hence, a more objective and user-agnostic method is desirable for measuring and documenting the extent of coronary plaque burden as identified by CCTA.

Background and Related Work

Many paradigms were introduced for coronary artery lumen segmentation and stenosis detection from CCTA images. Optical flow techniques use visual cues (such as local changes in the lumen inner diameter) to guide segmentation, whereas machine learning approaches attempt to learn features that may not be recognizable or perceivable to human experts. Examples of optical flow approaches include the use of a Corkscrew tracking-based vessel extraction technique10,11 for lumen segmentation and centerline calculation. Marquering et al.12 employed a fast marching level set to estimate the initial lumen contour and a model-guided minimum cost approach13 to find the final contour. Wang et al.14 started with an initial centerline and iteratively applied level set and distance transformations to estimate the final centerline and vessel border. Schaap et al.15 employed the intensities along a given centerline to guide a graph cut algorithm for lumen segmentation. These techniques were limited to specific plaque types, e.g., calcified plaques. They were also only demonstrated in a limited number of cases. The performance of these techniques was generally sensitive to the initial centerline accuracy, the length of the stenosis, and the artery cross section shape.

Machine learning-based techniques have been used to detect stenosis directly or segment lumen first then calculate the stenosis. Zuluaga et al.16,17 assumed the lesion is a local outlier compared to normal artery regions and employed intensity-based features with use of Support Vector Machines (SVM) to detect such outliers. The proposed metric was calculated in planes orthogonal to a given centerline, thus the final accuracy is also influenced by the given initial centerline. Further details about related coronary artery segmentation and stenosis detection techniques can be found in Kirisli et al.18.

Other approaches detect stenosis by first detecting plaques. Kitamura et al.19 proposed a multi-label graph cut technique based on higher-order potentials and Hessian analysis to detect stenosis that exceeded 20%. Kang et al.20 proposed a two-stage technique. In the first stage, two independent stenosis detectors were applied: an SVM and a formula-based analytic method. In the second stage, an SVM based decision fusion algorithm used the output of the two detectors to provide a more accurate detection for lesions with stenosis of greater than 25%. Sivalingam et al.21 proposed a hybrid segmentation technique to segment the vessel wall. This technique used active contour models and a random forest regression with the segmentation being evaluated on five arteries with calcified plaques, mixed, or both.

In addition to the focus on the detection of lumen stenosis, the majority of current techniques only detect stenosis that exceeds 20%. This presents little or no information about performance with small to moderate soft plaques that are an important contributor to CHD and predictor of future events, as noted earlier.

In this work, we propose the first framework for 3D coronary CTA wall and plaque segmentation, regardless of the degree of stenosis, with a particular interest in the soft plaques of all sizes that cause mild or insignificant lumen stenosis. We compare its performance in a cohort of asymptomatic CHD subjects against three-expert individual and consensus delineations of the outer and inner lumen wall in different plaque size categories.

Problem Definition

Unlike coronary lumen segmentation, which only focuses on delineating the high-intensity lumen at the inner wall border from the low-intensity wall tissue and surroundings, the segmentation of coronary artery wall and plaques from CCTA images is substantially more challenging. Current CTA systems provide a relatively high temporal resolution of around 66 ms and a spatial resolution of around 0.33 × 0.3 × 0.3 mm3, which are reasonable for the assessment of cardiac function and significant coronary artery stenosis. However, the available resolution restricts the accurate measurement of the vessel wall thickness, which typically ranges from 0.75 ± 0.17 mm for healthy segments (segments without plaques) to 4.38 ± 0.71 mm for segments with stenosis ≥40%22. Moreover, the current temporal resolution is not sufficient to eliminate all motion artifacts. The contrast between the vessel outer wall and the surrounding tissues is also substantially low, particularly when the vessel is very close to or surrounded by the myocardium and lacks surrounding adipose tissue. These many challenges increase the complexity of segmenting the vessel wall and small plaques and therefore mandate the utilization of a multi-discipline framework.

Strategy Outline

The proposed framework is split into two major parts: segmentation of the coronary wall and visualization of the output. Segmentation has four modules as shown in Fig. 1. These modules are: S1) Lumen “inner wall” initial contour using Hessian analysis and region growing; S2) Vessel “outer wall” initial contour using mathematical morphology; S3) Final lumen segmentation; and S4) Final vessel segmentation, both using level sets. Visualization includes: V1) Generating a lumen 3D mesh; V2) Generating a vessel 3D mesh, both using the marching cube methods; V3) Lumen centerline using computational geometry; and V4) Curved multi-planar reformation (CMPR).

The proposed framework will be described in the subsequent Methods and Materials section.

Results

Framework experiments and evaluation

The experiments were part of a study that was approved by the local institutional review board (IRB) at the National Institutes of Health, in compliance with the Declaration of Helsinki, and were performed in accordance with relevant guidelines and regulations. Forty-one asymptomatic CAD subjects (18 females) were included in the performance assessment after signing informed consent. Participants were recruited using local advertisement from year 2011 to year 2016 (ClinicalTrials.gov identifier: NCT01399385). The criteria for inclusion were: age ≥20 years, without obesity, diabetes mellitus, or known history of cardiovascular disease.

Error and reproducibility assessment

Two observer radiologists, each with over 15 years of experience in coronary CTA imaging and interpretation, were involved in the study as investigators and to assist in the reading of the data and the evaluation of the framework performance. CCTA images were acquired using a 64-slice multi-detector CT (MDCT) or higher using previously established techniques23,24,25. Agatston coronary calcium score (CaS) was obtained without contrast26, then a standard coronary CCTA was performed to obtain images of the coronary vessels using iodine contrast.

Coronary CTA plaque localization and characterization

Before applying the proposed segmentation framework, CCTA images were read by one of the radiologist co-authors to identify the segments of adequate diagnostic image quality and the extent of atherosclerotic plaque burden therein. Axial, multi-planar reformatted coronary images were obtained using a 3-dimensional software tool (Virtual Place; AZE, Tokyo, Japan)24. Additionally, both trans-axial and reformatted images were used, and a modified 16-segment model was used by the radiologists to visually identify coronary segments8,27. In each of the 16 coronary segments, plaques were scored by the radiologist for their overall characteristics including: plaque presence (0 = No, 1 = Yes), plaque type (0 = None, 1 = Soft, 2 = Mixed, 3 = Calcified), plaque volume (0 = None, 1 = Small/trace “≥1 to ≤24%”, 2 = Mild “≥25 to ≤49%”, 3 = Moderate “≥50 to ≤74%”, 4 = Large “≥75%”) relative to the segment length28,29, and plaque luminal stenosis severity (0 = None: 0% luminal stenosis, 1 = Mild: 1% to 49% luminal stenosis, 2 = Moderate: 50% to 69% luminal stenosis, or 3 = Severe: ≥70% luminal stenosis)9. Based on the radiologist's visual interpretation and assessment, a report for each subject was created listing the location, type, and size of each plaque, and the degree of stenosis caused by the plaque. Inclusion in the study stopped when at least 20 plaques of each volume category were obtained.

The framework execution begins when the radiologist first identifies the origins of the right coronary artery (RCA) and the left main coronary artery (LM) by selecting two points at their ostia. The coronary tree lumen and wall were then automatically extracted according to the framework in Fig. 1. Accordingly, lumen and vessel boundaries were delineated, centerlines were generated, and the vessels were straightened and presented to the radiologist as in Fig. 2. Next, the radiologist was asked to identify the proximal and lateral ends of each of the previously recorded plaques. Plaque wall 3D meshes, locations, and lengths were added to the records. Segments with inadequate diagnostic image quality or with vessel caliber less than 2.0 mm, exclusive of focal stenosis, were excluded from the assessment. Further detailed step-by-step routines and software library calls can be found in the attached appendix.

Comparison with Coronary CTA Consensus reading

Twenty-five arteries were chosen sequentially from 19 subjects containing seventy plaques including at least 15 plaques of each size (small, mild, moderate, large). Each 3D straightened artery was presented to the three observers as 5 longitudinal sections radially concentric at the centerline of the artery and at an angular gap of 36°. The observers were then asked individually to delineate the lumen and vessel contours unaware of the delineation results from the framework. A week later, the delineated plaques were randomly presented to observers together and a consensus reading was obtained, which will be considered the reference segmentation.

The blinded manual delineation of each observer as well as the automatic framework delineation were individually compared to the reference segmentation. Comparisons included the three extracted volumes; lumen, vessel, and wall, using the following similarity and error metrics.

Similarity:

Volume Mean Squared Error:

Relative Volume Error:

Here, \({V}_{t}\) is the set of test plaque volumes, which in this experiment is either one of the observers or the automatic framework volume. The set \({V}_{r}\) is the reference plaque volume set, which in this experiment is the volume obtained by consensus reading, and n is the number of plaques.
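The equation bodies for the three metrics are not reproduced in this text. As a minimal sketch, assuming the standard definitions (Dice overlap of voxel sets, mean squared volume difference, and mean absolute volume difference relative to the reference), they could be computed as follows. The function names and the representation of a segmentation as a set of voxel indices are illustrative assumptions, not the authors' implementation.

```python
def dice(test_voxels, ref_voxels):
    """Dice similarity between two segmentations given as sets of voxel indices."""
    test_voxels, ref_voxels = set(test_voxels), set(ref_voxels)
    total = len(test_voxels) + len(ref_voxels)
    if total == 0:
        return 1.0  # two empty segmentations agree trivially
    return 2.0 * len(test_voxels & ref_voxels) / total


def volume_mse(test_volumes, ref_volumes):
    """Mean squared difference between paired plaque volumes (assumed form)."""
    n = len(ref_volumes)
    return sum((t - r) ** 2 for t, r in zip(test_volumes, ref_volumes)) / n


def relative_volume_error(test_volumes, ref_volumes):
    """Mean absolute volume difference relative to the reference, in percent (assumed form)."""
    n = len(ref_volumes)
    return 100.0 * sum(abs(t - r) / r for t, r in zip(test_volumes, ref_volumes)) / n
```

Here `test_volumes` plays the role of \({V}_{t}\), `ref_volumes` the role of \({V}_{r}\), and `n` is the number of plaques.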

Significance of discrepancy between framework and observer

In many situations, the difference in delineation between the algorithm and the radiologist is either under the noise floor level or in regions of large uncertainty, and therefore neither delineation is superior to the other for adequate representation of a plaque. The goal of this experiment is to quantify the unacceptable segmentation error in our framework, that is, the error that represents a clear deviation from the true edges and requires correction by a radiologist. A larger sample of 122 plaques was sliced into 20 equally spaced longitudinal sections radially concentric at the centerline of the artery. The first radiologist reader was asked to manually adjust the framework-generated contours if needed.

The performance criteria in Eqs (1–3) were assessed as well as precision and sensitivity defined by

and

Here, \({V}_{t}\) is the test volume, which in this experiment is the automatic framework volume. \({V}_{r}\) is the reference volume, which in this experiment is the volume obtained by the expert radiologist after correcting the framework-generated volume.
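The precision and sensitivity formulas are likewise not reproduced in this text. A minimal sketch, assuming the usual overlap-based definitions (overlap divided by the test volume for precision, and by the reference volume for sensitivity), is shown below. The voxel-set representation and function names are assumptions made for illustration.

```python
def precision(test_voxels, ref_voxels):
    """Fraction of the test (framework) voxels that are also in the reference."""
    test_voxels, ref_voxels = set(test_voxels), set(ref_voxels)
    if not test_voxels:
        return 0.0  # nothing was segmented, so nothing was segmented correctly
    return len(test_voxels & ref_voxels) / len(test_voxels)


def sensitivity(test_voxels, ref_voxels):
    """Fraction of the reference (expert-corrected) voxels recovered by the test."""
    test_voxels, ref_voxels = set(test_voxels), set(ref_voxels)
    if not ref_voxels:
        return 0.0  # no reference voxels to recover
    return len(test_voxels & ref_voxels) / len(ref_voxels)
```

Under these definitions, over-segmentation lowers precision while under-segmentation lowers sensitivity, which is why the two are reported together with DICE.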

Framework execution

A typical execution of the framework is shown in Fig. 3, in which the original 3D coronary CTA is fed to the framework and the lumen and wall are then segmented. The lumen centerline is calculated and used to straighten the segmented lumen and wall as well as the original gray-level 3D image. The radiologist was able to visualize the different concentric longitudinal and parallel transverse cross-sections as shown in Fig. 4. The typical execution time of the proposed framework is 56 seconds per artery, while our expert radiologists took on average 9 minutes per artery, not including CMPR, to manually set the centerline and segment the lumen and vessel outer boundaries for five longitudinal sections.

In Vivo error and reproducibility assessment

Participants’ characteristics were the following: median age = 52 y.o. with the interquartile range (IQR) (43.6–58.0), median body mass index (BMI) = 25.26 (IQR: 23.34–28.44), median Framingham score = 2.75 (IQR: 0.30–7.42), and median Atherosclerotic Cardiovascular Disease (ASCVD) score = 3.67 (IQR: 0.90–6.30). 122 atherosclerotic plaques were identified. These included 95 soft plaques, 20 mixed, and 7 calcified. Based on size, 36 plaques were small, 34 mild, 30 medium, and 22 large. Based on degree of stenosis, plaques were categorized into 45 without stenosis, 58 mild, 13 moderate, and 6 severe.

Table 1 summarizes the performance of the three observers and the framework in segmenting the three coronary vessel volumes (lumen, vessel, and wall) relative to the consensus delineation. Automatic framework volumes were always superior to at least one observer. When compared to the three observers, the framework automatic segmentations ranked first with the least volume MSE; the errors were 8.0 mm3, 20.9 mm3, and 18.4 mm3 for the lumen, vessel, and wall, respectively. In the relative volume error, the framework ranked third in the lumen, second in the vessel, and first in vessel wall segmentation with relative errors of 26.1%, 21.5%, and 25.4%, respectively. Finally, for the DICE metric, the framework ranked third in lumen and wall, and ranked second in vessel segmentation with DICE coefficients of 81.1%, 60.2%, and 81.1%, respectively.

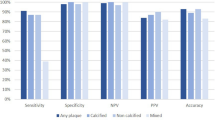

The results of the second experiment, which compared framework segmentation against manually supervised segmentation, are presented in this section. Plaque DICE measurements are presented in Fig. 5. In the small plaques, median DICE, PREC, and SENS scores were 90%, 92%, and 90%, respectively. The quality measures improved to above 95% for large plaques. The interquartile ranges (IQR) also narrowed substantially to near 5% as plaques got larger. Lumen and vessel DICE, PREC, and SENS trends were similar to those of plaque wall, albeit with higher medians (above 95%) and narrower IQRs that start around 6% and narrow to as small as 3% (results not shown). Framework and expert segmentations were highly correlated without substantial deviation. Actual and relative plaque volume errors were within ±10 mm3 and 12%, respectively (Fig. 6).

Discussion

Coronary artery disease (CAD) starts and continues to accumulate deposits of plaques silently for decades before the development of the symptoms. During this asymptomatic phase, plaques grow mainly outwards or with minimal stenosis. Many recent large clinical studies demonstrated that in asymptomatic subjects, plaque burden including number, size, in addition to the luminal encroachment of the plaques, is a strong independent predictor of future CAD events and mortality8,9,28.

While there are multiple studies that addressed the problem of coronary lumen stenosis detection, there are few that attempt to systematically delineate the coronary wall from CTA, particularly for asymptomatic CAD subjects. In this study, we developed and implemented a framework for automatic segmentation of the coronary artery wall from CCTA in asymptomatic subjects at low and intermediate Framingham risk profile. Yet, there is evidence that such subjects develop a substantial amount of plaque burden and even outward positive remodeling that causes no stenosis but may well rupture and become life-threatening. This group is of particular interest for a preventative approach towards early detection and quantification of CAD, thereby improving disease monitoring and treatment outcome. This is the stage at which intervention could be more successful and cost-effective.

CCTA often produces an inconsistent and shallow gradient of contrast between the coronary wall and surrounding tissues. Additionally, the thin coronary wall is surrounded by a background with a wide range of attenuations. Collectively, these present multiple unique challenges toward robust delineation of plaques and walls and therefore mandate the development of novel multi-algorithm processing approaches.

The first new approach is for extracting coronary lumen. In other vessel segmentation problems, it was sufficient to initialize the level set algorithm with seed points or with a rough inexact initial contour. However, that approach is not sufficient for coronary CTA and is prone to early failure and pre-mature termination where vessels at certain locations can be distorted due to motion artifacts, severe stenosis, or blooming artifacts due to large calcification. In the proposed framework, the initial contouring step involved filtering out vessel-like background structures and generating a more precise robust initial contour. These tasks were accomplished by combining vesselness and region growing as was shown in Figs 1 and 7.

Improvement in initial contours; avoiding false vesselness artifacts. Coronary branches in the original CCTA (A) and artefactual shapes are enhanced with vesselness (B). Level set segmentation based on vesselness mistakenly includes these false structures (C). Proposed framework correctly identifies the vessel from the other objects (D).

The second new approach presented in this work is for coronary outer wall segmentation in which the final level set lumen contour is utilized to generate the initial contour for the outer vessel boundaries. The resulting initial outer contour is quite close to the vessel outer boundary, including inside it all of the potential plaques and excluding surrounding fat. This initial outer contour was then used by the second level set routine to extract the final outer vessel wall.

While there are benchmarks for centerline calculation, lumen segmentation, and stenosis detection18,30, there is no benchmark yet for vessel wall and plaque segmentation. To validate our segmentation, we performed a blinded comparison of the three expert observers’ manual delineations and the framework segmentation against the expert observers’ consensus. The framework had the least volume MSE, the least wall relative error, and was better than at least one observer in the other metrics. Moreover, when the study senior radiologist with the closest performance to the consensus was asked to correct what was viewed as clearly wrong framework segmentation, the mean difference in DICE, precision, and sensitivity was always within 10% in each plaque category and got smaller as plaques got larger. These results demonstrate the framework’s successful performance and suggest that the framework segmentation can be a significant step in the CCTA plaque analysis workflow to accelerate the radiologist’s performance and reduce inter-observer variability.

Successful segmentation requires diagnostic quality images of the coronary tree. The performance of the framework may be compromised in certain situations, which can be grouped into three categories. The first category includes sites with motion-induced blurring artifacts, blooming artifacts from calcified plaque, or lack of perceived fat tissue between the artery and the myocardium. Neither a radiologist nor the algorithm can determine with certainty the extent of plaques in these circumstances. Yet, the presented framework will still preserve the continuity of the segmentation through these sites of coronary vasculature and proceed with segmenting the rest of the tree. The second category includes sites with severe motion artifacts that cause discontinuity of the artery, as shown in Fig. 8 left, or segments of the arteries that are tangent to the coronary veins, as shown in Fig. 8 right. Coronary veins have an HU range similar to the wall and plaque tissues and will be misclassified as plaques unless manually guided. A radiologist can correctly interpret this category with a higher degree of confidence than the framework, as it requires a higher order of spatial recognition than the framework currently has.

Examples of cases in which the framework will fail due to (a) severe motion artifacts, in which parts of the coronary artery are broken and discontinuous (yellow arrows), and (b) coronary veins that are in close proximity to or in contact with the arteries and are misclassified as plaques (red arrows).

In conclusion, automatic CCTA coronary wall segmentation with high performance compared to radiologist’s manual delineation is feasible regardless of the degree of stenosis. This framework provides a robust working platform for accelerated, objective, and reproducible atherosclerotic plaque characterization and quantification beyond subjective assessment of stenosis and can be potentially applicable for monitoring patients at CHD risk and the response to therapy.

Materials and Methods

The details of the framework’s modules are presented in this section. We first present the module for the initial inner and outer contour calculation. Next, we describe the segmentation and visualization modules. Specific implementation steps including subroutines, libraries, and toolkits of the proposed framework are listed in the appendix.

Initial contours

Level set techniques have been commonly used for solving 3D vessel segmentation problems because of their robustness and insensitivity to small intra-region intensity variations and their ability to overcome a suboptimal initialization31. Thus, our proposed framework uses level sets to identify the final segmentation of both the lumen and the vessel.

However, level sets alone will likely provide an inaccurate, and possibly completely erroneous, contour of the coronary lumen in situations with substantial artifacts or poor image acquisition quality. The small caliber of the coronary arteries, which is comparable in size to the imaging resolution, and the large underlying motion, along with the lumen narrowing due to stenosis, can cause large variations in lumen brightness in Hounsfield units (HU). These intra-lumen variations are comparable to the HU intensity variations between the lumen and the surrounding tissues. Examples of severe stenosis and motion artifacts are shown in Fig. 9 to demonstrate cases in which lumen HU drops substantially. In such cases, a level set lumen segmentation will terminate abruptly at the sites of severe motion artifacts or severe stenosis, even though the segments with artifacts are followed by other segments of diagnostic value. We avoid this early termination by preceding the level set segmentation with an automatically generated initial contour close to the lumen boundary, which serves as a guide to the level set segmentation and prevents it from terminating early in artifactual segments (Fig. 1).

In the ideal case, Gaussian functions can model the change of the lumen intensity profile across the vessel, while a low change in gray levels is expected in the longitudinal direction along the vessel. As a result, a zero-crossing of the first derivative associated with a peak in the second derivative is expected across the vessel lumen. Based on this principle, many techniques proposed second derivative analysis32,33,34 to enhance and/or detect the vessels.

Utilizing the Hessian matrix to perform second derivative analysis provides a rotation- and translation-invariant tool for pre-processing and enhancing tube-like shapes. However, it is not scale invariant, and scale invariance is important for dealing with multiple vessel sizes. Applying the second derivative after convolving the image with a set of Gaussian functions with different standard deviations can resolve this issue.

In this work, Frangi’s vesselness function34 is used to enhance the appearance of the coronary arteries in the input 3D CTA dataset. An example of the outcome of the vesselness filter is shown in Fig. 10 where all tube-like and sharp-curvature structures are enhanced compared to the background and other objects.
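To make the vesselness idea concrete, the following sketch evaluates the commonly cited 3D form of Frangi's measure for a bright tube on a dark background, given the three Hessian eigenvalues at one voxel and one scale. The parameter values (`alpha`, `beta`, `c`) and the function names are illustrative assumptions; the settings used in the framework are not stated in this section.

```python
import math


def frangi_vesselness(eigenvalues, alpha=0.5, beta=0.5, c=100.0):
    """Frangi-style vesselness for one voxel from its three Hessian eigenvalues."""
    l1, l2, l3 = sorted(eigenvalues, key=abs)  # |l1| <= |l2| <= |l3|
    # A bright tube needs two strongly negative eigenvalues; >= also avoids dividing by zero.
    if l2 >= 0 or l3 >= 0:
        return 0.0
    r_a = abs(l2) / abs(l3)                     # separates plates from tubes
    r_b = abs(l1) / math.sqrt(abs(l2 * l3))     # separates blobs from tubes
    s = math.sqrt(l1 * l1 + l2 * l2 + l3 * l3)  # overall second-order structure
    plate_term = 1.0 - math.exp(-(r_a ** 2) / (2.0 * alpha ** 2))
    blob_term = math.exp(-(r_b ** 2) / (2.0 * beta ** 2))
    structure_term = 1.0 - math.exp(-(s ** 2) / (2.0 * c ** 2))
    return plate_term * blob_term * structure_term


def multiscale_vesselness(eigenvalues_per_scale, **params):
    """Keep the strongest response over a set of Gaussian scales."""
    return max(frangi_vesselness(e, **params) for e in eigenvalues_per_scale)
```

Taking the maximum over scales is what addresses the lack of scale invariance noted above: each vessel caliber responds most strongly at the Gaussian scale closest to its own radius.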

The vesselness filter often enhances non-vessel structures as well. Particularly when coronary arteries pass near the borders of the heart-lung interface, these borders are erroneously enhanced by the vesselness filter creating false positive branches. Therefore, the output of this module is not ready to be used alone as an initial contour for segmentation with level sets. Figure 7(A) shows one of these cases where two branches of left coronary artery are near the heart-lung interface, the vesselness outcome is shown in Fig. 7(B). The figure shows the false positive branch that joins the two coronary branches.

To avoid such false vesselness-enhanced structures and to generate a more faithful initial contour, we propose adding a region-growing module to the framework as shown in Fig. 1. Region-growing is applied to both the original CTA image and the vesselness image independently. The two binary outputs are intersected thereafter. Applying region-growing on the vesselness image extracts the targeted coronary arteries, RCA, LCA, LCX and the false branches previously enhanced in the vesselness module. Applying region-growing on the original image, the output is a connected region that contains the coronary arteries, the aorta, and all other pixels connected to any of these arteries and have HU in the same range as blood HU. The intersection of both results provides a region representing the coronary arteries only without the false branches or the aorta and other connected pixels. Figure 7 demonstrates an example of this step. The vesselness filter applied to the original data generates false structures (Fig. 7(B)), which results in the wrong borders for the initial region (Fig. 7(C)). Correct borders for the region are identified from the proposed framework (Fig. 7(D)).
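The intersection step can be illustrated on a toy 2D grid. In this sketch, a simple breadth-first region growing is run once on HU values and once on vesselness values from the same seed, and the two masks are intersected. The grid contents, thresholds, seed position, and 4-neighbour connectivity are hypothetical choices made for illustration only.

```python
from collections import deque


def region_grow(image, seed, low, high):
    """Collect the 4-connected pixels reachable from seed with values in [low, high]."""
    rows, cols = len(image), len(image[0])
    grown = set()
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        if (r, c) in grown or not (0 <= r < rows and 0 <= c < cols):
            continue
        if not (low <= image[r][c] <= high):
            continue
        grown.add((r, c))
        queue.extend([(r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)])
    return grown


# Toy data: aorta (top-left block) and a vessel share blood-range HU values;
# the last column mimics a heart-lung border that vesselness falsely enhances.
cta = [
    [400, 400, 350, 350, -800],
    [400, 400, 50, 50, -800],
    [50, 50, 50, 50, -800],
    [50, 50, 50, 50, -800],
]
vesselness = [
    [0.1, 0.1, 0.9, 0.9, 0.8],
    [0.1, 0.1, 0.0, 0.0, 0.8],
    [0.0, 0.0, 0.0, 0.0, 0.8],
    [0.0, 0.0, 0.0, 0.0, 0.8],
]

seed = (0, 2)
blood_region = region_grow(cta, seed, 300, 500)          # vessel plus aorta
tubular_region = region_grow(vesselness, seed, 0.5, 1.0)  # vessel plus false branch
coronary_region = blood_region & tubular_region           # vessel only
```

In this toy case the aorta survives only in the HU mask and the false branch only in the vesselness mask, so the intersection keeps the two vessel pixels and nothing else.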

The final lumen inner wall contour is then calculated from the initial lumen contour using a level set method. The segmented lumen is used to calculate the initial vessel outer wall contour. Previous literature indicates large plaques causing severe stenosis ≥40% have a thickness of 4.38 ± 0.71 mm22. Consequently, we extend the segmented lumen by 5.5 mm using restricted morphological dilation. The restricted dilation excludes the surrounding fat regions, identified as pixels with negative HU, from the vessel initial contour. The resulting shape is considered the vessel initial outer contour that encloses the vessel and the plaques. In the segmentation module, this initial outer contour will shrink inward toward the actual outer boundaries of the vessel wall as described in the next section.
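One possible reading of the restricted dilation is a conditional (geodesic) dilation: the lumen mask grows one pixel layer at a time up to the requested distance, and pixels with negative HU are never entered. The sketch below implements that reading on a 2D grid with 4-neighbour growth; whether the framework grows step by step or dilates first and masks afterwards is not specified here, so this is an assumption, as are the function and parameter names.

```python
import math


def restricted_dilation(lumen, hu, distance_mm, spacing_mm):
    """Grow the lumen mask by about distance_mm without entering negative-HU (fat) pixels."""
    rows, cols = len(hu), len(hu[0])
    steps = math.ceil(distance_mm / spacing_mm)  # number of one-pixel growth layers
    region = set(lumen)
    frontier = set(lumen)
    for _ in range(steps):
        next_frontier = set()
        for r, c in frontier:
            for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                inside = 0 <= nr < rows and 0 <= nc < cols
                if inside and (nr, nc) not in region and hu[nr][nc] >= 0:
                    next_frontier.add((nr, nc))
        region |= next_frontier
        frontier = next_frontier
    return region
```

For example, with `hu = [[40, -80, 60, 300, 60, 40, 40]]`, a lumen at `(0, 3)`, 1 mm spacing, and a 2 mm distance, growth to the left stops at the fat pixel while growth to the right continues for two pixels.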

Segmentation

Segmentation of the lumen and vessel, which correspond to the inner and outer final contours, respectively, is performed using a level set method. We evolve two level sets in opposite directions; one from inside the lumen toward the true lumen boundary followed by the other level set starting from outside the vessel inward to the vessel boundary. The method takes the initial lumen and vessel contours as input and the feature image as guidance as described in the previous section (Fig. 1). The feature image is calculated by applying the sigmoid function on the gradient image.
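A sigmoid of the gradient magnitude is a common way to build a level set speed (feature) image: it is close to 1 in flat regions, where the front should move freely, and close to 0 at strong edges, where it should stop. The sketch below uses the parameterization 1 / (1 + exp(-(g - beta) / alpha)) with a negative `alpha`; the parameter names and the example values are assumptions, since the framework's settings are listed only in the appendix.

```python
import math


def sigmoid_feature(gradient_magnitude, alpha, beta):
    """Map a gradient magnitude to (0, 1); a negative alpha sends strong edges toward 0."""
    z = (gradient_magnitude - beta) / alpha
    # Evaluate in a numerically stable way for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def feature_profile(gradient_profile, alpha=-10.0, beta=50.0):
    """Apply the sigmoid to every sample of a 1D gradient-magnitude profile."""
    return [sigmoid_feature(g, alpha, beta) for g in gradient_profile]
```

Here `beta` sets the gradient value mapped to 0.5 and `abs(alpha)` sets how sharply the feature image switches between the "move" and "stop" regimes.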

Figure 11 shows examples of the line profiles of the HU image (top), the gradient image (middle), and the feature image (bottom) across the lumen and wall of a healthy vessel segment (Fig. 11(A)) and a segment with a non-calcified plaque of the same subject (Fig. 11(B)). The change in the HU across the healthy vessel wall is relatively symmetric, smooth, and steep, while the change through the plaque is slower and irregular. In both cases, points with maximum gradients cannot be used to reliably represent either the inner or the outer boundaries. First, the points with maximum HU gradient are of substantially lower HU intensity values than the center of the lumen. Second, in healthy cases, only one gradient peak exists, and it corresponds to neither the lumen inner wall nor the vessel outer wall. As shown in Fig. 11 (top), more accurate representations of the lumen and vessel boundaries are the inner and the outer shoulders of the HU profile, respectively.

Visualization

The second part of the framework is the visualization of results. Once the wall is segmented, the curved multiplanar reformation (CMPR) technique is used to straighten the original 3D dataset and the segmented lumen and wall30, using the lumen centerline as a guide.

The vessel centerline is calculated in two steps. First, the Marching Cubes algorithm is employed to extract the zero-level set, which is used to generate the 3D mesh of the inner surface of the vessel wall. Next, the centerline is obtained from the 3D mesh using the Voronoi diagram35. The resulting centerline is analyzed using the Frenet–Serret formulas36, which describe the geometry of the centerline at each point along it by three orthogonal unit vectors: the tangent, normal, and binormal. These vectors guide the CMPR of the original 3D input image, the inner level set image, and the outer level set image. An example of the centerline and CMPR output for the left coronary arteries is shown in Fig. 2, demonstrating the lumen centerlines, the different coronary segments, and their representations in the straightened vessels.
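The tangent, normal, and binormal vectors can be estimated from a sampled centerline by finite differences, as in the sketch below. This is an illustrative discretization under stated assumptions (uniformly sampled points, a hypothetical function name), not the framework's code. The Frenet frame is undefined where the curve is locally straight; there the normal returned here is close to zero rather than a unit vector.

```python
import numpy as np

def frenet_frames(points, eps=1e-12):
    """Estimate tangent T, normal N, binormal B at each centerline sample.

    points: (n, 3) array of centerline coordinates, assumed roughly
    uniformly sampled. Derivatives are taken with respect to the sample
    index, which changes magnitudes but not directions.
    """
    p = np.asarray(points, dtype=float)
    d1 = np.gradient(p, axis=0)
    T = d1 / np.linalg.norm(d1, axis=1, keepdims=True)
    dT = np.gradient(T, axis=0)
    # Remove any residual component along T before normalizing
    dT -= np.sum(dT * T, axis=1, keepdims=True) * T
    n = np.linalg.norm(dT, axis=1, keepdims=True)
    N = dT / np.maximum(n, eps)
    B = np.cross(T, N)
    return T, N, B
```

At each sample, the plane spanned by N and B is perpendicular to the centerline, so resampling the volume on that plane yields one cross section of the straightened vessel. On nearly straight segments the Frenet normal can flip abruptly, so a practical pipeline may need additional smoothing or a rotation-minimizing frame; whether the framework does this is not stated in the text.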

After the CMPR is calculated for the inner and outer level set images, Marching Cubes is applied to the 3D inner and outer segmented walls to extract the inner and outer contours, which are displayed as an overlay on the reformatted input image, as shown in Fig. 4. Finally, the average vessel wall thickness is calculated at every cross section and plotted along the longitudinal axis of the straightened artery.
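The text does not specify how the average thickness per cross section is computed. One simple possibility, shown here purely as an assumption, is to take the difference between the area-equivalent radii of the outer (vessel) and inner (lumen) masks in each straightened slice. The function name and argument layout are hypothetical.

```python
import numpy as np

def mean_wall_thickness(lumen_mask, vessel_mask, pixel_area_mm2):
    """Approximate mean wall thickness (mm) for each cross section.

    lumen_mask, vessel_mask: boolean arrays of shape (n_slices, rows, cols)
    taken from the straightened (CMPR) volumes, with slices along axis 0.
    Thickness is the difference of equivalent-circle radii, r = sqrt(A / pi).
    This is an illustrative choice, not the method confirmed by the paper.
    """
    lumen = np.asarray(lumen_mask, dtype=bool)
    vessel = np.asarray(vessel_mask, dtype=bool)
    lumen_area = lumen.reshape(lumen.shape[0], -1).sum(axis=1) * pixel_area_mm2
    vessel_area = vessel.reshape(vessel.shape[0], -1).sum(axis=1) * pixel_area_mm2
    r_inner = np.sqrt(lumen_area / np.pi)
    r_outer = np.sqrt(vessel_area / np.pi)
    return r_outer - r_inner
```

The returned array has one value per slice and can be plotted directly against the slice position along the straightened artery. For strongly eccentric plaques, an average of radial distances between the two contours would describe the wall better than this area-based estimate.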

Further details about the implementation subroutines and software libraries used in the construction of the framework can be found in the appendix.

References

Mortality, G. B. D. & Causes of Death, C. Global, regional, and national age-sex specific all-cause and cause-specific mortality for 240 causes of death, 1990–2013: a systematic analysis for the Global Burden of Disease Study 2013. Lancet 385, 117–171, https://doi.org/10.1016/S0140-6736(14)61682-2 (2015).

Falk, E., Shah, P. K. & Fuster, V. Coronary plaque disruption. Circulation 92, 657–671 (1995).

Farb, A. et al. Coronary plaque erosion without rupture into a lipid core. A frequent cause of coronary thrombosis in sudden coronary death. Circulation 93, 1354–1363 (1996).

Sangiorgi, G. et al. Arterial calcification and not lumen stenosis is highly correlated with atherosclerotic plaque burden in humans: a histologic study of 723 coronary artery segments using nondecalcifying methodology. Journal of the American College of Cardiology 31, 126–133 (1998).

Glagov, S., Weisenberg, E., Zarins, C. K., Stankunavicius, R. & Kolettis, G. J. Compensatory enlargement of human atherosclerotic coronary arteries. The New England journal of medicine 316, 1371–1375, https://doi.org/10.1056/NEJM198705283162204 (1987).

Libby, P., DiCarli, M. & Weissleder, R. The vascular biology of atherosclerosis and imaging targets. J Nucl Med 51(Suppl 1), 33S–37S, https://doi.org/10.2967/jnumed.109.069633 (2010).

Roger, V. L. et al. Heart disease and stroke statistics–2012 update: a report from the American Heart Association. Circulation 125, e2–e220, https://doi.org/10.1161/CIR.0b013e31823ac046 (2012).

Min, J. K. et al. Prognostic value of multidetector coronary computed tomographic angiography for prediction of all-cause mortality. Journal of the American College of Cardiology 50, 1161–1170, https://doi.org/10.1016/j.jacc.2007.03.067 (2007).

Min, J. K. et al. Age- and sex-related differences in all-cause mortality risk based on coronary computed tomography angiography findings results from the International Multicenter CONFIRM (Coronary CT Angiography Evaluation for Clinical Outcomes: An International Multicenter Registry) of 23,854 patients without known coronary artery disease. J Am Coll Cardiol 58, 849–860, https://doi.org/10.1016/j.jacc.2011.02.074 (2011).

Wesarg, S., Khan, M. F. & Firle, E. A. Localizing calcifications in cardiac CT data sets using a new vessel segmentation approach. Journal of digital imaging 19, 249–257, https://doi.org/10.1007/s10278-006-9947-6 (2006).

Wesarg, S. & Firle, E. A. Segmentation of vessels: The corkscrew algorithm. P Soc Photo-Opt Ins 5370, 1609–1620, https://doi.org/10.1117/12.535125 (2004).

Marquering, H. A., Dijkstra, J., de Koning, P. J., Stoel, B. C. & Reiber, J. H. Towards quantitative analysis of coronary CTA. The international journal of cardiovascular imaging 21, 73–84 (2005).

Sonka, M., Winniford, M. D. & Collins, S. M. Robust simultaneous detection of coronary borders in complex images. IEEE transactions on medical imaging 14, 151–161, https://doi.org/10.1109/42.370412 (1995).

Chunliang Wang, R. M. & Örjan, S. Vessel segmentation using implicit model-guided level sets. Proc. of MICCAI Workshop 3D Cardiovascular Imaging: A MICCAI Segmentation Challenge (2012).

Schaap, M. et al. Coronary lumen segmentation using graph cuts and robust kernel regression. Information processing in medical imaging: proceedings of the. conference 21, 528–539 (2009).

Zuluaga, M. A., Hush, D., Delgado Leyton, E. J., Hernandez Hoyos, M. & Orkisz, M. Learning from only positive and unlabeled data to detect lesions in vascular CT images. Medical image computing and computer-assisted intervention: MICCAI. International Conference on Medical Image Computing and Computer-Assisted Intervention 14, 9–16 (2011).

Zuluaga, M. A. et al. Automatic detection of abnormal vascular cross-sections based on density level detection and support vector machines. International journal of computer assisted radiology and surgery 6, 163–174, https://doi.org/10.1007/s11548-010-0494-8 (2011).

Kirisli, H. A. et al. Standardized evaluation framework for evaluating coronary artery stenosis detection, stenosis quantification and lumen segmentation algorithms in computed tomography angiography. Medical image analysis 17, 859–876, https://doi.org/10.1016/j.media.2013.05.007 (2013).

Kitamura, Y., Li, Y., Ito, W. & Ishikawa, H. Coronary lumen and plaque segmentation from CTA using higher-order shape prior. Medical image computing and computer-assisted intervention: MICCAI. International Conference on Medical Image Computing and Computer-Assisted Intervention 17, 339–347 (2014).

Kang, D. et al. Structured learning algorithm for detection of nonobstructive and obstructive coronary plaque lesions from computed tomography angiography. J Med Imaging (Bellingham) 2, 014003, https://doi.org/10.1117/1.JMI.2.1.014003 (2015).

Sivalingam, U. et al. 978502-978502-978508.

Fayad, Z. A. et al. Noninvasive in vivo human coronary artery lumen and wall imaging using black-blood magnetic resonance imaging. Circulation 102, 506–510 (2000).

Al-Mallah, M. H. et al. Does coronary CT angiography improve risk stratification over coronary calcium scoring in symptomatic patients with suspected coronary artery disease? Results from the prospective multicenter international CONFIRM registry. Eur Heart J Cardiovasc Imaging 15, 267–274, https://doi.org/10.1093/ehjci/jet148 (2014).

Gharib, A. M. et al. The feasibility of 350 μm spatial resolution coronary magnetic resonance angiography at 3 T in humans. Invest Radiol 47, 339–345, https://doi.org/10.1097/RLI.0b013e3182479ec4 (2012).

Gharib, A. M. et al. Coronary abnormalities in hyper-IgE recurrent infection syndrome: depiction at coronary MDCT angiography. AJR. American journal of roentgenology 193, W478–481, https://doi.org/10.2214/AJR.09.2623 (2009).

Agatston, A. S. et al. Quantification of coronary artery calcium using ultrafast computed tomography. Journal of the American College of Cardiology 15, 827–832 (1990).

Raff, G. L. et al. SCCT guidelines for the interpretation and reporting of coronary computed tomographic angiography. J Cardiovasc Comput Tomogr 3, 122–136, https://doi.org/10.1016/j.jcct.2009.01.001 (2009).

Johnson, K. M., Dowe, D. A. & Brink, J. A. Traditional clinical risk assessment tools do not accurately predict coronary atherosclerotic plaque burden: a CT angiography study. AJR Am J Roentgenol 192, 235–243, https://doi.org/10.2214/AJR.08.1056 (2009).

Johnson, K. M. & Dowe, D. A. Accuracy of statin assignment using the 2013 AHA/ACC Cholesterol Guideline versus the 2001 NCEP ATP III guideline: correlation with atherosclerotic plaque imaging. J Am Coll Cardiol 64, 910–919, https://doi.org/10.1016/j.jacc.2014.05.056 (2014).

Schaap, M. et al. Standardized evaluation methodology and reference database for evaluating coronary artery centerline extraction algorithms. Med Image Anal 13, 701–714, https://doi.org/10.1016/j.media.2009.06.003 (2009).

Lesage, D., Angelini, E. D., Bloch, I. & Funka-Lea, G. A review of 3D vessel lumen segmentation techniques: models, features and extraction schemes. Medical image analysis 13, 819–845, https://doi.org/10.1016/j.media.2009.07.011 (2009).

Lorenz, C., Carlsen, I.-C., Buzug, T. M., Fassnacht, C. & Weese, J. Multi-scale line segmentation with automatic estimation of width, contrast and tangential direction in 2D and 3D medical images. 1st Joint Conf. CVRMed and MRCAS (CVRMed/MRCAS’97), 233–242 (1997).

Sato, Y. et al. 3D multi-scale line filter for segmentation and visualization of curvilinear structures in medical images. Cvrmed-Mrcas’97 1205, 213–222 (1997).

Frangi, A. F., Niessen, W. J., Hoogeveen, R. M., van Walsum, T. & Viergever, M. A. Model-based quantitation of 3-D magnetic resonance angiographic images. IEEE transactions on medical imaging 18, 946–956, https://doi.org/10.1109/42.811279 (1999).

Attali, D. & Montanvert, A. Computing and Simplifying 2D and 3D Continuous Skeletons. Comput. Vis. Image Underst. 67, 261–273, https://doi.org/10.1006/cviu.1997.0536 (1997).

Kühnel, W. & Hunt, B. Differential geometry: curves, surfaces, manifolds. 3rd edn (American Mathematical Society, 2015).

Acknowledgements

This work was supported by the Intramural Research Program of the National Institutes of Health, National Institute of Diabetes and Digestive and Kidney Diseases.

Author information

Contributions

K.Z.A., A.M. Ghanem, and A.M. Gharib designed the study; A.M. Gharib, A.H.H., and J.R.M. performed the scans. A.M. Ghanem developed the framework. A.M. Ghanem, K.Z.A., R.M.E., A.M. Gharib, and A.H. analyzed the data. A.C., A.M. Ghanem, K.Z.A., and A.M. Gharib wrote and thoroughly revised the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ghanem, A.M., Hamimi, A.H., Matta, J.R. et al. Automatic Coronary Wall and Atherosclerotic Plaque Segmentation from 3D Coronary CT Angiography. Sci Rep 9, 47 (2019). https://doi.org/10.1038/s41598-018-37168-4

DOI: https://doi.org/10.1038/s41598-018-37168-4
