Abstract

Cooperation in collective action dilemmas usually breaks down in the absence of additional incentive mechanisms. This tragedy can be escaped if cooperators have the possibility to invest in reward funds that are shared exclusively among cooperators (prosocial rewarding). Yet, the presence of defectors who do not contribute to the public good but do reward themselves (antisocial rewarding) deters cooperation in the absence of additional countermeasures. A recent simulation study suggests that spatial structure is sufficient to prevent antisocial rewarding from deterring cooperation. Here we reinvestigate this issue assuming mixed strategies and weak selection on a game-theoretic model of social interactions, which we also validate using individual-based simulations. We show that increasing reward funds facilitates the maintenance of prosocial rewarding but prevents its invasion, and that spatial structure can sometimes select against the evolution of prosocial rewarding. Our results suggest that, even in spatially structured populations, additional mechanisms are required to prevent antisocial rewarding from deterring cooperation in public goods dilemmas.

Introduction

Explaining the evolution of cooperation has been a long-standing challenge in evolutionary biology and the social sciences1,2,3,4,5,6. The problem is to explain how cooperators, whose contributions to the common good benefit everybody in a group, can prevent defectors from outcompeting them, leading to a tragedy of the commons where nobody contributes and no common good is created or maintained7.

A solution to this problem is to provide individuals with additional incentives to contribute, thus making defection less profitable8, 9. Incentives can be either negative (punishment) or positive (rewards). Punishment occurs when individuals are willing to spend resources in order for defectors to lose even more resources10. Punishment can be stable against defection, since rare defectors are effectively punished11. However, to initially invade and resist invasion by individuals who cooperate but refrain from investing into incentives, i.e., second-order defectors, punishers must gain from punishing, for example, through reputational benefits in future interactions9, 12, 13.

Defection in collective action problems can also be prevented via positive incentives. Using rewards, cooperators can pay to increase the payoff of other cooperators. While the emergence of such behavior is usually favored, as there are very few cooperators to reward when cooperators are rare, it becomes increasingly costly to sustain as cooperators become more abundant in the population14. To resist second-order defectors, non-rewarding players must benefit less from rewards15. Alternatively, when both rewards and punishment are present, rewards can foster the emergence of punishment, which in turn can be stable provided second-order punishment is available16.

Individuals can either decide to impose incentives unilaterally, or they can pool their effort to impose incentives collectively. When acting collectively, individuals can be thought of as investing in a fund used to either punish defectors or reward cooperators; in the latter scenario one speaks of “prosocial rewarding”. These collective mechanisms can be viewed as primitive institutions, as group members both design and enforce the rules to administer incentives to overcome social dilemmas17. Pool rewards15, 18 are particularly interesting because they involve the creation of resources, as opposed to their destruction (as in punishment). Prosocial rewarding can favor cooperation only if non-rewarding players can be sufficiently prevented from accessing reward funds so that second-order defectors benefit less from rewards than do rewarders15. However, the presence of “antisocial rewarders”, i.e., individuals who do not contribute to the public good but reward themselves, destroys cooperation unless additional mechanisms, such as better rewarding abilities for prosocials, work in combination with exclusion19.

Pool rewards can also be viewed as a second collective action dilemma played exclusively among those players who made a similar choice in the first public goods game, i.e., cooperators with each other, and defectors with each other. The nature of this secondary collective action is not necessarily similar to that of the first public goods game, and might, for example, involve non-linear returns. This observation extends beyond human behavior, with situations where individuals are involved in different levels of social dilemmas being particularly likely in bacterial communities20. Indeed, many species of bacteria secrete public good molecules (e.g., iron-binding siderophores and other signaling molecules), which are susceptible to exploitation from both their own and other strains21,22,23. In addition, bacteria are also involved in within-species public goods games, as some of those public good molecules can also be strain-specific23, 24. Thus, the relevance of pool-reward mechanisms extends to non-human species.

A recent theoretical study by Szolnoki and Perc25 [hereafter, SP15] challenged the view that additional mechanisms are required to prevent antisocial rewarding from deterring cooperation in public goods games. Contrastingly, SP15 showed that, if individuals interact preferentially with neighbors in a spatially structured population, prosocial rewarding outcompetes antisocial rewarding and that increasing rewards is beneficial for prosocial rewarding. However, SP15 reached these conclusions by means of Monte Carlo simulations of a very specific model of spatial structure and evolutionary dynamics (a square lattice with overlapping groups and a Fermi update rule) where interacting groups are always equal to five. Hence, it remains unclear whether their results generalize to a broader range of spatial models.

Spatial structure can favor the evolution of cooperation. The main reason for this phenomenon is simple. When populations are spatially structured through limited dispersal, social interactions necessarily occur more often among relatives. Hence, kin selection is at work3, 26,27,28. However, spatial structure also means that competitors are also more often kin29. In certain models (such as an island model with Wright-Fisher demography30 or an evolutionary graph updated with a Moran birth-death process31, 32), these two effects cancel each other out and spatial structure has no effect on the evolution of cooperation. More generally, the net effect is not null, and its direction and magnitude can be often conveniently captured by a single “scaled relatedness coefficient”33. The scaled relatedness coefficient depends on the demographic assumptions of a given model, including the “update rule” used to implement the evolutionary dynamics, but is otherwise independent of the payoffs from the game used to model social interactions33,34,35,36,37.

Studies on spatial games and evolutionary graph theory have also investigated the effects of spatial structure on evolutionary game dynamics38,39,40,41. These studies have shown how particular features of the graph used to represent the population and the update rules can promote or hinder the evolution of cooperation. In particular, it has been shown that, assuming weak selection on discrete strategies and additive effects, the interplay between graph topology and update rule can be captured by a single “structure coefficient” independent of the underlying game42, 43. Importantly, the structure coefficient of evolutionary graph theory and the scaled relatedness coefficient of kin selection theory are connected by a simple transformation35. Therefore, using scaled relatedness as a measure of spatial structure allows one to capture a large variety of spatial models, including spatial games and evolutionary graphs.

Here, we formulate a mathematical model that clarifies the role of spatial structure for cooperation to be favored through pool rewarding. In contrast to SP15 [which, in the tradition of spatial games and evolutionary graphs38, 40, 41, 44, assume discrete strategies and strong selection] we assume continuous strategies and weak selection45, 46. Our different assumptions allow us to build on existing theoretical work36 to analytically derive the conditions under which cooperation is favored and to write them as functions of the parameters of the game (including the group size) and of a single “scaled relatedness coefficient”33, 35, 36, which serves as a natural measure of spatial structure. This allows us to make general predictions about the effect of spatial structure on cooperation, and to make connections between our results and the vast literature on social evolution theory3, 28, 47.

Model

Public goods game with prosocial and antisocial reward funds

We consider a collective action problem with an incentive mechanism based on reward funds following the model of SP15. Individuals interact in groups of size \(n\) and play a linear public goods game (PGG) followed by a rewarding stage with non-linear returns. There are two types of actions available to individuals: “rewarding cooperation” (RC, or “prosocial rewarding”), whereby a benefit \(r_1\gamma/n\) is provided to all group members (including the focal) at a cost \(\gamma\), and “rewarding defection” (RD, or “antisocial rewarding”), whereby no benefit is provided and no cost is paid. The parameter \(r_1\) is the multiplication factor of the PGG, and it is such that \(1 < r_1 < n\); this ensures that the first stage of the game is a prisoner’s dilemma, so that if rewards are absent rewarding cooperation is a dominated strategy.

Individuals choosing RC or RD also invest in their own reward funds. Each reward fund yields a per capita net reward \(r_2 - \gamma\) (reward benefit \(r_2\) minus cost of contributing to the reward pool \(\gamma\)) provided there is at least one other individual playing the same action among the \(n - 1\) other group members, and zero otherwise (i.e., self-rewarding is not allowed and the cost \(\gamma\) is paid only if the rewarding institution is created). For example, a focal individual playing RC will pay the cost and receive the reward only if there is at least one other RC player among its \(n - 1\) partners. This reflects a situation where reward funds yield non-linear returns, reminiscent of those of a volunteer’s dilemma48. Since the net reward \(r_2 - \gamma\) does not depend on the group size \(n\), \(r_2\) can in principle take any value greater than or equal to \(\gamma\). Note that individuals choosing the most common action are more likely to get the reward, even under random group formation. Hence, RC can prevail as long as its frequency in the global population is above one half and rewards outweigh the net cost of contributing to the PGG. However, if self-rewarding is allowed, cooperation is never favored even when all individuals play RC 19.

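With the previous assumptions, and letting without loss of generality \(\gamma = 1\), the payoffs for a focal individual choosing either RC or RD when \(k\) co-players choose RC (and \(n - 1 - k\) co-players choose RD) are respectively given by (cf. Equations 3.1, 3.2, and 3.3 in SP15):

\[
c_k = \begin{cases} r_1/n - 1 & \text{if } k = 0, \\ r_1 (k+1)/n + r_2 - 2 & \text{if } 1 \le k \le n - 1, \end{cases} \tag{1}
\]

and

\[
d_k = \begin{cases} r_1 k/n + r_2 - 1 & \text{if } 0 \le k \le n - 2, \\ r_1 (n-1)/n & \text{if } k = n - 1. \end{cases} \tag{2}
\]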

Note that if everybody plays RC, everybody gets a payoff \(c_{n-1} = r_1 + r_2 - 2\). Instead, if everybody plays RD, everybody gets \(d_0 = r_2 - 1\). Since \(r_1 > 1\), \(c_{n-1} > d_0\) holds for all admissible values of \(r_1\) and \(r_2\) (the difference is \(c_{n-1} - d_0 = r_1 - 1\)), which means that full prosocial rewarding Pareto dominates full antisocial rewarding: players are collectively better off if all play prosocial rewarding with probability one than if all play antisocial rewarding with probability one. Therefore, despite the presence of rewards available to both cooperators and defectors, the game we study retains the characteristics of a typical social dilemma where full cooperation by all individuals in the group yields higher payoffs than full defection by all individuals in the group. In such situations, it is usually expected that spatial structure facilitates the evolution of RC. As we show below, this is not always the case.
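
The payoff structure described above can be made concrete with a minimal Python sketch. The function names `payoff_rc` and `payoff_rd` are illustrative choices (they do not come from SP15), and the code assumes \(\gamma = 1\) together with the rule that a reward fund pays out only when at least one co-player chose the same action.

```python
def payoff_rc(k: int, n: int, r1: float, r2: float) -> float:
    """Payoff to a focal RC player when k of its n - 1 co-players play RC (gamma = 1)."""
    public_good = r1 * (k + 1) / n - 1.0          # PGG share minus the contribution cost
    reward = (r2 - 1.0) if k >= 1 else 0.0        # net reward needs one other RC player
    return public_good + reward


def payoff_rd(k: int, n: int, r1: float, r2: float) -> float:
    """Payoff to a focal RD player when k of its n - 1 co-players play RC (gamma = 1)."""
    public_good = r1 * k / n                      # RD players do not contribute to the PGG
    reward = (r2 - 1.0) if k <= n - 2 else 0.0    # net reward needs one other RD player
    return public_good + reward


n, r1, r2 = 5, 2.5, 4.5                           # illustrative parameter values
all_rc = payoff_rc(n - 1, n, r1, r2)              # everybody plays RC
all_rd = payoff_rd(0, n, r1, r2)                  # everybody plays RD
print(f"full RC payoff: {all_rc}, full RD payoff: {all_rd}")
```

With these values the full-RC payoff exceeds the full-RD payoff by \(r_1 - 1\), which is the Pareto-dominance property noted in the text.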

Spatial structure and evolutionary dynamics

We consider a homogeneous spatially structured population of constant and finite size \(N_{\rm T}\) where individuals interact with \(n - 1\) other individuals according to the game described above. The exact type of spatial structure can follow any of a large family of models, including variants of the island model49 and transitive evolutionary graphs50. In this last case, and for simplicity, we assume that individuals play a single \((k + 1)\)-player game with their \(k\) nearest neighbors, where \(k\) is the degree of the graph. All that is required for our analysis to be valid is that the selection gradient can be written in a form proportional to the gain function given by Eq. (3) below. We refer the interested reader to previous literature28, 33,34,35,36 for more details on this formalism and the models of spatial structure captured by our approach.

We assume that individuals implement mixed strategies, i.e., they play RC with probability z and RD with probability 1 − z, and investigate the evolutionary dynamics of the phenotype z. More specifically, we consider the fixation probability ρ(z, δ) of a single mutant playing z + δ in a resident population of phenotype z, take the phenotypic selection gradient \(\mathcal{S}(z)={(d\rho /d\delta )}_{\delta =0}\) as a measure of evolutionary success35, 51, and look into convergence stable strategies52 under trait substitution dynamics28.

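In order to evaluate the selection gradient, we make use of standard results regarding the evolution of a continuous phenotype in a spatially structured population28. Denoting by \(z_{\bullet}\) the phenotype of a focal individual, by \(z_{\circ}\) the average phenotype of the individuals it socially interacts with, and by \(f(z_{\bullet}, z_{\circ})\) the fecundity of the focal individual, and further assuming that fecundity is proportional to the expected payoffs from the game, the selection gradient \(\mathcal{S}(z)\) takes the form34

\[
\mathcal{S}(z) \propto \underbrace{\left.\frac{\partial f(z_{\bullet}, z_{\circ})}{\partial z_{\bullet}}\right|_{z_{\bullet} = z_{\circ} = z}}_{-\mathcal{C}(z)} + \kappa\, \underbrace{\left.\frac{\partial f(z_{\bullet}, z_{\circ})}{\partial z_{\circ}}\right|_{z_{\bullet} = z_{\circ} = z}}_{\mathcal{B}(z)} = \mathcal{G}(z), \tag{3}
\]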

where \(\mathcal{G}(z)\) is the “gain function”, which consists of three components: (i) the effect of the focal individual’s behavior on its fecundity (i.e., the “direct effect” \(-\mathcal{C}(z)\)), (ii) the effect of the co-players’ behavior on the focal individual’s fecundity (i.e., the “indirect effect” \(\mathcal{B}(z)\)), and (iii) a measure of relatedness between the focal individual and its neighbors, demographically scaled so as to capture the effects of local competition (i.e., the “scaled relatedness coefficient” κ).

For a matrix game with two pure strategies, such as the one we consider here, the direct and indirect effects appearing in Eq. (3) can be written, up to a constant factor, as36

\[
-\mathcal{C}(z) = \sum_{k=0}^{n-1} \binom{n-1}{k} z^{k} (1-z)^{n-1-k}\, \Delta_k, \tag{4a}
\]

\[
\mathcal{B}(z) = \sum_{k=0}^{n-1} \binom{n-1}{k} z^{k} (1-z)^{n-1-k}\, \Theta_k, \tag{4b}
\]

where

\[
\Delta_k = c_k - d_k \tag{5}
\]

are the “direct gains from switching” recording the changes in payoff experienced by a focal if it unilaterally switches its action from RD to RC when \(k\) co-players stick to RC and \(n - 1 - k\) stick to RD 53, and

\[
\Theta_k = k\,(c_k - c_{k-1}) + (n - 1 - k)\,(d_{k+1} - d_k) \tag{6}
\]

are the “indirect gains from switching” recording the changes in the total payoff accrued by co-players when the focal unilaterally switches its action from RD to RC 36 (terms multiplied by zero are dropped for \(k = 0\) and \(k = n - 1\)). Eq. (4) expresses \(-\mathcal{C}(z)\) and \(\mathcal{B}(z)\) as expected values of the direct and indirect gains from switching when the number of other individuals playing RC is distributed according to a binomial distribution with parameters \(n - 1\) and \(z\).
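
The two expectations just described can be evaluated numerically. The following is a minimal sketch, assuming the payoffs implied by the model description with \(\gamma = 1\) and taking the indirect gain as the summed payoff change of the \(n - 1\) co-players when the focal switches from RD to RC; all function names are hypothetical.

```python
from math import comb


def payoff_rc(k, n, r1, r2):
    """Focal plays RC while k co-players play RC (cost gamma = 1 assumed)."""
    return r1 * (k + 1) / n - 1 + ((r2 - 1) if k >= 1 else 0.0)


def payoff_rd(k, n, r1, r2):
    """Focal plays RD while k co-players play RC (cost gamma = 1 assumed)."""
    return r1 * k / n + ((r2 - 1) if k <= n - 2 else 0.0)


def direct_gain(k, n, r1, r2):
    """Change in the focal's own payoff when it switches from RD to RC."""
    return payoff_rc(k, n, r1, r2) - payoff_rd(k, n, r1, r2)


def indirect_gain(k, n, r1, r2):
    """Change in the co-players' total payoff when the focal switches from RD to RC."""
    gain = 0.0
    if k > 0:       # each of the k RC co-players gains one RC partner
        gain += k * (payoff_rc(k, n, r1, r2) - payoff_rc(k - 1, n, r1, r2))
    if k < n - 1:   # each of the n - 1 - k RD co-players gains one RC partner
        gain += (n - 1 - k) * (payoff_rd(k + 1, n, r1, r2) - payoff_rd(k, n, r1, r2))
    return gain


def binomial_expectation(gains, z):
    """Expected gain when the number of RC co-players is Binomial(n - 1, z)."""
    m = len(gains) - 1
    return sum(comb(m, k) * z**k * (1 - z) ** (m - k) * g for k, g in enumerate(gains))


def direct_and_indirect_effects(z, n, r1, r2):
    """Return the pair (-C(z), B(z)), up to a common positive factor."""
    minus_c = binomial_expectation([direct_gain(k, n, r1, r2) for k in range(n)], z)
    b = binomial_expectation([indirect_gain(k, n, r1, r2) for k in range(n)], z)
    return minus_c, b


print(direct_and_indirect_effects(0.5, 5, 2.5, 4.5))
```

Evaluating the gains term by term, rather than through a simplified closed form, keeps the sketch valid for any other payoff functions one may wish to substitute.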

A necessary and sufficient condition for a mutant with phenotype z + δ to have a fixation probability greater than neutral when δ is vanishingly small is that \(\mathcal{S}(z) > 0\) and hence that \(-\mathcal{C}(z)+\kappa \mathcal{B}(z) > 0\) holds. This condition can be interpreted as a scaled form of Hamilton’s rule33, 35. Importantly, the gain function \(\mathcal{G}(z)=-\mathcal{C}(z)+\kappa \mathcal{B}(z)\) allows one to identify “convergence stable” evolutionary equilibria54,55,56; these are given either by singular strategies \(z^{\ast}\) (i.e., the zeros of the gain function) satisfying \(\mathcal{G}^{\prime} ({z}^{\ast }) < 0\), or by the extreme points z = 0 (if \(\mathcal{G}(0) < 0\)) and z = 1 (if \(\mathcal{G}(1) > 0\)). Convergence stability is a standard way of characterizing long-term evolutionary attractors; a phenotype \(z^{\ast}\) is convergence stable if for resident phenotypes close to \(z^{\ast}\) mutants can invade only if mutants are closer to \(z^{\ast}\) than the resident52.
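
The classification rule stated above translates directly into a numerical procedure: locate the sign changes of the gain function and check the direction of each crossing. The sketch below is illustrative only; `toy_gain` is a hypothetical cubic chosen to have three interior zeros and is not the gain function of the model.

```python
def bisect_root(gain, lo, hi, tol=1e-12):
    """Find a zero of `gain` between lo and hi, where gain(lo) and gain(hi) differ in sign."""
    sign_lo = gain(lo) > 0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        g_mid = gain(mid)
        if g_mid == 0.0:
            return mid
        if (g_mid > 0) == sign_lo:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def interior_singular_points(gain, grid=1000):
    """Return (stable, unstable) interior zeros of `gain` on (0, 1)."""
    stable, unstable = [], []
    prev_z, prev_g = None, 0.0
    for i in range(grid + 1):
        z = i / grid
        g = gain(z)
        if g == 0.0:            # exact zero on the grid: wait for the next nonzero value
            continue
        if prev_z is not None and (g > 0) != (prev_g > 0):
            root = bisect_root(gain, prev_z, z)
            # gain crossing from + to - means G'(z*) < 0, i.e. convergence stable
            (stable if prev_g > 0 else unstable).append(root)
        prev_z, prev_g = z, g
    return stable, unstable


def stable_endpoints(gain):
    """z = 0 is stable if G(0) < 0; z = 1 is stable if G(1) > 0."""
    ends = []
    if gain(0.0) < 0:
        ends.append(0.0)
    if gain(1.0) > 0:
        ends.append(1.0)
    return ends


def toy_gain(z):
    """Hypothetical gain function with zeros at 0.2, 0.5 and 0.8."""
    return (z - 0.2) * (z - 0.5) * (z - 0.8)


stable, unstable = interior_singular_points(toy_gain)
print("stable interior:", stable, "unstable interior:", unstable)
print("stable endpoints:", stable_endpoints(toy_gain))
```

The grid scan only detects sign changes, so zeros of even multiplicity (where the gain function touches zero without crossing) would be missed; a finer treatment would be needed for such degenerate cases.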

Scaled relatedness

The coefficient κ appearing in Eq. (3) is the “scaled relatedness coefficient”, which balances the effects of both increased genetic relatedness and increased local competition characteristic of spatially structured populations29, 33,34,35, 37. The scaled relatedness coefficient has been calculated for many models of spatial structure for which Eq. (3) applies; see Table 2 of ref. 33, Table 1 of ref. 34, Appendix A of ref. 36, and references therein for some examples. We also note that by identifying

\[
\kappa = \frac{\sigma - 1}{\sigma + 1}, \tag{7}
\]

where σ is the so-called “structure coefficient”, the right hand side of Eq. (3) recovers the “canonical equation of adaptive dynamics with interaction structure” (ref. 43, Eq. 5). Table 1 of ref. 43 provides several examples of models of spatial structure and their respective values of σ; transforming these values via Eq. (7), the scaled relatedness coefficients for such models can be obtained in a straightforward manner.

Usually, κ takes a value between −1 and 1 depending on the demographic assumptions of the model, but it is always such that the larger it is the less genetic relatedness is effectively reduced by the extent of local competition. Importantly, the larger the magnitude of scaled relatedness κ the more important the role of the indirect effect \(\mathcal{B}(z)\) in the selection gradient. For this reason, we use scaled relatedness κ as a measure of spatial structure; we hence refer in the following to an increase in κ as an increase in spatial structure.

In the following, we present some examples to illustrate the connection between explicit spatial structure models and the formalism we use here, based on the scaled relatedness coefficient. For a well-mixed population or an island model with Wright-Fisher demography (such that generations are non-overlapping), the value of scaled relatedness is

\[
\kappa = -\frac{1}{N_{\rm T} - 1} \tag{8}
\]

(ref. 34, Eq. B.1). Contrastingly, in an island model with \(n_{\rm d}\) demes with \(N\) individuals each (so that \(N_{\rm T} = n_{\rm d} N\)) and a Moran demography (where adults have a positive probability of surviving to the next generation) scaled relatedness becomes

where \(m\) is the migration rate (ref. 34, Eq. B.2). In this case, κ decreases as the migration rate \(m\) increases; it follows that an island model with less migration has, according to our definition, more spatial structure. As a final example, consider a transitive evolutionary graph of size \(N_{\rm T}\) and degree \(k\) updated with a (death-birth) Moran demography. In this case we have

\[
\kappa = \frac{N_{\rm T} - 2k}{k\,(N_{\rm T} - 2)} \tag{10}
\]

(ref. 34, Eq. B.5 with m = 1; which can also be recovered from the value of σ given in Table 1 of ref. 43 after applying identity (7)). For this model of population structure, scaled relatedness decreases with the degree \(k\) (and is approximately \(1/k\) when \(N_{\rm T} \gg k\)). This means that, according to our terminology, graphs of larger degree (and hence more similar to a well-mixed population represented by a complete graph for which \(k = N_{\rm T} - 1\)) are characterized by smaller spatial structure.
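
The examples above can be checked numerically. The sketch below is hedged: the closed-form expressions coded here are assumptions chosen because they reproduce the values quoted elsewhere in the text (κ ≈ −0.0025 for a well-mixed population of 400 individuals, κ ≈ 0.2462 for a degree-4 lattice of the same size, and κ = 0.25 for degree 4 in a very large graph). The large-population structure coefficient \((k+1)/(k-1)\) for death-birth updating is likewise taken as an assumption, and the function names are hypothetical.

```python
def kappa_from_sigma(sigma: float) -> float:
    """Map a structure coefficient sigma to scaled relatedness (assumed identity)."""
    return (sigma - 1.0) / (sigma + 1.0)


def kappa_well_mixed(n_total: int) -> float:
    """Scaled relatedness of a finite well-mixed population (assumed form)."""
    return -1.0 / (n_total - 1)


def kappa_death_birth_graph(n_total: int, degree: int) -> float:
    """Scaled relatedness of a transitive graph under death-birth updating (assumed form)."""
    return (n_total - 2 * degree) / (degree * (n_total - 2))


print(kappa_well_mixed(400))                 # close to the quoted -0.0025
print(kappa_death_birth_graph(400, 4))       # close to the quoted 0.2462
print(kappa_from_sigma((4 + 1) / (4 - 1)))   # large-graph limit for degree 4
```

A complete graph (degree equal to the population size minus one) gives the same value as the well-mixed expression, which is a useful internal consistency check on the two assumed formulas.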

Results

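Calculating the gains from switching by first substituting Eqs (1) and (2) into Eqs (5) and (6), then substituting the resulting expressions into Eqs (4a) and (4b), and simplifying, we obtain that the gain function for the PGG with reward funds can be written as (see Methods)

\[
\mathcal{G}(z) = \frac{r_1}{n} - 1 + (r_2 - 1)\left[z^{n-1} - (1-z)^{n-1}\right] + \kappa\,(n-1)\left\{\frac{r_1}{n} + (r_2 - 1)\left[z(1-z)^{n-2} - z^{n-2}(1-z)\right]\right\}. \tag{11}
\]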

In the following, we identify convergence stable equilibria and characterize the evolutionary dynamics, first for well-mixed and then for spatially structured populations.

Infinitely large well-mixed populations

For well-mixed populations, and as \(N_{\rm T} \to \infty\), the scaled relatedness coefficient reduces to zero (Eq. (8)). In this case, the gain function simplifies to \(\mathcal{G}(z)=-\mathcal{C}(z)\) and we obtain the following characterization of the evolutionary dynamics (see Methods). If \(r_1/n + r_2 \le 2\), z = 0 is the only stable equilibrium, and RD dominates RC. Otherwise, if \(r_1/n + r_2 > 2\), both z = 0 and z = 1 are stable, and there is a unique \(z^{\ast} > 1/2\) that is unstable. In this case, the evolutionary dynamics are characterized by bistability or positive frequency dependence, with the basin of attraction of full RD (z = 0) being always larger than the basin of attraction of full RC (z = 1). Moreover, \(z^{\ast}\) (and hence the basin of attraction of z = 0) decreases with increasing \(r_1\) and \(r_2\). In particular, higher reward funds lead to less stringent conditions for RC to evolve. In any case, RC has to be initially common (z > 1/2) in order for full RC to be the final evolutionary outcome.
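
These statements can be traced through a short calculation. As a sketch, assume the payoffs implied by the model description with \(\gamma = 1\): a focal RC player earns \(r_1(k+1)/n - 1\), plus \(r_2 - 1\) if at least one co-player plays RC, and a focal RD player earns \(r_1 k/n\), plus \(r_2 - 1\) if at least one co-player plays RD. The gains to the focal from switching from RD to RC when \(k\) co-players play RC are then

\[
\Delta_0 = \frac{r_1}{n} - r_2, \qquad \Delta_k = \frac{r_1}{n} - 1 \quad (1 \le k \le n-2), \qquad \Delta_{n-1} = \frac{r_1}{n} + r_2 - 2,
\]

and averaging over a binomial number of RC co-players gives

\[
-\mathcal{C}(z) = \frac{r_1}{n} - 1 + (r_2 - 1)\left[z^{n-1} - (1-z)^{n-1}\right].
\]

Under this form, \(-\mathcal{C}(0) = r_1/n - r_2 < 0\) always holds (because \(r_1 < n\) and \(r_2 \ge 1\)), so z = 0 is stable, while \(-\mathcal{C}(1) = r_1/n + r_2 - 2\) is positive exactly when \(r_1/n + r_2 > 2\). For \(r_2 > 1\) the expression is increasing in z and equals \(r_1/n - 1 < 0\) at z = 1/2, so any interior zero is unique, unstable, and larger than 1/2. It solves

\[
z^{n-1} - (1-z)^{n-1} = \frac{1 - r_1/n}{r_2 - 1},
\]

whose right-hand side decreases with both \(r_1\) and \(r_2\); hence \(z^{\ast}\) decreases as either parameter increases.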

Spatially structured populations

Interactions in spatially structured populations (for which κ is not necessarily equal to zero) can dramatically alter the evolutionary dynamics of public goods with prosocial and antisocial rewards. In particular, we find that whether or not the extreme points z = 0 and z = 1 are stable depends on how the scaled relatedness coefficient κ compares to the critical values

\[
\kappa_{\ast} = \frac{n(2 - r_2) - r_1}{(n-1)\,r_1} \tag{12}
\]

and

\[
\kappa^{\ast} = \frac{n r_2 - r_1}{(n-1)\,r_1}, \tag{13}
\]

which satisfy \(\kappa_{\ast} \le \kappa^{\ast}\), in the following way (Fig. 1):

1. For low values of κ (\(\kappa < \kappa_{\ast}\)), full RD (z = 0) is stable and full RC (z = 1) is unstable.

2. For intermediate values of κ (\(\kappa_{\ast} < \kappa < \kappa^{\ast}\)), both full RD and full RC are stable.

3. For large values of κ (\(\kappa > \kappa^{\ast}\)), full RC is stable and full RD is unstable.

Figure 1. Phase diagrams illustrating the possible dynamical regimes of public goods games with prosocial and antisocial reward funds. Prosocial rewarding (RC) is stable if \(\kappa > \kappa_{\ast}\), while antisocial rewarding (RD) is stable if \(\kappa < \kappa^{\ast}\). The critical values \(\kappa_{\ast}\) and \(\kappa^{\ast}\) are functions of the public goods game multiplication factor \(r_1\), the reward benefit \(r_2\), and the group size \(n\), as given by Eqs (12) and (13). Increasing the reward benefit \(r_2\) makes it more difficult for both prosocial and antisocial rewarding to invade a population otherwise playing full antisocial and prosocial rewarding, respectively. Parameters: n = 5.

For a given group size \(n\) and PGG multiplication factor \(r_1\), \(\kappa_{\ast} = \kappa^{\ast}\) if and only if \(r_2 = 1\), i.e., if rewards are absent. In this case, full RD and full RC cannot be both stable.

Rewards have contrasting effects on \(\kappa_{\ast}\) (the critical scaled relatedness value below which full RC is unstable) and \(\kappa^{\ast}\) (the critical scaled relatedness value above which full RD is unstable). On the one hand, \(\kappa_{\ast}\) is decreasing in the reward benefit \(r_2\), so larger rewards increase the parameter space where full RC is stable. If spatial structure is maximal, i.e., κ = 1, the condition for full RC to be stable is \(r_1 + r_2 > 2\), which always holds. On the other hand, \(\kappa^{\ast}\) is an increasing function of \(r_2\). Hence, larger rewards make it harder for spatial structure to destabilize the full RD equilibrium, and hence for RC to invade. For κ = 1, full RD is still stable whenever \(r_1 < r_2\). Contrastingly, full defection can never be stable if κ = 1 in the absence of rewards (i.e., \(r_2 = 1\)) since, by definition, \(r_1 > 1\). From this analysis we can already conclude that even maximal spatial structure does not necessarily allow RC to invade and increase when rare. In addition, a minimum value of scaled relatedness is required for prosocial rewarding to be stable once it is fully adopted by the entire population.
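
The three regimes and the effect of rewards on the two thresholds can be explored with a few lines of Python. This is a sketch under stated assumptions: the threshold expressions are reconstructed by requiring the gain function to be negative at z = 0 (full RD stable) and positive at z = 1 (full RC stable) under the payoff description with \(\gamma = 1\), and they agree with the limiting statements in the text for κ = 1. The function names are hypothetical.

```python
def kappa_lower(n: int, r1: float, r2: float) -> float:
    """Assumed threshold above which full RC (z = 1) is stable."""
    return (n * (2.0 - r2) - r1) / ((n - 1) * r1)


def kappa_upper(n: int, r1: float, r2: float) -> float:
    """Assumed threshold above which full RD (z = 0) is unstable."""
    return (n * r2 - r1) / ((n - 1) * r1)


def regime(kappa: float, n: int, r1: float, r2: float) -> str:
    """Classify the stability of the two pure equilibria for a given kappa."""
    rc_stable = kappa > kappa_lower(n, r1, r2)
    rd_stable = kappa < kappa_upper(n, r1, r2)
    if rc_stable and rd_stable:
        return "full RD and full RC both stable"
    if rd_stable:
        return "only full RD stable"
    if rc_stable:
        return "only full RC stable"
    return "neither endpoint stable"


n = 5
for r1, r2 in [(2.5, 1.0), (2.5, 2.5), (4.5, 2.5), (1.25, 4.5)]:
    lower, upper = kappa_lower(n, r1, r2), kappa_upper(n, r1, r2)
    print(f"r1={r1}, r2={r2}: lower={lower:.3f}, upper={upper:.3f}, "
          f"kappa=0.8 -> {regime(0.8, n, r1, r2)}")
```

Because the upper threshold exceeds one whenever the reward benefit is larger than the multiplication factor, the sketch reports full RD as still stable at κ = 1 for such parameters, in line with the argument above.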

Let us now investigate singular strategies. Depending on the parameter values, there can be either zero, one, or three interior points at which the gain function (and hence the selection gradient) vanishes (see Methods). If there is a unique singular point, then it is unstable while z = 0 and z = 1 are stable, and the evolutionary dynamics is characterized by bistability. If there are three singular points (probabilities \(z_{\rm L}\), \(z_{\rm M}\), and \(z_{\rm R}\), satisfying \(0 < z_{\rm L} < z_{\rm M} < z_{\rm R} < 1\)), then z = 0, \(z_{\rm M}\), and z = 1 are stable, while \(z_{\rm L}\) and \(z_{\rm R}\) are unstable. In this case RD and RC coexist at the convergence stable mixed strategy \(z_{\rm M}\); a necessary condition for this dynamical outcome is both relatively large reward benefits and relatively large scaled relatedness.

We calculated the singular strategies numerically, as the equation \(\mathcal{G}(z)=0\) cannot be solved algebraically in the general case (Figs 2 and 3). Increasing scaled relatedness generally increases the parameter space where RC is favored. Yet, there are cases where increasing scaled relatedness can hinder the evolution of RC. Specifically, when the reward benefit is considerably larger than the public goods share, increasing scaled relatedness can increase the basin of attraction of the full RD equilibrium (Fig. 2c). Also, increasing rewards can be detrimental to RC in spatially structured populations by increasing the basin of attraction of full RD (Fig. 3c,f,h,i); this is never the case when there is no spatial structure (Fig. 3a,d,g). Finally, the best case scenario from the point of view of a rare mutant playing z = δ (where δ is vanishingly small) is in the absence of rewards (i.e., \(r_2 = 1\)), because that is the case where the required threshold value of scaled relatedness to favor prosocial rewarding is the lowest (i.e., where \(\kappa^{\ast}\) attains its minimum value in Eq. (13)).

Figure 2. Bifurcation plots illustrating the evolutionary dynamics of pool rewarding in spatially structured populations. The scaled relatedness coefficient serves as a control parameter. Arrows show the direction of evolution for the probability of playing prosocial rewarding. Solid (dashed) lines correspond to convergence stable (unstable) equilibria. In the left column panels (a,d,g), rewards are absent (i.e., \(r_2 = 1\)). In the middle column panels (b,e,h), \(r_2 = 2.5\). In the right column panels (c,f,i), \(r_2 = 4.5\). In the top row panels (a–c), \(r_1 = 1.25\). In the middle row panels (d–f), \(r_1 = 2.5\). In the bottom row panels (g–i), \(r_1 = 4.5\). In all panels, n = 5. A value of κ = 0 could correspond to an infinitely large well-mixed population (Eq. (8)); a value of κ = 0.25 could correspond to an evolutionary graph updated with a death-birth Moran model with \({N}_{{\rm{T}}}\gg k\) and k = 4 (Eq. (10)); a value of κ ≈ 0.167 could correspond to an infinite island model with deme size N = 5 and \(m\ll 1\) (Eq. (9)).

Figure 3. Bifurcation plots illustrating the evolutionary dynamics of pool rewarding in spatially structured populations. The reward benefit serves as a control parameter. Arrows show the direction of evolution for the probability of playing prosocial rewarding. Solid (dashed) lines correspond to convergence stable (unstable) equilibria. In the left column panels (a,d,g), there is no spatial structure (i.e., κ = 0). In the middle column panels (b,e,h), κ = 0.2. In the right column panels (c,f,i), κ = 0.8. In the top row panels (a,b,c), \(r_1 = 1.25\). In the middle row panels (d,e,f), \(r_1 = 2.5\). In the bottom row panels (g,h,i), \(r_1 = 4.5\). In all panels, n = 5.

In order to understand why, contrary to naive expectations, increasing spatial structure might sometimes select against RC, note first that the derivative of the gain function with respect to κ is equal to the indirect effect \(\mathcal{B}(z)\). This is nonnegative if

\[
\frac{r_1}{n} + (r_2 - 1)\, q(z) \ge 0, \qquad \text{where } q(z) = z(1-z)\left[(1-z)^{n-3} - z^{n-3}\right]. \tag{14}
\]

In the absence of rewards (i.e., \(r_2 = 1\)), condition (14) always holds. That is, increasing scaled relatedness always promotes cooperation when there are no rewards. In addition, when 0 ≤ z ≤ 1/2, the function q(z) is nonnegative, so that condition (14) holds and \(\mathcal{B}(z)\) is positive. Hence, increasing scaled relatedness is always beneficial for RC when such behavior is expressed less often than RD. However, increasing scaled relatedness might not always favor RC when such behavior is already common in the population, i.e., if z > 1/2. Indeed, when the multiplication factor of the PGG is relatively small and rewards are relatively large, condition (14) is not fulfilled for some z and \(\mathcal{B}(z)\) is negative for some probability of playing RC (Fig. 4).

Figure 4. Parameter space where condition (14) does not hold and increasing spatial structure is detrimental to prosocial rewarding for some values of the probability of playing prosocial rewarding, z. Parameters: n = 5.
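
Whether a given parameter combination falls in this region can be checked by scanning z for negative values of the indirect effect. The sketch below assumes that the indirect effect is proportional to \(r_1/n + (r_2 - 1)\,[z(1-z)^{n-2} - z^{n-2}(1-z)]\), a form that follows from the payoff description with \(\gamma = 1\); the function names and the grid resolution are illustrative choices.

```python
def indirect_effect(z: float, n: int, r1: float, r2: float) -> float:
    """Assumed closed form of the indirect effect B(z), up to a positive factor."""
    q = z * (1 - z) ** (n - 2) - z ** (n - 2) * (1 - z)
    return (n - 1) * (r1 / n + (r2 - 1) * q)


def structure_can_hurt(n: int, r1: float, r2: float, grid: int = 1000) -> bool:
    """True if B(z) < 0 for some z on a regular grid over [0, 1]."""
    return any(indirect_effect(i / grid, n, r1, r2) < 0 for i in range(grid + 1))


n = 5
for r1, r2 in [(1.25, 1.0), (1.25, 4.5), (4.5, 4.5)]:
    print(f"r1={r1}, r2={r2}: B(z) negative somewhere -> {structure_can_hurt(n, r1, r2)}")
```

In this sketch a small multiplication factor combined with a large reward benefit yields a negative indirect effect for some z above one half, whereas the indirect effect stays positive when rewards are absent.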

A closer look at the indirect gains from switching \(\Theta_k\) (Eq. 6) reveals why \(\mathcal{B}(z)\), and hence the effect of scaled relatedness on the selection gradient, can be negative for some z. The indirect gains from switching are nonnegative for all \(k \ne n - 2\). For \(k = n - 2\) and \(n \ge 4\) we have \(\Theta_{n-2} = (n - 1)r_1/n - r_2 + 1\), which is negative if

\[
r_2 > 1 + \frac{(n-1)\,r_1}{n} \tag{15}
\]

holds. Inequality (15) is hence a necessary condition for \(\mathcal{B}(z)\) to be negative for some z and for prosocial rewarding to fail to qualify as payoff cooperative or payoff altruistic [sensu ref. 36]. Indeed, when condition (15) holds and hence \(\Theta_{n-2} < 0\), prosocial rewarding cannot be said to be altruistic according to the “focal-complement” interpretation of altruism57, 58. This is because the sum of the payoffs of the \(n - 1\) co-players of a given focal individual, out of which \(n - 2\) play RC and one plays RD, is larger if the focal plays RD than if the focal plays RC. We also point out that RC is not altruistic according to an “individual-centered” interpretation58, 59 or “cooperative” [sensu ref. 60] if

\[
r_2 > 1 + \frac{r_1}{n}, \tag{16}
\]

since in this case the payoff to a focal individual playing RD as a function of the number of other players choosing RC in the group, \(d_k\) (see Eq. (2)), is decreasing (and not increasing) with k at \(k = n - 2\). Indeed, if condition (16) holds, players do not necessarily prefer other group members to play RC irrespective of their own strategy: a focal RD player would prefer one of its \(n - 1\) co-players to play RD rather than RC. In the light of this analysis, it is perhaps less surprising that for some parameters increasing spatial structure can be detrimental to the evolution of prosocial rewarding, even if prosocial rewarding Pareto dominates antisocial rewarding.

Individual-based simulations

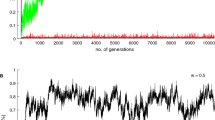

To test the validity of our mathematical model, we also ran individual-based simulations for a well-mixed population and a square lattice of size \(N_{\rm T} = 400\), both updated with a Moran death-birth rule (see Methods for details). As predicted by our mathematical analysis, the evolutionary dynamics are often characterized by a single interior convergence unstable point \(z^{\ast}\) (Fig. 5). When the phenotypic value \(z_0\) of the initially monomorphic population is below this point, selection tends to disfavor rewarding cooperation and the population converges to full RD (z = 0). Contrastingly, when the population starts with a phenotypic value larger than \(z^{\ast}\), selection tends to favor rewarding cooperation and the population converges to full RC (z = 1). In all cases, the convergence unstable strategy \(z^{\ast}\) resulting from our mathematical analysis is a very good predictor of the point at which selection changes direction in our individual-based simulations.

Figure 5. Evolution of the probability of playing rewarding cooperation in a simulated population of \(N_{\rm T} = 400\) individuals interacting in groups of size n = 5. Solid lines show the average phenotypic value of the population; gray dots show trait values of 10 individuals randomly sampled every \(N_{\rm T}\) time steps. Each set of solid line and gray dots represents one realization of the stochastic process starting with a different initial condition where the population is monomorphic for trait value \(z_0\). Dotted red lines represent the analytical prediction for the value of the convergence unstable interior point \(z^{\ast}\). Parameters: w = 10, μ = 0.01, ν = 0.05. Left panels (a,c): well-mixed population updated with a Moran death-birth process (κ ≈ −0.0025). Right panels (b,d): square lattice with periodic boundary conditions and von Neumann neighborhood, i.e., each node is connected to North, East, South, and West neighbors (κ ≈ 0.2462). Top row panels (a,b): \(r_1 = 4.5\), \(r_2 = 4.5\). Bottom row panels (c,d): \(r_1 = 1.1\), \(r_2 = 8.0\). In all cases, the analytical model predicts bistable evolutionary dynamics with a single convergence unstable equilibrium \(z^{\ast}\) dividing the basins of attraction of the two stable equilibria z = 0 and z = 1. (a) \(z^{\ast} \approx 0.5309\). (b) \(z^{\ast} \approx 0.2472\). (c) \(z^{\ast} \approx 0.606\). (d) \(z^{\ast} \approx 0.632\).
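
The interior points quoted in this caption can be cross-checked with a simple root finder. The sketch below assumes a closed-form gain function derived from the payoff description with \(\gamma = 1\) (a direct part plus κ times an indirect part) and uses plain bisection; the function names are hypothetical, and the κ values are the rounded ones quoted in the caption.

```python
def gain(z, kappa, n, r1, r2):
    """Assumed gain function: direct effect plus kappa times indirect effect."""
    direct = r1 / n - 1 + (r2 - 1) * (z ** (n - 1) - (1 - z) ** (n - 1))
    q = z * (1 - z) ** (n - 2) - z ** (n - 2) * (1 - z)
    indirect = (n - 1) * (r1 / n + (r2 - 1) * q)
    return direct + kappa * indirect


def unstable_interior_point(kappa, n, r1, r2, tol=1e-10):
    """Bisection for a zero of the gain function in the bistable case."""
    lo, hi = 0.0, 1.0
    if not (gain(lo, kappa, n, r1, r2) < 0 < gain(hi, kappa, n, r1, r2)):
        raise ValueError("endpoints are not both stable for these parameters")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if gain(mid, kappa, n, r1, r2) < 0:
            lo = mid        # selection still pushes towards z = 0 here
        else:
            hi = mid        # selection pushes towards z = 1 here
    return 0.5 * (lo + hi)


# panel: (kappa, r1, r2, interior point quoted in the caption)
panels = {
    "a": (-0.0025, 4.5, 4.5, 0.5309),
    "b": (0.2462, 4.5, 4.5, 0.2472),
    "c": (-0.0025, 1.1, 8.0, 0.606),
    "d": (0.2462, 1.1, 8.0, 0.632),
}
computed = {}
for name, (kappa, r1, r2, quoted) in panels.items():
    computed[name] = unstable_interior_point(kappa, 5, r1, r2)
    print(f"panel {name}: computed {computed[name]:.4f}, quoted {quoted}")
```

Comparing panels (c) and (d) in this sketch, the interior point is larger on the lattice than in the well-mixed population, so the basin of attraction of full RD grows with spatial structure for these parameter values.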

Discussion

We have investigated the effect of spatial structure on the evolution of public goods cooperation with reward funds. Measuring spatial structure by means of a scaled relatedness coefficient allowed us to capture both the effects of increased genetic assortment and increased local competition that characterize evolution in spatially structured populations. We have found that (i) prosocial rewarding cannot invade full antisocial rewarding unless scaled relatedness is sufficiently large, but (ii) increasing scaled relatedness can be detrimental to prosocial rewarding in cases where rewards are considerably larger than the public goods share. We have also demonstrated the contrasting effects of increasing rewards, which (iii) only benefits prosocial rewarding in well-mixed populations, but (iv) can also benefit antisocial rewarding in spatially structured populations. These results illustrate how pool-rewards introduce non-linearities to the public goods game, which in turn lead to counterintuitive effects of population structure on the evolutionary dynamics.

We found that increasing spatial structure can sometimes be detrimental to prosocial rewarding. We confirmed this analytical result with individual-based simulations, where we showed that the basin of attraction of full prosocial rewarding can be greater in a well-mixed population than in a square lattice with a Moran death-birth demography. This is a somewhat counterintuitive result, because spatial structure often favors the evolution of cooperation39, 41, 50, 60,61,62. However, previous studies have shown that increasing population structure can sometimes have a negative effect on the evolution of cooperative strategies34, 63, 64. For example, Hauert and Doebeli63 found that, when social interactions are modelled as a two-player snowdrift game between pure strategists under strong selection, well-mixed populations lead to higher levels of cooperation than square lattices. Peña et al.64 studied a multiplayer version of this game, but assuming weak selection and a death-birth Moran process, and found similar results. In our case, spatial structure can oppose prosocial rewarding because the indirect gains from switching from antisocial to prosocial rewarding can be negative. In particular, if individuals interact in groups of size n ≥ 4 and exactly n − 2 co-players choose rewarding cooperation while one co-player chooses rewarding defection, playing rewarding defection (RD) rather than rewarding cooperation (RC) might increase (rather than decrease) the payoffs of co-players. The reason is that, in this case, by choosing RD the focal player helps its RD co-player obtain the reward fund, while also allowing its RC co-players to keep theirs, as the focal contribution is not critical to the creation of the prosocial reward fund. If the reward benefit is so large that Eq. (15) holds, the benefit to the single RD co-player is greater than what everybody loses by the focal not contributing to the public good, and the sum of payoffs to co-players is greater if the focal plays RD than if it plays RC. This implies that, although prosocial rewarding Pareto dominates antisocial rewarding, it does not strictly qualify as being payoff altruistic or payoff cooperative36, hence the mixed effects of increasing spatial structure.
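As a worked illustration of this argument, consider the indirect gain from switching listed in the Methods for the case of exactly n − 2 co-players choosing RC (n ≥ 4). The threshold below is only our reading of the condition referred to as Eq. (15), which is not reproduced in this section, and should be taken as an assumption:

$$\Theta_{n-2}=\frac{(n-1)\,r_1}{n}-r_2+1<0\quad\Longleftrightarrow\quad r_2>1+\frac{(n-1)\,r_1}{n}.$$

For n = 5, r 1 = 1.1 and r 2 = 8.0 (the values of the bottom row of Fig. 5), the threshold is 1 + 0.88 = 1.88 and \(\Theta_3 = 0.88 - 8.0 + 1 = -6.12\), so the focal's switch to RC lowers the summed payoffs of its co-players. For r 1 = 4.5 and r 2 = 4.5 (top row), the threshold is 4.6 and \(\Theta_3 = 3.6 - 4.5 + 1 = 0.1\), which is still positive.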

Our results also revealed that higher values of the reward benefit r 2 make it more difficult for prosocial rewarding to invade from rarity in spatially structured populations. Indeed, the critical value of scaled relatedness required for prosocial rewarding to be favored over antisocial rewarding is greater in the presence of rewards than in their absence. This is important because rewards are meant to be mechanisms incentivizing provision in public goods games15, 18, rather than mechanisms that make it more difficult for collective action to emerge. Clearly, our result hinges on the assumption that prosocial rewarders and antisocial rewarders are both equally effective in rewarding themselves, i.e., that r 2 is the same for both prosocial and antisocial rewarders. Challenging this assumption by making investments in rewards contingent on the production of the public good, or by increasing the ability of prosocials to reward each other relative to that of antisocials19, will necessarily change this picture and promote prosocial rewarding in larger regions of the parameter space.

Although higher rewards prevent the initial invasion of prosocial rewarding, we have also shown that, once prosocial rewarding is common, higher rewards can further enhance the evolution of prosocial rewarding. These results are in line with the findings of SP15, who showed that larger rewards promote prosocial rewarding when spatial structure is sufficiently strong (their spatial model supports cooperation even in the absence of rewards) and the initial frequency of prosocial rewarding is relatively high (i.e., 1/4 in their simulations).

In contrast to the original model by SP15, which considered discrete strategies and strong selection, we assumed continuous mixed strategies and weak selection36. For well-mixed populations, it is well known that these different sets of assumptions lead to identical results under a suitable reinterpretation of the model variables53. Thus, our result that in this case there is a unique convergence unstable z * in mixed strategies also implies that the replicator dynamics for the two-strategy model will be characterized by an unstable rest point at a frequency z * of prosocial rewarders, and corroborates the numerical results presented in section 3.a of SP15. By contrast, for structured populations the invasion and equilibrium conditions between discrete- and mixed-strategy game models45, 46, 63 and between weak and strong selection models61 can differ. Hence, our results for spatially structured populations need not be identical to those reported in SP15. Importantly, our mathematical framework assumes that the population is essentially monomorphic. An initial state of, say, z = 1/3 in our Fig. 2 means that all individuals in the population play the same mixed strategy z = 1/3. Evolution then proceeds by means of a trait substitution sequence (TSS), whereby a single mutant (which we also assume plays a slightly different mixed strategy z + δ, where δ is small) will either become extinct or invade and replace the resident population65. If the latter happens, the resident strategy is updated to z + δ and the process starts again, until a convergence stable state is reached. 
The TSS assumption, common in adaptive dynamics and related mathematical methods studying the evolution of continuous traits in spatially structured populations28, 66, is then in stark contrast to the numerical simulations used in SP15 and related studies41, where evolution starts from a polymorphic population where a large number of mutants appear en masse either randomly or clustered together according to a given “prepared initial state”.

Finally, there is a slight difference in the way SP15 and our study (explicitly in our simulations and implicitly in our analytical model) implemented the evolutionary game on a graph. SP15 assume, in the tradition of other computational studies (e.g., refs 41, 67, 68), that a focal player’s total payoff is given by the sum of payoffs obtained in k + 1 different games, one “centered” on the focal player itself and the other k centered on its neighbors. As a result, a focal player interacts not only with first-order but also with second-order neighbors. An analytical treatment of such a case would need an extension of our framework to incorporate an additional scaled relatedness coefficient to account for interactions with second-order neighbors50. In contrast, we assumed that a focal player obtains its payoff from a single multiplayer game with its k immediate neighbors64, 69. This more parsimonious assumption allows us to analyze multiplayer interactions on graphs in a straightforward way (i.e., by allowing us to directly use the framework developed in ref. 36 requiring a single scaled relatedness coefficient) without substantially modifying the underlying evolutionary dynamics69.

We thus adopted a set of assumptions different from those of SP15 for the sake of both analytical tractability and wider applicability. An analytical solution of the model with discrete strategies (as in SP15) would require tracking higher-order genetic associations and effects of local competition, which can be a complicated task even in relatively simple models of spatial structure under weak selection61, 70,71,72,73. By contrast, assuming continuous strategies (and a single game per player) allowed us to identify convergence stable levels of prosocial rewarding in a wide array of spatially structured populations, each characterized by a particular value of scaled relatedness. This way, we made analytical progress going beyond the numerical results on a particular type of population structure (a square lattice with overlapping groups of size n = 5) studied in SP15. Furthermore, treating scaled relatedness as an exogenous parameter independent of specific demographic assumptions allows making more general predictions on the evolution of a trait, as well as relating results from different types of models74. Understanding the effect of more specific demographic parameters (such as the dispersal rate, the update rule, or the degree of the network) can be achieved by determining how scaled relatedness can be expressed in terms of those parameters, as we have shown above.

Our analytical results are valid only to the first order of δ (the difference between the trait of mutants and the trait of residents). As a result, we cannot evaluate whether or not the singular strategies we identify as convergence stable are also “evolutionarily stable” or “locally uninvadable”, i.e., whether a population monomorphic for a singular value will resist invasion by mutants with traits close to the singular value37, 52, 54, 75. This also means that our model does not allow one to check whether or not evolutionary branching (whereby a convergence stable but locally invadable population diversifies into differentiated coexisting morphs76) might occur. We hasten to note that such a drawback is not particular to our method28. Evolutionary stability in spatially structured populations is significantly more challenging to characterize than convergence stability37, 77,78,79,80 and is thus beyond the scope of the present paper.

A related issue has to do with our assumption that individuals play mixed strategies and hence that payoffs are linear in the focal’s own strategy. For this kind of model, “a peculiar degeneracy raises its ugly head”81, namely that the second-order condition to evaluate evolutionary stability in a well-mixed population is null. In turn, this implies that phenotypic variants at a singular point that is convergence stable are strictly neutral. Such degeneracy is however restricted to well-mixed populations, and does not necessarily apply to spatially structured populations. Indeed, the condition for uninvadability under weak selection in subdivided populations has been shown to depend also on mixed partial derivatives of the payoff function37, which in general are not zero. All in all, our view is that assuming individuals play mixed strategies of a matrix game is not that problematic: for well-mixed populations (where the degeneracy raises its head), the convergence stable mixed strategies can be reinterpreted as evolutionarily stable points of a replicator dynamics in discrete strategies; for spatially structured populations, there is simply no degeneracy. Future work should explore the conditions under which convergence stable mixed strategies of the model presented here and other matrix games are locally uninvadable.

Neither our model nor that of SP15 considers the presence of individuals who are able to benefit from reward funds without contributing to them. In other words, second-order defection is avoided by design. Allowing for second-order defection makes cooperation through pool rewarding vulnerable, even in the absence of antisocial rewarding15. Therefore, even though the conclusions of SP15 contradict the findings of dos Santos19, namely that antisocial rewarding deters cooperation except in certain conditions (e.g., better rewarding abilities for prosocials), SP15 did not investigate standard pool-rewarding15, 18, 19. Hence, their claim that spatial structure prevents antisocial rewarding from deterring cooperation, while not always true as we have shown here, does not apply to the more general case of pool-reward funds where second-order defection is allowed. Exploring the effects of spatial structure in these more realistic cases remains an interesting line of research.

To conclude, we find that antisocial rewarding deters the invasion of cooperation unless scaled relatedness is sufficiently high and rewards are relatively low, or ideally absent. We argue that additional countermeasures, such as exclusion and better rewarding abilities for prosocials19, are still required to (i) prevent antisocial rewarding from deterring cooperation between unrelated social partners, and (ii) allow prosocial rewarding to invade either when relatedness is low or when rewards are too large.

Methods

Gain function

To derive the gain function \(\mathcal{G}(z)\) (Eq. (11)), we first calculate the direct and indirect gains from switching (Eqs (5) and (6)) associated with the payoffs of the game. We find that the gains from switching depend on the group size n in the following way.

1. For n = 2: \((\Delta_0, \Delta_1) = (r_1/2 - r_2,\; r_1/2 + r_2 - 2)\) and \((\Theta_0, \Theta_1) = (r_1/2 - r_2 + 1,\; r_1/2 + r_2 - 1)\).

2. For n = 3: \((\Delta_0, \Delta_1, \Delta_2) = (r_1/3 - r_2,\; r_1/3 - 1,\; r_1/3 + r_2 - 2)\) and \((\Theta_0, \Theta_1, \Theta_2) = (2r_1/3,\; 2r_1/3,\; 2r_1/3)\).

3. For n = 4: \((\Delta_0, \Delta_1, \Delta_2, \Delta_3) = (r_1/4 - r_2,\; r_1/4 - 1,\; r_1/4 - 1,\; r_1/4 + r_2 - 2)\) and \((\Theta_0, \Theta_1, \Theta_2, \Theta_3) = (3r_1/4,\; 3r_1/4 + r_2 - 1,\; 3r_1/4 - r_2 + 1,\; 3r_1/4)\).

4. For n ≥ 5: \((\Delta_0, \Delta_1, \ldots, \Delta_{n-2}, \Delta_{n-1}) = (r_1/n - r_2,\; r_1/n - 1,\; \ldots,\; r_1/n - 1,\; r_1/n + r_2 - 2)\) and \((\Theta_0, \Theta_1, \Theta_2, \ldots, \Theta_{n-3}, \Theta_{n-2}, \Theta_{n-1}) = ((n-1)r_1/n,\; (n-1)r_1/n + r_2 - 1,\; (n-1)r_1/n,\; \ldots,\; (n-1)r_1/n,\; (n-1)r_1/n - r_2 + 1,\; (n-1)r_1/n)\).

Replacing the direct gains from switching Δ k into the expression for the direct effect \(-\mathcal{C}(z)\) (Eq. (4a)) and the indirect gains from switching Θ k into the expression for the indirect effect \(\mathcal{B}(z)\) (Eq. (4b)), and simplifying, we obtain the gain function \(\mathcal{G}(z)\) given in Eq. (11), which is valid for all n ≥ 2.
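The four cases above follow a single pattern, which the following minimal sketch uses to evaluate the gain function numerically. Since Eqs (4a), (4b) and (11) are not reproduced in this section, the sketch assumes that the direct and indirect effects are the expansions \(\sum_k \binom{n-1}{k} z^k (1-z)^{n-1-k}\Delta_k\) and \(\sum_k \binom{n-1}{k} z^k (1-z)^{n-1-k}\Theta_k\), and that \(\mathcal{G}(z) = -\mathcal{C}(z) + \kappa \mathcal{B}(z)\). The function names are illustrative and not part of the original analysis.

```python
from math import comb


def gains_from_switching(n, r1, r2):
    """Direct (delta) and indirect (theta) gains from switching for n >= 2.

    Reproduces the four listed cases with one construction: start from the
    generic entries, then adjust the entries affected by the reward funds.
    """
    if n < 2:
        raise ValueError("group size n must be at least 2")
    delta = [r1 / n - 1.0] * n
    delta[0] = r1 / n - r2
    delta[n - 1] = r1 / n + r2 - 2.0
    theta = [(n - 1) * r1 / n] * n
    theta[1] += r2 - 1.0      # k = 1: extra term + (r2 - 1)
    theta[n - 2] -= r2 - 1.0  # k = n - 2: extra term - (r2 - 1)
    return delta, theta


def gain(z, n, r1, r2, kappa):
    """Assumed form: G(z) = sum_k C(n-1, k) z^k (1-z)^(n-1-k) (delta_k + kappa * theta_k)."""
    delta, theta = gains_from_switching(n, r1, r2)
    return sum(
        comb(n - 1, k) * z**k * (1.0 - z) ** (n - 1 - k) * (delta[k] + kappa * theta[k])
        for k in range(n)
    )


if __name__ == "__main__":
    # Sign of G(z) along the unit interval for n = 5, r1 = r2 = 4.5, kappa = 0.
    for z in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(z, gain(z, n=5, r1=4.5, r2=4.5, kappa=0.0))
```

For n = 2 and n = 3 the two adjustments to `theta` land on overlapping or identical indices, which is what yields the special cases listed first; a negative value of `gain` at a given z means that selection disfavors an increase in z under the stated assumptions.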

Evolutionary dynamics for κ = 0

For κ = 0, the gain function \(\mathcal{G}(z)\) (Eq. (11)) reduces to \(-\mathcal{C}(z)\) (Eq. (4a)). This function is increasing and its end-points are given by \(-\mathcal{C}(0)={r}_{1}/n-{r}_{2}\) and \(-{\mathcal{C}}(1)={r}_{1}/n+{r}_{2}-2\). Since 1 < r 1 < n and r 2 > 1, \(-\mathcal{C}(0) < 0\) always holds and z = 0 is always stable. If r 1/n + r 2 ≤ 2, \(-\mathcal{C}(z)\) is nonpositive for all z and z = 0 is the only stable equilibrium. If r 1/n + r 2 > 2, \(-\mathcal{C}(1) > 0\) and z = 1 is also stable. In this case, and since \(-\mathcal{C}(z)\) is increasing, \(-\mathcal{C}(z)\) has a single zero z * in (0, 1) corresponding to an unstable equilibrium. Such a zero is given by the unique solution to

$$p(z)=\alpha ,$$

where \(p(z)={z}^{n-1}-{(1-z)}^{n-1}\) and \(\alpha =(n-{r}_{1})/[n({r}_{2}-1)]\).

Since p(z) is increasing in z, p(1/2) = 0, and α > 0 always holds, it follows that z * > 1/2. Additionally, since α is decreasing in both r 1 and r 2, z * is also decreasing in both r 1 and r 2.
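The location of z * for κ = 0 can be checked numerically with a simple bisection. The sketch below assumes \(p(z) = z^{n-1} - (1-z)^{n-1}\) and \(\alpha = (n - r_1)/[n(r_2 - 1)]\), which is what setting \(-\mathcal{C}(z) = 0\) gives when the direct gains from switching listed in the Gain function section are inserted into a Bernstein-type expansion. The function names and the tolerance are illustrative choices.

```python
def p(z, n):
    """Assumed left-hand side: increasing in z on [0, 1], with p(1/2) = 0 and p(1) = 1."""
    return z ** (n - 1) - (1.0 - z) ** (n - 1)


def alpha(n, r1, r2):
    """Assumed right-hand side: positive whenever r1 < n and r2 > 1."""
    return (n - r1) / (n * (r2 - 1.0))


def unstable_point(n, r1, r2, tol=1e-12):
    """Bisection for p(z) = alpha on (1/2, 1).

    Returns None when alpha >= 1, i.e. when r1/n + r2 <= 2 and no interior
    zero exists.
    """
    a = alpha(n, r1, r2)
    if a >= 1.0:
        return None
    lo, hi = 0.5, 1.0  # p(lo) = 0 < a < 1 = p(hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if p(mid, n) < a:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    print(unstable_point(5, 4.5, 4.5))
    print(unstable_point(5, 1.1, 8.0))
    print(unstable_point(5, 4.5, 6.0))  # larger r2 than in the first call
```

With n = 5, r 1 = 1.1 and r 2 = 8.0 the bisection returns a value of about 0.607, close to the z * ≈ 0.606 reported for the well-mixed population (κ ≈ −0.0025) in Fig. 5c. Raising r 2 from 4.5 to 6.0 at fixed r 1 lowers the returned value, in line with the monotonicity argument above.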

Evolutionary dynamics for κ ≠ 0

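Rearranging terms, the gain function \(\mathcal{G}(z)\) given by Eq. (11) can be alternatively written as

$$\mathcal{G}(z)=[1+(n-1)\kappa ]\frac{{r}_{1}}{n}-1+({r}_{2}-1)\,\mathcal{P}(z),$$

where

$$\mathcal{P}(z)=\sum _{k=0}^{n-1}\binom{n-1}{k}{z}^{k}{(1-z)}^{n-1-k}{\zeta }_{k}$$

is a polynomial in Bernstein form53 of degree n − 1 with coefficients given by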

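1. \((\zeta_0, \zeta_1) = (-(1+\kappa),\; 1+\kappa)\) if n = 2.

2. \((\zeta_0, \zeta_1, \zeta_2) = (-1,\; 0,\; 1)\) if n = 3.

3. \((\zeta_0, \zeta_1, \zeta_2, \zeta_3) = (-1,\; \kappa,\; -\kappa,\; 1)\) if n = 4.

4. \((\zeta_0, \zeta_1, \zeta_2, \ldots, \zeta_{n-3}, \zeta_{n-2}, \zeta_{n-1}) = (-1,\; \kappa,\; 0,\; \ldots,\; 0,\; -\kappa,\; 1)\) if n ≥ 5.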

The number of sign changes (and hence of singular points) of \(\mathcal{G}(z)\) is bounded from above by the number of sign changes of \(\mathcal{P}(z)\). Moreover, and by the variation-diminishing property of polynomials in Bernstein form53, the number of sign changes of \(\mathcal{P}(z)\) is equal to the number of sign changes of the sequence of coefficients (ζ 0, …, ζ n−1) minus an even integer. It then follows that the number of singular points is at most one if n ≤ 3 or if scaled relatedness is nonpositive, κ ≤ 0. In this case, the unique singular point z * is convergence unstable. However, if n ≥ 4 and κ > 0, there could be up to three singular points z L, z M, and z R satisfying 0 < z L < z M < z R < 1 such that z L and z R are convergence unstable and z M is convergence stable.
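The counting argument can be illustrated by building the coefficient sequence for a given n and κ and counting its sign changes. This is a minimal sketch with illustrative function names; zero coefficients are skipped when counting. The count is only the quantity used in the variation-diminishing argument above, not the actual number of singular points for a given pair of reward parameters.

```python
def zeta(n, kappa):
    """Bernstein coefficients (zeta_0, ..., zeta_{n-1}) as listed above, for n >= 2."""
    if n < 2:
        raise ValueError("group size n must be at least 2")
    coeffs = [0.0] * n
    coeffs[0] -= 1.0
    coeffs[n - 1] += 1.0
    coeffs[1] += kappa       # overlaps with the last entry when n = 2
    coeffs[n - 2] -= kappa   # overlaps with the first entry when n = 2
    return coeffs


def sign_changes(seq, eps=1e-12):
    """Number of sign changes in seq, ignoring entries that are (numerically) zero."""
    signs = [1 if x > 0 else -1 for x in seq if abs(x) > eps]
    return sum(1 for a, b in zip(signs, signs[1:]) if a != b)


if __name__ == "__main__":
    for n, kappa in [(2, 0.2), (3, 0.2), (4, 0.2), (5, 0.2), (5, -0.2), (5, 0.0)]:
        print(n, kappa, zeta(n, kappa), sign_changes(zeta(n, kappa)))
```

For n ≥ 4 and κ > 0 the sequence has three sign changes, while for n ≤ 3 or κ ≤ 0 it has a single one, matching the two cases distinguished in the text.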

Computer simulations

We performed individual-based simulations for a population composed of N T individuals, using NumPy version 1.11.1. Starting with a population monomorphic for a probability z = z 0 of playing rewarding cooperation, we track the evolution of the phenotypic distribution as mutations of small effect continuously arise. Each individual j = 1, …, N T is characterized by its probability z j. The average payoff of individual j is given by \({\pi }_{j}={\sum }_{k=0}^{n-1}{\varphi }_{k}\{{z}_{j}{c}_{k}+(1-{z}_{j}){d}_{k}\}\) where the payoff sequences c k and d k are respectively given by Eqs (1) and (2), and ϕ k is the probability that exactly k out of n − 1 of j’s neighbors choose action RC. Technically, ϕ k is the probability mass function of a Poisson binomial distribution with parameters \({z}_{{j}_{1}},{z}_{{j}_{2}},\ldots ,{z}_{{j}_{n-1}}\), where \({j}_{\ell }\) represents the \(\ell \)-th neighbor of individual j, and \({z}_{{j}_{\ell }}\) its phenotype. For simplicity, we approximate ϕ k by the probability mass function of a binomial random variable with parameters n − 1 and \(p={\sum }_{\ell =1}^{n-1}{z}_{{j}_{\ell }}/(n-1)\); this approximation to the true probability mass function is very accurate when the probabilities \({z}_{{j}_{\ell }}\) are close to each other. We update the population via a death-birth Moran process, i.e., we (i) randomly select one individual to die, and (ii) let one of its neighbors fill the vacated breeding spot, where each neighbor is chosen with a probability that increases with its average payoff. More specifically, assuming that j is chosen to die, its \(\ell \)-th neighbor is given a probability proportional to \(\exp (w{\pi }_{{j}_{\ell }})\) of replacing j, where w is the intensity of selection and \({\pi }_{{j}_{\ell }}\) is the payoff of the \(\ell \)-th neighbor of j. The vacated breeding spot is filled with the same phenotypic value as the parent with probability 1 − μ and with a mutated phenotypic value with probability μ. 
If mutation occurs, the mutated phenotypic value is given by the parent value plus a small perturbation sampled from a normal distribution with mean zero and standard deviation ν. The resulting phenotypes are truncated so that they are numbers between 0 and 1. The previous procedure is repeated for a sufficiently large number of time steps.
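The quality of the binomial approximation to ϕ k can be examined directly. The sketch below computes the exact Poisson binomial probability mass function by adding one neighbor at a time and compares it with a binomial whose number of trials equals the number of neighbors (an assumption of this sketch, one trial per co-player) and whose success probability is the mean neighbor phenotype. The example phenotypes are made up for illustration.

```python
from math import comb


def poisson_binomial_pmf(probs):
    """Exact pmf of the number of successes among independent Bernoulli(q) trials."""
    pmf = [1.0]  # with zero trials, zero successes has probability one
    for q in probs:
        new = [0.0] * (len(pmf) + 1)
        for k, mass in enumerate(pmf):
            new[k] += mass * (1.0 - q)   # this neighbor plays RD
            new[k + 1] += mass * q       # this neighbor plays RC
        pmf = new
    return pmf


def binomial_pmf(m, q):
    """pmf of a binomial random variable with m trials and success probability q."""
    return [comb(m, k) * q**k * (1.0 - q) ** (m - k) for k in range(m + 1)]


def max_abs_error(probs):
    """Largest pointwise gap between the exact pmf and its binomial approximation."""
    mean = sum(probs) / len(probs)
    exact = poisson_binomial_pmf(probs)
    approx = binomial_pmf(len(probs), mean)
    return max(abs(a - b) for a, b in zip(exact, approx))


if __name__ == "__main__":
    print(max_abs_error([0.50, 0.51, 0.49, 0.50]))  # similar phenotypes
    print(max_abs_error([0.05, 0.95, 0.10, 0.90]))  # very different phenotypes
```

The gap vanishes when all neighbors share the same phenotype and grows with the spread of neighbor phenotypes, which is why the approximation is suited to the nearly monomorphic populations simulated here.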

We simulated two models of spatial population structure: a square lattice with periodic boundary conditions (i.e., a type of transitive graph with k = 4) of N T = 400 nodes, and a well-mixed population of N T = 400 individuals where a given focal individual is assigned four randomly chosen other individuals as neighbors each time step. Other parameter values used were: w = 10, μ = 0.01, ν = 0.005.
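The procedure described above can be sketched for the lattice case as follows. This is not the code used for the reported simulations: it uses only the Python standard library rather than NumPy, covers only the square lattice, and assumes payoff sequences of the form \(c_k = r_1(k+1)/n - 1 + (r_2 - 1)\,[k \ge 1]\) and \(d_k = r_1 k/n + (r_2 - 1)\,[n-1-k \ge 1]\). These forms reproduce the gains from switching listed in the Gain function section but should be checked against Eqs (1) and (2). All function and parameter names are illustrative.

```python
import math
import random


def payoffs(n, r1, r2):
    """Assumed payoffs to RC (c) and RD (d) when k of the n - 1 co-players play RC."""
    c = [r1 * (k + 1) / n - 1.0 + (r2 - 1.0 if k >= 1 else 0.0) for k in range(n)]
    d = [r1 * k / n + (r2 - 1.0 if n - 1 - k >= 1 else 0.0) for k in range(n)]
    return c, d


def lattice_neighbors(side):
    """Von Neumann neighborhoods on a side x side lattice with periodic boundaries."""
    nb = []
    for i in range(side * side):
        x, y = divmod(i, side)
        nb.append([((x - 1) % side) * side + y, ((x + 1) % side) * side + y,
                   x * side + (y - 1) % side, x * side + (y + 1) % side])
    return nb


def avg_payoff(j, z, nb, c, d):
    """Average payoff of j in one game with its neighbors (binomial approximation)."""
    m = len(nb[j])
    q = sum(z[i] for i in nb[j]) / m
    phi = [math.comb(m, k) * q**k * (1.0 - q) ** (m - k) for k in range(m + 1)]
    return sum(phi[k] * (z[j] * c[k] + (1.0 - z[j]) * d[k]) for k in range(m + 1))


def moran_step(z, nb, c, d, w, mu, nu, rng):
    """One death-birth update: a random individual dies, a neighbor reproduces."""
    dead = rng.randrange(len(z))
    weights = [math.exp(w * avg_payoff(i, z, nb, c, d)) for i in nb[dead]]
    parent = rng.choices(nb[dead], weights=weights)[0]
    child = z[parent]
    if rng.random() < mu:
        # Gaussian mutation of small effect, truncated to the unit interval.
        child = min(1.0, max(0.0, child + rng.gauss(0.0, nu)))
    z[dead] = child


def simulate(z0, side=20, r1=4.5, r2=4.5, w=10.0, mu=0.01, nu=0.005,
             generations=10, seed=1):
    """Mean trait value after `generations` blocks of side * side update steps."""
    rng = random.Random(seed)
    nb = lattice_neighbors(side)
    c, d = payoffs(len(nb[0]) + 1, r1, r2)  # group size n = neighbors + focal
    z = [z0] * (side * side)
    for _ in range(generations * side * side):
        moran_step(z, nb, c, d, w, mu, nu, rng)
    return sum(z) / len(z)


if __name__ == "__main__":
    print(simulate(0.2), simulate(0.8))
```

The default of ten generations only keeps the example fast. Observing convergence to z = 0 or z = 1 from a given z 0 would require far longer runs, and the well-mixed case would additionally require redrawing four random neighbors for the focal individual at each time step.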

References

Axelrod, R. The evolution of cooperation (Basic Books, New York, NY, 1984).

Sugden, R. The economics of rights, cooperation and welfare (Blackwell, Oxford and New York, 1986).

Frank, S. A. Foundations of social evolution (Princeton University Press, Princeton, NJ, 1998).

Lehmann, L. & Keller, L. The evolution of cooperation and altruism: a general framework and a classification of models. Journal of Evolutionary Biology 19, 1365–1376 (2006).

West, S. A., Griffin, A. S. & Gardner, A. Evolutionary explanations for cooperation. Current Biology 17, R661–R672 (2007).

Sigmund, K. The calculus of selfishness (Princeton University Press, 2010).

Hardin, G. The tragedy of the commons. Science 162, 1243–1248 (1968).

Oliver, P. Rewards and punishments as selective incentives for collective action: theoretical investigations. American Journal of Sociology 85, 1356–1375 (1980).

Hilbe, C. & Sigmund, K. Incentives and opportunism: from the carrot to the stick. Proceedings of the Royal Society B: Biological Sciences 277, 2427–2433 (2010).

Sigmund, K. Punish or perish? Retaliation and collaboration among humans. Trends in Ecology & Evolution 22, 593–600 (2007).

Boyd, R. & Richerson, P. Punishment allows the evolution of cooperation (or anything else) in sizable groups. Ethology and sociobiology 13, 171–195 (1992).

dos Santos, M., Rankin, D. J. & Wedekind, C. The evolution of punishment through reputation. Proceedings of the Royal Society B: Biological Sciences 278, 371–377 (2011).

Hilbe, C. & Traulsen, A. Emergence of responsible sanctions without second order free riders, antisocial punishment or spite. Scientific Reports 2, 458 (2012).

Hauert, C. Replicator dynamics of reward and reputation in public goods games. Journal of Theoretical Biology 267, 22–28 (2010).

Sasaki, T. & Unemi, T. Replicator dynamics in public goods games with reward funds. Journal of Theoretical Biology 287, 109–114 (2011).

Sasaki, T., Uchida, S. & Chen, X. Voluntary rewards mediate the evolution of pool punishment for maintaining public goods in large populations. Scientific Reports 5, 8917 (2015).

Ostrom, E. Governing the commons: the evolution of institutions for collective action (Cambridge University Press, Cambridge, 1990).

Sasaki, T. & Uchida, S. Rewards and the evolution of cooperation in public good games. Biology Letters 10, 20130903 (2014).

dos Santos, M. The evolution of anti-social rewarding and its countermeasures in public goods games. Proceedings of the Royal Society B: Biological Sciences 282, 20141994 (2015).

Hibbing, M. E., Fuqua, C., Parsek, M. R. & Peterson, S. B. Bacterial competition: surviving and thriving in the microbial jungle. Nature Reviews Microbiology 8, 15–25 (2010).

Griffin, A. S., West, S. A. & Buckling, A. Cooperation and competition in pathogenic bacteria. Nature 430, 1024–1027 (2004).

Khan, A. et al. Differential cross-utilization of heterologous siderophores by nodule bacteria of Cajanus cajan and its possible role in growth under iron-limited conditions. Applied Soil Ecology 34, 19–26 (2006).

Hughes, D. P. & d’Ettorre, P. Sociobiology of communication: an interdisciplinary perspective (Oxford University Press, Oxford, 2008).

Hohnadel, D. & Meyer, J. M. Specificity of pyoverdine-mediated iron uptake among fluorescent Pseudomonas strains. Journal of Bacteriology 170, 4865–4873 (1988).

Szolnoki, A. & Perc, M. Antisocial pool rewarding does not deter public cooperation. Proceedings of the Royal Society B: Biological Sciences 282, 20151975 (2015).

Hamilton, W. D. The genetical evolution of social behaviour. II. Journal of Theoretical Biology 7, 17–52 (1964).

Hamilton, W. Selection of selfish and altruistic behavior in some extreme models. In Eisenberg, J. F. & Dillon, W. S. (eds) Man and Beast: Comparative Social Behavior, 57–91 (Smithsonian Press, Washington, 1971).

Rousset, F. Genetic structure and selection in subdivided populations. Monographs in Population Biology 40, 264 (2004).

Queller, D. C. Genetic relatedness in viscous populations. Evolutionary Ecology 8, 70–73 (1994).

Taylor, P. D. Altruism in viscous populations — an inclusive fitness model. Evolutionary Ecology 6, 352–356 (1992).

Grafen, A. An inclusive fitness analysis of altruism on a cyclical network. Journal of Evolutionary Biology 20, 2278–2283 (2007).

Taylor, P. D., Day, T. & Wild, G. Evolution of cooperation in a finite homogeneous graph. Nature 447, 469–472 (2007).

Lehmann, L. & Rousset, F. How life history and demography promote or inhibit the evolution of helping behaviours. Philosophical Transactions of the Royal Society B: Biological Sciences 365, 2599–2617 (2010).

Van Cleve, J. & Lehmann, L. Stochastic stability and the evolution of coordination in spatially structured populations. Theoretical Population Biology 89, 75–87 (2013).

Van Cleve, J. Social evolution and genetic interactions in the short and long term. Theoretical Population Biology 103, 2–26 (2015).

Peña, J., Nöldeke, G. & Lehmann, L. Evolutionary dynamics of collective action in spatially structured populations. Journal of Theoretical Biology 382, 122–136 (2015).

Mullon, C., Keller, L. & Lehmann, L. Evolutionary stability of jointly evolving traits in subdivided populations. The American Naturalist 188, 175–195 (2016).

Nowak, M. A. & May, R. M. Evolutionary games and spatial chaos. Nature 359, 826–829 (1992).

Ohtsuki, H., Hauert, C., Lieberman, E. & Nowak, M. A. A simple rule for the evolution of cooperation on graphs. Nature 441, 502–505 (2006).

Szabó, G. & Fath, G. Evolutionary games on graphs. Physics Reports 446, 97–216 (2007).

Perc, M., Gómez-Gardeñes, J., Szolnoki, A., Floría, L. & Moreno, Y. Evolutionary dynamics of group interactions on structured populations: a review. Journal of the Royal Society Interface 10, 20120997 (2013).

Tarnita, C. E., Ohtsuki, H., Antal, T., Fu, F. & Nowak, M. A. Strategy selection in structured populations. Journal of Theoretical Biology 259, 570–581 (2009).

Allen, B., Nowak, M. A. & Dieckmann, U. Adaptive dynamics with interaction structure. The American Naturalist 181, E139–E163 (2013).

Perc, M. & Szolnoki, A. Coevolutionary games—a mini review. BioSystems 99, 109–125 (2010).

Grafen, A. The hawk-dove game played between relatives. Animal Behaviour 27, 905–907 (1979).

Wild, G. & Traulsen, A. The different limits of weak selection and the evolutionary dynamics of finite populations. Journal of Theoretical Biology 247, 382–390 (2007).

Lehmann, L. & Rousset, F. The genetical theory of social behaviour. Philosophical Transactions of the Royal Society of London B: Biological Sciences 369 (2014).

Diekmann, A. Volunteer’s dilemma. Journal of Conflict Resolution 29, 605–610 (1985).

Wright, S. Evolution in mendelian populations. Genetics 16, 97–159 (1931).

Lehmann, L., Keller, L. & Sumpter, D. J. T. The evolution of helping and harming on graphs: the return of the inclusive fitness effect. Journal of Evolutionary Biology 20, 2284–2295 (2007).

Rousset, F. & Billiard, S. A theoretical basis for measures of kin selection in subdivided populations: finite populations and localized dispersal. Journal of Evolutionary Biology 13, 814–825 (2000).

Geritz, S. A. H., Kisdi, E., Meszéna, G. & Metz, J. A. J. Evolutionarily singular strategies and the adaptive growth and branching of the evolutionary tree. Evolutionary Ecology 12, 35–57 (1998).

Peña, J., Lehmann, L. & Nöldeke, G. Gains from switching and evolutionary stability in multi-player matrix games. Journal of Theoretical Biology 346, 23–33 (2014).

Eshel, I. Evolutionary and continuous stability. Journal of Theoretical Biology 103, 99–111 (1983).

Eshel, I. On the changing concept of evolutionary population stability as a reflection of a changing point of view in the quantitative theory of evolution. Journal of Mathematical Biology 34, 485–510 (1996).

Taylor, P. D. Evolutionary stability in one-parameter models under weak selection. Theoretical Population Biology 36, 125–143 (1989).

Matessi, C. & Karlin, S. On the evolution of altruism by kin selection. Proceedings of the National Academy of Sciences 81, 1754–1758 (1984).

Kerr, B., Godfrey-Smith, P. & Feldman, M. W. What is altruism? Trends in Ecology & Evolution 19, 135–140 (2004).

Uyenoyama, M. & Feldman, M. W. Theories of kin and group selection: a population genetics approach. Theoretical Population Biology 17, 380–414 (1980).

Peña, J., Wu, B. & Traulsen, A. Ordering structured populations in multiplayer cooperation games. Journal of the Royal Society Interface 13, 20150881 (2016).

Mullon, C. & Lehmann, L. The robustness of the weak selection approximation for the evolution of altruism against strong selection. Journal of Evolutionary Biology 27, 2272–2282 (2014).

Débarre, F., Hauert, C. & Doebeli, M. Social evolution in structured populations. Nature Communications 5, 4409 (2014).

Hauert, C. & Doebeli, M. Spatial structure often inhibits the evolution of cooperation in the snowdrift game. Nature 428, 643–646 (2004).

Peña, J., Wu, B., Arranz, J. & Traulsen, A. Evolutionary games of multiplayer cooperation on graphs. PLOS Computational Biology 12, 1–15 (2016).

Champagnat, N. A microscopic interpretation for adaptive dynamics trait substitution sequence models. Stochastic Processes and their Applications 116, 1127–1160 (2006).

Metz, J., Nisbet, R. & Geritz, S. How should we define ‘fitness’ for general ecological scenarios? Trends in Ecology & Evolution 7, 198–202 (1992).

Santos, F. C., Santos, M. D. & Pacheco, J. M. Social diversity promotes the emergence of cooperation in public goods games. Nature 454, 213–216 (2008).

Peña, J. & Rochat, Y. Bipartite graphs as models of population structures in evolutionary multiplayer games. PLOS ONE 7, 1–13 (2012).

Szabó, G. & Hauert, C. Phase transitions and volunteering in spatial public goods games. Physical Review Letters 89, 118101 (2002).

Roze, D. & Rousset, F. Multilocus models in the infinite island model of population structure. Theoretical Population Biology 73, 529–542 (2008).

Ohtsuki, H. Evolutionary games in Wright’s island model: kin selection meets evolutionary game theory. Evolution 64, 3344–3353 (2010).

Wu, B., Traulsen, A. & Gokhale, C. S. Dynamic properties of evolutionary multi-player games in finite populations. Games 4, 182–199 (2013).

Ohtsuki, H. Evolutionary dynamics of n-player games played by relatives. Philosophical Transactions of the Royal Society B: Biological Sciences 369 (2014).

Gardner, A. & West, S. Demography, altruism, and the benefits of budding. Journal of Evolutionary Biology 19, 1707–1716 (2006).

Christiansen, F. B. On conditions for evolutionary stability for a continuously varying character. The American Naturalist 138, 37–50 (1991).

Doebeli, M., Hauert, C. & Killingback, T. The evolutionary origin of cooperators and defectors. Science 306, 859–862 (2004).

Day, T. Population structure inhibits evolutionary diversification under competition for resources. Genetica 112, 71–86 (2001).

Metz, J. A. J. & Gyllenberg, M. How should we define fitness in structured metapopulation models? including an application to the calculation of evolutionarily stable dispersal strategies. Proceedings of the Royal Society of London B: Biological Sciences 268, 499–508 (2001).

Ajar, É. Analysis of disruptive selection in subdivided populations. BMC Evolutionary Biology 3, 22 (2003).

Wakano, J. Y. & Lehmann, L. Evolutionary branching in deme-structured populations. Journal of Theoretical Biology 351, 83–95 (2014).

Dieckmann, U. & Metz, J. A. Surprising evolutionary predictions from enhanced ecological realism. Theoretical Population Biology 69, 263–281 (2006).

Acknowledgements

We thank Pat Barclay, Laurent Lehmann, and Stu West for comments, and the Swiss National Science Foundation for funding (Grant P2LAP3–158669 to M.D.S.).

Author information

Contributions

Both authors contributed equally to this work. M.D.S. and J.P. conceived and designed the study, performed the analysis, and wrote the manuscript. Both authors gave final approval for submission.

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

dos Santos, M., Peña, J. Antisocial rewarding in structured populations. Sci Rep 7, 6212 (2017). https://doi.org/10.1038/s41598-017-06063-9

DOI: https://doi.org/10.1038/s41598-017-06063-9
