Abstract

We study a simple model of social-learning agents in a restless multiarmed bandit (rMAB). The bandit has one good arm, which turns into a bad one with a certain probability. To find the good arm, each agent stochastically selects one of two methods: random search (individual learning) or copying information from other agents (social learning). The fitness of an agent is the probability that the agent knows the good arm in the steady state of the agent system. In this model, we explicitly construct the unique Nash equilibrium state and show that the corresponding strategy for each agent is an evolutionarily stable strategy (ESS) in the sense of Thomas. We show that the fitness of an agent playing the ESS exceeds that of an asocial learner when the success probability of social learning is greater than a threshold determined by the success probability of individual learning, the probability that the state of the rMAB changes, and the number of agents. The ESS Nash equilibrium thus resolves Rogers’ paradox.


Introduction

One of the differences between human beings and other animals is that humans transfer their predecessors’ experience and wisdom in the form of knowledge1. Social learning (learning from the experience of others) is advantageous compared with individual learning2,3,4. Without social learning, everybody would have to learn everything for themselves2. In other words, individual learning costs more than social learning does2,3,4. Rogers’ finding that social learning is not necessarily more advantageous than individual learning is therefore counterintuitive5. This is now called Rogers’ paradox.

Rogers’ conclusion seems very strange in light of our experience4, and several attempts have been made to resolve Rogers’ paradox in social learning. Boyd and Richerson2 pointed out that Rogers’ paradox is not a paradox when the only benefit of social learning is to avoid learning costs. Further, after analysing two models in which social learning reduces individual-learning costs and improves the information obtained through individual learning, they concluded that social learning can be adaptive. Enquist et al.3 advocated a learning form called critical social learning, that is, social learning supplemented by individual learning. Using rate equations, they succeeded in resolving the paradox. Rendell et al.4 studied the relative merits of several learning strategies using a spatially explicit stochastic model.

The concept of adaptive information filtering3,6 has been proposed as the key to the effective working of social learning. It means that each member effectively learns the good-quality information provided by other members. For example, in the well-known tournament organised by Rendell et al.6, the strategy discountmachine, which performed the most effective social learning, outperformed the other strategies that combined individual learning and social learning.

In this study, we propose a stochastic model that resolves Rogers’ paradox within the framework of the restless multiarmed bandit (rMAB) used in that tournament. Our objective is to analyse equilibrium social learning in an rMAB. An rMAB is analogous to a “one-armed bandit” slot machine but has multiple “arms”, each with a distinct payoff. We call an arm with a high payoff a good arm. The term “restless” means that the payoffs change randomly. Agents maximise their payoffs by exploiting an arm, by searching for a good arm at random (individual learning), or by copying an arm exploited by other agents (social learning). Because the rMAB is simple in structure yet general, we believe that it is an appropriate framework for studying Rogers’ paradox.

As a model of social-learning collectives, Bolton and Harris studied an agent system in a multiarmed bandit7. They assumed that each agent could observe all the information obtained by the other agents, and they derived a socially optimal experimentation (learning) strategy. In the present study, we consider agents with bounded rationality, who can access only the results of their own choices. In addition, we assume that the environment (i.e., the rMAB) changes randomly. We obtain both the socially optimal and the equilibrium learning strategies.

Model

We make the model as simple as possible while building in the property of adaptive information filtering. A mathematical overview of the model is given in the Methods section.

The rMAB has only one good arm and infinitely many bad arms. There are N agents, labelled n = 1, …, N. In each turn, an agent (say, agent n) is chosen at random. If he/she knows a good arm, he/she exploits it and obtains payoff 1. If he/she does not know a good arm, he/she either searches for one at random (individual learning) with probability \(1-r_n\) or copies the information on a good arm held by other agents (social learning) with probability \(r_n\). In random search, the probability of successfully finding a good arm is denoted by \(q_I\). In social learning, we assume that copying succeeds with probability \(q_O\) (ref. 8) if at least one agent knows a good arm and fails if no agent knows one. Then, with probability \(q_C/N\), the good arm changes to a bad one and another good arm appears. When this happens, the agents who knew the old good arm are forced to forget it and now know only a bad arm. See Fig. 1. The present model differs from our previous model8 in that the previous model had M good arms, whereas the present model has only one.
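The update rule above can be sketched as a short Monte Carlo simulation. This is a minimal Python sketch, not the authors' code: `simulate_rmab` and its parameter names are hypothetical, and it assumes that within a turn the chosen agent acts before the arm-change step, that all agents start without knowing the good arm, and that \(\sigma_n\) is recorded at the end of each turn. Under these assumptions an individual learner's state is a two-state chain with \(\Pr(0\to1)=(q_I/N)(1-q_C/N)\) and \(\Pr(1\to0)=q_C/N\), so its time average should approach \(q_I(1-q_C/N)/(q_I(1-q_C/N)+q_C)\).

```python
import random


def simulate_rmab(r, q_c, q_i, q_o, turns, seed=0):
    """Monte Carlo estimate of the steady-state fitness w_n of each agent.

    r   : list of social-learning probabilities r_n (one entry per agent)
    q_c : the good arm changes with probability q_c / N after each turn
    q_i : success probability of individual learning (random search)
    q_o : success probability of social learning when someone knows the good arm
    Returns, for each agent, the fraction of turns (measured at the end of
    each turn) in which the agent knew the good arm: a time average of sigma_n.
    """
    rng = random.Random(seed)
    n_agents = len(r)
    sigma = [0] * n_agents          # sigma[n] = 1 if agent n knows the good arm
    n_knowers = 0                   # number of agents with sigma = 1
    totals = [0] * n_agents
    for _ in range(turns):
        n = rng.randrange(n_agents)             # one agent, chosen uniformly
        if sigma[n] == 0:                        # a knower just exploits (payoff 1)
            if rng.random() < r[n]:
                # Social learning: needs at least one other agent who knows the arm.
                if n_knowers > 0 and rng.random() < q_o:
                    sigma[n] = 1
                    n_knowers += 1
            elif rng.random() < q_i:
                # Individual learning (random search).
                sigma[n] = 1
                n_knowers += 1
        if rng.random() < q_c / n_agents:
            # The good arm turns bad; everyone who knew it now knows only a bad arm.
            sigma = [0] * n_agents
            n_knowers = 0
        for k in range(n_agents):
            totals[k] += sigma[k]
    return [t / turns for t in totals]


# Usage: ten individual learners, then ten pure social learners.
w_ind = simulate_rmab([0.0] * 10, q_c=0.1, q_i=0.2, q_o=0.5, turns=400_000)
w_soc = simulate_rmab([1.0] * 10, q_c=0.1, q_i=0.2, q_o=0.5, turns=10_000)
# w_soc is all zeros: nobody ever finds a good arm that could be copied.
```

With only pure social learners, no agent can ever find a good arm to copy, so every estimate stays at zero, consistent with \(w_S\) tending to zero as the proportion of social learners grows.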

Let \(\sigma_n\) be a random variable defined by

\[\sigma_n = \begin{cases} 1 & \text{if agent } n \text{ knows the good arm},\\ 0 & \text{otherwise}. \end{cases}\]

This is simply the payoff for agent n. For each turn t, we have a joint probability function \(P(\sigma_1,\ldots,\sigma_N|t)\), which evolves in t according to the rules described above. To exclude trivial results, we assume that \(q_C\), \(q_I\) and \(q_O\) are positive and that every \(r_n\) is less than 1 (ref. 8). Then, in the long run, there is a unique steady probability function \(P({\sigma }_{1},\cdots ,{\sigma }_{N})={\mathrm{lim}}_{t\to \infty }P({\sigma }_{1},\cdots ,{\sigma }_{N}|t)\). We now introduce the expected payoff of each agent in the steady state,

\[w_n = \sum_{\sigma_1,\ldots,\sigma_N \in \{0,1\}} \sigma_n\, P(\sigma_1,\ldots,\sigma_N).\]

This quantity depends on the parameters N, \(q_C\), \(q_I\), \(q_O\) and the \(r_n\). We regard \(w_n\) mainly as a function of the \(r_n\) and denote this function by \(w(r_n,\overline{r}_n)\), where

\[\overline{r}_n = \frac{1}{N-1}\sum_{k \ne n} r_k\]

is the mean social-learning probability of the other agents. Thus, \(w_n = w(r_n,\overline{r}_n)\) for each n = 1, …, N.

In this study, we treat \(w_n\) as the fitness of agent n.

Results and Discussion

Pure Strategies and Rogers’ Paradox

In the present study, the strategy of agent n is the social-learning probability \(r_n\). We call \(r_n = 0\) and \(r_n = 1\) pure strategies and \(0 < r_n < 1\) mixed strategies.

First, we confirm that Rogers’ paradox occurs when agents adopt pure strategies. We divide the N agents into two groups. The first group consists of \(N_I\) individual learners (\(r_k = 0\), \(k = 1,\ldots,N_I\)). The second group consists of \(N_S = N - N_I\) social learners (\(r_k = 1\), \(k = N_I+1,\ldots,N_I+N_S\)). The corresponding fitness values per agent, which we denote by \(w_I\) and \(w_S\), respectively, are given by

where a is defined in equation (4).

When \(q_O \le q_I\), we have \(w_I > w_S\). Therefore, in this case, individual learning is always favoured over social learning.

Now, we consider the case \(q_O > q_I\). Figure 2 plots \(w_I\) and \(w_S\) for sufficiently large N.

When the proportion of social learners is small, social learning is effective. However, as the proportion of social learners increases, \(w_S\) decreases monotonically and tends to zero. Thus, Rogers’ paradox occurs.

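It is important to note that \(w_I < w_S\) holds when N is sufficiently large and the fraction \(N_I/N\) remains finite (nonzero). This is because, as \(N \to \infty\), we have \(w_I \to q_I/(q_C+q_I)\) and \(w_S \to q_O/(q_C+q_O)\), and because \(q/(q_C+q)\) is strictly increasing in q, so \(q_O > q_I\) gives social learners the larger limit.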
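A heuristic two-state calculation, offered as a sketch rather than as the paper’s derivation, makes these limits plausible. Assume that an agent who does not know the good arm learns it with probability q each time he/she is selected, with \(q = q_I\) for an individual learner and \(q \approx q_O\) for a social learner (for large N with \(N_I/N\) finite, some other agent almost always knows the arm), and that the arm changes with probability \(q_C/N\) per turn:

```latex
\begin{aligned}
\Pr(\sigma_n : 0 \to 1) &\approx \frac{q}{N}, \qquad
\Pr(\sigma_n : 1 \to 0) = \frac{q_C}{N}, \\
\Pr(\sigma_n = 1) &\approx \frac{q/N}{q/N + q_C/N} = \frac{q}{q_C + q}, \\
\frac{d}{dq}\,\frac{q}{q_C + q} &= \frac{q_C}{(q_C + q)^2} > 0 .
\end{aligned}
```

Because the stationary probability increases strictly with q, \(q_O > q_I\) places the limit of \(w_S\) above that of \(w_I\).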

Nash Equilibrium and Rogers’ Paradox

Let us assume that each agent adopts a mixed strategy; that is, for each n = 1, …, N, the social-learning probability \(r_n\) is an arbitrary number between 0 and 1. Agent n thus performs social learning with probability \(r_n\) and individual learning with probability \(1-r_n\); the learning mode he/she uses is chosen stochastically and automatically.

We consider the N-tuple \((r_1,\ldots,r_N)\) of social-learning probabilities. This is a point in the N-dimensional unit cube \(J = [0,1]\times\cdots\times[0,1]\), which we regard as the space of N-tuples of mixed strategies. Each point in J determines a joint probability function \(P(\sigma_1,\ldots,\sigma_N)\), from which the N-tuple \((w_1,\ldots,w_N)\) of the agents’ fitness functions is calculated.

Now, imagine that agent n maximises \(w_n\) by adjusting \(r_n\) while the \(r_k\) (k ≠ n) are held fixed. It is not difficult to show that the maximum point is unique (Fig. 3) and is expressed as

\[r_n = f(\overline{r}_n),\]

where

We note that \(f(r) \to 0\) as \(q_O \to q_I + 0\). Next, we introduce the function

\[F(r_1,\ldots,r_N) = \left(f(\overline{r}_1),\ldots,f(\overline{r}_N)\right).\]

This is a continuous function mapping the N-dimensional unit cube J into itself. As shown in the Methods section, the fixed point of F is unique and lies on the diagonal of J,

\[(r_1,\ldots,r_N) = (r_{\rm Nash},\ldots,r_{\rm Nash}),\]

where \(r_{\rm Nash}\) is a function of \(q_C\), \(q_I\), \(q_O\) and N. Its value is given explicitly by

where

The quantity \(r_{\rm Nash}\) has the following properties (see the Methods section): (i) \(0 \le r_{\rm Nash} < 1\); (ii) \(r_{\rm Nash} \to 0\) as \((q_O-q_I)N-(a+q_O) \to 0\); and (iii) the fixed point \((r_{\rm Nash},\ldots,r_{\rm Nash})\) is the unique Nash equilibrium point in J. Figure 4 gives a schematic explanation of the Nash equilibrium point.
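The best response f and the fixed point on the diagonal can be approximated numerically for a small system. In this Python sketch (all names hypothetical; N = 3 keeps the \(2^N\)-state chain tiny), \(w(r,\overline{r})\) is computed exactly from the stationary distribution with every other agent using \(\overline{r}\), under the assumed turn order “agent acts, then the arm may change”. The best response is found by grid search over \(r\in[0,1]\) instead of the closed form for f, and \(r_{\rm Nash}\) is located by bisection on \(h(r) = r - f(r)\), relying on the monotonicity of h stated in the Methods section. Grid resolution limits the accuracy to roughly 0.01.

```python
import numpy as np


def w_agent0(r0, r_bar, n_agents, q_c, q_i, q_o):
    """Exact steady-state Pr(sigma_0 = 1) when agent 0 uses r0 and all others use r_bar.

    Assumed turn order: a random agent acts, then the arm changes with
    probability q_c / N. States are integers whose bit n is sigma_n.
    """
    r = [r0] + [r_bar] * (n_agents - 1)
    n_states = 2 ** n_agents
    p_change = q_c / n_agents
    T = np.zeros((n_states, n_states))      # T[new, old], columns sum to 1
    for s in range(n_states):
        for n in range(n_agents):
            if (s >> n) & 1:
                outcomes = [(s, 1.0)]       # knower exploits; no change
            else:
                # Individual search w.p. 1 - r_n, copying w.p. r_n (needs a knower).
                p_learn = (1.0 - r[n]) * q_i + r[n] * q_o * (s != 0)
                outcomes = [(s | (1 << n), p_learn), (s, 1.0 - p_learn)]
            for s_mid, p in outcomes:
                T[0, s] += p * p_change / n_agents          # arm changes: all forget
                T[s_mid, s] += p * (1.0 - p_change) / n_agents
    eigvals, eigvecs = np.linalg.eig(T)
    p = np.real(eigvecs[:, np.argmax(np.abs(eigvals))])   # eigenvalue 1
    p = p / p.sum()
    return p[(np.arange(n_states) & 1) == 1].sum()


def best_response(r_bar, n_agents, q_c, q_i, q_o, grid=101):
    """Grid-search stand-in for f(r_bar): the r in [0, 1] maximising w(r, r_bar)."""
    rs = np.linspace(0.0, 1.0, grid)
    ws = [w_agent0(r, r_bar, n_agents, q_c, q_i, q_o) for r in rs]
    return float(rs[int(np.argmax(ws))])    # first maximiser on ties


def nash_symmetric(n_agents, q_c, q_i, q_o, tol=1e-3):
    """Bisection for the zero of h(r) = r - f(r) on [0, 1]."""
    def h(r):
        return r - best_response(r, n_agents, q_c, q_i, q_o)

    if h(0.0) >= 0.0:               # f(0) = 0, so r_Nash = 0
        return 0.0
    lo, hi = 0.0, 1.0               # h(lo) < 0 <= h(hi), since f(1) <= 1
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if h(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


r_low = nash_symmetric(3, q_c=0.1, q_i=0.3, q_o=0.2)    # q_O < q_I: returns 0.0
r_high = nash_symmetric(3, q_c=0.1, q_i=0.05, q_o=0.9)  # q_O > q_I
```

For \(q_O < q_I\) the search returns \(r_{\rm Nash} = 0\), matching the unique fixed point (0, …, 0) of that case; the second call only illustrates the \(q_O > q_I\) regime, and its value depends on the grid.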

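Moreover, the corresponding mixed strategy is an evolutionarily stable strategy (ESS)9 because the fixed point is a Nash equilibrium point in the strong sense,

\[w(r, r_{\rm Nash}) < w(r_{\rm Nash}, r_{\rm Nash}) \quad \text{for all } r \in [0,1],\ r \ne r_{\rm Nash}.\]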

Further, it is an ESS in the sense of Thomas10, because the inequality,

is true.

Now, we compare two fitness functions, \(w_I\) and \(w_N = w(r_{\rm Nash}, r_{\rm Nash})\). As shown in the Methods section, the inequality \(w_N > w_I\) holds if and only if \((q_O-q_I)N > a+q_O\). See also Fig. 5. A Nash equilibrium point is usually regarded as stable in the sense that no agent has an incentive to change his/her strategy. Therefore, this inequality implies that the mixed strategy \(r_n = r_{\rm Nash}\) (n = 1, …, N) can outperform the pure strategy of individual learning. This resolves Rogers’ paradox. Note that the Nash equilibrium is realised as a mixed strategy of social learning and individual learning.

Pareto Optimality

Pareto optimality is an important concept alongside Nash equilibrium, so we also consider it in our model. We adopt a natural definition of the Pareto-optimal point in J as the maximum point of the function \({\sum }_{k=1}^{N}{w}_{k}\). We can show that the maximum point is unique and lies on the diagonal of J,

\[(r_1,\ldots,r_N) = (r_{\rm Pareto},\ldots,r_{\rm Pareto}),\]

where \(r_{\rm Pareto}\) is a function of \(q_C\), \(q_I\), \(q_O\) and N. Its value is given explicitly by

where

Further, \(r_{\rm Pareto}\) has the following properties: (i) \(0 \le r_{\rm Pareto} < 1\); (ii) \(r_{\rm Pareto} \to 0\) as \((q_O-q_I)N-(a+q_O) \to 0\); (iii) \(r_{\rm Pareto} < r_{\rm Nash}\) if and only if \((q_O-q_I)N > a+q_O\) (see the Methods section); and (iv) the point \((r_{\rm Pareto},\ldots,r_{\rm Pareto})\) is the Pareto-optimal point in J. Here, Pareto optimality means that the following statement is true: “if an agent succeeds in increasing his/her fitness by changing his/her social-learning probability from \(r_{\rm Pareto}\) to \(r_{\rm Pareto}+\delta r\) with δr ≠ 0, then the fitness of another agent necessarily decreases.” Such a δr exists when \(r_{\rm Pareto} > 0\) and does not exist when \(r_{\rm Pareto} = 0\); the statement holds in both cases.
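The Pareto-optimal point can be approximated in the same way for a small system. This Python sketch (hypothetical names, small N, the same assumed turn order as the rule description) restricts the search to the diagonal of J, where the text states the maximiser lies. On the diagonal, maximising \(\sum_k w_k = N\,w(r,r)\) is equivalent to maximising \(w(r,r)\), which is done here by grid search rather than via the closed form for \(r_{\rm Pareto}\).

```python
import numpy as np


def w_symmetric(r, n_agents, q_c, q_i, q_o):
    """Exact steady-state w(r, r): every agent uses the same social-learning probability r."""
    n_states = 2 ** n_agents
    p_change = q_c / n_agents
    T = np.zeros((n_states, n_states))      # T[new, old], columns sum to 1
    for s in range(n_states):
        for n in range(n_agents):
            if (s >> n) & 1:
                outcomes = [(s, 1.0)]       # knower exploits; no change
            else:
                p_learn = (1.0 - r) * q_i + r * q_o * (s != 0)
                outcomes = [(s | (1 << n), p_learn), (s, 1.0 - p_learn)]
            for s_mid, p in outcomes:
                T[0, s] += p * p_change / n_agents          # arm changes: all forget
                T[s_mid, s] += p * (1.0 - p_change) / n_agents
    eigvals, eigvecs = np.linalg.eig(T)
    p = np.real(eigvecs[:, np.argmax(np.abs(eigvals))])   # eigenvalue 1
    p = p / p.sum()
    # Pr(sigma_0 = 1); by symmetry every agent has the same fitness.
    return p[(np.arange(n_states) & 1) == 1].sum()


def pareto_symmetric(n_agents, q_c, q_i, q_o, grid=201):
    """Grid maximiser of the total fitness N * w(r, r) along the diagonal of J."""
    rs = np.linspace(0.0, 1.0, grid)
    ws = np.array([w_symmetric(r, n_agents, q_c, q_i, q_o) for r in rs])
    k = int(np.argmax(ws))
    return float(rs[k]), float(ws[k])


r_p, w_p = pareto_symmetric(3, q_c=0.1, q_i=0.3, q_o=0.2)   # q_O < q_I
```

When \(q_O < q_I\), the maximiser is \(r = 0\), and the maximum equals the all-individual-learner value, \(0.29/0.39\) for these parameters under the sketch’s turn-order assumption.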

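We define the Pareto fitness function \(w_P = w(r_{\rm Pareto}, r_{\rm Pareto})\). Then, the inequality \(w_P > w_N\) holds if and only if \((q_O-q_I)N > a+q_O\) (see Fig. 5 and the Methods section). The inequality \(w_P \ge w_N\) itself is immediate from the definition of the Pareto-optimal point as the maximiser of the total fitness. Thus, we have established the following relation among the fitness functions:

\[w_P > w_N > w_I \quad \text{if } (q_O-q_I)N > a+q_O.\]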

Concluding Remarks

We have proposed a stochastic model of N agents in an rMAB. We presented the unique Nash equilibrium point in the mixed-strategy space J and showed that it is an ESS in the sense of Thomas10. When \((q_O-q_I)N > a+q_O\), the corresponding fitness \(w_N\) per agent is greater than the fitness \(w_I\) of an individual learner. This resolves Rogers’ paradox.

In this study, we concentrated on steady states. This is valid if the system relaxes quickly to the steady state (see the Methods section). However, if the \(r_n\) change faster than the system relaxes to the steady state, non-trivial dynamics arise. Our system may possess interesting dynamics with a stable Nash equilibrium point.

As a future research subject, we propose experimental studies of human collectives in an rMAB. There have been several attempts in this direction11,12,13, which targeted the improvement of performance through social learning, that is, the collective intelligence effect. Since we have shown that there is an ESS Nash equilibrium in the system of social-learning agents in an rMAB, it would be interesting to examine experimentally whether this prediction is realised. As a first step, the interactive rMAB game might be a suitable environment, in which one human competes with many mixed-strategy agents that all use \(r = r_{\rm Nash}\). We can then check whether the social-learning rate of people equals \(r_{\rm Nash}\). Second, when many people compete, we can examine whether the Nash equilibrium emerges as the model parameter \(q_I\) changes. In doing so, we might also detect some phase-transition-like behaviour8.

As for theoretical research, our analysis is still far from mature. In the present work, we studied the rMAB game in the steady state of the system. However, when the relaxation time of the system discussed in the Methods section is not sufficiently short, the steady-state assumption is unrealistic for laboratory experiments. Thus, we need to develop a t-dependent theory. Although this may be a difficult problem, we believe that this research direction is fruitful.

Methods

Mathematical Overview of the Model

For simplicity, we use the following notation,

\[\vec{\sigma} = (\sigma_1,\ldots,\sigma_N).\]

Our model evolves in t through an agent’s action and the subsequent change of state of the rMAB. This is a Markov process14. The probability of the transition \(\vec{\sigma}\to\vec{\sigma}'\) is described by the transition probability matrix14,

where

The joint probability function \(P(\vec{\sigma}|t)=P(\sigma_1,\ldots,\sigma_N|t)\) satisfies the Chapman-Kolmogorov equation14,

Our assumption is that \(q_C, q_I, q_O > 0\) and \(r_n < 1\) (n = 1, …, N). In this case, the matrix T can be shown to be irreducible and primitive15. The Perron-Frobenius theory15 then ensures that (i) \(\lambda_1 = 1\) is an eigenvalue of T of multiplicity 1, with the steady probability function \(P(\vec{\sigma})\) as a corresponding eigenvector, and (ii) the absolute values \(|\lambda_i|\) (i ≥ 2) of the other eigenvalues of T satisfy \(\rho = \max_{i\ge2}|\lambda_i| < 1\). When the \(r_n\) are fixed, the Markov process is time-homogeneous14; that is, the matrix T does not depend on t. Therefore, for any initial probability function \(P(\vec{\sigma}|0)\), there is a unique limit \(P(\vec{\sigma})={\mathrm{lim}}_{t \rightarrow \infty }P(\vec{\sigma}|t)\). It is then not difficult to derive equation (3) using \(P(\vec{\sigma})\).

The convergence \(P(\vec{\sigma}|t) \rightarrow P(\vec{\sigma})\) is exponential, \(|P(\vec{\sigma}|t)-P(\vec{\sigma})|\sim {\rho }^{t}\). Writing \(\rho^t = e^{-t/\tau}\), the relaxation time is \(\tau = 1/\log\rho^{-1} = -1/\log\rho\), which is positive because ρ < 1. Thus, when no agent changes his/her social-learning probability over a period much longer than τ, the fitness per agent per turn is almost exactly equal to the value of the function w in equation (3).
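For small N, the chain can also be handled exactly: T has \(2^N \times 2^N\) entries, its Perron eigenvector gives \(P(\vec{\sigma})\) and hence \(w_n\), and the second-largest \(|\lambda_i|\) gives ρ and τ. The Python sketch below is not the authors’ construction: it assumes a column-stochastic layout (entry \([\vec{\sigma}', \vec{\sigma}]\) holds \(\Pr(\vec{\sigma}\to\vec{\sigma}')\)), encodes \(\vec{\sigma}\) as the bits of an integer, and uses the turn order “chosen agent acts, then the arm may change”; the function names are hypothetical, and the paper’s matrix may be laid out differently.

```python
import numpy as np


def transition_matrix(r, q_c, q_i, q_o):
    """Column-stochastic T with T[s_new, s_old] = Pr(s_old -> s_new) in one turn.

    State s is an integer whose bit n equals sigma_n. Assumed turn order:
    a uniformly chosen agent acts, then the good arm changes with prob. q_c / N.
    """
    n_agents = len(r)
    n_states = 2 ** n_agents
    p_change = q_c / n_agents
    T = np.zeros((n_states, n_states))
    for s in range(n_states):
        for n in range(n_agents):                     # agent n chosen w.p. 1/N
            if (s >> n) & 1:
                outcomes = [(s, 1.0)]                 # knower exploits; no change
            else:
                # Individual search w.p. 1 - r_n, copying w.p. r_n (needs a knower).
                p_learn = (1.0 - r[n]) * q_i + r[n] * q_o * (s != 0)
                outcomes = [(s | (1 << n), p_learn), (s, 1.0 - p_learn)]
            for s_mid, p in outcomes:
                T[0, s] += p * p_change / n_agents            # arm changes: all forget
                T[s_mid, s] += p * (1.0 - p_change) / n_agents
    return T


def steady_state(T):
    """Perron eigenvector of T normalised to sum 1, and rho = max_{i>=2} |lambda_i|."""
    eigvals, eigvecs = np.linalg.eig(T)
    order = np.argsort(-np.abs(eigvals))              # order[0] is lambda_1 = 1
    p = np.real(eigvecs[:, order[0]])
    p = p / p.sum()
    rho = float(np.abs(eigvals[order[1]]))
    return p, rho


def fitness(p, n_agents):
    """w_n = sum over states of sigma_n * P(state)."""
    states = np.arange(len(p))
    return np.array([p[((states >> n) & 1) == 1].sum() for n in range(n_agents)])


r = [0.0, 0.0, 0.0, 0.0]                              # four individual learners
T = transition_matrix(r, q_c=0.1, q_i=0.2, q_o=0.5)
p, rho = steady_state(T)
w = fitness(p, len(r))      # each entry ≈ 0.6610 (= 0.195 / 0.295)
tau = -1.0 / np.log(rho)    # relaxation time, from |P(t) - P| ~ rho**t
```

For four individual learners, the exact result agrees with the two-state value \(q_I(1-q_C/N)/(q_I(1-q_C/N)+q_C) = 0.195/0.295\). The \(2^N\) state space restricts this direct approach to small N.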

Existence of a Fixed Point of F

Since the N-dimensional cube \(J = [0,1]\times\cdots\times[0,1]\) is a compact convex set and F is a continuous function mapping J into itself, Brouwer’s fixed-point theorem16 guarantees that F has a fixed point in J.

A Fixed Point of F is a Nash Equilibrium Point, and Vice Versa

Let \((r_1,\ldots,r_N)\) be a fixed point of F, that is, \(r_n = f(\overline{r}_n)\) for each n = 1, …, N. Since \(r = f(\overline{r}_n)\) is the unique maximum point of \(w(r,\overline{r}_n)\), we have \(w(r_n+\delta r,\overline{r}_n) < w(r_n,\overline{r}_n)\) for each n = 1, …, N when δr ≠ 0. Thus, \((r_1,\ldots,r_N)\) is a Nash equilibrium point. Conversely, let \((r_1,\ldots,r_N)\) be a Nash equilibrium point, that is, \(r = r_n\) is a maximum point of \(w(r,\overline{r}_n)\) for each n = 1, …, N. Since \(r = f(\overline{r}_n)\) is the unique maximum point of \(w(r,\overline{r}_n)\) (see Fig. 3), we have \(r_n = f(\overline{r}_n)\). Thus, \((r_1,\ldots,r_N)\) is a fixed point of F.

Uniqueness of the Fixed Point of F

When \(q_O \le q_I\), the unique fixed point is (0, …, 0).

Next, we consider the case \(q_O > q_I\).

Let \((r_1,\ldots,r_N)\) be a fixed point of F. Since \(\overline{r}_n = (s-r_n)/(N-1)\) with \(s={\sum }_{k=1}^{N}{r}_{k}\), all the \(r_n\) satisfy the common relation,

\[r_n = g(r_n), \qquad g(r) = f\!\left(\frac{s-r}{N-1}\right).\]

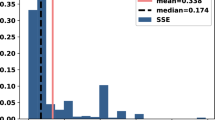

Figure 6(b) is a plot of the function g(r).

This is a strictly increasing concave function for \(s-(N-1)r^{*} \le r \le s-(N-1)r_{*}\), where

It is not difficult to show that \(r_{*} < r^{*} < 1\). The maximum value of the derivative \(g'(r)\) is 1/2, attained at \(r = s-(N-1)r^{*}\). Since \(g'(r) \le 1/2 < 1\), the function \(\tilde{g}(r)=r-g(r)\) is strictly increasing, and \(\tilde{g}(0)\le 0\le \tilde{g}(1)\). Therefore, \(\tilde{g}(r)\) has exactly one zero, \(r_0\), in the interval \(0 \le r \le 1\), and we conclude that \(r_1 = \cdots = r_N = r_0\).

Now we have \(s = Nr_0\), so that \(\overline{r}_n = (Nr_0 - r_0)/(N-1) = r_0\) for every n. Therefore, \(r_0\) is a solution of the equation,

\[r = f(r).\]

Figure 6(a) plots the function f(r), which is decreasing. Thus, \(h(r) = r - f(r)\) is a strictly increasing function with \(h(0) \le 0 \le h(1)\). Therefore, h(r) has exactly one zero, \(r_{\rm Nash}\), with \(0 \le r_{\rm Nash} < 1\), and \(r_0 = r_{\rm Nash}\). This proves the uniqueness of the Nash equilibrium point.

Inequality w P > w N > w I

It suffices to consider the case \((q_O-q_I)N > a+q_O\). Then, \(r_{\rm Nash}\) satisfies \(r=\overline{f}(r)\). We introduce the following function,

It is not difficult to check that \(1/(1-r_{\rm Nash})\) is the larger root of k(u). We note that k(1) < 0.

Next, we define \(r_I\) as

This quantity has the following properties: (i) \(0 < r_I < 1\), (ii) \(w(r_I, r_I) = w_I\), and (iii) \(k(1/(1-r_I)) > 0\). On the other hand, it is elementary to show that \(k(1/(1-r_{\rm Pareto})) < 0\). Thus, we conclude that \(r_{\rm Pareto} < r_{\rm Nash} < r_I\).

Now, \(r_{\rm Pareto}\) is the maximum point of w(r, r). Therefore, we have the inequality \(w(r_{\rm Pareto}, r_{\rm Pareto}) > w(r_{\rm Nash}, r_{\rm Nash}) > w(r_I, r_I)\), that is, \(w_P > w_N > w_I\).

References

1. Boyd, R. & Richerson, P. J. Culture and the Evolutionary Process (University of Chicago Press, Chicago, 1985).

2. Boyd, R. & Richerson, P. J. Why does culture increase human adaptability? Ethol. Sociobiol. 16, 125–143, doi:10.1016/0162-3095(94)00073-G (1995).

3. Enquist, M., Eriksson, K. & Ghirlanda, S. Critical social learning: A solution to Rogers’s paradox of nonadaptive culture. Am. Anthropol. 109, 727–734, doi:10.1525/aa.2007.109.4.727 (2007).

4. Rendell, L., Fogarty, L. & Laland, K. N. Rogers’ paradox recast and resolved: Population structure and the evolution of social learning strategies. Evolution 64, 534–548, doi:10.1111/j.1558-5646.2009.00817.x (2010).

5. Rogers, A. R. Does biology constrain culture? Am. Anthropol. 90, 819–831, doi:10.1525/aa.1988.90.4.02a00030 (1988).

6. Rendell, L. et al. Why copy others? Insights from the social learning strategies tournament. Science 328, 208–213, doi:10.1126/science.1184719 (2010).

7. Bolton, P. & Harris, C. Strategic experimentation. Econometrica 67, 349–374, doi:10.1111/1468-0262.00022 (1999).

8. Mori, S., Nakayama, K. & Hisakado, M. Phase transition of social learning collectives and the echo chamber. Phys. Rev. E 94, 052301, doi:10.1103/PhysRevE.94.052301 (2016).

9. Maynard Smith, J. Evolution and the Theory of Games (Cambridge University Press, Cambridge, 1982).

10. Thomas, B. Evolutionary stability: states and strategies. Theor. Popul. Biol. 26, 49–67 (1984).

11. Toyokawa, W., Kim, H. & Kameda, T. Human collective intelligence under dual exploration-exploitation dilemmas. PLoS ONE 9, e95789, doi:10.1371/journal.pone.0095789 (2014).

12. Kameda, T. & Nakanishi, D. Cost-benefit analysis of social/cultural learning in a nonstationary uncertain environment: An evolutionary simulation and an experiment with human subjects. Evol. Hum. Behav. 23, 373–393, doi:10.1016/S1090-5138(02)00101-0 (2002).

13. Yoshida, S., Hisakado, M. & Mori, S. Interactive restless multi-armed bandit game and swarm intelligence effect. New Generat. Comput. 34, 291–306, doi:10.1007/s00354-016-0306-y (2016).

14. Stroock, D. W. An Introduction to Markov Processes (Springer-Verlag, Heidelberg, 2014).

15. Meyer, C. D. Matrix Analysis and Applied Linear Algebra (SIAM, Philadelphia, 2000).

16. Granas, A. & Dugundji, J. Fixed Point Theory (Springer-Verlag, New York, 2003).

Acknowledgements

We would like to thank Editage (www.editage.jp) for English language editing. This work was supported by JSPS KAKENHI Grant Number 17K00347.

Author information


Contributions

S.M. and M.H. conceived the model. K.N. performed a theoretical analysis. All authors contributed to analysing and interpreting the results and to writing the manuscript.


Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nakayama, K., Hisakado, M. & Mori, S. Nash Equilibrium of Social-Learning Agents in a Restless Multiarmed Bandit Game. Sci Rep 7, 1937 (2017). https://doi.org/10.1038/s41598-017-01750-z

