Abstract

Open-ended evolution (OEE) is relevant to a variety of biological, artificial and technological systems, but has been challenging to reproduce in silico. Most theoretical efforts focus on key aspects of open-ended evolution as it appears in biology. We recast the problem as a more general one in dynamical systems theory, providing simple criteria for open-ended evolution based on two hallmark features: unbounded evolution and innovation. We define unbounded evolution as patterns that are non-repeating within the expected Poincare recurrence time of an isolated system, and innovation as trajectories not observed in isolated systems. As a case study, we implement novel variants of cellular automata (CA) where the update rules are allowed to vary with time in three alternative ways. Each is capable of generating conditions for open-ended evolution, but vary in their ability to do so. We find that state-dependent dynamics, regarded as a hallmark of life, statistically out-performs other candidate mechanisms, and is the only mechanism to produce open-ended evolution in a scalable manner, essential to the notion of ongoing evolution. This analysis suggests a new framework for unifying mechanisms for generating OEE with features distinctive to life and its artifacts, with broad applicability to biological and artificial systems.

Similar content being viewed by others

Introduction

Many real-world biological and technological systems display rich dynamics, often leading to increasing complexity over time that is limited only by resource availability. A prominent example is the evolution of biological complexity: the history of life on Earth has displayed a trend of continual evolutionary adaptation and innovation, giving rise to an apparent open-ended increase in the complexity of the biosphere over its >3.5 billion year history1. Other complex systems, from the growth of cities2, to the evolution of language3, culture4, 5 and the Internet6 appear to exhibit similar trends of innovation and open-ended dynamics. Producing computational models that generate sustained patterns of innovation over time is therefore an important goal in modeling complex systems as a necessary step on the path to elucidating the fundamental mechanisms driving open-ended dynamics in both natural and artificial systems. If successful, such models hold promise for new insights in diverse fields ranging from biological evolution to artificial life and artificial intelligence.

Despite the significance of realizing open-ended evolution in theoretical models, progress in this direction has been hindered by the lack of a universally accepted definition for open-ended evolution (OEE). Although relevant to many fields, OEE is most often discussed in the context of artificial life, where the problem is so fundamental that it has been dubbed a “millennium prize problem”7. Many working definitions exist, which can be classified into four hallmark categories as outlined by Banzhaf et al.8: (1) on-going innovation and generation of novelty9, 10; (2) unbounded evolution1, 11, 12; (3) on-going production of complexity13,14,15; (4) a defining feature of life16. Each of these faces its own challenges, as each is cast in terms of equally ambiguous concepts. For example, the concepts of “innovation” or “novelty”, “complexity” and “life” are all notoriously difficult to formalize in their own right. It is also not apparent whether “unbounded evolution” is physically possible since real systems are limited in their dynamics by finite resources, finite time, and finite space. A further challenge is identifying whether the diverse concepts of OEE are driving at qualitatively different phenomena, or whether they might be unified within a common conceptual framework. For example, it has been suggested that increasing complexity might not itself be a hallmark of OEE, but instead a consequence of it9, 16. Likewise, processes may appear unbounded, even within a finite space, if they can continually produce novelty within observable dynamical timescales17.

Given these limitations, it was unclear if OEE is a property unique to life, is inclusive of its artifacts (such as technology), or if it is an even broader phenomenon that could be a universal property of certain classes of dynamical systems. Many approaches aimed at addressing the hallmarks of OEE have been inspired by biology17, primarily because biological evolution is the best known example of a real-world system with the potential to be truly open-ended1. However, as stated, other examples of potentially open-ended complex systems do exist, such as trends associated with cultural4, 5 and technological2, 6 growth, and other creative processes. Therefore, herein we set out to develop a more general framework to seek links between the four aforementioned hallmarks of OEE within dynamical systems, while remaining agnostic about their precise implementation in biology. Our motivation is to discover universal mechanisms that underlie OEE as it might occur both within and outside of biological evolution.

In dynamical systems theory there exists a natural bound on the complexity that can be generated by a finite deterministic process, which is given by the Poincaré recurrence time. Roughly, the Poincaré time is the maximal time after which any finite system returns to its initial state and its dynamical trajectory repeats. Clearly, new dynamical patterns cannot occur past the Poincaré time if the system is isolated from external perturbations. To cast the concept of unbounded evolution firmly within dynamical systems theory, we introduce a formal minimal criterion for unbounded evolution (where we stress that here we mean the broader concept of dynamical evolution, not just evolution in the biological sense) in finite dynamical systems: minimally, an unbounded system is one that does not repeat within the expected Poincaré time. A key feature is that this definition automatically excludes finite deterministic systems unless they are open to external perturbations in some way. That is, we contend that unbounded evolution (and in turn OEE which depends on it) is only possible for a subsystem interacting with an external environment. To make better contact with real-world systems, where the Poincaré time often cannot even in principle be observed, we introduce a second criterion of innovation. Systems satisfying the minimal definition of unbounded evolution must also satisfy a formal notion of innovation, where we define innovation as dynamical trajectories not observed in isolated, unperturbed systems. We identify innovation by comparison to counterfactual histories (those of isolated systems). Like unbounded evolution, innovation is extrinsically defined and requires interaction between at least two subsystems. A given subsystem can exhibit OEE if and only if it is both unbounded and innovative. As we will show, utilizing these criteria for OEE allows us to evaluate candidate mechanisms for generating OEE in simple toy model dynamical systems, ones that could carry over to more realistic complex dynamical systems.

The utility of these definitions is that they provide a simple way to quantify intuition regarding hallmarks (1) and (2) of OEE for systems of finite size, which is applicable to any comparable dynamical system. They therefore provide a means to quantitatively evaluate, and therefore directly compare, different potential mechanisms for generating OEE. We apply these definitions to test three new variants of cellular automata (CA) for their capacity to generate OEE. A key feature of the new variants introduced is their implementation of time-dependent update rules, which represents a radical departure from more traditional approaches to dynamical systems where the dynamical laws remain fixed. Each variant introduced differs in its relative openness to an external environment. Of the variants tested, our results indicate that systems which implement time-dependent rules that are a function of their state are statistically better at satisfying the two criteria for OEE than dynamical systems with externally driven time-dependence for their rules (that is, where the rule evolution is not dependent on the state of the subsystem of interest). We show that the state-dependent systems provide a mechanism for generating OEE that includes the capacity for on-going production of novelty by coupling to larger environments. This mechanism also scales with system size, meaning the amount of open-endedness that is generated does not drop off as the system size increases. We then explore the complexity of state-dependent systems in more depth, calculating general complexity measures including compressibility (based on LZW in ref. 18) and Lyapunov exponents. Given that state-dependent dynamics are often cited as a hallmark feature of life due to the role of self-reference in biological processes19,20,21,22, our results provide a new connection between hallmarks (1), (2) and (4) of OEE. Our results therefore connect several hallmarks of OEE in a new framework that allows identification of mechanisms that might operate in a diverse range of dynamical systems. The framework holds promise for providing insights into universal mechanisms for generating OEE in dynamical systems, which is applicable to both biological and artificial systems.

Theory

Traditionally dynamical systems, like their physical counterparts, are modeled with fixed dynamical laws – a legacy from the time of Newton. However, this framework may not be the appropriate one for modeling biological complexity, where the dynamical laws appear to be self-referential and evolve in time as a function of the states19,20,21,22. An explicit example is the feedback between genotype and phenotype within a cell: genes are “read-out” to produce changes to the state of expressed proteins and RNAs, and these in turn can feed back to turn individual genes on and off23. Given this connection to biology, we are motivated in this work to focus explicitly on time-dependent rules, where time-dependence is introduced by driving the rule evolution through coupling to an external environment. Since open-ended evolution has been challenging to characterize in traditional models with fixed dynamical rules, implementing time-dependent rules could open new pathways to generating complexity. In this study we therefore define open systems as those where the rule dynamically evolves as a function of time, and we assume this is driven by interaction with an environment. As we show, time-dependent rules allow novel trajectories to be realized that have not been previously characterized in cellular automata models. To quantify this novelty, we introduce a rigorous notion of OEE that relies on formalized definitions of unbounded evolution and innovation. The definitions presented rely on utilizing isolated systems evolved according to a fixed rule as a set of counterfactual systems to compare to the novel dynamics driven by time-dependent rules.

Formalizing Open-Ended Evolution as Unbounded Evolution and Innovation

A hallmark feature of open-ended evolutionary systems is that they appear unbounded in their dynamical evolution1, 11, 12. For finite systems, such as those we encounter in the real world, the concept of “unbounded” is not well-defined. In part this is because all finite systems will eventually repeat, as captured by the well-known Poincaré recurrence theorem. As stated in the theorem, finite systems are bounded by their Poincaré recurrence time, which is the maximal time after which a system will start repeating its prior evolution. The Poincaré recurrence time t P of a finite, closed deterministic dynamical system therefore provides a natural bound on when one should expect such a system to stop producing novelty. In other words, t p is an absolute upper-bound on when such a system will terminate any appearance of open-endedness.

Potentially the Poincaré recurrence theorem can locally be violated by a subsystem with open boundary conditions or if the subsystem is stochastic (although in the latter case the system might still be expected to approximately repeat). We therefore consider a definition of unbounded evolution applicable to any instance of a dynamical system that can be decomposed into two interacting subsystems. Nominally, we refer to these two interacting subsystems as the “organism” (o) and “environment” (e). We note that our framework is sufficiently general to apply to systems outside of biology: the concept of “organism” is meant only to stress that we expect this subsystem to potentially exhibit the rich dynamics intuitively anticipated of OEE when coupled to an environment (the environment, by contrast, is not expected to produce OEE behavior). The purpose of the second “environment” subsystem is to explicitly introduce external perturbations to the organism, where e is also part of the larger system under investigation and modulates the rule of o in a time-dependent manner. We therefore minimally define unbounded evolution (UE) as occurring when a sub-partition of a dynamical system does not repeat within its expected Poincaré recurrence time, giving the appearance of unbounded dynamics for given finite resources:

Definition 1

Unbounded evolution (UE): A system U that can be decomposed into two interacting subsystems o and e, exhibits unbounded evolution if there exists a recurrence time such that the state-trajectory or the rule-trajectory of o is non-repeating for t r > t P or \({t}_{r}^{^{\prime} } > {t}_{P}\) respectively, where t r is the recurrence time of the states, \({t}_{r}^{^{\prime} }\) the recurrence time of the rules, and t P is the Poincaré recurrence time (measured in units of update steps) for an equivalent isolated (non-perturbed) system o.

Since we consider o where the states and rules evolve in time, unbounded evolution can apply to the state or rule trajectory recurrence time and still satisfy Definition 1. That is, a dynamical system exhibits UE if and only if it can be partitioned such that the sequence of one of its subsystems’ states or dynamical rules are non-repeating within the expected Poincaré recurrence time t P of an equivalent isolated system. In other words, unbounded evolution is only possible in a system that is partitioned into at least two interacting subsystems. This way, one of the subsystems acts as an external driver for the rule evolution of the other subsystem, which can then be pushed past its expected maximal recurrence time, t P . We calculate the expected t P as that of an equivalent isolated system. By equivalent isolated system, we mean the set of all possible trajectories evolved from any initial state drawn from the same set of possible states as for o, but generated with a fixed rule, which can be any possible fixed rule. We will describe explicit examples using the Elementary Cellular Automata (ECA) rule space in Section Model Implementation, where the relevant set of states are those constructed from the binary alphabet {0, 1} and the set of rules for comparison are the ECA rules. ECA are defined as 1-dimensional CA with nearest-neighbor update rules: for an ECA of width w (number of cells across), equivalent isolated systems as defined here include all trajectories evolved with any fixed ECA rule from any initial state of width w, where t P is then t P = 2w and w = w o , where w o is the width of o.

Implementing the above definition of UE necessarily depends on counterfactual histories of isolated systems (e.g. of ECA in our examples). These counterfactual systems cannot, by definition, generate conditions for UE. This suggests as a corollary a natural definition for innovation in terms of comparison to the same set of counterfactual histories:

Definition 2

Innovation (INN): A system U that can be decomposed into two interacting subsystems o and e exhibits innovation if there exists a recurrence time t r such that the state-trajectory is not contained in the set of all possible state trajectories for an equivalent isolated (non-perturbed) system.

That is, a subsystem o exhibits INN by Definition 2 if its dynamics are not contained within the set of all possible trajectories of equivalent isolated systems. We note these definitions do not necessitate that the complexity of individual states increase with time, thus one might observe INN without a corresponding rise in complexity with time. Figure 1 shows a conceptual illustration of both UE and INN, as presented in Definitions 1 and 2.

State diagrams: A hypothetical example demonstrating the concepts of INN and UE. The possible set of states are S = {1, 2, 3, 4, 5, 6} and rules R = {α, β}. For each panel, the example state trajectory s is initialized with starting state s o = 3. For panels c and d the rule trajectory r is also shown. Highlighted in bold is the first iteration of the attractor for states (all panels) or rules (panel (c,d) only). For a discrete deterministic system of six states, the Poincaré recurrence time is t P = 6. Panel (a) shows the state transition diagram for hypothetical rule α where a trajectory initialized at s(t 0) = 3 visits two states. Panel (b) shows the state transition diagram for hypothetical rule β where a trajectory initialized at s(t 0) = 3 visits only one state. Since the trajectories in (a,b) evolve according to a fixed rule (are isolated) they do not display INN or UE and in general the recurrence time \({t}_{r}\ll {t}_{P}\). Panel (c) demonstrates INN, where the trajectory shown cannot be fully described by rule α or rule β alone. The state trajectory s and rule trajectory r both have a recurrence time of t r = 5, which is less than t P so this example does not exhibit UE. Panel (d) exhibits UE (and is also an example of INN). The trajectory shown cannot be described by rule α or rule β alone. The recurrence time for the state trajectory is t r = 13, which is greater than t P . The rule trajectory also satisfies the conditions for UE, with a recurrence time in this example that is longer than that of the state trajectory due to the fact that the state transition 2 → 5 could be driven by rule α or β depending on the coupling to an external system.

A motivation for including both Definitions 1 and 2 is that they encompass intuitive notions of “on-going production of novelty” (INN) and “unbounded evolution” (UE), both of which are considered important hallmarks of OEE8. UE can imply INN, but INN does not likewise imply UE. It might therefore appear that UE is sufficient to characterize OEE without needing to appeal to separately defining INN. The utility of including INN in our formalism is that it allows generalization to both infinite systems where UE is not defined, and to real-world systems where UE is not physically observable (since, for example, t P could in principle be longer than the age of the universe). For the latter, INN can be an approximation to UE, where higher values of INN indicate a system more likely to exhibit UE. Additionally, the combination of UE and INN can be used to exclude cases that appear unbounded but are only trivially so. For example, a partition of a system evolved according to a fixed dynamical rule could in principle locally satisfy UE, but would not satisfy INN since its dynamics could be shown to be equivalent to those generated from an appropriately constructed isolated system (e.g. a larger ECA in our example). An example is the time evolution of ECA Rule 3024, which is known to be a ‘complex’ ECA rule that continually generates novel patterns under open-boundary conditions. In cases such as this, it should be considered that it is the complexity at the open boundary of the system that is generating continual novelty and not a mechanism internal to the system itself. In other words, in such examples the complexity is generated by the boundary conditions. Since our biosphere has simple, relatively homogeneous boundary conditions (geochemical and radiative energy sources) the complexity of the biosphere likely arises due to internal mechanisms and is not trivially generated by the boundary conditions alone25. Since we aim to understand the intrinsic mechanisms that might drive OEE in real, finite dynamical systems, we therefore require both definitions to be satisfied for a dynamical system to exhibit non-trivial OEE.

Model Implementation

We evaluate different mechanisms for generating OEE against Definitions 1 and 2, utilizing the rule space of Elementary Cellular Automata (ECA) as a case study. ECA are defined as nearest-neighbor 1-dimensional CA operating on the two-bit alphabet {0, 1}. There are 256 possible ECA rules, and since the rule numbering is arbitrary, we label them according to Wolfram’s heuristic designation24. Due to their relative simplicity, ECA represent some of the most widely-studied CA, thus providing a well-characterized foundation for this study. Traditionally, ECA evolve according to a fixed dynamical rule starting from a specified initial state. As such, no isolated finite ECA can meet both of the criteria laid out in Definitions 1 and 2 as per our construction aimed at excluding trivial cases. An isolated ECA of width w will repeat its pattern of states by the Poincaré time t P = 2w (violating Definition 1). If we instead considered a CA of width w as a subsystem of a larger ECA it would not necessarily repeat within 2w time steps, but it would not be innovative (violating Definition 2). Thus, as stated, we can exclude trivial examples such as ECA Rule 30, or other unbounded but non-innovative dynamical processes, which repeatedly apply the same update rule. A list of model parameters are summarized in Table 1.

To exclude trivial unbounded cases, Definitions 1 and 2 are constructed to require that the dynamical rules themselves evolve in time. As we will show, utilizing the set of 256 possible ECA rules as the rule space for CA with time-dependent rules makes both UE and INN possible. Rules can be stochastically or deterministically evolved, and we explore both mechanisms here. We note that there exists a huge number of possible variants one might consider. We therefore focus on three variants that display important mechanisms implicated in generating OEE, including openness to an environment10 (of varying degrees in all three variants), state-dependent dynamics (regarded as a hallmark feature of life19, 21, 22), and stochasticity. Here openness to an environment is parameterized by the degree to which the rule evolution of o depends on the state (or rule) of o, as compared to its dependence on the state of e. Completely open systems are regarded as depending only on external factors, such that the time-dependence of the rule evolution is only a function of the environment. We also consider cases that are only partially open, where the rule evolution depends on both extrinsic and intrinsic factors.

Case I

The first variant, Case I, implements state-dependent update rules, such that the evolution of o depends on its own state and that of its environment. This is intended to provide a model that captures the hypothesized self-referential dynamics underlying biological systems (see e.g. Goldenfeld and Woese21) while also being open to an environment (we do not consider closed self-referential systems herein as treated in Pavlic et al. since these do not permit the possibility of UE26). We consider two coupled subsystems o and e, where the update rule of o is state-dependent and is a function of the state and rule of o, and the state of e at the same time t (thus being self-referential but also open to perturbations from an external system). That is, the update rule of o takes on the functional form \({r}_{o}(t+1)=f({s}_{o}(t),{r}_{o}(t),{s}_{e}(t))\), where s o and r o are the state and rule of the organism respectively, and s e is the state of the environment. We regard this case as only partly open to an environment since the evolution of the rule of o depends on its own state (and rule) in addition to the state of its environment. By contrast, the subsystem e is closed to external perturbation and evolves according to a fixed rule (such that e is an ECA). Both o and e have periodic boundary conditions (o is only open in the sense that its rule evolution is in part externally driven). A schematic illustration of the time evolution of an ECA, and the coupling between subsystems in a Case I CA is shown in Fig. 2.

Illustrations of the time evolution of a standard ECA (left) and of a Case I state-dependent CA (right). ECA evolve according to a fixed update rule (here Rule 30), with the same rule implemented at each time step. In an ECA rule table, the cell representation of all possible binary ordered triplets is shown in the top row, with the cell representation of the corresponding mapping arising from Rule 30 shown below. Rule 30 therefore has the binary representation 00011110. In a Case I CA (right), the environment subsystem e evolves exactly like an ECA with a fixed rule. The organism subsystem o, by contrast, updates its rule at each time-step depending on its rule at the previous time-step, its own state (green arrows) and the state of e (red arrows). The new rule for o is then implemented to update the state of o (blue arrows). The rules are therefore time-dependent in a manner that is a function of the states of o and e and the past history of o (through the dependence on the rule at the previous time-step).

To demonstrate how the organism in our example of a Case I CA changes its update rule, we provide a simple illustrative example of the particular function f(s o (t), r o (t), s e (t)) implemented in this work (see Fig. 3 and Supplement 1.1). Specifically, we utilize an update function that takes advantage of the binary representation of ECA. An example of the structure of an ECA rule is shown for Rule 30 in Fig. 2. ECA rules are structured such that each successive bit in the binary representation of the rule is the output of one of the 23 possible ordered sets of triplet states. The left panel of Fig. 3 shows an example of a few times steps of the evolution of an organism o of width w o = 4 (right) coupled to an environment e with width w e = 6 (left), where o implemented rule 30 at t − 1. At each time-step t the frequency of each of the 23 ordered triplet states (listed in the top row of Fig. 2) in the state of o is compared to the frequency of the same ordered triplet in the state of e. If the frequency in o meets or exceeds the frequency in e for a given triplet, the bit corresponding to the output of that triplet in the rule of o is flipped from 0 ↔ 1. For the example in the left panel of Fig. 3, the triplet frequencies are listed in the table in the right panel of Fig. 3. We note that for our implementation, the frequency of a triplet in o is calculated relative to the total number of possible triplets in s o , which is 4 in this example (and likewise for e, with 6 possible triplets in the current state). We compare the frequency only for those triplets that appear in the state of o at time t. In the table, only the triplet 101 is expressed more frequently in the organism o than in the environment e. The interaction between o and e changes r o from Rule 30 at time-step t to Rule 62 at t + 1, as shown in Fig. 3. The rule may change by more than one bit in its binary representation in a single time step if multiple triplets meet the criteria to change the organism’s update rule.

Example of the implementation of a Case I organism in our example. Shown is an organism o of width w o = 4, coupled to an environment e with width w e = 6, where the rule of o at time step t is r o (t) = 30. (a) At each time step t, the frequency of ordered triplets are compared in the state of the organism and that of the environment, s o and s e respectively, and used to update \({r}_{o}(t)\to {r}_{o}(t+1)\) (see text for algorithm description). (b) Table of the calculated frequency of ordered triplets in the state of the environment and in the state of the organism for time step t shown in the left panel. (c) Update of r o from Rule 30 to Rule 62, based on the frequency of triplets in the table (b).

We note that we do not expect the qualitative features of Case I CA reported here to depend on the precise form of the state-dependent update rule as presented, so long as the update of r o depends on the state and rule of o, and the state of e (that is, o is self-referential and open – see Pavlic et al.26 for an example of non-open self-referencing CA that does not display UE). We explored some variants to this rule-changing mechanism. None of our variants significantly changed the statistics of the results, indicating that the qualitative features of the dynamics do not depend on the exact (and somewhat parochial) details of the example presented herein. Instead, we regard the important part to be the general feature of self-reference coupled to openness to an environment that is driving the interesting features of the dynamics observed, where we can focus on just one example in this study for computational tractability in generating large ensemble statistics. The example implemented here was chosen since it takes explicit advantage of the structure of ECA rules (by flipping bits in the rule table) to provide a simple, open state-dependent mechanism for producing interesting dynamics.

Case II

We introduce a second variant of CA, Case II, that is similarly composed of two spatially segregated, fixed-width, 1-dimensional CA: an organism o and an environment e. As with Case I, the environment e is an execution of an ECA, and is evolved according to a fixed rule drawn from the set of 256 possible ECA rules. The key difference between Case I and Case II CA is that for Case II, the the update rule of the subsystem o depends only on the state of the external environment e and is therefore independent of the current state or rule of o – that is, o is not self-referential in this example. Case II CA emulate systems where the rules for dynamical evolution are modulated exclusively by the time evolution of an external system. We consider o in this example to be more open to its environment than for Case I, since the rule evolution of o depends only on e. The functional form of Case II rule evolution may be written as \({r}_{o}(t+1)=f({s}_{e}(t))\), where r o is the rule of o and s e is the state of the environment (see Supplement 1.2). For the example presented here, we implement a map f that takes s e (t) → r o (t) that is 1:1 from the state of e to the binary representation of the rule of o (determined according to Wolfram’s binary classification scheme). Therefore for the implementation of Case II in our example the environment must be of width w e = 8.

Case III

The final variant, Case III, is composed of a single, fixed-width, 1-dimensional CA with periodic boundary conditions, which is identified as the organism o. Like with Case II, the rule evolution of Case III is driven externally and does not depend on o. However, here the external environment e is stochastic noise and not an ECA. The subsystem o has a time-dependent rule where each bit in the rule table is flipped with a probability μ (“mutation rate”) at each time step. In functional form, the subsystem o updates its rule such that \({r}_{o}(t+1)=f({r}_{o}(t),\xi )\), where r o is the rule of o, and ξ is a random number drawn from the interval [0, 1) (see Supplement 1.3). At each time step, for each bit in the rule table, a random number ξ is drawn, and if ξ is above a threshold μ, that bit is flipped 0 ↔ 1 at that time step. This implements a diffusive-random walk through ECA rule space. Since the rule of o at time t + 1, r o (t + 1), depends on the rule at time t, r 0(t), the dynamics of Case III CA are history-dependent in a similar manner to Case I (both rely on flipping bits in r o (t), where Case I do so deterministically as a function of s o and s e , and Case III do so stochastically). In this example, o is also more open to its environment than in Case I since the organism’s rule does not depend on s o , but it is less open than Case II since the rule does depend on the previous organism rule used.

All three variants are summarized in Table 2 (see Supplement 1), where the functional dependencies of the rule evolution in each example are explicitly compared. Since we restrict the rule space for Cases I–III to that of ECA rules only, the trajectories of ECA with periodic boundary conditions provides a well-defined set of isolated counterfactual trajectories with which to evaluate Definitions 1 and 2. For comparison to isolated systems, we evaluate all ECA of width w o , where w o is the width of the “organism” subsystem o. We test the capacity for each of the three cases presented to generate OEE against Definitions 1 and 2 in a statistically rigorous manner, and compare the efficacy of the different mechanisms implemented in each case.

Experimental Methods

For Cases I–III, we evolve o with periodic boundary conditions (such that interaction with the environment is only through the rule evolution). For Cases I and II, e is also a CA with periodic boundary conditions. For Case I, where w e must also be specified, we consider systems with w e = 1/2w o , w o , 3/2w o , 2w o and 5/2w o , where w o is the width o. For Case II, w e = 8 for all simulations, since this permits a 1:1 map from the possible states of e to the rule space of ECA. Results for Case III are given for organism rule mutation rate μ = 0.5, such that each outcome bit in the rule evolution has a 50% probability of flipping at every time step for ξ drawn from the interval [0, 1) (a bit flips when μ > ξ). Other values of μ were explored, with qualitatively similar results (see Supplement Fig. S4).

The number of possible executions grows exponentially large with width w o , limiting the computational tractability of statistically rigorous sampling. We therefore explored small CA with \({w}_{o}=3,4,\ldots 7\) and sampled a representative subspace of each (see Supplement 2). For each system sampled, we measured the recurrence times of the rule (\({t}_{r}^{^{\prime} }\)) and state (t r ) trajectories for o. For Case III CA, which are stochastic, all simulations eventually terminated as a random oscillation between the all ‘0’ state and the all ‘1’ state. We therefore used the timescale of reaching this oscillatory attractor as a proxy for the state recurrence time t r . In cases where t r > t P or \({t}_{r}^{^{\prime} } > {t}_{P}\), where \({t}_{P}={2}_{o}^{w}\) for isolated ECA (Definition 1), and the state trajectory was not produced by any ECA execution of width w o (Definition 2), the system is considered to exhibit OEE.

We measured the complexity of the resulting interactions by calculating relative compressibility, C, and by the system’s sensitivity based upon Lyapunov exponents, k 27 (see Supplement 8). Large values of C indicate low Kolmogorov-Chaitin complexity, meaning the output can be produced by a simple (short) program. Large values of k indicate complex dynamics, with trajectories that rapidly diverge for small perturbations such as occurs in deterministic chaos27. These values are compared to those of ECA. Additionally, ECA rules are often categorized in terms of four Wolfram complexity classes, I–IV24. Class I and II are considered simple because all initial patterns evolve quickly into a stable or oscillating, homogeneous state. Class III and IV rules are viewed as generating more complex dynamics. We use the complexity classes of the rules utilized in time-dependent rule evolution to determine whether the complexity of time-dependent CAs is a product of the ECA rules implemented, or if it is generated through the mechanism of time-dependence.

Results

The vast majority of executions sampled from all three CA variants were innovative by Definition 2, with >99% of Case II and Case III CAs displaying INN. For Case I CA, the percentage of INN cases increased as a function of both w o and w e , ranging from ~30% for the smallest CA explored to >99% for larger systems (see Supplement 5). This is intuitive, since the majority of organisms with changing updates rules should be expected to exhibit different state-trajectories than ECA. The fact that >99% of organisms are innovative in our examples may seem to indicate that INN is trivial. However, we note that INN conceptually becomes more significant when considering infinite systems (where UE is not defined) or large systems where t P is not measurable (and thus UE cannot be calculated). We show below that INN scales with recurrence time, and that measuring innovation over a finite timescale could provide a method for approximating UE. INN is therefore useful to the analysis of large or infinite systems where the methods implemented here to detail candidate mechanisms are not directly applicable to test UE. INN is also necessary to exclude trivial OEE. We also note that for computational tractability we compare the time evolution of o only to ECA, but in practice one could (and perhaps should) compare o to dynamical systems evolved according to any fixed rule (e.g. regardless of neighborhood size, which for ECA is n = 3), in which case we might expect the number of INN cases to decrease and therefore INN would be more non-trivial even for small systems.

By contrast to cases exhibiting INN, OEE cases are much rarer, even for our highly simplified examples, due to the fact that the number of UE cases is much smaller, typically representing <5% of all the sampled trajectories in the examples studied here. We therefore focus discussion primarily on sampled executions meeting the criteria for OEE, i.e. those that satisfied Definitions 1, before returning to how INN might approximate UE.

Open-Ended Evolution in CA Variants

The percentage of sampled cases for each CA variant that satisfies Definitions 1 for UE are shown in Table 3, where for purposes of more direct comparison Case I CA statistics are shown only for w o = w e . Case I CA statistics for other relative values of w o and w e are shown in Table 4. Examples of Case I CA exhibiting OEE are shown in Fig. 4, demonstrating the innovative patterns that can emerge due to time dependent rules. Box plots of the distribution of measured recurrence times for each CA variant are shown in Figs 5 and 6. All UE cases presented here are also INN, and thus exhibit OEE. We therefore refer to UE and OEE interchangeably (without explicitly referencing OEE as cases exhibiting UE and INN separately).

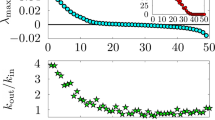

Distribution of recurrence times t r for the state trajectory of o for Case I CA. From top to bottom are distributions for w e = 1/2w o , w o , 3/2w o , 2w o and 5/2w o , respectively. In all panels the black horizontal line indicates where t r /t P = 1 (shown on a log scale). Sampled trajectories displaying UE occur for t r /t P > 1.

To compare the capacity for OEE across the different CA variants tested, it is useful to define a notion of scalability17. Here we define scalable systems as ones where the number of observed OEE cases can increase without the need to either (1) change the rule-updating mechanism of o or (2) significantly change the statistics of sampled cases. By this definition, the two primary mechanisms for increasing the number of OEE cases in a scalable manner are by changing w o , or depending on the nature of the coupling between o and e, changing w e (with the constraint that the rule-updating mechanism cannot change).

As expected (by definition), isolated ECA do not exhibit any OEE cases and the majority of ECA have recurrence times \({t}_{r}\ll {t}_{p}\). However, all three CA variants with time-dependent rules do exhibit examples of OEE, but differ in the percentage of sampled cases and their scalability. Case III exhibits the simplest dynamics, where trajectories follow a diffusive random walk through rule space until the system converges on a random oscillation between the all- ‘0’ and all-‘1’ states (where t r is approximated by this convergence time). The frequency of state recurrence times t r of the organism decreases exponentially (see Supplement Fig. S4), such that the o with the longest recurrence times are exponentially rare. Since so few examples were found for organisms of size w o = 7, we also tested w o = 8 and found no examples of OEE. In general, the exponential decline observed is steeper for increasing w o . Observing more OEE cases therefore requires exponentially increasing the number of sampled trajectories for increasing w o . The capacity for Case III CA to demonstrate OEE is therefore not scalable with system size (violating condition 2) in our definition above). An additional limitation of Case III CA is that their long-term dynamics are relatively simple once the system settles into the oscillatory attractor, thus the majority of observations of Case III CA would not yield interesting dynamics (e.g. if the observation time were much greater than the start time \({t}_{obs}\gg {t}_{o}\)).

For Case II, we also observed a steep decline in the number of OEE cases observed for increasing w o (Table 3). This is reflected by a steady decrease in the mean of the recurrence times for increasing w o , as shown in Fig. 6. We also tested a large statistical sample of organisms of size w o ≥ 8 for Case II CA (not shown) and found no examples of OEE cases. This is not wholly unexpected. For Case II with w o = 8, the environment and organism are the same size (w e = w o ). Therefore e and o share the same Poincaré time \({t}_{P}={2}^{{w}_{o}}\). The subsystem e is a traditional ECA, therefore the majority of e will exhibit recurrence times \(\ll \) t P (see e.g. trend in Fig. 6). Since the rule of o is determined by a 1:1 map from the state of e, the rule recurrence time of o will also be much less than the Poincaré time, such that \({t}_{r}^{^{\prime} }\ll {t}_{P}\). It is the rule evolution that drives novelty in the state evolution, we therefore also see that the state recurrence time is similarly limited such that \({t}_{r}\ll {t}_{P}\) also holds. To get around this limitation one could increase the size of the environment such that w e > w o . However, since the rule for o is a 1:1 map from the state of e, this would require changing the updating rule scheme for o. That is, the organism o would have to change how it evolves in time as a function of its environment (violating 1) in our definition of scalability above. By our definition of scalability, this is not a scalable mechanism for generating OEE since o must change the function for its updating rule and therefore would represent a different o.

We can compare the statistics of sampled OEE cases for Case I where w o = w e to those of Case II and Case III, as in Table 3. While Case II and Case III CA see a steep drop-off in the percentage of sampled cases exhibiting OEE with increasing organism size w o , the Case I CA exhibit a flatter trend. We determined whether this trend holds for varying w e by also analyzing statistics for Case I CA where \({w}_{e}=\tfrac{1}{2}{w}_{o}\), w o , \(\tfrac{3}{2}{w}_{o}\), 2w o and \(\tfrac{5}{2}{w}_{o}\). The statistics of OEE cases sampled are shown in Table 4 and box plots of the distribution of recurrence times are shown in Fig. 5. For each fixed environment size explored (w e , columns in Table 4), we observe that the statistics do not decrease dramatically as the size of the organism increases (increasing w o ). For fixed organism size (w o , rows in Table 4), we observe that the number of OEE cases increases with increasing environment size. These trends are also reflected in the means of the distributions shown in Fig. 5. Case I represents a scalable mechanism for OEE as o can be coupled to larger environments and will produce more OEE cases.

Case I and Case II can be contrasted to gain insights into scalability. The key difference between the two variants is that for Case II the update rule of o is a 1:1 map with the state of e, whereas for Case I the map is self-referencing and is many:1. Case I therefore uses a coarse-grained representation of the environment for updating the rule of o and because the dynamics are self-referential, the same pattern in the environment can lead to different rule transitions in o, depending on the previous state and rule of o. Thus, although both Case I and Case II exhibit trends of increasing OEE as w e is increased relative to w o , the degree to which the size of the environment can impact the time evolution of the organism is different for the two cases. For a comparable size environment in Case I and Case II CA, the pattern relevant to the update of o may have a longer recurrence time than the actual states of e for Case I CA (due to the coarse-graining), whereas for Case II CA this pattern is strictly limited by the environment’s recurrence time. Additionally, due to the coarse-graining of the environment in Case I CA, the update rule of o is not dependent on the size of e: the same exact function for updating the rule of o may be applied independent of the environment size. This is not true for Case II, where the function for updating the rule of o must change in order to accommodate larger environments.

INN as a Proxy for UE

We have presented examples of small dynamical systems to perform rigorous statistical testing of INN and UE to evaluate candidate mechanisms for generating OEE. An important question is how the results might apply to larger dynamical systems that could depend on different mechanisms than those testable in simple, discrete systems. While an approximation of INN is in principle measurable for large or infinite dynamical systems, UE is not measurable or not well-defined. We therefore aimed to determine if INN can be utilized as a proxy for UE. To do so, we defined a new parameter n r , which quantifies the number of times that an organism changed its update rule during a finite time interval τ in its dynamical evolution. We normalized to determine the relative innovation of an organism \(I=\frac{{n}_{r}}{\tau }\) to generate a standardized measure for comparing across example organisms in our study, where for the cases presented here τ = 2w. Statistically representative results for Case I and Case II organisms are shown in Fig. 7, where I is plotted against the organism’s state recurrence time (Case III results are not included since the recurrence time is not well-defined). For both Case I and Case II a clear trend is apparent where innovation is positively (and nearly linearly) correlated with recurrence time. For a given recurrence time, OEE cases (highlighted in red) are the most innovative. Comparing the two panels, it is evident that Case I CA exhibit higher innovation and therefore achieve longer recurrence times than Case II CA. From these results we can conclude that a statistical measure sampling the number of observed rule transitions over a finite interval τ could be used to approximate recurrence time and therefore provide a proxy measure for UE. However, this leaves open the question of how large the interval τ should be for accurately estimating \({I}_{\tau \to {t}_{r}}\) in cases where t r is not observable, and for identifying how I scales with t p for a given generating mechanism for the dynamics (that is, such that I = αt r could be solved for t r , where α is a scaling of innovation relative to recurrence time). We leave these questions as a subject for future work.

On-going Generation of Complexity in Case I

We also considered the complexity of Case I CA, relative to isolated ECA, as a further test of their scalability and potential to generate complex and novel dynamics. We characterized the complexity of Case I using two standard complexity measures, compressibility (C) and Lyapunov exponent (k). The trends demonstrate that in general C decreases with increasing organism width w o , but increases with increasing environment size w e (left panel, Fig. 8), indicative of increasing complexity with organism width w o . Similar trends are observed for the Lyapunov exponent, as shown in the right panel of Fig. 8, where it is evident that increasing w o or w e leads to an increasing number of cases with higher Lyapunov exponent k. OEE cases tend to have the highest k values (see Supplement Fig. S12). As C is normalized relative to ECA (see Supplement 8), we conclude that Case I CA are generally more complex than ECA evolved according to fixed dynamical rules, and this is especially true for OEE cases.

Heat maps of compression C (left) and Lyapunov exponent values k (right) for all state trajectories of sampled o for Case I CA. From top to bottom w o = 3, 4, 5, 6 and 7, with distributions shown for \({w}_{e}=\tfrac{1}{2}{w}_{o}\), w o , \(\tfrac{3}{2}{w}_{o}\), 2w o and \(\tfrac{5}{2}{w}_{o}\) (from top to bottom in each panel, respectively) for each w o . Distributions are normalized to the total size of sampled trajectories for each w o and w e (see statistics in Table S3).

We also analyzed the ECA rules implemented in sampled Case I trajectories relative to the Wolfram Rule complexity classes. We find that Case I CA, on average, implement more Class I and II rules than Class III or IV, as shown in the frequency distribution of Fig. 9 for Case I CA with w o = w e (see Supplement Figs 5 and 6). Thus, we can conclude that the complexity generated by Case I CA is intrinsic to the state-dependent mechanism, and is not attributable to Class III and Class IV ECA rules dominating the rule evolution of o.

Discussion

We have provided formal definitions of unbounded evolution (UE) and innovation (INN) that can be evaluated in any finite dynamical system, provided it can be decomposed into two interacting subsystems o and e. Systems satisfying both UE and INN we expect to minimally represent mechanisms capable of OEE. Testing the criteria for UE and INN against three different CA models with time-dependent rules reveals what we believe to be quite general mechanisms applicable to a broad class of OEE systems.

Mechanisms for OEE

Our analysis indicates that there are potentially many time-dependent mechanisms that can produce OEE in a subsystem o embedded within a larger dynamical system, but that some may be more “interesting” than others. An externally driven time-dependence for the rules of o (Case II), while producing the highest statistics of OEE cases sampled for small o, does not provide a scalable mechanism for producing OEE with increasing system size, unless the structure of o itself is fundamentally altered (such that the rule space changes). Stochastically driven rule evolution displays rich transient dynamics, but ultimately subsystems converge on dynamics with low complexity (Case III). An alternative is to introduce stochasticity to the states, rather than the rules, which would avert this issue. This has the drawback that the mechanism for OEE is then not as clearly mappable to biological processes (or other mechanisms internal to the system), where the genotype (rules) evolve due to random mutations that then dictate the phenotype (states).

We regard Case I as the most interesting mechanism explored herein for generating conditions favoring OEE: it is scalable and the dynamics generated are novel. We note that the state-dependent mechanism represents a departure from more traditional approaches to modeling dynamical systems, e.g. as occurs in the physical sciences, where the dynamical rule is usually assumed to be fixed. In particular, it represents an explicit form of top-down causation, often regarded as a key mechanism in emergence19, 28 that could also play an important role in driving major evolutionary transitions29. The state-dependent mechanism is also consistent with an important hallmark of biology – that biological systems appear to implement self-referential dynamics such that the “laws” in biology are a function of the states19, 21, 22, a feature that also appears to be characteristic of the evolution of language30, 31.

Applicability to Other Dynamical Systems

We have independently explored openness to an environment, stochasticity and state-dependent dynamics as we expect these to be general and apply to a wide-range of dynamical systems that might similarly display OEE by satisfying Definitions 1 and 2. An important feature of these definitions is that UE and INN must be driven by extrinsic factors (an environment)32, although the mechanisms driving the dynamics characteristic of OEE should be intrinsic to the subsystem of interest. OEE can therefore only be a property of a subsystem. We have not explored the case of feedback from o to e that might drive further open-ended dynamics, as characteristic of the biosphere, for example in niche construction33, but expect even richer dynamics to be observed in such cases. For large or infinite dynamical systems INN is an effective proxy for UE, and we expect highly innovative systems to be the most likely candidates for open-ended evolution.

Conclusions

Our results demonstrate that OEE, as formalized herein, is a general property of dynamical systems with time-dependent rules. This represents a radical departure from more traditional approaches to dynamics where the “laws” remain fixed. Our results suggest that uncovering the principles governing open-ended evolution and innovation in biological and technological systems may require removing the segregation of states and fixed dynamical laws characteristic of the physical sciences for the last 300 years. In particular, state-dependent dynamics have been shown to out-perform other candidate mechanisms in terms of scalability, suggestive of paths forward for understanding OEE. Our analysis connects all four hallmarks of OEE and provides a mechanism for producing OEE that is consistent with the self-referential nature of living systems. By casting the formalism of OEE within the broader context of dynamical systems theory, the proof-of-principle approach presented opens up the possibility of finding unifying principles of OEE that encompass both biological and artificial systems.

References

Bedau, M. A., Snyder, E. & Packard, N. H. A classification of long-term evolutionary dynamics. In Artificial life VI, 228–237, Cambridge: MIT Press (1998).

Bettencourt, L. M., Lobo, J., Helbing, D., Kühnert, C. & West, G. B. Growth, innovation, scaling, and the pace of life in cities. Proceedings of the national academy of sciences 104, 7301–7306, doi:10.1073/pnas.0610172104 (2007).

Seyfarth, R. M., Cheney, D. L. & Bergman, T. J. Primate social cognition and the origins of language. Trends in cognitive sciences 9, 264–266, doi:10.1016/j.tics.2005.04.001 (2005).

Buchanan, A., Packard, N. H. & Bedau, M. A. Measuring the evolution of the drivers of technological innovation in the patent record. Artificial life 17, 109–122, doi:10.1162/artl_a_00022 (2011).

Skusa, A. & Bedau, M. A. Towards a comparison of evolutionary creativity in biological and cultural evolution. Artificial Life 8, 233–242 (2003).

Oka, M., Hashimoto, Y. & Ikegami, T. Open-ended evolution in a web system. Late Breaking Papers at the European Conference on Artificial Life 17 (2015).

Bedau, M. A. et al. Open problems in artificial life. Artificial life 6, 363–376, doi:10.1162/106454600300103683 (2000).

Banzhaf, W. et al. Defining and simulating open-ended novelty: requirements, guidelines, and challenges. Theory in Biosciences, 1–31 (2016).

Taylor, T. J. From artificial evolution to artificial life. Ph.D. thesis, University of Edinburgh, College of Science and Engineering, School of Informatics (1999).

Ruiz-Mirazo, K., Umerez, J. & Moreno, A. Enabling conditions for open-ended evolution. Biology & Philosophy 23, 67–85 (2008).

Bedau, M. Can biological teleology be naturalized? The Journal of Philosophy, 647–655 (1991).

Bedau, M. A. & Packard, N. H. Measurement of evolutionary activity, teleology, and life. Artificial Life II, Santa Fe Institute Studies in the Sciences of Complexity (1992).

Fernando, C., Kampis, G. & Szathmáry, E. Evolvability of natural and artificial systems. Procedia Computer Science 7, 73–76, doi:10.1016/j.procs.2011.12.023 (2011).

Ruiz-Mirazo, K. & Moreno, A. Autonomy in evolution: from minimal to complex life. Synthese 185, 21–52, doi:10.1007/s11229-011-9874-z (2012).

Guttenberg, N. & Goldenfeld, N. Cascade of complexity in evolving predator-prey dynamics. Physical review letters 100, 058102, doi:10.1103/PhysRevLett.100.058102 (2008).

Ruiz-Mirazo, K., Peretó, J. & Moreno, A. A universal definition of life: autonomy and open-ended evolution. Origins of Life and Evolution of the Biosphere 34, 323–346, doi:10.1023/B:ORIG.0000016440.53346.dc (2004).

Taylor, T. et al. Open-ended evolution: Perspectives from the oee1 workshop in york. Artificial Life (2016).

Zenil, H. Compression-based investigation of the dynamical properties of cellular automata and other systems. Complex Systems 19, 1–28 (2010).

Walker, S. I. & Davies, P. C. The algorithmic origins of life. Journal of the Royal Society Interface 10, 20120869–20120869, doi:10.1098/rsif.2012.0869 (2013).

Davies, P. C. & Walker, S. I. The hidden simplicity of biology. Reports on Progress in Physics 79, 102601, doi:10.1088/0034-4885/79/10/102601 (2016).

Goldenfeld, N. & Woese, C. Life is physics: Evolution as a collective phenomenon far from equilibrium. Annu. Rev. Condens. Matter Phys. 2, 375–399, doi:10.1146/annurev-conmatphys-062910-140509 (2011).

Douglas, R. H. Gödel, Escher, Bach: An Eternal Golden Braid. Basic Books, New York (1979).

Noble, D. A theory of biological relativity: no privileged level of causation. Interface Focus 2, 55–64, doi:10.1098/rsfs.2011.0067 (2012).

Wolfram, S. A New Kind of Science, vol. 5. Wolfram Media Champaign (2002).

Smith, E. Thermodynamics of natural selection i: Energy flow and the limits on organization. Journal of theoretical biology 252, 185–197, doi:10.1016/j.jtbi.2008.02.010 (2008).

Pavlic, T. P., Adams, A. M., Davies, P. C. & Walker, S. I. Self-referencing cellular automata: A model of the evolution of information control in biological systems. Proc. of the Fourteenth International Conference on the Synthesis and Simulation of Living Systems (ALIFE 14) (2014).

Tisseur, P. Cellular automata and lyapunov exponents. Nonlinearity 13, 1547–1560, doi:10.1088/0951-7715/13/5/308 (2000).

Ellis, G. F. Top-down causation and emergence: some comments on mechanisms. Interface Focus, rsfs20110062 (2011).

Walker, S. I., Cisneros, L. & Davies, P. C. Evolutionary transitions and top-down causation. Proceedings of Artificial Life XIII, 283–290 (2012).

Levary, D., Eckmann, J.-P., Moses, E. & Tlusty, T. Loops and self-reference in the construction of dictionaries. Physical Review X 2, 031018, doi:10.1103/PhysRevX.2.031018 (2012).

Kataoka, N. & Kaneko, K. Functional dynamics. i: Articulation process. Physica D: Nonlinear Phenomena 138, 225–250, doi:10.1016/S0167-2789(99)00230-4 (2000).

Taylor, T. Redrawing the boundary between organism and environment. Proceedings of Artificial Life IX, 268–273 (2004).

Laubichler, M. D. & Renn, J. Extended evolution: A conceptual framework for integrating regulatory networks and niche construction. Journal of Experimental Zoology Part B: Molecular and Developmental Evolution 324, 565–577, doi:10.1002/jez.b.v324.7 (2015).

Acknowledgements

This project was made possible through support of a grant from the Templeton World Charity Foundation. The opinions expressed in this publication are those of the author(s) and do not necessarily reflect the views of Templeton World Charity Foundation. We thank S. Wolfram, T. Rowland, T. Beynon, A. Rueda-Toicen, and C. Marletto, and the Emergence group at ASU for helpful discussions related to this work.

Author information

Authors and Affiliations

Contributions

A.A., H.Z. and S.I.W. designed the research; A.A. and S.I.W. performed research; A.A., H.Z., P.D. and S.I.W. analyzed data; A.A., H.Z., P.D. and S.I.W. wrote the paper.

Corresponding author

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Adams, A., Zenil, H., Davies, P.C.W. et al. Formal Definitions of Unbounded Evolution and Innovation Reveal Universal Mechanisms for Open-Ended Evolution in Dynamical Systems. Sci Rep 7, 997 (2017). https://doi.org/10.1038/s41598-017-00810-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-017-00810-8

This article is cited by

-

Programmable pattern formation in cellular systems with local signaling

Communications Physics (2021)

-

Self-replication of a quantum artificial organism driven by single-photon pulses

Scientific Reports (2021)

-

Possibility spaces and the notion of novelty: from music to biology

Synthese (2019)

-

Hidden Concepts in the History and Philosophy of Origins-of-Life Studies: a Workshop Report

Origins of Life and Evolution of Biospheres (2019)

-

Trans-algorithmic nature of learning in biological systems

Biological Cybernetics (2018)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.