Abstract

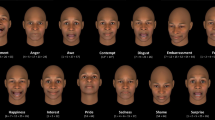

Understanding the degree to which human facial expressions co-vary with specific social contexts across cultures is central to the theory that emotions enable adaptive responses to important challenges and opportunities1,2,3,4,5,6. Concrete evidence linking social context to specific facial expressions is sparse and is largely based on survey-based approaches, which are often constrained by language and small sample sizes7,8,9,10,11,12,13. Here, by applying machine-learning methods to real-world, dynamic behaviour, we ascertain whether naturalistic social contexts (for example, weddings or sporting competitions) are associated with specific facial expressions14 across different cultures. In two experiments using deep neural networks, we examined the extent to which 16 types of facial expression occurred systematically in thousands of contexts in 6 million videos from 144 countries. We found that each kind of facial expression had distinct associations with a set of contexts that were 70% preserved across 12 world regions. Consistent with these associations, regions varied in how frequently different facial expressions were produced as a function of which contexts were most salient. Our results reveal fine-grained patterns in human facial expressions that are preserved across the modern world.

This is a preview of subscription content, access via your institution

Access options

Access Nature and 54 other Nature Portfolio journals

Get Nature+, our best-value online-access subscription

$29.99 / 30 days

cancel any time

Subscribe to this journal

Receive 51 print issues and online access

$199.00 per year

only $3.90 per issue

Buy this article

- Purchase on Springer Link

- Instant access to full article PDF

Prices may be subject to local taxes which are calculated during checkout

Similar content being viewed by others

Data availability

Anonymized (differentially private) versions of the context–expression correlations in each country are available from the GitHub repository (https://github.com/google/context-expression-nature-study). Owing to privacy concerns, video identifiers and annotations cannot be provided.

Code availability

Code to read and visualize the anonymized context–expression correlations within each world region is available from the GitHub repository (https://github.com/google/context-expression-nature-study). Code to generate interactive online maps (Fig. 1a) is available in the GitHub repository (https://github.com/krsna6/interactive-embedding-space)57. The trained video-processing algorithms used in this study are proprietary, but similar tools to annotate contexts in video and text, detect faces and classify the language of speech are available via the Google Cloud Video Intelligence API, Natural Language API and Speech-to-Text API, respectively (see https://cloud.google.com/apis).

References

Cowen, A. S. & Keltner, D. Clarifying the conceptualization, dimensionality, and structure of emotion: response to Barrett and colleagues. Trends Cogn. Sci. 22, 274–276 (2018).

Moors, A., Ellsworth, P. C., Scherer, K. R. & Frijda, N. H. Appraisal theories of emotion: state of the art and future development. Emot. Rev. 5, 119–124 (2013).

Ekman, P. & Cordaro, D. What is meant by calling emotions basic. Emot. Rev. 3, 364–370 (2011).

Keltner, D. & Haidt, J. Social functions of emotions at four levels of analysis. Cogn. Emot. 13, 505–521 (1999).

Niedenthal, P. M. & Ric, F. in Psychology of Emotion 98–123 (Routledge, 2017).

Keltner, D., Kogan, A., Piff, P. K. & Saturn, S. R. The sociocultural appraisals, values, and emotions (SAVE) framework of prosociality: core processes from gene to meme. Annu. Rev. Psychol. 65, 425–460 (2014).

Elfenbein, H. A. & Ambady, N. On the universality and cultural specificity of emotion recognition: a meta-analysis. Psychol. Bull. 128, 203–235 (2002).

Ekman, P. in The Nature of Emotion (eds Ekman, P. & Davidson, R.) 15–19 (Oxford Univ. Press, 1994).

Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M. & Pollak, S. D. Emotional expressions reconsidered: challenges to inferring emotion from human facial movements. Psychol. Sci. Public Interest 20, 1–68 (2019).

Cowen, A., Sauter, D., Tracy, J. L. & Keltner, D. Mapping the passions: toward a high-dimensional taxonomy of emotional experience and expression. Psychol. Sci. Public Interest 20, 69–90 (2019).

Kollareth, D. & Russell, J. A. The English word disgust has no exact translation in Hindi or Malayalam. Cogn. Emot. 31, 1169–1180 (2017).

Mesquita, B. & Frijda, N. H. Cultural variations in emotions: a review. Psychol. Bull. 112, 179–204 (1992).

Russell, J. A. Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychol. Bull. 115, 102–141 (1994).

Cowen, A. S. & Keltner, D. What the face displays: mapping 28 emotions conveyed by naturalistic expression. Am. Psychol. 75, 349–364 (2020).

Holland, A. C. & Kensinger, E. A. Emotion and autobiographical memory. Phys. Life Rev. 7, 88–131 (2010).

Kok, B. E. et al. How positive emotions build physical health: perceived positive social connections account for the upward spiral between positive emotions and vagal tone. Psychol. Sci. 24, 1123–1132 (2013).

Diener, E., Napa Scollon, C. & Lucas, R. E. The evolving concept of subjective well-being: the multifaceted nature of happiness. Adv. Cell Aging Gerontol. 15, 187–219 (2003).

Jou, B. et al. Visual affect around the world: a large-scale multilingual visual sentiment ontology. In MM’15: Proc. 23rd ACM International Conference on Multimedia 159–168 (2015).

Jackson, J. C. et al. Emotion semantics show both cultural variation and universal structure. Science 366, 1517–1522 (2019).

Tsai, J. L., Knutson, B. & Fung, H. H. Cultural variation in affect valuation. J. Pers. Soc. Psychol. 90, 288–307 (2006).

Russell, J. A. Core affect and the psychological construction of emotion. Psychol. Rev. 110, 145–172 (2003).

Tracy, J. L. & Matsumoto, D. The spontaneous expression of pride and shame: evidence for biologically innate nonverbal displays. Proc. Natl Acad. Sci. USA 105, 11655–11660 (2008).

Martin, R. A. Laughter: a scientific investigation (review). Perspect. Biol. Med. 46, 145–148 (2003).

Cohn, J. F., Ambadar, Z. & Ekman, P. in The Handbook of Emotion Elicitation and Assessment (eds Coan, J. A. & Allen, J. J. B.) 203–221 (Oxford Univ. Press, 2007).

Gatsonis, C. & Sampson, A. R. Multiple correlation: exact power and sample size calculations. Psychol. Bull. 106, 516–524 (1989).

Anderson, C. L., Monroy, M. & Keltner, D. Emotion in the wilds of nature: the coherence and contagion of fear during threatening group-based outdoors experiences. Emotion 18, 355–368 (2018).

Cordaro, D. T. et al. Universals and cultural variations in 22 emotional expressions across five cultures. Emotion 18, 75–93 (2018).

Keltner, D. & Cordaro, D. T. in Oxford Series in Social Cognition and Social Neuroscience. The Science of Facial Expression (eds Fernández-Dols, J.-M. & Russell, J. A.) 57–75 (Oxford Univ. Press, 2015).

Cowen, A. S., Elfenbein, H. A., Laukka, P. & Keltner, D. Mapping 24 emotions conveyed by brief human vocalization. Am. Psychol. 74, 698–712 (2019).

Cowen, A. S., Laukka, P., Elfenbein, H. A., Liu, R. & Keltner, D. The primacy of categories in the recognition of 12 emotions in speech prosody across two cultures. Nat. Hum. Behav. 3, 369–382 (2019).

Keltner, D. & Lerner, J. S. in Handbook of Social Psychology (eds Fiske, S. T et al.) (Wiley Online Library, 2010).

Cordaro, D. T. et al. The recognition of 18 facial-bodily expressions across nine cultures. Emotion 20, 1292–1300 (2020).

Sauter, D. A., LeGuen, O. & Haun, D. B. M. Categorical perception of emotional facial expressions does not require lexical categories. Emotion 11, 1479–1483 (2011).

Schroff, F., Kalenichenko, D. & Philbin, J. FaceNet: a unified embedding for face recognition and clustering. In Proc. IEEE Computer Society Conference on Computer Vision and Pattern Recognition 815–823 (2015).

Rudovic, O. et al. CultureNet: a deep learning approach for engagement intensity estimation from face images of children with autism. In Proc. IEEE International Conference on Intelligent Robots and Systems 339–346 (2018).

Gupta, V., Hanges, P. J. & Dorfman, P. Cultural clusters: methodology and findings. J. World Bus. 37, 11–15 (2002).

Rosenberg, N. A. et al. Genetic structure of human populations. Science 298, 2381–2385 (2002).

Eberhard, D. M., Simons, G. F. & Fennig, C. D. (eds) Ethnologue: Languages of the World 23rd edn (SIL International, 2020).

Aviezer, H., Trope, Y. & Todorov, A. Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science 338, 1225–1229 (2012).

Davidson, J. W. Bodily movement and facial actions in expressive musical performance by solo and duo instrumentalists: two distinctive case studies. Psychol. Music 40, 595–633 (2012).

Lord, F. M. & Novick, M. R. in Statistical Theories of Mental Test Scores Ch. 2 358–393 (Addison-Wesley, 1968).

Hardoon, D. R., Szedmak, S. & Shawe-Taylor, J. Canonical correlation analysis: an overview with application to learning methods. Neural Comput. 16, 2639–2664 (2004).

Scherer, K. R., Wallbott, H. G., Matsumoto, D. & Kudoh, T. in Facets of Emotion: Recent Research (ed. Scherer, K. R.) 5–30 (Erlbaum, 1988).

Markus, H. R. & Kitayama, S. Culture and the self: implications for cognition, emotion, and motivation. Psychol. Rev. 98, 224–253 (1991).

Picard, R. W. Affective Computing (MIT Press, 1997).

Roberson, D., Davies, I. & Davidoff, J. Color categories are not universal: replications and new evidence from a stone-age culture. J. Exp. Psychol. Gen. 129, 369–398 (2000).

Gegenfurtner, K. R. & Kiper, D. C. Color vision. Annu. Rev. Neurosci. 26, 181–206 (2003).

Emily, M., Sungbok, L., Maja, J. M. & Shrikanth, N. Joint-processing of audio-visual signals in human perception of conflicting synthetic character emotions. In Proc. 2008 IEEE International Conference on Multimedia and Expo 961–964 (ICME, 2008).

Van Der Maaten, L. & Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 9, 2579–2625 (2008).

Dwork, C. in Theory and Applications of Models of Computation (eds Agrawal, M. et al.) 1–19 (Springer, 2008).

Pappas, N. et al. Multilingual visual sentiment concept matching. In Proc. 2016 ACM International Conference on Multimedia Retrieval 151–158 (ICMR, 2016).

Kowsari, K. et al. Text classification algorithms: a survey. Information (Switzerland) 10, (2019).

Lee, J., Natsev, A. P., Reade, W., Sukthankar, R. & Toderici, G. in Lecture Notes in Computer Science 193–205 (Springer, 2019).

Yuksel, S. E., Wilson, J. N. & Gader, P. D. Twenty years of mixture of experts. IEEE Trans. Neural Netw. Learn. Syst. 23, 1177–1193 (2012).

Fisher, R. A. Frequency distribution of the values of the correlation coefficient in samples from an indefinitely large population. Biometrika 10, 507–521 (1915).

Efron, B., Rogosa, D. & Tibshirani, R. in International Encyclopedia of the Social & Behavioral Sciences 2nd edn (eds Smelser, N. J. & Baltes, P. B.) 492–495 (Elsevier, 2015).

Somandepalli, K. & Cowen, A. S. A simple Python wrapper to generate embedding spaces with interactive media using HTML and JS. https://doi.org/10.5281/zenodo.4048602 (2020).

Weyrauch, B., Heisele, B., Huang, J. & Blanz, V. Component-based face recognition with 3D morphable models. In IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (2004).

Bartlett, M. S. The general canonical correlation distribution. Ann. Math. Stat. 18, 1–17 (1947).

John, O. P. & Soto, C. J. in Handbook of Research Methods in Personality Psychology (eds Robins, R. W. et al.) Ch. 27, 461 (Guilford, 2007).

Chicco, D. & Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genomics 21, 6 (2020).

Powers, D. M. W. Evaluation: from precision, recall and F-measure to ROC, informedness, markedness and correlation. J. Mach. Learn. Technol. 2, 37–63 (2011).

Benjamini, Y. & Yu, B. The shuffle estimator for explainable variance in FMRI experiments. Ann. Appl. Stat. 7, 2007–2033 (2013).

Csárdi, G., Franks, A., Choi, D. S., Airoldi, E. M. & Drummond, D. A. Accounting for experimental noise reveals that mRNA levels, amplified by post-transcriptional processes, largely determine steady-state protein levels in yeast. PLoS Genet. 11, e1005206 (2015).

Mordvintsev, A., Tyka, M. & Olah, C. Inceptionism: going deeper into neural networks. Google Research Blog 1–8 (2015).

Brendel, W. & Bethge, M. Approximating CNNs with bag-of-local-features models works surprisingly well on Imagenet. In Proc. 7th International Conference on Learning Representations (ICLR, 2019).

Acknowledgements

This work was supported by Google Research in the effort to advance emotion research using machine-learning methods. Some of the artistically rendered faces in Fig. 1 are based on photographs originally posted on Flickr by V. Agrawal (https://www.flickr.com/photos/13810514@N07/8790163106), S. Kargaltsev (https://commons.wikimedia.org/wiki/File:Mitt_Jons.jpg), J. Hitchcock (https://www.flickr.com/photos/91281489@N00/90434347/) and J. Smed (https://commons.wikimedia.org/wiki/File:Tobin_Heath_celebration_(42048910344).jpg). We acknowledge the Massachusetts Institute of Technology and to the Center for Biological and Computational Learning for providing the database of facial images used for the analysis shown in Extended Data Fig. 2.

Author information

Authors and Affiliations

Contributions

A.S.C. conceived and designed the experiment with input from all other authors. A.S.C. and G.P. collected the data. A.S.C., F.S., B.J., H.A. and G.P. contributed analytic tools. A.S.C. analysed the data. A.S.C., D.K. and G.P. wrote the paper with input from all other authors.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature thanks Jeffrey Cohn, Ursula Hess and Alexander Todorov their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

Extended Data Fig. 1 The expression DNN predicts human judgments and is largely invariant to demographics.

a–c, Accuracy of the expression DNN in emulating human judgments. Human judgments (a) and the annotations of the expression DNN (b, c) have been projected onto a map of 1,456 facial expressions adapted from a previously published study14. Human judgments and the annotations of the expression DNN are represented using colours, according to the colour scheme used previously14. Probably because the expression DNN was trained on dynamic faces, it can in some cases make systematic errors in predicting the judgments of static faces (c). For example, a number of static faces of surprise were more strongly annotated by the expression DNN as awe (c, bottom left), probably because dynamic faces that convey surprise are distinguished in part by dynamic movement. This problem is mitigated when the expression DNN is calibrated for still images (b). To calibrate the DNN, multiple linear regression is applied in a leave-one-out manner to predict human judgments of the still images from the DNN annotations. After calibration on still images, we can see that the annotations of the expression DNN are fairly accurate in emulating human judgments (overall r = 0.69 between calibrated annotations of the expression DNN and human judgments after adjusting for explainable variance in human judgments33). d, The expression DNN can emulate human judgments of individual emotions and valence and arousal with moderate to high accuracy. Individual expression DNN predictions are correlated with human judgments across the 1,456 faces. Valence and arousal judgments (also from the previously published study14) were predicted using multiple linear regression in a leave-one-out manner from the 16 facial expression DNN annotations. e, The expression DNN is reliable for different demographic groups. By correlating the predictions of the expression DNN (calibrated for static images) across subsets of the 1,456 faces from the previous study14, we can see that the expression DNN is accurate for faces from different demographic groups (adjusted for explainable variance in human judgments). f, The expression DNN has little bias across demographic groups. To assess demographic bias, the annotations of each face of the expression DNN were predicted by averaging the annotations of the expression DNN across all other faces from the same demographic group. The variance in the annotations of the expression DNN explained by demographic group in this dataset was low, even though no effort was originally made to balance expressions in this dataset across demographic groups. Gender explained 0.88% of the total variance, race explained 0.28% and age explained 2.4%. Results for individual expressions were generally negligible, although age did explain more than 4% of the variance for three expressions—contempt, disappointment and surprise (maximum, 6.2% for surprise). Note that these numbers only provide a ceiling for the demographic bias, given that explained variance may also derive from systematic associations between expression and demographics in this naturalistic dataset—for example, because older people are less often pictured playing sports, they are less likely to be pictured with expressions that occur during sports. We can conclude that the expression DNN is largely unbiased by race and gender, with age possibly having at most a minor influence on certain annotations.

Extended Data Fig. 2 Annotations of the expression DNN are largely invariant to viewpoint and lighting.

a, To account for possible artefactual correlations owing to the effect of viewpoint and lighting on facial expression predictions, an in silico experiment was conducted. Predictions of facial expressions were applied to 3,240 synthetic images from the MIT-CBCL database, in which three-dimensional models of 10 neutral faces were rendered with 9 viewpoint conditions and 36 lighting conditions58. The variance explained in each facial expression annotation by viewpoint condition, lighting condition and their interaction was then computed. b, The explained standard deviation by viewpoint, lighting and their interaction is plotted alongside the actual standard deviation of each expression annotation in experiments 1 and 2. We note that the effects of viewpoint and lighting are small, except perhaps in the case of disappointment. This is unsurprising, given that faces are centred and normalized before the prediction of the expression.

Extended Data Fig. 3 Loadings of 16 global dimensions of context–expression associations revealed CCA.

Given that all 16 possible canonical correlations were discovered to be preserved across regions (using cross-validation; Fig. 3c), we sought to interpret the 16 underlying canonical variates. To do so, we applied CCA between facial expression and context to a balanced sample of 300,000 videos from across all 12 regions (25,000 randomly selected videos per region). Left, loadings of each canonical variate on the 16 facial expression annotations and the maximally loading context annotations. Right, correlations of the resulting canonical variates with individual contexts and expressions, revealing how each variate captures context–expression associations. Unsurprisingly, a traditional parametric test for the significance of each canonical correlation revealed that all 16 dimensions were highly statistically significant (χ2 = 860.4 for 16 variates, P < 10−8, Bartlett’s χ2 test59), a necessary precondition for their significant generalizability across all cultures (Fig. 3c).

Extended Data Fig. 4 Relative representation of each facial expression in the present study compared to the previous study.

In Fig. 1, we provide an interface for exploring how 1,456 faces14 are annotated by our facial expression DNN. Here, we analyse what the relative representation of these different kinds of facial expression within the present study was compared to within these 1,456 images. For each kind of facial expression, we plot the ratio of the standard deviation of our facial expression DNN annotations in the present study, averaged over each video, to the standard deviation of the annotations over the 1,456 faces. Given that the standard deviation in the present study was computed over averaged expressions within videos, it was expected to be smaller than the standard deviation over the 1,456 isolated expressions, generally yielding a ratio of less than 1. Nevertheless, it is still valid to compare the relative representation of different kinds of expression. We find that in both experiments within the present study, expressions labelled amusement, awe, sadness and surprise were particularly infrequent compared to those labelled concentration, desire, doubt, interest and triumph by the expression DNN. However, our findings still revealed culturally universal patterns of context–expression association for the less-frequent kinds of facial expression. Still, it is important to note that our measurements of the extent of universality may be differentially influenced by the expressions that occurred more often. Note that given limitations in the accuracy of our DNN, we were unable to examine 12 other kinds of facial expression that had been documented previously14 (for example, disgust, fear), and are unable to address the extent to which they are universal.

Extended Data Fig. 5 Neither interrater agreement levels nor prediction correlations in the data originally used to train and evaluate the expression DNN reveal evidence of regional bias.

a, Interrater agreement levels by upload region among raters originally used to train the expression DNN. For 44,821 video clips used in the initial training or evaluation of the DNN, multiple ratings were obtained to compute interrater reliability. For a subset of 25,028 of these clips, we were able to ascertain the region of upload of the video. Here, across all clips and each of the 12 regions, we compare the interrater reliability index (the square root of the interrater Pearson correlation41,60, the Pearson correlation being equivalent to the Matthew’s correlation coefficient for binary judgments61,62), which reflects the correlation of a single rater with the population average41,60. We can see that the interrater reliability index converges on a similar value of around 0.38 in regions with a large number of clips (the USA/Canada, Indian subcontinent and western Europe). This is an acceptable level of agreement, given the wide array of options in the rating task (29 emotion labels, plus neutral and unsure). We do not see a significant difference in interrater reliability between ratings of videos from India (the country of origin and residence of the raters) and the two other regions from which a large diversity of clips were drawn (the USA/Canada and western Europe). Error bars represent the standard error; n denotes the number of clips. To compute interrater reliability, we selected two individual ratings of each video clip, subtracted the mean from each rating, and correlated the flattened matrices of ratings of the 16 emotion categories selected for the present study across all clips. We repeated this process across 100 iterations of bootstrap resampling to compute standard error. b, Human judgment prediction correlations by region. We applied the trained expression DNN to the video clips from each region. To compute unbiased prediction correlations, it is necessary to control for interrater agreement levels by dividing by the interrater reliability index, which is the maximum raw prediction correlation that can be obtained given the sampling error in individual human ratings41,60,63,64. Given that the interrater reliability index could be precisely estimated for three of the regions (a), we computed prediction correlations for those regions. We did so across all video clips used in the training or evaluation in the DNN (blue), which may be subject to overfitting, and for a subset of video clips used only in the evaluation of the DNN (red). In both cases, prediction correlations are similar across regions, exceed human levels of interrater agreement (a) and are consistent with prediction correlations derived from a separate set of images rated by US English speakers, which also showed no evidence of bias to ethnicity or race (Extended Data Fig. 1e). Error bars represent the standard error; n denotes the number of clips.

Extended Data Fig. 6 Rates of the context occurrence.

a, Proportion of contexts by number of occurrences (out of around 3 million videos) for experiments 1 and 2. The minimum number of occurrences of any given context was 39 for experiment 1 and 176 for experiment 2, but most contexts occurred much more often. Note that the number of occurrences is plotted on a logarithmic scale. b, Proportion of contexts by number of occurrences in each region. Certain contexts were rarer in particular regions, especially regions with fewer videos overall. Still, the vast majority of contexts occurred at least dozens of times in every region.

Extended Data Fig. 7 Video-based context annotations are insensitive to facial expression.

To account for possible artefactual correlations owing to the direct influence of facial expression on the video topic predictions (and vice versa), an in silico experiment was conducted. a, First, 34,945 simulated videos were created by placing 1,456 tightly cropped facial expressions14 on each of 24 3-s clips from YouTube videos at random sizes and locations. Note that given the convolutional architecture of the DNN that we use, which largely overlooks strangeness in the configuration of objects within a video65,66, randomly superimposed faces should have a similar effect on annotations to real faces. Examples shown here are artistically rendered. b, The video topic and the expression DNN were then applied to these videos. The variance in the video topic annotations explained by the expression predictions was then computed using ordinary least squares linear regression. The amount of variance in context predictions explained by randomly superimposed expressions (prediction correlation r = 0.0094) was negligible compared to the amount of variance explained in context predictions by the expression predictions in actual videos from experiment 1 (r = 0.154, tenfold ordinary least squares linear regression applied to a subset of 60,000 videos from experiment 1; 5,000 from each of the 12 regions). Therefore, any direct influence of facial expression on the video topic annotations had a negligible effect on the context–expression correlations that we uncovered in experiment 1.

Supplementary information

Supplementary Figures

This zipped file contains Supplementary Figures 1 and 2 in html format. Supplementary Information Figure 1: Like Fig. 2a, but includes all 653 video- and text-based topic annotations analyzed in Experiment 1. Supplementary Information Figure 2: Like Fig. 2b, but includes all 1953 text-only context annotations analyzed in Experiment 2.

Supplementary Data 1

List of all strings used for first stage of video selection in Experiment 2.

Rights and permissions

About this article

Cite this article

Cowen, A.S., Keltner, D., Schroff, F. et al. Sixteen facial expressions occur in similar contexts worldwide. Nature 589, 251–257 (2021). https://doi.org/10.1038/s41586-020-3037-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1038/s41586-020-3037-7

This article is cited by

-

Almost Faces? ;-) Emoticons and Emojis as Cultural Artifacts for Social Cognition Online

Topoi (2024)

-

OpenFE: feature-extended OpenMax for open set facial expression recognition

Signal, Image and Video Processing (2024)

-

Manipulating facial musculature with functional electrical stimulation as an intervention for major depressive disorder: a focused search of literature for a proposal

Journal of NeuroEngineering and Rehabilitation (2023)

-

Evidence for cultural differences in affect during mother–infant interactions

Scientific Reports (2023)

-

Emotional event perception is related to lexical complexity and emotion knowledge

Communications Psychology (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.