Abstract

Cryptographic protocols are the backbone of our information society. This includes two-party protocols which offer protection against distrustful players. Such protocols can be built from a basic primitive called oblivious transfer. We present and experimentally demonstrate here a quantum protocol for oblivious transfer for optical continuous-variable systems, and prove its security in the noisy-storage model. This model allows us to establish security by sending more quantum signals than an attacker can reliably store during the protocol. The security proof is based on uncertainty relations which we derive for continuous-variable systems, that differ from the ones used in quantum key distribution. We experimentally demonstrate in a proof-of-principle experiment the proposed oblivious transfer protocol for various channel losses by using entangled two-mode squeezed states measured with balanced homodyne detection. Our work enables the implementation of arbitrary two-party quantum cryptographic protocols with continuous-variable communication systems.

Similar content being viewed by others

Introduction

Quantum cryptography can be used to perform cryptographic tasks with information theoretical security based on quantum mechanical principles. Most prominent is quantum key distribution (QKD), which allows to implement a communication link that provides theoretical security against eavesdropping1,2,3. Yet, there are other practically important cryptographic protocols such as oblivious transfer (OT), bit commitment, and secure password-based identification. In these so-called two-party protocols, two distrustful parties (Alice and Bob) engage and want to be ensured that the other party cannot cheat or maliciously influence the outcome. Hence, in contrast to QKD, security for these protocols needs not to be established against an outside attacker but against a distrustful player.

Because of this more demanding security requirement not even quantum physics allows us to implement these tasks securely without additional assumptions4,5,6,7,8,9,10. An assumption that can be posed on the adversary is to restrict the ability to store information11,12. As scalable and long-lived quantum memories are experimentally still very challenging this assumption can easily be justified. In particular, given any constraint on the size of the adversary’s storage device, security for two-party protocols can be obtained by sending more signals during the course of the protocol than the storage device is able to handle. This constraint is known as the bounded and more generally noisy-quantum-storage model13,14,15.

While OT is the basic building block from which all other two-party protocols can be derived16, it is possible to use the same techniques to establish security of bit commitment and secure identification. This has been achieved for protocols using a discrete variable (DV) encoding into single photon degrees of freedom (e.g., polarization, path, or time)17,18. Using such an encoding OT has been proposed and its security has been studied extensively13,14,15,19,20,21,22. Recently, its experimental demonstration has been reported23.

Here, we propose and experimentally demonstrate in a proof-of-principle experiment an oblivious transfer protocol based on optical continuous-variable (CV) systems. These systems, like classical optical telecommunication systems, encode information into orthogonal quadratures of the electromagnetic field. The similarity to classical telecom systems, room temperature operation, and intrinsic noise filtering by the local oscillator of homodyne detection will allow seamless integration into telecom networks using wavelength division multiplexing to transmit data and perform oblivious transfer or other quantum cryptographic protocols on the same fiber. We prove the security of the protocol in the noisy-quantum-storage model by establishing uncertainty relations, different to the one used in quantum key distribution. The experimental demonstration at a telecommunication wavelength is based on an optical CV setup adapted from a recent implementation of CV QKD24 which uses entangled two-mode squeezed states and subsequent homodyne measurements in two random orthogonal field quadratures.

Results

Oblivious transfer in the noisy-storage model

In our security proof we derive sufficient conditions for security against a distrustful party having a quantum memory with a bounded classical capacity similar to ref. 19. The main theoretical ingredients are entropic uncertainty relations for canonically conjugated observables which we derive with and without assumptions on the quantum memory’s storage operation and by modeling the quantum memory as bosonic loss channel. While we show that security for arbitrary storage operations is possible, the trade-off in parameters yields very pessimistic rates due to the absence of a tight uncertainty relation. We overcome this problem by assuming that the dishonest party’s storage operation is Gaussian.

We consider a one-out-of-two randomized oblivious transfer (1–2 rOT) protocol in which Bob learns one out of two random bit strings. More precisely, Bob chooses a bit t ∈ {0, 1} specifying the bit string he wants to learn, while Alice has no input. Alice’s output are two \(\ell\)-bit strings s0 and s1, and Bob obtains an \(\ell\)-bit string \(\widetilde {\bf{s}}\). A correct protocol satisfies that the outputs s0 and s1 are independent and uniformly distributed, and that Bob learns s t , i.e., \(\widetilde {\bf{s}} = {\bf{s}}_t\). To implement 1–2 OT from its randomized version, Alice takes two input strings x0, x1 and sends Bob the (bitwise) sums x0 ⊕ s0 and x1 ⊕ s1 mod 2. Bob can then learn x t by adding \(\widetilde {\bf{s}}\) to s t ⊕ x t (mod 2)19.

The protocol we propose here to implement 1–2 rOT requires the preparation of Gaussian modulated quadrature squeezed states of light. While indeed the protocol can be implemented using a prepare-and-measure technique, a convenient way to prepare such Gaussian modulated squeezed states is by homodyning one mode of a quadrature entangled two-mode squeezed state—often referred to as EPR state after the authors of their 1935 paper, Einstein, Podolski, and Rosen25. Such a state can be generated by mixing two squeezed modes with a balanced beam splitter26,27. In the following we will use the entanglement based variant to implement the protocol.

Before Alice and Bob start the actual protocol, they estimate the necessary parameters to run the protocol. The EPR source is located in Alice’s lab who is using balanced homodyne detection to estimate the variance of her local thermal state to fix αcut > 0 such that the probability for her to measure a quadrature with an absolute value smaller than αcut is larger than \(p_{\alpha _{{\mathrm{cut}}}}\) \(\left( {p_{\alpha _{{\mathrm{cut}}}} \approx 1} \right)\). Alice and Bob then estimate the correlation coefficient of their measurement outcomes, measured jointly in the same quadrature, to choose an appropriate information reconciliation (IR) code for the protocol. We note that this estimate can be made safely before the protocol even if one of the parties later tries to break the security (see ref. 23 for a discussion).

In the protocol, Alice first distributes n EPR states, each of which is then measured by Alice and Bob who both randomly perform balanced homodyne detection in one of two orthogonal quadratures X and P. We assume that Alice and Bob share a phase reference to synchronize their measurements. Alice discretizes the outcomes of the balanced homodyne detection by dividing the range [−αcut, αcut] into 2d bins of equal length δ indexed by \({\cal {Z}}\) = {1, …, 2d}. Any measurement lower than −αcut or larger than αcut is assigned to the corresponding adjacent bin in [−αcut, αcut]. Here, it is important that one uses a homodyne detector with subsequent analog-to-digital conversion with a precision larger than δ and a range larger than ±αcut. Bob uses the same discretization procedure after scaling his outcomes of the balanced homodyne detection with \(1{\mathrm{/}}\sqrt {1 - \mu }\) to account for the losses μ in the channel. Note that here all transmitted quantum states are used in the protocol, while in the single-photon protocol18,23 only successful transmissions are back reported. We denote the string of the n discretized outcomes on Alice’s and Bob’s side as Z = (Z1, …, Z n ) and Y = (Y1, …, Y n ), respectively.

After completing all the measurements, Alice and Bob wait for a fixed time Δt. As we will see later, a malicious Bob who wants to cheat has to be able to coherently store the modes in a quantum memory over time Δt. The rest of the protocol consists of classical post-processing and follows the same idea as the protocol using discrete variables14,28. First, Alice sends Bob her basis choices \(\theta _A^i\) for each measurement i = 1, …, n, that is, whether she measured the quadrature X \(\left( {\theta _A^i = 0} \right)\) or P \(\left( {\theta _A^i = 1} \right)\) of the ith mode. According to his choice bit t, Bob forms the index set I t containing all measurements in which both have measured the same quadrature and the complement I1−t of all measurements in which they measured different quadratures. Bob then sends the index sets I0, I1 to Alice upon which both split their strings of measurement results Z and Y into the sub-strings Z k and Y k corresponding to the indices I k (k = 0, 1). As elaborated in more detail in the next section, the properties of the EPR source ensure that Z t and Y t are correlated while Z1−t, Y1−t are uncorrelated.

Alice then uses a one-way information reconciliation code previously chosen by the two parties and computes syndromes W0, W1 for Z0, Z1 individually. She then sends W0, W1 to Bob, who corrects his strings Y t accordingly to obtain \({{\bf Y}}_t^{\prime}\). The information reconciliation code must be chosen such that up to a small failure probability \(\epsilon _{{\mathrm{IR}}}\) the strings Z t and \({\bf{Y}}_t^\prime\) coincide. Finally, Alice draws two random hash functions f0, f1 from a two-universal family of hash functions that map Z0, Z1 to \(\ell\)-bit strings s0, s1, respectively. Here, \(\ell\) is chosen appropriately to ensure the security of the protocol, see below. Alice then sends Bob a description of f0, f1 and Bob outputs \(\widetilde {\bf{s}} = f_t\left( {{\bf{Y}}_t^\prime } \right)\).

Correctness of the 1–2 rOT protocol

The OT protocol is correct if Bob learns the desired string, i.e. \({\bf{s}}_t = \widetilde {\bf{s}}\) and s0, s1 are uniformly distributed. The protocol is called \(\epsilon _{\mathrm{C}}\)-correct if the output distribution of the protocol is \(\epsilon _{\mathrm{C}}\)-close in statistical distance to the output of a perfect protocol19. Thus, \(\epsilon _{\mathrm{C}}\) is the failure probability that the protocol is incorrect.

The correctness condition above only has to be satisfied if both parties are honest and follow the rules of the protocol. In that case we can assume that the source and the channel are known. The EPR source has the characteristic property that if both parties measure the X (P) quadrature the outcomes are (anti-)correlated. To turn the anti-correlated outcomes of the P quadrature measurements into correlated ones, Bob simply multiplies his outcomes with −1. If Alice and Bob measure in orthogonal quadratures the outcomes are completely uncorrelated. This property of the EPR source implies that the strings Z t and Y t are correlated while Z1−t, Y1−t are uncorrelated.

For correctness it is important to demand that the information reconciliation code successfully corrects Bob’s string Y t with a probability larger than 1 − \(\epsilon _{{\mathrm{IR}}}\). Only after successful correction, i.e., \({\bf{Z}}_t = {\bf{Y}}_t^\prime\), it is ensured that \(\widetilde {\bf{s}} = {\bf{s}}\) after applying the hash function. The properties of the two-universal hash functions also ensure that the outcomes s0, s1 are close to uniform. By analyzing the security for Alice we will show that Alice’s outcomes are distributed close to uniform even if Bob is dishonest. Thus, if the protocol is \(\epsilon _{\mathrm{A}}\)-secure for Alice (see next section) our protocol is \(\epsilon _{\mathrm{C}}\)-correct with \(\epsilon _{\mathrm{C}}\) = \(\epsilon _{{\mathrm{IR}}}\) + 2\(\epsilon _{\mathrm{A}}\)19,28.

Security of the 1–2 rOT protocol

For honest Bob the oblivious transfer protocol is secure if a malicious Alice cannot find out which string t Bob wants to learn. The only information Bob reveals during the entire protocol are the index sets I0, I1. However, since honest Bob chooses his measurement basis uniformly at random, the strings I0, I1 are completely uncorrelated from t. This property implies that the protocol is perfectly secure for Bob without any assumption on the power of Alice. In particular, even if Alice possessed a perfect quantum memory she has no chance to find out t.

For honest Alice the oblivious transfer protocol is secure if a malicious Bob can only learn one of the strings s0, s1. Similarly to the case of correctness we allow for a small failure probability \(\epsilon _{\mathrm{A}}\) that security is not obtained. The precise composable secure definition of the \(\epsilon _{\mathrm{A}}\)-security for Alice that we employ here is given in terms of the distance to an ideal protocol that is perfectly secure19.

The security for a honest Alice requires additional assumptions on the power of a malicious Bob to store quantum information. Indeed, it is clear that if a malicious Bob has a perfect quantum memory, he could simply store all the modes until he receives the basis-choice information from Alice. After that he can simply measure all modes in the respective basis such that all the outcomes between Alice and Bob are correlated. This strategy then allows Bob to learn both strings s0, s1 and the protocol is completely insecure. But if Bob’s quantum storage capacity to store the modes over times longer than Δt is limited, he cannot preserve the necessary correlation required to learn both strings. By choosing a sufficiently small output length \(\ell\) of the hash function the additional correlation can be erased, and security for Alice can be obtained. The goal of the security proof in this noisy model is to quantify the trade-off between the capability of Bob’s quantum memory and the length \(\ell\) for which security can be established.

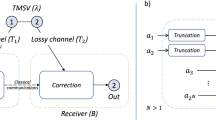

Without restriction of generality we model Bob’s available quantum storage ability by νn numbers of channels \({\cal {F}}_{{\mathrm{\Delta }}t}\). Here, the storage rate ν relates to the size of the available quantum storage, or also the failure probability to transfer the incoming photonic state successfully into the memory device. Additionally, we allow Bob to apply an encoding operation \({\cal {E}}\) before mapping the incoming mode to the input of his storage device. This encoding map also includes a classical outcome K that can, for instance, result from measuring part of the modes. A schematic of Bob’s quantum memory model is illustrated in Fig. 1.

The general form of an attack of dishonest Bob. Alice measured her mode of distributed EPR pairs with homodyne quadratures θA, yielding (discretized) results denoted Z. Bob’s memory attack is modeled by an encoding \({\cal E}\) that maps (conditioned on some classical outcome K) the n modes to the memory input Qin. The memory \({\cal M}\) is modeled by νn uses of the channel \({\cal {F}}_{{\mathrm{\Delta }}t}\). We consider the situations where the encoding \({\cal E}\) is arbitrary, a mixture of Gaussian channels or independent and identical over a small numbers of signals \(m_{\cal E}\)

We apply here a similar security proof as the one in ref. 19,28 for discrete variables (see Methods section for details). Therein, the problem of security has been related to the classical capacity Ccl\(\left( {{\cal {F}}_{{\mathrm{\Delta }}t}} \right)\) of Bob’s quantum memory channel \({\cal {F}}_{{\mathrm{\Delta }}t}\). The other important quantity determining the security is the probability with which Bob can correctly guess Alice’s discretized measurement outcomes Z given his classical outcomes of the encoding map and the information of Alice’s basis choices. This probability can conveniently be reformulated in terms of the min-entropy which is defined as minus the logarithm of the guessing probability. Furthermore, since we do not require perfect security we use the \(\epsilon\)-smooth min-entropy \(H_{\mathrm{min}}^\epsilon \left( {{\mathbf{Z}}|{\mathbf{\theta}}_{\mathrm{A}}{\mathbf{K}}} \right)\) which is defined as the largest min-entropy optimized over \(\epsilon\)-close states (see, e.g.,29). We emphasize that it is sufficient to condition on the classical information θA, K due to a relation of the smooth min-entropy of all the stored information to the question of how many classical bits can be sent reliably through the storage channel, i.e., Ccl\(\left( {{\cal {F}}_{{\mathrm{\Delta }}t}} \right)\)19 (see Methods section for more details).

A bound on the smooth min-entropy \(H_{\mathrm{min}}^\epsilon \left( {{\mathbf{Z}}|{\mathbf{\theta}} _{\mathrm{A}}{\mathbf{K}}} \right)\) is an uncertainty relation. To see this link, we can consider the equivalent scenario in which Bob sends Alice an ensemble of states {ρk}, where k corresponds to the different instances of the random variable K. Alice applies on each mode randomly either a discretized X or P measurement. Heisenberg’s uncertainty principle tells us that there exists no state for which Bob can correctly guess both outcomes for X and P. Since Bob does not know beforehand whether Alice is measuring X or P, he will always end up with an uncertainty about Alice’s outcomes Z. In the Methods section we derive such uncertainty relations that allow us to bound

with a state-independent lower bound \(\lambda ^\epsilon\)(δ, n). In the above equation the most crucial difference between the continuous- and the discrete-variable implementation appears. Indeed, while for discrete variables an uncertainty relation for BB84 measurements is required, we here need one for discretized position and momentum observables with finite binning δ.

We have now all ingredients to state the final results. Let us assume that the reliable communication rate of Bob’s quantum memory channel decreases exponentially if a coding rate above the classical capacity Ccl\(\left( {{\cal {F}}_{{\mathrm{\Delta }}t}} \right)\) is used. Then, given that \(\lambda ^\epsilon\) satisfies Eq. (1), we obtain an \(\epsilon _{\mathrm{A}}\)-secure 1–2 rOT if the length of the output bit string is chosen as

Here, \(r_{{\mathrm{IR}}} = (1{\mathrm{/}}n){\mathrm{log}}\left| {{\bf{W}}_0{\bf{W}}_1} \right|\) is the rate of bits used for information reconciliation. The explicit dependence on \(\epsilon _{\mathrm{A}}\) and the relation between the security and the classical capacity \({{\cal {C}}_{{\mathrm{cl}}}\left( {{\cal {F}}_{{\mathrm{\Delta }}t}} \right)}\) are given in the Methods section. If the right hand side of Eq. (2) is negative, security for Alice is not possible.

We see that security can be achieved for sufficiently large n if \(\lambda ^{{\cal {O}}\left( {\epsilon _{\mathrm{A}}} \right)} - r_{{\mathrm{IR}}} - \nu {\cal {C}}_{{\mathrm{cl}}}\left( {{\cal {F}}_{{\mathrm{\Delta }}t}} \right)\) is strictly larger than 0. In other words, we need that the uncertainty generated by Alice’s measurements should be larger than the sum of the leaked information during information reconciliation and the storage capacity of Bob. It is thus essential to find a tight uncertainty relation Eq. (1). We derive such an uncertainty relation in the Methods section. It turns out that it is difficult to derive a tight bound without further assumptions. This is partly due to the fact that no non-trivial uncertainty relation exists for continuous X and P measurements, i.e., if δ goes to 0. The uncertainty relation has thus to be derived directly for the discretized X and P measurements. We therefore also derive uncertainty relations under different assumptions on Bob’s encoding operation \({\cal {E}}\), namely, under the assumption that the encoding operation is a Gaussian operation and under the assumption that the encoding operation acts independent and identically (i.i.) on a limited number of modes \(m_{\cal {E}}\). For the explicit form of the uncertainty relations, we refer to the Methods section.

Security for realistic memory devices

Let us analyze the security in the case that Bob’s quantum memory can be modeled by a lossy bosonic channel \({\cal {N}}_n\), where η denotes the transmissivity. The classical capacity of this channel has only recently been determined after settling the minimal output entropy conjecture30,31. If the average photon number of each code word is smaller than Nav, it is given by g(ηNav), where \(g(x) = (x + 1)g(x + 1)\) \({\mathrm{log}}_2(x + 1) - x{\kern 1pt} {\mathrm{log}}_2{\kern 1pt} x\). An energy constraint is necessary as otherwise the capacity is unconstrained due to a memory that is infinite dimensional.

Recall that we further require that the success probability for reliable communication must drop exponentially to apply the security proof. It has been shown that for this to be the case a constraint on the average number of the photons is not sufficient but one has to impose that every code word is with high probability contained in a subspace with maximally Nmax photons32. Under this maximal photon number constraint the reliable communication vanishes exponentially at a rate above the classical capacity g(ηNmax)32,33,34, so that we can apply our security proof with \({\cal {C}}_{{\mathrm{cl}}}\left( {{\cal {F}}_{{\mathrm{\Delta }}t}} \right)\) = g(ηNmax).

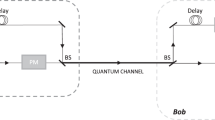

We plot in Fig. 2 under which assumptions on Bob’s quantum storage device security can be obtained. In particular, we consider the situation of arbitrary encoding operations, the situation that Bob’s encoding operation is a Gaussian operation, and the situation that Bob’s encoding operation is independent and identical over blocks of at most 10 modes. To obtain security, i.e. a positive OT rate, for arbitrary encoding operations, it is necessary to have an information reconciliation code with almost perfect efficiency β = 1. The information reconciliation efficiency describes the classical communication rate compared to the asymptotic optimal value, where the latter is achieved for β = 1. Current codes for CV systems can reach about β = 0.9835,36. The weakest requirements on the parameters have to be imposed under the Gaussian assumption in which security can already be obtained for low numbers of signals n = 105 (see Methods section). Under the independent and identical encoding assumption, larger numbers of transmitted signals n = 108 are required to obtain security under similar conditions as in the case of Gaussian operations.

Oblivious transfer security regions. The secure regions are obtained for different assumptions imposed on the encoding operation of malicious Bob’s quantum memories. We plot optical efficiency η of the quantum memories versus the logarithm to basis 10 of the quantum memory storage rate ν. Security is obtained for all values of ν and η marked by the colored regions. The green region is obtained under the assumption that the encoding is Gaussian (n = 2 × 105, β = 0.944, δ = 0.1), the blue region under the assumption that the encoding is independent and identical over at most \(m_{\cal E}\) = 10 modes (n = 108, β = 0.944, δ = 0.1), and the red region without any assumption, i.e. arbitrary encodings (n = 108, β = 0.98, δ = 1.0). The plots are obtained for an EPR source with two-mode squeezing of 12 dB and losses on Alice’s and Bob’s side of 3 and 6%, respectively. Further parameters: \(\epsilon _{\mathrm{A}}\) = 10−7, αcut = 51.2 and Bob’s maximal photon number in the encoding is assumed to be smaller than 100

In general, to obtain security a transmittance of the channel between Alice and Bob larger than 0.5 and non-trivial squeezing is required. This result is easily obtained if one takes the asymptotic limit for n to infinity under Gaussian or the identical and independent encoding operations. We note that the identical and independent assumption is no restriction of generality any more in the asymptotic limit37.

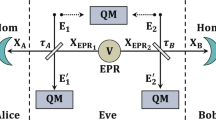

Experimental demonstration of 1–2 rOT

We performed a proof-of-principle experimental demonstration of the 1–2 rOT protocol using the experimental setup employed for CV QKD in ref. 24 and sketched in Fig. 3a. The EPR source was located at Alice’s location and consisted of two independent squeezed-light sources each producing continuous-wave squeezed vacuum states at 1550 nm by parametric down-conversion27. Both states were interfered at a balanced beam splitter with a relative phase of π/2 thereby exhibiting more than 10 dB entanglement according to the criterion from Duan et al.38. Alice kept one of the entangled modes and performed balanced homodyne detection using a low-noise, high quantum efficiency homodyne detector (see details in the Methods section). The homodyned quadrature amplitude was chosen randomly according to random bits generated by a quantum random-number generator based on homodyne measurements on vacuum states. The other entangled mode was sent to Bob via a free-space channel along with a bright local oscillator beam which served as phase reference. Optical loss in this channel was introduced by a variable beam splitter comprising a half-wave plate and a polarizing beam splitter. Bob performed balanced homodyne detection on his mode with a random quadrature chosen by a similar quantum random-number generator. The measurement repetition rate of the system was 100 kHz. For more experimental details we refer to the Methods and the ref. 24.

Experimental setup and results. a Squeezed light at 1550 nm was generated in two parametric down-conversion sources and superimposed at a 50:50 beam splitter to obtain entanglement. One mode was kept locally by Alice and measured with homodyne detection randomly in the amplitude and phase quadrature. The other mode was sent through a free-space channel simulated by a half-waveplate and a polarizing beam splitter (PBS). Bob then performed homodyne detection randomly in amplitude and phase quadrature. PD photodiode, LO local oscillator. b Secure oblivious transfer rate per signal obtained in the experiment. Points correspond to the generated oblivious transfer rates in the experiment for two different storage rates, ν = 0.001 (red) and ν = 0.01 (blue), for quantum memories with a transmittance of 0.75. The lines show simulated oblivious transfer rates obtained by applying a one-sided loss channel with losses μ to the estimated two-mode squeezed state in the experiment. Parameters: n = 2 × 105, αcut = 51.2, δ = 0.1, \(\epsilon _{\mathrm{A}}\) = 10−7, and Bob’s maximal photon number in the encoding is assumed to be smaller than 100

The classical post-processing was implemented as described above. We chose the number of exchanged signals to be 2.03 × 105 such that the number of measurement results where both parties have measured in the same bases and where both parties have measured in different bases are both larger than 105 with high probability. We then chose from each set the first 105 for post-processing (i.e., n = 2 × 105) to keep the block size of the information reconciliation code constant. From a security perspective this is possible because the size of the set is determined beforehand as part of the protocol. Because the honest player chooses his/her basis string uniformly at random, the choice of these sets is thus out of control of any dishonest player. For the discretization of the measurement outcomes, we used αcut = 51.2 and δ = 0.1, obtaining symbols from an alphabet of size 1024 corresponding to 10 bits per symbol.

The most challenging part is the information reconciliation for which we used a similar strategy as in ref. 24 and detailed in ref. 36. Here, Alice first communicated the four least significant bits of each symbol in plain to Bob. To correct the remaining 6 bits, she then used a non-binary low-density parity-check (LDPC) code with field size 64 and a code rate R compatible with the estimate of the correlation coefficient ρ from the CM. After Bob has received the syndrome corresponding to his input bit t (ignoring the data corresponding to bit 1 − t) he ran a belief propagation algorithm to correct Y t . In Table 1 we summarize the used code-rates for the different loss scenarios in our experiment.

As family of two-universal hashing functions we selected the mapping of the binary input string to the binary output string by multiplying the input string with a uniformly randomly chosen binary Toeplitz matrix T. Multiplication by a Toeplitz matrix is equivalent to linear cross-correlation. This allowed us to make use of the number-theoretic transform to obtain an implementation with computational complexity O(n log n) and without floating point errors. The binary input strings had a total length of 106 bits (consisting of n/2 = 105 symbols with 10 bits per symbol). The binary output strings had a length of \(\left\lfloor \ell \right\rfloor\). Thus the size of T was \(\left\lfloor \ell \right\rfloor \times 10^6\). The seed (the values for the first row and first column of the Toeplitz matrix) was generated with the quantum random number generator.

The correctness parameter \(\epsilon _{\mathrm{C}}\) = \(\epsilon _{{\mathrm{IR}}}\) + 2\(\epsilon _{\mathrm{A}}\) of the protocol depends on security parameter \(\epsilon _{\mathrm{A}}\), which we chose to be 10−7, and the probability of successful information reconciliation. From the given frame error rates in Table 1, we deduce a success rate of larger than 99.9%, i.e. \(\epsilon _{{\mathrm{IR}}}\) = 10−3, limited by the amount of experimental data taken, which yields \(\epsilon _{\mathrm{C}}\) = 10−3. The single frame error for 9% channel loss is thereby due to an error which prevents convergence of the LDPC decoder. The average overall efficiency of the information reconciliation was 94.4%. While generally possible, the temporal drift of the experimental setup in combination with the requirement of achieving a low frame error rate prevented a higher efficiency.

The results are shown in Fig. 3b. We computed the security under the Gaussian assumption and under the assumption on Bob’s quantum memories that the maximal photon number in the encoding is smaller than 100. The points correspond to the experimental implementation and the theoretical lines were computed using the estimated CM and the efficiency of the information reconciliation protocol used with the lossless channel. We see that for low channel loss, rates in the order of 0.1 bit per transmitted quantum state are possible. The maximal tolerated loss in the communication channel heavily relies on the assumptions on malicious Bob’s storage rate, which we set to ν = 0.01 and ν = 0.001 in Fig. 3b.

Discussion

We presented and experimentally demonstrated a protocol for oblivious transfer using optical continuous-variable systems, and showed security against a malicious party with an highly imperfect quantum storage device. For the implementation we used a strongly entangled two-mode squeezed continuous-wave light source, and balanced homodyne detection together with a quantum random-number generator for the measurements. While the employed EPR entangled state was close to optimal in the investigated regime of up to 15% channel loss, security can also be obtained with weaker entangled sources, e.g. for a quantum memory storage rate of ν = 0.001 only about 4 dB of squeezing are necessary to obtain security for 15% loss. More details can be found in the Methods section.

The secure bit rate of the OT protocol is in trade-off with assumptions on the quantum storage device of a dishonest party. In particular, it depends on the classical capacity of the storage device \({\cal {C}}_{{\mathrm{cl}}}\) and the storage rate ν. The storage rate is determined by the size of the available quantum storage and the success rate for transferring the photonic state into the quantum memory. To obtain security for any storage size, one can simply increase the number of signals sent during the protocol. The classical capacity is determined by the efficiency of the quantum memory for writing, storing (over time Δt) and reading out. Typical storage times of state-of-the-art quantum memories are milliseconds to seconds with some going up to minutes39.

For low channel losses we obtain rates that are about a factor three larger than those achievable in a previous DV implementation23 while using significantly smaller block sizes of about 105 compared to 107. However, our implementation is susceptible to losses and requires the optical loss to be generally less than 50%. This limit is a consequence of the analysis we employ in the security proof and is not a fundamental property of CV oblivious transfer. For practical purposes we encounter, however, an even lower loss threshold. For instance, in our experiment losses below 26% for ν = 0.01 and 32% for ν = 0.001 are necessary (see Fig. 3). This allows for an implementation of the protocol in short-range applications like a short free-space link with high collection efficiency, e.g., at an ATM, or a short fiber link of maybe 3–4 km within a business district of a city. Here, we assumed a free-space to fiber coupling efficiency of 95% (achievable with anti-reflex coated fibers), a realistic fiber transmission loss of 0.3 dB/km at 1550 nm and a high efficiency free-space homodyne receiver as implemented in our experiment.

Information reconciliation is required to correct the discretized (non-binary) data. In contrast to the case of DV, where conditioned on the arrival of a photon the bit-error rate is rather low, we require efficient information reconciliation for non-binary alphabets with high probability of success, i.e. low block error rate, since a two-way check ensuring that information reconciliation was successful will in contrast to QKD compromise security.

The security proof presented here can be adapted to other two-party cryptographic protocols such as bit commitment and secure identification using similar ideas and protocols as in13,18,19,28. Moreover, the security proof can be refined in various directions. Firstly, our security is related to the classical capacity of a malicious party’s quantum memory. However, conceptually, the security of the protocol relies on the ability to store quantum information coherently so that a reduction to the quantum capacity or a related quantity would be desirable. This relation has recently been shown for DV protocols using the entanglement cost20 and the quantum capacity21,22,40, but its generalization seems challenging for CV protocols as properties of finite groups have been used. Secondly, it is important to derive tight uncertainty relations that hold without additional assumptions. Having such a relation would remove the current constrained on the encoding operation into the quantum memory and possibly also remove the 50% loss limit. Finally, it would be interesting to clarify if OT can be implemented securely in the noisy-storage model using only coherent states. Although squeezing or entanglement is necessary in our security proof, it is not clear whether this is due to our proof technique or whether it is a general requirement.

Methods

Introduction to smooth min-entropy uncertainty relations

The security of OT in the noisy-storage model relies on tight uncertainty bounds \(\lambda ^\epsilon\) on the smooth min-entropy, Eq. (1)41 (for the details why this is the decisive quantity see method section 4.5). As discussed in the main text we can think of dishonest Bob preparing an ensemble of states \(\left\{ {\rho _{A^n}^k} \right\}_k\) according to K. Here, An indicates that Alice (A) holds the n modes sent by Bob. A restriction on the encoding map \({\cal {E}}\) translates to a restriction on the ensemble. Clearly, without any restriction on \({\cal {E}}\) there is no restriction on \(\rho _{A^n}^k\). If \({\cal {E}}\) is a Gaussian operation, then each \(\rho _{A^n}^k\) is a mixture of Gaussian states, since the source distributed by Alice is Gaussian. Note that mixtures have to be considered since combining two or more values of K into one is a simple operation. And finally, if \({\cal {E}}\) acts independently and identically over only \(m_{\cal {E}}\) modes, then each \(\rho _{A^n}^k\) is identical and independent over \(m_{\cal {E}}\) modes since the source is assumed to be identical and independent for each mode.

Uncertainty relation without assumptions

Because of the maximization in the definition of the smooth min-entropy over close-by states, it is very difficult to bound it directly. Instead, it is convenient to follow the idea from42 and to use the fact that it can be related to the conditional α-Rényi entropies defined as \(H_\alpha (A|B)_\rho\) = \(\frac{1}{{1 - \alpha }}{\mathrm{log}}{\kern 1pt} {\mathrm{tr}}\left[ {\rho _{AB}^\alpha \left( {{\mathrm{id}}_A \otimes \rho _B} \right)^{1 - \alpha }} \right]\). In particular, it holds for α ∈ (1, 2] and any two finite random variables X and Y that \(H_{{\mathrm{min}}}^\epsilon\)(X|Y) ≥ H α (X|Y) − 1/(α − 1)log 2/\(\epsilon ^2\)43. This relation can be generalized to discrete but infinite random variables using the approximation result from44. We then obtain a lower bound on the smooth min-entropy with

if (1/n)H α (Z|θ) ≥ Bα(δ, n) holds. Moreover, it suffices to find a bound for n = 1, as Bα(δ, n) = nBα(δ, 1)42.

We denote in the following by {x l } and {p l } (\(l \in {\Bbb N}\)) the probability distribution of the discretized X and P measurement. Using the definition of the α-Rényi entropy, one finds that \(2^{(1 - \alpha )H_\alpha ({\mathbf{Z}}|{\mathbf{\theta}} )}\) = \(\frac{1}{2}\left( {\mathop {\sum}\nolimits_{k \in {\Bbb N}} {\kern 1pt} x_k^\alpha + \mathop {\sum}\nolimits_{l \in {\Bbb N}} {\kern 1pt} p_l^\alpha } \right)\). Since the distributions x k and p k are discretized X and P distributions that are related by Fourier transform, they satisfy certain constraints. For instance, it is not possible that both have only support on a finite interval.

A precise formulation of the constraint for the probabilities x[I] and p[J] to measure X in interval I and P in interval J has been given by Landau and Pollak45. They proved that these probabilities are constrained by the inequality \({\mathrm{cos}}^{ - 1}{\kern 1pt} \sqrt {q[I]} + {\mathrm{cos}}^{ - 1}\) \(\sqrt {p[J]} \ge {\mathrm{cos}}^{ - 1}\sqrt {\gamma \left( {\left| I \right|,\left| J \right|} \right)}\). Here, \(\left| I \right|\) denotes the length of the interval I, and \(\gamma (a,b)\) := \(ab{\mathrm{/}}(2\pi \hbar )S_0^{(1)}\left( {1,ab{\mathrm{/}}(4\hbar )} \right)^2\) with \(S_0^{(1)}\) the 0th radial prolate spheroidal wave function of the first kind. For ab sufficiently small γ can be approximated by γ(a,b) ≈ ab/(2πħ).

The above constraint on q[I] and p[J] can be reformulated in the following way46: (i) if 0 ≤ q[I] ≤ γ(\(\left| I \right|\), \(\left| J \right|\)), then all values for p[J] are possible, and (ii) if γ(\(\left| I \right|\), \(\left| J \right|\)) ≤ q[I], then p[J] ≤ g(q[I], \(\left| I \right|\), \(\left| J \right|\)) for g(q, a, b) := \(\left[ {\sqrt {q\gamma (a,b)} } \right.\) + \(\left. {\sqrt {(1 - q)(1 - \gamma (a,b))} } \right]^2\). This yields an infinite number of constraints for {q l } and {p l }. Let us assume that {q l } and {p l } are decreasingly ordered, then for all \(M,N \in {\Bbb N}\) it has to hold that

It is challenging to turn the above constraints into an explicit and tight upper bound for the α-Rényi entropy. In the following we discuss a possible way that connects the above constraints with a majorization approach.

Let us denote by {r j } the decreasingly ordered joint sequence of both distributions {q l } and {p l }. Then, we can write \(2^{(1 - \alpha )H_\alpha (\bf{X}|{\theta} )}\) = \(\frac{1}{2}\mathop {\sum}\nolimits_{j \in {\Bbb N}} {\kern 1pt} r_j^\alpha\). Since the function \(r \mapsto \mathop {\sum}\nolimits_{j \in {\Bbb N}} {\kern 1pt} r_j^\alpha\) is Schur convex, it can be upper bounded by any sequence w j majorizing r j . Such a w j can be constructed in a way shown in ref. 47.

First, note that condition (ii) above implies that q[I] + p[J] ≤ q[I] + g(q[I], \(\left| I \right|\), \(\left| J \right|\)). Optimizing the right hand side over all 0 ≤ q[I] ≤ 1, we obtain the constraint q[I] + p[J] ≤ 1 + \(\sqrt {\gamma \left( {\left| I \right|,\left| J \right|} \right)}\). This then implies that \(\mathop {\sum}\nolimits_{j = 1}^n {\kern 1pt} r_j \le 1 + F_n(\delta )\), where \(F_n(\delta ) = {\mathrm{max}}_{1 \le k \le n}\)\(\sqrt {\gamma (k\delta ,(n - k)\delta )}\). Here, the maximum is attained for \(k = \left\lfloor {\frac{n}{2}} \right\rfloor\).

We can now construct a majorizing sequence w by setting recursively w1 = 1 and w k = F k − wk−1 for k ≥ 2. The obtained bound on the α-Rényi-entropy is then given by \(B_{{\mathrm{Maj}}}^\alpha\) = \(\frac{1}{{1 - \alpha }}{\mathrm{log}}\left( {\frac{1}{2}\mathop {\sum}\nolimits_k {\kern 1pt} w_k^\alpha } \right)\). According to Eq. (3), this translates into a bound on the smooth min-entropy given by

A plot of the bound is given in Fig. 4. We emphasize that the obtained bound seems not very tight, especially for small δ. We believe that this problem is due to the fact that the way how the majorizing sequence is constructed does not exploit all the possible constraints.

Uncertainty bounds. Uncertainty bound without assumptions (red, solid), under the identical and independent (i.i.) assumption over \(m_{\cal E}\) = 10 signals (blue, dashed-dotted) and under Gaussian assumptions (green, long-dashed) depending on the binning size δ. n = 108, \(\epsilon _{\mathrm{A}}\) = 10−7. We see that the best bound is obtained under the Gaussian assumption. Moreover, the bound without assumption is very loose for small δ

Uncertainty relation under Gaussian assumption

In order to obtain an improved uncertainty relation we assume that the states \(\rho _{A^n}^k\) are mixtures of Gaussian states. Similarly as before, we derive a bound for the α-Rényi entropy with α ∈ (1, 2] and use Eq. (3) to obtain a bound on the smooth min-entropy. This argument implies that it is again sufficient to consider the case n = 1.

Let us first assume that the state is Gaussian such that the continuous probability distributions x(s) and p(s) of the X and P measurements are Gaussian. We denote the standard deviations of the X and P distribution by σ X and σ P , respectively. Using Jensen’s inequality we can upper bound \(x_k^\alpha = \left( {{\int}_{I_k} x(s)ds} \right)^\alpha\) ≤ \(\delta ^{\alpha - 1}{\int}_{I_k} {\kern 1pt} x(s)^\alpha ds\), where I k denotes the interval corresponding to the bin k. Taking now the sum over all bins we arrive at \(\mathop {\sum}\nolimits_k {\kern 1pt} x_k^\alpha = \delta ^{\alpha - 1}\) \({\int} {\kern 1pt} x(s)^\alpha ds = :g\left( {\tilde \sigma _X} \right)\), where \(g(x) = 1{\mathrm{/}}\left[ {\sqrt \alpha \left( {\sqrt {2\pi } x} \right)^{\alpha - 1}} \right]\) and \(\tilde \sigma _X = \sigma _X{\mathrm{/}}\delta\) is the relative standard deviation of the Gaussian distribution x(s).

Note that the bound \(g\left( {\tilde \sigma _X} \right)\) becomes very loose if \(\tilde \sigma _X\) is very small and can even become larger than the trivial upper bound 1. We avoid that problem by simply bounding \(\mathop {\sum}\nolimits_k {\kern 1pt} q_k^\alpha \le {\mathrm{min}}\left\{ {g\left( {\tilde \sigma _X} \right),1} \right\}\). The same applies to the P quadrature yielding the upper bound \(\mathop {\sum}\nolimits_k {\kern 1pt} q_k^\alpha\) + \(\mathop {\sum}\nolimits_l {\kern 1pt} p_l^\alpha \le {\mathrm{min}}\left\{ {g\left( {\tilde \sigma _X} \right),1} \right\}\) + \({\mathrm{min}}\left\{ {g(\tilde \sigma _P),1} \right\}\). We can now apply Kennard’s uncertainty relation for the standard deviations of X and P to obtain \(\tilde \sigma _X\tilde \sigma _P \ge \hbar {\mathrm{/}}\left( {2\delta _x\delta _p} \right)\)48. Optimizing \({\mathrm{min}}\left\{ {g\left( {\tilde \sigma _X} \right),1} \right\}\) + \({\mathrm{min}}\left\{ {g\left( {\tilde \sigma _P} \right),1} \right\}\) over all possible \(\tilde \sigma _X,\tilde \sigma _P\) gives \(1 + (\delta ^2{\mathrm{/}}(\pi \hbar ))^{(\alpha - 1)}{\mathrm{/}}\alpha\). Hence, we find for Gaussian states the uncertainty relation \(H_\alpha ({\mathbf{Z}}|{\mathbf{\theta}} ) \ge {\mathbf{B}}_{\mathbf{Gauss}}^\alpha (\delta ,{\mathbf{n}})\) with

This relation then leads to a bound on the smooth min-entropy with \(\lambda _{{\mathrm{Gauss}}}^\epsilon\)(n) via Eq. (3). The improvement over the previous bound can be seen in Fig. 4.

Let us finally show that this relation also holds for arbitrary mixtures of Gaussian states. Let us take \(\rho = \mathop {\sum}\nolimits_y {\kern 1pt} \mu _y\rho ^y\) with probability μ y and ρy a Gaussian state for any y. We then obtain that \(\mathop {\sum}\nolimits_k {\kern 1pt} x_k^\alpha = \mathop {\sum}\nolimits_k \left( {\mathop {\sum}\nolimits_y {\kern 1pt} \mu _y{\int}_{I_k} {\kern 1pt} x^y(s)\mathrm{d}s} \right)^\alpha\) ≤ \(\mathop {\sum}\nolimits_k {\kern 1pt} \mathop {\sum}\nolimits_y {\kern 1pt} \mu _y\left( {{\int}_{I_k} x^y(s)\mathrm{d}s} \right)^\alpha\) = \(\mathop {\sum}\nolimits_y {\kern 1pt} \mu _y\mathop {\sum}\nolimits_k {\kern 1pt} \left( {x_k^y} \right)^\alpha\). Here we denote by xy the X probability distribution of ρy, and we used the concavity of the function \(x \mapsto x^\alpha\). This argument shows that the above uncertainty relation extends to arbitrary (even continuous) mixtures of Gaussian states.

Uncertainty relation under the identical and independent assumption

Let us assume that a certain number of quantum states are identical and independent, i.e. that each \(\rho _{A^n}^k\) has tensor product structure \(\rho _{A^n}^k = \left( {\sigma _{A^{m_{\cal {E}}}}^k} \right)^{ \otimes n/m_{\cal {E}}}\), with \(n/m_{\cal {E}}\) being an integer. It is known that if \(n/m_{\cal {E}}\) goes to infinity, the smooth min-entropy converges to the Shannon entropy49,50. More precisely, we can lower bound \(\frac{1}{n}H_{{\mathrm{min}}}^\epsilon \left( {{\bf{Z}}^n|{\mathbf{\theta}} ^n} \right)\) by

where \({\mathrm{\Gamma }}\left( {{\bf{Z}}^{m_{\cal {E}}}} \right) = 2 + 2^{H_{1/2}\left( {{\bf{Z}}^{m_{\cal {E}}}} \right)}\). This relation has also been shown for infinite-dimensional alphabets in44. If we assume that Alice knows the covariance matrix of her reduced state, we can bound \(H_{1/2}\left( {{\bf{Z}}^{m_{\cal {E}}}} \right)\), and thus, \({\mathrm{\Gamma }}\left( {{\bf{Z}}^{m_{\cal {E}}}} \right)\). It therefore remains to find a lower bound on the Shannon entropy \(H\left( {{\bf{Z}}^{m_{\cal {E}}}|{\mathbf{\theta}} ^{m_{\cal {E}}}} \right)\).

For simplicity let us assume that \(m_{\cal {E}}\) = 1. Because the measurement choice θ is uniformly distributed, we find that H(Z|θ) = 1/2(H(X δ ) + H(P δ )). Thus, we recover the usual entropic uncertainty relation for the Shannon entropy which has been extensively studied. In particular, it has been shown that H(X δ ) + H(P δ ) ≥ log(eπħ/δ2)51. It is easy to see that in the case of an arbitrary \(m_{\cal {E}}\), we similarly obtain \(H\left( {{\bf{Z}}^{m_{\cal {E}}}|{\mathbf{\theta}} ^{m_{\cal {E}}}} \right)\) ≥ \(m_{\cal {E}}{\mathrm{/}}2{\kern 1pt} {\mathrm{log}}\left( {e\pi \hbar {\mathrm{/}}\delta ^2} \right)\). In conclusion, we arrive at an uncertainty relation with

Security proof against a malicious Bob with restricted memory

The security proof for an honest Alice is similar to the one in ref. 28, which is using key results from ref. 17,19. The main difference is that we have to include the information reconciliation leakage, and to take into account that Bob’s quantum memory can be infinite-dimensional and that K can be continuous. According to the protocol, we can assume that Alice is distributing a state ρ AB , A = A1, …, A n , for which

holds for any mode i. As in the main text Z denotes Alice’s discretized measurement outcomes with the binning (−∞, −αcut + δ], (−αcut + δ, −αcut + 2δ], …, (αcut − 2δ, αcut − δ], (αcut − δ, ∞). Note, that αcut is an integer multiple of δ. We further introduce \(\tilde Z\) as the string of outcomes if Alice would measure a uniform binning of δ over the entire range \({\Bbb R}\) (as used in the derivation of the uncertainty relations).

To ensure composable security for Alice, we have to show that for any memory attack of Bob, there exists a random variable D in {0, 1} such that conditioned on D = d, Bob does not know s d with probability larger than 1 − \(\epsilon _{\mathrm{A}}\)19. Denoting by B′ all the classical and quantum information held by a malicious Bob at the end of the protocol, this condition can be formulated by using the trace norm

where \(\tau _{S_D}\) denotes the uniform distribution over S D . We use lower indices to indicate the relevant systems, that is, the overall state of a joint system with quantum information A, B and classical random variables X, Y is denoted by ρ ABXY . Hence, if X is a random variable, ρ X denotes its distribution, if A is a quantum system, ρ A denotes its quantum state, and its combination ρ XA can conveniently be described by a classical quantum state.

Recall that s0, s1 are obtained by hashing the substrings Z0, Z1. Choosing the length \(\ell\) of the bit strings s0, s1 sufficiently small has the effect of randomization and destruction of correlation, i.e., establishing Eq. (11). More precisely, the condition from Eq. (11) is satisfied if52

and we can optimize over 0 < 4\(\epsilon _1\) < \(\epsilon _{\mathrm{A}}\). The crucial difference to the discrete-variable case is that the above relation holds even if Bob’s quantum memory is modeled by an infinite-dimensional system.

Bob’s information B′ consist of the states of his quantum memories Q and his classical register K (see Fig. 1), Alice’s basis choice θA, and the information reconciliation syndrome W = (W0, W1). The next goal is to remove the conditioning on the quantum system by relating it to the classical capacity of Bob’s quantum memory \({\cal {F}}_{{\mathrm{\Delta }}t}^{ \otimes \nu n}\). For this step we use the key result from ref. 19 which says that \(H_{{\mathrm{min}}}^{\epsilon _1}\left( {{\bf{Z}}_D|S_{\bar D}D{\bf{QK}}{\mathbf{\theta}} _{\mathrm{A}}{\bf{W}}} \right)\) is larger than minus the binary logarithm of

where \({\cal {P}}_{{\mathrm{succ}}}^{{\cal {F}}_{{\mathrm{\Delta }}t}^{ \otimes \nu n}}(\ell )\) is the success probability to send \(\ell\) bits through the channel \({\cal {F}}_{{\mathrm{\Delta }}t}^{ \otimes \nu n}\). Again, we have the freedom to optimize over all 0 < \(\epsilon _2\) < \(\epsilon _1\). The above result, originally proven for finite dimensions, can easily be extended to infinite-dimensions using the finite-dimensional approximation results from ref. 44. For the following, we will assume that the reliable transmission of classical information over the channel \({\cal {F}}_{\Delta t}\) decays exponentially above the classical capacity, i.e., \({\cal {P}}_{{\mathrm{succ}}}^{{\cal {F}}_{{\mathrm{\Delta }}t}^{ \otimes n}}(nR) \le 2^{ - \xi \left( {R - C_{{\mathrm{cl}}}\left( {{\cal {F}}_{{\mathrm{\Delta }}t}} \right)} \right)}\), usually referred to as a strong converse.

The final step is to lower bound the smooth min-entropy of Z D given DKθAW in Eq. (13). It is convenient to use the min-entropy splitting theorem14 saying that for given two random variables Z0, Z1, there exists a binary variable D such that the smooth min-entropy of Z D given D is larger than half of the smooth min-entropy of the two strings together, that is, \(H_{{\mathrm{min}}}^\epsilon \left( {{\bf{Z}}_D|D{\bf{K}}{\mathbf{\theta}} _{\mathrm{A}}{\bf{W}}} \right)\) ≥ \(\frac{1}{2}H_{{\mathrm{min}}}^\epsilon \left( {{\bf{Z}}_0{\bf{Z}}_1|{\bf{K}}{\mathbf{\theta}} _{\mathrm{A}}{\bf{W}}} \right) - 1\). This theorem defines retrospectively the random variable D. The conditioning on the information reconciliation syndrome W can be removed by simply subtracting the maximum information contained in W given by \(nr_{{\mathrm{IR}}} = {\mathrm{log}}_2\left| {\bf{W}} \right|\). Before we can apply the uncertainty relation, we have to eventually relate the entropy of Z by the one of \(\widetilde {\bf{Z}}\). This is necessary since a state-independent uncertainty relation cannot be satisfied for quadrature measurements with a finite range. But due to the condition that αcut is chosen such that the probability to measure an event outside the measurement range is small, we can bound53 the \(\epsilon _2\)-smooth entropy of Z given by the \(\left( {\epsilon _2 - \epsilon _{\alpha _{{\mathrm{cut}}}}} \right)\)-smooth entropy of \(\widetilde {\bf{Z}}\), where \(\epsilon _{\alpha _{{\mathrm{cut}}}} = \sqrt {2\left( {1 - p_{\alpha _{{\mathrm{cut}}}}^n} \right)}\). Note that this step requires that \(\epsilon _{\alpha _{{\mathrm{cut}}}} < \epsilon _2 < \epsilon _{\mathrm{A}}{\mathrm{/}}4\). Since the probability that Alice measures an outcome with absolute value larger than αcut only depends on her reduced state, the same holds conditioning on K and θA53.

Hence, given that the uncertainty relation from Eq. (1) holds, we find that \(\epsilon _{\mathrm{A}}\)-security for Alice as in Eq. (11) is satisfied, if we choose

where

The length \(\ell\) can be optimized over all \(\epsilon _1\), \(\epsilon _2\) ≥ 0 arbitrary such that \(\epsilon _{\mathrm{A}} > 4\epsilon _1 > 4\epsilon _2 > 4\epsilon _{\alpha _{{\mathrm{cut}}}}\). We then obtain Eq. (2) in the main text for a Gaussian loss channel satisfying ξ = 132.

Figure 5 shows a simulation of the oblivious transfer rate under Gaussian assumption versus the amount of squeezing in the EPR state. For the experimentally investigated region of channel loss the generated EPR state was close to optimal. Only for loss very close to the maximum channel loss the optimal squeezing value is around 10 dB.

Simulation of oblivious transfer rate. Simulation of oblivious transfer rate under Gaussian assumption versus the amount of squeezing used to prepare the EPR state for two different storage rates ν = 0.01 and ν = 0.001. The shaded areas correspond to a channel loss between 3 and 15% which is the experimentally investigated region. The orange traces are calculated for a channel loss close to the maximum possible value for the respective storage rate. The anti-squeezing of the employed squeezed state was calculated using parameters characterizing the experimental squeezed light sources and homodyne detectors: 98.2% escape efficiency, 79.8 mm optical round-trip length, 8 MHz sideband frequency, 91.4% total optical efficiency. Other parameters: information reconciliation efficiency 92.5%, n = 2 × 105 samples, \(\epsilon _{\mathrm{A}}\) = 10−7, αcut = 51.2, δ = 0.1, Nmax = 100, transmittance of quantum memories 0.75

Experimental parameters

The squeezed light sources were pumped with 140 and 170 mW, respectively. The local oscillator power for Alice’s and Bob’s homodyne detector was 10 mW each yielding a vacuum-to-electronic-noise clearance of about 18 dB. The 14 bit analog-to-digital converter allowed us to measure a maximum α of about 100. The quantum efficiency of the photo diodes was 99%, the homodyne visibility 98%. The phases of the local oscillators were randomly switched at a rate of 100 kHz between the amplitude and phase quadrature using a fiber coupled waveguide phase modulator. The reconstructed covariance matrix measured without loss in the channel and after local rescaling of Bob’s variances reads

where the entries in brackets were not measured, but assumed to be 0. Taking an upper bound on the variance of Alice’s state of 25, \(\epsilon _{\mathrm{A}}\) = 10−7 and n = 2 ⋅ 105 we obtain a minimum αcut ≈ 47.9 using the expression in the previous section.

For further experimental details we refer to ref. 24.

For the post-processing we used C++11 as programming language, compiled with GNU GCC 6.3, and ran the binary on a single core of an Intel Xeon E7-8870v2 CPU in a PC running Linux (Debian 8) as operating system. On average we achieved a rate of approximately 1k oblivious bit transfers per second.

Data availability

Codes for calculating the oblivious transfer rate are available at https://github.com/qpit/ObliviousTransfer. All other data are available from the authors upon request.

References

Wiesner, S. Conjugate coding. SIGACT News 15, 78–88 (1983).

Bennett, C. H. & Brassard, G. Quantum cryptography: public key distribution and coin tossing. In Proc. IEEE Int. Conf. Comput. Syst. Signal Process. 175–179 (Bangalore, India, 1984).

Ekert, A. Quantum cryptography based on Bell’s theorem. Phys. Rev. Lett. 67, 661–663 (1991).

Mayers, D. Unconditionally secure quantum bit commitment is impossible. Phys. Rev. Lett. 78, 3414–3417 (1997).

Mayers, D. The trouble with quantum bit commitment. Preprint at http://arxiv.org/abs/9603015 (1996).

Lo, H.-K. & Chau, H. F. Is quantum bit commitment really possible? Phys. Rev. Lett. 78, 3410 (1997).

Lo, H.-K. & Chau, H. F. Why quantum bit commitment and ideal quantum coin tossing are impossible. Phys. D 120, 177–187 (1998).

Lo, H.-K. Insecurity of quantum secure computations. Phys. Rev. A 56, 1154–1162 (1997).

D’Ariano, G., Kretschmann, D., Schlingemann, D. & Werner, R. Quantum bit commitment revisited: the possible and the impossible. Phys. Rev. A 76, 032328 (2007).

Buhrman, H., Christandl, M. & Schaffner, C. Complete insecurity of quantum protocols for classical two-party computation. Phys. Rev. Lett. 109, 160501 (2012).

Maurer, U. Conditionally-perfect secrecy and a provably-secure randomized cipher. J. Cryptol. 5, 53–66 (1992).

Cachin, C. & Maurer, U. M. In Proceedings of CRYPTO1997, Lecture Notes in Computer Science, 292–306 (Springer, Berlin, Heidelberg, 1997).

Damgård, I. B., Fehr, S., Salvail, L. & Schaffner, C. Cryptography in the bounded-quantum-storage model. SIAM J. Comput. 37, 1865–1890 (2008).

Damgård, I. B., Fehr, S., Renner, R., Salvail, L. & Schaffner, C. A tight high-order entropic quantum uncertainty relation with applications. In Advances in Cryptology-CRYPTO 2007, 360–378 (Springer, 2007).

Wehner, S., Schaffner, C. & Terhal, B. M. Cryptography from noisy storage. Phys. Rev. Lett. 100, 220502 (2008).

Kilian, J. Founding Crytpography on Oblivious Transfer. In Proceedings of the Twentieth Annual ACM Symposium on Theory of Computing, 20–31 (ACM, New York, 1988).

Wehner, S., Curty, M., Schaffner, C. & Lo, H.-K. Implementation of two-party protocols in the noisy-storage model. Phys. Rev. A 81, 052336 (2010).

Ng, N. H. Y., Joshi, S. K., Ming, C. C., Kurtsiefer, C. & Wehner, S. Experimental implementation of bit commitment in the noisy-storage model. Nat. Commun. 3, 1326 (2012).

König, R., Wehner, S. & Wullschleger, J. Unconditional security from noisy quantum storage. IEEE Trans. Inf. Theory 58, 1962–1984 (2012).

Berta, M., Fawzi, O. & Wehner, S. In Advances in Cryptology CRYPTO2012, Lecture Notes in Computer Science, Vol. 7417, 776–793 (2012).

Berta, M., Brandao, F. G., Christandl, M. & Wehner, S. Entanglement cost of quantum channels. IEEE Trans. Inf. Theory 59, 6779–6795 (2013).

Dupuis, F., Fawzi, O. & Wehner, S. Entanglement sampling and applications. IEEE Trans. Inf. Theory 61, 1093–1112 (2015).

Erven, C. et al. An experimental implementation of oblivious transfer in the noisy storage model. Nat. Commun. 5, 3418 (2014).

Gehring, T. et al. Implementation of continuous-variable quantum key distribution with composable and one-sided-device-independent security against coherent attacks. Nat. Commun. 6, 8795 (2015).

Einstein, A., Podolsky, B. & Rosen, N. Can quantum-mechanical description of physical reality be considered complete? Phys. Rev. Lett. 47, 777–780 (1935).

Furusawa, A. et al. Unconditional quantum teleportation. Science 282, 706–709 (1998).

Eberle, T., Händchen, V. & Schnabel, R. Stable control of 10 dB two-mode squeezed vacuum states of light. Opt. Express 21, 11546–11553 (2013).

Schaffner, C. Simple protocols for oblivious transfer and secure identification in the noisy-quantum-storage model. Phys. Rev. A 82, 032308 (2010).

Tomamichel, M. Quantum Information Processing with Finite Resources: Mathematical Foundations, Springer Briefs in Mathematical Physics 5, (Springer, Cham, 2015).

Giovannetti, V., Holevo, A. & Garcia-Patron, R. A solution of the Gaussian optimizer conjecture. Commun. Math. Phys. 334, 1553–1571 (2014).

Giovannetti, V., Garcia-Patron, R., Cerf, N. & Holevo, A. Ultimate classical communication rates of quantum optical channels. Nat. Photonics 8, 796–800 (2014).

Wilde, M. M. & Winter, A. Strong converse for the classical capacity of the pure-loss bosonic channel. Probl. Inf. Transm. 50, 117–132 (2014).

Bardhan, B. R. & Wilde, M. M. Strong converse rates for classical communication over thermal and additive noise bosonic channels. Phys. Rev. A. 89, 022302 (2014).

Bardhan, B. R., Garcia-Patron, R., Wilde, M. M. & Winter, A. Strong converse for the classical capacity of optical quantum communication channels. IEEE Trans. Inf. Theory 61, 1842–1850 (2015).

Jouguet, P., Elkouss, D. & Kunz-Jacques, S. High-bit-rate continuous-variable quantum key distribution. Phys. Rev. A 90, 042329 (2014).

Pacher, C., Martinez-Mateo, J., Duhme, J., Gehring, T. & Furrer, F. Information reconciliation for continuous-variable quantum key distribution using non-binary low-density parity-check codes. Preprint at http://arxiv.org/abs/1602.09140 (2016).

Renner, R. & Cirac, J. I. de Finetti representation theorem for infinite dimensional quantum systems and applications to quantum cryptography. Phys. Rev. Lett. 102, 110504 (2009).

Duan, L.-M., Giedke, G., Cirac, J. & Zoller, P. Inseparability criterion for continuous variable systems. Phys. Rev. Lett. 84, 2722–2725 (2000).

Heshami, K. et al. Quantum memories: emerging applications and recent advances. J. Modern Opt. 63, 2005–2028 (2016).

Berta, M., Fawzi, O. & Wehner, S. Quantum to classical randomness extractors, Advances in Cryptology—CRYPTO 2012, Lecture Notes in Computer Science Vol. 7417, 776–793. (Springer, Berlin Heidelberg, 2012).

Tomamichel, M., Colbeck, R. & Renner, R. Duality between smooth min- and max-entropies. IEEE Trans. Inf. Theory 56, 4674–4681 (2010).

Ng, N. H. Y., Berta, M. & Wehner, S. Min-entropy uncertainty relation for finite-size cryptography. Phys. Rev. A 86, 042315 (2012).

Tomamichel, M., Colbeck, R. & Renner, R. A fully quantum asymptotic equipartition property. IEEE Trans. Inf. Theory 55, 5840–5847 (2009).

Furrer, F., Aberg, J. & Renner, R. Min- and max-entropy in infinite dimensions. Commun. Math. Phys. 306, 165–186 (2011).

Landau, H. J. & Pollak, H. O. Prolate spheroidal wave functions, Fourier analysis and uncertainty-II. Bell Syst. Tech. J. 65, 65–84 (1961).

Dym, H. & McKean, H. P. Fourier Series and Integrals (Academic, New York, 1972).

Rudnicki, Ł. Majorization approach to entropic uncertainty relations for coarse-grained observables. Phys. Rev. A 91, 032123 (2015).

Kennard, E. Zur Quantenmechanik einfacher Bewegungstypen. Z. für Phys. 44, 326–352 (1927).

Renner, R. Security of Quantum Key Distribution. (Ph.D. thesis, ETH Zurich 2005).

Tomamichel, M., Colbeck, R. & Renner, R. A fully quantum asymptotic equipartition property. IEEE Trans. Inf. Theory 55, 5840–5847 (2009).

Bialynicki-Birula, I. Entropic uncertainty relations. Phys. Lett. A. 103, 253–254 (1984).

Berta, M., Furrer, F. & Scholz, V. B. The smooth entropy formalism on von Neumann algebras. J. Math. Phys. 57, 015213 (2016).

Furrer, F. et al. Continuous variable quantum key distribution: finite-key analysis of composable security against coherent attacks. Phys. Rev. Lett. 109, 100502 (2012).

Acknowledgements

We would like to thank Anthony Leverrier, Loïck Magnin and Frédéric Grosshans for useful discussions about the continuous-variable world. F.F. is supported by the Japan Society for the Promotion of Science (JSPS) by KAKENHI grant No. 24-02793. T.G. is supported by the Danish Council for Independent Research (Individual Postdoc and Sapere Aude 4184-00338B). C.S. is supported by a NWO VIDI grant. S.W. is supported by STW Netherlands, as well as an NWO VIDI and an ERC Starting Grant. The experimental work is partially supported by the Deutsche Forschungsgemeinschaft (project SCHN 757/5–1).

Author information

Authors and Affiliations

Contributions

F.F. and S.W. conceived the project. F.F., C.S., and S.W. developed the security proof, T.G. and R.S. performed the experimental implementation, C.P. implemented the information reconciliation and classical post-processing, and F.F. did the numerical simulations. F.F., T.G., and C.P. wrote the manuscript with contributions from all authors.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Furrer, F., Gehring, T., Schaffner, C. et al. Continuous-variable protocol for oblivious transfer in the noisy-storage model. Nat Commun 9, 1450 (2018). https://doi.org/10.1038/s41467-018-03729-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-018-03729-4

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.