Abstract

Purpose

This paper describes the implementation outcomes associated with integrating a family health history–based risk assessment and clinical decision support platform within primary care clinics at four diverse healthcare systems.

Methods

This was a type III hybrid implementation-effectiveness trial. Uptake and implementation processes were evaluated using the Reach, Effectiveness, Adoption, Implementation, and Maintenance (RE-AIM) framework.

Results

One hundred (58%) primary care providers and 2514 (7.8%) adult patients enrolled. Enrolled patients were 69% female, 22% minority, and 32% Medicare/Medicaid beneficiaries. Compared with their respective clinic’s population, patient-participants were more likely to be female (69 vs. 59%), older (mean age 57 vs. 49 years), and Caucasian (88 vs. 69%) (all p values <0.001). Once enrolled, female (81.3% of females vs. 78.5% of males, p value = 0.018) and Caucasian (90.4% of Caucasians vs. 84.1% of minorities, p value = 0.02) patient-participants were more likely to complete the study. Patient-participant survey responses indicated that MeTree was easy to use (95%) and that patient-participants would recommend it to family/friends (91%). Minorities and those with less education reported the greatest benefit. Enrolled providers reflected the demographics of the underlying provider population.

Conclusion

Family health history–based risk assessment can be implemented effectively in diverse primary care settings and can engage both patients and providers. Future research should focus on finding better ways to engage young adults, males, and minorities in preventive healthcare.


Introduction

As precision medicine moves from bench to bedside, we must provide evidence of the effectiveness of interventions in real-world clinical settings, together with a deep understanding of the implementation processes that support maintenance of, and fidelity to, the intervention while permitting adaptation to diverse care settings.1 Family health history (FHH) is a good model of this. FHH brings together genetic, environmental, and lifestyle factors for risk stratification and is widely accepted as part of routine clinical care. Yet FHH is rarely collected in sufficient detail to meaningfully inform healthcare decisions.2,3,4 Barriers exist at the patient, provider, and system levels, including time constraints and lack of data standardization, lack of centralized resources for data interpretation, lack of provider education on the impact of FHH, and patients’ lack of preparation and education on pertinent FHH elements.5,6,7,8,9 Health information technology (IT) platforms that collect patient-entered FHH information and provide integrated clinical decision support (CDS) have addressed some of these barriers. Such technologies improve the quality and quantity of FHH collected,4,10,11 increase identification of individuals unaware of appropriate risk-based management,10,12 and move care management toward greater guideline adherence.13

MeTree, a web-based, patient-facing, FHH-based risk assessment and CDS platform with integrated education for patients and providers, is one such program.14 A pilot of the software demonstrated clinical effectiveness and widespread acceptance by patients and providers.12,13,15 This led to the present trial to evaluate the implementation and effectiveness of MeTree across diverse real-world healthcare settings.16 The work was funded by the Implementing Genomics in Practice (IGNITE) network (https://ignite-genomics.org/) of the National Human Genome Research Institute.17 This paper presents the primary implementation outcomes of the trial using the Reach, Effectiveness, Adoption, Implementation, and Maintenance (RE-AIM) framework.18

Materials and methods

Overview and study design

This was a pragmatic nonrandomized type III hybrid implementation-effectiveness trial across four diverse healthcare systems. Study methods were informed by Weiner’s organizational model of innovation implementation and have been described in detail previously.16,19

Setting

The included healthcare systems (i.e., sites) had distinct operational profiles, missions, and clinical populations and included Duke University Health (Duke), a suburban academic medical center serving a moderately diverse population in North Carolina; Essentia Health (Essentia), an integrated health system serving predominantly Caucasian rural communities across the upper Midwest; Medical College of Wisconsin (MCW), an urban academic medical center with a significant African American population in Wisconsin; and University of North Texas Health Science Center (UNTHSC), an urban academic medical center with a significant Hispanic population in Texas.

Recruitment and enrollment

Primary care clinics at each site were identified for participation by local research personnel. Clinics with strong FHH champions were identified for initial enrollment, and additional clinics enrolled as implementation strategies were refined and adapted to meet local site needs. Clinical providers were engaged early on. All providers at enrolled clinics were eligible, and all patients of enrolled providers with an upcoming nonacute primary care appointment were offered enrollment. An entirely electronic process, run by a centralized coordinator, was used for recruitment, consent, intervention completion and data collection, and delivery of risk reports to both patients and enrolled providers (Fig. 1). Exceptions were made for patient-participants who needed more hands-on assistance, such as being provided a study device or help creating an email account.

Study phases

Preimplementation

A range of preimplementation strategies was completed at enrolled sites using the adapted Weiner organizational model of innovation implementation.19 Strategies included assessing readiness, identifying barriers and facilitators, and conducting educational outreach and meetings, through site visits, staff surveys, and qualitative interviews.20 Surveys collected baseline provider and patient characteristics. The Organizational Readiness for Implementing Change (ORIC) survey was sent to all staff and providers. Semistructured qualitative interviews were performed by research personnel trained in qualitative methods to assess organizational readiness. Data collected in the preimplementation phase were intended to support site adaptation of the core intervention and to help evaluate and interpret variations in key implementation outcomes between sites. Full details of preimplementation intervention components are outlined in a previous publication (Table S1).16

Implementation and postimplementation

During implementation, further strategies were employed (Table S1). In both the preimplementation and implementation phases, monthly group calls were held with site principal investigators and research staff to capture and share local knowledge and to make targeted adaptations to the implementation process and the intervention according to site needs. Targeted phone calls were made to eligible and enrolled patient-participants at Duke and Essentia to understand individual-level barriers to intervention completion so that, where possible, the recruitment and implementation process could be adapted to address them. Surveys were sent to all patient-participants who completed MeTree, 3 months after completion.

Measures

Measures were organized by aspect(s) of the RE-AIM framework they informed (Table 1). Patient progression through each stage of the study was tracked as a measure of the reach of the intervention (Fig. 1, Fig. S1). All adaptations requested by the individual sites were tracked throughout the study as a measure of implementation fidelity.

The baseline provider/staff survey was the ORIC, a validated measure of a system’s readiness to implement a new intervention.21 ORIC comprises 20 questions, each scored from −2 to +2. It measures two constructs: change commitment (i.e., perception of organizational resolve to implement change) and change efficacy (i.e., belief that the organization is able to make such a change successfully). These constructs are valuable because when change commitment and efficacy are high, organizational members are more likely to put effort toward making the change and to persist despite obstacles they may encounter.

Primary implementation outcomes

The RE-AIM framework delineates steps for reporting on factors important to understanding implementation in different settings, including where the greatest barriers to impact may lie, and in turn the potential public health impact of the tested intervention.22

Reach seeks to understand the targeted patient population’s uptake of an intervention.18 This was assessed by comparing all those approached with those who consented to be in the study. Furthermore, patients who agreed to participate were followed electronically through study progression to determine points of significant dropout (see Fig. 1 for study stages). Patient enrollment, study progression, and study withdrawal were evaluated to identify the impact of patient demographics and clinic setting (i.e., rural versus urban, academic versus community). Lastly, structured qualitative interviews with eligible and enrolled participants evaluated barriers to enrollment and study completion.
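To make the dropout analysis concrete, the sketch below records, for each patient, the furthest study stage reached, then counts how many patients reached each stage and what share of the previous stage advanced. It is a minimal Python illustration, not the study's R code; the stage labels approximate those named in the Results, and the eight toy records are hypothetical.

```python
from typing import List, Optional, Tuple

# Hypothetical stage labels approximating stages named in the text; Fig. 1 has the actual protocol.
STAGES = ["responded", "consented", "baseline survey", "MeTree started", "report downloaded"]


def progression(furthest_stage: List[int], n_stages: int) -> List[Tuple[int, Optional[float]]]:
    """Per stage: (patients reaching it, fraction of the previous stage's patients who advanced).

    furthest_stage[i] is the index of the last stage patient i reached.
    """
    reached = [sum(1 for s in furthest_stage if s >= stage) for stage in range(n_stages)]
    result = []
    for stage, count in enumerate(reached):
        previous = reached[stage - 1] if stage > 0 else 0
        # The first stage has no predecessor, so its conditional share is None.
        result.append((count, count / previous if previous else None))
    return result


# Hypothetical toy records for eight patients (each value indexes into STAGES).
toy = [0, 1, 1, 2, 4, 4, 3, 1]
for name, (count, rate) in zip(STAGES, progression(toy, len(STAGES))):
    share = "-" if rate is None else f"{rate:.0%} of previous stage"
    print(f"{name:18s} {count:3d}  {share}")
```

A sharp fall in the stage-to-stage share flags the transition where dropout concentrates. Applied to the aggregate counts reported in the Results, the responded-to-consented step is 2514/4154, about 60.5%.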

Adoption refers to the representativeness of enrolled clinics and provider-participants compared with the clinical settings and provider population the intervention was intended to target.18 All providers at enrolled clinics in each site were compared on demographics with those who consented and enrolled in the study. Because providers received no reward for participation beyond any benefit to the quality of their care, enrollment is a reasonable measure of adoption. Results from the ORIC survey and qualitative interviews were used to characterize variation in adoption by site.

Implementation refers to how adherent sites were to the implementation plan and what variations each introduced to maximize impact at their site.18 Adaptations were documented through regular teleconferences with the site research staff with ongoing review of enrollment uptake and barriers encountered.

Maintenance measures the “extent to which innovations become a relatively stable, enduring part of the behavioral repertoire of an individual, organization, or community.”18 In this paper, maintenance was operationalized as monitoring for new clinic accrual and ongoing discussions with healthcare administrators at the sites about how to make the intervention sustainable in their local contexts. We also assessed acceptability and desire for intervention continuation from patients in a 3-month post-MeTree survey. Patient-level maintenance as measured by behavioral outcomes will be the subject of a later paper.

Statistical analysis

Patients

Patient and provider demographics were summarized using counts and percentages for categorical features and means and standard deviations (SD) for continuous features. Demographic variation (1) of patient-participants between sites, (2) between the overall clinic population and the patient-participants within sites, (3) between withdrawn and continuing participants, and (4) among patients reaching each progression milestone was assessed using Pearson’s chi-square test for categorical features and t-tests for continuous features. Logistic mixed effect regression, fitted with the R lme4 package,23 was used to model patient completion of MeTree and patient study withdrawal as a function of patient demographics (fixed effects) and clinic nested within site (random effects). Likelihood ratio tests of nested models were used to assess the significance of each model component.
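Both the Pearson tests and the likelihood ratio tests above refer their statistics to a chi-square distribution. The sketch below shows that arithmetic with the Python standard library only: a chi-square upper-tail function for integer degrees of freedom, a Pearson test of independence, and a nested-model likelihood ratio test. It is illustrative rather than the study's code: the table and log-likelihoods are hypothetical, and in practice the log-likelihoods would come from two nested mixed models (in R, typically two `glmer` fits compared with `anova()`). The Pearson function applies no continuity correction, whereas R's `chisq.test` applies Yates' correction to 2 × 2 tables by default.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) of a chi-square distribution with integer df.

    Uses the closed-form series for integer degrees of freedom
    (Abramowitz & Stegun 26.4.4 and 26.4.5), so only the standard library is needed.
    """
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{r=0}^{df/2 - 1} (x/2)^r / r!
        term = total = 1.0
        for r in range(1, df // 2):
            term *= (x / 2) / r
            total += term
        return math.exp(-x / 2) * total
    # Odd df: 2*(1 - Phi(chi)) + 2*phi(chi) * sum_{r=1}^{(df-1)/2} chi^(2r-1) / (1*3*...*(2r-1))
    chi = math.sqrt(x)
    tail = math.erfc(chi / math.sqrt(2))  # equals 2*(1 - Phi(chi))
    pdf = math.exp(-x / 2) / math.sqrt(2 * math.pi)  # standard normal density at chi
    term, total = chi, 0.0
    for r in range(1, (df - 1) // 2 + 1):
        if r > 1:
            term *= x / (2 * r - 1)
        total += term
    return tail + 2 * pdf * total


def pearson_chi2(table):
    """Pearson chi-square test of independence for an r x c table of counts (no continuity correction)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, df, chi2_sf(stat, df)


def likelihood_ratio_test(loglik_reduced, loglik_full, df_diff):
    """LRT for nested models: 2 * (logLik_full - logLik_reduced), referred to chi-square(df_diff)."""
    stat = 2.0 * (loglik_full - loglik_reduced)
    return stat, chi2_sf(stat, df_diff)


# Hypothetical 2 x 2 table of counts: two patient groups (rows) by sex (columns).
stat, df, p = pearson_chi2([[10, 20], [20, 10]])
print(f"Pearson chi-square = {stat:.3f}, df = {df}, p = {p:.4f}")

# Hypothetical log-likelihoods for models without and with one extra fixed effect.
lr_stat, lr_p = likelihood_ratio_test(-1200.0, -1197.3, df_diff=1)
print(f"LRT = {lr_stat:.2f}, p = {lr_p:.4f}")
```

When the term being tested is a random-effect variance, its null value lies on the boundary of the parameter space, and the plain chi-square reference is conservative.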

Providers

Provider demographic variability between consented and invited providers, as well as variability in provider-participant demographics across sites, was assessed using Pearson’s chi-square tests for categorical features and t-tests for continuous features.
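For continuous provider features such as years in practice, a two-sample t statistic can be paired with the normal approximation mentioned under Statistical analysis. The sketch below assumes Welch's unequal-variance form (the default of R's `t.test`); the text does not say which variant was used, and the data are hypothetical.

```python
import math
import statistics


def welch_t_normal(a, b):
    """Welch two-sample t statistic with a two-sided p value from the normal approximation."""
    if len(a) < 2 or len(b) < 2:
        raise ValueError("each group needs at least two observations")
    # Standard error uses each group's own sample variance (no pooling).
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    t = (statistics.fmean(a) - statistics.fmean(b)) / se
    p = math.erfc(abs(t) / math.sqrt(2))  # 2 * (1 - Phi(|t|))
    return t, p


# Hypothetical years in practice for two small provider groups.
consented = [1, 2, 3, 4, 5]
not_consented = [3, 4, 5, 6, 7]
t, p = welch_t_normal(consented, not_consented)
print(f"t = {t:.2f}, p = {p:.4f}")
```

With small groups, the normal reference yields slightly smaller p values than the t distribution would.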

Patient 3-month postimplementation surveys

Responses to the MeTree satisfaction questions were summarized using descriptive statistics and tested for differences by patient demographics.

Organizational readiness for implementing change surveys

ORIC survey completion rates were summarized overall and by site using bivariate descriptive statistics. ORIC responses were scored −2 to 2, summed, and divided by the number of questions each respondent answered to create a standardized score. Standardized scores were summarized with descriptive statistics (mean, SD, 95% confidence interval [CI], N) at the site level. Differences in scores by site were assessed by analysis of variance (ANOVA) F-tests of linear fixed effect models. Consistency of scores across individuals at a given clinic was assessed using the intraclass correlation coefficient (ICC), where an ICC <0.4 indicates poor inter-rater agreement, 0.4–0.74 fair–good agreement, and 0.75–1.0 excellent agreement.24
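A minimal sketch of the standardized ORIC score: dividing the summed item scores by the number of items answered keeps respondents who skipped items on the same −2 to 2 scale as those who answered everything. The site summary uses a normal-approximation 95% CI (mean ± 1.96 SE). The response vectors are hypothetical, and this is Python rather than the R code used in the study.

```python
import math
import statistics
from typing import Optional, Sequence


def standardized_score(responses: Sequence[Optional[int]]) -> float:
    """Mean of the answered ORIC items (sum of item scores / number answered).

    Each item is scored from -2 to 2; None marks an unanswered item.
    """
    answered = [r for r in responses if r is not None]
    if not answered:
        raise ValueError("respondent answered no items")
    if any(r < -2 or r > 2 for r in answered):
        raise ValueError("item scores must lie between -2 and 2")
    return sum(answered) / len(answered)


def site_summary(scores: Sequence[float]) -> dict:
    """Mean, SD, N, and a normal-approximation 95% CI for one site's scores."""
    n = len(scores)
    mean = statistics.fmean(scores)
    sd = statistics.stdev(scores)  # sample SD; needs n >= 2
    half_width = 1.96 * sd / math.sqrt(n)
    return {"n": n, "mean": mean, "sd": sd, "ci95": (mean - half_width, mean + half_width)}


# Hypothetical respondents at one site (None = skipped item).
respondents = [
    [2, 1, None, 1],
    [1, 1, 0, 0],
    [2, 2, 1, 1],
]
scores = [standardized_score(r) for r in respondents]
print(scores)
print(site_summary(scores))
```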

All analyses were completed using the statistical program R (https://www.r-project.org). Normal approximations were used for the test statistics and p values. Code availability: the data analysis code is version controlled in the Duke University GitLab repository and is available on request.

Results

We enrolled 2514 patients and 100 primary care providers (PCPs) across 19 clinics at four sites. Overall, 4.51% of invited patients and 58% of invited providers enrolled.

Implementation outcomes

Reach

The intervention was intended for all adult primary care patients scheduled for routine (i.e., nonurgent) medical appointments. Reach was evaluated at two levels: (1) how the consented participants differ from the local clinic population and (2) how those who fully complete the process differ from those who initially consented but did not complete the study.

Comparing demographics within each site, there were several significant differences (Table 2). Across all sites, patients who consented were more likely than the general site population to be older, female, and white (all p values <0.001). There were some exceptions: notably, at MCW consented patients and the general patient population did not differ in sex, and at Essentia they did not differ in race. There was no difference based on insurance (Medicare/Medicaid versus other). Consented participants also differed significantly between sites in every characteristic except sex (all p values <0.001). This was intended by the study design, as our goal was to evaluate implementation in diverse communities. For the overall clinic population (N = 172,160), age and sex were 100% available, race was available for 134,162 (78%), and insurance for 171,752 (99%). For consented participants (N = 2514), age was available for all but one, sex for 2344 (93%), race for 2021 (80%), insurance for 2460 (98%), and education for 2454 (98%).

Demographics of patient-participants who reached each stage of the protocol (Fig. 1) varied (Table 3); 4154 patients responded to the invitation letter, of whom 2514 (60.52%) consented. In univariate analysis, those with less education were less likely to complete the baseline survey (p value = 0.007) or complete MeTree (p value <0.001). Those with Medicare or Medicaid (p value <0.001) and of minority race (p value = 0.003) were less likely to complete MeTree. Older patients were equally likely to start MeTree but less likely to complete it (log odds ratio/year –3.75 (SE 0.73), p value <0.001). Study progression differed by site at every stage (p values = 0.01, 0.02, and <0.001 at successive stages).

In multivariate logistic mixed effect modeling of MeTree completion (transition between MeTree started and Report downloaded stages), decreased odds of completing MeTree were associated with male sex (p = 0.02) and minority race (p = 0.02) after controlling for other demographics. Insurance, site, age, and education no longer showed an effect.

Only 107 (4.26%) of consented patient-participants withdrew from the study. Enrolled patients’ likelihood of withdrawal differed by site (withdrawal rates of 10% UNTHSC, 6.4% MCW, 4.13% Duke, and 2.32% Essentia, p value <0.013), sex (male 5.69% vs. female 3.6%, p value = 0.02), education (community college or less 3.83% vs. 4-year college or more 4.05%), insurance source (Medicare/Medicaid 7.05% vs. other 2.54%), and age (log odds ratio/year 10.43 (SE 1.38), p value <0.001). On multivariate modeling, age was the only factor that remained significantly associated with odds of withdrawal (0.058 log odds/year (SE 0.011), p value <0.001).
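The age coefficient is easier to read as an odds ratio. Assuming a Wald interval on the log-odds scale, the sketch below converts the reported multivariate estimate (0.058 log odds per year, SE 0.011) to an odds ratio per year and per decade of age; the helper function name is hypothetical.

```python
import math


def log_odds_to_odds_ratio(beta, se, units=1.0, z=1.96):
    """Odds ratio for a `units`-unit increase, with a Wald 95% CI and two-sided p value."""
    b, s = beta * units, se * units
    odds_ratio = math.exp(b)
    ci = (math.exp(b - z * s), math.exp(b + z * s))
    p = math.erfc(abs(beta / se) / math.sqrt(2))  # two-sided normal p value for the Wald z
    return odds_ratio, ci, p


# Reported multivariate estimate for age: 0.058 log odds per year (SE 0.011).
or_year, ci_year, p_year = log_odds_to_odds_ratio(0.058, 0.011)
or_decade, ci_decade, _ = log_odds_to_odds_ratio(0.058, 0.011, units=10)
print(f"per year:   OR {or_year:.3f} (95% CI {ci_year[0]:.3f} to {ci_year[1]:.3f}), p = {p_year:.1e}")
print(f"per decade: OR {or_decade:.2f} (95% CI {ci_decade[0]:.2f} to {ci_decade[1]:.2f})")
```

Under these assumptions the odds of withdrawal rise by about 6% per year of age, or roughly 1.8-fold per decade.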

To understand patient progression through the study, we completed structured qualitative interviews by telephone with enrolled patients (N = 248) at Duke and Essentia. Patients were surveyed on their reasons for not completing the study. Main barriers to completion were lack of time (N = 42, 17%), lack of knowledge/access to FHH (N = 68, 27%), and IT barriers (N = 122, 49%). IT issues included lack of familiarity with/access to computers and not understanding next steps in the electronic protocol. Provider preimplementation interviews across the sites elicited similar concerns about lack of IT familiarity and FHH knowledge.

Adoption

At Duke, seven of nine approached clinics enrolled in the study. The two that did not enroll included a busy suburban internal medicine clinic and a more rural family medicine clinic. At other sites, all clinics approached enrolled.

Provider adoption was 58% overall (N = 100). Provider-participants were representative of the provider population in their respective clinics (Table 4). The only exception was at Duke, where consented providers were more likely to be female than the general population of clinic providers (69 vs. 61%, p value = 0.02). For the total population of providers (N = 173), years in practice was reported for 169 (98%), race for 134 (77%), sex for 173 (100%), and specialty for 135 (78%). For provider-participants (N = 100), all demographics were reported except one missing value for specialty.

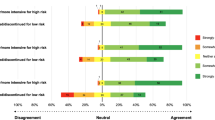

Provider-participants’ race and sex were similar across sites, but they varied between sites in specialty (p value <0.001) and years in practice (p value = 0.03) (Table 4). The ORIC survey was completed by 42% (N = 94/225) of invited clinic personnel; 50% of respondents were providers and 50% other clinic staff. Completion rates varied by site (24–71%). ORIC scores did not differ significantly by site (range 0.48–0.92), but responses were highly variable within clinics, as measured by ICC. Although some clinics had greater internal consistency than others, all were within the “poor” range (ICC range by clinic 0.024–0.225). Smaller clinics tended to have greater internal consistency (i.e., higher ICC).
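One way to compute a clinic-level ICC, assuming each clinic's data form a matrix of ORIC items (rows) by respondents (columns) and a one-way, single-measure model, which is the default of `icc()` in the R irr package cited in the Methods; the model actually used is not stated. The bands follow the thresholds given in the Methods, and the toy matrices are hypothetical.

```python
import statistics
from typing import List, Sequence


def icc_oneway(ratings: Sequence[Sequence[float]]) -> float:
    """One-way, single-measure ICC(1) for an n-subject x k-rater matrix with no missing cells.

    ICC(1) = (MSR - MSW) / (MSR + (k - 1) * MSW), where MSR is the between-subject
    mean square and MSW the within-subject mean square.
    """
    n = len(ratings)
    k = len(ratings[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete n x k matrix with n >= 2 and k >= 2")
    row_means: List[float] = [statistics.fmean(row) for row in ratings]
    grand_mean = statistics.fmean(row_means)  # equal row sizes, so mean of row means = grand mean
    msr = k * sum((m - grand_mean) ** 2 for m in row_means) / (n - 1)
    msw = sum((x - m) ** 2 for row, m in zip(ratings, row_means) for x in row) / (n * (k - 1))
    denominator = msr + (k - 1) * msw
    if denominator == 0:
        raise ValueError("all ratings identical; ICC undefined")
    return (msr - msw) / denominator


def agreement_band(icc: float) -> str:
    """Bands used in the text: <0.40 poor, 0.40-0.74 fair-good, >=0.75 excellent."""
    if icc < 0.40:
        return "poor"
    if icc < 0.75:
        return "fair-good"
    return "excellent"


# Hypothetical clinic: 3 ORIC items (rows) rated by 2 respondents (columns).
clinic = [[1, 2], [3, 4], [5, 6]]
value = icc_oneway(clinic)
print(round(value, 3), agreement_band(value))
```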

The ORIC surveys and interviews with providers indicated interest in and commitment to implementing MeTree in practice. ORIC responses were generally positive for change commitment (range 0.52–1.11), with low but positive levels of change efficacy (range 0.05–1.32) (Fig. S2). Preimplementation qualitative interviews underscored the limited change efficacy, with both providers and staff indicating a lack of understanding about how to use the tool and, in turn, how to communicate its value and operation to patients. Yet providers still felt that MeTree would benefit their patients. For example, one provider explained that MeTree coincides well with what they “aspire to,” counseling people about disease risk.

Implementation

Sites made a variety of small changes to maximize uptake and effectiveness of the intervention for their specific populations. At Essentia, cardiac risk calculations built into MeTree (e.g., the Framingham risk score) were frequently not completed because patient-participants did not know the required inputs. To address this, local research staff either worked with patients beforehand, directing them to where they could find their lab values, or pulled the needed data points from medical records. Provider-participants at Essentia also requested that patient recruitment be limited to those coming in for annual physicals or new patient visits; patients coming in for shorter nonacute visits were not recruited. This restriction, together with Essentia’s clinics being rural and small, may explain the lower number of patients invited to the study at Essentia. At Duke, targeted calls to eligible and enrolled patients showed that patient-participants frequently forgot to complete the intervention before their scheduled primary care appointment, so additional email reminders were sent before the scheduled visit. At UNTHSC, the researchers translated the recruitment script into Spanish, shortened it, and instituted reminder phone calls to all potential patient-participants. Following these changes, investigators observed an increase in enrollment.

Maintenance and sustainability

Maintenance and sustainability were operationalized as the intervention’s long-term sustainability, tracked through new clinic accrual and clinic and provider retention. During the study, additional clinics were added to gain further experience with a variety of clinical settings and participants. Over 6 months, nine new clinics were enrolled (three at Duke, two at Essentia, and four at MCW). One clinic at Duke withdrew from the trial because of competing demands imposed by the health system’s transition to open notes, and one clinic at UNTHSC closed because of restructuring of the health system. Over the course of the study, 3% of providers withdrew: one because of concerns about a potential conflict of interest if MeTree were commercialized, and two who felt MeTree added little value to their standard of care. Nine providers (9%) relocated and left enrolled clinics.

One significant barrier to long-term sustainability noted by provider-participants was lack of electronic medical record (EMR) integration. In interviews, integration was closely tied to perceived efficacy, or confidence in implementing MeTree; providers wanted “one go-to system” for patient care. During the year of implementation, Duke transitioned from a homegrown EMR system to Epic’s EMR system, and providers said they were still adjusting. At the same time, they regarded the transition as an opportunity to improve patient care, although its CDS capabilities were not being fully leveraged. Over the course of the study, the research team and Duke Health Technology Solutions worked closely with Epic, the EMR vendor for three of the four sites, to integrate MeTree with Epic via a newly emerging data standard, SMART on FHIR.25,26 This connection allows providers to view MeTree data within the EMR and allows patients to access MeTree through their patient portal, with relevant data from the medical record, such as lab values, pulled into MeTree along the way.
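As a rough illustration of pulling lab values from the EMR, the sketch below builds a FHIR REST search for a patient's total cholesterol Observations (LOINC 2093-3) and picks the newest value from the returned Bundle. It omits the SMART on FHIR authorization and launch steps entirely, uses a hypothetical base URL, patient id, and trimmed sample Bundle, and does not describe how MeTree's integration with Epic actually works.

```python
import json
from typing import Optional, Tuple
from urllib.parse import urlencode

# Hypothetical endpoint and identifiers, for illustration only.
FHIR_BASE = "https://fhir.example.org/R4"
LOINC_TOTAL_CHOLESTEROL = "2093-3"  # Cholesterol [Mass/volume] in Serum or Plasma


def observation_search_url(base: str, patient_id: str, loinc_code: str) -> str:
    """FHIR search for a patient's Observations with a given LOINC code, newest first."""
    params = {
        "patient": patient_id,
        "code": f"http://loinc.org|{loinc_code}",  # token search: system|code
        "_sort": "-date",
    }
    return f"{base}/Observation?{urlencode(params)}"


def latest_quantity(bundle: dict) -> Optional[Tuple[float, str, str]]:
    """(value, unit, effectiveDateTime) of the newest Observation carrying a valueQuantity."""
    best = None
    for entry in bundle.get("entry", []):
        resource = entry.get("resource", {})
        quantity = resource.get("valueQuantity")
        when = resource.get("effectiveDateTime")
        if resource.get("resourceType") != "Observation" or quantity is None or when is None:
            continue
        # Assumes all timestamps share one ISO 8601 format and offset, so string order is time order.
        if best is None or when > best[2]:
            best = (quantity.get("value"), quantity.get("unit"), when)
    return best


# Trimmed, hypothetical search result (real Observations also carry status, code, subject, ...).
sample_bundle = json.loads("""
{"resourceType": "Bundle", "type": "searchset", "entry": [
  {"resource": {"resourceType": "Observation", "effectiveDateTime": "2019-01-10",
                "valueQuantity": {"value": 210, "unit": "mg/dL"}}},
  {"resource": {"resourceType": "Observation", "effectiveDateTime": "2019-06-02",
                "valueQuantity": {"value": 185, "unit": "mg/dL"}}}
]}
""")

print(observation_search_url(FHIR_BASE, "example-123", LOINC_TOTAL_CHOLESTEROL))
print(latest_quantity(sample_bundle))
```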

Patient-participants who completed MeTree were sent 3-month post-MeTree surveys about their satisfaction with the experience and the likelihood that they would recommend it to others. Of the 1099 respondents (58.2% of those surveyed), most found the program easy to use (N = 995 [90.8%]) and the questions easy to understand (N = 1038 [95%]), and were satisfied with the resulting conversations with their doctors about their risk (N = 971 [89.9%]). Responses to these items did not vary by education, race, or insurance status. Those with lower education levels (community college or less) were more likely than those with more education to indicate that MeTree was a useful experience (68 vs. 56.8%, p value <0.001), that it made them more aware of their health risk (63.1 vs. 46.9%, p value <0.001), and that they would recommend it to family/friends (89.7 vs. 84.3%, p value = 0.042). Minorities were more likely to report that it made them more aware of their health risk (minority 61.1% vs. nonminority 49.4%, p value = 0.045).

Discussion

Development and implementation of IT solutions to improve application of FHH-based risk assessment within primary care have shown significant impact on risk identification and risk management strategies.10,11,12,13 Here we show that one such platform, MeTree, was successfully integrated into various care settings among diverse patient populations across the United States. While Reach evaluation showed a statistically significant difference in age, race, and sex between those who enrolled and the overall clinic populations, there was considerable demographic diversity among those who participated, and even though males and minorities were less likely to complete MeTree, they were well represented in the participant population. Enrollment and study progression varied by site despite similar ORIC scores across sites. Leadership support at the sites as described in Weiner’s model was qualitatively variable (as evidenced by study call participation and site principal investigator turnover), which may explain variation in uptake and points to the importance of this dimension. Potential patient barriers to wider uptake can be seen in the responses to structured interviews with participants. The most frequently reported reason for not completing the study was discomfort with/access to IT. In our current information age, we should not underestimate the potential impact of this barrier, particularly among older adults and those with lower educational levels. This is not just a barrier to the risk assessment itself, but also the very process of finding and engaging with medically oriented software. This barrier could be addressed by integrating health navigators who can prepare patients for clinician visits. Additionally, low-literacy experts and patients should be engaged in intervention conceptualization, development, and testing.

Adoption showed that enrolled providers represented their underlying populations. Although they came from a variety of care settings, they had similar assessments of their clinics’ implementation readiness. This suggests that the challenges faced within primary care may be more similar than different, at least across sites that see the value of risk assessment in primary care.

Implementation remained fairly close to the original study design, but with meaningful changes at individual sites to maximize impact on their populations while balancing workflow demands. Several adaptations point to ways that systems adopting FHH risk assessment in the future might foster uptake, such as emphasizing FHH before preventive visits and adding FHH reminders to previsit reminder calls. The adaptation made at one site to help patients complete cardiovascular risk calculations would be unsustainable in usual care. However, as SMART on FHIR applications become more widespread, data may be pushed and pulled between IT applications and the EMR.25,26,27

There is great promise in the areas of Maintenance and Sustainability. There was very low attrition at the clinic and provider levels. Clinicians and patients found benefit from the platform based on post-MeTree surveys and qualitative reports from research staff at the various sites. Progress has been made in developing bidirectional data flow between MeTree and Epic, which supports the potential for fuller integration and increased usability for clinicians and patients.

There are limitations to our study. We did not always have a complete data set for all comparisons, particularly for race in clinic patient populations, potentially introducing bias of unknown direction into our analysis. Given the IT barriers cited, the entirely electronic process, from consent through intervention, may have affected both uptake and progression by patients, particularly those with lower education and lower comfort with IT. If systematic risk assessment is incorporated into routine care, some of these barriers may be lessened by removing the electronic research consent and survey data collection steps. At least one example supports this: when North Shore embedded an FHH risk assessment tool into its patient portal, there was rapid uptake and strong support from its patient population (personal communication with William Knaus, 19 June 2015). Three-month post-MeTree surveys were sent only to patient-participants who completed MeTree, which may skew the results toward more favorable conclusions. As is true in much of health research, there was a nonminority bias in our enrollment, which may limit generalizability.28,29

This study is the first to demonstrate that FHH-based risk assessment programs with CDS can be implemented successfully across a diversity of healthcare systems and patient populations. This information coupled with previously documented evidence of clinical effectiveness should help drive the wider uptake of systematic FHH-based risk assessment within clinical care.11,12,13,30,31 Differences in uptake and completion by patient demographic require further research to minimize any potential healthcare disparities. This should especially be considered given that those at greatest risk for disparities were among those who reported greatest benefit from their use of MeTree. Further evaluation of the barriers and understanding of how to make risk assessment more accessible to all will be a part of ongoing and future studies.

References

Geng EH, Peiris D, Kruk ME. Implementation science: relevance in the real world without sacrificing rigor. PLoS Med. 2017;14:e1002288.

Carroll JC, Campbell-Scherer D, Permaul JA, et al. Assessing family history of chronic disease in primary care: Prevalence, documentation, and appropriate screening. Can Fam Physician. 2017;63:e58–e67.

Suther S, Goodson P. Barriers to the provision of genetic services by primary care physicians: a systematic review of the literature. Genet Med. 2003;5:70–76.

Wu RR, Himmel TL, Buchanan AH, et al. Quality of family history collection with use of a patient facing family history assessment tool. BMC Fam Pract. 2014;15:31.

Orlando LA, Wu RR, Beadles C, et al. Implementing family health history risk stratification in primary care: impact of guideline criteria on populations and resource demand. Am J Med Genet C Semin Med Genet. 2014;166C:24–33.

Acton RT, Burst NM, Casebeer L, et al. Knowledge, attitudes, and behaviors of Alabama’s primary care physicians regarding cancer genetics. Acad Med. 2000;75:850–2.

Barrison AF, Smith C, Oviedo J, et al. Colorectal cancer screening and familial risk: a survey of internal medicine residents’ knowledge and practice patterns. Am J Gastroenterol. 2003;98:1410–6.

Gramling R, Nash J, Siren K, et al. Family physician self-efficacy with screening for inherited cancer risk. Ann Fam Med. 2004;2:130–2.

Rich EC, Burke W, Heaton CJ, et al. Reconsidering the family history in primary care. J Gen Intern Med. 2004;19:273–80.

Cohn WF, Ropka ME, Pelletier SL, et al. Health Heritage, a web-based tool for the collection and assessment of family health history: initial user experience and analytic validity. Public Health Genomics. 2010;13:477–91.

Hulse NC, Ranade-Kharkar P, Post H, et al. Development and early usage patterns of a consumer-facing family health history tool. AMIA Annu Symp Proc. 2011;2011:578–87.

Beadles CA, Ryanne Wu R, Himmel T, et al. Providing patient education: impact on quantity and quality of family health history collection. Fam Cancer. 2014;13:325–32.

Orlando LA, Wu RR, Myers RA, et al. Clinical utility of a web-enabled risk-assessment and clinical decision support program. Genet Med. 2016;18:1020–8.

Orlando LA, Hauser ER, Christianson C, et al. Protocol for implementation of family health history collection and decision support into primary care using a computerized family health history system. BMC Health Serv Res. 2011;11:264.

Wu RR, Orlando LA, Himmel TL, et al. Patient and primary care provider experience using a family health history collection, risk stratification, and clinical decision support tool: a type 2 hybrid controlled implementation-effectiveness trial. BMC Fam Pract. 2013;14:111.

Wu RR, Myers RA, McCarty CA, et al. Protocol for the “Implementation, adoption, and utility of family history in diverse care settings” study. Implement Sci. 2015;10:163.

Weitzel KW, Alexander M, Bernhardt BA, et al. The IGNITE network: a model for genomic medicine implementation and research. BMC Med Genomics. 2016;9:1.

Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89:1322–7.

Weiner BJ. A theory of organizational readiness for change. Implement Sci. 2009;4:67.

Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21.

Shea CM, Jacobs SR, Esserman DA, et al. Organizational readiness for implementing change: a psychometric assessment of a new measure. Implement Sci. 2014;9:7.

Gaglio B, Shoup JA, Glasgow RE. The RE-AIM framework: a systematic review of use over time. Am J Public Health. 2013;103:e38–46.

Bates D, Mächler M, Bolker B, et al. Fitting linear mixed-effects models using lme4. J Stat Softw. 2015;67:1–48.

Gamer M, Lemon J, Fellows I, Singh P. irr: various coefficients of interrater reliability and agreement (version 0.83). 2010. http://CRAN.R-project.org/package=irr.

Alterovitz G, Warner J, Zhang P, et al. SMART on FHIR Genomics: facilitating standardized clinico-genomic apps. J Am Med Inform Assoc. 2015;22:1173–8.

Mandel JC, Kreda DA, Mandl KD, et al. SMART on FHIR: a standards-based, interoperable apps platform for electronic health records. J Am Med Inform Assoc. 2016;23:899–908.

Warner JL, Rioth MJ, Mandl KD, et al. SMART precision cancer medicine: a FHIR-based app to provide genomic information at the point of care. J Am Med Inform Assoc. 2016;23:701–10.

Yancey AK, Ortega AN, Kumanyika SK. Effective recruitment and retention of minority research participants. Annu Rev Public Health. 2006;27:1–28.

Quay TA, Frimer L, Janssen PA, et al. Barriers and facilitators to recruitment of South Asians to health research: a scoping review. BMJ Open. 2017;7:e014889.

Baumgart LA, Postula KJV, Knaus WA. Initial clinical validation of Health Heritage, a patient-facing tool for personal and family history collection and cancer risk assessment. Fam Cancer. 2016;15:331–9.

Ozanne EM, Loberg A, Hughes S, et al. Identification and management of women at high risk for hereditary breast/ovarian cancer syndrome. Breast J. 2009;15:155–62.

Acknowledgements

This study was funded by National Institutes of Health (NIH) grant no. 1 U01 HG007282. The funder had no involvement in the design, conduct, data collection, analysis, or manuscript preparation. Corrine Voils’ effort on this study was supported by a Research Career Scientist award from the Department of Veterans Affairs (RCS 14-443). This study was approved by the institutional review boards of all four participating institutions and the funders. The views expressed are those of the authors and do not reflect the position of the Department of Veterans Affairs or the United States Government.

Ethics declarations

Disclosure

Drs. Wu, Orlando, and Ginsburg have a potential conflict of interest. They recently formed a company, MeTree&You, that will provide MeTree as a clinical service. The other authors declare no conflicts of interest.

About this article

Cite this article

Wu, R.R., Myers, R.A., Sperber, N. et al. Implementation, adoption, and utility of family health history risk assessment in diverse care settings: evaluating implementation processes and impact with an implementation framework. Genet Med 21, 331–338 (2019). https://doi.org/10.1038/s41436-018-0049-x

DOI: https://doi.org/10.1038/s41436-018-0049-x
