Abstract

In an uncertain world, the ability to predict and update the relationships between environmental cues and outcomes is a fundamental element of adaptive behaviour. This type of learning is typically thought to depend on prediction error, the difference between expected and experienced events, which in the reward domain has been closely linked to mesolimbic dopamine. There is also increasing behavioural and neuroimaging evidence that disruption to this process may be a cross-diagnostic feature of several neuropsychiatric and neurological disorders in which dopamine is dysregulated. However, the precise relationship between haemodynamic measures, dopamine and reward-guided learning remains unclear. To help address this issue, we used a translational technique, oxygen amperometry, to record haemodynamic signals in the nucleus accumbens (NAc) and orbitofrontal cortex (OFC) while freely moving rats performed a probabilistic Pavlovian learning task. Using a model-based analysis approach to account for individual variations in learning, we found that the oxygen signal in the NAc correlated with a reward prediction error, whereas in the OFC it correlated with an unsigned prediction error or salience signal. Furthermore, an acute dose of amphetamine, creating a hyperdopaminergic state, disrupted rats’ ability to discriminate between cues associated with either a high or a low probability of reward and concomitantly corrupted prediction error signalling. These results demonstrate parallel but distinct prediction error signals in NAc and OFC during learning, both of which are affected by psychostimulant administration. They also establish the viability of tracking and manipulating haemodynamic signatures of reward-guided learning observed in human fMRI studies by using a proxy signal for BOLD in a freely behaving rodent.


Introduction

The world is an uncertain place, in which animals must continuously adapt their behaviour to promote survival. Learning to predict the relationship between environmental cues and significant events is a critical element of adaptive behaviour. It is hypothesised that adaptive behaviour depends upon comparing neural representations of cue-evoked expectations with the events that actually occur. A mismatch between these two representations is defined as a prediction error and is likely a vital substrate by which the accuracy of ensuing predictions about cue–event relationships can be improved. Prediction errors related to receipt of reward have been strongly associated with dopaminergic neurons and their projections to frontostriatal circuits [1,2,3,4]. In rodents and humans, presentation of reward-predicting cues increases dopaminergic neuron activity and dopamine release in terminal regions in proportion to the expected value of the upcoming reward, and the response when the reward is actually delivered reflects the deviation from that expectation [5,6,7,8,9,10,11,12]. Furthermore, experimental disruption of dopaminergic transmission can impair formation of appropriate cue–reward associations [13,14,15].

From a human perspective, elements of reward learning can be disrupted in a variety of neuropsychiatric conditions in which dopaminergic dysfunction may play a central role [16,17,18,19]. For example, patients with major depressive disorder or schizophrenia can be insensitive to reward and display impairments in reward-learning behaviours [20,21,22,23,24]. Neuroimaging studies suggest that activation of parts of ventral striatum and frontal cortex, or changes in functional connectivity with these regions, may be an important neurophysiological correlate of reward-learning impairments [24,25,26,27,28,29]. However, not all studies show the same pattern of changes (e.g., ref. [30]), and there is still uncertainty over whether the blunting of neural responses reflects a primary aetiology in these disorders. While the potential links between dopaminergic dysregulation, disrupted neural signatures of reward-guided learning and neuropsychiatric symptoms are apparent, strong direct evidence is currently lacking.

To help bridge this gap, we used constant-potential amperometry to monitor haemodynamic responses simultaneously in the nucleus accumbens (NAc) and orbitofrontal cortex (OFC) in rats performing a reward-driven, probabilistic Pavlovian learning task. Both NAc and OFC receive dopaminergic input and have previously been implicated in representing the expected value of a cue to guide reward-learning behaviour [31, 32]. Amperometric tissue oxygen (TO2) signals likely originate from the same physiological mechanisms as fMRI BOLD signals [33,34,35], allowing cross-species comparisons of behaviourally driven haemodynamic signals in awake animals. The aim of this study was to define amperometric signatures of cue-evoked expectation of reward and prediction error in these regions and then investigate how they are modulated by administration of amphetamine. Amphetamine is known to modify physiological dopamine signalling [36], and in humans, even a single dose of a stimulant like methamphetamine can cause an increase in mild psychotic symptoms [37, 38]. While amphetamine can promote behavioural approach to rewarded cues [39], it can also impair conditional discrimination performance [40] and the influence of probabilistic cue–reward associations on subsequent decision-making [41]. We hypothesised that amphetamine would disrupt discriminative responses to cues during performance of a probabilistic Pavlovian task, with concomitant changes to the haemodynamic correlates of reward expectation and prediction error in the NAc and OFC.

Methods

See the Supplementary Information (SI) for detailed methods.

Animals

All experiments were conducted in accordance with the United Kingdom Animals (Scientific Procedures) Act 1986. Adult male Sprague Dawley rats (Charles River, UK) were used in the present studies (n = 36). Four animals did not contribute to the behavioural dataset owing to poor O2 calibration responses, and data from a further two animals could not be included owing to a computer error. During testing, rats were maintained at >85% of their free-feeding weight relative to their normal growth curve. Prior to the start of any training or testing, all animals underwent surgical procedures under general anaesthesia to implant carbon paste electrodes targeted bilaterally at the NAc and OFC.

O2 amperometry data recording

O2 signals were recorded from the NAc and OFC by using constant-potential amperometry (–650 mV applied for the duration of the session) as described previously [35, 42].

Probabilistic Pavlovian conditioning task

The task was a probabilistic Pavlovian learning task performed in standard operant chambers. Each trial consisted of a 10-s presentation of one of two auditory cues (3-kHz pure tone at 77 dB or 100-Hz clicker at 76 dB) followed immediately by either delivery or omission of reward (4 × 45 mg of sucrose food pellets). One of the auditory cues (CSHigh) was followed by reward delivery on 75% of trials, the other (CSLow) was rewarded on 25% of trials. Each session consisted of a total of 56 cue presentations, with an average intertrial interval of 45 s (range 30–60 s). Standard training took place over nine sessions and session 10 consisted of the drug challenge (see Fig. S1).
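A single session of this schedule can be sketched in Python. This is a minimal illustration, not the authors' task code: it assumes 28 presentations of each cue per session, an exact 21/28 and 7/28 reward split per cue (rather than an independent draw on every trial) and intertrial intervals drawn uniformly from 30–60 s so that their mean is about 45 s. The names `make_session` and `Trial` are hypothetical.

```python
import random
from dataclasses import dataclass

@dataclass
class Trial:
    cue: str        # "CSHigh" or "CSLow"
    rewarded: bool  # True if the 4 x 45 mg sucrose pellets are delivered at cue offset
    iti_s: float    # intertrial interval preceding this cue, in seconds

def make_session(n_trials=56, p_high=0.75, p_low=0.25,
                 iti_range=(30.0, 60.0), seed=None):
    """Hypothetical generator for one session of the probabilistic Pavlovian task.

    Assumes equal numbers of CSHigh and CSLow trials and an exact reward
    proportion per cue (outcomes sampled without replacement), with ITIs
    drawn uniformly from iti_range.
    """
    rng = random.Random(seed)
    n_per_cue = n_trials // 2
    trials = []
    for cue, p in (("CSHigh", p_high), ("CSLow", p_low)):
        n_rew = round(p * n_per_cue)
        outcomes = [True] * n_rew + [False] * (n_per_cue - n_rew)
        trials.extend(Trial(cue, r, 0.0) for r in outcomes)
    rng.shuffle(trials)                  # randomise cue order and outcome order together
    for t in trials:
        t.iti_s = rng.uniform(*iti_range)
    return trials

session = make_session(seed=1)
n_high = sum(t.cue == "CSHigh" for t in session)
rew_high = sum(t.rewarded for t in session if t.cue == "CSHigh")
print(len(session), n_high, rew_high)  # 56 28 21
```

Fixing the reward proportion within a session keeps the experienced contingency equal to the nominal 75%/25%; drawing each outcome independently would be the other obvious design and would let the experienced proportion drift from session to session.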

Pharmacological manipulations

D-amphetamine sulfate (Sigma, UK) was dissolved in 5% (w/v) glucose solution, and pH adjusted towards neutral with the dropwise addition of 1 M NaOH as necessary. Amphetamine was dosed at 1 mg/kg (free weight) via the intraperitoneal route.
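As a worked example of the dose arithmetic, assuming “free weight” means that the dose is expressed as amphetamine free base, the mass of sulfate salt to weigh out follows from the molar masses of the base (C9H13N, about 135.21 g/mol) and of the salt ((C9H13N)2·H2SO4, about 368.49 g/mol). The 1 ml/kg injection volume and the 350 g body weight below are illustrative assumptions, not values from the text.

```python
M_AMPH_BASE = 135.21   # amphetamine free base, C9H13N (g/mol)
M_H2SO4 = 98.07        # sulfuric acid (g/mol)
# The sulfate salt carries two amphetamine molecules per H2SO4.
M_AMPH_SULFATE = 2 * M_AMPH_BASE + M_H2SO4   # about 368.49 g/mol

def salt_dose_mg_per_kg(base_dose_mg_per_kg):
    """Mass of amphetamine sulfate (mg/kg) that delivers a given free-base dose."""
    return base_dose_mg_per_kg * M_AMPH_SULFATE / (2 * M_AMPH_BASE)

def salt_mg_per_ml(base_dose_mg_per_kg, volume_ml_per_kg=1.0):
    """Salt concentration for an assumed injection volume (1 ml/kg by default)."""
    return salt_dose_mg_per_kg(base_dose_mg_per_kg) / volume_ml_per_kg

print(round(salt_dose_mg_per_kg(1.0), 3))         # 1.363
print(round(salt_dose_mg_per_kg(1.0) * 0.35, 3))  # 0.477 mg of salt for a hypothetical 350 g rat
```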

Behavioural modelling

Head entries during the 10-s cue presentation were modelled using variations of a Rescorla–Wagner model (Rescorla & Wagner 1972). We started with a model with a single free parameter, the learning rate α, and compared it against models that also included free parameters specifying (a) cue-specific learning rates (i.e., a cue salience term, β); (b) separate learning rates for rewarded (αpos) and nonrewarded (αneg) trials; and either (c) cue-independent (k) or (d) cue-specific (kClicker and kTone) unconditioned magazine responding. To capture additional trial-by-trial variance, we also included either trial-specific or recency-weighted pre-cue response rates. To compare the models, we used the Bayesian information criterion (BIC), which penalises the likelihood of a model by the number of parameters and the natural logarithm of the number of data points.
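The core of these models can be sketched as follows. The update rule is the standard Rescorla–Wagner prediction-error update with a cue-specific salience β; the observation model (value plus a cue-specific constant k plus a weighted, recency-averaged pre-cue rate), the Gaussian likelihood and the exponential recency weighting are assumptions made for illustration, since the exact likelihood is not stated here. All function names are hypothetical.

```python
import math

def rw_values(cues, rewards, alpha, beta):
    """Cue values V(t) under a Rescorla-Wagner rule with cue-specific salience.

    cues: cue label on each trial; rewards: 1 if rewarded, 0 if omitted.
    beta: dict mapping each cue to its salience term, which scales alpha.
    Returns the value of the presented cue before its outcome on each trial.
    """
    V = {c: 0.0 for c in set(cues)}
    values = []
    for cue, r in zip(cues, rewards):
        values.append(V[cue])                        # expectation at cue onset
        V[cue] += alpha * beta[cue] * (r - V[cue])   # prediction-error update
    return values

def recency_weighted(rates, decay):
    """Hypothetical exponentially weighted running average of pre-cue rates."""
    out, avg = [], 0.0
    for p in rates:
        avg = decay * avg + (1.0 - decay) * p
        out.append(avg)
    return out

def predicted_entries(values, cues, k, w, precue):
    """Assumed observation model: V(t) + k[cue] + w * pre-cue term."""
    return [v + k[c] + w * p for v, c, p in zip(values, cues, precue)]

def gaussian_loglik(observed, predicted):
    """Log-likelihood under Gaussian residuals with the ML variance estimate."""
    n = len(observed)
    rss = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    sigma2 = max(rss / n, 1e-12)   # guard against a perfect fit
    return -0.5 * n * (math.log(2 * math.pi * sigma2) + 1.0)

def bic(loglik, n_params, n_obs):
    """BIC = n_params * ln(n_obs) - 2 * ln L (lower is better)."""
    return n_params * math.log(n_obs) - 2.0 * loglik

cues = ["CSHigh", "CSLow", "CSHigh", "CSHigh"]
rewards = [1, 0, 1, 0]
V = rw_values(cues, rewards, alpha=0.5, beta={"CSHigh": 1.0, "CSLow": 1.0})
print(V)  # [0.0, 0.0, 0.5, 0.75]
```

Fitting would then minimise the negative log-likelihood over α, β, k and w for each rat (for example with a numerical optimiser) and compare the resulting BIC values across models, counting the residual variance as a parameter.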

Data analysis

Behaviour

We analysed the average number of head entries into the food magazine during presentation of either the CSHigh or CSLow cue across the 9 days of training, and then compared the pre-drug day (day 9 of training) with the drug challenge day.

Amperometry

We performed two sets of complementary analyses: (i) model-free analyses, where we investigated the average signals in NAc and OFC during cue presentation or in the 30 s after outcome delivery over the course of learning and after amphetamine administration, and (ii) model-based analyses where we regressed the same signals against estimates from our computational model using the model with the lowest BIC score (Fig. 1d).
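The window-based summary used in the model-free analyses can be sketched as an area under the curve of the event-locked TO2 trace. The windows follow the figure legends (5–10 s after cue onset; 30 s after the outcome). Referencing each trace to its value at the event sample is an assumption loosely based on the outcome signals being “normalised to the time of outcome”, and the function names are hypothetical.

```python
import numpy as np

def window_auc(trace, fs, event_idx, start_s, stop_s, ref_idx=None):
    """Trapezoidal area of an event-locked trace between start_s and stop_s.

    trace: 1-D TO2 signal; fs: sampling rate (Hz); event_idx: sample index of
    cue onset or outcome. The trace is referenced to its value at ref_idx
    (default: the event sample), a hypothetical normalisation choice.
    """
    trace = np.asarray(trace, dtype=float)
    ref = trace[event_idx if ref_idx is None else ref_idx]
    a = event_idx + int(round(start_s * fs))
    b = event_idx + int(round(stop_s * fs))
    if b >= len(trace):
        raise ValueError("analysis window runs past the end of the recording")
    seg = trace[a:b + 1] - ref
    # Trapezoidal rule with uniform sample spacing 1/fs
    return (seg.sum() - 0.5 * (seg[0] + seg[-1])) / fs

def cue_auc(trace, fs, cue_idx):
    return window_auc(trace, fs, cue_idx, 5.0, 10.0)      # 5-10 s after cue onset

def outcome_auc(trace, fs, outcome_idx):
    return window_auc(trace, fs, outcome_idx, 0.0, 30.0)  # 30 s after the outcome

# A linear ramp sampled at 10 Hz: the integral of t from 5 to 10 s is 37.5
ramp = np.arange(400) / 10.0
print(cue_auc(ramp, 10.0, 0))
```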

Results

Behavioural performance during probabilistic learning

We trained rats on a two-cue probabilistic Pavlovian learning paradigm. One cue (CSHigh) was associated with reward delivery on 75% of trials and the other (CSLow) on 25% of trials (Fig. 1a). Animals learned to discriminate between the cues, increasingly making magazine responses during presentation of the CSHigh but showing little change in behaviour upon presentation of CSLow as training progressed (main effect of CS: F1,27 = 39.92, p < 0.001; CS × day interaction: F2.63,70.92 = 6.12, p = 0.001) (Fig. 1b, Fig. S2). Unexpectedly, however, there was also a substantial and consistent influence of the counterbalancing assignment on responding (CS × cue identity interaction: F1,27 = 48.17, p < 0.001). Specifically, follow-up pairwise comparisons showed that the animals in Group 1, in which CSHigh was the clicker cue and CSLow the pure tone (“CL1–T2”), exhibited strong discrimination between the cues throughout training (p < 0.001; Fig. 1c). By contrast, rats in Group 2, with the opposite CS–auditory cue assignment (“T1–CL2”), did not show differential responding to the cues in spite of the different reward associations (p = 0.66).

Fig. 1 Task, behavioural performance and modelling. a Schematic of the Pavlovian task. b, c Average head entries (mean ± SEM) to the magazine during presentation of each cue or during the pre-cue baseline period across the nine sessions (b, all animals; c, Group CL1–T2 only, where the CSHigh was the clicker and CSLow was the tone; d, Group T1–CL2 only, where the CSHigh was the tone and CSLow the clicker). d Bayesian information criterion (BIC, a measure of the goodness of fit of the model) estimates for different learning models. The BIC penalises the likelihood of a model by the number of parameters and the natural logarithm of the number of data points. The model with the lowest BIC score was deemed to give the best fit to the data. Note, however, that the pattern of results in the model-based analyses of amperometric signals remained unchanged if we used any of the three models that fitted best for a number of individual rats (models a, c and f) or even if we used a standard Rescorla–Wagner-type learning model (model s). An “x” in the table indicates the presence of the given component in the model. Numbers within each bar indicate the number of animals for which the given model had the lowest BIC. e Example of the model fits for two animals from the two counterbalancing groups

To better understand how cue identity was influencing this pattern of responding, we formally analysed how well different simple reinforcement learning models could describe individual rats’ Pavlovian behaviour. The preferred model included a cue salience parameter, a cue-specific unconditioned magazine responding term and a recency-weighted pre-cue responding parameter (Fig. 1d). In particular, the constant term attributable to unconditioned cue-elicited magazine responding was higher on clicker than on tone trials (Z = 3.98, p < 0.001, Wilcoxon signed-rank test; p < 0.015 for Group 1 or 2 analysed separately). Therefore, once the difference in cue attributes was accounted for, rats’ behaviour could be well explained by this modified simple reinforcement learning model (Fig. 1e).

Both NAc and OFC haemodynamic signals track Pavlovian responding

We examined how TO2 responses in NAc and OFC (Fig. 2) tracked animals’ learning of the appetitive associations and violations of their expectations. After exclusions for misplaced electrodes and poor quality of signals (see Supplementary Methods, Fig. S3), 40 electrodes in 20 rats were included for analysis (NAc = 25 electrodes from 15 rats, OFC = 15 electrodes from 11 rats).

We initially performed model-free analyses to investigate the TO2 signals in response to presentation of the CSHigh and CSLow cues as the rats learned the reward associations.

TO2 responses during presentation of the cues changed markedly over training in a similar manner in both brain regions (main effects of cue and session: both F > 9.49, p < 0.001) (Fig. 3a, b). In fact, analysis of the subset of animals with functional electrodes recorded simultaneously in NAc and OFC (n = 6 rats) showed a significant positive correlation between the signals recorded in each area (r2 = 0.41, p < 0.01). Moreover, mirroring the behavioural data, the patterns of responses differed substantially according to the cue identity (CL1–T2 or T1–CL2). While the TO2 response following the CSHigh developed similarly in both groups, there was a substantial difference in the CSLow response, with average signals when the clicker was the CSLow being significantly higher than when the tone was the CSLow (Cue × cue identity interaction: F1,36 = 18.67, p < 0.001; CL1–T2 vs. T1–CL2, CSHigh: p = 0.32, CSLow: p < 0.001).

Fig. 3 Haemodynamic correlates during cue presentation. a TO2 responses on 3 sample days, time-locked to cue presentation in the two counterbalance groups, recorded from either NAc (upper panels) or OFC (lower panels). b Average area-under-the-curve responses (mean ± SEM) extracted from 5 to 10 s after cue onset for each cue across the nine sessions. c Average effect sizes in NAc (left panel) and OFC (right panel) from a general linear model relating TO2 responses to trial-by-trial estimates of the expected value associated with each cue. Main plots include all animals; insets show the analyses divided into the two cue identity groups

To establish the relationship between development of magazine responding and the TO2 signals, we regressed the trial-by-trial TO2 responses against the estimates of cue value, V(t), from the model that best fitted the behavioural data, and found a significant positive relationship in both regions in both groups (Fig. 3c). This was not simply a correlate of invigorated responding, as cue value was a significantly better predictor than trial-by-trial magazine head entries (Fig. S4). Therefore, once differences in cue identity are accounted for, it is possible to demonstrate that TO2 signals in both NAc and OFC track the expected value associated with each cue.
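A regression of this kind can be sketched for a single electrode as an ordinary least-squares fit of the event-locked TO2 epochs on the model-derived V(t) at every time point, giving an effect-size time course. Z-scoring the regressors and using plain least squares are assumptions; group-level statistics across electrodes are not shown, and `timecourse_betas` is a hypothetical name.

```python
import numpy as np

def timecourse_betas(epochs, regressors):
    """Standardised regression weights of TO2 on model regressors at each time point.

    epochs: (n_trials, n_timepoints) event-locked TO2 traces for one electrode.
    regressors: (n_trials,) or (n_trials, n_regressors), e.g. V(t) on each trial.
    Returns an array of shape (n_regressors, n_timepoints); the intercept is dropped.
    """
    X = np.asarray(regressors, dtype=float)
    if X.ndim == 1:
        X = X[:, None]
    X = (X - X.mean(axis=0)) / X.std(axis=0)       # z-score each regressor
    X = np.column_stack([np.ones(X.shape[0]), X])  # add an intercept column
    Y = np.asarray(epochs, dtype=float)
    betas, *_ = np.linalg.lstsq(X, Y, rcond=None)  # one OLS fit per time point
    return betas[1:]

# Simulated example: 50 trials, 20 samples, TO2 rising linearly with V(t)
V = np.linspace(0.0, 1.0, 50)
epochs = 3.0 + 2.0 * V[:, None] * np.ones((1, 20))
b = timecourse_betas(epochs, V)
print(b.shape)
```

The peak (or windowed mean) of each electrode's effect-size time course could then be carried forward to a group-level test.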

Separate haemodynamic signatures of signed and unsigned prediction errors in NAc and OFC

We next investigated how the probabilistic delivery or omission of reward shaped TO2 responses in NAc and OFC, and how these signals were modulated by cue-elicited reward expectations as learning progressed. As there were significant interactions of brain region with cue, training stage and their combination (all F > 3.76, p < 0.028), we analysed responses in the two regions separately.

We again first performed model-free analyses, focusing on how the average outcome-evoked changes in TO2 responses were influenced by the preceding cue and how these adapted over training. As can be seen in Fig. 4, the primary determinant of the signal change in the NAc was whether a reward was received (main effect of reward: F1,36 = 47.72, p < 0.001). However, the size of reward and no-reward signals, normalised to the time of outcome, depended on which cue had preceded the outcome, and these patterns altered as learning progressed (significant cue × outcome and cue × outcome × training-stage interactions, both F > 18.669, p < 0.001), suggesting a strong influence of expectation on TO2 responses. Follow-up comparisons showed that there was a reduction in reward-elicited TO2 responses on CSHigh trials as training progressed (CSHigh rew, stage 1 vs. stage 3: p = 0.016; stage 2 vs. stage 3: p = 0.065). Unexpectedly, there was also a diminution of omission-elicited reductions in TO2 responses on these trials (CSHigh no reward, stage 1 vs. stage 3: p = 0.014), which, as Fig. 4b shows, was particularly prominent in the CL1–T2 group. By contrast, on CSLow trials, there was no meaningful change in TO2 responses to delivery or omission of reward throughout training (all p > 0.37). As might be expected given its effect on behaviour and cue-elicited neural signals, cue identity again influenced outcome signals, resulting in a four-way interaction of all the factors, as well as two-way cue × identity and training stage × identity interactions (all F > 4.33, p < 0.019). Importantly, however, when analysed separately, both counterbalance groups showed the key cue × outcome × training-stage interaction (F > 4.84, p < 0.019).

Fig. 4 Haemodynamic correlates following outcome presentation. a, c TO2 responses on 3 sample days, time-locked to outcome presentation (reward or no reward) after each cue in the two cue identity groups, recorded from either NAc (a) or OFC (c). b, d Average area-under-the-curve responses (mean ± SEM) extracted from the 30 s following the outcome after each cue across the nine sessions (NAc, b; OFC, d). e Average effect sizes in NAc (left panel) or OFC (right panel) from a general linear model relating TO2 responses to trial-by-trial estimates of the expected value associated with each cue and trial outcome (reward or no reward). Main plots include all animals; insets show the analyses divided into the two cue identity groups

In the OFC, outcome was also a strong influence on TO2 responses (F1,13 = 51.18, p < 0.001) and this was again shaped by the preceding cue (cue × outcome interaction: F1,13 = 5.37, p = 0.037). However, unlike in NAc, there came to be an increasingly strong TO2 response when reward was omitted, particularly after CSHigh (Fig. 4c, d). This resulted in a cue × training-stage interaction (F1,13 = 5.37, p = 0.037; CSHigh vs. CSLow, p = 0.51 for the early training stage, p < 0.001 for mid- and late stages). While there were qualitative differences in responses in the two counterbalance groups, none of the interactions with this factor or the main effect reached significance (all p > 0.069).

While these model-free analyses illustrate that the TO2 responses changed dynamically and differently over the course of training in the two brain regions, they do not clearly show whether either response might encode a teaching signal useful for learning, such as a reward PE: δ(t) = V(t) + r(t) − V(t − 1). Therefore, we next used model-based analyses to examine whether there was a relationship between TO2 responses across all sessions and the fundamental components of a reward PE: (i) a positive influence of outcome, r(t), and (ii) a negative influence of model-derived cue value, −V(t − 1). While both NAc and OFC TO2 responses showed a strong positive influence of outcome, only the NAc signals fulfilled both criteria of a reward PE by also exhibiting a significant negative influence of cue value; in OFC, by contrast, the cue value effect was positive (Fig. 4e). We also examined whether reward PE-like TO2 responses in NAc were present throughout training. Correlates of both positive and negative reward PEs in NAc were observed in rats that were still learning the cue–reward associations, but once these associations were established, only positive reward PEs remained evident (Fig. S5).
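The logic of testing the two components separately, rather than regressing the signal on δ(t) alone, can be sketched as follows. At the outcome the trial ends, so the post-outcome value V(t) is taken as zero and δ(t) reduces to r(t) − V(t − 1). A reward PE signal should then carry a positive weight on r(t) and a negative weight on V(t − 1), whereas a purely outcome-driven signal would satisfy only the first criterion. This is a hypothetical sketch on simulated data with plain least squares; in the study the weights were assessed across animals rather than by their sign in a single fit.

```python
import numpy as np

def signed_pe(cue_values, rewards):
    """delta(t) = V(t) + r(t) - V(t-1) with the post-outcome value V(t) set to 0."""
    return np.asarray(rewards, float) - np.asarray(cue_values, float)

def rpe_signature(outcome_response, cue_values, rewards):
    """Fit response ~ 1 + r(t) + V(t-1) and check the two reward-PE criteria."""
    r = np.asarray(rewards, float)
    v = np.asarray(cue_values, float)
    X = np.column_stack([np.ones_like(r), r, v])
    b, *_ = np.linalg.lstsq(X, np.asarray(outcome_response, float), rcond=None)
    beta_r, beta_v = b[1], b[2]
    # Criterion (i): positive outcome weight; criterion (ii): negative value weight
    return beta_r, beta_v, bool(beta_r > 0 and beta_v < 0)

rng = np.random.default_rng(0)
v = rng.uniform(0.0, 1.0, 200)                       # simulated cue values V(t-1)
r = (rng.uniform(0.0, 1.0, 200) < v).astype(float)   # outcomes drawn with p = V
nac_like = 0.2 + 1.5 * r - 0.8 * v                   # an idealised reward-PE signal
print(rpe_signature(nac_like, v, r))
```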

Although the OFC TO2 responses do not correspond to a reward PE, the patterns of signals nonetheless still dynamically change over learning. As previous work has suggested that OFC neurons may signal the salience of the outcome for learning, we examined whether TO2 responses instead correlated with how unexpected each outcome was, corresponding to an unsigned PE. This analysis showed that each animal’s trial-by-trial unsigned PE had a strong positive influence on OFC signals (Fig. S6). Again, this was present in both counterbalance groups.
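The unsigned PE is simply the magnitude of the signed PE, |r(t) − V(t − 1)|. The hypothetical sketch below shows why the two can dissociate: in a balanced set of trials with cue values of 0.75 and 0.25, a signal that tracks pure surprise has a slope of 1 on the unsigned PE and no linear relationship with the signed PE. The cue values are illustrative and are not model estimates from the study.

```python
import numpy as np

def prediction_errors(cue_values, rewards):
    """Return the signed PE r - V and the unsigned PE |r - V| at outcome."""
    signed = np.asarray(rewards, float) - np.asarray(cue_values, float)
    return signed, np.abs(signed)

def ols_slope(y, x):
    """Least-squares slope of y on x, fitted with an intercept."""
    x = np.asarray(x, float)
    X = np.column_stack([np.ones_like(x), x])
    b, *_ = np.linalg.lstsq(X, np.asarray(y, float), rcond=None)
    return b[1]

# Illustrative trials: CSHigh rewarded, CSHigh omitted, CSLow rewarded, CSLow omitted
values = [0.75, 0.75, 0.25, 0.25]
rewards = [1, 0, 1, 0]
signed, unsigned = prediction_errors(values, rewards)
surprise_signal = unsigned.copy()             # an idealised OFC-like salience response
print(ols_slope(surprise_signal, unsigned))   # approximately 1.0
print(ols_slope(surprise_signal, signed))     # approximately 0.0
```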

Therefore, while the changes in NAc TO2 responses reflect how much better or worse an outcome was than expected, OFC TO2 responses indicate how surprising either was.

Amphetamine disrupts cue-specific value encoding and prediction errors

Having established haemodynamic PE correlates in NAc and OFC, we next wanted to investigate how an acute dose of amphetamine (1 mg/kg), an indirect sympathomimetic known to potentiate dopamine release, influenced cue value and prediction error TO2 responses.

We first analysed baseline magazine responding in the pre-drug and drug administration sessions. Although amphetamine caused a numerical increase in baseline responding, this was variable between animals (7/15 rats given amphetamine showed a substantial increase in baseline magazine response rates, whereas the other 8/15 showed a decrease), and the drug × session interaction did not reach significance (F1,26 = 3.71, p = 0.065). By contrast, there was a substantial and consistent change in cue-elicited responses (cue × session × drug interaction: F1,26 = 16.31, p < 0.001). This was not caused by differences between the drug groups on the pre-drug session (no main effect or interaction with drug group: all F < 1.21, p > 0.28). Instead, as can be observed in Fig. 5a, while both the vehicle and amphetamine groups responded more on average to the CSHigh than the CSLow on the pre-drug day (p < 0.003), this discrimination was abolished after administration of the drug (CSHigh vs. CSLow: p = 0.35) but not of the vehicle (p < 0.001). Note that while there were again some differences between the counterbalance groups (cue × session × drug × identity interaction: F1,26 = 6.30, p = 0.019), the effects of amphetamine administration were comparable in both groups (amphetamine group: significant cue × session interaction, F1,13 = 9.047, p = 0.01; no significant cue × session × identity interaction, F1,13 = 2.71, p = 0.12).

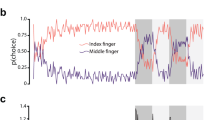

Fig. 5 Effect of acute amphetamine administration on cue-elicited behaviour and haemodynamic signals. a Average head entries (mean ± SEM) to the magazine during presentation of each cue or during the pre-cue baseline period in the session before drug administration (“Pre”) and in the drug administration session (“Drug”) in the groups receiving vehicle or amphetamine (1 mg/kg). b TO2 responses time-locked to cue presentation recorded from either NAc (upper panels) or OFC (lower panels) in the pre-drug or drug administration sessions. Note that differences in the pre-drug patterns of signals in the vehicle and amphetamine groups mainly reflect the unbalanced assignment of included animals from the two cue identity groups. c Average area-under-the-curve responses (mean ± SEM) extracted from 5 to 10 s after cue onset for each cue in the two sessions. d TO2 responses time-locked to outcome presentation (reward or no reward) after each cue in the two cue identity groups recorded from either NAc (upper panels) or OFC (lower panels) in the pre-drug or drug administration sessions. e Average area-under-the-curve responses (mean ± SEM) extracted from the 30 s following the outcome after each cue in the two sessions

Administration of amphetamine also had a pronounced but specific effect on TO2 responses. During the cue period, the effect in both NAc and OFC mirrored the effect of the drug on behaviour, with amphetamine abolishing the distinction between the average TO2 response elicited by presentation of the CSHigh or CSLow (cue × session × drug: F1,32 = 6.22, p = 0.018; CSHigh vs. CSLow, amphetamine group drug session, p = 0.25; all other p < 0.006) (Fig. 5b, c, S7A). While there were still notable effects of cue identity on signals, follow-up comparisons found that there were no reliable differences in TO2 responses between the different cue configurations in either group or session (all p > 0.27).

Based on the differences between outcome-elicited signals in NAc and OFC observed during training, we analysed the outcome-elicited TO2 data separately for each region. In the NAc, there was a significant cue × session × outcome × drug interaction (F1,21 = 4.82, p = 0.04). We focused follow-up analyses on each drug group separately, without cue identity as a between-subjects factor, because the NAc electrode exclusion criteria inadvertently biased the distribution of rats assigned to the drug and vehicle groups as a function of cue identity (χ2 = 9.4, df = 3, p = 0.024) (see Fig. S7 for breakdown by counterbalance group).
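The reported p-value can be checked from the χ2 statistic and its degrees of freedom alone. The sketch below computes the chi-square upper-tail probability for an integer number of degrees of freedom from closed-form identities of the regularised incomplete gamma function, using only the standard library; it makes no assumption about how the underlying contingency table was constructed, and `chi2_sf` is a hypothetical name.

```python
import math

def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with integer df."""
    if df < 1 or int(df) != df:
        raise ValueError("df must be a positive integer")
    h = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-h) * sum_{k < df/2} h**k / k!
        return math.exp(-h) * sum(h ** k / math.factorial(k) for k in range(df // 2))
    # Odd df: start from the df = 1 tail, erfc(sqrt(h)), and add half-integer terms
    tail = math.erfc(math.sqrt(h))
    for k in range(1, (df - 1) // 2 + 1):
        tail += math.exp(-h) * h ** (k - 0.5) / math.gamma(k + 0.5)
    return tail

print(round(chi2_sf(9.4, 3), 3))  # 0.024
```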

While vehicle injections caused no changes in NAc signals (no main effect or interaction with session: all F < 1.4, p > 0.33), amphetamine had a marked influence on outcome-elicited TO2 responses, selectively blunting CSLow outcome responses (cue × session × outcome interaction: F1,12 = 22.07, p = 0.001; CSLow reward or no reward: pre-drug vs. drug session, p < 0.005; CSHigh, all p > 0.22). This meant that on amphetamine, there was now no reliable distinction between reward-evoked TO2 signals based on the preceding cue (p = 0.08; all other CSHigh vs. CSLow comparisons, p < 0.015) (Fig. 5d, e). Consistent with this, we also found a significant reduction in the relationship between TO2 responses and positive reward prediction errors selectively after amphetamine (comparison of peak effect size on and off drug: session × drug interaction: F1,21 = 8.02, p = 0.01; pre-drug vs. drug session, amphetamine group: p = 0.003; vehicle: p = 0.66) (note, we did not analyse the negative RPE as this was already largely absent in the pre-drug session in animals showing strong discrimination between the CSHigh and CSLow).

In OFC, there was also a significant change in TO2 responses when comparing outcome-elicited signals on the drug session with the pre-drug day (significant cue × session × drug and cue × session × outcome × drug interactions: both F > 5.35, p < 0.042). In the control group, vehicle injections caused a general increase in all the OFC TO2 responses (main effect of testing session: F1,6 = 7.51, p = 0.034). By contrast, in the amphetamine group, there was a striking reduction in OFC TO2 signals, particularly those elicited by CSHigh cues (main effect of testing session: F1,5 = 5.12, p = 0.073; significant cue × session interaction: F1,5 = 29.527, p = 0.003). An analysis of the unsigned prediction error signal also resulted in a session × drug interaction (F1,11 = 6.63, p = 0.026), though this was driven both by a numerical decrease in the regression weight in the amphetamine group and by an increase in the regression weight in the vehicle group.

Taken together, therefore, amphetamine impaired the discriminative influence of CSHigh and CSLow cues on behaviour and also corrupted the influence of these cue-based predictions on NAc and OFC TO2 responses.

Discussion

The results presented here show that haemodynamic signals in NAc and OFC dynamically track expectation of reward as rats form associations between cues with high or low probability of reward outcome. Importantly, both regions also displayed distinct forms of haemodynamic prediction error signal. NAc signals were shaped by reward expectation and the specific valence of the reward outcome, while in contrast OFC signals did not discriminate the valence of reward outcome, but rather reflected how surprising either reward outcome was. A single dose of amphetamine, sufficient to modulate dopamine activity, caused a loss of discrimination between cues that was evident both behaviourally and in the haemodynamic signatures of reward expectation and prediction error in both regions.

These results extend a previous study of instrumental learning in which increases in NAc TO2 were observed as rats learned to associate a deterministic cue with receipt of reward upon pressing a lever [35]. The present probabilistic learning study allowed a formal assessment of whether the measured TO2 signals displayed features that would categorise them as encoding reward prediction errors (RPEs). To be considered an RPE signal, three cardinal features should be measurable: (i) a positive influence of expected reward value on cue-elicited signals (i.e., a greater response to a cue that is thought to predict a higher reward), (ii) a positive influence of actual reward delivered (i.e., a greater response when a high-value reward is actually delivered compared with when it is omitted) and (iii) a negative influence of expected reward value on outcome-elicited signals (i.e., a larger response to reward delivery the less that reward is expected and/or a smaller response to reward omission the more that reward is expected) [43, 44]. Using behavioural modelling, we found all three features in the NAc TO2 signal, consistent with a number of human fMRI studies of reward-guided learning in healthy subjects [43,44,45,46]. Although human fMRI studies predominantly use secondary reinforcers such as money to incentivise performance, similar RPE-like activations in NAc are also observed in studies using primary fluid reinforcers in lightly food/water-restricted participants, which more closely mimic the means by which rats are motivated to perform the present task (see ref. [47]).

While both positive and negative RPE-like TO2 signals were evident across the whole learning period, it was clear that the influence of each signal changed over time. Both positive and negative RPEs were evident early in learning. However, as discrimination between high and low reward probability cues was learned, negative RPEs had an increasingly negligible influence on NAc haemodynamic signals. Such adaptation has resonance with a previous finding in humans that NAc BOLD signals are not observed for every RPE event, but only those currently relevant to guide future behaviour [46]. The selective involvement of NAc in signalling whether an event is better or worse than expected fits well with the hypothesised roles of the extensive dopaminergic projections to this region, and suggests a fundamental role for NAc in sustaining approach responses to reward-associated cues [48, 49].

It has been demonstrated that dopamine release in the core region of the NAc correlates with an RPE and, similar to the signals observed here, changes dynamically over the course of learning [9, 10, 14, 50, 51] (see ref. [52] for a different interpretation). The amperometry electrodes in the current study were largely in caudal parts of ventral NAc, spanning the core and ventral shell regions. Given that the electrodes are estimated to be sensitive to changes in signal over approximately a 400-μm sphere around the electrode [53, 54], it is plausible that the signals we recorded here could have been influenced by RPE-like patterns of dopamine release [55]. Several recent papers have shown that optogenetic stimulation of dopamine neurons can have widespread influence on forebrain BOLD signals [56,57,58]. However, direct evidence for this link is currently lacking, and a recent study comparing patterns of BOLD signals with dopamine release in humans found indications of uncoupling between the measures [59]. Therefore, it is also conceivable that the NAc haemodynamic signals we observed here instead reflect afferent input from regions such as medial frontal cortex, where RPE-like signals have also been recorded [44, 60, 61].

By contrast, OFC TO2 signals did not respond to prediction error events in quite the same way as NAc signals, and they did not meet all three criteria required to be considered a formal correlate of an RPE. While some studies have found RPE-like outcome signals in OFC [62, 63], several others, including fMRI studies that adopted the stringent criteria applied here, have not (e.g., refs. [44, 64, 65]). Like NAc signals, OFC TO2 signals reflected reward expectations when the cues were presented. This is consistent with previous fMRI and electrophysiological studies suggesting that central or lateral OFC may represent stimulus–reward mappings during cue presentation (e.g., refs. [66,67,68]). However, unlike NAc, the OFC signals measured in the present study tended to increase following reward omission as well as after reward delivery, and this increase scaled with how surprising the reward omission was. Electrophysiological studies suggest that similar proportions of OFC cells exhibit either positive or negative relationships with value, and that individual neurons can encode both positively and negatively valenced information at outcome [69, 70].

These outcome-driven signals did, however, correlate with an unsigned prediction error: how surprising or salient an outcome is, given current expectations. An increasing number of studies implicate OFC in modulating salience for the purposes of learning [71,72,73,74]. However, given the precise pattern of OFC signals observed in the current study, the OFC TO2 responses might reflect the acquired salience of an outcome (e.g., refs. [71, 75]), which represents both how surprising and how rewarding it is. Even though such responses do not signal whether an outcome is better or worse than expected, they are still important for guiding the rate of learning or reallocating attention. Single-site lesion studies demonstrate a role for OFC, as well as for NAc, in aspects of stimulus–reward learning [76, 77]. However, any specific interaction between these regions that supports these behaviours must depend on another mediating region, as there is no direct projection between the two [78].
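The difference between the signed RPE attributed to NAc and the unsigned prediction error attributed to OFC can be made concrete for a single trial. The minimal sketch below assumes a simple delta-rule definition, δ = r − V, with reward coded as 1 and omission as 0. The cue values of 0.75 and 0.25 are hypothetical and are not the task's actual reward probabilities or fitted estimates. The function name `prediction_errors` is likewise illustrative.

```python
def prediction_errors(value: float, rewarded: bool) -> tuple[float, float]:
    """Return (signed RPE, unsigned PE) for one outcome.

    Assumes reward is coded as 1.0 and omission as 0.0, and that the
    cue's current value estimate lies between 0 and 1.
    """
    outcome = 1.0 if rewarded else 0.0
    rpe = outcome - value      # signed: better (+) or worse (-) than expected
    return rpe, abs(rpe)       # unsigned: how surprising, regardless of sign


# Hypothetical cue values, for illustration only.
cue_values = {"CSHigh": 0.75, "CSLow": 0.25}

for cue, value in cue_values.items():
    for rewarded in (True, False):
        rpe, unsigned = prediction_errors(value, rewarded)
        label = "reward" if rewarded else "omission"
        print(f"{cue:6s} {label:8s} RPE={rpe:+.2f} unsigned={unsigned:.2f}")
```

Under these assumptions, an omission after CSHigh gives a large negative RPE (−0.75) but a large unsigned error (0.75). An RPE-like signal should therefore dip after a surprising omission, whereas a salience-like signal should rise, and the rise should be larger the more surprising the omission is.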

One unexpected but fortuitous finding was that TO2 signals related to magazine responding were strongly influenced by cue identity. A comparison of different behavioural models showed that this effect was best explained by adding two parameters to a simple reinforcement learning model. The first was a cue salience parameter, which scaled the influence of the RPE on future value estimates according to which auditory cue had been presented. The second was a cue “arousal” parameter, which was a constant term applied from the start of training. It has long been established that cue salience can be an important determinant of learning rates (e.g., refs. [79,80,81]). Salience is a standard term in the Rescorla–Wagner model and other influential models of associative learning, capturing the observation that more salient or intense cues are learned about faster, and are more readily discriminated, than weaker or less salient cues. However, an extra parameter was also needed because, in the majority of animals, responding to the clicker was greater than responding to the tone at the start of testing, irrespective of whether the clicker was assigned to be CSHigh or CSLow. We do not know the precise reason for this effect and are not aware of it being reported in previous studies. One speculation is that the rats partially generalised from the clicker cue to the sound of the pellet dispenser. Regardless of its cause, by using model-derived estimates of value we were nonetheless able to observe identical neural correlates of reward prediction and prediction error in both cue-counterbalanced groups.
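The two extra parameters can be illustrated with a minimal sketch. It assumes a Rescorla–Wagner-style delta rule in which the per-cue salience multiplies a shared learning rate, and in which the per-cue arousal term is a fixed offset added to the predicted response. The class `CueModel`, its field names and all parameter values are hypothetical, and the published model's exact form may differ.

```python
from dataclasses import dataclass, field


@dataclass
class CueModel:
    """Hypothetical delta-rule model with cue-specific salience and arousal.

    alpha:    shared learning rate (assumed).
    salience: per-cue multiplier on the RPE; keeping alpha * salience in
              (0, 1] keeps values between 0 and 1.
    arousal:  per-cue constant added to the response predictor from trial 1.
    """
    alpha: float
    salience: dict[str, float]
    arousal: dict[str, float]
    values: dict[str, float] = field(default_factory=dict)

    def predicted_response(self, cue: str) -> float:
        # Assumed: responding reflects learned value plus a fixed cue offset.
        return self.values.get(cue, 0.0) + self.arousal.get(cue, 0.0)

    def update(self, cue: str, rewarded: bool) -> float:
        """Apply one trial's outcome and return the signed RPE."""
        value = self.values.get(cue, 0.0)
        rpe = (1.0 if rewarded else 0.0) - value
        # Salience scales how strongly this cue's RPE updates its value.
        self.values[cue] = value + self.alpha * self.salience[cue] * rpe
        return rpe


model = CueModel(
    alpha=0.5,
    salience={"clicker": 1.0, "tone": 0.5},  # hypothetical: clicker learned faster
    arousal={"clicker": 0.2, "tone": 0.0},   # hypothetical: clicker starts higher
)
print(model.predicted_response("clicker"), model.predicted_response("tone"))  # 0.2 0.0
model.update("clicker", rewarded=True)
model.update("tone", rewarded=True)
print(model.values)  # {'clicker': 0.5, 'tone': 0.25}
```

The two parameters act differently. Salience changes how fast each cue's value is learned. Arousal shifts responding to a cue before any learning has occurred, which is the pattern the extra parameter was introduced to capture.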

Moreover, across both groups, a single “moderate/high” dose of amphetamine (1 mg/kg) [82] at the end of learning, mimicking a dopamine hyperactivity state, was sufficient both to impair discriminative behavioural responses to the high and low probability cues and to disrupt haemodynamic signals in NAc and OFC. This was not simply a blunt pharmacological influence on neurovascular coupling, because the signal change was specific to certain conditions. For instance, amphetamine selectively reduced the NAc reward signals on CSLow trials but left the reward and omission responses on CSHigh trials unaltered.

This may at first appear at odds with previous studies showing that this dose of amphetamine can selectively augment responding to reward-predictive cues over neutral cues, enhance phasic dopamine release and neuronal activity in the NAc [36, 39], and enhance neuronal activity in OFC [83]. However, there are a number of important differences between these previous studies and the one reported here.

First, in our paradigm, the cues were probabilistically rewarded, meaning that both were associated with some level of reward expectation and both elicited conditioned approach. A previous study has shown that the same dose of amphetamine as used in the current study can disrupt conditional discrimination performance [40]. Second, as discussed above, increased dopamine release may not map directly onto comparable haemodynamic changes. Indeed, the dose of amphetamine used in the current experiment would be expected to boost phasic dopamine release to reward-predicting cues [36]. Even so, fMRI studies have tended to observe a blunting of haemodynamic responses to such cues in NAc and frontal cortex after a single dose of amphetamine or methamphetamine, comparable to what we observed in both NAc and OFC [37, 84]. In addition, one of these studies [37] reported a loss of RPE encoding in NAc, an effect that was also evident in the current study. Amphetamine is known to increase levels of dopamine, and of other monoamines, in both a stimulus-independent and a stimulus-driven manner. The critical factor for appropriate responding is therefore likely to rest on the balance between these two elements in frontal–striatal–limbic circuits, and the disrupted haemodynamic signalling of incentive predictions and prediction errors that we recorded may reflect this. From the present data, it is unclear whether amphetamine directly corrupts the calculation of RPEs or instead primarily disrupts the inputs used to calculate them, such as cue-elicited reward expectation.

The changes in haemodynamic responses observed after amphetamine administration here are also consistent with an increasingly large body of fMRI studies of reward-guided learning in patients with symptoms believed to arise in part from dysregulated dopamine, such as psychosis and anhedonia (see refs. [16, 19, 25, 85] for reviews). This has raised the possibility that changes in behaviour and brain responses during reward anticipation and reinforcement learning might act as a cross-diagnostic preclinical translational biomarker [16, 86]. In parallel, there has been increased interest in using these types of findings as foundations for theoretical approaches that link the underlying biological dysfunctions to the symptoms observed in patients (e.g., refs. [21, 87,88,89]).

While there is general consensus about the promise of such approaches, the literature is complicated by the diversity of the disorders and of the drug regimens that patients have taken. This makes it difficult to test specific causal hypotheses about the relationship between altered brain function and psychiatric symptoms. By contrast, in an animal model, it is possible to have precise control over, and measurement of, induced changes in neurobiology. Two steps may therefore help to bridge the gaps in our understanding: establishing that these signatures can be observed in a freely behaving rodent using a valid proxy for fMRI, and demonstrating how clinically relevant pharmacological perturbations affect them. This foundation could be combined with other causal manipulations, such as pharmacological or genetic animal models relevant to psychiatric disorders. It could also be combined with more sophisticated behavioural tasks that take into account different learning strategies (e.g., refs. [90, 91]) and value parameters [7, 92]. Together, these could provide new opportunities for understanding how dysfunctional neurotransmission is reflected in changes in haemodynamic signatures, and how both relate to behavioural performance.

Funding and disclosure

This work was funded by a Lilly Research Award Program grant to MEW and GG, and a Wellcome Trust Senior Research Fellowship to MEW (202831/Z/16/Z). JRH, HMM and GG declare that they are employees of Eli Lilly & Co Ltd.; JF, FG and MDT were employees of Eli Lilly & Co at the time of the research. JF is now an employee of Vertex Pharmaceuticals (Europe) and FG is an employee of H. Lundbeck A/S. EW, SLS and MEW have no competing interests to declare.

References

Watabe-Uchida M, Eshel N, Uchida N. Neural circuitry of reward prediction error. Annu Rev Neurosci 2017;40:373–94.

Montague PR, Dayan P, Sejnowski TJ. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J Neurosci 1996;16:1936–47.

Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science 1997;275:1593–9.

Walton ME, Bouret S. What is the relationship between dopamine and effort? Trends Neurosci 2018;42:79–91.

Cohen JY, Haesler S, Vong L, Lowell BB, Uchida N. Neuron-type-specific signals for reward and punishment in the ventral tegmental area. Nature 2012;482:85–8.

Tobler PN, Fiorillo CD, Schultz W. Adaptive coding of reward value by dopamine neurons. Science 2005;307:1642–5.

Gan JO, Walton ME, Phillips PE. Dissociable cost and benefit encoding of future rewards by mesolimbic dopamine. Nat Neurosci 2010;13:25–7.

Kishida KT, Saez I, Lohrenz T, Witcher MR, Laxton AW, Tatter SB, et al. Subsecond dopamine fluctuations in human striatum encode superposed error signals about actual and counterfactual reward. Proc Natl Acad Sci USA 2016;113:200–5.

Hart AS, Rutledge RB, Glimcher PW, Phillips PE. Phasic dopamine release in the rat nucleus accumbens symmetrically encodes a reward prediction error term. J Neurosci 2014;34:698–704.

Saddoris MP, Cacciapaglia F, Wightman RM, Carelli RM. Differential dopamine release dynamics in the nucleus accumbens core and shell reveal complementary signals for error prediction and incentive motivation. J Neurosci 2015;35:11572–82.

Ellwood IT, Patel T, Wadia V, Lee AT, Liptak AT, Bender KJ, et al. Tonic or phasic stimulation of dopaminergic projections to prefrontal cortex causes mice to maintain or deviate from previously learned behavioral strategies. J Neurosci 2017;37:8315–29.

Zaghloul KA, Blanco JA, Weidemann CT, McGill K, Jaggi JL, Baltuch GH, et al. Human substantia nigra neurons encode unexpected financial rewards. Science 2009;323:1496–9.

Saunders BT, Robinson TE. The role of dopamine in the accumbens core in the expression of Pavlovian-conditioned responses. Eur J Neurosci 2012;36:2521–32.

Flagel SB, Clark JJ, Robinson TE, Mayo L, Czuj A, Willuhn I, et al. A selective role for dopamine in stimulus-reward learning. Nature 2011;469:53–7.

Parkinson JA, Dalley JW, Cardinal RN, Bamford A, Fehnert B, Lachenal G, et al. Nucleus accumbens dopamine depletion impairs both acquisition and performance of appetitive Pavlovian approach behaviour: implications for mesoaccumbens dopamine function. Behav Brain Res 2002;137:149–63.

Deserno L, Schlagenhauf F, Heinz A. Striatal dopamine, reward, and decision making in schizophrenia. Dialogues Clin Neurosci 2016;18:77–89.

Maia TV, Frank MJ. An integrative perspective on the role of dopamine in schizophrenia. Biol Psychiatry 2017;81:52–66.

Garcia-Garcia I, Zeighami Y, Dagher A. Reward prediction errors in drug addiction and Parkinson's disease: from neurophysiology to neuroimaging. Curr Neurol Neurosci Rep 2017;17:46.

Zald DH, Treadway MT. Reward processing, neuroeconomics, and psychopathology. Annu Rev Clin Psychol 2017;13:471–95.

Murray GK, Corlett PR, Clark L, Pessiglione M, Blackwell AD, Honey G, et al. Substantia nigra/ventral tegmental reward prediction error disruption in psychosis. Mol Psychiatry 2008;13:239, 267–76.

Huys QJ, Pizzagalli DA, Bogdan R, Dayan P. Mapping anhedonia onto reinforcement learning: a behavioural meta-analysis. Biol Mood Anxiety Disord 2013;3:12.

Pizzagalli DA, Iosifescu D, Hallett LA, Ratner KG, Fava M. Reduced hedonic capacity in major depressive disorder: evidence from a probabilistic reward task. J Psychiatr Res 2008;43:76–87.

Dowd EC, Frank MJ, Collins A, Gold JM, Barch DM. Probabilistic reinforcement learning in patients with schizophrenia: relationships to anhedonia and avolition. Biol Psychiatry Cogn Neurosci Neuroimaging 2016;1:460–73.

Kumar P, Goer F, Murray L, Dillon DG, Beltzer ML, Cohen AL, et al. Impaired reward prediction error encoding and striatal-midbrain connectivity in depression. Neuropsychopharmacology 2018;43:1581–88.

Radua J, Schmidt A, Borgwardt S, Heinz A, Schlagenhauf F, McGuire P, et al. Ventral striatal activation during reward processing in psychosis: a neurofunctional meta-analysis. JAMA Psychiatry 2015;72:1243–51.

Rausch F, Mier D, Eifler S, Esslinger C, Schilling C, Schirmbeck F, et al. Reduced activation in ventral striatum and ventral tegmental area during probabilistic decision-making in schizophrenia. Schizophr Res 2014;156:143–9.

Rothkirch M, Tonn J, Kohler S, Sterzer P. Neural mechanisms of reinforcement learning in unmedicated patients with major depressive disorder. Brain 2017;140:1147–57.

Gradin VB, Kumar P, Waiter G, Ahearn T, Stickle C, Milders M, et al. Expected value and prediction error abnormalities in depression and schizophrenia. Brain 2011;134:1751–64.

Morris RW, Vercammen A, Lenroot R, Moore L, Langton JM, Short B, et al. Disambiguating ventral striatum fMRI-related BOLD signal during reward prediction in schizophrenia. Mol Psychiatry 2012;17:235, 280–9.

Rutledge RB, Moutoussis M, Smittenaar P, Zeidman P, Taylor T, Hrynkiewicz L, et al. Association of neural and emotional impacts of reward prediction errors with major depression. JAMA Psychiatry 2017;74:790–97.

Oades RD, Halliday GM. Ventral tegmental (A10) system: neurobiology. 1. Anatomy and connectivity. Brain Res 1987;434:117–65.

Swanson LW. The projections of the ventral tegmental area and adjacent regions: a combined fluorescent retrograde tracer and immunofluorescence study in the rat. Brain Res Bull 1982;9:321–53.

Lowry JP, Griffin K, McHugh SB, Lowe AS, Tricklebank M, Sibson NR. Real-time electrochemical monitoring of brain tissue oxygen: a surrogate for functional magnetic resonance imaging in rodents. NeuroImage 2010;52:549–55.

Li J, Schwarz AJ, Gilmour G. Relating translational neuroimaging and amperometric endpoints: utility for neuropsychiatric drug discovery. Curr Top Behav Neurosci 2016;28:397–421.

Francois J, Huxter J, Conway MW, Lowry JP, Tricklebank MD, Gilmour G. Differential contributions of infralimbic prefrontal cortex and nucleus accumbens during reward-based learning and extinction. J Neurosci 2014;34:596–607.

Daberkow DP, Brown HD, Bunner KD, Kraniotis SA, Doellman MA, Ragozzino ME, et al. Amphetamine paradoxically augments exocytotic dopamine release and phasic dopamine signals. J Neurosci 2013;33:452–63.

Bernacer J, Corlett PR, Ramachandra P, McFarlane B, Turner DC, Clark L, et al. Methamphetamine-induced disruption of frontostriatal reward learning signals: relation to psychotic symptoms. Am J Psychiatry 2013;170:1326–34.

Curran C, Byrappa N, McBride A. Stimulant psychosis: systematic review. Br J Psychiatry 2004;185:196–204.

Wan X, Peoples LL. Amphetamine exposure enhances accumbal responses to reward-predictive stimuli in a pavlovian conditioned approach task. J Neurosci 2008;28:7501–12.

Dunn MJ, Futter D, Bonardi C, Killcross S. Attenuation of d-amphetamine-induced disruption of conditional discrimination performance by alpha-flupenthixol. Psychopharmacology 2005;177:296–306.

St Onge JR, Chiu YC, Floresco SB. Differential effects of dopaminergic manipulations on risky choice. Psychopharmacology 2010;211:209–21.

Francois J, Conway MW, Lowry JP, Tricklebank MD, Gilmour G. Changes in reward-related signals in the rat nucleus accumbens measured by in vivo oxygen amperometry are consistent with fMRI BOLD responses in man. NeuroImage 2012;60:2169–81.

Behrens TE, Hunt LT, Woolrich MW, Rushworth MF. Associative learning of social value. Nature 2008;456:245–9.

Rutledge RB, Dean M, Caplin A, Glimcher PW. Testing the reward prediction error hypothesis with an axiomatic model. J Neurosci 2010;30:13525–36.

Niv Y, Edlund JA, Dayan P, O'Doherty JP. Neural prediction errors reveal a risk-sensitive reinforcement-learning process in the human brain. J Neurosci 2012;32:551–62.

Klein-Flugge MC, Hunt LT, Bach DR, Dolan RJ, Behrens TE. Dissociable reward and timing signals in human midbrain and ventral striatum. Neuron 2011;72:654–64.

Chase HW, Kumar P, Eickhoff SB, Dombrovski AY. Reinforcement learning models and their neural correlates: an activation likelihood estimation meta-analysis. Cogn Affect Behav Neurosci 2015;15:435–59.

Parkinson JA, Olmstead MC, Burns LH, Robbins TW, Everitt BJ. Dissociation in effects of lesions of the nucleus accumbens core and shell on appetitive pavlovian approach behavior and the potentiation of conditioned reinforcement and locomotor activity by D-amphetamine. J Neurosci 1999;19:2401–11.

du Hoffmann J, Nicola SM. Dopamine invigorates reward seeking by promoting cue-evoked excitation in the nucleus accumbens. J Neurosci 2014;34:14349–64.

Hart AS, Clark JJ, Phillips PEM. Dynamic shaping of dopamine signals during probabilistic Pavlovian conditioning. Neurobiol Learn Mem 2015;117:84–92.

Day JJ, Roitman MF, Wightman RM, Carelli RM. Associative learning mediates dynamic shifts in dopamine signaling in the nucleus accumbens. Nat Neurosci 2007;10:1020–8.

Hamid AA, Pettibone JR, Mabrouk OS, Hetrick VL, Schmidt R, Vander Weele CM, et al. Mesolimbic dopamine signals the value of work. Nat Neurosci 2016;19:117–26.

McHugh SB, Marques-Smith A, Li J, Rawlins JN, Lowry J, Conway M, et al. Hemodynamic responses in amygdala and hippocampus distinguish between aversive and neutral cues during Pavlovian fear conditioning in behaving rats. Eur J Neurosci 2013;37:498–507.

Li J, Bravo DS, Louise Upton A, Gilmour G, Tricklebank MD, Fillenz M, et al. Close temporal coupling of neuronal activity and tissue oxygen responses in rodent whisker barrel cortex. Eur J Neurosci 2011;34:1983–96.

Knutson B, Gibbs SE. Linking nucleus accumbens dopamine and blood oxygenation. Psychopharmacology 2007;191:813–22.

Lohani S, Poplawsky AJ, Kim SG, Moghaddam B. Unexpected global impact of VTA dopamine neuron activation as measured by opto-fMRI. Mol Psychiatry 2017;22:585–94.

Decot HK, Namboodiri VM, Gao W, McHenry JA, Jennings JH, Lee SH, et al. Coordination of brain-wide activity dynamics by dopaminergic neurons. Neuropsychopharmacology 2017;42:615–27.

Ferenczi EA, Zalocusky KA, Liston C, Grosenick L, Warden MR, Amatya D, et al. Prefrontal cortical regulation of brainwide circuit dynamics and reward-related behavior. Science 2016;351:aac9698.

Lohrenz T, Kishida KT, Montague PR. BOLD and its connection to dopamine release in human striatum: a cross-cohort comparison. Philos Trans R Soc Lond B Biol Sci 2016;371:20150352.

Kennerley SW, Behrens TE, Wallis JD. Double dissociation of value computations in orbitofrontal and anterior cingulate neurons. Nat Neurosci 2011;14:1581–9.

Warren CM, Hyman JM, Seamans JK, Holroyd CB. Feedback-related negativity observed in rodent anterior cingulate cortex. J Physiol Paris 2015;109:87–94.

Sul JH, Kim H, Huh N, Lee D, Jung MW. Distinct roles of rodent orbitofrontal and medial prefrontal cortex in decision making. Neuron 2010;66:449–60.

O'Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron 2003;38:329–37.

Rohe T, Weber B, Fliessbach K. Dissociation of BOLD responses to reward prediction errors and reward receipt by a model comparison. Eur J Neurosci 2012;36:2376–82.

Stalnaker TA, Liu TL, Takahashi YK, Schoenbaum G. Orbitofrontal neurons signal reward predictions, not reward prediction errors. Neurobiol Learn Mem 2018;153:137–43.

Klein-Flugge MC, Barron HC, Brodersen KH, Dolan RJ, Behrens TE. Segregated encoding of reward-identity and stimulus-reward associations in human orbitofrontal cortex. J Neurosci 2013;33:3202–11.

Kahnt T, Heinzle J, Park SQ, Haynes JD. Decoding the formation of reward predictions across learning. J Neurosci 2011;31:14624–30.

Schoenbaum G, Chiba AA, Gallagher M. Orbitofrontal cortex and basolateral amygdala encode expected outcomes during learning. Nat Neurosci 1998;1:155–9.

Morrison SE, Salzman CD. The convergence of information about rewarding and aversive stimuli in single neurons. J Neurosci 2009;29:11471–83.

Kennerley SW, Wallis JD. Evaluating choices by single neurons in the frontal lobe: outcome value encoded across multiple decision variables. Eur J Neurosci 2009;29:2061–73.

Ogawa M, van der Meer MA, Esber GR, Cerri DH, Stalnaker TA, Schoenbaum G. Risk-responsive orbitofrontal neurons track acquired salience. Neuron 2013;77:251–8.

Harle KM, Zhang S, Ma N, Yu AJ, Paulus MP. Reduced neural recruitment for bayesian adjustment of inhibitory control in methamphetamine dependence. Biol Psychiatry Cogn Neurosci Neuroimaging 2016;1:448–59.

McDannald MA, Esber GR, Wegener MA, Wied HM, Liu TL, Stalnaker TA, et al. Orbitofrontal neurons acquire responses to ‘valueless’ Pavlovian cues during unblocking. eLife 2014;3:e02653.

Schiller D, Weiner I. Lesions to the basolateral amygdala and the orbitofrontal cortex but not to the medial prefrontal cortex produce an abnormally persistent latent inhibition in rats. Neuroscience 2004;128:15–25.

Esber GR, Haselgrove M. Reconciling the influence of predictiveness and uncertainty on stimulus salience: a model of attention in associative learning. Proc Biol Sci 2011;278:2553–61.

Chudasama Y, Robbins TW. Dissociable contributions of the orbitofrontal and infralimbic cortex to pavlovian autoshaping and discrimination reversal learning: further evidence for the functional heterogeneity of the rodent frontal cortex. J Neurosci 2003;23:8771–80.

Everitt BJ, Parkinson JA, Olmstead MC, Arroyo M, Robledo P, Robbins TW. Associative processes in addiction and reward. The role of amygdala-ventral striatal subsystems. Ann N Y Acad Sci 1999;877:412–38.

Schilman EA, Uylings HB, Galis-de Graaf Y, Joel D, Groenewegen HJ. The orbital cortex in rats topographically projects to central parts of the caudate-putamen complex. Neurosci Lett 2008;432:40–5.

Pavlov IP. Conditioned Reflexes. Oxford: Oxford University Press; 1927.

Mackintosh NJ. Overshadowing and stimulus intensity. Anim Learn Behav 1976;4:186–92.

Jakubowska E, Zielinski K. Differentiation learning as a function of stimulus intensity and previous experience with the CS. Acta Neurobiol Exp 1976;36:427–46.

Grilly DM, Loveland A. What is a "low dose" of d-amphetamine for inducing behavioral effects in laboratory rats? Psychopharmacology 2001;153:155–69.

Homayoun H, Moghaddam B. Orbitofrontal cortex neurons as a common target for classic and glutamatergic antipsychotic drugs. Proc Natl Acad Sci USA 2008;105:18041–6.

Knutson B, Bjork JM, Fong GW, Hommer D, Mattay VS, Weinberger DR. Amphetamine modulates human incentive processing. Neuron 2004;43:261–9.

Heinz A, Schlagenhauf F. Dopaminergic dysfunction in schizophrenia: salience attribution revisited. Schizophr Bull 2010;36:472–85.

Gilmour G, Gastambide F, Marston HM, Walton ME. Using intermediate cognitive endpoints to facilitate translational research in psychosis. Curr Opin Behav Sci 2015;4:128–35.

Corlett PR, Fletcher PC. Computational psychiatry: a Rosetta Stone linking the brain to mental illness. Lancet Psychiatry 2014;1:399–402.

Maia TV, Huys QJM, Frank MJ. Theory-based computational psychiatry. Biol Psychiatry 2017;82:382–84.

Valton V, Romaniuk L, Douglas Steele J, Lawrie S, Series P. Comprehensive review: computational modelling of schizophrenia. Neurosci Biobehav Rev 2017;83:631–46.

Akam T, Costa R, Dayan P. Simple plans or sophisticated habits? State, transition and learning interactions in the two-step task. PLoS Comput Biol 2015;11:e1004648.

Miller KJ, Botvinick MM, Brody CD. Dorsal hippocampus contributes to model-based planning. Nat Neurosci 2017;20:1269–76.

Hollon NG, Arnold MM, Gan JO, Walton ME, Phillips PE. Dopamine-associated cached values are not sufficient as the basis for action selection. Proc Natl Acad Sci USA 2014;111:18357–62.

Acknowledgements

MEW, MDT, HMM and GG conceived the project; MEW, GG, FG and JF designed the experiment; JF and FG collected the data; JF performed the surgeries; EW, SLS, JRH and MEW analysed the data; and MEW prepared the paper with input from the other authors. We would like to thank David Bannerman for valuable advice throughout the project, Mike Conway for assistance with surgeries, and Thomas Akam, Miriam Klein-Flugge, Stephen McHugh and Marios Panayi for discussions about the modelling, interpretation and analyses. The study is dedicated to the memory of one of the authors, Soon-Lim Shin, a key contributor to the project who sadly died of cancer in 2017.


Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Deceased: Soon-Lim Shin


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Werlen, E., Shin, SL., Gastambide, F. et al. Amphetamine disrupts haemodynamic correlates of prediction errors in nucleus accumbens and orbitofrontal cortex. Neuropsychopharmacol. 45, 793–803 (2020). https://doi.org/10.1038/s41386-019-0564-8


DOI: https://doi.org/10.1038/s41386-019-0564-8