Abstract

The Allostatic Model proposes that Alcohol Use Disorder (AUD) is associated with a transition in the motivational structure of alcohol drinking: from positive reinforcement in early-stage drinking to negative reinforcement in late-stage dependence. However, direct empirical support for this preclinical model from human experiments is limited. This study tests predictions derived from the Allostatic Model in humans. Specifically, this study tested whether alcohol use severity (1) independently predicts subjective responses to alcohol (SR; comprising stimulation/hedonia, negative affect, sedation, and craving domains) and alcohol self-administration, and (2) moderates associations between domains of SR and alcohol self-administration. Heavy drinking participants ranging in severity of alcohol use and problems (N = 67) completed an intravenous alcohol administration paradigm combining an alcohol challenge (target BrAC = 60 mg%) with progressive ratio self-administration. Alcohol use severity was associated with greater baseline negative affect, sedation, and craving but did not predict changes in any SR domain during the alcohol challenge. Alcohol use severity also predicted greater self-administration. Craving during the alcohol challenge strongly predicted self-administration, and sedation predicted lower self-administration. Neither stimulation nor negative affect predicted self-administration. This study represents a novel approach to translating preclinical neuroscientific theories to the human laboratory. As expected, craving predicted self-administration and sedation was protective. Contrary to the predictions of the Allostatic Model, however, these results were inconsistent with a transition from positively to negatively reinforced alcohol consumption in severe AUD. Future studies that assess negative reinforcement in the context of an acute stressor are warranted.

Introduction

The translation of preclinical theories of alcoholism etiology to clinical samples is fundamental to understanding alcohol use disorders (AUD) and developing efficacious treatments [1]. Human subjects research is fundamentally limited in neurobiological precision and experimental control, whereas preclinical models permit fine-grained measurement of biological function. However, the concordance between preclinical models and human psychopathology is often evidenced by face validity alone. The aim of this study, therefore, is to test the degree to which one prominent preclinical model of alcoholism etiology, the Allostatic Model [2,3,4], predicts the behavior and affective responses of human subjects in an experimental pharmacology design. The Allostatic Model was selected for translational investigation due to its focus on reward and reinforcement mechanisms in early vs. late stages of addiction. In this study, we advance a novel translational human laboratory approach to assessing the relationship between alcohol-induced reward and motivated alcohol consumption.

A key prediction of the Allostatic Model is that chronic alcohol consumption results in a cascade of neuroadaptations, which ultimately blunt drinking-related hedonic reward and positive reinforcement, while simultaneously leading to the emergence of persistent elevations in negative affect, termed allostasis. Consequently, the model predicts that drinking in late-stage dependence should be motivated by the relief of withdrawal-related negative affect, and hence, by negative reinforcement mechanisms [2,3,4]. In other words, the Allostatic Model suggests a transition from reward to relief craving in drug dependence [5]. The Allostatic Model is supported by studies utilizing ethanol vapor paradigms in rodents that can lead to severe withdrawal symptoms, escalated ethanol self-administration, high motivation to consume the drug as revealed by progressive ratio breakpoints, enhanced reinstatement, and reduced sensitivity to punishment [2, 6, 7]. Diminished positive reinforcement in this model is inferred through examination of reward thresholds in an intracranial self-stimulation protocol [8, 9]. Critically, these allostatic neuroadaptations are hypothesized to persist beyond acute withdrawal, producing state changes in negative emotionality in protracted abstinence [4]. Supporting this hypothesis, exposure to chronic ethanol vapor produces substantial increases in ethanol consumption during both acute and protracted abstinence periods (e.g., [7, 10, 11]). Despite strong preclinical support, the Allostatic Model has not been validated in human populations with AUD.

Decades of human alcohol challenge research have demonstrated that individual differences in subjective responses to alcohol (SR) predict alcoholism risk [12, 13]. The Low Level of Response (LR) Model suggests that globally decreased sensitivity to alcohol predicts AUD [14,15,16]. Critically, however, research has demonstrated that SR is multi-dimensional [17,18,19]. The Differentiator Model as refined by King et al. [20] suggests that stimulatory and sedative dimensions of SR differentially predict alcoholism risk and binge drinking behavior. Specifically, an enhanced stimulatory and rewarding SR, particularly at peak BrAC, is associated with heavier drinking and more severe AUD prospectively [20, 21]. The Differentiator Model also suggests that blunted sedative SR is an AUD risk factor; however, effect sizes for sedation are generally smaller.

Both the LR and Differentiator models have garnered considerable empirical support in alcohol challenge research (for review and meta-analysis, see [13]); however, both models share some limitations. Human subjects research has not adequately tested whether SR represents a dynamic construct across the development of alcohol dependence and whether the motivational structure of alcohol consumption is altered in dependence vs. early non-dependent drinking. Recently, King et al. [22] reported that the elevated stimulating and rewarding SR in heavy (vs. light) drinkers remained elevated over a 5-year period. Furthermore, this outcome was particularly strong among the ~10% of heavy drinking participants who showed high levels of AUD progression.

In two previous alcohol challenge studies, we showed that stimulation/hedonia and craving are highly correlated among non-dependent heavy drinkers, whereas no stimulation-craving association was evidenced among alcohol dependent participants [23, 24]. These results were interpreted as being consistent with the Allostatic Model, insofar as the function of stimulation/hedonia in promoting craving appeared diminished in alcohol dependence. Of note, however, neither study observed the hypothesized relationship between negative affect and craving among dependent participants. A primary limitation of these previous studies was the utilization of craving as a proxy end point for alcohol motivation and reinforcement. A recent study of young heavy drinkers found that both stimulation and sedation predicted free-access self-administration via craving [25]. However, since this study did not include moderate–severe AUD participants, it is unclear whether the association between stimulation and self-administration is blunted in later-stage dependence.

This study was designed to test whether SR predicts motivated alcohol self-administration and whether this relationship is moderated by alcohol use severity, thus providing much needed insight about the function of SR in alcohol reinforcement and advancing an experimental framework for translational science. Heavy drinkers ranging in their severity of alcohol use and problems completed a novel intravenous (IV) alcohol administration session consisting of a standardized alcohol challenge followed by progressive-ratio alcohol reinforcement. On the basis of the Allostatic Model, we predicted a strong relationship between stimulation and self-administration at low alcohol use severity, whereas no such association would be observed at greater alcohol use severity. Conversely, it was hypothesized that negative affect would be a stronger predictor of alcohol self-administration among more severe participants. These two hypotheses would thus capture dependence-related blunting of positive reinforcement and enhancement of negative reinforcement.

Methods

Participants

This study was approved by the Institutional Review Board at UCLA. Non-treatment seeking drinkers were recruited between April 2015 and August 2016 from the Los Angeles community through fliers and online advertisements (compensation up to $270).

Initial eligibility screening was conducted via online and telephone surveys followed by an in-person screening session. After providing written informed consent, participants were breathalyzed, provided urine for toxicology screening, and completed a battery of self-report questionnaires and interviews. All participants were required to have a BrAC of 0 mg% and to test negative on a urine drug screen (except cannabis). Female participants were required to test negative on a urine pregnancy test.

Inclusion criteria were as follows: (1) age between 21 and 45, (2) Caucasian ethnicity (due to an exploratory genetic aim not reported here), (3) fluency in English, (4) current heavy alcohol use of 14+ drinks per week for men or 7+ for women, (5) if female, not pregnant or lactating, and using a reliable method of birth control (e.g., condoms), and (6) body weight of less than 265 lbs to reduce the likelihood of exhausting the alcohol supply during the infusion. Exclusion criteria were as follows: (1) treatment seeking for AUD, (2) current diagnosis of substance use disorder other than nicotine or alcohol, (3) lifetime diagnosis of moderate-to-severe substance use disorder other than nicotine, alcohol, or cannabis, (4) a diagnosis of bipolar disorder or any psychotic disorder, (5) current suicidal ideation, (6) current use of non-prescription drugs other than cannabis, (7) use of cannabis more than twice weekly, (8) clinically significant physical abnormalities as indicated by physical examination and liver functioning labs, (9) history of chronic medical conditions, such as hepatitis or a chronic liver disease, (10) current use of any psychoactive medications, such as antidepressants, mood stabilizers, sedatives, or stimulants, (11) score ≥ 10 on the Clinical Institute Withdrawal Assessment for Alcohol-Revised (CIWA-Ar [26]), indicating clinically significant alcohol withdrawal requiring medical management, and (12) fear of, or adverse reactions to, needle puncture.

Alcohol administration session

Participants arrived at the UCLA Clinical and Translational Research Center (CTRC) at approximately 10:30 AM. At intake, vitals, height, and weight were measured, and participants were provided with a standardized high caloric breakfast. IV lines were placed by a registered nurse at approximately 11:30 AM. After participants acclimated to the IV lines, they completed baseline assessments. The alcohol infusion paradigm began at approximately 12:00 PM and lasted 180 min. To ensure all participants were safe to discharge, and to disincentivize low levels of self-administration for early discharge, all participants were required to remain at the CTRC for at least 4 additional hours. Discharge occurred when participant BrAC fell below 40 mg%, or reached 0 mg% if they were driving.

Throughout the infusion, participants were seated in a comfortable chair in a private room. Participants were not able to view the infusion pump or technician’s screen. To control distractions, participants watched a movie (BBC’s Planet Earth). Study staff remained in the room to monitor the infusion, breathalyze the participant, take vital signs, administer questionnaires, and answer questions but they did not significantly engage with participants otherwise.

Alcohol infusion parameters

To enable precise control over BrAC and to dissociate biobehavioral responses to alcohol from responses to cues, alcohol was administered IV (6% ethanol v/v in saline) using a physiologically based pharmacokinetic model implemented in the Computerized Alcohol Infusion System (CAIS; [27,28,29,30]). CAIS estimates BrAC pseudo-continuously (30-s intervals) based on the infusion time course and the participant's sex, age, height, weight, and breathalyzer readings. CAIS was modified for this study to combine two alcohol administration paradigms: a 3-step standard alcohol challenge followed by self-administration. During the challenge, participants were administered alcohol designed to reach target BrACs of 20, 40, and 60 mg%, each over 15 min. BrACs were clamped at each target level while participants completed questionnaires (~5 min). This challenge procedure closely mirrors previous studies by our group (e.g., [23, 24]).

Following the 60 mg% time point and a required restroom break, participants began the self-administration paradigm. Participants were permitted to exert effort (pressing an electronic button) to obtain additional “drinks” through the CAIS system, according to a progressive ratio schedule. Participants were required to order one “drink” to familiarize themselves with the procedure (participants had previously viewed a demonstration). The progressive ratio was log-linear and determined through simulations and pilot testing. Ratio requirements ranged from 20 responses (1st completion) to 3139 responses (20th completion). Each “drink” increased BrAC by 7.5 mg% over 2.5 min, followed by a descent of −1 mg%/min [28]. A maximum BrAC safety limit was set at 120 mg%. If an infusion would exceed this limit, the response button was temporarily inactivated. Except for the first “drink”, participants were given no instruction with respect to their self-administration. After 180 min, the infusion ended, the IV line was removed, and participants were provided lunch.
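The schedule described above can be sketched as follows. This is an illustration only: the text gives the two endpoints (20 and 3139 responses) and states that the schedule was log-linear, but the intermediate ratio requirements were set through simulations and pilot testing and are not reported, so the geometric interpolation here is an assumption. The function names and the simple lockout check are hypothetical; the actual CAIS software projects BrAC with its pharmacokinetic model.

```python
import math

FIRST_RATIO = 20        # responses required for the 1st completion (from the text)
LAST_RATIO = 3139       # responses required for the 20th completion (from the text)
N_COMPLETIONS = 20
DRINK_INCREMENT = 7.5   # mg% rise in BrAC per "drink" (from the text)
SAFETY_LIMIT = 120.0    # mg% maximum BrAC (from the text)


def log_linear_schedule(first=FIRST_RATIO, last=LAST_RATIO, n=N_COMPLETIONS):
    """Ratio requirements spaced evenly on the log scale (assumed form)."""
    step = (math.log(last) - math.log(first)) / (n - 1)
    return [round(math.exp(math.log(first) + step * k)) for k in range(n)]


def button_active(current_brac):
    """Hypothetical lockout: inactive if one more drink would exceed the limit."""
    return current_brac + DRINK_INCREMENT <= SAFETY_LIMIT


schedule = log_linear_schedule()
```

Under this assumed form, each ratio requirement is roughly 1.3 times the previous one, so the effort required per "drink" grows multiplicatively across completions.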

Measures

Alcohol use severity measures

The Structured Clinical Interview for DSM-5 (SCID; adapted from [31]) assessed for lifetime and current AUD and the exclusionary psychiatric diagnoses. The CIWA-Ar assessed alcohol withdrawal severity [26]. A 30-day timeline follow-back (TLFB) assessed drinking quantity and frequency [32]. Participants also completed the Alcohol Dependence Scale (ADS; [33]), the Alcohol Use Disorders Identification Test (AUDIT; [34]), the Penn Alcohol Craving Scale (PACS; [35]), and the Obsessive Compulsive Drinking Scale (OCDS; [36]).

Other baseline measures

Cigarette and marijuana use were assessed using the TLFB [32]. Family history of alcohol-related problems was measured via a family tree questionnaire [37]. Depressive symptomatology was assessed via the Beck Depression Inventory-II [38].

Subjective Responses to Alcohol measures

Based on our previous factor analytic work, SR was assessed along four dimensions: stimulation/hedonia (stimulation), negative affect, sedation/motor intoxication (sedation), and craving [19]. Participants completed SR assessments at baseline, 20, 40, and 60 mg% time points during the challenge. Stimulation included the Biphasic Alcohol Effects Scale Stimulation subscale (BAES; [39]) and the Profile of Mood States Positive Mood and Vigor subscales (POMS; [40]). Sedation included the BAES Sedation subscale and the Subjective High Assessment Scale (SHAS; [14]). Negative Affect included the POMS Negative Mood and Tension subscales. Craving was measured by the Alcohol Urge Questionnaire (AUQ; [41]). To incorporate multiple scales per SR domain, and to equally weight scales with discrepant ranges, combined scores were computed within each SR domain by first Z-score transforming each measure across the entire challenge, and then summing these scaled scores.
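A minimal sketch of the composite scoring described above, assuming each scale's scores are pooled over all participants and all challenge time points before standardizing. The use of the sample standard deviation and the function names are assumptions for illustration; the text does not specify them.

```python
from statistics import mean, stdev


def z_scores(values):
    """Standardize one scale across the pooled observations (sample SD assumed)."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def composite(scales):
    """Sum the z-scored scales observation by observation.

    scales: dict mapping a scale name to its list of scores, with every list
    in the same participant-by-time-point order.
    """
    standardized = [z_scores(scores) for scores in scales.values()]
    return [sum(column) for column in zip(*standardized)]


# Toy data only: two sedation scales with very different ranges.
sedation = composite({"BAES_sedation": [10, 20, 30], "SHAS": [1, 2, 3]})
```

Because each scale is standardized before summing, a scale with a wide raw range (here the toy BAES values) contributes no more to the composite than one with a narrow range.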

Power analysis

Target sample size was determined a priori based on a modest higher order interaction effect size of R² = 0.05. Owing to the nested data structure, we employed an effective sample size (n_effective) approach that determines the equivalent single-level sample corresponding to our multilevel (i.e., repeated-measures) design [42]. Based on a 4 time point design, and ρ = 0.75 (an estimate based on previous studies), we determined that a sample size of 66 was sufficient to achieve 80% power for the primary aims of this study.
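As a rough illustration of the effective sample size idea, the sketch below applies the standard design-effect formula for repeated measures, n_effective = N × k / (1 + (k − 1) × ρ), to the values given above. Whether ref. [42] uses exactly this form is an assumption, the function name is hypothetical, and the result is not the study's power calculation.

```python
def effective_n(n_participants, n_timepoints, rho):
    """Equivalent single-level sample size under the design-effect formula.

    rho is the assumed correlation among repeated measures within a person.
    """
    total_observations = n_participants * n_timepoints
    design_effect = 1 + (n_timepoints - 1) * rho
    return total_observations / design_effect


# Values from the text: 66 participants, 4 time points, rho = 0.75.
n_eff = effective_n(66, 4, 0.75)
```

The two limiting cases show the logic: with ρ = 0 every observation counts independently (264 in this example), whereas with ρ = 1 the repeated measures add nothing beyond the 66 participants.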

Data analysis

All analyses were conducted in R version 3.3.0 [43]. To minimize measurement noise, and limit false positive risk, an alcohol use severity factor score, computed via principal component analysis, served as the primary predictor variable (see Supplemental Materials).

Data analyses were broken into three sections. First, due to the nested data structure a series of multilevel models [44] tested whether alcohol use severity predicted SR during the alcohol challenge. In each model, SR was predicted by BrAC time point (coded 0–3), alcohol use severity, and their interaction. Intercepts and BrAC slopes were random at Level 2. Expected values were computed from multilevel model coefficients and plotted using ggplot2 [45]. To check robustness, sex, age, BDI, and cigarettes per day were also explored as covariates for all outcomes.

Second, we tested whether alcohol use severity predicted self-administration BrAC curves. CAIS generated 13,323 total BrAC estimates (~200 per subject). To address extreme autocorrelation issues, we averaged BrACs over discrete 10-minute bins, resulting in 698 BrAC observations (~11 per subject), which closely tracked raw BrACs (see Supplemental Materials) and substantially reduced autocorrelation (Z = 50.85, p < 0.001). Polynomial models using full information maximum likelihood estimation tested BrAC curves as a function of alcohol use severity. Level-1 autocorrelation was modeled using an AR(1) structure.
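The binning step can be sketched as below, assuming one participant's BrAC estimates arrive as an ordered list at 30-s intervals and that a trailing partial bin is averaged over whatever estimates it contains. The function name and the handling of the partial bin are assumptions, not details taken from the study.

```python
SAMPLE_SECONDS = 30   # CAIS estimation interval (from the text)
BIN_MINUTES = 10      # bin width used for analysis (from the text)


def bin_brac(estimates, bin_minutes=BIN_MINUTES, sample_seconds=SAMPLE_SECONDS):
    """Average consecutive BrAC estimates within fixed-width time bins."""
    per_bin = bin_minutes * 60 // sample_seconds  # 20 estimates per full bin
    binned = []
    for start in range(0, len(estimates), per_bin):
        chunk = estimates[start:start + per_bin]
        binned.append(sum(chunk) / len(chunk))
    return binned


# Toy series of 45 estimates: two full bins plus a partial third bin.
toy_bins = bin_brac([float(i) for i in range(45)])
```

With roughly 200 estimates per participant, this yields about ten full bins plus a partial one, in line with the ~11 observations per subject reported above.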

Third, we tested whether challenge SR predicted self-administration. SR variables were entered as Level 2 predictors of BrAC curves overall and as moderated by alcohol use severity. Specifically, for each SR variable, we computed via Empirical Bayesian (EB) estimation a level (the expected value of SR at the 40 mg% BrAC time point, the middle alcohol time point), and a slope (the expected linear change in SR over the Challenge; Supplemental Materials). The model building approach started with a fully interactive model followed by singular trimming of nonsignificant predictors for parsimony.
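To make the level and slope definitions concrete, the sketch below fits an ordinary least-squares line to one participant's four SR scores, with time centered so that the intercept equals the expected value at the 40 mg% time point (code 2 when time points are coded 0 to 3). This is a simplified stand-in, not the Empirical Bayes procedure used in the study: EB estimates are additionally shrunk toward the sample average, which per-participant OLS does not do. The function name is hypothetical.

```python
def level_and_slope(scores, center=2):
    """Per-participant OLS level (at the centered time point) and linear slope.

    scores: SR values at time codes 0..3 (baseline, 20, 40, 60 mg%).
    center: time code treated as zero, so the intercept is the level there.
    """
    times = [t - center for t in range(len(scores))]
    t_mean = sum(times) / len(times)
    y_mean = sum(scores) / len(scores)
    sxx = sum((t - t_mean) ** 2 for t in times)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, scores))
    slope = sxy / sxx
    level = y_mean - slope * t_mean  # fitted value where centered time is 0
    return level, slope


# Toy data: SR rising by one unit per time point.
level, slope = level_and_slope([1.0, 2.0, 3.0, 4.0])
```

Centering at the middle alcohol time point keeps the level and slope estimates interpretable as "how much" and "how fast" SR responded during the challenge, rather than tying the level to the pre-alcohol baseline.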

Results

Sample characteristics

Sixty-seven participants completed the research protocol (Fig. 1 & Table 1).

Alcohol use severity factor

A principal component analysis of current and lifetime AUD symptom count from the SCID, drinks per week, drinks per drinking day, monthly drinking days, and binge drinking proportion from the TLFB, and the ADS, AUDIT, CIWA-Ar, OCDS, and PACS, revealed a single component solution (53% of variance explained) with all variables loading ≥ 0.495 on the alcohol use severity factor (Supplemental Materials). The alcohol use severity factor score was associated with age (p < 0.01), depressive symptomatology (p < 0.001), cigarettes per day (p < 0.05), and, at a trend level, sex (p = 0.09). As expected, the alcohol use severity factor score was associated with all alcohol-related variables (p’s < 0.001) with the exceptions of family history of alcohol problems and AUD age of onset.

Alcohol administration overview

Raw BrAC curves from CAIS are displayed in Fig. 2. Average duration of the alcohol challenge was 70.66 ± 5.20 min, and the self-administration paradigm lasted 100.42 ± 5.10 min. BrAC was well controlled across the challenge (17.34 ± 2.00, 38.71 ± 3.21, and 59.10 ± 4.19 mg%). The duration of each time point varied due to the inclusion of additional assessments at 60 mg% (F(2,198) = 103.7, p < 0.001; 20 mg%: 7.58 ± 2.19 min; 40 mg%: 6.72 ± 1.51; 60 mg%: 11.36 ± 2.17). On average participants self-administered 10.85 ± 4.95 “drinks” and reached a maximum BrAC of 96.03 ± 21.42 mg%.

Fig. 2 Individual BrAC curves computed via the Computerized Alcohol Infusion System (CAIS). CAIS implements a physiologically based pharmacokinetic model to estimate BrAC pseudo-continuously (30-s intervals) based on the infusion time course and the participant's sex, age, height, weight, and real-time breathalyzer readings. The alcohol administration paradigm consisted of two components. The alcohol challenge to target BrACs = 20, 40, and 60 mg% lasted an average of 70.66 (SD = 5.20) min, and the self-administration paradigm lasted an average of 100.42 (SD = 5.10) min. Participants completed SR measures at each challenge time point.

Subjective response to the alcohol challenge

Stimulation increased over rising BrAC (B = 0.28, SE = 0.11, p = 0.011, Fig. 3a). Alcohol use severity did not predict stimulation as a main effect or as a moderator of BrAC slopes (p ≥ 0.705).

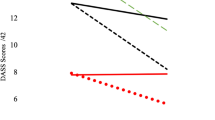

Fig. 3 Magnitude of subjective responses to alcohol over the Challenge. Each graph represents the expected value of the subjective response variable estimated from a multilevel model including the predictors of alcohol use severity, BrAC time point, and their interaction. a The Stimulation outcome was a combined outcome including the measures BAES Stimulation, POMS Vigor, and POMS Positive Mood. b The Sedation outcome was combined from the BAES Sedation and SHAS scales. c Negative Affect combined POMS Tension and Negative Mood. d Alcohol craving was measured using the AUQ. The selected alcohol use severity factor scores correspond to the mean values for participants who had no current AUD diagnosis (−1.26), mild AUD (−0.05), moderate AUD (1.57), and severe AUD (4.14) according to DSM-5.

Sedation also increased over rising BrAC (B = 0.51, SE = 0.07, p < 0.001, Fig. 3b). Participants with greater alcohol use severity reported greater overall sedation (B = 0.21, SE = 0.07, p = 0.006), with no difference in alcohol-induced sedation (i.e., alcohol use severity × BrAC interaction; p = 0.387). Sex, age, and cigarettes per day had no effects (p’s ≥ 0.278). Depressive symptomatology was associated with greater sedation overall (B = 0.33, SE = 0.10, p = 0.002). After controlling for depressive symptomatology, the effect of alcohol use severity was no longer significant (p = 0.10).

Negative affect decreased over BrAC (B = −0.26, SE = 0.06, p < 0.001, Fig. 3c), and alcohol use severity predicted greater negative affect overall (B = 0.27, SE = 0.09, p = 0.003). The alcohol use severity × BrAC interaction was not significant (p = 0.310). Older participants trended towards greater negative affect (B = 0.06, SE = 0.03, p = 0.066). As expected, BDI predicted negative affect (B = 0.50, SE = 0.11, p < 0.001). The alcohol use severity effect remained significant when controlling for age, but not BDI score (p = 0.164).

Lastly, craving increased over the challenge (B = 0.20, SE = 0.03, p < 0.001, Fig. 3d), and alcohol use severity predicted greater overall craving (B = 0.18, SE = 0.04, p < 0.001), but not craving slope (p = 0.859). Male sex and greater BDI scores predicted greater craving overall (B = −0.51, SE = 0.20, p = 0.013; B = 0.17, SE = 0.06, p = 0.012, respectively). The alcohol use severity effect remained significant after controlling for sex and BDI.

As expected, all SR domains were affected by alcohol administration. While alcohol use severity was associated with overall greater craving, sedation, and negative affect, these effects represented baseline differences that were carried forward as opposed to differences in the acute effects of alcohol. Conversely, alcohol use severity did not predict stimulation.

Alcohol use severity and self-administration

Full BrAC curves were modeled to measure motivation at a high resolution. A quartic polynomial curve was the best fitting model (cubic vs. quartic: p < 0.001; quartic vs. quintic: p = 0.264). All trial parameters (i.e., bin number coded 0–11) were random at Level 2 (p’s < 0.001) and autocorrelation was substantial (φ = 0.670). As expected, greater alcohol use severity predicted greater self-administration (alcohol use severity × Trial: B = 1.20, SE = 0.60, p = 0.048; Trial²: B = −0.23, SE = 0.10, p = 0.019; Trial³: B = 0.01, SE = 0.01, p = 0.024; Fig. 4 and Supplemental Table). Though significant, the effect of alcohol use severity was relatively modest compared to the full range of BrAC curves (see Fig. 2). Male participants and participants who smoked more cigarettes self-administered more alcohol, and older participants tended to maintain higher BrAC (Supplemental Figures). The effect of alcohol use severity remained significant after controlling for sex, age, and cigarettes per day. Full results for all BrAC curve analyses are presented in Supplemental Materials.

Fig. 4 Alcohol use severity was found to predict greater BrAC curves over the course of the alcohol self-administration. BrAC levels were estimated by the CAIS software in 30-s intervals; however, for analyses, these estimated BrAC values were averaged over 10 min bins. The displayed lines represent the predicted values according to the final multilevel model including a quartic time trend, alcohol use severity factor score, and the interaction between alcohol use severity and linear through cubic time terms. The selected alcohol use severity factor scores represent the mean scores for each DSM-5 AUD severity classification (none = −1.26, mild = −0.05, moderate = 1.57, and severe = 4.14).

Craving and self-administration

Lending construct validity to the study design, craving level strongly predicted BrAC curves (Craving Level × Intercept: B = 4.28, SE = 0.94, p < 0.001; Trial: B = 4.94, SE = 1.42, p < 0.001; Trial²: B = −0.82, SE = 0.23, p < 0.001; Trial³: B = 0.04, SE = 0.01, p = 0.002; Fig. 5). After controlling for craving level, alcohol use severity no longer predicted self-administration (p’s ≥ 0.305). No alcohol use severity × craving level interactions were observed (p’s ≥ 0.151). Interestingly, when covarying for cigarettes per day, the craving level × alcohol use severity × trial interaction was trending (p = 0.078), such that craving level was a marginally better predictor of BrAC curves for participants with lower alcohol use severity. Controlling for sex and age did not affect these results.

Fig. 5 Final model with craving level and alcohol use severity predicting BrAC self-administration curves. Craving level significantly predicted BrAC curve parameters. After accounting for alcohol craving level, alcohol use severity no longer predicted self-administration curves. The selected alcohol use severity factor scores represent the mean scores for each DSM-5 AUD severity classification (none = −1.26, mild = −0.05, moderate = 1.57, and severe = 4.14).

Craving slope over the challenge also predicted BrAC curves (Craving Slope × Trial: B = 12.16, SE = 5.03, p = 0.016; Trial²: B = −0.95, SE = 0.39, p = 0.015). In this model, the effect of alcohol use severity remained significant. No craving slope × alcohol use severity interactions were significant (p’s ≥ 0.158). Interestingly, when covarying for sex, several craving slope × alcohol use severity × trial interactions were trending (p’s ≤ 0.094), such that craving slope was a marginally better predictor of BrAC curves for participants with lower alcohol use severity. Controlling for age and cigarettes per day had no effect.

In sum, alcohol craving strongly predicted BrAC curves, with craving slope predicting self-administration independent of alcohol use severity.

Positive reinforcement

Stimulation level did not predict BrAC curves and no alcohol use severity × stimulation level interactions were observed (p’s ≥ 0.360). At a trend level, stimulation slope predicted overall BrAC levels (B = 2.08, SE = 1.21, p = 0.090), though no stimulation level × trial interactions were significant (p’s ≥ 0.174). After controlling for sex, stimulation slope was no longer significant (p ≥ 0.180). Thus, contrary to our hypotheses, stimulation did not predict self-administration, regardless of alcohol use severity.

Negative reinforcement

Negative affect level did not predict BrAC curves and no alcohol use severity × negative affect level interactions were significant (p’s ≥ 0.432). At a trend level, greater alleviation of negative affect predicted greater self-administration among less severe participants, whereas this trend was reversed among higher alcohol use severity (negative affect slope × alcohol use severity: B = 3.18, SE = 1.88, p = 0.095, Supplemental Figures). The trend-level interaction was no longer significant after controlling for sex (B = 2.65, SE = 1.83, p = 0.153). No other negative affect slope effects approached significance (p’s ≥ 0.405). Contrary to our expectations, negative affect did not predict self-administration regardless of alcohol use severity.

Sedation

Greater levels of sedation were associated with lower BrAC curves (Sedation Level × Trial: B = −2.28, SE = 0.88, p = 0.010; Trial²: B = 0.41, SE = 0.14, p = 0.004; Trial³: B = −0.02, SE = 0.01, p = 0.004). Similarly, sedation slope predicted lower levels of self-administration (Sedation Slope × Trial: B = −7.77, SE = 3.64, p = 0.033; Trial²: B = 1.52, SE = 0.58, p = 0.009; Trial³: B = −0.09, SE = 0.03, p = 0.006). No alcohol use severity × sedation interactions were significant (p’s ≥ 0.256). The effects of sedation level and slope remained significant after controlling for sex, age, and cigarettes per day. These sedation results were consistent with the Differentiator and LR models, wherein greater sedation was protective vis-a-vis self-administration.

Discussion

The aim of this study was to develop a clinical neuroscience laboratory paradigm to test predictions emerging from preclinical research. A key tenet of the Allostatic Model is that prolonged drinking produces neurobiological adaptations that diminish the salience of positive reinforcement while simultaneously producing abstinence-related dysphoria and potentiating negative reinforcement [2,3,4]. In this study, we developed a novel IV alcohol administration paradigm in humans that combines standardized alcohol challenge methods with progressive ratio self-administration, providing a reliable assessment of subjective responses and a translational measure of motivation to consume alcohol, respectively. SR was measured in terms of positive dimensions (Stimulation/Hedonia), negative dimensions (Negative Affect), sedation, and craving. Through integrating measures of subjective effects and behavioral reinforcement, we could test whether SR predicted self-administration behavior, thus capturing the relationships between reward and reinforcement central to allostatic processes.

As expected, severity of alcohol use predicted greater overall alcohol craving and greater self-administration. Further validating the paradigm, we observed a robust relationship between self-reported craving for alcohol during the challenge and subsequent reinforcement behavior. Interestingly, alcohol-induced increases in craving (i.e., craving slope) predicted self-administration independent of alcohol use severity, suggesting that reactivity to a priming dose of alcohol may represent an independent risk factor for escalated alcohol consumption. Similar reactivity effects have been observed with respect to alcohol and stress [46]. These results suggest that craving is a proximal predictor of alcohol consumption and thus is an appropriate target for intervention research.

Our hypotheses regarding blunted positive reinforcement in severe alcoholism were not supported by these data. Alcohol use severity did not affect stimulation in the challenge, and stimulation did not robustly predict self-administration regardless of alcohol use severity. These results stand in contrast to our previous reports, which found diminished associations between stimulation/hedonia and craving in dependence, as compared to non-dependent heavy drinking [23, 24]. However, our previous studies used craving as a proxy end point for reinforcement, and thus, those results may not generalize to actual motivated alcohol consumption. Several recent CAIS studies have observed significant relationships between stimulation and self-administration [25, 47]; however, multiple study factors, including sample drinking intensity and alcohol use disorder severity, target BrAC, and free-access vs. progressive ratio schedules of reinforcement may explain these discrepancies.

Our hypothesis that negative reinforcement would be stronger among more severe participants was only partially supported. Alcohol use severity was associated with greater levels of depressive symptomatology and basal negative affect, but alcohol use severity did not predict alcohol-induced alleviation of negative affect. Furthermore, negative affect did not robustly predict reinforcement behavior. While these negative affect findings are consistent with our previous studies on craving [23, 24], they appear inconsistent with a body of literature that has demonstrated relationships between negative affect and naturalistic alcohol use [48,49,50,51]. Most studies that have observed a relationship between negative affect and drinking behavior assess negative affectivity as a trait-like variable, whereas this study assessed state negative affect immediately prior to the self-administration paradigm. It is possible that participants completing a laboratory paradigm such as this are in an atypically positive mood since they (1) are going to be compensated for their participation, (2) are anticipating receiving alcohol, and (3) do not have to deal with daily life hassles during their participation. Alternatively, it is possible that the predictors of alcohol self-administration in a controlled laboratory setting are dissociable from predictors of naturalistic drinking, which is more susceptible to exogenous factors such as drinking cues, peer influence, drinking habits/patterns, and life stressors. Future studies are necessary to examine these multiple possible explanations.

In terms of sedation, these results were partially consistent with the Differentiator and Low Level of Response Models, which advance sedation as a protective factor against excessive alcohol use [14, 15, 20, 52]. Although alcohol use severity was associated with greater overall sedation, this effect represented a baseline difference that was carried forward rather than a difference in the acute response to alcohol. Consistent with a protective role, greater sedation during the challenge did predict lower levels of self-administration. The lack of light-to-moderate drinkers in our sample may explain these counterintuitive challenge results, as most other studies compare lighter drinkers to heavy drinkers [13].

This study should be interpreted in light of its strengths and weaknesses. The study benefits chiefly from a novel, highly controlled, and translational alcohol administration paradigm that measures alcohol reward and reinforcement and isolates reactivity to alcohol-related cues. The primary limitation was the relatively small sample of participants with severe AUD per DSM-5 [53]. The fact that, for ethical reasons, participants were required to be non-treatment seeking and able to produce a zero on a breathalyzer test at each visit (the visit would be rescheduled otherwise; across all visit types in this study the rate of no-show/reschedule was 45%) may have impeded recruitment of severe AUD participants. While severe AUD participants were enrolled, this subgroup was smaller and generally represented the lower range of severe AUD. Allostatic neuroadaptations may occur chiefly at higher levels of dependence severity, such as those induced by the ethanol vapor paradigm, and participants at this level of severity would likely have been excluded from this study for safety reasons. The relative scarcity of severe AUD participants also reduces statistical power to detect effects that are expected to arise at this severe range (e.g., negative reinforcement). That said, our sample is comparable to other “severe” samples recruited in alcohol challenge studies (e.g., ~10% severe AUD with ~6.5 mean symptoms in [22]). A substantial self-administration ceiling effect, where 36% of participants reached the BrAC safety threshold, may also have affected our results. Additionally, the sample restriction to Caucasian ethnicity limits the generalizability of these results. Lastly, though this study was cross-sectional, allostatic processes are necessarily longitudinal. In these analyses, alcohol use severity was used as a proxy for this longitudinal process, capturing multiple facets of alcohol use and problems; however, this approach assumes a relatively linear and progressive course of alcoholism, which may not represent many AUD patients.

In conclusion, this study represents a novel approach to translating preclinical theories of addiction to human-subjects research. In these data, subjective craving strongly predicted reinforcement behavior and sedation was moderately protective. Conversely, we observed relatively little evidence for the allostatic processes of diminished positive reinforcement and enhanced negative reinforcement in participants with relatively severe alcohol use and problems. Further studies refining and enhancing this translational paradigm, for example by including affective manipulations to test the role of stress in reward and reinforcement, are warranted. Interestingly, ecological research has highlighted the role of acute stress events in predicting drug use, as opposed to basal negative affect, which was measured in this study [54]. Furthermore, given the severity of dependence induced by preclinical paradigms, recruitment of more severe AUD samples may be necessary for a robust translational examination.

Data availability

The data set used in this study is available upon request from the senior author (LAR), and full data analysis code is available on the lead author's GitHub profile (https://github.com/sbujarski).

References

Koob GF, Kenneth Lloyd G, Mason BJ. Development of pharmacotherapies for drug addiction: a Rosetta Stone approach. Nat Rev Drug Discov. 2009;8:500–15.

Koob GF, Kreek MJ. Stress, dysregulation of drug reward pathways, and the transition to drug dependence. Am J Psychiatry. 2007;164:1149–59.

Koob GF, Le Moal M. Drug abuse: hedonic homeostatic dysregulation. Science. 1997;278:52–8.

Koob GF, Volkow ND. Neurocircuitry of addiction. Neuropsychopharmacology. 2009;35:217–38.

Heinz A, Löber S, Georgi A, Wrase J, Hermann D, Rey E-R, et al. Reward craving and withdrawal relief craving: assessment of different motivational pathways to alcohol intake. Alcohol Alcohol. 2003;38:35–9.

Meinhardt MW, Sommer WH. Postdependent state in rats as a model for medication development in alcoholism. Addict Biol. 2015;20:1–21.

O’Dell LE, Roberts AJ, Smith RT, Koob GF. Enhanced alcohol self-administration after intermittent versus continuous alcohol vapor exposure. Alcohol Clin Exp Res. 2004;28:1676–82.

Schulteis G, Liu J. Brain reward deficits accompany withdrawal (hangover) from acute ethanol in rats. Alcohol. 2006;39:21–8.

Schulteis G, Markou A, Cole M, Koob GF. Decreased brain reward produced by ethanol withdrawal. Proc Natl Acad Sci. 1995;92:5880–4.

Rimondini R, Arlinde C, Sommer W, Heilig M. Long-lasting increase in voluntary ethanol consumption and transcriptional regulation in the rat brain after intermittent exposure to alcohol. FASEB J. 2002;16:27–35.

Valdez GR, Roberts AJ, Chan K, Davis H, Brennan M, Zorrilla EP, et al. Increased ethanol self-administration and anxiety-like behavior during acute ethanol withdrawal and protracted abstinence: regulation by corticotropin-releasing factor. Alcohol Clin Exp Res. 2002;26:1494–501.

Morean ME, Corbin WR. Subjective response to alcohol: a critical review of the literature. Alcohol Clin Exp Res. 2010;34:385–95.

Quinn PD, Fromme K. Subjective response to alcohol challenge: a quantitative review. Alcohol Clin Exp Res. 2011;35:1759–70.

Schuckit MA. Subjective responses to alcohol in sons of alcoholics and control subjects. Arch Gen Psychiatry. 1984;41:879–84.

Schuckit MA. Low level of response to alcohol as a predictor of future alcoholism. Am J Psychiatry. 1994;151:184–9.

Schuckit MA, Smith TL. An 8-year follow-up of 450 sons of alcoholic and control subjects. Arch Gen Psychiatry. 1996;53:202–10.

Lutz JA, Childs E. Test–retest reliability of the underlying latent factor structure of alcohol subjective response. Psychopharmacol (Berl). 2017;234:1209–16.

Ray LA, MacKillop J, Leventhal A, Hutchison KE. Catching the alcohol buzz: an examination of the latent factor structure of subjective intoxication. Alcohol Clin Exp Res. 2009;33:2154–61.

Bujarski S, Hutchison KE, Roche DJO, Ray LA. Factor structure of subjective responses to alcohol in light and heavy drinkers. Alcohol Clin Exp Res. 2015b;39:1193–202.

King AC, de Wit H, McNamara PJ, Cao D. Rewarding, stimulant, and sedative alcohol responses and relationship to future binge drinking. Arch Gen Psychiatry. 2011;68:389–99.

King AC, McNamara PJ, Hasin DS, Cao D. Alcohol challenge responses predict future alcohol use disorder symptoms: a 6-year prospective study. Biol Psychiatry. 2014;75:798–806.

King AC, Hasin D, O’Connor SJ, McNamara PJ, Cao D. A prospective 5-year re-examination of alcohol response in heavy drinkers progressing in alcohol use disorder. Biol Psychiatry. 2016;79:489–98.

Bujarski S, Hutchison KE, Prause N, Ray LA. Functional significance of subjective response to alcohol across levels of alcohol exposure. Addict Biol. 2015a. https://doi.org/10.1111/adb.12293.

Bujarski S, Ray LA. Subjective response to alcohol and associated craving in heavy drinkers vs. alcohol dependents: An examination of Koob’s allostatic model in humans. Drug Alcohol Depend. 2014a. https://doi.org/10.1016/j.drugalcdep.2014.04.015.

Wardell JD, Ramchandani VA, Hendershot CS. A multilevel structural equation model of within- and between-person associations among subjective responses to alcohol, craving, and laboratory alcohol self-administration. J Abnorm Psychol. 2015;124:1050–63.

Sullivan JT, Sykora K, Schneiderman J, Naranjo CA, Sellers EM. Assessment of alcohol withdrawal: the revised clinical institute withdrawal assessment for alcohol scale (CIWA-Ar). Br J Addict. 1989;84:1353–7.

Plawecki MH, Han J-J, Doerschuk PC, Ramchandani VA, O’Connor SJ. Physiologically based pharmacokinetic (PBPK) models for ethanol. Biomed Eng IEEE Trans On. 2008;55:2691–700.

Zimmermann US, Mick I, Vitvitskyi V, Plawecki MH, Mann KF, O’Connor S. Development and pilot validation of computer-assisted self-infusion of ethanol (case): a new method to study alcohol self-administration in humans. Alcohol Clin Exp Res. 2008;32:1321–8.

Zimmermann US, Mick I, Laucht M, Vitvitskiy V, Plawecki MH, Mann KF, et al. Offspring of parents with an alcohol use disorder prefer higher levels of brain alcohol exposure in experiments involving computer-assisted self-infusion of ethanol (CASE). Psychopharmacology. 2009;202:689–97.

Zimmermann US, O’Connor SJ, Ramchandani VA. Modeling alcohol self-administration in the human laboratory. Behav Neurobiol Alcohol Addict. 2013;13:315–53.

First MB. Structured clinical interview for DSM-IV-TR Axis I disorders: patient edition. Biometrics Research Department, Columbia University, New York, New York, USA; 2005.

Sobell LC, Sobell MB, Leo GI, Cancilla A. Reliability of a timeline method: assessing normal drinkers’ reports of recent drinking and a comparative evaluation across several populations. Br J Addict. 1988;83:393–402.

Skinner HA, Allen BA. Alcohol dependence syndrome: measurement and validation. J Abnorm Psychol. 1982;91:199.

Allen JP, Litten RZ, Fertig JB, Babor T. A review of research on the alcohol use disorders identification test (AUDIT). Alcohol Clin Exp Res. 1997;21:613–9.

Flannery BA, Volpicelli JR, Pettinati HM. Psychometric properties of the penn alcohol craving scale. Alcohol Clin Exp Res. 1999;23:1289–95.

Anton RF. Obsessive–compulsive aspects of craving: development of the Obsessive Compulsive Drinking Scale. Addiction. 2000;95:211–7.

Mann RE, Sobell LC, Sobell MB, Pavan D. Reliability of a family tree questionnaire for assessing family history of alcohol problems. Drug Alcohol Depend. 1985;15:61–7.

Beck AT, Steer RA, Brown GK. Manual for the Beck depression inventory-II. San Antonio TX Psychol Corp. 1996;1:82.

Martin CS, Earleywine M, Musty RE, Perrine MW, Swift RM. Development and validation of the biphasic alcohol effects scale. Alcohol Clin Exp Res. 1993;17:140–6.

McNair D, Lorr M, Droppleman LF. Profile of mood states. San Diego Calif. 1992;53:6.

Bohn MJ, Krahn DD, Staehler BA. Development and initial validation of a measure of drinking urges in abstinent alcoholics. Alcohol Clin Exp Res. 1995;19:600–6.

Kim J-S. Multilevel analysis: An overview and some contemporary issues. In: Roger EM, Alberto M-O. editors. The SAGE handbook of quantitative methods in psychology. London: SAGE; 2009. p. 337-61. Accessed date 1/24/2018.

R Development Core Team. R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. 2008. http://www.R-project.org. Accessed date 3/12/2017.

Bliese P. Multilevel: multilevel functions. 2013. https://CRAN.R-project.org/package=multilevel. Accessed date 3/12/2017.

Wickham H. ggplot2: elegant graphics for data analysis. New York: Springer-Verlag. 2009. http://ggplot2.org. Accessed date 3/12/2017.

Mason BJ, Light JM, Williams LD, Drobes DJ. Proof-of-concept human laboratory study for protracted abstinence in alcohol dependence: effects of gabapentin. Addict Biol. 2009;14:73–83.

Stangl BL, Vatsalya V, Zametkin MR, Cooke ME, Plawecki MH, O’Connor S, et al. Exposure-response relationships during free-access intravenous alcohol self-administration in nondependent drinkers: influence of alcohol expectancies and impulsivity. Int J Neuropsychopharmacol. 2017;20:31–9.

Bujarski S, Ray LA. Negative affect is associated with alcohol, but not cigarette use in heavy drinking smokers. Addict Behav. 2014b;39:1723–9.

Carpenter KM, Hasin DS. Drinking to cope with negative affect and DSM-IV alcohol use disorders: a test of three alternative explanations. J Stud Alcohol. 1999;60:694–704.

Greeley J, Oei T. Alcohol and tension reduction. Psychol Theor Drink Alcohol. 1999;2:14–53.

Jackson KM, Sher KJ. Alcohol use disorders and psychological distress: a prospective state-trait analysis. J Abnorm Psychol. 2003;112:599.

Newlin DB, Thomson JB. Alcohol challenge with sons of alcoholics: A critical review and analysis. Psychol Bull. 1990;108:383–402.

American Psychiatric Association. Diagnostic and statistical manual of mental disorders, (DSM-5®). American Psychiatric Pub, Arlington, VA, USA; 2013.

Preston KL, Vahabzadeh M, Schmittner J, Lin J-L, Gorelick DA, Epstein DH. Cocaine craving and use during daily life. Psychopharmacology. 2009;207:291.

Acknowledgements

The authors would like to thank Rui Morimoto, Katy Lunny, Kyle Bullock, Michael Mirbaba, Lyric Tully, Kavya Mudiam, Tsu Xuan Wu, Xuan-Thanh Nguyen, Jessica Jenkins, and Taylor Rohrbaugh for their contribution to the data collection for this project. Thank you to the UCLA Clinical and Translational Research Center nursing staff who were instrumental in all alcohol administration sessions. Thank you to Emily Hartwell, Aaron Lim, ReJoyce Green, and Jonathan Westman for their input on manuscript preparation. Support for the development of the Computer-Assisted Infusion System was provided by Sean O’Connor, Martin Plawecki, and Victor Vitvitskiy, Indiana Alcohol Research Center (P60 AA007611), Indiana University School of Medicine. The authors would also like to thank Bethany Stangl for her help in setting up the CAIS system. The authors would like to thank Jennifer Krull for her assistance in conceptualizing and implementing the statistical modeling and power analysis employed in this manuscript. Lastly, the authors would like to thank Christopher Evans for his assistance in study conceptualization focusing on the translation of preclinical models to human research. Funding & Disclosures: This work was supported by the NIAAA (R21 - AA022752 to LAR). SB was supported as a pre-doctoral trainee and is receiving postdoctoral funding from the NIAAA (F31 - AA022569 to SB & R01 - DA041226 to LAR). LAR has received study medication from Pfizer and Medicinova and consulted for GSK. While there was no formal pre-registration of the study analyses (e.g., in the Open Science Framework), this study was proposed and served as the dissertation for the lead author (SB), and as such the full analytic plan, including the analyses that are presented in the final manuscript, was discussed and decided a priori with a team of experts including a quantitative psychologist specializing in multilevel modeling (Jennifer Krull).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Bujarski, S., Jentsch, J.D., Roche, D.J.O. et al. Differences in the subjective and motivational properties of alcohol across alcohol use severity: application of a novel translational human laboratory paradigm. Neuropsychopharmacol 43, 1891–1899 (2018). https://doi.org/10.1038/s41386-018-0086-9

DOI: https://doi.org/10.1038/s41386-018-0086-9
