Abstract

Affective valence lies on a spectrum ranging from punishment to reward. The coding of such spectra in the brain almost always involves opponency between pairs of systems or structures. There is ample evidence for the role of dopamine in the appetitive half of this spectrum, but little agreement about the existence, nature, or role of putative aversive opponents such as serotonin. In this review, we consider the structure of opponency in terms of previous biases about the nature of the decision problems that animals face, the conflicts that may thus arise between Pavlovian and instrumental responses, and an additional spectrum joining invigoration to inhibition. We use this analysis to shed light on aspects of the role of serotonin and its interactions with dopamine.

INTRODUCTION

The theory of adaptive optimal control concerns learning the actions that maximize rewards and minimize punishments, in both cases over the long run. Optimal control is mathematically straightforward, but suffers from three critical problems: (1) in rich domains, even when given all the information, it can be an extremely hard computation to work out what the optimal action is; (2) it can be expensive to rely on learning in a world in which rewards are scarce and dangers rampant; and (3) some key abstractions in optimal control theory, such as the notion of a single utility function reporting negative and positive subjective values of outcomes, sit ill with the constraints of neural information processing (eg, the firing rates of neurons cannot be negative). Recent behavioral neuroscience and theoretical studies, combined with a more venerable psychological literature, are providing hints at solutions to these issues. We review these ideas, focusing on the critical impact of dopamine (DA), serotonin (5-HT), and some of their complex interactions.

Optimal control problems are solved through a collection of structurally and functionally different methods (Doya, 1999; Dickinson and Balleine, 2002; Daw et al, 2005; Dayan, 2008; Balleine, 2005), each of which realizes a different tradeoff between the difficulty of calculating the optimal action (called computational complexity) and the expense of learning (called sample complexity). Three particularly important methods are: (1) model-based or goal-directed control; (2) model-free, habitual, or cached control; and (3) Pavlovian control. Both goal-directed and cached controls are involved in instrumental conditioning. In the former, a model of the task is constructed and explicitly searched to work out the evolving worth of each action. In the latter, there is a direct mapping from actions to worths, which is learned from experience in a way that obviates acquiring or searching a model. Pavlovian control depends on evolutionarily pre-programmed responses to predictions and occurrences of reinforcers. Pavlovian responses associated with appetitive outcomes include approach (Glickman and Schiff, 1967), engagement, and consumption (Panksepp, 1998). Pavlovian responses to aversive outcomes include a range of species-typical (Bolles, 1970; Schneirla, 1959) defensive and avoidance behaviors such as inhibition and fight/flight/freeze (Gray and McNaughton, 2003) that are sensitive to the proximity of threats (Blanchard and Blanchard, 1989; McNaughton and Corr, 2004).

Our task is to integrate anatomical, pharmacological, physiological, and behavioral neuroscience data to provide a picture of what role(s) DA and 5-HT have in influencing the three types of controls mentioned above. It should be noted that there is a near overwhelming wealth of data; we had therefore to be highly selective in our discussion, and apologize for the volume we had to omit. Recent reviews such as Haber and Knutson (2010), Jacobs and Fornal (1999), Cooper et al (2002), Cools et al (2008), Dayan and Huys (2009), Berridge (2007), Everitt et al (2008), Tops et al (2009), and Iordanova (2009) should collectively be consulted.

Our main argument is that the nature of the roles depends on two critical dimensions influencing control: valence (reward vs punishment) and action (invigoration vs inhibition) (Ikemoto and Panksepp, 1999). In turn, these dimensions are tied to each other by heuristic biases.

In these terms, one suggested role for DA is initiating appetitively inspired actions such as approach (as in theories of incentive salience; Berridge and Robinson, 1998; Berridge, 2007; Alcaro et al, 2007; Ikemoto and Panksepp, 1999). A second role, which is closely related to this, is mediating general appetitive Pavlovian–instrumental transfer (PIT) and vigor (Niv et al, 2007; Wyvell and Berridge, 2001; Smith and Dickinson, 1998). This has also been related to the role of DA in overcoming effort costs (Salamone and Correa, 2002), voluntary motivation (Mazzoni et al, 2007), and ‘seeking’ behavior (Panksepp, 1998). A final role for DA is representing the appetitive portion of the temporal difference (TD) prediction error (Sutton, 1988), which is the critical signal in reinforcement learning (RL) for acquiring predictions of long-run future rewards and also for choosing appropriate actions (Montague et al, 1996; Schultz et al, 1997; Barto, 1995). These three capacities for DA, which are certainly not mutually exclusive (Alcaro et al, 2007; McClure et al, 2003), all involve rewards. The neuromodulator has a more complex association with punishment, with clear evidence for its release and involvement in some forms in certain aversive paradigms (Beninger et al, 1980b; Moutoussis et al, 2008; Brischoux et al, 2009; Matsumoto and Hikosaka, 2009; Kalivas and Duffy, 1995; Abercrombie et al, 1989; Pezze and Feldon, 2004), but also contrary and constraining data (Schultz, 2007; Mirenowicz and Schultz, 1996; Ungless et al, 2004).

We recently reviewed computational issues associated with the substantial extra intricacies of 5-HT compared with DA (Dayan and Huys, 2009). Notably, there is much evidence for functional opponency between DA and 5-HT. For instance, one evident association is with behavioral inhibition (opponent to approach) in the face of predictions of aversive outcomes (Soubrié, 1986; Deakin, 1983; Deakin and Graeff, 1991; Graeff et al, 1998; Gray and McNaughton, 2003). Equally, just as DA influences vigor in the face of hunger (Niv et al, 2007), 5-HT is associated with quiescence in the face of satiety (Gruninger et al, 2007; Cools et al, 2010). Furthermore, 5-HT neurons are activated (Grahn et al, 1999; Takase et al, 2004, 2005) and 5-HT is released (Bland et al, 2003a) in the face of punishment (Lowry, 2002; Abrams et al, 2004).

The notion of appetitive and aversive opponency itself is one of the most venerable ideas for the neural representation of valence (Konorski, 1967; Grossberg, 1984; Solomon and Corbit, 1974; Dickinson and Dearing, 1979; Brodie and Shore, 1957). Indeed, opponency between DA and 5-HT has been considered in detail by Deakin (1983), Deakin and Graeff (1991), and Graeff et al (1998). Daw et al (2002) suggested a computationally specific version of this idea, for the learning of habitual control. In their model, the phasic activity of DA and 5-HT reported prediction errors for future reward and punishment, respectively, whereas their tonic activity reported long-run average punishment and reward, respectively (ie, the reverse relationship). This account is not tenable in the light of the rich picture of structurally different influences on control, including the Pavlovian controller. It also does not reflect asymmetries in natural environments between reward and punishment, or between safety and danger signaling in terms of their influence on active avoidance. It faces further challenges from recent experiments orthogonalizing valence and activity (Guitart-Masip et al, 2010; Crockett et al, 2009; Huys et al, 2010) that call into question the choice of affective value as the fundamental axis of opponency between the neuromodulators.

In this study we first review optimal control and the different forms of RL with which it is associated. We then consider the critical roles that biases and heuristics have in evading the problems of learning; we argue that these are apparent in the architecture of control as well as in observed behavior. We next consider the implications of these for an updated theory of interaction between 5-HT and DA. Finally, we sum up the claims and main lacunæ of the new view.

The paper in this issue by Cools et al (2010) also considers DA and 5-HT interactions, but it started from the important and complementary viewpoint of the issue of vigor and quiescence rather than Pavlovian–instrumental interactions.

OPTIMAL AND SUBOPTIMAL CONTROL

The formal backdrop for the analysis of DA and 5-HT is that of RL and optimal control—how animals (and indeed robots or systems of any sort) can come to choose actions to maximize their long-run rewards and minimize their long-run punishments. This theory stems from operations research and computer science (Sutton and Barto, 1998; Puterman, 2005; Bertsekas and Tsitsiklis, 1996), but has long had rich links with psychology and neuroscience (Klopf, 1982; Barto, 1989, 1995; Schultz, 2002; Montague et al, 1996; Gabriel and Moore, 1991; Sutton and Barto, 1981; Daw and Doya, 2006; Niv, 2009). We do not have the space to review all this material here; however, we do need to provide the bones of the issues for the application of RL to understanding the choices of animals (see also the descriptions in Daw et al, 2005; Daw and Doya, 2006; Dayan and Seymour, 2008; Niv, 2009; Schultz, 2007). Central to this section are the differences between goal-directed and habitual control; Pavlovian control will be covered in the next section.

RL, Goal-Directed, and Habitual Control

Consider putting an animal into a maze with distinguishable rooms (in RL these are, more abstractly, called states) in which there can be rewards (eg, food or water) and punishments (shocks or predators). The rooms are connected by a haphazard arrangement of exits and perhaps unidirectional passageways, and hence the animal can choose among a small number of actions in each room, getting to a restricted set of other rooms. We consider its task to be to come to take actions to maximize its expected long-run net utility, that is, benefits minus costs over the whole run through the maze. Of course, these utilities (particularly the appetitive ones) depend on the animal's motivational state (eg, food being immediately valuable to a hungry, but not to a sated, animal; Niv et al, 2006). RL defines a policy to be a systematic set of choices, one exit (or more normally, one probability distribution over exits) for each room in the maze.

RL formalizes the two central and linked concerns for the animal: inference and learning. Take the case that the animal knows the whole layout of the maze. It then still faces the inference problem of choosing which exit to take out of one room. This is hard, because the long-run utility depends not only on the next reward or punishment in the next room, but also on the whole sequence of future rewards and punishments that will unfold from beyond that room. These, in turn, depend on the actions taken in those rooms. This is the same problem that, for instance, chess players face in having to think many steps ahead to work out the benefits of a strategy in terms of winning or losing.

RL crystallizes the problem by noting that, given a whole policy, each room can be endowed with a value. The value of a room is the long-run utility available based on starting in that room, and following the policy. This value incorporates all the rewards and punishments that will be received through following that policy (which incorporates the hard problem mentioned above). Given such values, an optimal choice can be realized by choosing the exit leading to a room that is evaluated most highly. As the values change, the policy that stems from choosing a good exit changes too. If only approximate values are available, choices may, of course, only be suboptimal.
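As a compact restatement of the definitions in this paragraph, the value of a room and the resulting greedy choice can be written as follows. The notation is an assumption introduced here for illustration and does not appear in the original text: $s$ is a room, $a$ an exit, $s'$ the room reached, $\pi$ the policy, and $u_t$ the utility received at step $t$.

```latex
V^{\pi}(s) = \mathbb{E}\!\left[\sum_{t \ge 0} u_t \;\middle|\; s_0 = s,\ \pi\right],
\qquad
a^{*}(s) = \arg\max_{a}\; \mathbb{E}\!\left[u(s,a) + V^{\pi}(s')\right]
```

The second expression simply says that a good exit is one whose immediate utility plus the value of the room it leads to is largest; if $V^{\pi}$ is only approximate, so is the choice.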

One way to estimate the value of a room is to imagine a fictitious experience (like a form of pre-play; Johnson and Redish, 2007; Foster and Wilson, 2007; Gupta et al, 2010), starting from that room, simulating possible whole future paths that follow the policy, and accumulating the estimated utilities of the outcomes that are predicted. This is called model-based RL, as simulating such paths requires knowledge of the domain that amounts to a model of the passageways between rooms and the available outcomes. Such a model can readily be acquired directly from experience of rooms, transitions, and outcomes. Model-based calculations can also use algorithms that are computationally more efficient than pre-play (Sutton and Barto, 1998; Puterman, 2005; Bertsekas and Tsitsiklis, 1996).
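The simulation idea above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not an implementation from the literature: the four-room maze, its utilities, and the names `MODEL`, `POLICY`, `rollout_value`, and `best_exit` are all hypothetical, and transitions are taken to be deterministic so that a single simulated path suffices (a stochastic maze would need an average over many simulated paths).

```python
# Hypothetical maze model: MODEL[room][exit] = (next_room, utility of taking that exit).
# A room with no exits is terminal. All names and numbers are illustrative.
MODEL = {
    "A": {"left": ("B", 0.0), "right": ("C", -1.0)},
    "B": {"on": ("D", 2.0)},
    "C": {"on": ("D", 5.0)},
    "D": {},
}
POLICY = {"A": "left", "B": "on", "C": "on"}


def rollout_value(room, model, policy, max_steps=100):
    """Simulate one path from `room` under `policy`, summing predicted utilities."""
    total = 0.0
    for _ in range(max_steps):
        exits = model[room]
        if not exits:  # terminal room reached
            break
        room, utility = exits[policy[room]]
        total += utility
    return total


def best_exit(room, model, policy):
    """Pick the exit whose immediate utility plus simulated onward value is highest."""
    def worth(exit_name):
        next_room, utility = model[room][exit_name]
        return utility + rollout_value(next_room, model, policy)
    return max(model[room], key=worth)
```

Because every value is recomputed from the model on each call, editing a utility or a passageway in `MODEL` changes the answer returned by `best_exit` at once, with no further experience of the maze required.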

In psychological terms, model-based RL has the property of goal-directed control that changing the utility of an outcome or the contingencies in the world would lead to an immediate change in the choice of actions (Dickinson and Balleine, 2002). This is because the current estimated utilities of all outcomes and the possible transitions are incorporated into the values of rooms through the process of simulation or search in the model.

A different way to perform the estimation is to note that the values of successive rooms should be self-consistent. That is, the value of one room should be the sum of the utility available in that room and the average values of the subsequent rooms to which the policy can lead in a single step. For instance, a room should have a high value if it either offers a large reward itself, or has a passageway to a room that itself has a high value (or both). Eliminating inconsistencies (which are called TD prediction errors; Sutton, 1988) between the estimated values of successive rooms leads to their being correct. Importantly, it is possible to eliminate inconsistencies using just those transitions experienced during learning, without any need for an explicit model of the domain. This method is therefore called model-free (or sometimes cached) RL.

If the values are learned in this way, then, like habits (Dickinson and Balleine, 2002), they will not immediately be sensitive to changes in the utilities of outcomes. This is because explicitly experiencing steps in the maze is necessary to erase inconsistencies.
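The self-consistency rule and its habit-like insensitivity can be sketched as follows. This is a minimal, undiscounted illustration under assumptions: the two-step path, the learning rate `alpha`, and the names `EPISODE`, `DEVALUED_EPISODE`, and `td_learn` are hypothetical and chosen only to make the point concrete.

```python
# Hypothetical experienced transitions: (room, next_room, utility). "D" is terminal.
EPISODE = [("A", "B", 0.0), ("B", "D", 2.0)]


def td_learn(values, episode, n_episodes, alpha=0.5):
    """Repeatedly replay an experienced path, nudging values toward self-consistency."""
    for _ in range(n_episodes):
        for room, next_room, utility in episode:
            # TD prediction error: inconsistency between successive value estimates.
            delta = utility + values[next_room] - values[room]
            values[room] += alpha * delta
    return values


values = td_learn({"A": 0.0, "B": 0.0, "D": 0.0}, EPISODE, n_episodes=50)

# Devalue the outcome in the world. The cached values stay as they were until
# the changed transition has actually been experienced again.
DEVALUED_EPISODE = [("A", "B", 0.0), ("B", "D", -2.0)]
cached_before_reexperience = dict(values)
values_after = td_learn(dict(values), DEVALUED_EPISODE, n_episodes=50)
```

Note that `td_learn` never consults a model of the maze: it sees only the transitions it is handed. The cached value of room A therefore remains close to 2 after devaluation and only approaches -2 once the devalued path has been traversed repeatedly.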

Model-free and model-based controls differ in the ways they can acquire, generalize, and express information about factors such as the statistical structure or controllability of the environment (Huys and Dayan, 2009), with model-based control likely able to capture far finer distinctions, and to do so more flexibly.

Controllers in the Brain

There are substantial data on the anatomical substrates of control, reviewed, for instance, in Balleine (2005), Cardinal et al (2002), Haber and Knutson (2010), Sesack and Grace (2010), Hollerman et al (2000), and Balleine and O’Doherty (2010) (see also Wickens et al, 2007; Houk et al, 1994; Bezard, 2006). Strikingly, there is evidence that model-based and model-free strategies are simultaneously deployed by partly different neural structures (Dickinson and Balleine, 2002; Killcross and Coutureau, 2003; Balleine, 2005).

Model-free control depends particularly on the dorsolateral striatum (Jog et al, 1999; Killcross and Coutureau, 2003; Yin et al, 2004; Tricomi et al, 2009). A rather wider set of areas has been implicated in the computationally more complex processes of model-based control, including the ventral (medial) prefrontal cortex (mPFC) and dorsomedial striatum (Balleine and Dickinson, 1998; Killcross and Coutureau, 2003; Yin et al, 2005), together with the basolateral amygdala and the orbitofrontal cortex, which are critically implicated in adapting to changes in the motivational value of stimuli (Padoa-Schioppa and Assad, 2006; Schoenbaum et al, 2009, 2003; Schultz and Dickinson, 2000; Hollerman et al, 2000; Rolls and Grabenhorst, 2008; Mainen and Kepecs, 2009; Fellows, 2007; Cador et al, 1989; Killcross et al, 1997; Holland and Gallagher, 2003; Corbit and Balleine, 2005; Talmi et al, 2008; O’Doherty, 2007; Wallis, 2007; Valentin et al, 2007; Baxter et al, 2000). Related dependencies include the role of ventral mPFC in extinction (Morgan et al, 1993; Morgan and LeDoux, 1995, 1999; Killcross and Coutureau, 2003), the anterior cingulate cortex's putative function in detecting errors and managing response conflicts (Devinsky et al, 1995; Pardo et al, 1990; Awh and Gehring, 1999; Botvinick et al, 2004; Gabriel et al, 1991; Bussey et al, 1997; Parkinson et al, 2000b), and perhaps also the role of hippocampus in non-habitual spatial behavior (White and McDonald, 2002; Doeller and Burgess, 2008).

The nucleus accumbens or ventral striatum is not required for goal-directed (Balleine and Killcross, 1994; Corbit et al, 2001) or habitual actions (Reading et al, 1991; Robbins et al, 1990). However, it is considered an interface between limbic and motor systems and is involved in the expression of Pavlovian responses and the interaction between Pavlovian and instrumental conditioning (Panksepp, 1998; Salamone and Correa, 2002; Balleine and Killcross, 1994; Parkinson et al, 2000b; Mogenson et al, 1980; Ikemoto and Panksepp, 1999; Killcross et al, 1997; Reynolds and Berridge, 2001; Corbit and Balleine, 2003; Hall et al, 2001; Talmi et al, 2008; Berridge, 2007; Berridge and Robinson, 1998; Sesack and Grace, 2010). These effects also depend on the central nucleus of the amygdala (Hall et al, 2001; Killcross et al, 1997; Cador et al, 1989; Parkinson et al, 2000a).

Of particular importance to us, the dopaminergic, serotonergic, and noradrenergic neuromodulatory systems influence, regulate, and plasticize all these other systems in the light of affectively important information. There are extensive, although not complete, interconnections between these structures (see eg, Powell and Leman, 1976; Zahm and Heimer, 1990; Brog et al, 1993; Haber et al, 2000; Groenewegen et al, 1980, 1982, 1999; Joel and Weiner, 2000; Fudge and Haber, 2000; Carr and Sesack, 2000), making for an extremely rich, and as yet incompletely understood, overall network.

As mentioned, goal-directed and habitual control appear to be expressed concurrently (Killcross and Coutureau, 2003). One idea for why this is good is that they represent different tradeoffs between two sorts of uncertainty or inaccuracy: computational noise, which afflicts primarily the model-based controller, and statistically inefficient learning, which afflicts the model-free controller given limited experience (Daw et al, 2005). That is, the task for model-based RL of simulating and following deep paths through the maze (or their algorithmic variants; Puterman, 2005) is computationally very taxing, and will therefore lead to inaccurate estimates of values or excessive energy consumption. In comparison, model-free RL does not have to compute its values, but rather has them directly available. However, it learns using inconsistencies between successive values. As these values are all inaccurate at the outset of learning, they do not provide useful error signals. Model-free RL is therefore slower to learn than model-based RL, which absorbs information more optimally. Thus, model-free RL requires more samples to make good choices, and is less adaptive to changes in the world. In sum, model-based control should dominate at the outset, whereas model-free control takes over at the end of learning.

Managing Energy

An additional facet of the optimal decision problem is choosing how vigorously to perform selected actions. Acting more quickly may imply getting rewards more quickly, or being more certain of avoiding being punished; however, it also takes more energy (Niv et al, 2007). This tradeoff is easiest to express in a model of optimal control designed for problems that continue for very long epochs (as if the animal is placed back into the maze if it ever reaches an exit), in which the animal optimizes the average utility gained per unit time rather than the sum utility over a path (called average-case RL; Sutton and Barto, 1998; Daw and Touretzky, 2002). In this framework, the passage of time is itself costly, that is, there is an opportunity cost for time that is quantified by this average utility. To take an appetitive case, the greater the average, the more reward the animal should expect to get for each timestep in the maze. Not getting that much reward, for instance, by acting too slothfully is therefore not optimal. Vigor and sloth are thus tied to the overall affective valence of the environment. This average reward can be estimated by learning; but it may also be more directly influenced by innate or acquired previous expectations.
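One simple way to make this tradeoff concrete is the following worked sketch. The functional form is an assumption adopted here for illustration: the energetic cost of performing an action with latency $\tau$ is taken to be hyperbolic, $C_v/\tau$, and the opportunity cost of the time spent is $\bar{R}\,\tau$, where $\bar{R} > 0$ is the average utility per unit time. The symbols $\tau$, $C_v$, and $\bar{R}$ are introduced here and are not part of the original text.

```latex
\text{cost}(\tau) = \frac{C_v}{\tau} + \bar{R}\,\tau,
\qquad
\frac{d\,\text{cost}}{d\tau} = -\frac{C_v}{\tau^{2}} + \bar{R} = 0
\;\;\Longrightarrow\;\;
\tau^{*} = \sqrt{\frac{C_v}{\bar{R}}}
```

Under these assumptions, a richer environment (larger $\bar{R}$) shortens the optimal latency, which is the sense in which vigor is tied to the overall affective valence of the environment.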

Consideration of vigor points toward a much broader tradeoff between energetically expensive, active reward seeking or threat avoiding, externally directed behaviors, and energy conserving, regenerative, digestive, internally directed behaviors (Handley and McBlane, 1991; Tops et al, 2009; Ellison, 1979), a distinction that has been related to that between the sympathetic and the parasympathetic nervous systems (Ellison, 1979). In the terminology of Ellison (1979), these are called, respectively, ergotropic (toward work or energy expenditure) and trophotropic (toward nourishment). (See Box).

BEHAVIORAL PRIORS AND HEURISTICS

As many authors have pointed out, a more pernicious problem than the computational and sample complexities of goal-directed and habitual controls is the potentially calamitous expense for a subject having to engage in learning in the first place. For instance, there is surely an evolutionary disbenefit for organisms that have to learn for themselves to avoid predators by repeated bouts of danger and active escape. Rather, we may expect biases associated with previous expectations about the sorts of decision problems they face. These biases are evident in the choices subjects make, but are also enshrined in the functional architecture of decision-making itself. In this section, we review the biases; in the next section, we draw the relevant conclusions for the interactions between DA and 5-HT.

Layers of Biases

Several types of computational biases can alleviate the need for expensive sampling. The most basic bias is that outcomes, or indeed improvement or worsening in the prospects for future outcomes, have been caused by recent behavior. Thus, as in the law of effect (Thorndike, 1911), whatever actions preceded the delivery of reward should be done more, and whatever preceded punishment should be suppressed. Such a causality bias can be influenced by the recent past, for instance, being overturned by an experience of repeated lack of control. This has been argued to have a particularly important role in phenomena such as learned helplessness (Maier and Watkins, 2005). Here, animals are taught by experiencing inescapable shocks that they cannot control or influence some aspect of one environment. They generalize this fact to other environments, which they therefore fail to explore or exploit appropriately. There are various ways to formalize this lack of control under which this behavior is actually optimal (Huys and Dayan, 2009).

A second bias is that active engagement is needed to secure possible rewards, and hence prediction of increased availability of rewards should energize behavior.

Other biases have to do with the type of response most adapted to a prediction. This is widely evident in Pavlovian responses. In these, predictions are directly tied to actions (ie, requiring value but not action learning), associated, for instance, with species-typical defensive actions (Bolles, 1970; Blanchard and Blanchard, 1971). The potency of Pavlovian conditioning is evident from the ability of Pavlovian responses to compete with instrumental ones, as in omission schedules (Sheffield, 1965; Williams and Williams, 1969), which have been argued as being the tip of an iceberg of more substantial anomalies of human decision making (Dayan et al, 2006; Chen and Bargh, 1999). Biases further arise in ‘preparedness’ to learn, which suggests that there are constraints as to the stimuli that can support predictions about particular outcomes (McNally, 1987).

Perhaps the most important bias for our argument has to do with the status of emitting (Go) vs withholding (No Go) actions. In principle, there could be an orthogonality between the valence of a possible outcome and the nature of the behavior required to collect or avoid it. Active responding or active or passive non-responding could equally be required for rewards or punishments. However, when distant, punishments are often avoided by inhibition (Soubrié, 1986; Crockett et al, 2009), whereas rewards are gained through approach and engagement (Panksepp, 1998), and it appears that this coupling or non-orthogonality is enshrined in the architecture of control. That is, a fundamental structural principle of the basal ganglia appears to be the intimate coupling of Go, the direct pathway, thalamocortical excitation, and reward, and of No Go, the indirect pathway, thalamocortical inhibition, and punishment (Frank, 2005; Gerfen et al, 1995; Gerfen, 2000; Brown et al, 1999).

Appetitive Responses

We first consider preparatory and consummatory responses to predictions of rewards. These include approach, engagement, and active exploration (Panksepp, 1998). Such actions are consistent with the previous bias that rewards are relatively rare, and typically require active processes to be collected. The predictors are described as having high levels of incentive salience (Berridge, 2007; Berridge and Robinson, 1998; Alcaro et al, 2007). An additional appetitive bias is the PIT effect (Estes, 1943; Lovibond, 1983) that Pavlovian predictions of future reward can boost the vigor of instrumental actions, even if instrumental and Pavlovian outcomes are different (the so-called general PIT; Balleine, 2005). One suggestion is that appetitive PIT reflects a bias in the assessment of the overall rate of positive reinforcement, which is linked to the vigor of responding by acting as an opportunity cost for the passage of time (Niv et al, 2007).

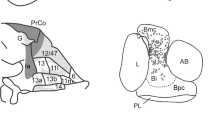

These appetitively motivated behaviors are associated with the shaded upper right-hand quadrant of the graph in Figure 1. This graph is an adaptation of the so-called affective circumplex (Knutson and Greer, 2008; Larsen and Diener, 1992; Posner et al, 2005), replacing arousal, which is the normal ordinate, by action (Crockett et al, 2009; Guitart-Masip et al, 2010; Huys et al, 2010), but leaving the valence axis in its original form. This action axis is intended to include automatic, Pavlovian, responses, partly orchestrated by the nucleus accumbens (Reynolds and Berridge, 2001, 2002, 2008) as well as learned, instrumental, actions associated with the habitual or model-free systems, which are largely associated with the dorsolateral striatum (Yin et al, 2004; Everitt et al, 2008; Tricomi et al, 2009). It is also meant to reflect the level of vigor with which actions are performed. The intimate coupling between invigoration (Ikemoto and Panksepp, 1999) and appetitive valence is a critical part of our argument, and is in line, for instance, with the idea proposed by Wise and Bozarth (1987) that a single common underlying biological structure supports homologous functions in the stimulation of locomotion and positive reinforcement.

The affect–effect plot. This shows a version of the affective circumplex (Larsen and Diener, 1992; Knutson and Greer, 2008), replacing the arousal axis with action and inhibition. The top-right quadrant is associated with better-than-expected outcomes and their Pavlovian and instrumental effects in learning, approach, and vigor. The bottom-left quadrant is associated with worse-than-expected outcomes, and their effects in terms of inhibition and fear learning. The other quadrants involve more complex interactions between reward and punishment. The top-left arrow shows the case for actions associated with active avoidance of punishments following a movement of the origin left along the valence axis to reflect the prediction of danger; the bottom-right with actions that should be avoided to prevent the loss of otherwise expected rewards, associated with a movement of the origin right along this axis. Dopamine seems particularly associated with the upper right-hand quadrant and, because of its association with active avoidance, effects associated with moving the origin leftward. In the context of this paper, serotonin's prime association is with inhibition, i.e., negative values of the ordinate; the possibility of opponency is that it is responsible for the whole bottom-left quadrant.

How goal-directed actions, and the areas supporting them such as the dorsomedial striatum (Yin et al, 2005), fit into this quadrant is unfortunately less clear.

Aversive Responses

Predictions of imminent or future punishment have a much more complex effect on behavior. Partly, this reflects the fundamental asymmetry between reward and punishment that successful response learning leads to repeated experience of rewards, but avoided experience of punishments. Therefore, mechanisms for maintenance and extinction of learnt responses are likely to seem different for rewards and punishments (Mowrer, 1947). However, there is another asymmetry: although it is usually uncomplicatedly safe to approach and engage with rewards, as mentioned above, punishments require a more complex set of species-, threat-, and distance-dependent responses (Blanchard et al, 2005; Bolles, 1970; Gray and McNaughton, 2003; McNaughton and Corr, 2004). First, many dangers arise when subjects execute inappropriate actions in dangerous conditions, such as venturing forth into unsafe terrain. A vital generic heuristic in such circumstances is therefore behavioral inhibition, which helps prevent actions of any sort (Gray and McNaughton, 2003). This link is consistent with the shaded, lower-left, quadrant of the graph in Figure 1, which ties inhibition, as an opponent of action, to aversion, the opponent of reward. Conditioned suppression is another example. This acts like the aversive mirror of appetitive PIT, with a Pavlovian predictor of a shock having the power to suppress or inhibit ongoing, appetitively motivated, instrumental actions.

However, behavioral inhibition is only appropriate in particular circumstances. Often, and particularly in the face of a proximal threat, exactly the opposite is required, namely an active defensive response (Blanchard et al, 2005). The choice of the response depends sensitively on the threat, in ways that animals often cannot afford to have to learn for themselves (Bolles, 1970; McNaughton and Corr, 2004). Indeed, the essential decision as to what to do (fight, freeze, or flee, ultimately controlled by the dorsolateral periaqueductal gray (PAG), with the more recuperatory or inhibitory flop being controlled by the ventrolateral PAG; Keay and Bandler, 2001) depends on a complex risk assessment process. Active, aversively motivated actions can also be elicited by manipulations of activity in the nucleus accumbens, but in the caudal rather than the rostral shell (Reynolds and Berridge, 2001, 2002, 2008). The boundary between appetitively and aversively motivated behaviors depends on contextual factors such as the stressfulness or familiarity of the environment (Reynolds and Berridge, 2008), perhaps consistent with previous expectations about its capacity for potential harm.

The standard way to reconcile the architectural coupling between reward and active, Go, responses and the need for active and instrumental actions in the face of punishments is to introduce the notion of safety, as in forms of two-factor theory (Mowrer, 1947, 1956; Morris, 1975; Kim et al, 2006). This allows the transition from a dangerous state to a neutral or safe one to have the valence and effect of a reward. That is, resetting the baseline expected outcome to be negative (the putative punishment) implies that a neutral outcome will appear positive. It is natural also to extend this explanation to defensive Pavlovian responses. All these responses are occasioned by the opportunity to avoid a threat, and are thus energizing (and hence performed vigorously) and reinforcing (and hence repeated, if possible). They thus fit exactly into the realm of the upper right-hand quadrant of Figure 1 (and illustrated by the rightward-pointing arrow), provided that the origin is shifted leftward, to reflect the inherent danger (Ikemoto and Panksepp, 1999). In terms of Gray and McNaughton (2003), the fight, flight, (active) freezing system, which naturally occupies the upper-left quadrant of the figure, is brought into the upper-right quadrant, which is the territory of the behavioral approach system (BAS). Both are opposed by the behavioral inhibition system, associated with negative values of the ordinate. Coding via safety is parsimonious if the set of actions leading to continuation of punishment or threat is very large, as it is necessary to avoid them all, whereas any action leading to safety is good.

Such a shift of the origin toward negative values is also one way to reconcile the problem posed by Ainslie (2001), in the form of subjects’ apparent elective willingness to experience subjective pain (in the light of mechanisms operating in abnormal circumstances that can suppress it). Pain becomes a predictor that certain protective actions will give rise to future neutral affect (ie, safety), and is thus an appropriate reinforcer.

Safety signaling is thus conceived as the means by which an architecture that couples Go with reward can perform correctly when avoiding punishments requires Go. An obvious question is how an architecture coupling No Go and punishment could perform correctly when rewards require No Go. If the origin of the graph in Figure 1 can be moved rightward, based on the expectation of a reward, then the frustration of losing an expected reward (or of obtaining a smaller one) is endowed with negative valence and processed as punishment. The instrumental difficulties of omission schedules (Williams and Williams, 1969; Sheffield, 1965) or the differential reinforcement of low rates of responding (Staddon, 1965), in which automatic Go actions are penalized by the rules of the experiment, suggest that this shift of the origin may be more complicated than that for the case of punishment.

In sum, the hashed areas in Figure 1 show the seemingly automatic association between predictions of rewards and active engagement and predictions of punishments and behavioral inhibition. In these quadrants, Pavlovian and instrumental responses are generally aligned, and hence learning is easy. The two arrows show safety and frustration signaling that occupy the non-congruent quadrants, and are distinguished by greater difficulties of learning and complexities of the representational structures involved. Table 1 lists some key paradigms that fit into the easy and difficult quadrants of the affect-action graph.

Of course, even if the origin on the valence axis has to move in order to effect these architectural reconciliations, the composite stimulus (danger plus safety, or reward plus frustration) must retain its overall original valence, to guard against masochism or overcautiousness—that is, the aggregate event of punishment followed by its cessation should still be aversive, not appetitive. A way to achieve this would be to ensure that the magnitude of the counterbalancing affective factor be limited by that of the original one. There is some evidence that animals are, in general, surprisingly poor at such reweightings (Pompilio et al, 2006; Clement et al, 2000).

Additional Heuristics

Various other heuristics and priors may also be important for understanding reward and punishment interactions. For instance, one consequence of behavioral inhibition could be pruning of the goal-directed evaluation of states when predicted large punishments arise. This pruning has been suggested to lead to evaluations that are normally overoptimistic, so that depressive realism (Dayan and Huys, 2008; Alloy and Abramson, 1988; Watson and Clark, 1984) or rumination (Smith and Alloy, 2009) sets in when it fails. Overoptimism could equally come from overeager approach and engagement with apparently rewarding options (Smith et al, 2006; Dayan et al, 2006). This is a case in which appetitive and aversive Pavlovian responses have similar implications.

Another critical form of pruning is appropriate in the face of substantial threat. In this case, a sensible heuristic is to downweight small rewards, as it is unrealistic to expect sufficient accumulation to overcome the cost of the punishment. One way to implement this would be for the signal that predicts the threat to have a contrast-enhancing effect on the signal reporting possible rewards, such that small potential or actual rewards would have less effect, and large rewards have more. This would make for a magnitude-dependent interaction between reward and punishment. Alternatively, the prospect of punishment might act as a motivational state that differentiates rewards relevant to escape from punishment from other generic rewards. Irrelevant, non-safety-related rewards would then be flattened selectively in a way reminiscent of motivational states such as hunger, thirst, or sodium deprivation (Dickinson and Balleine, 1994).

Talmi et al (2008) reported a decrease in appetitive sensitivity in a context in which rewards were financial and the punishments involved electrical shocks. This argues against a generic contrast-enhancing effect of punishment (although it is conceivable that the rewards used all fell into the ‘too small’ category for one of the controllers). It would be interesting to compare the effects of rewards that were irrelevant (eg, monetary) or relevant (eg, cessation of shocks) to safety to see if punishment creates a specific motivational state, and corresponding reward dissociation. One consequence of the sort of decrease in appetitive sensitivity that Talmi et al (2008) observed is enhanced exploration, with the lessened effective difference in the values of available options leading to an increased willingness to try ones that do not appear best. This is the same form of exploration that comes from an increased temperature in a softmax model of choice (Sutton and Barto, 1998), and, among its other properties, is a convenient heuristic for avoiding getting stuck performing suboptimal choices. Naturally, taking persistent advantage of any better action that is found requires an ultimate restoration of the appetitive contrast.
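The equivalence between a reduced appetitive sensitivity and an increased softmax temperature can be checked directly. The sketch below assumes three options with hypothetical values and a hypothetical sensitivity factor `gain`; none of these numbers come from Talmi et al (2008).

```python
import math


def softmax(values, temperature=1.0):
    """Choice probabilities under a softmax rule with the given temperature."""
    scaled = [v / temperature for v in values]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


values = [1.0, 0.5, 0.0]  # hypothetical option values
gain = 0.5                # hypothetical reduction in appetitive sensitivity

p_base = softmax(values)                       # normal sensitivity
p_flat = softmax([gain * v for v in values])   # values flattened by the gain
p_hot = softmax(values, temperature=1.0 / gain)  # same values, higher temperature

print(p_base)
print(p_flat)
print(p_hot)
```

Scaling all values by `gain` produces the same choice probabilities as dividing the temperature by `gain`: the best option is chosen less often and the worst more often, which is the increased exploration described above.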

OPPONENCY AND BEYOND

The discussion in previous sections has led us to two coupled spectra associated with habits and Pavlovian influences as shown in Figure 1—from invigoration to inhibition, and from reward to punishment. In this section, we put these spectra into the context of general ideas about affective opponency (Konorski, 1967; Grossberg, 1984; Solomon and Corbit, 1974; Brodie and Shore, 1957) and specific notions about DA–5-HT valence opponency (Deakin, 1983; Deakin and Graeff, 1991; Graeff et al, 1998; Kapur and Remington, 1996).

We first briefly introduce the dopaminergic and serotonergic systems, then discuss the properties of these opponencies, consider some additional aspects of the role of 5-HT, and finally consider a third possible form of opponency associated with engagement, but involving norepinephrine (NE) and 5-HT rather than DA and 5-HT. Again, for the sake of space, we have had to ignore many factors, including most aspects of anatomical differentiation within DA and 5-HT systems.

The Dopaminergic System

DA cells are located in the midbrain, in the ventral tegmental area (VTA), and in the substantia nigra pars compacta (SNc). The dopaminergic system can be divided into three pathways: the nigrostriatal pathway, which projects from the SNc to the dorsal striatum, the mesolimbic pathway, which projects from the VTA to the nucleus accumbens, and the mesocortical pathway, which projects from the VTA to the PFC (Fuxe et al, 1974; Swanson, 1982).

Recorded DA neurons fall into one of three categories (Grace and Bunney, 1983; Goto et al, 2007): (1) inactive neurons; (2) tonically firing neurons, displaying slow, single-spike firing; and (3) burst-firing neurons, exhibiting phasic firing driven by afferent input. Tonic activity reflects general states of arousal or motivation, whereas phasic activity may be related to the detection and nature of punctate salient events. There is evidence that these modes are separately regulated (Floresco et al, 2003), and the functional significance of phasic and tonic firing of DA has been investigated by several authors (Goto et al, 2007; Grace, 1991; Niv et al, 2007; Floresco, 2007). As natural DA phasic activity may be obscured by a general tonic increase in DA function (eg, following DA agonist administration), this duality in firing modes complicates the interpretation of many pharmacological studies, as discussed, for instance, by Beninger and Miller (1998).

There are two major classes of receptors for DA, D1 and D2. Most critically for us, these may be segregated respectively to the two different direct and indirect pathways through the striatum (Aubert et al, 2000; Gerfen, 1992, 2004; but see Aizman et al, 2000; Inase et al, 1997). To a first approximation, the direct pathway facilitates action (Go) and the indirect pathway suppresses it (No Go). Phasic release of DA increases activity in the Go pathway through stimulation of D1 receptors (Hernández-López et al, 1997), which depends on relatively larger concentrations of DA (Goto and Grace, 2005). Conversely, tonic release of DA inhibits activity in the No Go pathway by stimulating D2 receptors (Hernández-López et al, 2000), which are sensitive to much lower concentrations of DA (Creese et al, 1983).

We concentrate below on the strong links between DA, locomotor activity, RL, and the motivational effect of reinforcers (reviewed, eg, in Berridge and Robinson, 1998; Robbins and Everitt, 1992; Wise, 2008). However, we should note briefly that DA has also been implicated in many other functions. First, DA modulates several aspects of executive function (as reviewed in Robbins, 2005; Robbins and Roberts, 2007; Robbins and Arnsten, 2009; Di Pietro and Seamans, 2007) and behavioral flexibility, modulating attentional set formation and shifting (but not reversal learning) (Floresco and Magyar, 2006). Its influence over working memory (Williams and Goldman-Rakic, 1995) famously follows an inverted U-shaped function; too little as well as too much DA impairs performance. Such nonmonotonicity has been influential as a more general explanatory schema for apparently paradoxical effects, particularly as different subjects may start from different sides of the peak of such curves. There is evidence for an inverse relationship between prefrontal and nucleus accumbens DA, particularly during aversive stress (Brake et al, 2000; Tzschentke, 2001; Del Arco and Mora, 2008; Deutch, 1992, 1993; Pascucci et al, 2007; Wilkinson, 1997). DA released in the dorsolateral striatum has also been implicated in cognitive processing (eg, Darvas and Palmiter, 2009; Baunez and Robbins, 1999).

Another important role of DA is that it modulates the interactions between prefrontal and limbic systems at the level of the nucleus accumbens and the amygdala. As reviewed in Grace et al (2007) and Sesack and Grace (2010), projections from the hippocampus, amygdala, and PFC converge on single neurons in the nucleus accumbens (Callaway et al, 1991; Mulder et al, 1998; Finch, 1996; French and Totterdell, 2002, 2003). Their inputs are gated by the hippocampus or by bursts of PFC activity (O’Donnell and Grace, 1995; Gruber and O’Donnell, 2009), and are differentially modulated by accumbal DA (Charara and Grace, 2003; Brady and O’Donnell, 2004; Floresco et al, 2001). DA also modulates the control of the basolateral amygdala by the mPFC (Kröner et al, 2005; Rosenkranz and Grace, 1999, 2001, 2002; Floresco and Tse, 2007; Grace and Rosenkranz, 2002).

The Serotonergic System and Its Regulation of the Dopaminergic System

5-HT neurons are located in nuclei of the midline of the brain stem. Ascending nuclei projecting to the forebrain mainly comprise the median raphe nucleus (MRN) and dorsal raphe nucleus (DRN); the DRN projects notably to the cortex, amygdala, striatum, thalamus, PAG, and hypothalamus, whereas the MRN innervates the cortex, septal nuclei, hippocampus, and hypothalamus (Azmitia and Segal, 1978; O’Hearn and Molliver, 1984; Geyer et al, 1976). The VTA and the substantia nigra pars reticulata, the nucleus accumbens, and the ventromedial aspect of the caudate nucleus receive a dense 5-HT projection, whereas the SNc and the rest of the caudate nucleus are more sparsely innervated (Lavoie and Parent, 1990; Beart and McDonald, 1982; Hervé et al, 1987). 5-HT neurons display a slow and ‘clock-like’ firing pattern (Jacobs and Fornal, 1991, 1999), but can also exhibit phasic activation to pain (Schweimer et al, 2008). DRN neurons can even be activated by reward (Nakamura et al, 2008; Bromberg-Martin et al, 2010; see Kranz et al, 2010 for a review of the modulation of reward by 5-HT) and, more generally, respond to a large range of specific sensorimotor information (Ranade and Mainen, 2009).

There are at least 14 different receptor subtypes for 5-HT (Cooper et al, 2002; Hoyer et al, 2002), making for a highly intricate and complex range of effects. Critical for us is the substantial experimental evidence that 5-HT regulates DA release (Esposito et al, 2008; Azmitia and Segal, 1978; Beart and McDonald, 1982; Hervé et al, 1987; Parent, 1981; Geyer et al, 1976; Egerton et al, 2008; De Deurwaerdère et al, 2004; Higgins and Fletcher, 2003; Lavoie and Parent, 1990; Spoont, 1992; Harrison et al, 1997; Nedergaard et al, 1988), and we provide some detail on this to make clear how far there is to go to fit all the interactions together. The precise mechanisms by which this happens appear very diverse, and reflect the complexity of DA regulation itself, for example, reducing the bursting behavior of DA cells (Di Giovanni et al, 1999), altering the relative balance between regional DA concentrations (De Deurwaerdère and Spampinato, 1999), or modulating the projections that control DA release (Bortolozzi et al, 2005). Regulation can be tonic, or conditional on DA being activated (Leggio et al, 2009b; Lucas et al, 2001; Porras et al, 2003; De Deurwaerdère et al, 2005).

Most importantly, 5-HT displays functional tonic inhibitory control over DA, as lesioning the MRN or DRN increases the metabolism of DA in the nucleus accumbens and either reduces (MRN) or leaves unchanged (DRN) that in the PFC (Hervé et al, 1979, 1981). Indeed, 5-HT2C receptors in general tonically inhibit DA release. However, many 5-HT receptor types (5-HT1A, 5-HT2A, 5-HT3, 5-HT4) stimulate DA release (Alex and Pehek, 2007; Di Matteo et al, 2008; Higgins and Fletcher, 2003), and 5-HT generally seems to exert an excitatory influence on the VTA (Beart and McDonald, 1982; Van Bockstaele et al, 1994), including enhancing DA release in the nucleus accumbens (Guan and McBride, 1989).

The opposition between 5-HT2A and 5-HT2C seems particularly striking. Both receptor types have been shown to display constitutive activity (Berg et al, 2005; De Deurwaerdère et al, 2004; Navailles et al, 2006), and exert opposite control over the release of DA in the nucleus accumbens and striatum (Porras et al, 2002; Di Giovanni et al, 1999; De Deurwaerdère and Spampinato, 1999) and in the PFC (Millan et al, 1998; Gobert et al, 2000; Pozzi et al, 2002; Alex et al, 2005; Pehek et al, 2006). 5-HT has a critical role in modulating impulsivity, perhaps partly indirectly by modulating DA release (Dalley et al, 2002, 2008; Winstanley et al, 2004, 2006; Millan et al, 2000a). Indeed, these effects may result in the behavioral observation that 5-HT2A receptors are associated with increased impulsivity, whereas 5-HT2C activity displays the more general correlation of 5-HT with decreased impulsivity (Robinson et al, 2008; Winstanley et al, 2004; Fletcher et al, 2007). Effects of 5-HT2C appear to be mediated by receptors at the level of the origin (VTA) for PFC DA (Alex et al, 2005; Pozzi et al, 2002), the target (striatum) for dorsolateral striatal DA (Alex et al, 2005), and both for the nucleus accumbens (Navailles et al, 2008).

Unfortunately, it is not even that simple. 5-HT2C activity does not always lead to a decrease in DA; thus, constitutive activity of 5-HT2C receptors in the mPFC contributes to the increase in accumbal DA following morphine, haloperidol, or cocaine (Leggio et al, 2009a, 2009b). This may perhaps be related to the antagonism proposed by certain authors between mPFC DA and accumbal DA (Brake et al, 2000; Tzschentke, 2001; Del Arco and Mora, 2008; Deutch, 1992, 1993). 5-HT2C may exert a tonic inhibitory effect on structures involved in invigoration (De Deurwaerdère et al, 2010) in a way that need not depend on modulating DA.

Furthermore, 5-HT1B has been associated not only with decreased amphetamine-induced enhancement of responding for conditioned reward (Fletcher and Korth, 1999) and with satiety (Lee et al, 2002), but also with increased DA release (Neumaier et al, 2002; Alex and Pehek, 2007; Yan and Yan, 2001; Millan et al, 2003; Di Matteo et al, 2008) and increased amphetamine-induced locomotor hyperactivity (Przegalinski et al, 2001). Also, inhibition of innate escape responses has been linked with 5-HT1A (Deakin and Graeff, 1991; Misane et al, 1998).

Much less data exist as to whether DA exerts regulation over 5-HT release, and it is not clear whether this regulation would be excitatory or inhibitory (Ferré et al, 1994; Matsumoto et al, 1996; Ferré and Artigas, 1993; Thorré et al, 1998). This could be taken as evidence for a hierarchical arrangement between 5-HT and DA, with the former exerting its effects by manipulating the latter. In this case, such weaker influences might be expected.

Like DA, 5-HT also modulates higher cognitive functions. For instance, PFC 5-HT has been shown to be necessary for reversal learning, but not attentional set formation or shifting, as recently reviewed in Robinson et al (2007), Clarke et al (2007), Robbins (2005), Robbins and Arnsten (2009), and Di Pietro and Seamans (2007).

Complexities of Opponency

When two systems are involved in representing a single spectrum, a number of complexities arise. First, if both systems have baseline activity, then negative values could be expressed by various possible combinations of below-baseline activity of the system representing positive values and above-baseline activity of the system representing negative values. In a precise sense, there is an additional degree of freedom—the net value only constrains the difference in activation of the two systems, leaving the sum of the activations free to be used to represent another quantity.
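The extra degree of freedom can be illustrated with a toy encoding. This is a sketch under stated assumptions: the two channels are idealized as non-negative activations, and the names `encode` and `decode` are introduced here purely for illustration rather than describing any measured neural code.

```python
def encode(net_value, total):
    """Split a signed net value across two non-negative opponent channels.

    `total` is the summed activation of both channels; it is left free by
    the net value and only needs to be at least |net_value|.
    """
    if total < abs(net_value):
        raise ValueError("total activity must be at least |net_value|")
    positive = (total + net_value) / 2.0  # channel reporting positive values
    negative = (total - net_value) / 2.0  # channel reporting negative values
    return positive, negative


def decode(positive, negative):
    """Recover the net value (difference) and the free quantity (sum)."""
    return positive - negative, positive + negative


# The same net value of -1 expressed with two different summed activations.
low_sum = encode(-1.0, total=3.0)
high_sum = encode(-1.0, total=5.0)

print(low_sum, decode(*low_sum))
print(high_sum, decode(*high_sum))
```

Both encodings decode to the same net value, whereas the sum differs and so is available to carry a second quantity.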

Second, the combination of Pavlovian and instrumental, and direct and learned, effects associated with these neuromodulators can make it hard to make clear inferences about the effects of manipulations. This point is well made by Bizot and Thiébot (1996) for the case of impulsivity. For instance, as we noted above, Huys and Dayan (2009) argued that 5-HT could have the direct effect of pruning actions associated with potentially negative outcomes, by virtue of a putative role in making aversive predictions. However, this could mean that any effect of reducing 5-HT on eliminating a capacity to make normal aversive predictions, as suggested by Deakin (1983), Deakin and Graeff (1991), and Graeff et al (1998), could be overwhelmed by a concomitant decrease in pruning, and thus increase in actual negative outcomes themselves. The ubiquity of auto-receptor-based negative feedback control over the activity and release of neuromodulatory neurons (see Bonhomme and Esposito, 1998; Millan et al, 2000a) also complicates experimental analyses. The same is true of the non-monotonic inverted U-shaped curves relating release to function (as seen for DA in its modulation of working memory; Williams and Goldman-Rakic, 1995).

Third, we have argued for the case of valence that there may be circumstances under which the origin of the spectrum can be moved to take a positive or negative value. This would imply that the semantic mappings from activity to valence in the individual systems are not fixed. In particular, safety (which implies an aversive context) can be coded in the same way as a truly appetitive outcome (Mowrer, 1947, 1956; Morris, 1975; Kim et al, 2006). This could lead to apparent cooperativity between otherwise competitive opponents. Some of the key paradigms suggesting cooperation and competition are listed in Table 2.

Finally, as argued in the section ‘Behavioral Priors and Heuristics’, the two formally orthogonal spectra of action and valence are anatomically and functionally coupled. This can make it hard to interpret systemic manipulations of one or other system, as factors associated with affect and effect could masquerade as each other.

We organize our discussion around two characteristic types of effect of affective events and predictions: immediate (or proactive), involving modulating subjects’ engagement with current and future actions, and retroactive, involving reinforcing or suppressing previous actions.

Immediate Effects

DA, affect, and effect

DA in the nucleus accumbens is known to have a role in Pavlovian responding, incentive salience, appetitive PIT, and vigor (Berridge, 2007; Berridge and Robinson, 1998; Alcaro et al, 2007; Niv et al, 2007; McClure et al, 2003; Satoh et al, 2003; Dickinson et al, 2000; Lex and Hauber, 2008; Reynolds and Berridge, 2001, 2002, 2008). More generally, it is very well known that DA agonists enhance (whereas DA antagonists reduce) locomotor activity (see, eg, Beninger, 1983). This suggests that DA is responsible for the positive values along the vertical axis in Figure 1, which captures the spectrum from active engagement in choices and actions to inhibition and withdrawal. In line with this, phasic DA stimulates the Go pathway through the basal ganglia, whereas tonic DA inhibits the No Go pathway. Indeed, phasic activation of DA is directly associated with invigoration (Satoh et al, 2003) (putatively via a DA-dependent modulation consistent with appetitive PIT; Murschall and Hauber, 2006; Lex and Hauber, 2008). It has also been observed that animals act more quickly and vigorously when reward rates are higher, in a way that depends positively on (tonic) levels of DA in the striatum (Salamone and Correa, 2002). Such appetitive aspects are consistent with the model of Niv et al (2007) that optimal vigor is tied to the average rates of reward, reported by tonic levels of DA, perhaps also reflecting the integrated phasic signals. (Differences such as this point between the current model and our original account of opponency (Daw et al, 2002) are discussed in detail in the Discussion section, and also form a key component of Cools et al, 2010.)
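The link between average reward rate and vigor can be sketched numerically. The sketch rests on a simplified reading of the Niv et al (2007) account, stated here as an assumption rather than as the authors' full formulation: acting with latency tau incurs a vigor cost that falls as tau grows, plus an opportunity cost equal to the average reward rate multiplied by tau. The function names and parameter values are hypothetical.

```python
import math


def total_cost(latency, vigor_cost, avg_reward_rate):
    """Vigor cost of acting quickly plus opportunity cost of acting slowly."""
    return vigor_cost / latency + avg_reward_rate * latency


def optimal_latency(vigor_cost, avg_reward_rate):
    """Latency minimizing total_cost, obtained by setting its derivative to zero."""
    return math.sqrt(vigor_cost / avg_reward_rate)


# Hypothetical parameters: the same vigor cost under a poor and a rich reward rate.
slow = optimal_latency(vigor_cost=1.0, avg_reward_rate=1.0)
fast = optimal_latency(vigor_cost=1.0, avg_reward_rate=4.0)

print(slow, fast)
```

Under these assumptions, a fourfold increase in the average reward rate halves the optimal latency, consistent with the observation that animals act more quickly when reward rates are higher.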

However, the involvement of DA in action is not limited to appetitive contexts. Even though many DA neurons are phasically inhibited by aversive outcomes and predictors (Ungless et al, 2004; Mirenowicz and Schultz, 1996), the concentration of DA and the phasic activity of other DA neurons (notably in the mesocortical pathway) both increase in the face of aversion (Iordanova, 2009; Sorg and Kalivas, 1991; Guarraci and Kapp, 1999; Kiyatkin, 1988; Abercrombie et al, 1989; Louilot et al, 1986; Brischoux et al, 2009; Lammel et al, 2008; Matsumoto and Hikosaka, 2009). There is also evidence that active defensive aversively motivated actions are only elicitable from the nucleus accumbens under normal dopaminergic conditions (Faure et al, 2008). Following the interpretation of safety signaling given in the previous section, the benefit of cessation of punishment is a suitable reward, and as such, should also energize behavior.

From a formal viewpoint, only those punishments that are considered controllable should inspire vigor—uncontrollable punishments are not associated with the prospect of safety and should lead to quiescence (Maier and Watkins, 2005; Huys and Dayan, 2009; Cools et al, 2010). Somewhat troubling for this interpretation is that DA efflux in the mPFC (though not the nucleus accumbens; Bland et al, 2003b) is actually increased during inescapable shock (Bland et al, 2003a) (perhaps via the mesocortical pathway; Lammel et al, 2008; Brischoux et al, 2009). One possibility is that this occurs just during the initial assessment of uncontrollability. Evidence for this is that following uncontrollability, a subsequent challenge with morphine does not boost DA efflux, although it does boost 5-HT efflux (Bland et al, 2003a).

The DA signal thus becomes associated more strongly with effect than affect, in that its involvement in active actions remains even when the overall situation is aversive. If the origin of the graph in Figure 1 is moved leftward toward a negative net valence, the former origin now has a positive value—that is, the possibility and means of achieving a neutral state becomes appetitive. This amounts to acknowledging that valence is not absolute (with what is considered reward and punishment being largely dependent on the current baseline), and restores the congruency between action and (apparent) reward.

5-HT and inhibition

The opposite end of the spectrum from invigoration in Figure 1 is inhibition. Consistent with one form of opponency, there are extensive findings on the role of 5-HT in this (Spoont, 1992; Gray and McNaughton, 2003; Soubrié, 1986). This involvement has been especially well documented in aversive situations, where 5-HT inhibits innate responses to fear in the face of imminent threat as well as responses unrelated to escape in the face of distal threat (Deakin and Graeff, 1991; Graeff, 2004). Via its effects on behavioral inhibition, 5-HT has also been hypothesized to mediate optimistic evaluation (Dayan and Huys, 2008), and thereby be implicated in depressive realism (Alloy and Abramson, 1988; Keller et al, 2002) or worse (Carson et al, 2010).

Besides their typical slow ‘clock-like’ firing (Jacobs and Fornal, 1991, 1999), 5-HT neurons can also exhibit phasic activation to pain (Schweimer et al, 2008). Although it is not clear if such phasic activation is associated with momentary inhibition in the rather direct way that phasic DA is with invigoration, experiments such as those of Shidara and Richmond (2004) and Hikosaka (2007) certainly provide inspiration for some such mechanisms.

There is ample evidence for the role of 5-HT in mediating inaction following uncontrollable punishment (Maier and Watkins, 2005). 5-HT release is increased during stress in the mPFC and amygdala (Kawahara et al, 1993; Yoshioka et al, 1995; Hashimoto et al, 1999), and inescapable shock activates subpopulations of serotonergic neurons in all raphe nuclei in the rat (Takase et al, 2004). Uncontrollability potentiates the stress-induced increase in the release of 5-HT in the mPFC and nucleus accumbens shell (Bland et al, 2003a, 2003b). Descending connections from the mPFC to the DRN (Peyron et al, 1998; Baratta et al, 2009), which predominantly synapse onto inhibitory cells, inhibit this uncontrollability response (Amat et al, 2005, 2008) when subjects have previous experience with behavioral control over stress. This also blocks the behavioral effects of later uncontrollable stress (Amat et al, 2006), allowing normal invigoration.

On the other hand, 5-HT has also been linked with inhibition in non-aversive contexts. Some cases of inhibition, including correct No Go responding in appetitive settings (Fletcher, 1993; Harrison et al, 1997, 1999), can be viewed as the mirror images of DA's involvement in safety and active avoidance—with, for instance, the net quiescence needed to avoid impulsive responding mirroring the net vigor needed to reach safety (Cools et al, 2010). This preserves the congruence of inhibition with aversion, but does not accommodate other forms of inhibition associated with 5-HT, such as its involvement in the cessation of feeding after satiety (Gruninger et al, 2007), or other issues that may have to do with learning, such as the latent inhibition of conditioning to stimuli previously associated with an absence of affective outcome (Weiner, 1990) and extinction (Beninger and Phillips, 1979).

According to the formal model of opponency, one route by which 5-HT could inspire inhibition is the suppression of activation, for instance, via the suppression of DA. This could be a form of the hierarchical opponency mentioned above, with DA mediating its effects directly, but with 5-HT mediating its affective effects by acting on DA, potentially reassigning behavior away from currently motivated responding, either in appetitive (Sasaki-Adams and Kelley, 2001) or aversive (Archer, 1982) contexts.

Complexities

It has been argued (Frank, 2005; Frank and Claus, 2006; Cohen and Frank, 2009) that dips below baseline in DA activity will exert a particular effect over DA D2 receptors, and thus the indirect or No Go pathway, because D2 receptors have a relatively greater affinity for DA than D1 receptors (Creese et al, 1983; Surmeier et al, 2007). Such dips have directly been observed in electrophysiological recordings, and constitute some of the strongest evidence that DA reports the TD prediction error (Pan et al, 2005; Roesch et al, 2007; Schultz et al, 1997; Morris et al, 2006; Bayer et al, 2007). In this model, the indirect pathway suppresses responses that compete with the favored choice. However, it is not clear if this is a general mechanism for inhibition, having particular difficulty, for instance, if it is necessary to wait and inhibit actions in order to get a reward (as in differential reinforcement of low rates of responding). It is also not clear whether the more general sort of response inhibition that appears to be realized by the subthalamic nucleus and that has been associated with competition among different appetitive responses (Frank, 2006; Frank et al, 2007b; Bogacz and Gurney, 2007) can be adaptively harnessed to prevent responding altogether.

Along with coarse inhibitory control, such as reduction of vigor by effective inhibition of tonic DA, or, in satiation, decreasing sensitivity to food rewards (Simansky, 1996), the complex array of effects of different 5-HT receptors on DA could allow for a range of quite subtle effects, perhaps mediating forms of previous bias such as that noted in the section ‘Behavioral Priors and Heuristics’, with the prospect of punishment acting as a motivational state, devaluing apparent rewards not associated with avoidance or escape, or allowing broader exploration by mediating increased temperature in a softmax model of choice (Sutton and Barto, 1998). This would link 5-HT with the reward sensitivity dimension of impulsivity identified by Franken and Muris (2006).

Learned Effects

As mentioned, repetitive pairing of actions such as pressing a lever and outcomes such as rewards or punishments can lead to both stimulus-response (habit) and action-outcome and stimulus-action-outcome (goal-directed) association learning. We examine the roles of DA and 5-HT in both cases.

Appetitively motivated learning

In terms of appetitive learning, substantial experimental evidence suggests that the phasic activation of DA neurons in the VTA and SNc is consistent with its reporting the TD prediction error for reward (Barto, 1995; Montague et al, 1996; Schultz et al, 1997). This would be an ideal substrate for learning appropriate and inappropriate actions (Wise, 2008; Palmiter, 2008; Frank, 2005; Suri and Schultz, 1999; Montague et al, 2004). This proposition is consistent with overwhelming behavioral evidence showing that restricting DA function often has the same effect as not delivering the reinforcer (in a way that cannot be explained by mere decrease in performance ability, as reviewed in Wise, 2004, 2008), and is further bolstered by the effects on appetitive learning of pharmacological manipulations of DA in normal volunteers (Pessiglione et al, 2006), and also in Parkinson's patients on and off DA-boosting medication (Frank et al, 2004). One prominent suggestion is that phasic bursts of activity in DA neurons act via D1 receptors and the direct pathway in the striatum to boost actions leading to unexpectedly large rewards (Frank, 2005; Frank and Claus, 2006; Frank et al, 2007a). Indeed, recent studies with genetically engineered mice that lack DA (reviewed in Palmiter, 2008) have pinpointed restoration of DA release in the dorsolateral striatum as sufficient for learning reinforced responses.
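For readers less familiar with the TD prediction error, a minimal sketch may help. It assumes the simplest possible setting, a single cue followed by a terminal reward, and the learning rate, trial count, and reward magnitudes are hypothetical values chosen for illustration rather than fitted to any of the recordings cited above.

```python
def td_error(reward, value_next, value_current, discount=1.0):
    """TD prediction error: reward + discount * V(next) - V(current)."""
    return reward + discount * value_next - value_current


def train_cue_value(n_trials=200, reward=1.0, learning_rate=0.1):
    """Learn the value of a cue that is always followed by a terminal reward."""
    value_cue = 0.0
    for _ in range(n_trials):
        # The state after the reward is terminal, so its value is zero.
        delta = td_error(reward, 0.0, value_cue)
        value_cue += learning_rate * delta
    return value_cue


value_cue = train_cue_value()

delta_expected = td_error(1.0, 0.0, value_cue)  # fully predicted reward
delta_omitted = td_error(0.0, 0.0, value_cue)   # predicted reward withheld
delta_larger = td_error(2.0, 0.0, value_cue)    # reward larger than predicted

print(value_cue, delta_expected, delta_omitted, delta_larger)
```

After learning, a fully predicted reward produces an error near zero, an omitted reward produces a negative error, and an unexpectedly large reward produces a positive error, which is the sign pattern that the phasic DA signal is proposed to report.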

It is not clear from these results whether learning of actions (as opposed to performing them; Wise, 2004, 2008) by the goal-directed system also has a mandatory dependence on DA. Several paradigms provide elegant approaches to this question by enforcing different dopaminergic conditions during training and testing. Noting the reasonable assumption that aspects of allocentric spatial behavior are associated with non-habitual control (White and McDonald, 2002; Doeller and Burgess, 2008), it is known that the genetically engineered mice that do not produce DA can learn an appetitive T-maze (Robinson et al, 2005), as well as conditioned place preference for morphine (Hnasko et al, 2005) and cocaine (Hnasko et al, 2007) (which, interestingly, appears to be mediated by 5-HT in mutant, but not control, mice), provided DA function is restored during testing. However, in an operant lever pressing task, experimental results are mixed, in that the mice do not appear to have learned the association, but do seem to learn faster than mice that have not been exposed, once DA is restored (Robinson et al, 2007). In cases of cognitively more taxing tasks, DA is needed (Darvas and Palmiter, 2009), although it is not yet clear what this indicates about the interaction between goal-directed and habitual control.

Aversively motivated learning

It has also long been known that DA mediates the acquisition of instrumental, active avoidance (Go) responses to aversive stimuli (Beninger, 1989), and DA may be necessary to acquire Pavlovian startle potentiation to aversive stimuli (Fadok et al, 2009). As seen above in the case of drive effects, this associates DA more tightly with the action than with the valence dimension. Again, the congruence between reward and action can be restored by viewing safety as a reward (ie, moving the origin of the graph in Figure 1 leftward), which is then again coded by enhanced DA activity. Modeling safety thus leads to correct prediction of observed avoidance behavior (Johnson et al, 2001; Moutoussis et al, 2008; Maia, 2010).
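
The idea that safety can act as a reward once punishment is expected can be sketched with a toy actor-critic learner. This is an illustrative assumption on our part, not the model of any of the papers cited above: a single warning cue, two hypothetical actions (`go` escapes to a neutral outcome, `nogo` leads to shock), and arbitrary values for the learning rate and shock magnitude.

```python
import math
import random


def simulate_avoidance(n_trials=500, alpha=0.1, shock=-1.0, seed=0):
    """Toy actor-critic for one warning cue with actions 'go' and 'nogo'.

    'go' (escape) leads to a neutral outcome (0.0); 'nogo' leads to shock.
    All parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    v_cue = 0.0                      # critic: predicted outcome after the cue
    pref = {"go": 0.0, "nogo": 0.0}  # actor: action propensities
    deltas_go = []
    for _ in range(n_trials):
        # Softmax choice between the two actions.
        p_go = 1.0 / (1.0 + math.exp(pref["nogo"] - pref["go"]))
        action = "go" if rng.random() < p_go else "nogo"
        outcome = 0.0 if action == "go" else shock
        # Prediction error measured from the (possibly negative) expectation.
        delta = outcome - v_cue
        v_cue += alpha * delta
        pref[action] += alpha * delta
        if action == "go":
            deltas_go.append(delta)
    return v_cue, pref, deltas_go
```

Because the critic's expectation for the cue is never positive in this sketch, a neutral outcome after `go` produces a non-negative prediction error, so the escape action is reinforced in the same way as a rewarded action would be.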

There is some evidence for preserved avoidance learning by the goal-directed system in the absence of normal DA. Rats under neuroleptics fail to learn an active avoidance escape response, yet slowly develop the response when tested drug-free in extinction (Beninger et al, 1980a; Beninger, 1989). Thus, DA is necessary for learning the active escape, but not for learning about the aversive value of the cue. It therefore seems unlikely that learning negative predictions from actual punishments (ie, in the lower left quadrant of Figure 1) is mediated only, if at all, by dips in tonic DA. This raises the question as to the representation of the phasic TD prediction error associated with the delivery of more punishment than expected, that is, leftward excursions from the origin in its original position in Figure 1.

From the perspective of opponency, we would expect this to involve the phasic activity of 5-HT neurons. Indeed, as we have noted, these are activated in aversive contexts. This is clear from cellular imaging data on the activation of selected groups of 5-HT neurons under conditions of shocks and inescapable stress (Lowry, 2002; Takase et al, 2004), and direct neurophysiological evidence of the same thing from provably serotonergic neurons (Schweimer et al, 2008). Unfortunately, there is far less information about the correlates of phasic 5-HT activity than for phasic DA activity, and indeed newer methods for measuring and manipulating 5-HT in a far more selective manner are needed; we discuss some possibilities later.

Various experimental findings appear to rule out a critical involvement of 5-HT in at least some forms of aversive learning: decreasing 5-HT function seems to facilitate (and increasing 5-HT function to impair) active avoidance learning (eg, Archer, 1982; Archer et al, 1982, and see Beninger, 1989). However, it is not completely clear how lesions, depletion, or even dietary manipulation of 5-HT affect phasic signaling. All these manipulations primarily control tonic levels instead, leaving open the possibility that indirect action via autoreceptors and adaptation of receptor sensitivities could have an opposite effect. Again, it is important to distinguish effects associated with performance from those associated with learning.

It is known, though, that genetically engineered mice that lack central 5-HT display enhanced contextual fear learning (Dai et al, 2008). This form of learning, via the hippocampus, may be more closely associated with the goal-directed than the habitual system. Nevertheless, it shows that 5-HT is unlikely to have a mandatory role in all types of fear learning.

The characterization of particular groups of dopaminergic or putatively dopaminergic neurons that respond to phasic punishment (Brischoux et al, 2009; Lammel et al, 2008; Matsumoto and Hikosaka, 2009) raises the intriguing possibility that a separate DA projection may have the role of the phasic prediction error for punishment, in the same way that we discussed above for vigor. Against this, though, is the observation we noted above that DA does not seem to be mandatory for learning predictions of punishment, but only for turning those predictions into appropriate avoidance actions. Of course, the caveat mentioned above about the need for a better understanding of the effect of experimental manipulations over phasic signaling also applies here.

Also, as mentioned for the case of vigor, it is notable that, in the rat at least, the neurons concerned project to regions likely to be associated with goal-directed control (Brischoux et al, 2009; Lammel et al, 2008). This might, perhaps, be involved in the assessment of the possibility of safety inherent in the leftward movement of the origin in Figure 1, which is in turn related to the goal-directed notions of controllability that we discussed above (Huys and Dayan, 2009; Maier and Watkins, 2005).

Learning to suppress

There are contexts where the appropriate response is to withhold action, for example, to avoid electrical shocks triggered by lever presses. As we mentioned, it is not completely obvious what role phasic dips below baseline of DA activity following non-delivery of expected reward (Pan et al, 2005; Roesch et al, 2007; Schultz et al, 1997; Morris et al, 2006; Bayer et al, 2007) have in response inhibition. In comparison, the role of these dips in learning not to emit incorrect responses, so as to avoid losing expected rewards, is rather clearer. There is direct evidence from Parkinson's disease (Frank et al, 2004) and genetic studies (Frank et al, 2007a; Frank and Hutchison, 2009) that the D2 receptors believed to be sensitive to these dips (Frank and Claus, 2006; Cohen and Frank, 2009) are involved in this learning. In terms of Figure 1, expectation of future reward is encoded as a movement rightward of the origin of the graph, so that the formerly neutral zone is now in negative territory; the dips in DA then capture the loss of the expected reward in case of a neutral outcome.
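
The shift of the origin and its consequence for learning to withhold can be sketched as follows. This is a deliberately simplified cartoon, not the published models of Frank and colleagues: the update rule, the function names, and the choice to hold the reward expectation fixed across trials are all assumptions made for illustration.

```python
def prediction_error(outcome, expected):
    """Outcome measured relative to the current expectation (the shifted origin)."""
    return outcome - expected


def update_go_nogo(go, nogo, delta, alpha=0.1):
    """Hypothetical rule: bursts (delta > 0) strengthen Go, dips (delta < 0) strengthen NoGo."""
    if delta > 0:
        go += alpha * delta
    elif delta < 0:
        nogo += alpha * -delta
    return go, nogo


def train_withholding(n_trials=50, expected_reward=1.0, alpha=0.1):
    """Repeatedly emit an incorrect response that forfeits an expected reward."""
    go, nogo = 0.0, 0.0
    for _ in range(n_trials):
        # Neutral outcome (0.0) where a reward was expected: a negative error.
        delta = prediction_error(0.0, expected_reward)
        go, nogo = update_go_nogo(go, nogo, delta, alpha)
    return go, nogo
```

With a reward expected, a neutral outcome yields a negative error, so in this sketch only the NoGo weight for the incorrect response grows, while its Go weight is left unchanged.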

5-HT and Disengagement

DA is not only subject to a potentially hierarchical influence from 5-HT, it is also affected by NE (Millan et al, 2000b; Nurse et al, 1984; Guiard et al, 2008a, 2008b; Villegier et al, 2003). This exhibits a similar pattern of net inhibition at the level of the VTA (Guiard et al, 2008b) coupled with a potential for targeted excitation (Auclair et al, 2004). However, the effect of NE is mostly in the opposite direction, that is, toward ergotropism, and active arousal, seeking, and energy expenditure, rather than trophotropism and replenishment (Ellison and Bresler, 1974; Villegier et al, 2003). Remembering the very original suggestions (Brodie and Shore, 1957), and the fact that there are many 5-HT pathways other than those partnering DA, it is intriguing to consider whether there could indeed be opponency between 5-HT and NE (Ellison and Bresler, 1974; Everitt and Robbins, 1991), as well as between 5-HT and DA. Recent experiments showing that NE and 5-HT control DA release, while inhibiting each other (Auclair et al, 2004; Tassin, 2008), might add evidence to early suggestions of a competition between NE and 5-HT for behavioral control, in an opponency paralleling that between sympathetic and parasympathetic nervous systems (Ellison, 1979).

Hints as to the form of this additional opponency come in data associated with the idea that 5-HT is involved in withdrawal or disengagement from the environment, even in the absence of evident threats or reinforcing benefits of No Go (Ellison, 1979; Beninger, 1989; Tops et al, 2009). For instance, whereas NE is associated with arousal, exploration, and the active processing of salient and action-relevant stimuli (Bouret and Sara, 2005; Dayan and Yu, 2006; Aston-Jones and Cohen, 2005), 5-HT is involved in disengagement from sensory stimuli (Beninger, 1989; Handley and McBlane, 1991), for example, because they have been associated with neutral outcomes, as in latent inhibition paradigms (Weiner, 1990), or are no longer associated with affective outcomes, as in extinction (Beninger and Phillips, 1979). Its involvement in fatigue (Newsholme et al, 1987; Meeusen et al, 2007) and satiation (Gruninger et al, 2007) may be related to this too.

DISCUSSION

Fathoming how the processing of reward and the processing of punishment are integrated to produce appropriate, and approximately appropriate, behavior is critical for understanding healthy and diseased decision making. Perhaps because punishment and its prospect have such critical roles in sculpting choices, they are embedded deeply, and thus obscurely, in the architectural fabrics concerned. Punishment and threat also enjoy very substantial Pavlovian components, whose logic is only slowly becoming less murky.

It might seem self-evident that reward and punishment are functional opponents, implying that the neural systems involved in processing them should be similarly antagonistic. However, we argued that computational and algorithmic adaptations to expectations about the prior structure of the environment make for substantial complexities in the relationship between the processing of punishment and reward. These collectively give rise to a mix of competitive, cooperative, and interactive associations between these opposing affective facets of the world. We suggested (Figure 1) that it is important to take particular account of an axis associated with invigoration and inhibition along with the one associated with valence, and furthermore that the origin of this graph can move leftward or rightward according to expectations of punishment or reward.

Extending, and sometimes contradicting, Deakin (1983), Deakin and Graeff (1991), and Daw et al (2002), we considered the role played by DA and 5-HT in the aspects of this that are predominantly associated with Pavlovian and habitual control. DA appears to be responsible for one quadrant of the resulting graph in a rather uncomplicated manner, but dynamic interactions in opponency associated with movement of the origin result in its also being responsible for effects in other quadrants. Most notably, active avoidance seems to be coded in a locally appetitive manner, as in safety signaling. We considered this as an algorithmic by-product of asymmetries in the effect of reward and punishment, making reinforcement of successful escape actions more parsimonious than inhibition of all actions that do not terminate punishment.

The least complicated association of 5-HT appears to be with behavioral inhibition (Soubrié, 1986), that is, the negative component of the action axis. However, we argued that it could also have a critical role in the analogous affective axis. There are suggestions that dips below baseline in the DA signal can report on the disbenefits of poor actions in the face of expected reward, and 5-HT could also mediate some aspects of action learning by modulating those dips. However, it does not appear crucial for all aspects of avoidance response learning (as opposed to fear learning). We also mentioned the notion that there might be opponency between 5-HT (associated with disengagement; Tops et al, 2009) and NE to partner that between 5-HT and DA.

This analysis, and indeed also that of Cools et al (2010), amounts to a significant evolution of our original model (Daw et al, 2002) of DA and 5-HT opponency, which focused almost exclusively on valence, ignoring Pavlovian effects, asymmetries coming from prior distributions over environments, and the functional and anatomical association between action and valence. The most obvious contradiction is the role of tonic levels of DA and 5-HT, which we previously suggested as reporting average levels of punishment and reward, respectively. Here, we have adapted Niv et al (2007) to suggest that tonic DA, at least, reports the average levels of rewards and controllable punishments as an opportunity cost for the passage of time leading to vigor. Huys and Dayan (2009) and Cools et al (2010) suggest, mutatis mutandis, that tonic 5-HT reports average levels of punishments as an opportunity benefit for the passage of time, leading to quiescence.
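
The notion of an opportunity cost for the passage of time can be made concrete with a simplified sketch in the spirit of Niv et al (2007). We assume, purely for illustration, that acting with latency tau incurs a vigor cost `vigor_cost / tau` and forgoes `average_rate * tau` in average reinforcement; the function and parameter names are hypothetical. Under this assumed cost, the minimizing latency is the square root of `vigor_cost / average_rate`.

```python
import math


def total_cost(tau, vigor_cost, average_rate):
    """Assumed cost of acting with latency tau: vigor cost plus opportunity cost."""
    return vigor_cost / tau + average_rate * tau


def optimal_latency(vigor_cost, average_rate):
    """Latency minimizing vigor_cost / tau + average_rate * tau."""
    if vigor_cost <= 0 or average_rate <= 0:
        raise ValueError("vigor_cost and average_rate must be positive")
    return math.sqrt(vigor_cost / average_rate)
```

In this sketch a higher average rate of reinforcement, the quantity that tonic DA is suggested to report, makes sloth more expensive and so shortens the preferred latency, that is, it increases vigor.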