Abstract

The dopamine system is thought to be involved in making decisions about reward. Here we recorded from the ventral tegmental area in rats learning to choose between differently delayed and sized rewards. As expected, the activity of many putative dopamine neurons reflected reward prediction errors, changing when the value of the reward increased or decreased unexpectedly. During learning, neural responses to reward in these neurons waned and responses to cues that predicted reward emerged. Notably, this cue-evoked activity varied with size and delay. Moreover, when rats were given a choice between two differently valued outcomes, the activity of the neurons initially reflected the more valuable option, even when it was not subsequently selected.
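The learning pattern described above (reward responses waning while cue responses emerge, with cue responses scaling with reward size and delay) is the qualitative signature of a temporal-difference prediction error. The following is a minimal illustrative sketch, not the analysis used in this study: the function name `simulate_td`, the tabular TD(0) update, the exponential discount factor `gamma`, and all parameter values are assumptions chosen for illustration.

```python
def simulate_td(n_trials=200, n_steps=5, reward=1.0, alpha=0.2, gamma=0.9):
    """Tabular TD(0) over a cue -> delay -> reward trial, repeated n_trials times.

    Hypothetical toy model: n_steps is the cue-to-reward delay in time steps,
    alpha the learning rate, gamma an assumed exponential discount factor.
    Returns a list of (cue_error, reward_error) tuples, one per trial.
    """
    # values[t]: predicted discounted future reward t steps after cue onset
    values = [0.0] * n_steps
    history = []
    for _ in range(n_trials):
        # The cue arrives unpredictably, so the pre-cue prediction is taken
        # as 0 and the cue-evoked error is the discounted value it reveals.
        cue_error = gamma * values[0] - 0.0
        reward_error = 0.0
        for t in range(n_steps):
            last = t == n_steps - 1
            r = reward if last else 0.0                 # reward only at the final step
            next_value = 0.0 if last else values[t + 1]
            delta = r + gamma * next_value - values[t]  # prediction error
            values[t] += alpha * delta
            if last:
                reward_error = delta
        history.append((cue_error, reward_error))
    return history


if __name__ == "__main__":
    history = simulate_td()
    print("first trial (cue, reward):", history[0])
    print("last trial  (cue, reward):", history[-1])
```

On the first trial the error occurs entirely at reward delivery; with training it shrinks there and grows at the cue. Under these assumptions the learned cue error is larger for bigger rewards (`reward`) and smaller for longer delays (`n_steps`), loosely mirroring the size and delay dependence reported for cue-evoked activity.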

Acknowledgements

We thank Y. Niv, P. Shepard, G. Morris and W. Schultz for thoughtful comments on this manuscript, and S. Warrenburg at International Flavors and Fragrances for his assistance in obtaining odor compounds. This work was supported by grants from the US National Institute on Drug Abuse (R01-DA015718, G.S.; K01-DA021609, M.R.R.), the National Institute of Mental Health (F31-MH080514, D.J.C.), the National Institute on Aging (R01-AG027097, G.S.) and the National Institute of Neurological Disorders and Stroke (T32-NS07375, M.R.R.).

Author information

Contributions

M.R.R., D.J.C. and G.S. conceived the experiments. M.R.R. and D.J.C. carried out the recording work and assisted with electrode construction, surgeries and histology. The data were analyzed by M.R.R. and G.S., who also wrote the manuscript with assistance from D.J.C.

Supplementary information

Supplementary Text and Figures

Supplementary Figures 1–6 and Data (PDF 2158 kb)

About this article

Cite this article

Roesch, M., Calu, D. & Schoenbaum, G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci 10, 1615–1624 (2007). https://doi.org/10.1038/nn2013

DOI: https://doi.org/10.1038/nn2013

This article is cited by

-

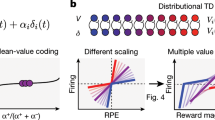

Dopamine transients follow a striatal gradient of reward time horizons

Nature Neuroscience (2024)

-

Striatal dopamine signals reflect perceived cue–action–outcome associations in mice

Nature Neuroscience (2024)

-

Dopamine D2 receptors in nucleus accumbens cholinergic interneurons increase impulsive choice

Neuropsychopharmacology (2023)

-

Brainstem networks construct threat probability and prediction error from neuronal building blocks

Nature Communications (2022)

-

A gradual temporal shift of dopamine responses mirrors the progression of temporal difference error in machine learning

Nature Neuroscience (2022)