Abstract

Purpose:

To determine the individual and combined effects of a simplified form and a review/retest intervention on biobanking consent comprehension.

Methods:

We conducted a national online survey in which participants were randomized within four educational strata to review a simplified or traditional consent form. Participants then completed a comprehension quiz; for each item answered incorrectly, they reviewed the corresponding consent form section and answered another quiz item on that topic.

Results:

Consistent with our first hypothesis, comprehension among those who received the simplified form was not inferior to that among those who received the traditional form. Contrary to expectations, receipt of the simplified form did not result in significantly better comprehension compared with the traditional form among those in the lowest educational group. The review/retest procedure significantly improved quiz scores in every combination of consent form and education level. Although improved, comprehension remained a challenge in the lowest-education group. Higher quiz scores were significantly associated with willingness to participate.

Conclusion:

Ensuring consent comprehension remains a challenge, but simplified forms have virtues independent of their impact on understanding. A review/retest intervention may have a significant effect, but assessing comprehension raises complex questions about setting thresholds for understanding and consequences of not meeting them.

Genet Med advance online publication 13 October 2016

Introduction

In recent years, biospecimen research has frequently been in the national spotlight. In some instances, the attention has been positive, such as for scientific discoveries leading to new targeted approaches to detecting and treating cancer and other serious health conditions1,2 and catalyzing large-scale endeavors such as the Precision Medicine Initiative.3,4 In other instances, the spotlight has illuminated a range of concerns, sparking public debate about the ethical use of biospecimens.5,6,7 Partly in response to these concerns, proposed changes to US federal regulations for the protection of human subjects focus heavily on biospecimen research—including new requirements that informed consent for research use must be obtained for nearly all biospecimens, regardless of whether they are originally collected for research, clinical, or other purpose.8,9 To facilitate this massive undertaking, the proposed rules would permit broad consent for future unspecified research and allow widespread use of a consent template to be promulgated by the Secretary of Health and Human Services.10

Informed consent itself, however, is beset with challenges.11,12 Decades of research have amply documented that consent forms are too long and complex and that participants do not understand key aspects of the information disclosed.6 Interventions to improve consent comprehension seem to have met with only minimal success due, in part, to limitations in the available evidence base, including lack of statistical power, nonrandomized designs, poor generalizability, and questionable methods for assessing comprehension.13,14,15,16,17

To help inform the development of ethical approaches to informed consent for biobanking, we conducted a national randomized survey to determine the effect of a simplified consent form on biobanking consent comprehension. Both the consent form and the comprehension measure were empirically derived. We hypothesized that (i) among all survey respondents, comprehension among those given the simplified form would be no worse than that among those given a traditional form (noninferiority of the simplified form) and that (ii) among the subset of respondents with the lowest educational attainment, comprehension would be better with the simplified form than with a traditional form (superiority of the simplified form). We further examined the effect of a review/retest intervention on comprehension and whether the effect differed by consent form and/or education level.

Materials and Methods

We conducted a between-subjects factorial experiment in which participants were randomized to receive either a simplified or a traditional consent form within four educational strata: less than high school diploma, high school diploma/general equivalency diploma, some college, and bachelor’s degree or higher.

Sample

Participants were recruited from GfK’s KnowledgePanel, an online research panel constructed through address-based probability sampling to statistically represent the US population. GfK provides computer and Internet access, free of charge, to panelists who do not already have them. Panelists eligible for this study were at least 25 years old, able to read English, had not participated in medical research in the past year, and had visited a doctor within the previous 5 years. Assuming α = 0.05 and 90% power, our study design and hypothesis tests required 1,848 participants.

Procedures

Online data collection took place between December 2014 and January 2015. After completing eligibility questions and an electronic consent form, participants were randomly assigned to review either a simplified or a traditional consent form for a hypothetical biobank. The simplified form (Supplementary Appendix A online) was based on earlier research by the authors18,19,20 and designed to address succinctly the consent elements required by federal regulations and best-practice guidelines.21,22,23 The traditional form (Supplementary Appendix B online) was constructed to contain the same information but with a level of detail and complexity similar to that found in actual consent forms used by major US biobanks. Supplementary Appendix C online contains the readability characteristics of the forms.

The introduction to the survey informed participants that they would receive a biobanking consent form to read, after which there would be a short quiz. Participants were asked to read the form carefully (“as though you were actually deciding whether or not to take part in a biobank”) and were told that the purpose of the quiz was to “make sure the consent form did a good job explaining what people need to know about a biobank.” Participants were allowed as much time as desired to read the consent form to which they were randomized. They were then asked to complete a 21-item quiz (see Comprehension Measure). Upon starting the quiz, participants were not allowed to go back to the consent form or to a previous quiz question. After completing the quiz, for each item answered incorrectly, participants were shown the corresponding consent form section (“review”) and then presented with an alternate quiz item on that same topic (“retest”). Participants were told whether they answered the retested item correctly. Finally, participants answered general questions about the amount of information provided in the consent form, the merit of taking a quiz to assess understanding, and their hypothetical willingness to participate in a biobank.

The Duke University Health System IRB deemed this study exempt under 45 CFR 46.101(b)(2).

Comprehension measure

We developed the quiz based on the results of a systematic Delphi process to identify the consent information that prospective biobank participants must grasp to give valid consent.24 The premise of this process was that, although certain information may be deemed important for disclosure in consent forms, comprehensive understanding of every aspect of it is not necessary to provide valid consent.25,26 Delphi panelists achieved consensus on 16 statements of minimum necessary understanding, from which we created a 21-item true/false quiz (Supplementary Appendix D online). (Several of the Delphi statements were compound and thus required more than one quiz item to address the different components.) For each item, we created two versions (an “A” and a “B” version) to avoid presenting exactly the same item during retesting. We conducted more than 60 cognitive interviews27 across multiple rounds to assess whether people understood the quiz items as intended and whether the items aligned well with the information in both the simplified and traditional consent forms.

On the survey, all participants initially received the A version of the quiz items, with the B versions used only for retesting when needed. Because the quiz was explicitly developed to represent the minimum necessary understanding to provide valid consent, a perfect score of 21 was needed to demonstrate adequate comprehension.
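The quiz flow described above (A versions first, then review and a B-version retest only for missed topics) can be sketched as follows. This is a minimal illustration under stated assumptions, not the survey's actual implementation: the names `run_quiz`, `answer_fn`, `review_fn`, and `adequate`, and the data layout, are hypothetical.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical layout: each topic maps to an (A-version, B-version) pair,
# and each version is (statement_text, correct_answer).
Item = Tuple[str, bool]


def run_quiz(
    items: Dict[str, Tuple[Item, Item]],
    answer_fn: Callable[[str], Optional[bool]],
    review_fn: Callable[[str], None] = lambda topic: None,
) -> Tuple[int, int]:
    """Return (initial_score, final_score) for one participant.

    answer_fn returns True, False, or None ("Don't know"); None never
    matches the correct answer, so it is scored as incorrect.
    """
    # First pass: everyone answers the A version of every item.
    missed = [
        topic
        for topic, (a_item, _) in items.items()
        if answer_fn(a_item[0]) != a_item[1]
    ]
    initial_score = len(items) - len(missed)

    # Review/retest: for missed topics only, show the section, then ask B.
    recovered = 0
    for topic in missed:
        review_fn(topic)
        b_text, b_correct = items[topic][1]
        if answer_fn(b_text) == b_correct:
            recovered += 1

    return initial_score, initial_score + recovered


def adequate(final_score: int, n_items: int = 21) -> bool:
    # The study treats only a perfect score as adequate comprehension.
    return final_score == n_items
```

Under this sketch, a participant's final score is the initial score plus the number of retested items answered correctly, which matches how the results distinguish initial from final quiz scores.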

Statistical analysis

Weighting. Survey results were weighted to approximate the US population of English-speaking adults with respect to sex, age, race/ethnicity, education, and geographic region. Weighting methodology included two steps. First, design weights were calculated to reflect the selection probabilities for the starting sample. Second, the design weights were adjusted simultaneously (raked) along several geodemographic dimensions to account for nonresponse. All statistical analyses, except for the description of participant characteristics found in Table 1, were adjusted for the complex survey sampling design with stratification and weighting.
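For readers unfamiliar with raking, the second weighting step can be illustrated with a generic iterative proportional fitting routine. This is a sketch of the general technique only; it is not GfK's procedure, and the function name `rake`, the input layout, and the convergence settings are assumptions made for illustration.

```python
from typing import Dict, List


def rake(
    rows: List[Dict[str, str]],
    design_weights: List[float],
    targets: Dict[str, Dict[str, float]],
    max_iter: int = 100,
    tol: float = 1e-8,
) -> List[float]:
    """Adjust design weights so weighted category shares match targets.

    rows: one dict per respondent, e.g. {"sex": "F", "educ": "lo"}.
    targets: population shares per dimension, each summing to 1.
    Assumes every target category has at least one respondent.
    """
    weights = list(design_weights)
    for _ in range(max_iter):
        max_change = 0.0
        for dim, shares in targets.items():
            total = sum(weights)
            # Current weighted total in each category of this dimension.
            current = {cat: 0.0 for cat in shares}
            for row, w in zip(rows, weights):
                current[row[dim]] += w
            # Scale each respondent so this margin matches its target.
            for i, row in enumerate(rows):
                factor = shares[row[dim]] * total / current[row[dim]]
                max_change = max(max_change, abs(factor - 1.0))
                weights[i] *= factor
        # Stop once a full pass over all dimensions changes nothing.
        if max_change < tol:
            break
    return weights
```

Each pass rescales the weights one dimension at a time; repeating the passes until the adjustment factors are all close to 1 brings every margin into agreement with its target at once.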

Cleaning. Participants identified as “speeders” (those who completed the entire survey in less than 25% of the median time) or “refusers” (participants who refused at least half of the six non-quiz survey questions) were dropped from our final analysis.
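The two exclusion rules can be expressed as a short filter. The sketch below is illustrative only: the function name `flag_for_removal` and the input layout are hypothetical, a refused question is represented as `None`, and the median is assumed to be taken over all completed surveys.

```python
from statistics import median
from typing import List, Optional, Sequence


def flag_for_removal(
    durations: Sequence[float],
    nonquiz_answers: Sequence[Sequence[Optional[str]]],
    speed_fraction: float = 0.25,
    refusal_fraction: float = 0.5,
) -> List[bool]:
    """Return True for each participant who is a speeder or a refuser."""
    # Speeders finished in less than 25% of the median completion time.
    cutoff = speed_fraction * median(durations)
    flags = []
    for seconds, answers in zip(durations, nonquiz_answers):
        speeder = seconds < cutoff
        # Refusers declined at least half of the non-quiz questions.
        refused = sum(a is None for a in answers)
        refuser = refused >= refusal_fraction * len(answers)
        flags.append(speeder or refuser)
    return flags
```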

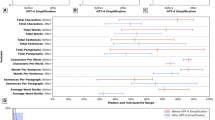

Statistical methods. In addition to descriptive statistics, we examined differences between participants who received the simplified versus traditional consent form. This included differences in initial levels of understanding, measured by the number of quiz items answered correctly prior to review and retest. Confidence limits were estimated for between-group comparisons. We tested both our hypotheses—noninferiority of the simplified form compared with the traditional form and superiority of the simplified form compared with the traditional form in the lowest-education group (less than high school diploma)—by calculating the 95% confidence limits of the differences. The margin of noninferiority and the margin of superiority were both prespecified as 2 (out of 21 possible).
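The noninferiority decision rule reduces to comparing the lower confidence limit of the difference with the negative of the margin. The sketch below assumes the difference is defined as simplified minus traditional and that the margin is expressed as a positive number of quiz points; the function name `is_noninferior` is hypothetical.

```python
def is_noninferior(lower_limit: float, margin: float) -> bool:
    """Decide noninferiority from a confidence limit.

    lower_limit: lower confidence limit of (simplified - traditional).
    margin: prespecified noninferiority margin, as a positive number.
    """
    # Noninferior if even the lower limit lies above -margin.
    return lower_limit > -margin


# With the study's margin of 2 points, a lower limit of -0.44 qualifies.
print(is_noninferior(-0.44, 2))
```

The sketch covers only the decision step; it does not reproduce the survey design-adjusted estimation of the confidence limits themselves.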

To examine the effect of the review/retest intervention, we first used Rao-Scott chi-square tests and survey design–adjusted t-tests to characterize participants who did and did not answer all 21 quiz items correctly in the first attempt. Among those who answered at least one incorrectly (and thus required some level of review and retesting), we examined the effect of the review/retest procedure and whether these effects differed by consent form and/or education level. To do this, we used general linear regression to model score improvement (from the initial test to the retest) and logistic regression to model the probability of achieving a perfect score (answering all retested items correctly) as a function of simplified versus traditional consent form (Simplified Group versus Traditional Group), less than high school diploma versus high school diploma or higher (Lower-Education Group versus Higher-Education Group), and consent form by education-group interaction.

As a proxy for the amount of time spent reviewing the consent forms, we examined the time they were displayed on participants’ screens separately by consent form, using medians with interquartile range (IQR) and a Wilcoxon rank-sum test on unweighted data (due to the severe skewness of the display times). We used survey design–adjusted t-tests to compare responses by consent form regarding (i) the amount of information in the form, (ii) whether giving a quiz to check understanding is a good idea, and (iii) willingness to participate in the hypothetical biobank. We compared the four levels of willingness to participate with respect to final quiz scores (after any review/retesting) using one-way analysis of variance with a linear contrast. We also examined the adjusted relationship between willingness to participate and final quiz scores using a general linear model that included age, sex, and education level.

All statistical analyses were conducted using SAS version 9.4 (SAS Institute, Cary, NC).

Results

Participant characteristics

A random sample of 3,931 members was drawn from GfK’s KnowledgePanel, and 2,279 (58%) responded to the invitation. Of these, 1,936 (85%) qualified for and finished the survey. We omitted 20 from our analyses because they were identified as either speeders or refusers (see “Cleaning” in the Methods section), resulting in a final sample of 1,916 participants. Although weighting allowed our survey sample to approximate the US adult English-speaking population, even our unweighted sample reflected good diversity across multiple demographic categories and experimental conditions (Table 1).

Effect of simplified consent form

In general, initial quiz scores (prior to any review/retest) reflect the effect of the simplified form versus the traditional form (Table 2). Consistent with our first hypothesis, comprehension in the Simplified Group was not inferior to that in the Traditional Group (mean score = 17.5 vs. 17.6, respectively; 95% lower confidence limit of the difference = −0.44, which is within our prespecified noninferiority margin of 2 points).

Initial quiz scores did, however, differ by education; overall, the mean score was 15.2 for the Lower-Education Group and 17.9 for the Higher-Education Group (Table 2). But contrary to our second hypothesis, among those with the least education, comprehension was not superior with the simplified form compared with the traditional form. In the Lower-Education Group, the mean score was 14.9 among those who received the simplified form and 15.5 among those who received the traditional form (difference in means = −0.56, 95% CI: −1.65, 0.53).

As suggested by these scores, quiz performance in the first attempt was generally good. Overall, the mean initial score was 17.5 (out of a maximum possible of 21) and approximately 50% of our weighted sample achieved an initial score of 19 or higher (Supplementary Appendix E online). Quiz items most often answered correctly and incorrectly were the same regardless of the consent form received (Supplementary Appendix F online). Those most often answered correctly dealt with confidentiality protections, collection of basic information, and contact information, whereas those most often answered incorrectly concerned large-scale data sharing and collection of information from medical records.

Despite these positive indicators, only 20% of our weighted sample achieved a perfect quiz score (adequate understanding) in the first attempt (Table 2). This proportion did not differ by consent form received. Compared with the rest of the sample, those who achieved a perfect initial score were significantly younger and more educated, reported higher income and better health, and were more likely to be white non-Hispanic, to be employed, and to have Internet access (Table 3). The remaining 80% answered at least one quiz item incorrectly and therefore required some level of review and retesting.

Effect of review/retest

Among those who underwent review/retesting, this process significantly improved quiz scores in every combination of consent form and education level (Table 4). Results of the modeling approaches were consistent with the pattern of findings in Table 4: overall, improvement in mean quiz score following review/retest was significantly greater in the Simplified Group than in the Traditional Group (mean change = 3.3 vs. 3.0, respectively; difference in means = 0.27, 95% CI: 0, 0.53). The improvement was also significantly greater in the Lower-Education Group than in the Higher-Education Group (mean change = 3.7 vs. 3.0, respectively; difference in means = 0.63, 95% CI: 0.30, 0.95). However, the proportion who achieved adequate comprehension (answered all retested items correctly) was significantly greater in the Higher-Education Group than in the Lower-Education Group (60 vs. 40%, respectively; difference in proportions = 20.2 percentage points, 95% CI: 13, 27). There was no interaction between consent form and education level for mean score improvement or for proportion achieving a perfect score after review/retest (P = 0.45 and P = 0.99, respectively).

Overall, 57% of the review/retest group were able to achieve a perfect score. Thus, 65% of the entire weighted sample achieved a perfect quiz score, either in the first attempt or through review and retesting (Table 2), and approximately 90% achieved a final score of 19 or higher (Supplementary Appendix E online). The quiz item about collection of information from medical records continued to be the one most often answered incorrectly (Supplementary Appendix F online).
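The overall proportion follows from combining the two routes to a perfect score. The short calculation below uses the rounded percentages reported in the text, so it only approximates the published 65%; the function name `overall_perfect_share` is hypothetical.

```python
def overall_perfect_share(initial_perfect: float, retest_perfect: float) -> float:
    """Share with a perfect score after the first attempt or the retest.

    initial_perfect: share with a perfect score on the first attempt.
    retest_perfect: share with a perfect score among those retested.
    """
    retested = 1.0 - initial_perfect
    return initial_perfect + retested * retest_perfect


# 20% perfect initially; 57% of the remaining 80% perfect after retest.
print(round(overall_perfect_share(0.20, 0.57), 3))
```

With these rounded inputs the result is about 0.656, in line with the reported figure once rounding of the underlying weighted estimates is taken into account.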

Although improved by the review/retest process, comprehension remained a challenge in the Lower-Education Group, as documented throughout the tables and appendices. For example, only 4% of the Lower-Education Group achieved a perfect quiz score in the first attempt and 42% ultimately achieved this score (Table 2). In contrast, in the Higher-Education Group, these proportions were 22 and 69%, respectively. These proportions did not differ by consent form for either educational group.

Other consent-process measures

Those who received the simplified form spent significantly less time on the consent screens (median = 5 min, IQR = 2–7 min) compared with those who received the traditional form (median = 10 min, IQR = 4–17 min; P < 0.0001). When asked to rate the amount of information in the consent form received, participants gave responses that differed significantly by form (P < 0.0001). In the Traditional Group, 22% rated the information as “too much” or “way too much,” compared with only 7% in the Simplified Group (Table 5). Nearly all participants, regardless of consent form, reacted favorably to the idea of a quiz to help people make sure they understand before consenting to biobanking (Table 5). Approximately two-thirds indicated they probably or definitely would participate if their health-care organization had a biobank (Table 5). Willingness to participate did not differ by consent form received but did differ by quiz score. As shown in Supplementary Appendix G online, higher final quiz scores were positively associated with willingness to participate (P = 0.0008). This finding remained after adjustment for age, sex, and education (P = 0.001).

Discussion

We conducted a national randomized survey to determine the individual and combined effects of a simplified consent form and a review/retest intervention on biobanking consent comprehension. Compared with a more detailed form, the simplified form did not result in inferior understanding of the information that a multidisciplinary panel of experts (which included participant perspectives) deemed essential for valid consent.24 This finding of noninferiority is consistent with our hypothesis and bolsters similar results found in other studies that otherwise had notable limitations (e.g., involved participant populations that were disproportionately male,28 female,29,30 white,30 highly educated,28,30,31 or had substantial prior research experience28,31). Together, this body of evidence suggests that comprehension would not be adversely affected by proposed changes to federal research regulations intended to make informed consent more concise and meaningful:10

Consent forms would no longer be able to be unduly long documents, with the most important information often buried and hard to find. They would need to give appropriate details about the research that is most relevant to a person’s decision to participate … and present that information in a way that highlights the key information. (p. 5936)

Contrary to our expectations, however, the simplified form did not produce significantly better comprehension among those with less education. It is important to note that we created our traditional form to contain the same information as our simplified form, but with a level of detail and reading complexity similar to those of biobanking consent forms actually in use. Many biobanks have made major efforts to improve their forms,32 and these improvements are correspondingly reflected in the ninth- to tenth-grade reading level of our traditional form (Supplementary Appendix C online). Thus, even among those without a high school diploma, the traditional form may have presented less of a reading challenge—in general and in comparison to the simplified form—than might otherwise have been the case.

The fact that we did not find support for our superiority hypothesis should not detract from the goal of making consent forms shorter and more concise. Consent-process measures other than comprehension favor more streamlined forms. For example, some studies suggest that participants may be less anxious and more satisfied with simpler forms.29,30 In our survey, about three times as many participants who received the traditional form as those who received the simplified form said there was too much information (22% vs. 7%), a result consistent with findings reported by Stunkel et al.28 concerning perceptions of consent form length and amount of detail. Further, our findings of noninferiority with regard to comprehension, together with our observations concerning time spent on the consent-form screens, suggest that participants achieved similar levels of understanding while needing to spend less time with the simplified form than with the traditional form. As proposed in our previous work20:

A likely motivation among research participants is to understand efficiently the choice being presented to them. In other words, they want to spend as much time as necessary, but no more, obtaining information and making a decision about taking part in research. (p. 571)

Even so, it may be that simplification of forms is necessary but not sufficient for achieving adequate comprehension in all population groups, and further research on interventions such as multimedia aids is warranted.33,34,35

Our results also provide support for the role of a comprehension assessment in improving understanding. In our survey, a brief quiz followed by targeted review of consent information and retesting of missed items significantly improved comprehension scores. We designed this Web-based intervention to generally mimic “teach-back,” a technique for checking and correcting understanding that has shown promise when consent is obtained in person.15,16,36,37 In contexts such as biobanking, which may be moving toward Internet-based consent processes (potentially including dynamic consent) that do not rely exclusively on human interaction,38 it is ethically important to find other ways to help ensure that prospective participants understand fundamental aspects of the research. Our participants reacted very favorably toward the quiz, and higher comprehension scores were significantly associated with a higher degree of stated willingness to participate in the hypothetical biobank. Whether or not the review/retest procedure itself is critical—compared with, for example, simply reinforcing key information by providing a succinct explanation of the correct answers to quiz items—is a worthy area for future research.

Despite the generally high levels of comprehension produced by our consent form and review/retest interventions, one-third of our survey sample failed to achieve a score of 100% on the quiz needed to demonstrate adequate comprehension. Although additional enhancements to form and process may lead to further gains in quiz scores, our results raise profound questions regarding the role of comprehension in informed consent. Long considered a foundational pillar of the endeavor (together with disclosure and voluntariness), assessing consent comprehension leads to thorny issues regarding whether to set a threshold for understanding below which the person may not be objectively “informed” and what, if any, the consequences should be for failure to meet that threshold. This is an area of research we are currently pursuing.

Our survey was explicitly designed to address limitations identified in some previous studies of consent interventions. Strengths included a diverse national sample, appropriately powered to test our hypotheses and weighted to the US adult English-speaking population; a randomized design; empirically derived consent forms; and a systematically developed comprehension measure that enabled comparative assessment of our interventions as well as absolute assessment regarding whether adequate comprehension was achieved.

Interpretation of our results is subject to two primary limitations. First, our survey involved a hypothetical biobank, not an actual consent situation. Thus, participants may have paid a different level of attention to the task of reading the consent form and taking the quiz than they would if making a real-life participation decision. They may have paid more attention based on our survey instructions alerting them that there would be a quiz. To the extent that this may have helped improve comprehension, we suggest that participants in actual consent situations be told up front, “We will ask you a few questions to be sure we did a good job explaining what people need to know about taking part in a biobank.” However, our primary concern in designing the survey was that participants would pay less attention, given that their participation was anonymous and performance on the quiz entailed no consequence. To address this, we formulated our survey instructions to emphasize that participants’ input would provide valuable assistance in improving consent forms and processes for others in the future.

Second, we selected a true/false format for our comprehension measure to minimize the cognitive burden involved in testing the 21 elements of information encompassed in the statements resulting from our Delphi process. We developed two versions of each quiz item to avoid rote answers in the second attempt. Even so, one obvious disadvantage is the 50/50 chance of guessing the correct answer to a true/false item. We offered “Don’t know” as an answer option to provide a ready alternative to guessing or skipping. Another disadvantage with true/false items is the difficulty in assessing potentially problematic survey response patterns, such as straight-lining (respondents clicking the same answer every time). We removed “speeders” from our data set but otherwise did not second-guess response patterns. Finally, despite rigorous pretesting, it is possible that for some participants incorrect answers were due to the wording of some items. Consent forms typically comprise mostly positively worded statements about what will happen if one participates; therefore, devising quiz items for which the correct answer is “false” (entailing a negatively worded stem) was challenging. We are currently working on improvements to the quiz, including the development of concise multiple-choice items.
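The scale of the guessing concern can be made concrete with a short calculation. It assumes pure, independent guessing on every item with a 50% chance of success, which is a simplification (participants could also choose “Don’t know”); the function names are hypothetical.

```python
def p_perfect_by_guessing(n_items: int = 21, p_correct: float = 0.5) -> float:
    """Probability of answering every item correctly by guessing alone."""
    return p_correct ** n_items


def expected_score_by_guessing(n_items: int = 21, p_correct: float = 0.5) -> float:
    """Expected number of correct answers from guessing alone."""
    return n_items * p_correct


print(p_perfect_by_guessing())
print(expected_score_by_guessing())
```

Under these assumptions, guessing inflates the expected score on individual items but makes a perfect 21-item score by chance alone extremely unlikely.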

We hope the results of this research will prove useful to the evolution of effective, participant-friendly approaches to biobanking consent forms and processes, as well as contribute methodologically and conceptually to ongoing efforts to study and improve informed consent more broadly.

Disclosure

The authors declare no conflict of interest.

References

Hughes SE, Barnes RO, Watson PH. Biospecimen use in cancer research over two decades. Biopreserv Biobank 2010;8:89–97.

Bledsoe MJ, Grizzle WE. Use of human specimens in research: the evolving United States regulatory, policy, and scientific landscape. Diagn Histopathol (Oxf) 2013;19:322–330.

Collins FS, Varmus H. A new initiative on precision medicine. N Engl J Med 2015;372:793–795.

Kaiser J. Biomedical research. NIH plots million-person megastudy. Science 2015;347:817.

Bayefsky MJ, Saylor KW, Berkman BE. Parental consent for the use of residual newborn screening bloodspots: respecting individual liberty vs ensuring public health. JAMA 2015;314:21–22.

Beskow LM. Lessons from HeLa cells: the ethics and policy of biospecimens. Annu Rev Genomics Hum Genet 2016;17:395–417.

Mello MM, Wolf LE. The Havasupai Indian tribe case–lessons for research involving stored biologic samples. N Engl J Med 2010;363:204–207.

Hudson KL, Collins FS. Bringing the common rule into the 21st century. N Engl J Med 2015;373:2293–2296.

Emanuel EJ. Reform of clinical research regulations, finally. N Engl J Med 2015;373:2296–2299.

Notice of Proposed Rulemaking. Federal policy for the protection of human subjects. Federal Register 2015;80:53933–54061.

Grady C. Enduring and emerging challenges of informed consent. N Engl J Med 2015;372:2172.

Cressey D. Informed consent on trial. Nature 2012;482:16.

Flory J, Emanuel E. Interventions to improve research participants’ understanding in informed consent for research: a systematic review. JAMA 2004;292:1593–1601.

Cohn E, Larson E. Improving participant comprehension in the informed consent process. J Nurs Scholarsh 2007;39:273–280.

Nishimura A, Carey J, Erwin PJ, Tilburt JC, Murad MH, McCormick JB. Improving understanding in the research informed consent process: a systematic review of 54 interventions tested in randomized control trials. BMC Med Ethics 2013;14:28.

Tamariz L, Palacio A, Robert M, Marcus EN. Improving the informed consent process for research subjects with low literacy: a systematic review. J Gen Intern Med 2013;28:121–126.

Montalvo W, Larson E. Participant comprehension of research for which they volunteer: a systematic review. J Nurs Scholarsh 2014;46:423–431.

Beskow LM, Dean E. Informed consent for biorepositories: assessing prospective participants’ understanding and opinions. Cancer Epidemiol Biomarkers Prev 2008;17:1440–1451.

Beskow LM, Friedman JY, Hardy NC, Lin L, Weinfurt KP. Developing a simplified consent form for biobanking. PLoS One 2010;5:e13302.

Beskow LM, Friedman JY, Hardy NC, Lin L, Weinfurt KP. Simplifying informed consent for biorepositories: stakeholder perspectives. Genet Med 2010;12:567–572.

National Cancer Institute. Best Practices for Biospecimen Resources. 2016; http://biospecimens.cancer.gov/practices/2016bp.asp. Accessed May 2016.

Vaught J, Lockhart NC. The evolution of biobanking best practices. Clin Chim Acta 2012;413:1569–1575.

McGuire AL, Beskow LM. Informed consent in genomics and genetic research. Annu Rev Genomics Hum Genet 2010;11:361–381.

Beskow LM, Dombeck CB, Thompson CP, Watson-Ormond JK, Weinfurt KP. Informed consent for biobanking: consensus-based guidelines for adequate comprehension. Genet Med 2015;17:226–233.

Wendler D, Grady C. What should research participants understand to understand they are participants in research? Bioethics 2008;22:203–208.

Appelbaum PS. Understanding “understanding”: an important step toward improving informed consent to research. AJOB Primary Res 2010;1:1–3.

Willis GB. Cognitive Interviewing: A Tool for Improving Questionnaire Design. Sage: Thousand Oaks, CA, 2005.

Stunkel L, Benson M, McLellan L, et al. Comprehension and informed consent: assessing the effect of a short consent form. IRB 2010;32:1–9.

Davis TC, Holcombe RF, Berkel HJ, Pramanik S, Divers SG. Informed consent for clinical trials: a comparative study of standard versus simplified forms. J Natl Cancer Inst 1998;90:668–674.

Coyne CA, Xu R, Raich P, et al.; Eastern Cooperative Oncology Group. Randomized, controlled trial of an easy-to-read informed consent statement for clinical trial participation: a study of the Eastern Cooperative Oncology Group. J Clin Oncol 2003;21:836–842.

Enama ME, Hu Z, Gordon I, Costner P, Ledgerwood JE, Grady C; VRC 306 and 307 Consent Study Teams. Randomization to standard and concise informed consent forms: development of evidence-based consent practices. Contemp Clin Trials 2012;33:895–902.

Clayton EW, Smith M, Fullerton SM, et al.; Consent and Community Consultation Working Group of the eMERGE Consortium. Confronting real time ethical, legal, and social issues in the Electronic Medical Records and Genomics (eMERGE) Consortium. Genet Med 2010;12:616–620.

Palmer BW, Lanouette NM, Jeste DV. Effectiveness of multimedia aids to enhance comprehension of research consent information: a systematic review. IRB 2012;34:1–15.

McGraw SA, Wood-Nutter CA, Solomon MZ, et al. Clarity and appeal of a multimedia informed consent tool for biobanking. IRB 2012;34:9–19.

Simon CM, Klein DW, Schartz HA. Interactive multimedia consent for biobanking: a randomized trial. Genet Med 2016;18:57–64.

Palmer BW, Cassidy EL, Dunn LB, Spira AP, Sheikh JI. Effective use of consent forms and interactive questions in the consent process. IRB 2008;30:8–12.

Kripalani S, Bengtzen R, Henderson LE, Jacobson TA. Clinical research in low-literacy populations: using teach-back to assess comprehension of informed consent and privacy information. IRB 2008;30:13–19.

Simon CM, Klein DW, Schartz HA. Traditional and electronic informed consent for biobanking: a survey of U.S. biobanks. Biopreserv Biobank 2014;12:423–429.

Acknowledgements

We thank the following individuals, who provided input as members of the advisory group assembled for this grant: John Alexander (Duke University), Jeffrey R. Botkin (University of Utah), John M. Falletta (Duke University), Steven Joffe (University of Pennsylvania), Bartha Maria Knoppers (McGill University), Karen J. Maschke (The Hastings Center), and P. Pearl O’Rourke (Partners Healthcare). This work was supported by a grant (R01-HG-006621) from the National Human Genome Research Institute (NHGRI). The content is solely the responsibility of the authors and does not necessarily represent the official views of NHGRI or the National Institutes of Health. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Supplementary information

Supplementary Appendixes

(PDF 531 kb)

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/

About this article

Cite this article

Beskow, L., Lin, L., Dombeck, C. et al. Improving biobank consent comprehension: a national randomized survey to assess the effect of a simplified form and review/retest intervention. Genet Med 19, 505–512 (2017). https://doi.org/10.1038/gim.2016.157

DOI: https://doi.org/10.1038/gim.2016.157
