Abstract

Interest in cytogenetics may be traced to the development of the chromosomal theory of inheritance that emerged from efforts to provide the basis for Darwin's theory “On the origin of species by means of natural selection.” Despite their fundamental place in biology, chromosomes and genetics had little impact on medical practice until the 1960s. The discovery that a chromosomal defect caused Down syndrome was the spark responsible for the emergence of medical genetics as a clinical discipline. Prenatal diagnosis of trisomies, biochemical disorders, and neural tube defects became possible, and genetic counseling clinics proliferated as a result. Maternal serum screening for neural tube defects and Down syndrome followed, taking the new discipline into social medicine. Safe amniocentesis needed ultrasound, and ultrasound soon found other applications in obstetrics, including scanning for fetal malformations. Progress in medical genetics demanded a gene map, and cytogeneticists initiated the mapping workshops that led to the Human Genome Project and the complete sequence of the human genome. As a result, conventional karyotyping has been augmented by molecular cytogenetics, and molecular karyotyping has been achieved by microarrays. Genetic diagnosis at the level of the DNA sequence is with us at last. It has been a remarkable journey from disease phenotype to karyotype to genotype, and it has taken less than 50 years. Our mission now is to ensure that recent advances such as prenatal screening, microarrays, and noninvasive prenatal diagnosis are available to our patients. History shows that it is by increased use that costs are reduced and better methods discovered. Chromosome research has been behind the major advances in our field, and it will continue to be the key to future progress, not least in our appreciation of chromosomal variation and its importance as a mechanism in Darwinian evolution.


Main

When Charles Darwin's book On the Origin of Species was published almost 150 years ago,1 nothing was understood about the mechanism of heredity that might explain the biological variation through which natural selection operates. After the rediscovery of Mendel's laws at the beginning of the 20th Century, and the recognition by Boveri and Sutton that the behavior of chromosomes during cell division paralleled these laws,2,3 scientists sought to understand the nature of continuous and discontinuous variation and its significance for evolution. By 1912, Morgan and Bridges had discovered linkage and established the chromosomal theory of inheritance (see Bridges4). Controversy in this area continued for many years and culminated in Fisher's book The Genetical Theory of Natural Selection.5

Before their significance was fully understood, chromosomes were recognized in plants around the mid-19th Century, coinciding with refinements in the compound microscope and the emergence of cellular pathology. Drawings of human chromosomes in cancer cells first appeared in 1879,6 and attempts were made to count human chromosomes in 1891 and 1898,7 giving an estimated average of 24 per cell. Drawings of almost recognizable human chromosomes in corneal cells were published by Flemming in 1882,8 and Waldeyer is credited with coining the name chromosome in 1888.9 Despite the link between chromosomes and mendelism, discovered in 1902, and the heated controversy between Bateson, who espoused mendelism, and the biometricians Pearson and Weldon, who favored Galton's Law of Ancestral Inheritance, there appeared to be little interest in human chromosomes during the next decade. The X chromosome was described in invertebrates, and there were a number of papers on plant chromosomes. It seems that the overwhelming interest was to find a mechanism for the inheritance of the variation in species described by Darwin.

Human chromosomes surfaced once more in 1912, when Winiwarter studied chromosomes in sections of human gonads.10 Counts of 47 in testes and 48 in ovaries convinced him that humans, like locusts, must have an XO/XX sex-determining system. Painter in 1921 refuted this result and described the Y chromosome that Winiwarter had missed.11 On the basis of Winiwarter's studies, he concluded that there were 48 chromosomes in both sexes, despite noting that he could count only 46 in the clearest mitotic figures. Over the next 20 years, at least eight separate investigators confirmed the count of 48, until Tjio and Levan proved them all wrong in 1956 with the report of 46 chromosomes in cultured cells.12

The significance of chromosomes was first appreciated in 1902, and Garrod (with Bateson) recognized that alkaptonuria and several other rare inborn errors of metabolism represented human examples of Mendelian traits.13 Hemophilia, color blindness, and brachydactyly were recognized as further examples. The origin of medical genetics may seem to stem from these observations, but it is doubtful whether families benefited from this knowledge, as there is no record of genetic counseling with the aim of avoiding future affected pregnancies. This was despite an understanding of sex-linked, autosomal dominant, and autosomal recessive inheritance. It was not until 1937 that Bell and Haldane described the first example of linkage in humans in X-linked pedigrees transmitting both hemophilia and color blindness.14 Both Haldane and Fisher commented in the 1920s on the possibility of predicting, through linkage, the occurrence of disorders such as Huntington disease. However, the controversial views on contraception, the virtual prohibition of therapeutic abortion, and the abhorrence of the eugenic practices advocated by the Third Reich are among the reasons why clinical genetic counseling did not advance between the two World Wars. Perhaps it was also because the second generation of academic geneticists, including Fisher, Haldane, and Sewall Wright, had greater interest in population and mathematical genetics, statistics, and evolution. Fisher's successor at the Galton Laboratory in 1946 was Lionel Penrose, who combined expertise in mathematical genetics with interests in clinical genetics, especially in patients with Down syndrome and other learning disorders.15 The emphasis was on understanding the causative factors and modes of inheritance rather than on the reproductive options that might be offered to affected families.

The following years saw many advances in genetics, especially in biochemical genetics, blood group genetics, and the hemoglobinopathies. The major advancement at the time was the resolution of the structure of the DNA molecule by Watson and Crick in 1953.16 Although the science of genetics was transformed by this fundamental observation, its full impact on medical genetics was not felt until recombinant DNA technology became established some 20 years later, when DNA techniques were first used to diagnose genetic defects. Nevertheless, it stimulated great interest among clinicians. Hospital clinics were established in the United States at a number of centers, notably in Baltimore and Madison. Victor McKusick began a postgraduate teaching program in medical genetics at Johns Hopkins Hospital, and his famous Bar Harbor Course was started a few years later in 1959.

THE MODERN ERA

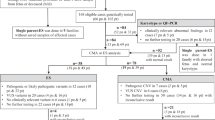

The discovery of the correct chromosome number of 46 in humans in 1956, by Tjio and Levan,12 ushered in the modern era of human cytogenetics. The breakthrough was due to improved cell culture methods, colchicine-induced arrest to accumulate cells in mitosis, and the serendipitous observation that hypotonic treatment of dividing cells before fixation improved the separation of chromosomes in squash preparations. (Apparently, in 1952, water was used inadvertently instead of isotonic solutions independently by Hsu in Texas, Makino in Sapporo, and Hughes in Cambridge.17–19 It is fair to say that, unknown to the West, similar techniques were used successfully some 20 years earlier in Russia by Zhivago.20) Chromosome analysis, using fresh bone marrow (Fig. 1), was introduced by Ford in 1958.21 Those of us studying the apparent sex reversal in Turner and Klinefelter syndromes in 1958 attempted this method unsuccessfully to determine the sex chromosome status of these patients, but the first successful diagnosis of a human chromosome aberration came in January 1959 with the publication by Lejeune of trisomy 21 in three cases of Down syndrome.22 I date the emergence of modern medical genetics from that date because, within a few months, the search began for other chromosomal syndromes with mental handicap and multiple malformations. The 45,X and 47,XXY sex chromosome abnormalities in Turner and Klinefelter syndromes were discovered first.23,24 Then, clinicians John Edwards, working with David Harnden in Oxford, and Dave Smith, working with Klaus Patau in Madison, described trisomies 18 and 13, respectively.25,26 Both clinicians looked for the constellation of multiple malformations and dysmorphology that was characteristic of the Down and Turner syndromes, and this led them quickly to study the infants with these trisomies. 
Less astute clinicians were referring cases of anencephaly, Mendelian disorders, and all sorts of single malformations as possible candidates for chromosome abnormality, but without success. I recall being asked in the summer of 1959 to see a newborn child with a possible trisomy at the Johns Hopkins Obstetric Department. The child had normal chromosomes, but the child in the next cot was an infant with trisomy 13. The pattern of dysmorphology that was associated with chromosomal syndromes soon became established. The pioneers in these early chromosome studies met in Denver in 1960 to agree on a chromosomal nomenclature,27 but, at that stage of the discipline, complete accord was difficult, and errors such as the identification of the chromosome trisomic in Down syndrome as 21 and not 22 (the smallest) were perpetuated.28

1959 and 1960 were the years of awakening interest in human cytogenetics. The bone marrow method (Fig. 1) was replaced by the leukocyte method of Moorhead,29 and this simple technique opened up the field to every pathology laboratory. The key ingredient was phytohaemagglutinin, which was added to remove red cells from plasma but also induced lymphocyte transformation. (Unknown to the authors, autolysis of red cells had been noted to induce cell division in lymphocytes by Chrustschoff in Moscow 25 years earlier,30 another unfortunate example of Russian science being ignored in the West.) The most notable discoveries at that time were the Philadelphia chromosome in chronic myeloid leukemia31 and the three-generation families with translocation Down syndrome.32 The former confirmed Theodor Boveri's prediction about the chromosomal basis of cancer,33 whereas the latter indicated the need to screen children with Down syndrome for translocations, especially those born to young mothers. Here was a diagnostic service that could be offered to families anxious about the recurrence of Down syndrome. More genetic clinics were set up, in part to fulfill this need. These chromosome discoveries fuelled interest in other areas of human genetics, especially inborn errors, hemoglobinopathies, and dominant skeletal and neurological disorders such as achondroplasia and Huntington disease. Clinicians became much more aware of the need for patients to be advised about the risks of recurrence of genetic disorders in general. Before 1960, there were several genetics clinics in the United States, including McKusick's Moore Clinic at Johns Hopkins Hospital, but only two or three in Britain, the first pioneered by Fraser Roberts in 1945 and continued by Cedric Carter into the 1970s. There were no National Health Service consultant posts in medical genetics in Britain before 1970, and the few pioneers held either University or Medical Research Council appointments.

The early 1960s were years in which techniques were improved, chromosome-specific patterns of DNA replication were discovered,34 meiotic chromosomes were re-examined,35 and new structural chromosome aberrations were identified. The high frequency of chromosome aberrations in spontaneous abortion was recognized.36 Ohno showed that the sex chromatin body was formed by one of the two Xs in female cells,37 and Lyon developed her theory of dosage compensation of X-linked genes due to random X-inactivation.38

HUMAN GENE MAPPING

The loss of human chromosomes from interspecific mouse-human somatic cell hybrids was shown in 1967 to be a productive technique for chromosome mapping, the first success being the assignment of the thymidine kinase locus to chromosome 17.39 In the following year, the Duffy blood group locus was assigned by linkage to a centric polymorphism on chromosome 1.40 These two methods marked the start of the human gene mapping (HGM) program and spurred on those who had recognized that progress in medical genetics required maps similar to those available for Drosophila, maize, and mouse. By the time of the first HGM workshop in 1973, 152 X-linked loci were known, but only 64 autosomal loci had been assigned to their respective chromosomes. In 1991, the 11th and last of the HGM workshops was held, and by this time, there were 221 X-linked genes and 2104 assigned autosomal gene loci as well as another 8000 DNA segment markers.28 For the next 5 years, the Human Genome Organization organized single chromosome mapping workshops (which Bronwen Loder and I coordinated) and later, genome mapping workshops dealing with other aspects such as mutation databases, telomeres and centromeres, nomenclature, and comparative mapping. The genetic markers developed by the HGM workshops were extensively used during this period for the diagnosis of genetic disease through linkage, and many of these disease genes were isolated by positional cloning. The HGM workshops and their successors represent the foundations on which the Human Genome Project was built, leading to the draft sequence of the human genome some 34 years after the first human autosomal gene was mapped.
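The logic of mapping with somatic cell hybrids can be sketched as a concordance test: a human gene product should be present in exactly those hybrid clones that have retained the chromosome carrying the gene. The minimal Python sketch below assumes a small, hypothetical panel of five clones scored for the retention (1) or loss (0) of four human chromosomes and for human thymidine kinase activity; the data, clone names, and function names are invented for illustration and are not taken from the original studies.

```python
# Hypothetical hybrid panel: for each mouse-human clone, whether each human
# chromosome is retained (1) or lost (0). Illustrative data only.
PANEL = {
    "clone_A": {"1": 1, "7": 0, "17": 1, "21": 0},
    "clone_B": {"1": 0, "7": 1, "17": 1, "21": 1},
    "clone_C": {"1": 1, "7": 1, "17": 0, "21": 0},
    "clone_D": {"1": 0, "7": 0, "17": 0, "21": 1},
    "clone_E": {"1": 1, "7": 0, "17": 1, "21": 1},
}

# Hypothetical scoring of human enzyme activity (1 = detected) in the same clones.
MARKER = {"clone_A": 1, "clone_B": 1, "clone_C": 0, "clone_D": 0, "clone_E": 1}


def discordance(panel, marker):
    """For each chromosome, the fraction of clones in which the marker and
    the chromosome are NOT retained or lost together."""
    chromosomes = next(iter(panel.values())).keys()
    result = {}
    for chrom in chromosomes:
        mismatches = sum(panel[clone][chrom] != marker[clone] for clone in panel)
        result[chrom] = mismatches / len(panel)
    return result


def assign(panel, marker):
    """Return the chromosome(s) that segregate perfectly with the marker."""
    scores = discordance(panel, marker)
    return [chrom for chrom, d in scores.items() if d == 0]


if __name__ == "__main__":
    for chrom, d in discordance(PANEL, MARKER).items():
        print(f"chromosome {chrom}: discordance {d:.2f}")
    print("assigned to chromosome(s):", assign(PANEL, MARKER))
```

In this toy panel only chromosome 17 segregates perfectly with the marker. A real panel needs enough clones that every other chromosome is discordant in at least one of them.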

IN SITU HYBRIDIZATION AND BANDING

Meanwhile, in 1969, two other great technological advances were made almost simultaneously. Pardue and Gall were the first to show that satellite DNA, a form of repetitive DNA, could be hybridized to denatured chromosomes in situ on microscope slides using radioactively labeled DNA probes that were detected by autoradiography.41 The regions to which the DNA probes annealed were also stained selectively by Giemsa. Various modifications of the denaturing process, using alkali, heat, or proteolytic enzymes, revealed transverse dark and light Giemsa bands along the chromosomes.42,43 Miraculously, each chromosome was found to have a distinctive banding pattern that allowed unequivocal identification. Zech and Caspersson discovered the same patterns independently using quinacrine fluorescence.44 Immediately, a whole range of chromosome deletions, duplications, and inversions could be recognized. Patients previously reported to have “normal” karyotypes were recalled, and new chromosomal syndromes were discovered. The chromosome bands also improved the resolution of gene mapping and led to the characterization of aberrations in cancer cells, such as the 9;22 translocation in chronic myeloid leukemia.45 Cytogenetics was once again contributing significantly to medical practice.

The isotopic DNA/RNA probes used in the early days of in situ hybridization could not be used for mapping single copy genes as it was not possible to make them sufficiently radioactive. This problem was resolved by the advances in recombinant DNA technology in the late 1970s that allowed the construction of DNA libraries and the cloning of DNA fragments in bacteria in sufficient quantity to give good hybridization signals. The globin, insulin, and kappa light chain immunoglobulin genes were all mapped in 1981,46 the year that the mitochondrial genome was fully sequenced. The following year saw the introduction of fluorescence in situ hybridization,47 and it is arguably this technique that revolutionized gene mapping.48 It is still the standard mapping method 25 years later.

PRENATAL DIAGNOSIS AND SCREENING

The next milestone in human cytogenetics, again in 1969, was the introduction of prenatal diagnosis for chromosome aberrations and metabolic disorders.49,50 Amniocentesis at around 16 weeks' gestation with the culture of amniotic fluid cells for fetal chromosome analysis was established principally to exclude Down syndrome in older mothers and in those who had had a previously affected child. Less frequent indications were Down syndrome translocations and X-linked recessive disorders where the mother was a carrier. In Britain, the Abortion Act of 1967 allowed the termination of pregnancy when it could be shown that the mother had a substantial risk of producing a child with a severe handicapping condition, and this applied not only to Down syndrome but also to male fetuses at 50% risk of an X-linked disorder. Our early studies from 1969 to 1970 demonstrated that the great majority of pregnancies tested for these indications were found to have a normal fetus.51 Prenatal diagnosis allowed mothers to plan for future healthy offspring when this could not have been contemplated without the procedure. Bearing in mind the high frequency of chromosomal defects in spontaneous abortion, pregnancies that tested positive at 16 weeks could be regarded as having escaped miscarriage earlier, and this may have helped families to consider termination as a reasonable, albeit very distressing, option.

One important consequence of the introduction of prenatal diagnosis was an increased number of referrals to genetics clinics, suggesting that when parents had the option of stopping the pregnancy, genetic counseling became not only useful but also essential. It is important to note that obstetric ultrasound, pioneered by Ian Donald in Glasgow,52 was used first in medical genetics to guide the amniocentesis needle, exclude twins, and avoid an anterior placenta. The Medical Research Council Trial on the Safety of Amniocentesis concluded that the risk of miscarriage related to the procedure was about 1% and emphasized the important role of ultrasound. This undoubtedly stimulated the use of sonar in obstetrics and the development of improved ultrasound equipment.

The discovery of raised amniotic fluid alphafetoprotein (AFP) in pregnancies with open spina bifida and anencephaly in 1972,53 introduced another important indication for amniocentesis, especially in Scotland and Wales, where the birth frequency of neural tube defects (NTDs) was more than 5 per 1000. Immediately after the introduction of AFP testing, it was found that maternal serum levels of AFP reflected levels in amniotic fluid, so that it was possible to screen for NTDs using maternal blood. However, success depended on accurate estimation of gestational age, because normal maternal serum AFP levels rise as pregnancy advances. Many regions in the United Kingdom postponed maternal serum AFP screening on the grounds that ultrasound was not reliable. I believe that it was largely the experience in ultrasound gained through AFP screening in other areas that made the early introduction of the fetal anomaly scan possible. Thanks largely to AFP screening, the birth frequency of NTDs in Scotland was reduced from 5 per 1000 in 1975 to 1.7 per 1000 in 1981.54 By then, the resolution of ultrasound was such that many pregnancies affected with spina bifida could be identified at 20 weeks by sonar alone.
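Why accurate dating mattered can be shown with a small calculation. Serum AFP results are usually expressed as multiples of the median (MoM) for the week of gestation. The Python sketch below assumes hypothetical weekly medians and a hypothetical screen-positive cut-off of 2.5 MoM, so the numbers illustrate the principle only.

```python
# Hypothetical normal medians for maternal serum AFP (kIU/L) by completed
# week of gestation. Illustrative values only; they rise with gestation.
MEDIAN_AFP = {15: 30.0, 16: 35.0, 17: 40.0, 18: 46.0, 19: 53.0, 20: 60.0}

# Hypothetical screen-positive cut-off, in multiples of the median (MoM).
CUTOFF_MOM = 2.5


def afp_mom(afp: float, gestation_weeks: int) -> float:
    """Express a measured AFP as a multiple of the median for its week."""
    if gestation_weeks not in MEDIAN_AFP:
        raise ValueError(f"no median available for week {gestation_weeks}")
    return afp / MEDIAN_AFP[gestation_weeks]


def screen_positive(afp: float, gestation_weeks: int,
                    cutoff: float = CUTOFF_MOM) -> bool:
    """Flag a result at or above the MoM cut-off for further investigation."""
    return afp_mom(afp, gestation_weeks) >= cutoff


if __name__ == "__main__":
    afp = 92.0  # one measured value, interpreted under two different datings
    for week in (18, 16):
        mom = afp_mom(afp, week)
        print(f"dated at {week} weeks: {mom:.2f} MoM, "
              f"screen positive: {screen_positive(afp, week)}")
```

With these assumed medians, a woman truly at 18 weeks with an AFP of 92 kIU/L has a value of 2.0 MoM and screens negative. If her pregnancy is wrongly dated at 16 weeks, the same result becomes about 2.6 MoM and screens positive.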

Prenatal screening for chromosome aberrations grew out of the NTD screening program when Merkatz observed that maternal serum AFP levels were lower in Down syndrome pregnancies than in unaffected pregnancies.55 Those of us who had stored maternal serum samples were able to confirm this and to detect small numbers of cases prospectively. Other biochemical markers and ultrasound markers, such as nuchal translucency, were soon discovered that improved the detection rates in screening programs. Nowadays, using the Contingent Test that incorporates maternal age, nuchal translucency, and various serum levels, it is possible to detect 60% of Down syndrome fetuses in the first trimester for a false-positive rate of 1.2%. Seventy-five percent of pregnancies are identified as having a very low risk, and the remainder can be retested for serum levels in the second trimester. This strategy achieves an overall detection rate of 91% for a false-positive rate of 4.5%.56 Since the introduction of prenatal screening for chromosome defects, there has been a progressive decline in the need for amniocentesis and a commensurate reduction in related miscarriage.
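The three-way triage of a contingent strategy can be sketched as follows. The risk cut-offs are hypothetical placeholders, not those of the trial cited above, and the risks are assumed to have been calculated already from maternal age, nuchal translucency, and serum markers. Read against the figures in the text, the second-trimester stage must contribute 91 - 60 = 31 percentage points of the overall detection rate and 4.5 - 1.2 = 3.3 percentage points of the false-positive rate.

```python
from typing import Optional

# Hypothetical risk cut-offs, expressed as probabilities (e.g. 1/50).
# Placeholders for illustration, not the cut-offs of any published trial.
HIGH_RISK_CUTOFF = 1 / 50    # first trimester: offer diagnosis at or above
LOW_RISK_CUTOFF = 1 / 1500   # first trimester: screen negative below
FINAL_CUTOFF = 1 / 270       # combined first- and second-trimester risk


def contingent_screen(first_trimester_risk: float,
                      combined_risk: Optional[float] = None) -> str:
    """Return the next step for one pregnancy under a contingent strategy.

    first_trimester_risk: risk from maternal age, nuchal translucency and
        first-trimester serum markers (assumed already calculated).
    combined_risk: risk after adding second-trimester serum markers, or
        None if these have not yet been measured.
    """
    if first_trimester_risk >= HIGH_RISK_CUTOFF:
        return "offer diagnostic test"
    if first_trimester_risk < LOW_RISK_CUTOFF:
        return "screen negative"
    # Intermediate risk: the decision waits for second-trimester markers.
    if combined_risk is None:
        return "retest in second trimester"
    if combined_risk >= FINAL_CUTOFF:
        return "offer diagnostic test"
    return "screen negative"


if __name__ == "__main__":
    print(contingent_screen(1 / 20))             # high first-trimester risk
    print(contingent_screen(1 / 5000))           # very low risk
    print(contingent_screen(1 / 500))            # intermediate: retest needed
    print(contingent_screen(1 / 500, 1 / 100))   # retested, now high risk
```

The design point of a contingent test is that only the intermediate group, roughly a quarter of pregnancies on the figures above, needs the second blood sample.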

A desire to move away from invasive prenatal diagnosis has stimulated research into safer techniques using fetal cells from the maternal circulation. Unfortunately, this has not proved practicable, because too few fetal cells are present and enrichment methods are unreliable. However, DNA and RNA in maternal plasma derived from cells shed from the placenta have been used successfully to determine fetal sex in the first trimester and to diagnose dominant disorders inherited from the father.57 The most useful application so far has been to determine the Rhesus blood group of fetuses at risk of hemolytic disease of the newborn. Current research by Dennis Lo and colleagues indicates that fetal trisomies may be detected from ratios of allele-specific markers in placental-specific RNA.58
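The principle of the allelic-ratio approach can be illustrated with a toy classifier. If a fetus is heterozygous for a single nucleotide polymorphism in a placenta-expressed gene, the two alleles are expected in a 1:1 ratio in a euploid pregnancy, but in a 2:1 or 1:2 ratio if the chromosome carrying that gene is trisomic. The Python sketch below assumes hypothetical allele counts and a hypothetical tolerance; because the transcript is assumed to be placenta-specific, maternal RNA is ignored.

```python
# Expected fraction of allele A among transcripts for a fetus heterozygous at
# the SNP: 1/2 if euploid, 2/3 or 1/3 if the chromosome is trisomic.
EXPECTED_FRACTIONS = {
    "euploid (1:1)": 1 / 2,
    "trisomic (2:1)": 2 / 3,
    "trisomic (1:2)": 1 / 3,
}


def allele_fraction(count_a: int, count_b: int) -> float:
    """Fraction of allele A among all counted transcripts."""
    total = count_a + count_b
    if total == 0:
        raise ValueError("no allele counts supplied")
    return count_a / total


def classify_allelic_ratio(count_a: int, count_b: int,
                           tolerance: float = 0.08) -> str:
    """Return the nearest expected state, or 'inconclusive' if none is close.

    The tolerance is a hypothetical value chosen for illustration.
    """
    fraction = allele_fraction(count_a, count_b)
    label, expected = min(EXPECTED_FRACTIONS.items(),
                          key=lambda item: abs(fraction - item[1]))
    return label if abs(fraction - expected) <= tolerance else "inconclusive"


if __name__ == "__main__":
    for counts in [(500, 480), (660, 340), (330, 670), (583, 417)]:
        print(counts, classify_allelic_ratio(*counts))
```

For example, 660 and 340 transcripts of the two alleles give a fraction of 0.66, close to the 2:1 expectation, whereas a fraction midway between two expectations is reported as inconclusive.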

THE POLYMERASE CHAIN REACTION

Much of modern molecular cytogenetics depends on the introduction of the polymerase chain reaction (PCR) in 1988.59 PCR exploits the thermostable enzyme Taq polymerase to amplify DNA between primer sequences. The technique has application to mutation testing, gene mapping, and almost all aspects of DNA analysis and sequencing. Using random DNA primers, almost any sample of DNA can be amplified in any species. PCR is used extensively in modern methods of forensic DNA fingerprinting and, for example, in the production and labeling of chromosome paint probes from chromosome-specific DNA, which in 1992 were first used in multicolour fluorescence in situ hybridization techniques for karyotype analysis.60 Chromosome-specific DNA is made by PCR from chromosomes sorted in a dual-laser flow cytometer or by PCR amplification of microdissected chromosomes. Cross-species reciprocal chromosome painting has had an important application in the study of mammalian karyotype evolution.61 Chromosome-specific paints have become useful Darwinian markers in tracing lines of descent, in taxonomic studies, and in studying evolutionary mechanisms. My laboratory currently uses chromosome-specific DNA and gene mapping techniques to investigate the evolution of sex-determining mechanisms in vertebrates.62 We have much to learn about human genetics from these comparative studies.
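The idea of amplifying DNA between primer sequences can be modeled, very loosely, as a string search. The Python sketch below, with hypothetical function names and sequences, finds the product bounded by a forward primer and the reverse complement of a reverse primer (both written 5' to 3'), and estimates copy number after a given number of cycles under the idealized assumption that every template is copied in every cycle. It ignores mismatches, melting temperatures, and the plateau of a real reaction.

```python
from typing import Optional

# Complement table for the four DNA bases.
COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(seq: str) -> str:
    """Reverse complement of a DNA sequence written 5' to 3'."""
    return seq.upper().translate(COMPLEMENT)[::-1]


def amplicon(template: str, forward: str, reverse: str) -> Optional[str]:
    """Return the stretch of template bounded by the two primers, or None.

    The forward primer must match the template strand exactly; the reverse
    primer anneals to the opposite strand, so its reverse complement is
    searched for downstream of the forward primer. Exact matching only.
    """
    template = template.upper()
    forward = forward.upper()
    start = template.find(forward)
    if start == -1:
        return None
    site = reverse_complement(reverse)
    end = template.find(site, start + len(forward))
    if end == -1:
        return None
    return template[start:end + len(site)]


def copies_after(cycles: int, initial: int = 1, efficiency: float = 1.0) -> float:
    """Expected copies after n cycles: initial * (1 + efficiency) ** cycles.

    efficiency = 1.0 is the idealized case of perfect doubling each cycle.
    """
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must lie between 0 and 1")
    return initial * (1 + efficiency) ** cycles


if __name__ == "__main__":
    template = "GGATCCATGCGTACGTTAGCTAGGGCTAACGGAATTC"  # hypothetical sequence
    print(amplicon(template, "ATGCGT", "GTTAGC"))
    print(f"{copies_after(30):,.0f} copies from one template after 30 cycles")
```

Under ideal doubling, 30 cycles multiply a single template about a billion-fold (2^30), which is why so little starting material is needed.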

COMPARATIVE GENOME HYBRIDIZATION AND MICROARRAYS

1992 saw the introduction of comparative genome hybridization (CGH).63 This is a form of reverse chromosome painting used to detect chromosome deletions and duplications from a patient's total genomic DNA rather than from his or her karyotype. The patient's DNA is labeled in one color, mixed with control DNA labeled in another color, and hybridized to normal metaphases; the ratio of the two colors is then determined along the length of each chromosome. Duplications are recognized by the predominance of the patient DNA color, whereas deletions are revealed by the predominance of the control DNA color. CGH has been useful both in screening for small constitutional chromosome aberrations in patients and in detecting aberrations in cancer cells.
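The color-ratio reading of a CGH experiment can be sketched as a per-position classification. The Python sketch below assumes that patient and control fluorescence intensities have already been measured at matching positions and normalized so that a balanced region gives a ratio close to 1; the thresholds of 1.25 and 0.75, the function name, and the data are assumed, illustrative values.

```python
# Assumed, illustrative thresholds on the patient/control intensity ratio.
GAIN_THRESHOLD = 1.25
LOSS_THRESHOLD = 0.75


def cgh_profile(patient, control, gain=GAIN_THRESHOLD, loss=LOSS_THRESHOLD):
    """Classify each position along a chromosome from normalized intensities.

    patient, control: sequences of intensities measured at the same
    positions (hypothetical inputs, assumed already normalized).
    """
    if len(patient) != len(control):
        raise ValueError("patient and control profiles differ in length")
    calls = []
    for p, c in zip(patient, control):
        if c <= 0:
            raise ValueError("control intensity must be positive")
        ratio = p / c
        if ratio >= gain:
            calls.append("gain")       # patient color predominates
        elif ratio <= loss:
            calls.append("loss")       # control color predominates
        else:
            calls.append("balanced")
    return calls


if __name__ == "__main__":
    print(cgh_profile([100, 150, 60], [100, 100, 100]))
```

A duplication of one copy in a diploid genome would ideally give a ratio of 3:2 (1.5) and a single-copy deletion 1:2 (0.5), so thresholds between these values and 1 separate the three states.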

We learned from the publication of the draft sequence of the human genome in 2001 that humans have fewer than 25,000 genes instead of the 80,000 that had initially been predicted.64 The sequence provides the means for identifying these genes, including those that cause monogenic disease or that confer susceptibility and resistance to common multifactorial disease. The search for linkage of common disease phenotypes to genetic markers, especially single nucleotide polymorphisms and haplotypes, has become an important strategy in medical genetics. The discovery of a number of susceptibility genes in both Type 1 and Type 2 diabetes and in rheumatoid arthritis is a significant example.65 In another strategy, termed array CGH and used to detect microdeletions and microduplications, DNA sequences that are spaced at defined distances from one another are chosen from the genome database. A low-resolution array may have 3000 markers spaced at approximately 1-Mb intervals across the genome. Higher-resolution arrays, containing a million DNA “marker” sequences, are also available. These DNA sequences are arrayed as spots on glass slides, and the patient's DNA sample plus control is hybridized to the microarrays, which are then screened for duplications and deletions by the same CGH methodology used for chromosomal CGH. The patient-to-control ratios are plotted on chromosome maps to reveal regions of genetic imbalance (Fig. 2). At high resolution, imbalances smaller than 1 Mb are detected, and it may be difficult to distinguish pathologic imbalance from normal (benign) copy number variants.66,67 The problem may be resolved by determining whether the same variant is present in controls from the normal population or in normal first-degree relatives. The great advantage of the technology is the ability to interrogate the whole genome at high resolution. 
This is a huge improvement over classical karyotyping, and it is to be expected that array CGH technology will replace much of the diagnostic cytogenetics that is current today.68 However, the present array CGH technology is expensive and beyond the means of many genetic services. Only increased take-up can be expected to reduce costs and bring the advantages within reach of more of our patients.
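Calling imbalances from an array profile, and checking them against unaffected individuals as described above, can be sketched in a few functions. The Python sketch below is a simplification under stated assumptions: the log2 ratio thresholds, the minimum of three consecutive probes, and all names and data are hypothetical, and it merely merges runs of adjacent abnormal probes where production software typically uses dedicated segmentation algorithms.

```python
import math
from itertools import groupby
from typing import List, Tuple

# Hypothetical probe record: (chromosome, position in bp, patient signal, control signal).
Probe = Tuple[str, int, float, float]
# Called segment: (chromosome, first probe position, last probe position, state).
Segment = Tuple[str, int, int, str]

# Assumed, illustrative thresholds on log2(patient/control).
LOG2_GAIN = 0.3
LOG2_LOSS = -0.3
MIN_PROBES = 3  # assumed minimum run of consecutive abnormal probes


def probe_state(patient: float, control: float) -> str:
    """Classify one probe from its log2 patient-to-control ratio."""
    ratio = math.log2(patient / control)
    if ratio >= LOG2_GAIN:
        return "gain"
    if ratio <= LOG2_LOSS:
        return "loss"
    return "normal"


def call_segments(probes: List[Probe], min_probes: int = MIN_PROBES) -> List[Segment]:
    """Merge consecutive probes sharing a chromosome and state into segments."""
    annotated = [(chrom, pos, probe_state(p, c))
                 for chrom, pos, p, c in sorted(probes)]
    segments = []
    for (chrom, state), run in groupby(annotated, key=lambda x: (x[0], x[2])):
        run = list(run)
        if state != "normal" and len(run) >= min_probes:
            segments.append((chrom, run[0][1], run[-1][1], state))
    return segments


def seen_in_unaffected(segment: Segment, reference: List[Segment]) -> bool:
    """True if an overlapping imbalance of the same type was found in a
    normal relative or population control (pointing towards a benign CNV)."""
    chrom, start, end, state = segment
    return any(r_chrom == chrom and r_state == state
               and r_start <= end and start <= r_end
               for r_chrom, r_start, r_end, r_state in reference)


if __name__ == "__main__":
    probes = [("22", 1_000_000, 1.0, 1.0), ("22", 2_000_000, 1.0, 1.0),
              ("22", 3_000_000, 0.5, 1.0), ("22", 4_000_000, 0.5, 1.0),
              ("22", 5_000_000, 0.5, 1.0), ("22", 6_000_000, 0.5, 1.0),
              ("22", 7_000_000, 1.0, 1.0), ("7", 1_000_000, 1.5, 1.0)]
    parent = [("22", 2_500_000, 4_000_000, "loss")]  # hypothetical parental CNV
    for seg in call_segments(probes):
        print(seg, "seen in unaffected parent:", seen_in_unaffected(seg, parent))
```

Here the four-probe deletion on chromosome 22 is called, while the single abnormal probe on chromosome 7 is ignored as too short. Overlap with a parental variant is treated only as a flag for review, not as a classification.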

CONCLUSIONS

Advances in cytogenetics have been the stimulus for progress in medical genetics and have made genetic diagnosis possible today at the level of the DNA molecule rather than the phenotype. This is something that we dreamed about in 1956 and could have scarcely contemplated occurring in such a comparatively short time. Our specialty can take pride in this achievement, and I, for one, feel it a great privilege to have worked in the field for the past 50 years.

Looking back to the beginning of the 20th Century, the early geneticists were searching for laws that explained the variation on which natural selection operated. They found their answer in the chromosomes. One hundred and fifty years after the publication of On the Origin of Species, Darwin would have been pleased to learn about copy number variation, because such variation lies at the heart of evolutionary mechanisms.

References

Darwin C . On the origin of species by means of natural selection, or the preservation of favoured races in the struggle for life. London: John Murray, 1859.

Boveri T . Ergebnisse über die Konstitution der chromatischen Substanz des Zellkerns. Jena: Gustav Fischer, 1904.

Sutton WS . The chromosomes in heredity. Biol Bull 1903; 4: 231–251.

Bridges CB . Non-disjunction as proof of the chromosome theory of heredity. Genetics 1916; 1: 107–163.

Fisher RA . The genetical theory of natural selection. Oxford: Clarendon Press, 1930.

Arnold T . Beobachtungen über Kerntheilungen in den Zellen der Geschwülste. Virchow's Arch 1879; 78: 279–301.

Flemming W . Über Chromosomenzahl beim Menschen. Anat Anz 1898; 14: 171–174.

Flemming W . Zellsubstanz, Kern, und Zelltheilung. Leipzig: Verlag Vogel, 1882.

Waldeyer W . Über Karyokinese und ihre Beziehungen zu den Befruchtungsvorgängen. Arch Mikrosk Anat 1888; 32: 1–122.

Winiwarter H . Études sur la spermatogenèse humaine. Arch Biol (Liege) 1912; 27: 91–189.

Painter TS . The Y chromosome in mammals. Science 1921; 53: 503–504.

Tjio JH, Levan A . The chromosome number of man. Hereditas 1956; 42: 1–6.

Garrod AE . The incidence of alkaptonuria: a study in chemical individuality. Lancet 1902; ii: 1616–1620.

Bell J, Haldane JBS . The linkage between the genes for colour blindness and haemophilia in man. Proc R Soc B 1937; 123: 119–150.

Penrose LS . A clinical and genetic study of 1280 cases of mental defect. MRC Special Report 229. London: HMSO, 1938.

Watson JD, Crick FHC . Molecular structure of nucleic acids. Nature 1953; 171: 737–738.

Hsu TC . Mammalian chromosomes in vitro. I. The karyotype of man. J Heredity 1952; 43: 167–172.

Makino S, Nishimura I . Water pre-treatment squash technique. Stain Technol 1952; 27: 1–7.

Hughes A . Some effects of abnormal tonicity on dividing cells in chick tissue cultures. Q J Microsc Sci 1952; 93: 207–220.

Zhivago P, Morosov B, Ivanickaya A . Über die Einwirkung der Hypotonie auf die Zellteilung in den Gewebkulturen des embryonalen Herzens. C R Acad Sci URSS 1934; 3: 385–386.

Ford CE, Jacobs PA, Lajtha LG . Human somatic chromosomes. Nature 1958; 181: 1565–1568.

Lejeune J, Gautier M, Turpin R . Étude des chromosomes somatiques de neuf enfants mongoliens. CR Acad Sci Paris 1959; 248: 1721–1722.

Ford CE, Jones KW, Polani PE, de Almeida JC, Briggs JH . A sex chromosome anomaly in a case of gonadal dysgenesis (Turner's syndrome). Lancet 1959; i: 711–713.

Jacobs PA, Strong JA . A case of human intersexuality having a possible XXY sex determining mechanism. Nature 1959; 183: 302–303.

Edwards JH, Harnden DG, Cameron AH, Crosse VM, Wolff OH . A new trisomic syndrome. Lancet 1960; i: 787–790.

Patau K, Smith DW, Therman E, Inhorn SL, Wagner HP . Multiple congenital anomaly caused by an extra autosome. Lancet 1960; i: 790–793.

Denver Conference. A proposed standard system of nomenclature of human mitotic chromosomes. Lancet 1960; i: 1063–1065.

Ferguson-Smith MA . From chromosome number to chromosome map: the contribution of human cytogenetics to genome mapping. In: Sumner AT, Chandley AC, editors. Chromosomes today, Vol. 11, Chap. 1. London: Chapman and Hall, 1993: 3–19.

Moorhead PS, Nowell P, Mellman W, Battips D, Hungerford D . Chromosome preparations of leukocytes cultured from human peripheral blood. Exp Cell Res 1960; 20: 613–616.

Chrustschoff GK, Berlin EA . Cytological investigations on cultures of normal blood. J Genet 1935; 31: 243–261.

Nowell PC, Hungerford DA . A minute chromosome in human chronic myelocytic leukaemia [abstract]. Science 1960; 132: 1497.

Polani PE, Briggs JH, Ford CE, Clarke CM, Berg JM . A mongol girl with 46 chromosomes. Lancet 1960; i: 721–724.

Boveri T . Zur Frage der Entstehung maligner Tumoren. Jena: Gustav Fischer, 1914.

German JL . DNA synthesis in human chromosomes. Trans N Y Acad Sci 1962; 24: 395–407.

Ferguson-Smith MA . The sites of nucleolus formation in human pachytene chromosomes. Cytogenetics 1964; 3: 124–134.

Carr DH . Chromosome studies in spontaneous abortion. Obstet Gynec 1965; 26: 308–326.

Ohno S, Kaplan WD, Kinosita R . Formation of the sex chromatin by a single X chromosome in liver cells of Rattus norvegicus. Exp Cell Res 1959; 18: 415–418.

Lyon MF . Gene action in the mammalian X chromosome of the mouse (Mus musculus L.). Nature 1961; 190: 372–373.

Weiss M, Green H . Human-mouse hybrid cell lines containing partial complements of human chromosomes and functioning human genes. Proc Nat Acad Sci USA 1967; 58: 1104–1111.

Donahue RP, Bias WB, Renwick JH, McKusick VA . Probable assignment of the Duffy blood group locus to chromosome 1 in man. Proc Nat Acad Sci USA 1968; 61: 949–955.

Pardue ML, Gall JG . Chromosomal localisation of mouse satellite DNA. Science 1970; 168: 1356–1358.

Sumner AT, Evans HJ, Buckland RA . New technique for distinguishing between human chromosomes. Nature 1971; 232: 31–32.

Seabright M . A rapid banding technique for human chromosomes. Lancet 1971; ii: 971–972.

Caspersson T, Zech L, Johansson C . Differential binding of alkylating fluorochromes in human chromosomes. Exp Cell Res 1970; 60: 315–319.

Rowley JD . A new consistent chromosomal abnormality in chronic myelogenous leukaemia identified by quinacrine fluorescence and Giemsa staining. Nature 1973; 243: 290–293.

Malcolm S, Barton P, Murphy C, Ferguson-Smith MA . Chromosomal localisation of a single copy gene by in situ hybridisation: beta globin genes on the short arm of chromosome 11. Ann Hum Genet 1981; 45: 135–141.

Langer PR, Waldrop AA, Ward DC . Enzymatic synthesis of biotin-labelled polynucleotides: novel nucleic acid affinity probes. Proc Nat Acad Sci USA 1981; 78: 6633–6637.

Lichter P, Tang CC, Call CK, et al. High resolution mapping of human chromosome 11 by in situ hybridisation with cosmid clones. Science 1990; 247: 64–69.

Steele MW, Breg WR . Lancet 1966; i: 383–385.

Nadler HL . Antenatal detection of hereditary disorders. Pediatrics 1968; 42: 912–918.

Ferguson-Smith ME, Ferguson-Smith MA, Nevin NC, Stone M . Chromosome analysis before birth and its value in genetic counselling. Br Med J 1971; 4: 69–74.

Donald I, Brown TG . Br J Radiol 1961; 40: 604.

Brock DJH, Sutcliffe RG . Alphafetoprotein in the antenatal diagnosis of anencephaly and spina bifida. Lancet 1972; ii: 197–199.

Ferguson-Smith MA . The reduction in anencephalic and spina bifida births by maternal serum alphafetoprotein screening. Br Med Bull 1983; 39: 365–372.

Merkatz IR, Nitowsky HM, Macri JN, Johnson WE . An association between low maternal serum alphafetoprotein and fetal chromosomal abnormalities. Am J Obstet Gynecol 1984; 148: 886–894.

Cuckle HS, Malone FD, Wright D, et al. Contingent screening for Down syndrome—results from the FaSTER trial. Prenat Diagn 2008; 28: 89–94.

Lo YMD, Corbetta N, Chamberlain PF, et al. Presence of fetal DNA in maternal plasma and serum. Lancet 1997; 350: 485–487.

Lo YMD, Tsui NBY, Chiu RWK, et al. Plasma placental RNA allelic ratio permits non-invasive prenatal chromosomal aneuploidy detection. Nat Med 2007; 13: 218–223.

Saiki RK, Gelfand DH, Stoffel S, et al. Primer-directed enzymatic amplification of DNA with a thermostable DNA polymerase. Science 1988; 239: 487–491.

Schröck E, du Manoir S, Veldman T, et al. Multicolor spectral karyotyping of human chromosomes. Science 1996; 273: 494–497.

Ferguson-Smith MA, Trifonov V . Mammalian karyotype evolution. Nat Rev Genet 2007; 8: 950–962.

Rens W, O'Brien PCM, Grützner F, et al. The multiple sex chromosomes of platypus and echidna are not completely identical and several share homology with the avian Z. Genome Biol 2007; 8: R243.

Kallioniemi A, Kallioniemi OP, Sudar D, et al. Comparative genomic hybridisation for molecular cytogenetic analysis of solid tumours. Science 1992; 258: 818–821.

International Human Genome Sequencing Consortium. Initial sequencing and analysis of the human genome. Nature 2001; 409: 860–921.

Wellcome Trust Case Control Consortium. Genome-wide association study of 14,000 cases of seven common diseases and 3000 shared controls. Nature 2007; 447: 661–678.

Iafrate AJ, Feuk L, Rivera MN, et al. Detection of large-scale variation in the human genome. Nat Genet 2004; 36: 949–951.

Tuzun E, Sharp AJ, Bailey JA, et al. Fine-scale structural variation of the human genome. Nat Genet 2005; 37: 727–732.

Vermeesch JR, Fiegler H, de Leeuw N, et al. Guidelines for molecular karyotyping in constitutional genetic diagnosis. Eur J Hum Genet 2007; 15: 1105–1114.

Additional information

Disclosure: The author declares no conflict of interest.

The Pruzansky Lecture presented at the American College of Medical Genetics Annual Clinical Genetics Meeting, 14th March, 2008.

Cite this article

Ferguson-Smith, M. Cytogenetics and the evolution of medical genetics. Genet Med 10, 553–559 (2008). https://doi.org/10.1097/GIM.0b013e3181804bb2
