Abstract

Purpose

Randomised controlled trials (RCTs) represent a gold standard for evaluating therapeutic interventions. However, poor reporting clarity can prevent readers from assessing potential bias arising from a lack of methodological rigour. The Consolidated Standards of Reporting Trials statement for non-pharmacological interventions 2008 (CONSORT NPT) was developed to aid reporting. RCTs in ophthalmic surgery pose particular challenges in study design and implementation. We aim to provide the first assessment of the compliance of RCTs in ophthalmic surgery with the CONSORT NPT statement.

Method

In August 2012, the Medline database was searched for RCTs in ophthalmic surgery reported between 1 January 2011 and 31 December 2011. Two authors independently screened the results and selected relevant papers. Papers were scored against the 23-item CONSORT NPT checklist, and the scores were compared with surrogate markers of paper quality. The CONSORT score was also compared between different RCT designs.

Results

In all, 186 papers were retrieved. Sixty-five RCTs, involving 5803 patients, met the inclusion criteria. The mean CONSORT score was 8.9 out of 23 (39%, range 3.0–14.7, SD 2.49). The least-reported items related to the title and abstract (1.6%), reporting of intervention adherence (3.1%), and interpretation of results (4.7%). No significant correlation was found between CONSORT score and journal impact factor (R=0.14, P=0.29) or number of authors (R=0.01, P=0.93), and CONSORT score did not differ significantly between paired-eye, one-eye, and two-eye randomisation designs (P=0.97).

Conclusions

The reporting of RCTs in ophthalmic surgery is suboptimal. Further work is needed by trial groups, funding agencies, authors, and journals to improve reporting clarity.


Introduction

The randomised controlled trial (RCT) is a cornerstone of medical research and evidence-based medicine. RCTs are widely regarded as the ‘criterion standard’ for evaluating the effectiveness of an intervention and are classed as level 1b in the Oxford Centre for Evidence-based Medicine Levels of Evidence.1 However, poorly reported RCTs are associated with biased estimates of intervention effectiveness,2, 3 and with inconsistencies between conclusions and results.4 Adequate and accurate reporting is vital to allow readers to appraise a trial critically and interpret its data.

The Consolidated Standards of Reporting Trials (CONSORT) statement was developed to provide a minimum set of standards for transparent reporting of RCTs. The original CONSORT statement, published in 1996,5 was revised in 2001,6, 7 and most recently updated in 2010.8 An extension to the statement was also developed to address specific issues in reporting RCTs that evaluate surgical interventions.9 The 2008 CONSORT extension for non-pharmacological treatment interventions (CONSORT NPT) is an extension of the 2001 CONSORT checklist that adds items on masking difficulty, intervention complexity, and variation in care providers' expertise, issues that commonly affect surgical RCTs.10, 11

RCTs in ophthalmology present further challenges for researchers;12 for example, each patient can contribute two eyes, and hence two data points. Studies in ophthalmology may therefore require alternative designs, and hence alternative methods of analysis, to accommodate this.13, 14 The reporting of RCT abstracts in ophthalmology has previously been found to be suboptimal.15 A review of 24 ophthalmology RCTs published in 1999 found that, on average, only 33.4 of 57 descriptors were adequately reported to the standard described in the 1996 CONSORT statement.16 We are unaware of any previous assessment of the compliance of RCTs in ophthalmic surgery with the CONSORT NPT, and could find none in a computerised search of the PubMed database.

The primary objective of this study was to assess the compliance of recent RCTs in ophthalmic surgery with the 2008 CONSORT NPT extension of the CONSORT 2001 statement. The secondary objectives were to identify any associations between CONSORT NPT compliance and surrogate markers of article quality: the ISI 2011 impact factor of the publishing journal, the number of authors, the number of patients in the trial, and whether the study was single- or multi-centre. The association between CONSORT score and the randomisation design of ophthalmology RCTs was also analysed.

Materials and methods

Search method

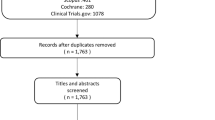

The Medline database was searched during August 2012 for RCTs from the period 1 January 2011 to 31 December 2011 using the Medical Subject Headings ‘Ophthalmic Surgical Procedures’ NOT ‘Pharmacology’, with the ‘explode’ function activated. The search was limited to English-language trials in human subjects. Two authors (ACY and AK) then independently screened the results by hand for RCTs that satisfied the inclusion criteria, reviewing titles and abstracts. Where the title and abstract gave insufficient information to determine inclusion, the full article was obtained and reviewed. The two authors resolved any disagreements in article selection by consensus; where differences remained, a third author (CFC) made the final decision. After the final selection was confirmed, all full articles were obtained. The search protocol is summarised in the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) flow diagram (Figure 1).

Studies were included only if they were parallel-group RCTs in humans with a surgical procedure as at least one intervention arm. Studies involving purely pharmacological interventions, cost-effectiveness or economic analyses, interim analyses, short communications, simulation studies, and studies involving only cadaveric eyes were excluded.

Scoring

The papers were then scored independently by two authors (ACY and AK) against the 23 items of the 2008 CONSORT NPT extension of the 2001 CONSORT checklist. All items were weighted equally and scored 1 or 0, for a maximum of 23. An article scored 1 for an item only if all the information specified by that item was reported, an approach reflecting the latest CONSORT 2010 guidelines;8 otherwise the item scored 0. Two items are subdivided in the CONSORT NPT statement: item 4 has three parts (4A, 4B, and 4C) and item 11 has two parts (11A and 11B). The parts of these items were scored independently, each part of item 4 being worth one-third of a point and each part of item 11 one-half. The resulting mark out of 23 was termed the ‘CONSORT score’. After initial scoring, any discrepancies between the two authors' scores were settled by consensus; if agreement could not be reached, the third author (CFC) made the final decision.
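The scoring rule above can be expressed as a short sketch. It assumes each paper's assessment is stored as a mapping from item label to a 0/1 mark, using hypothetical labels "1" to "23" plus "4A" to "4C" and "11A" to "11B" for the subdivided items; this data layout is illustrative and is not taken from the study or from any CONSORT file.

```python
from fractions import Fraction

# Hypothetical item labels: items 1-23, with item 4 split into 4A-4C
# and item 11 split into 11A-11B, as in the scoring rules above.
SUBDIVIDED = {"4": ["4A", "4B", "4C"], "11": ["11A", "11B"]}
ITEMS = [str(i) for i in range(1, 24)]


def consort_score(marks: dict[str, int]) -> Fraction:
    """Return the CONSORT score (out of 23) for one paper.

    `marks` maps each item or sub-item label to 1 if every element of
    that item was reported, else 0. Missing labels count as 0.
    """
    total = Fraction(0)
    for item in ITEMS:
        parts = SUBDIVIDED.get(item)
        if parts is None:
            total += marks.get(item, 0)
        else:
            # Each part carries an equal share of the item's single point
            # (one-third for item 4, one-half for item 11).
            share = Fraction(1, len(parts))
            total += share * sum(marks.get(p, 0) for p in parts)
    return total
```

For example, a paper reporting items 2, 5, and 22 in full, parts 4A and 4B, and part 11A would score 3 + 2/3 + 1/2 = 25/6, or about 4.17. Using `Fraction` keeps the thirds and halves exact until a final rounding step.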

Secondary analyses

The relationship between the CONSORT score and several prespecified surrogate markers of article quality was also analysed. These were the number of authors,17, 18 the number of patients, the ISI 2011 impact factor of the publishing journal,19 and whether the study was single- or multi-centre. The relationship between the CONSORT score and the designs of randomisation in ophthalmology RCTs defined by Lee et al12 was also analysed: paired-eye, one-eye, and two-eye designs.

Statistical analyses

Inter-rater reliability was assessed using Cohen’s kappa. Spearman’s rank correlation coefficient was used to assess the relationship between CONSORT score and surrogate markers of article quality. The Mann–Whitney U test was used to compare CONSORT scores between single- and multi-centre trials. The Kruskal–Wallis test was used to compare CONSORT scores between paired-eye, one-eye, and two-eye designs, and between same-group, different-group, and mixed two-eye designs. All statistical analyses were carried out using SPSS (version 22.0; SPSS Inc., Chicago, IL, USA).
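The analyses were run in SPSS; purely to illustrate what two of the rank-based statistics compute, here is a stdlib-only Python sketch (not the authors' code). Spearman's rho is taken as Pearson's correlation of average ranks, which handles tied scores, and the Mann–Whitney U statistic is derived from rank sums in the pooled sample. P-values are omitted; in practice a statistics package such as SciPy (`scipy.stats.spearmanr`, `scipy.stats.mannwhitneyu`) would supply them.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the value at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson's r on average ranks.

    Undefined (ZeroDivisionError) if either variable is constant.
    """
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


def mann_whitney_u(group_a, group_b):
    """Mann-Whitney U for group_a, computed from ranks in the pooled sample."""
    pooled = list(group_a) + list(group_b)
    ranks = average_ranks(pooled)
    n_a = len(group_a)
    rank_sum_a = sum(ranks[:n_a])
    return rank_sum_a - n_a * (n_a + 1) / 2
```

Average ranks matter here because CONSORT scores take a limited set of values, so ties between papers are common.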

Results

In all, 186 articles were retrieved from the search of the Medline database (Figure 1). Of these, 69 articles were selected. Following review of the full articles, four were excluded: two because they were not RCTs and two because they were unrelated to ophthalmology. The remaining 65 RCTs, involving 5803 patients, met the inclusion criteria. Inter-observer agreement on article selection gave a kappa score of 0.91. In total, 1495 items (65 RCTs × 23 items) were scored. After the initial round of scoring, the two authors' scores differed on 50 items (2.8%), all of which were resolved by discussion. The kappa score for the initial round of scoring was 0.94.
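Cohen's kappa compares the observed agreement between two raters with the agreement expected by chance from each rater's marginal proportions: kappa = (p_o - p_e) / (1 - p_e). A minimal sketch for two raters' item-level marks follows; the list-of-marks input is an assumption, and the values in any usage are invented for illustration, not data from this study.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters scoring the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must score the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: proportion of items given the same mark.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: for each category, product of the two raters'
    # marginal proportions, summed over categories.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    categories = count_a.keys() | count_b.keys()
    p_e = sum(count_a[c] * count_b[c] for c in categories) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)
```

Applied to each author's item-level 0/1 marks, this is the kind of calculation behind the kappa values reported above. Because it corrects for chance, kappa is typically lower than raw percentage agreement when one mark (here, 0) dominates.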

The mean CONSORT score of the 65 RCTs was 8.9 out of 23 (39%, range 3.0–14.7, SD 2.49). Compliance with individual items is shown in Table 1 and Figure 2. The least-reported items were item 1: title and abstract (one paper, 1.6%), item 4C: details of how adherence to the protocol was assessed (two papers, 3.1%), and item 20: interpretation of results (three papers, 4.7%). No paper adequately reported every item on the CONSORT checklist.
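Per-item compliance is the percentage of papers scoring 1 on an item. The sketch below assumes a hypothetical list of per-paper mark dictionaries (item label to 0/1) and also lists the items reported by fewer than a chosen percentage of papers, as in the 50% and 25% thresholds used in the Discussion.

```python
def item_compliance(papers):
    """Percentage of papers that adequately reported each item.

    `papers` is a list of dicts mapping item labels to 0/1 marks
    (a hypothetical data layout; missing items count as not reported).
    """
    items = sorted({item for marks in papers for item in marks})
    n = len(papers)
    return {item: 100 * sum(m.get(item, 0) for m in papers) / n for item in items}


def items_below(compliance, threshold_pct):
    """Items reported by strictly fewer than `threshold_pct` per cent of papers."""
    return [item for item, pct in compliance.items() if pct < threshold_pct]
```

The comparison is strict, so an item reported by exactly 25% of papers is not counted as below 25%.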

The impact factors of six journals, which together published 7 of the 65 RCTs, were not listed in Thomson Reuters’ Journal Citation Reports.19 For the remaining 58 papers, there was no correlation between CONSORT score and impact factor (Spearman rho=0.14, P=0.29, Cohen’s d=3.297; Figure 3). There was no correlation between CONSORT score and the number of authors (Spearman rho=0.01, P=0.93, Cohen’s d=1.533). There was no statistically significant difference in scores between single- and multi-centre trials (P=0.58, Cohen’s d=0.226), or between paired-eye, one-eye, and two-eye RCT designs (P=0.98, partial η²=0.001). Nor was there a statistically significant difference in CONSORT score between same-group, different-group, and mixed two-eye RCT designs (P=0.97, partial η²=0.005).
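The three-way design comparisons above use the Kruskal–Wallis test, which ranks all scores together and asks whether mean ranks differ between groups. The stdlib-only sketch below (an illustration, not the SPSS procedure) computes the tie-corrected H statistic. With exactly three groups the large-sample chi-squared approximation has 2 degrees of freedom, whose upper-tail probability is exp(-H/2), so no distribution library is needed; for other group counts, `scipy.stats.kruskal` is the usual tool, and exact small-sample p-values may differ from this approximation.

```python
from collections import Counter
from math import exp


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis_3(a, b, c):
    """Tie-corrected Kruskal-Wallis H and its chi-squared p-value for 3 groups."""
    groups = [list(a), list(b), list(c)]
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    # Sum of (rank sum)^2 / group size over the three groups.
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n) over tied runs.
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    h /= 1 - tie_term / (n ** 3 - n)
    # With 3 groups df = 2, and the chi-squared(2) upper tail is exp(-x / 2).
    return h, exp(-h / 2)
```

For instance, three completely separated groups [1, 2, 3], [4, 5, 6], and [7, 8, 9] give H = 7.2 and p = exp(-3.6), about 0.027.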

Discussion

RCT adherence to the CONSORT NPT checklist varied considerably: CONSORT scores ranged from 3.0 to 14.7 out of 23. Several items integral to trial reporting, such as the background, rationale, objectives, and hypotheses, were well reported; notably, adherence exceeded 95% for item 2 (background), item 5 (objectives and hypotheses), and item 22 (general interpretation of results in the context of current evidence). Despite this, the mean score was only 8.9 out of 23 (39%), and no RCT obtained a full score.

Suboptimal compliance of RCT reporting with CONSORT has also been found in many other surgical specialties, including urological surgery,20 general surgery,21 neurosurgery,22 orthopaedic surgery,23 plastic surgery,24 and vascular surgery,20 as well as in medical specialties such as cardiology.25 Deficiencies identified in previous studies include particularly poor reporting of randomisation implementation, masking status, and healthcare providers.26, 27 We found similar deficiencies. A review by Agha et al20 of 164 RCTs in six surgical specialties reported an average CONSORT score of only 11.2 out of 22 items (51%) using the 2001 CONSORT statement. Our study used the same statement together with the CONSORT NPT extension, and the additional criteria of the extension probably account for our lower score (39%).

Compliance with individual items varied similarly, and this inter-item variability appears consistent across specialties.20, 21, 22, 23, 24, 25, 28 In our study, over 90% of RCTs adequately reported the scientific background and rationale (item 2), the objectives or hypotheses (item 5), and the interpretation of results in the context of current evidence (item 22). This is perhaps unsurprising, as these items represent the best-recognised and most readily achievable standards in RCT reporting. High levels of reporting of item 2,20, 25 item 5,21, 24, 25 and item 22,20, 25 have also been found in other specialties. Nevertheless, 15 of the 23 items were reported in fewer than 50% of the RCTs, and nine of these in fewer than 25%. Similar findings have been reported across a wide range of surgical specialties.20, 21, 22, 23, 24, 28 Although most RCTs reported at least one aspect of a given item, a common reason for failing to score on an item was not reporting all of the aspects it specifies.

The least-reported item was item 1 (title and abstract), adequately reported in only one RCT (1.6%). Previous studies have found this item to be well reported in other specialties,20, 22, 24 but those studies assessed compliance against the CONSORT 2001 statement. In our study, RCTs generally mentioned ‘randomisation’ in the title or abstract, fulfilling one aspect of the item, but often failed to describe the additional elements required by the CONSORT NPT extension: the experimental treatment, care provider, centres involved, and masking status. Our predetermined scoring strategy, reflecting the CONSORT 2010 guidelines,8 awarded the point only if all aspects of the item were described. These findings are consistent with those of Camm et al,25 who assessed reporting against the CONSORT 2010 statement. Sufficiently detailed abstracts are essential because readers often base their assessment of a trial on the information in the abstract. The value of complete abstract reporting is highlighted by the CONSORT Extension for Abstracts checklist.29 Despite the publication of that checklist, Knobloch and Vogt30 found a mean compliance of only 9.46 of its 17 items in 39 abstracts from the Annals of Surgery. Similarly, Berwanger et al31 reviewed 227 abstracts from the NEJM, JAMA, BMJ, and The Lancet and found that only 21 (9.3%) specified masking status.

There was no correlation between CONSORT score and surrogate markers of article quality. This is perhaps unsurprising, because the CONSORT statement assesses the quality of RCT reporting rather than the quality of the trial design itself. Neither a higher number of authors nor a higher journal impact factor was associated with better CONSORT compliance, contrary to the common belief that such markers help to identify superior articles.17, 18 Indeed, the evidence for an association between surrogate markers of quality and CONSORT score is inconsistent. Camm et al25 found a significant association between impact factor and CONSORT 2010 score in RCTs of anti-arrhythmic agents, and Balasubramanian et al21 found that, in general surgery, CONSORT score was significantly associated with a higher number of authors, multi-centre design, and impact factor. However, Agha et al20 found no significant association between CONSORT score and the same surrogate markers. Previous work has also shown no link between higher impact factor and better trial methodology.32 Rigorous adoption of CONSORT by journals, however, has been shown to correlate with improved reporting quality.33, 34, 35, 36, 37

Fulfilment of the CONSORT checklist items was suboptimal across different types of RCT design. There was no significant difference in CONSORT score between single- and multi-centre trials (P=0.16), nor between trials randomising both eyes to the same group, to different groups, or to a combination of the two (mixed) (P=0.46). This suggests that the need to improve reporting quality is not confined to particular types of study but applies across them.

Healthcare providers face particular challenges in conducting surgical RCTs compared with pharmaceutical trials.12, 38, 39, 40, 41 Notable difficulties include achieving and implementing masking, variation in care providers' expertise, and differences in centres' patient volumes. Furthermore, inadequate funding and difficulty in obtaining consent may limit recruitment, leading to small sample sizes and inadequate statistical power.42, 43 These factors may reduce the accuracy with which the effectiveness of interventions is evaluated.44 The CONSORT NPT extension provides a specific checklist setting out reporting standards for these factors, which are not necessarily relevant to pharmaceutical trials.

Accurate and complete reporting of RCTs in ophthalmic surgery is especially important because of the potential added complexity of study design. The availability of two potential data points per patient (ie two eyes) can lead to considerable heterogeneity in design, randomisation method, and statistical analysis.12, 45 Although readers need to be told accurately which statistical methods were used, statistical considerations relating to study design are often under-reported in ophthalmology RCTs.12 In our study, 32 of the 64 RCTs (50%) adequately satisfied item 12 (statistical methods). Poor reporting quality can prevent readers from assessing potential bias arising from a lack of methodological rigour.46
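One reason eye-level analyses need explicit reporting is that a patient's two eyes are correlated. A common approximation, assumed here for illustration and not taken from this study, is the design effect for equal-sized clusters, DE = 1 + (m - 1)ρ, where m is the number of eyes per patient and ρ the inter-eye correlation; the effective number of independent observations is then the number of eyes divided by DE. This applies when both eyes of a patient receive the same intervention (as in a two-eye, same-group design); paired-eye designs that compare a patient's two eyes instead gain precision from the correlation.

```python
def effective_sample_size(n_patients, eyes_per_patient, inter_eye_corr):
    """Approximate effective sample size for eye-level data.

    Uses the design effect DE = 1 + (m - 1) * rho for clusters of equal
    size m (here, eyes per patient) with intra-cluster correlation rho.
    """
    if not 0 <= inter_eye_corr <= 1:
        raise ValueError("this sketch assumes 0 <= rho <= 1")
    n_eyes = n_patients * eyes_per_patient
    design_effect = 1 + (eyes_per_patient - 1) * inter_eye_corr
    return n_eyes / design_effect
```

With a hypothetical ρ of 0.6, 100 patients contributing both eyes provide roughly the information of 125 independent observations rather than 200, so analysing the 200 eyes as independent would overstate precision.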

Inadequate adherence to the CONSORT NPT may arise from failure at any of the four stages of the awareness-to-adherence model of guideline compliance (awareness, agreement, adoption, and adherence) described by Pathman et al.47 Given the heterogeneity of study designs in ophthalmic surgery, authors may be reluctant to use a checklist that was not developed for their design. In addition, adoption of the CONSORT statement and its extensions in journals’ ‘Instructions to Authors’ has been suboptimal.48, 49, 50, 51 Despite a 73% increase since 2003, Hopewell et al49 found that only 62 of 165 (38%) high-impact journals mentioned the CONSORT statement in their ‘Instructions to Authors’. Although 50 of 57 responding editors (88%) stated that their journal recommended CONSORT, only 35 of 56 respondents (62%) stated that this was a requirement. Endorsement of the CONSORT extensions was especially lacking. Other factors, such as journal word limits, may also lead authors to report CONSORT items only selectively.

This study has several limitations. The search was restricted to English-language articles in the Medline database. The study period was restricted to 2011, precluding analysis of temporal trends in CONSORT score. The number of RCTs included from this period was relatively small, limiting the power to examine the relationship between CONSORT scores and surrogate markers of RCT quality. Some CONSORT items may have been reported in publicly available trial protocols, which were not analysed. Scoring RCT compliance with the CONSORT NPT also raises practical difficulties. Many items contain multiple elements, and how reviewers handle these elements when scoring is a potential source of subjectivity. We minimised this subjectivity by predefining the scoring strategy among the reviewers: an item was scored only if all of its elements were reported. This reflects the view that CONSORT items represent fundamental information, ‘the minimum criteria’ that should be reported in an RCT.8 Furthermore, all items on the checklist were weighted equally to minimise subjectivity. Although this may not reflect their relative importance, it provides an objective way to analyse deficits, patterns, and overall compliance.

The 2008 CONSORT NPT extension would benefit from an update to bring it in line with the CONSORT 2010 checklist. Key updates would include adding the three new items on trial registration, availability of the trial protocol, and declaration of funding. General changes might focus on clearer wording: replacing, simplifying, or removing misused words or phrases. In addition, greater specificity and further subdivision of items would help to address the additional requirements of NPTs.

There is a need to improve the quality of reporting of RCTs in ophthalmic surgery. Adoption of CONSORT by journals is associated with improved reporting quality,33, 34, 35, 36, 37, 52 and we therefore recommend that journals make their CONSORT requirements explicit to authors before submission and peer review. Editors, peer reviewers, authors, and developers of reporting guidelines would benefit from working closely with groups such as the Enhancing the Quality and Transparency of Health Research (EQUATOR) Network to support the development and dissemination of reporting guidelines.53 Further development of the CONSORT Statement may improve its compatibility with RCTs that use alternative methodologies, including within-person randomised trials, which are common in ophthalmic surgery. Future extensions to the CONSORT Statement will hopefully start to address this.27

Conclusion

In conclusion, our findings suggest that the 2008 CONSORT NPT guidelines were not being met in 2011. We recommend that authors, funding agencies, peer reviewers, and journal editors in ophthalmology collaborate to integrate CONSORT more fully into the RCT publication process. Evolution and further extension of CONSORT will hopefully help to accommodate studies with alternative methodologies, such as those seen in ophthalmology.

References

1. Oxford Centre for Evidence-based Medicine. Levels of Evidence, 2009. Available from http://www.cebm.net/index.aspx?o=1025 (accessed 9 March 2013).

2. Schulz KF, Hayes RJ, Altman DG. Empirical evidence of bias: dimensions of methodological quality associated with estimates of treatment effects in controlled trials. JAMA 1995; 273 (5): 408–412.

3. Moher D, Pham B, Jones A, Cook DJ, Jadad AR, Moher M et al. Does quality of reports of randomised trials affect estimates of intervention efficacy reported in meta-analyses? Lancet 1998; 352 (9128): 609–613.

4. Boutron I, Ravaud P, Altman DG. Reporting and interpretation of randomized controlled trials with statistically nonsignificant results for primary outcomes. JAMA 2010; 303 (20): 2058–2064.

5. Begg C, Cho M, Eastwood S, Horton R, Moher D, Olkin I et al. Improving the quality of reporting of randomized controlled trials: the CONSORT statement. JAMA 1996; 276 (8): 637–639.

6. CONSORT Group. CONSORT Statement 2001 Checklist, 2001. Available from http://www.consort-statement.org/mod_product/uploads/CONSORT%202001%20checklist.pdf (accessed 9 March 2013).

7. Moher D, Schulz KF, Altman D, CONSORT Group (Consolidated Standards of Reporting Trials). The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomized trials. JAMA 2001; 285 (15): 1987–1991.

8. Schulz KF, Altman DG, Moher D, CONSORT Group. CONSORT 2010 Statement: updated guidelines for reporting parallel group randomised trials. BMJ 2010; 340: c332.

9. CONSORT Group. Extensions of the CONSORT statement, 2012. Available from http://www.consort-statement.org/extensions/ (accessed 9 March 2013).

10. Boutron I, Moher D, Altman DG, Schulz KF, Ravaud P, CONSORT Group. Extending the CONSORT statement to randomized trials of nonpharmacologic treatment: explanation and elaboration. Ann Intern Med 2008; 148 (4): 295–309.

11. Boutron I, Moher D, Altman DG, Schulz KF, Ravaud P, CONSORT Group. Methods and processes of the CONSORT Group: example of an extension for trials assessing nonpharmacologic treatments. Ann Intern Med 2008; 148 (4): W60–W66.

12. Lee CF, Cheng AC, Fong DY. Eyes or subjects: are ophthalmic randomized controlled trials properly designed and analyzed? Ophthalmology 2012; 119 (4): 869–872.

13. Ray WA, O’Day DM. Statistical analysis of multi-eye data in ophthalmic research. Invest Ophthalmol Vis Sci 1985; 26 (8): 1186–1188.

14. Gauderman WJ, Barlow WE. Sample size calculations for ophthalmologic studies. Arch Ophthalmol 1992; 110 (5): 690–692.

15. Scherer RW, Crawley B. Reporting of randomized clinical trial descriptors and use of structured abstracts. JAMA 1998; 280 (3): 269–272.

16. Sánchez-Thorin JC, Cortés MC, Montenegro M, Villate N. The quality of reporting of randomized clinical trials published in Ophthalmology. Ophthalmology 2001; 108 (2): 410–415.

17. Figg WD, Dunn L, Liewehr DJ, Steinberg SM, Thurman PW, Barrett JC et al. Scientific collaboration results in higher citation rates of published articles. Pharmacotherapy 2006; 26 (6): 759–767.

18. Willis DL, Bahler CD, Neuberger MM, Dahm P. Predictors of citations in the urological literature. BJU Int 2011; 107 (12): 1876–1880.

19. Thomson Reuters. Journal Citation Reports, 2011. Available from http://admin-apps.webofknowledge.com/JCR/JCR (accessed 9 March 2013).

20. Agha R, Cooper D, Muir G. The reporting quality of randomised controlled trials in surgery: a systematic review. Int J Surg 2007; 5 (6): 413–422.

21. Balasubramanian SP, Wiener M, Alshameeri Z, Tiruvoipati R, Elbourne D, Reed MW. Standards of reporting of randomized controlled trials in general surgery: can we do better? Ann Surg 2006; 244 (5): 663–667.

22. Kiehna EN, Starke RM, Pouratian N, Dumont AS. Standards for reporting randomized controlled trials in neurosurgery. J Neurosurg 2011; 114 (2): 280–285.

23. Parsons NR, Hiskens R, Price CL, Achten J, Costa ML. A systematic survey of the quality of research reporting in general orthopaedic journals. J Bone Joint Surg Br 2011; 93 (9): 1154–1159.

24. Karri V. Randomised clinical trials in plastic surgery: survey of output and quality of reporting. J Plast Reconstr Aesthet Surg 2006; 59 (8): 787–796.

25. Camm CF, Chen Y, Sunderland N. An assessment of the reporting quality of randomised controlled trials relating to anti-arrhythmic agents (2002–2011). Int J Cardiol 2013; 168 (2): 1393–1396.

26. Mills EJ, Wu P, Gagnier J, Devereaux PJ. The quality of randomized trial reporting in leading medical journals since the revised CONSORT statement. Contemp Clin Trials 2005; 26 (4): 480–487.

27. Altman DG, Moher D, Schulz KF. Improving the reporting of randomised trials: the CONSORT Statement and beyond. Stat Med 2012; 31 (25): 2985–2997.

28. Chen HL, Liu K. Reporting in randomized trials published in International Journal of Cardiology in 2011 compared to the recommendations made in CONSORT 2010. Int J Cardiol 2012; 160 (3): 208–210.

29. Hopewell S, Clarke M, Moher D, Wager E, Middleton P, Altman DG et al. CONSORT for reporting randomised trials in journal and conference abstracts. Lancet 2008; 371 (9609): 281–283.

30. Knobloch K, Vogt PM. Adherence to CONSORT abstract reporting suggestions in surgical randomized-controlled trials published in Annals of Surgery. Ann Surg 2011; 254 (3): 546 (author reply 546–547).

31. Berwanger O, Ribeiro RA, Finkelsztejn A, Watanabe M, Suzumura EA, Duncan BB et al. The quality of reporting of trial abstracts is suboptimal: survey of major general medical journals. J Clin Epidemiol 2009; 62 (4): 387–392.

32. Agha RA, Camm CF, Edison E, Orgill DP. The methodological quality of randomized controlled trials in plastic surgery needs improvement: a systematic review. J Plast Reconstr Aesthet Surg 2012; 66 (4): 447–452.

33. Devereaux PJ, Manns BJ, Ghali WA, Quan H, Guyatt GH. The reporting of methodological factors in randomized controlled trials and the association with a journal policy to promote adherence to the Consolidated Standards of Reporting Trials (CONSORT) checklist. Control Clin Trials 2002; 23 (4): 380–388.

34. Moher D, Jones A, Lepage L. Use of the CONSORT statement and quality of reports of randomized trials: a comparative before-and-after evaluation. JAMA 2001; 285 (15): 1992–1995. Available from http://www.ncbi.nlm.nih.gov/pubmed/11308436.

35. Alvarez F, Meyer N, Gourraud PA, Paul C. CONSORT adoption and quality of reporting of randomized controlled trials: a systematic analysis in two dermatology journals. Br J Dermatol 2009; 161 (5): 1159–1165.

36. Plint AC, Moher D, Morrison A, Schulz K, Altman DG, Hill C et al. Does the CONSORT checklist improve the quality of reports of randomised controlled trials? A systematic review. Med J Aust 2006; 185 (5): 263–267.

37. Han C, Kwak K, Marks DM, Pae CU, Wu LT, Bhatia KS et al. The impact of the CONSORT statement on reporting of randomized clinical trials in psychiatry. Contemp Clin Trials 2009; 30 (2): 116–122.

38. McCulloch P, Taylor I, Sasako M, Lovett B, Griffin D. Randomised trials in surgery: problems and possible solutions. BMJ 2002; 324 (7351): 1448–1451.

39. Gelijns AC, Ascheim DD, Parides MK, Kent KC, Moskowitz AJ. Randomized trials in surgery. Surgery 2009; 145 (6): 581–587.

40. Farrokhyar F, Karanicolas PJ, Thoma A, Simunovic M, Bhandari M, Devereaux PJ et al. Randomized controlled trials of surgical interventions. Ann Surg 2010; 251 (3): 409–416.

41. Boutron I, Guittet L, Estellat C, Moher D, Hróbjartsson A, Ravaud P. Reporting methods of blinding in randomized trials assessing nonpharmacological treatments. PLoS Med 2007; 4 (2): e61.

42. Watson A, Frizelle F. The end of the one-eyed surgeon? Time for more randomised controlled trials of surgical procedures. N Z Med J 2004; 117 (1203): U1096.

43. Solomon M, McLeod R. Surgery and the randomised controlled trial: past, present and future. Med J Aust 1998; 169 (7): 380–383.

44. Halm EA, Lee C, Chassin MR. Is volume related to outcome in health care? A systematic review and methodologic critique of the literature. Ann Intern Med 2002; 137 (6): 511–520.

45. Evans J. Reliable and accessible reviews of the evidence for the effect of health care: the role of the Cochrane Collaboration and the CONSORT statement. Eye (London) 1998; 12 (Pt 1): 2–4.

46. Juni P, Altman DG, Egger M. Systematic reviews in health care: assessing the quality of controlled clinical trials. BMJ 2001; 323 (7303): 42–46.

47. Pathman DE, Konrad TR, Freed GL, Freeman VA, Koch GG. The awareness-to-adherence model of the steps to clinical guideline compliance. The case of pediatric vaccine recommendations. Med Care 1996; 34 (9): 873–889.

48. Altman DG. Endorsement of the CONSORT statement by high impact medical journals: survey of instructions for authors. BMJ 2005; 330 (7499): 1056–1057.

49. Hopewell S, Altman DG, Moher D, Schulz KF. Endorsement of the CONSORT Statement by high impact factor medical journals: a survey of journal editors and journal ‘Instructions to Authors’. Trials 2008; 9: 20.

50. Schriger DL, Arora S, Altman DG. The content of medical journal instructions for authors. Ann Emerg Med 2006; 48 (6): 743–749, 749.e1–4.

51. Tharyan P, Premkumar TS, Mathew V, Barnabas JP, Manuelraj. Editorial policy and the reporting of randomized controlled trials: a survey of instructions for authors and assessment of trial reports in Indian medical journals (2004–05). Natl Med J India 21 (2): 62–68.

52. Kane RL, Wang J, Garrard J. Reporting in randomized clinical trials improved after adoption of the CONSORT statement. J Clin Epidemiol 2007; 60 (3): 241–249.

53. EQUATOR Network. Welcome to the EQUATOR Network website. Available from http://www.equator-network.org/home/ (accessed 9 March 2013).

Ethics declarations

Competing interests

The authors declare no conflict of interest.

Additional information

This work was previously presented at the 117th Annual Meeting of the Japanese Ophthalmological Society, 4–7 April 2013, in Tokyo, Japan, and at the Royal College of Ophthalmologists Annual Congress, 21–23 May 2013, in Liverpool, UK.

Cite this article

Yao, A., Khajuria, A., Camm, C. et al. The reporting quality of parallel randomised controlled trials in ophthalmic surgery in 2011: a systematic review. Eye 28, 1341–1349 (2014). https://doi.org/10.1038/eye.2014.206

DOI: https://doi.org/10.1038/eye.2014.206
