In 2019, the US Federal Trade Commission (FTC) won a US$50-million ruling against the publisher OMICS for deceptive business practices. The FTC’s investigation found that OMICS accepted and published nearly 69,000 articles in academic disciplines with little or no peer review. The judgement against the infamous publisher, located in Hyderabad, India, proved difficult to enforce. But the ensuing stigma still carries a penalty. In the two years after the FTC filed its complaint, the number of articles OMICS published under its own imprint fell by 40%. After all, a publisher with no reputation is preferable to a publisher with a bad one.

Predatory publishers take publication fees without performing advertised services such as archiving, indexing or quality control. They often use outright deception, such as fake editorial boards or impact factors, to appear legitimate. Researchers might submit work to these outlets naively or cynically; even unread or sloppy articles are rewarded by some universities’ tenure, hiring and promotion decisions. Often, these unvetted articles attract little attention. However, because they sometimes get harvested by non-selective academic search engines such as Google Scholar, they could be found — and read — as part of the scientific corpus.

A year after the FTC judgement, Krishnaswamy VijayRaghavan, principal scientific adviser to the Government of India, lamented the difficulty of stamping out the “menace” of predatory publishers. He likened them to the Hydra, the creature of Greek myth that sprouts two heads for each one severed.

To get a better look at this many-headed monster, we constructed a database of publishers that have not been indexed in selective bibliographic databases such as Web of Science or Scopus. Currently, this database, called Lacuna, indexes more than 900,000 papers across 2,300 journals from 10 publishers, a small fraction of the fringe of academic publishing. At present it mainly includes journals that falsely advertise peer review and other scholarly services. However, our long-term goal is to index publications across the legitimacy spectrum, from malicious fakes to scrappy, under-resourced start-ups. Our preliminary work has already uncovered deceptive practices we hadn’t anticipated. For example, OMICS branding has been removed from many titles. And predatory journals are reissuing, seemingly on their own initiative and without consent, genuine peer-reviewed articles that were first published elsewhere.

Better tracking is one strand of a broader strategy to defeat this Hydra. Other strands are better education and incentives for authors submitting manuscripts, and greater transparency around how legitimate journals vet work.

Buried branding

In 2020, OMICS changed hundreds of URLs and overhauled websites and typesetting to remove references to OMICS. It also introduced a ‘Hilaris’ brand. Although the titles of the rebranded journals remain listed on the OMICS web pages, mentions of OMICS are absent from the Hilaris web pages and from those of other subsidiaries. The Journal of Surgery, for example, continues under the new brand with the same DOI prefix, ISSN and editor-in-chief, with no mention of OMICS.

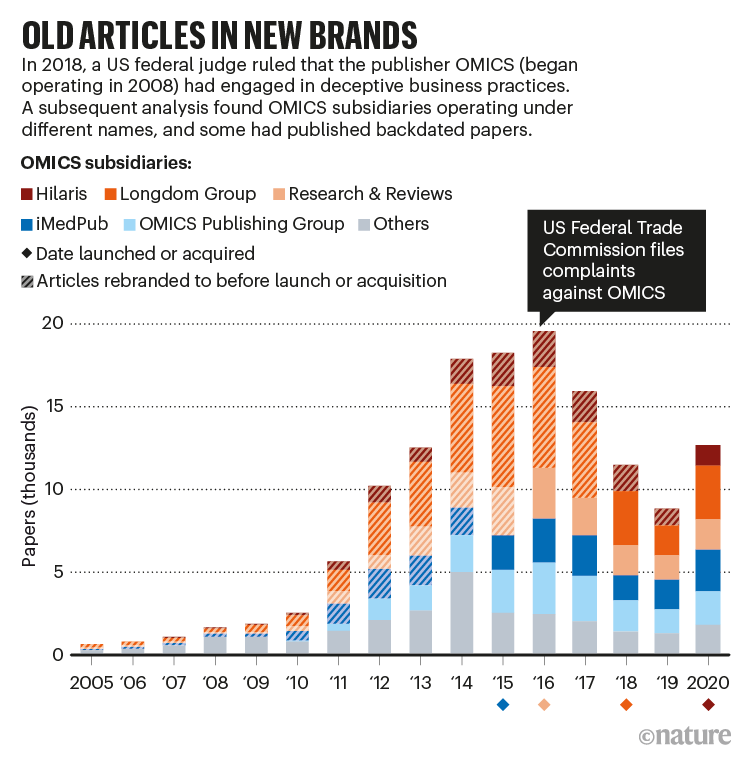

We followed links for the 737 journals listed on the OMICS website. More than 80% (600) are labelled with other brands that are distinct corporate entities. Among the most prominent, Longdom has addresses in Spain and Belgium; Hilaris is also located in Belgium, but at a different address. iMedPub LTD is located in the United Kingdom. The number of journals has grown faster than the number of publications, suggesting that many journals are shells with little content. Furthermore, the content in the subsidiaries is backdated (see ‘Old articles in new brands’). Although these subsidiaries were incorporated beginning in 2015 and as recently as 2020, articles dating to years before are associated only with the new titles, without any mention of OMICS. (Hilaris, iMedPub, Longdom and OMICS did not respond to our enquiries about backdating and whether this was part of a rebranding practice.)

Source: K. SIler et al.

We think OMICS is retconning the publishing histories of many of its journals. Here’s an example: Advances in Pharmacoepidemiology & Drug Safety published its first issue in 2012 under the OMICS imprint, then removed the OMICS logo in 2015 and appeared as a standalone journal until it was rebranded as a Longdom imprint in 2019. At its inception, Robert H. Howland from the University of Pittsburgh in Pennsylvania and Richard L. Slaughter from Wayne State University in Detroit, Michigan, were listed as editors-in-chief. Howland told the FTC in 2016 that he’d been listed as editor without his consent or knowledge. Under Longdom, only Slaughter is listed as editor-in-chief. He died in 2016.

Bootlegged articles

One tactic predatory journals have used is to mimic longstanding legitimate journals online (or sometimes to acquire the titles). Predators rely on the journal’s reputation to collect fees1 without providing scholarly services. In August, scholar Anna Abalkina at the Free University of Berlin reported that a list of COVID-19 publications maintained by the World Health Organization contained hundreds of papers from three such journals, many entirely out of scope. (A journal supposedly about linguistics had papers on COVID-19, nutrition and gestational anaemia.)

Indexing for our Lacuna database uncovered another alarming practice: republishing bootlegged copies of papers from legitimate sources under new DOIs, without crediting the original journal and sometimes without crediting the original authors. A researcher perusing what seem to be ‘back issues’ sees real peer-reviewed articles copied from legitimate journals.

Several anomalies led us to discover that at least nine papers in the Journal of Bone Research and Reports, under the iMedPub LTD brand, were lifted directly from the Elsevier journal Bone Reports. (We reported this to Bone Reports; an Elsevier representative says the matter is now under investigation.) The first clue was the bizarre names of some authors, such as “urban center” and “parliamentarian”. Many author names appeared with an extra character (for example, “John Smitha” and “Mary Jonesb”), indicating that they were copied from a document in which superscript letters followed the names.

Some listed institutions were nonsensical, including “university of canadian province” and “urban center university”. Author locations were rendered in absurd ways: New Orleans became “point of entry” and North Carolina was dubbed “old North State”. Some authors’ e-mail addresses belonged to people who were not authors. (When we contacted authors of the Bone Reports articles, none was aware that their articles had been bootlegged; they responded with a mixture of anger, amusement and bafflement.)

Titles of Bone Reports papers were modified by the use of synonyms: for example, “A novel application of the ultrasonic method” became “a completely unique application of the unhearable [sic] technique.” (This particular article was republished in at least two OMICS journals.) Some articles were fully plagiarized from the Elsevier source, with the only difference being redacted sentences. In other cases, words in the Elsevier article were replaced with synonyms, perhaps to create the illusion of originality and evade plagiarism detection. Swaps included “knowledge” for “data” and “intellectual issues” for “cognitive disorders”. More convoluted replacements included incorrectly interpreted acronyms: for example, the common word “an” became “Associate in Nursing”, and “sd” was written as “Mount Rushmore State” (a nickname for the US state of South Dakota) instead of “standard deviation”. Other scholars have identified similar ‘tortured phrases’ in different journals2.

We hypothesize that, to generate these differences, OMICS used some sort of rudimentary synonym-substitution software, or perhaps translated the works from English into another language and then back into English. Other ‘papers’ were filled with text from unknown sources, perhaps translated from papers in languages other than English. OMICS backdated its mangled copies, creating the illusion that they predated the original, legitimate Elsevier publications.

Market deception

Why go to all this trouble? One possibility is that OMICS is seeding fledgling journals with content to attract paying customers. OMICS has also added footnotes to some plagiarized articles claiming that the work was presented at predatory conferences, falsely suggesting that these are vibrant, professional events. (The FTC judgement found that such conferences are a significant source of revenue for the company.)

There is evidence that this practice is not limited to OMICS. A team at the scholarly-services firm Cabells International, which compiles lists of predatory publishers, has also identified bootlegging in a hijacked journal (that is, an illegitimate ‘clone’ of a legitimate journal). The fake journal’s website appeared above its genuine counterpart in web searches, and an article it hosted showed page numbers from the original publication, a bright white rectangle obscuring the original journal’s name, and even the finger of someone holding the paper as it was photographed. Other scholars have also found evidence of ‘cloning’ and ‘recycling’ to produce a ‘fictitious archive’ for journals collecting publishing fees3. Our work putting together the Lacuna database should help to identify and track these sorts of practices.

Adaptable foe

Predatory publishing has flourished as more reputable journals charge authors publication fees and scholars remain under intense pressure to publish. OMICS is just the tip of the iceberg of a swiftly evolving fraudulent business model. Following the ruling against OMICS, economist Derek Pyne at Thompson Rivers University in Kamloops, Canada, remarked that there were hundreds of smaller illegitimate publishers. “Too many … for the FTC to go after.”

Bhushan Patwardhan, vice-chair of India’s University Grants Commission, has cautioned that predatory publishers are a “determined and adaptable foe”. If a publisher gains notoriety, creating new websites under other brands is cheap, easy and profitable. The low marginal costs of online publishing allow scam journals to operate from anywhere, particularly from places where they can act with impunity. To fight them, it is essential to know how they attract researchers and avoid detection.

By one estimate, respected indexes such as Web of Science cover only about one-third of scholarly publications. Tens of thousands of non-English-language journals are excluded, as are titles that do not meet citation thresholds. And the presence or absence of a journal in those databases is not enough to distinguish between fake and legitimate publishers.

The Lacuna database aims to tabulate published work omitted from major indexing systems: this will enable exploration of shades of legitimacy across scholarly communication and reveal diverse publishing venues, as well as illegitimate, niche and emergent journals.

Although it can be convenient to talk about predatory and legitimate journals, the distinction is not binary. There are different types and degrees of questionable publishing practices4. Capturing data for journals that lack indexing and metadata will enable further analysis by librarians, researchers, administrators and policymakers. A better understanding of non-indexed publishers across the spectrum of legitimacy can, in turn, underpin scientometric insights and inform policy.

Starve the Hydra

Instead of repeatedly severing heads for new ones to regrow, policy that combats predatory publishing should focus on starving the Hydra of resources. Here’s what we recommend.

Audit peer reviews. To determine whether a journal is predatory, evaluators rely on many ‘indirect’ clues, such as dead links on websites, poor English grammar, or absence from the membership lists of organizations such as the Committee on Publication Ethics (COPE) or the Directory of Open Access Journals (DOAJ). But it is the content of peer review that shows how seriously journals vet submissions. If journals are unwilling to publish their peer reviews, these should be subject to audit by funders.

Falsifying peer review on a large scale would be very difficult for egregious predatory journals. Quasi-predatory journals would reveal poor-quality or ignored reviews. High-status journals coasting on reputation might also be exposed. Even with greater transparency, demarcating legitimate and illegitimate journals will be contentious. However, that demarcation should be based on the most relevant information, not on indirect clues and status signals.

Mandating some form of open peer review dovetails with other initiatives to improve science by sharing data. Breaking open the ‘black box’ would demystify the process and provide new insights5. Sharing blinded peer reviews online — or at least confidentially with stakeholders — would allow funders, researchers, librarians and institutions to identify scams and encourage good practices in legitimate journals.

Link quality assurance to funding. Modern universities have systems to vet vendors. They could expand those systems to include payments to journals (both subscription-based and those with article-processing charges). Requirements could include open peer review, as well as adherence to the Fair Open Access Principles, which stipulate explanations of how publishing fees are spent. Mandates from funders have already spurred changes in scholarly publishing, such as those around open access driven by the requirements of the US National Institutes of Health, the Bill & Melinda Gates Foundation and Wellcome.

Instead of relying on third-party lists of acceptable and unacceptable journals (such as Beall’s or Cabells’ lists, which can stigmatize well-meaning but resource-limited publishers), funders could mandate that publishing fees be paid only to journals that adhere to transparency rules.

This would require journals to change practices, but digitization means that publishers can collect and archive peer-review data more readily than before. Scientific funders and taxpayers deserve accountability for the billions of dollars invested annually in scholarly publishing. Scholars deciding where to submit work deserve greater transparency about peer review (for example, content, rejection rates and average time to decision). This transparency will both starve the Hydra and improve standards for all journals.

Support good-faith emergent journals. Several platforms, such as the Public Knowledge Project’s Open Journal Systems, allow journals to be disseminated at modest cost. SciELO (in Brazil) and Redalyc (in Mexico) are examples of academic-publishing infrastructures that provide high-quality, low-cost open-access journals, allowing scholars to publish on regional issues in their native languages. Our preliminary analyses found that academics in Latin America were much less likely to publish in OMICS journals than were those in Central Asia, the Middle East and Africa. New criteria for legitimacy could prevent well-intentioned, emergent journals from being misclassified. Institutionalizing paths to legitimacy for new publishers would lower barriers to entry for disadvantaged scholars and institutions.

Don’t reward papers in predatory journals. Many universities and funders unintentionally feed predatory publishers when they value quantity and use ill-informed metrics to gauge quality. Authors who publish in questionable journals span a continuum from well-meaning and naive to dishonest and complicit6. Informing researchers, especially early-career researchers, of the dangers is essential; so is revising policies so that researchers are not tempted to buy ‘easy’ publications.

But we hope to go further and destroy the monster through systemic changes to scholarly publishing, rather than by placing extra monitoring burdens on individual scholars. If funders and institutions reward transparent, high-quality journals, predatory journals will starve.
