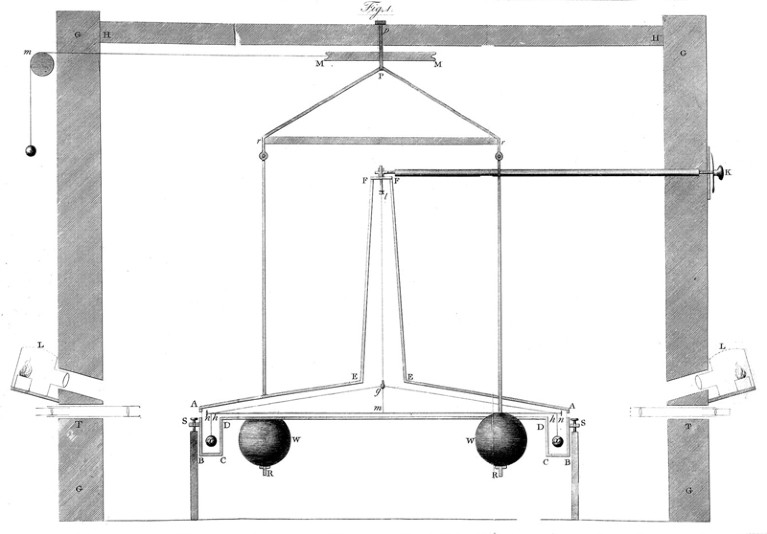

More than two centuries after Henry Cavendish devised a torsion balance to determine the constant of gravitation, metrologists have yet to agree on the constant’s precise value. Credit: The Royal Society

Everyone’s talking about reproducibility — or at least they are in the biomedical and social sciences. The past decade has seen a growing recognition that results must be independently replicated before they can be accepted as true.

A focus on reproducibility is necessary in the physical sciences, too. The issue is explored in this month’s Nature Physics, in which two metrologists argue that reproducibility should be viewed through a different lens. When results in the science of measurement cannot be reproduced, argue Martin Milton and Antonio Possolo, it’s a sign of the scientific method at work, and an opportunity to promote public awareness of the research process (M. J. T. Milton and A. Possolo Nature Phys. 16, 117–119; 2020).

Trustworthy data underpin reproducible research

The authors, who are based at the International Bureau of Weights and Measures in Paris and at the National Institute of Standards and Technology in Gaithersburg, Maryland, respectively, draw on three case studies, each one an instalment in the quest to measure one of the fundamental constants of nature.

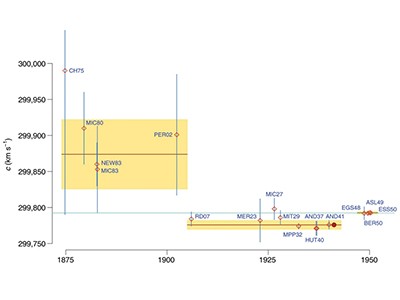

The researchers chose the speed of light (c); Planck’s constant (h), a number that links the amount of energy a photon carries to its frequency; and the constant of gravitation (G), a measure of the strength of the gravitational force between two bodies.
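
For readers who want the relations behind these descriptions, the textbook forms are given here. The symbols E (photon energy), ν (frequency), F (force), m₁ and m₂ (the two masses) and r (their separation) are standard notation assumed for illustration, not taken from the commentary itself.

$$
E = h\nu, \qquad F = G\,\frac{m_1 m_2}{r^2}
$$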

For both Planck’s constant and the speed of light, different laboratories have arrived at the same number using different methods — a sign of reproducibility. In the case of Planck’s constant, there’s now enough confidence in its value for it to become the basis of the International System of Units definition of the kilogram that was confirmed last May.

However, despite numerous experiments spanning three centuries, the precise value of G remains uncertain. The root of the uncertainty is not fully understood: it could be due to undiscovered errors in how the value is being measured; or it could indicate the need for new physics. One scenario being explored is that G could even vary over time, in which case scientists might have to revise their view that it has a fixed value.
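
One common way to judge whether independent measurements agree is to compare their scatter with their stated uncertainties, for instance through the Birge ratio (the square root of the reduced chi-squared about the weighted mean). The sketch below is illustrative only: the function names and all numbers are hypothetical, and none of them comes from the commentary or from actual measurements of G.

```python
import math


def weighted_mean(values, sigmas):
    """Inverse-variance weighted mean and its standard uncertainty."""
    weights = [1.0 / s ** 2 for s in sigmas]
    mean = sum(w * v for w, v in zip(weights, values)) / sum(weights)
    sigma_mean = math.sqrt(1.0 / sum(weights))
    return mean, sigma_mean


def birge_ratio(values, sigmas):
    """Square root of the reduced chi-squared about the weighted mean.

    A ratio near 1 suggests the scatter matches the stated uncertainties;
    a ratio well above 1 suggests unrecognised errors (or new effects).
    """
    mean, _ = weighted_mean(values, sigmas)
    chi2 = sum(((v - mean) / s) ** 2 for v, s in zip(values, sigmas))
    return math.sqrt(chi2 / (len(values) - 1))


# Hypothetical, made-up results in arbitrary units (NOT real data).
consistent = birge_ratio([10.0, 10.1, 9.9], [0.1, 0.1, 0.1])
scattered = birge_ratio([10.0, 10.5, 9.5], [0.1, 0.1, 0.1])

print(f"consistent set: {consistent:.2f}")
print(f"scattered set:  {scattered:.2f}")
```

In this toy example, the first set scatters by about as much as its uncertainties allow, whereas the second scatters far more, which is the kind of pattern that prompts a search for undiscovered errors.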

If that were to happen — although physicists think it unlikely — it would be a good example of non-reproduced data being subjected to the scientific process: experimental results questioning a long-held theory, or pointing to the existence of another theory altogether.

Metrology is key to reproducing results

Questions in biomedicine and in the social sciences do not reduce so cleanly to the determination of a fundamental constant of nature. Compared with metrology, experiments to reproduce results in fields such as cancer biology are likely to include many more sources of variability, which are fiendishly hard to control for.

But metrology reminds us that when researchers attempt to reproduce the results of experiments, they do so using a set of agreed — and highly precise — experimental standards, known in the measurement field as metrological traceability. It is this aspect, the authors contend, that helps to build trust and confidence in the research process.

One of the wider lessons from Milton and Possolo’s commentary is that researchers from different domains must continue to talk and to share their experiences of reproducibility. At the same time, we should be careful about assuming that there’s something inherently wrong when researchers cannot reproduce a result even when adhering to the best agreed standards.

Irreproducibility should not automatically be seen as a sign of failure. It can also be an indication that it’s time to rethink our assumptions.

Largest overhaul of scientific units since 1875 wins approval
